
Kling AI just released version 3.0 of its AI video model. Like Seedance 2.0, it arrives as a Chinese response to an AI landscape dominated by US-made models, and it might just be the best AI video model out there.

In this article, you’ll learn how to fully use Kling 3.0 through its web interface to build characters and make videos where characters remain consistent across shots.

If you want to learn more about the latest releases in this space, I recommend checking out our guide on other top video generation models.

What is Kling AI?

Kling AI is a generative AI platform designed to create videos from text prompts, images, or a combination of both. Developed by the Chinese technology company Kuaishou, it quickly became one of the best AI models for character consistency. The videos it generates come with native sound, making them feel polished and ready to use.

Kling AI Pricing and Access

As with many AI video models, Kling AI's pricing is based on credits. We pay a monthly subscription that grants a fixed number of credits each month. Some smaller features are also locked behind specific subscription tiers, but the main difference between plans is the number of generation credits we get.

To write this article, I used their Pro plan ($32.56 a month), which comes with 3,000 credits. For more details on their pricing plans, check the Kling AI official pricing page.

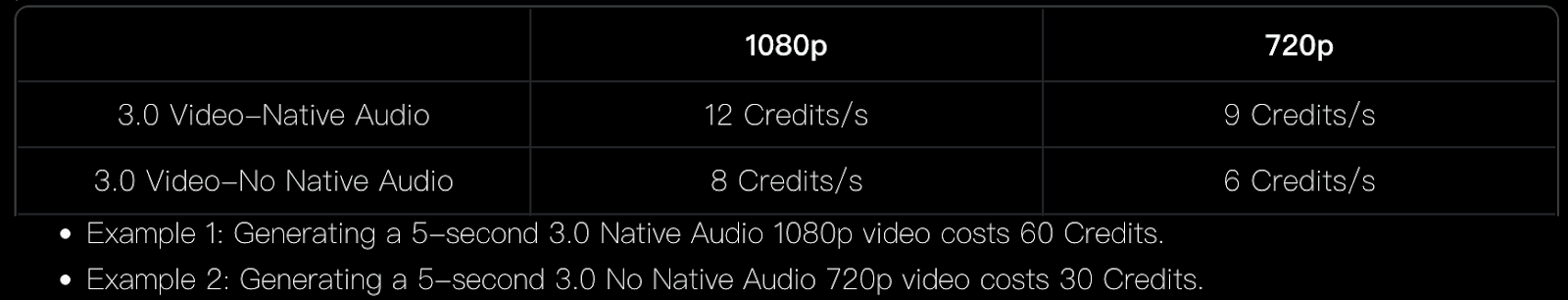

How many video credits does it cost to generate a video?

Credit usage is primarily determined by three factors:

- The video's length (3 to 15 seconds)

- The resolution (720p or 1080p)

- Whether or not audio is generated

Assuming native audio is enabled, a Pro subscription's 3,000 monthly credits are enough for roughly 6 minutes of 720p video or 4 minutes of 1080p video.
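To make the credit math concrete, here is a rough Python estimator. It is a sketch built only on the figures above: it assumes 3,000 credits buy about 360 seconds of 720p or 240 seconds of 1080p video with native audio, which implies roughly 8.3 and 12.5 credits per second. These rates are derived from this article's estimates, not from Kling's official price list, and the function names are hypothetical.

```python
from fractions import Fraction

# Assumption: per-second rates implied by the estimate above (Pro plan, native audio on).
# 3,000 credits ~ 6 min of 720p or ~ 4 min of 1080p. Not official Kling pricing.
REFERENCE_CREDITS = 3000
REFERENCE_SECONDS = {"720p": 6 * 60, "1080p": 4 * 60}


def credits_per_second(resolution: str) -> Fraction:
    """Implied credit cost of one second of video at the given resolution."""
    return Fraction(REFERENCE_CREDITS, REFERENCE_SECONDS[resolution])


def clip_cost(seconds: int, resolution: str) -> Fraction:
    """Estimated credits for one clip; clips must be 3 to 15 seconds long."""
    if not 3 <= seconds <= 15:
        raise ValueError("Kling 3.0 clips are 3 to 15 seconds long")
    return credits_per_second(resolution) * seconds


def clips_per_budget(seconds: int, resolution: str,
                     credits: int = REFERENCE_CREDITS) -> int:
    """How many whole clips of this length a credit budget covers."""
    # Exact fractions avoid floating-point rounding at clean boundaries.
    return int(credits // clip_cost(seconds, resolution))


print(clips_per_budget(10, "1080p"), clips_per_budget(10, "720p"))
```

Under these assumptions, a month of Pro credits covers 24 ten-second clips at 1080p or 36 at 720p, which is simply 240 and 360 seconds split into 10-second pieces. Retakes come out of the same budget.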

Key Features of Kling 3.0

The examples in this section were taken from the official Kling 3.0 user guide.

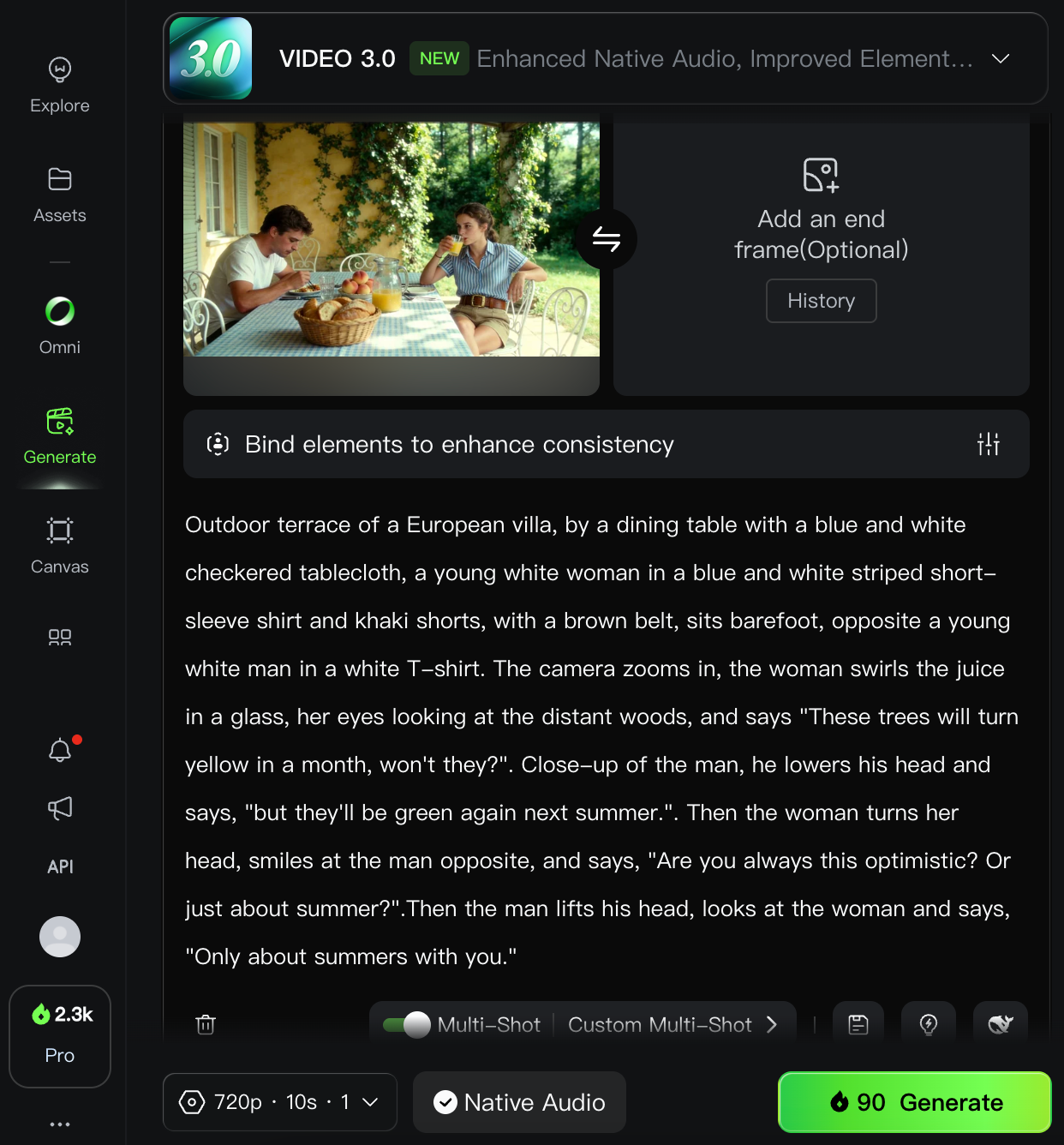

Implicit multi-shot scenes

Most AI video models work best when the prompt describes a single shot or action. Kling 3.0, however, can understand a single prompt that describes multiple shots.

The following example prompt describes the video's setting and four shots without explicitly listing them:

Outdoor terrace of a European villa, by a dining table with a blue and white checkered tablecloth, a young white woman in a blue and white striped short-sleeve shirt and khaki shorts, with a brown belt, sits barefoot, opposite a young white man in a white T-shirt.

The camera zooms in, the woman swirls the juice in a glass, her eyes looking at the distant woods, and says, "These trees will turn yellow in a month, won't they?"

Close-up of the man, he lowers his head and says, "But they'll be green again next summer."

Then the woman turns her head, smiles at the man opposite, and says, "Are you always this optimistic? Or just about summer?"

Then the man lifts his head, looks at the woman, and says, "Only about summers with you."

The prompt was paired with an image, which the model used as the first frame of the video.

Here's the result:

I was very impressed by this video. The prompt adherence is strong, and the video looks quite realistic to me.

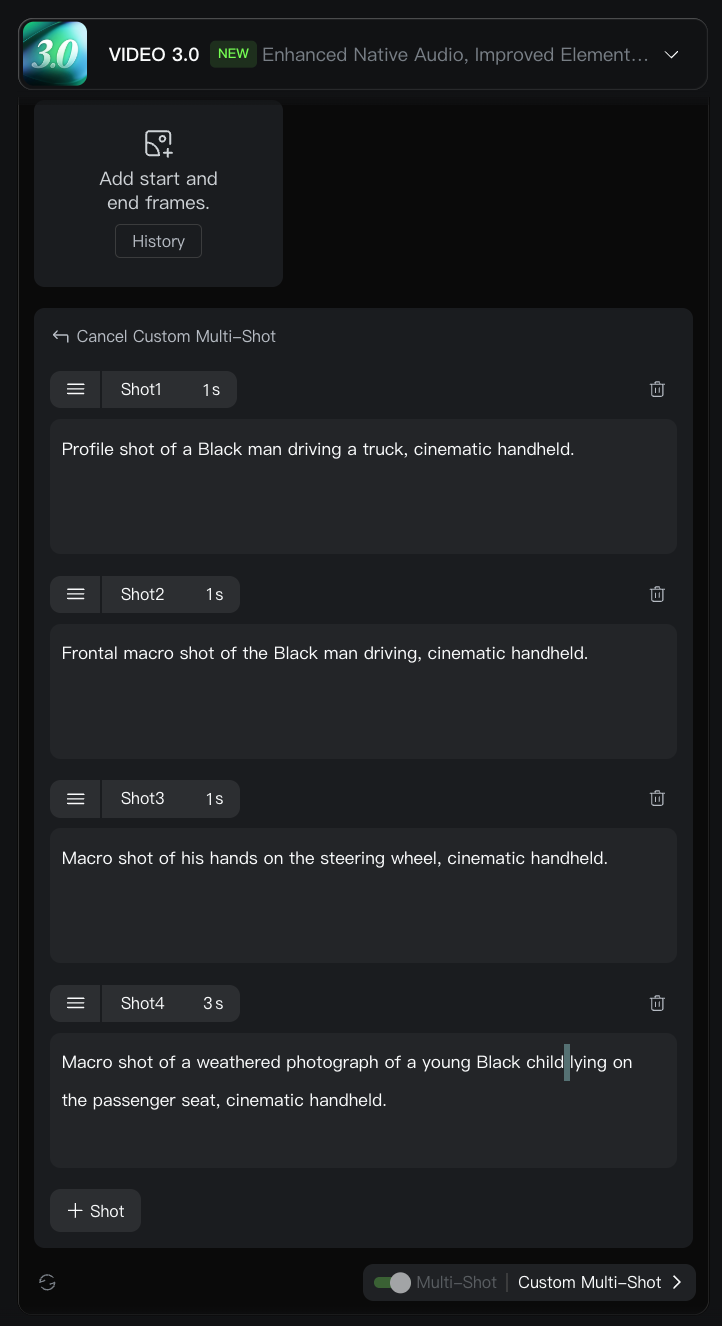

Explicit multi-shot scenes

Although the model can usually infer from a text prompt where one shot ends and the next begins, it isn't perfect and may interpret the prompt differently than we intended. We can enforce the structure of a scene by listing the shots explicitly in the prompt:

Shot 1: Profile shot of a Black man driving a truck, cinematic handheld.

Shot 2: Frontal macro shot of the Black man driving, cinematic handheld.

Shot 3: Macro shot of his hands on the steering wheel, cinematic handheld.

Shot 4: Macro shot of a weathered photograph of a young Black child lying on the passenger seat, cinematic handheld.

To specify each shot, we click the Custom Multi-Shot button at the bottom of the prompt input. There, we can provide a prompt for each shot and also set the duration. The model allows up to 6 shots per video.
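The Custom Multi-Shot editor lives in the web interface, but its constraints are easy to mirror when planning a scene ahead of time. Below is a hypothetical Python planner (`Shot` and `MultiShotPlan` are my names, not part of any Kling API) that enforces the six-shot limit and, as an assumption, checks that the shot durations add up to a valid 3 to 15 second video. Its `as_text()` method renders the plan in the `Shot N:` style used above, which is handy if you'd rather paste the shots into a single text prompt.

```python
from dataclasses import dataclass, field

MAX_SHOTS = 6  # Custom Multi-Shot limit mentioned in this article
# Assumption: the 3-15 s range for a single video also bounds the total of all shots.
MIN_TOTAL_SECONDS, MAX_TOTAL_SECONDS = 3, 15


@dataclass
class Shot:
    prompt: str
    seconds: int


@dataclass
class MultiShotPlan:
    shots: list[Shot] = field(default_factory=list)

    def add(self, prompt: str, seconds: int) -> None:
        """Append a shot, refusing anything past the sixth."""
        if len(self.shots) >= MAX_SHOTS:
            raise ValueError(f"at most {MAX_SHOTS} shots per video")
        self.shots.append(Shot(prompt, seconds))

    def total_seconds(self) -> int:
        return sum(shot.seconds for shot in self.shots)

    def check_duration(self) -> None:
        """Raise if the combined duration falls outside the assumed 3-15 s range."""
        total = self.total_seconds()
        if not MIN_TOTAL_SECONDS <= total <= MAX_TOTAL_SECONDS:
            raise ValueError(
                f"total of {total}s is outside {MIN_TOTAL_SECONDS}-{MAX_TOTAL_SECONDS}s"
            )

    def as_text(self) -> str:
        """Render the plan as 'Shot N: ...' paragraphs for a single text prompt."""
        return "\n\n".join(
            f"Shot {i}: {shot.prompt}" for i, shot in enumerate(self.shots, start=1)
        )


# The truck scene from above, with an illustrative 3 s per shot (12 s total).
plan = MultiShotPlan()
plan.add("Profile shot of a Black man driving a truck, cinematic handheld.", 3)
plan.add("Frontal macro shot of the Black man driving, cinematic handheld.", 3)
plan.add("Macro shot of his hands on the steering wheel, cinematic handheld.", 3)
plan.add("Macro shot of a weathered photograph of a young Black child "
         "lying on the passenger seat, cinematic handheld.", 3)
plan.check_duration()
print(plan.as_text())
```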

Below is the result from this multi-shot prompt:

Audio and tone controls

As we've seen so far, Kling 3.0 generates videos with native audio, but it goes further than that: we can guide the generated audio by describing what we want in the prompt. For instance:

Home setting with a faint hum of the living room air conditioner in the background for a realistic daily vibe.

Mom (softly, in a surprised tone): Wow, I didn't expect this plot at all.

Dad (in a low voice, agreeing, in a calm tone): Yeah, it's totally unexpected. Never thought that would happen.

Boy (in an excited tone): It's the best twist ever!

Girl (nodding along, in an enthusiastic tone): I can't believe they did that!

In this example, the prompt provides information about the background sounds and how people in the video should talk. When it comes to dialogue, the structure is usually:

<who is speaking> (<how things are said>): <what they say>

Here's the video generated with the prompt above:

This example was generated from a first-frame image, which helps the model associate the characters with the prompt. It's impressive that, from the image alone, the model can work out who the dad, the mom, the boy, and the girl are.
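If you script many scenes, generating these lines programmatically keeps the structure consistent. Here is a small, hypothetical Python helper that follows the pattern of the example prompt above: the speaker, the delivery in parentheses, a colon, then the line. Kling only ever sees the resulting text, so nothing here calls a Kling API.

```python
def dialogue_line(speaker: str, delivery: str, text: str) -> str:
    """Format one line as '<who is speaking> (<how things are said>): <what they say>'."""
    return f"{speaker} ({delivery}): {text}"


def audio_prompt(ambience: str, lines: list[tuple[str, str, str]]) -> str:
    """Join a background-sound description and dialogue lines into one prompt."""
    parts = [ambience] + [dialogue_line(*line) for line in lines]
    return "\n\n".join(parts)


prompt = audio_prompt(
    "Home setting with a faint hum of the living room air conditioner "
    "in the background for a realistic daily vibe.",
    [
        ("Mom", "softly, in a surprised tone", "Wow, I didn't expect this plot at all."),
        ("Boy", "in an excited tone", "It's the best twist ever!"),
    ],
)
print(prompt)
```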

Multilingual speech capabilities

The model supports generating speech in the following languages:

- Chinese

- English

- Japanese

- Korean

- Spanish
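Because speech generation is limited to these five languages, a prompt-building script can reject anything else before spending credits. A minimal Python sketch, assuming the speaker, tone, and language format described next (with a colon after the parentheses, as in the earlier examples); the function name is hypothetical.

```python
SUPPORTED_SPEECH_LANGUAGES = ("Chinese", "English", "Japanese", "Korean", "Spanish")


def speech_line(speaker: str, delivery: str, language: str, text: str) -> str:
    """Format '<who> (<how>, <language>): "<what>"', rejecting unsupported languages."""
    normalized = language.strip().capitalize()  # "korean" -> "Korean"
    if normalized not in SUPPORTED_SPEECH_LANGUAGES:
        raise ValueError(
            f"{language!r} is not supported; choose one of "
            f"{', '.join(SUPPORTED_SPEECH_LANGUAGES)}"
        )
    return f'{speaker} ({delivery}, {normalized}): "{text}"'


print(speech_line("Boy", "casual tone", "korean", "숙제 다 했어? 왜 여기 있어?"))
```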

We can specify the spoken language alongside the tone description:

<who is speaking> (<how things are said>, <language>): <what they say>

Here's an example:

On the rooftop of a Korean high school, distant city lights glimmer in the background with a soft wind rustling, and stars twinkle in the night sky. The girl leans against the railing, lost in thought. The boy walks over with two cans of cola, hands one to her, and she takes it and pops the tab open.

Boy (casual tone, Korean): "숙제 다 했어? 왜 여기 있어?"

Girl (sighing, Korean): "시험이 너무 무 서워"".

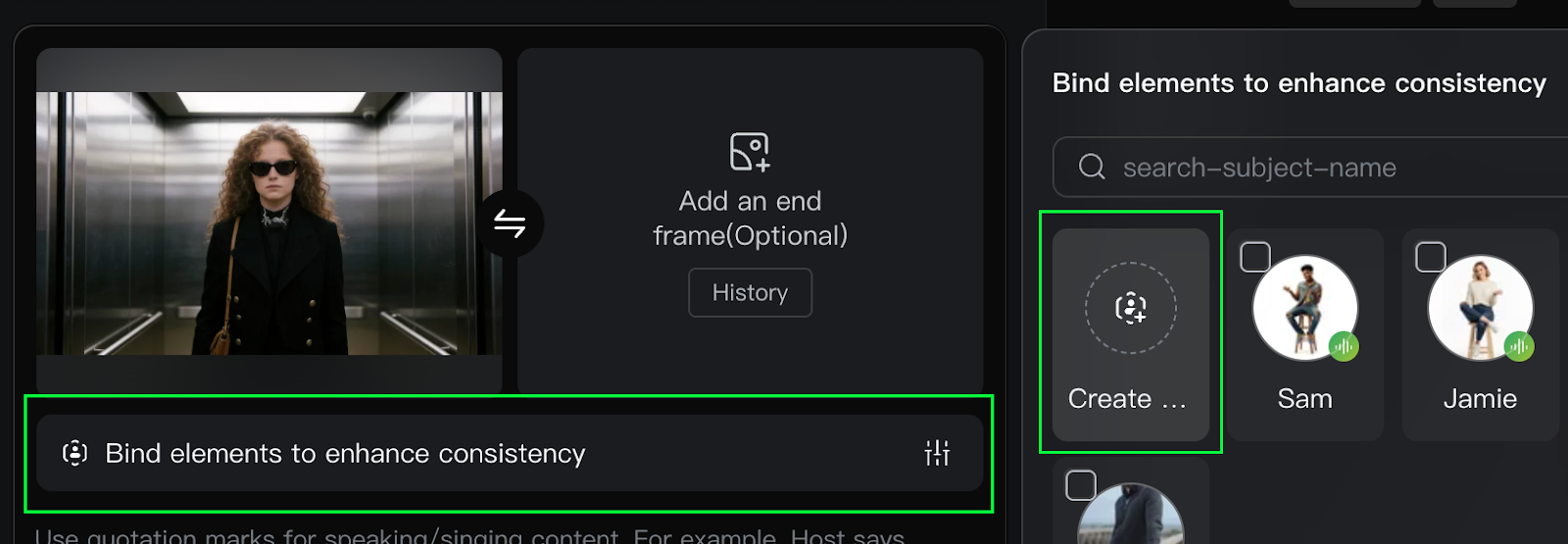

Boy (gentle tone, Korean): "4정 마, 넌 잘할 거야."Subject binding

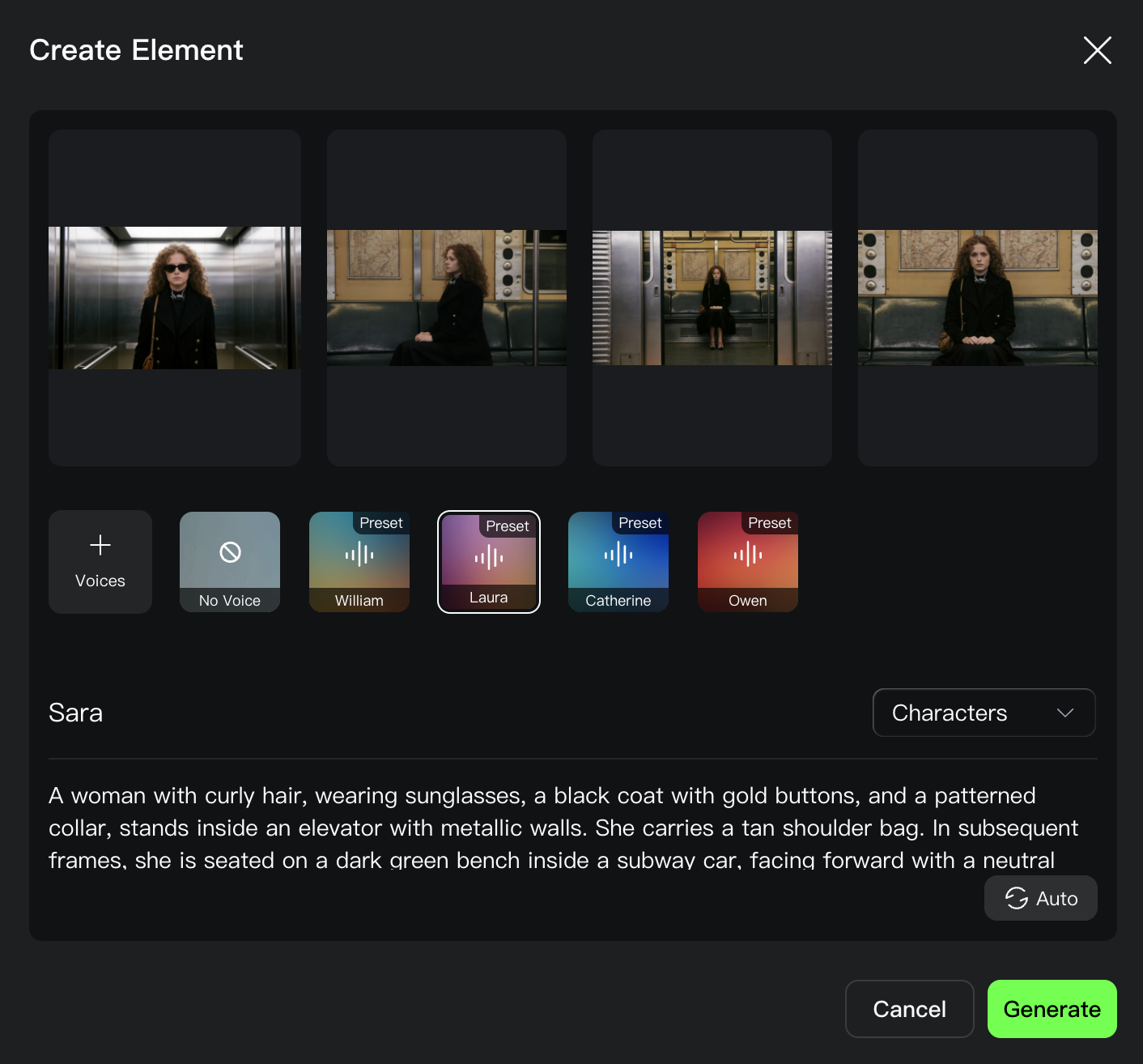

Subject binding is a feature that allows specific characters or visual elements to remain consistent throughout a generated video. Locking a subject’s appearance and characteristics helps ensure the main focus stays stable and recognizable, even when camera movements such as zooming, panning, or tilting occur.

After uploading an image as the initial frame, we can activate this feature by selecting the Bind elements to enhance Consistency option. This creates a reference that the system uses to maintain visual stability and prevent unwanted changes to the subject during video generation.

Without subject binding, the model has to guess any features of the subject that aren't visible in the initial image. For example, in the image above, the subject is wearing sunglasses, so it helps to provide a clear image of the subject's face. Additional poses, such as the subject looking left and right, are also useful if we don't want the model to invent what the person looks like.

When creating an element, we can provide up to three images. We're also given the option to select a voice and a name for the character.
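When preparing several characters, it's easy to lose track of which reference images belong to which element. A hypothetical Python record like the one below captures what the element editor asks for: a name, up to three images, and an optional voice. The file names and the minimum of one image are my assumptions, and this is a local planning aid, not a Kling API.

```python
from dataclasses import dataclass
from typing import Optional

MAX_REFERENCE_IMAGES = 3  # the element editor accepts up to three images


@dataclass
class CharacterElement:
    """Hypothetical local record of an element, used only for planning a project."""
    name: str
    reference_images: list[str]
    voice: Optional[str] = None  # placeholder label; the actual voice is chosen in the UI

    def __post_init__(self) -> None:
        if not self.name.strip():
            raise ValueError("an element needs a name")
        # Assumption: at least one image; the article only states the maximum of three.
        if not 1 <= len(self.reference_images) <= MAX_REFERENCE_IMAGES:
            raise ValueError(
                f"provide between 1 and {MAX_REFERENCE_IMAGES} reference images"
            )


# Hypothetical file names for the views suggested above: full body, left, and right.
elias = CharacterElement(
    name="Elias",
    reference_images=["elias_full_body.png", "elias_left.png", "elias_right.png"],
)
print(elias)
```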

Here's the result:

I personally find this feature a game-changer, because character consistency was one of the most frustrating aspects of AI video generation for me. Getting it right often required very detailed text prompts and many rounds of iteration.

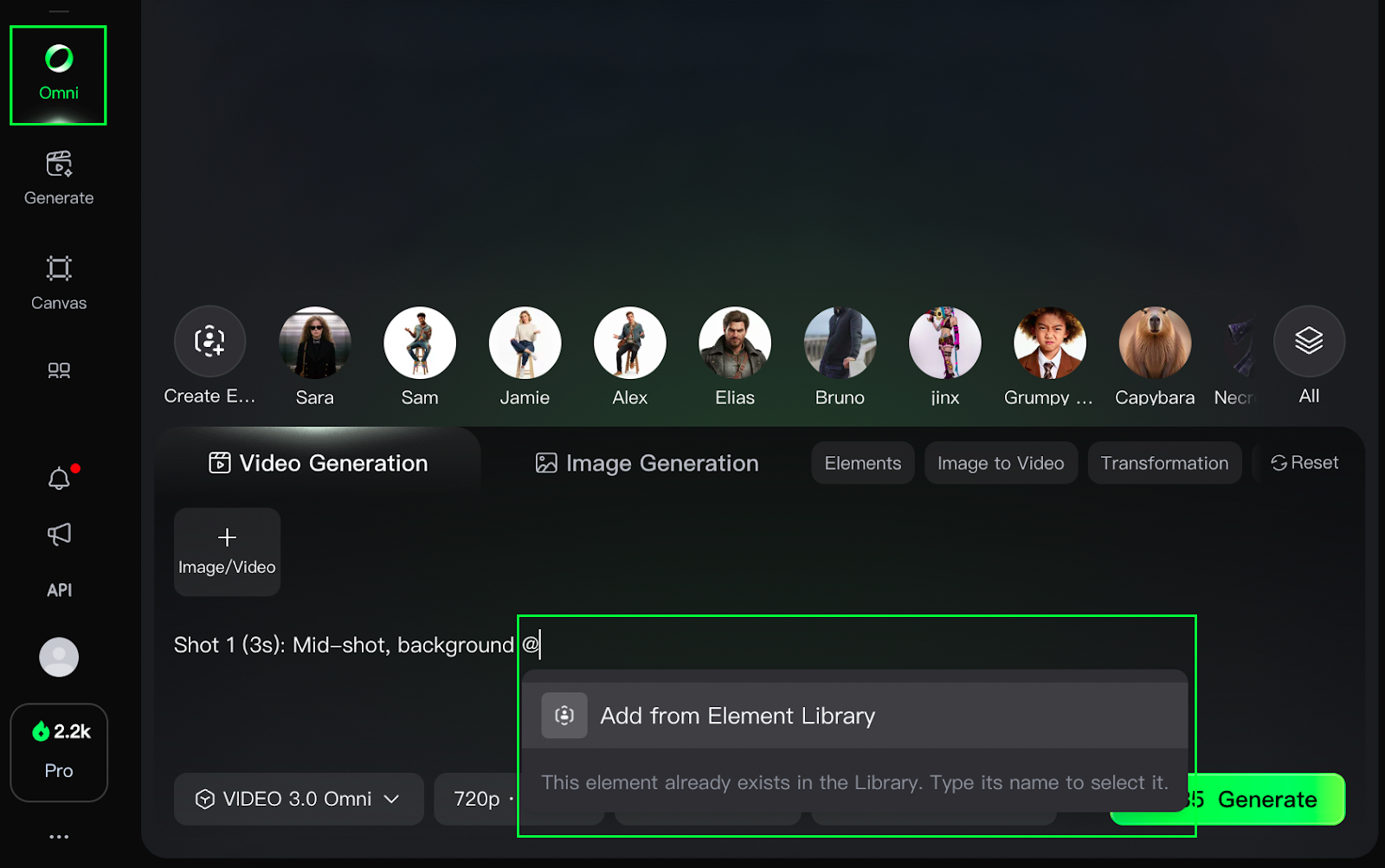

Omni mode

The Omni mode from Kling AI brings every feature together into a single model. We can think of it as subject binding on steroids, as it allows us to create elements (characters, scenes, items, etc.) and then bring them all together into a single prompt. This makes it possible to generate very complex scenes with a high level of accuracy.

For example, we can create a character element from reference images and a scene element from a location image, and then easily place the character in that scene. Similarly, we can create multiple characters and write back-and-forth dialogue between them by referring to those characters in the prompt.

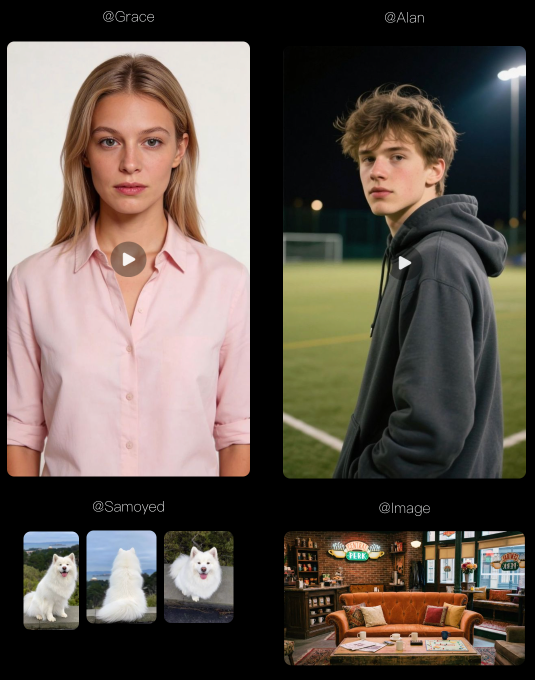

In Omni mode, we refer to previously created elements by typing @, which opens a pop-up where we can select the element we want.

Here's a three-shot example showcasing the power and versatility of Omni:

Shot 1 (3s): Mid-shot, background @Image. @Grace sits on the sofa eating cookies as @Alan walks in holding @Samoyed. @Samoyed lunges for the cookie in @Grace's hand. @Grace says, "Hey! Watch your dog!"

Shot 2 (2s): @Alan sits beside her, pulling the leash and lifting @Samoyed. Close-up, @Alan says, "He just likes cookies more than me."

Shot 3 (3s): Close-up, @Grace smiles and says, "Well, he has good taste at least."

In this example, @Image, @Grace, @Alan, and @Samoyed are elements created separately and used together in a single prompt.
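In the web interface, the @ pop-up prevents typos, but if you draft Omni prompts in a text editor first, a quick check can catch mentions of elements you haven't created yet. A minimal Python sketch; it assumes element names are single words made of letters, digits, or underscores, which holds for this example but may not match every name Kling allows.

```python
import re

# An @ mention is "@" followed by word characters, so "@Grace's" yields "Grace".
MENTION = re.compile(r"@(\w+)")


def referenced_elements(prompt: str) -> list[str]:
    """Element names mentioned with @, in first-appearance order, without duplicates."""
    names: list[str] = []
    for name in MENTION.findall(prompt):
        if name not in names:
            names.append(name)
    return names


def missing_elements(prompt: str, created: set[str]) -> list[str]:
    """Mentions that don't match any element already created."""
    return [name for name in referenced_elements(prompt) if name not in created]


shot_1 = ("Mid-shot, background @Image. @Grace sits on the sofa eating cookies "
          "as @Alan walks in holding @Samoyed. @Samoyed lunges for the cookie "
          "in @Grace's hand.")
print(referenced_elements(shot_1))  # ['Image', 'Grace', 'Alan', 'Samoyed']
print(missing_elements(shot_1, {"Image", "Grace", "Alan"}))  # ['Samoyed']
```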

Testing Kling 3.0

In the previous section, we learned about the core capabilities of Kling 3.0 and looked at a few examples from the official website. I think the results are very impressive, but one should always be skeptical of the examples companies provide, as they are usually curated to show only the best results.

In this section, we take it for a spin on new examples to see whether the model really lives up to expectations.

Example 1: Generating a character

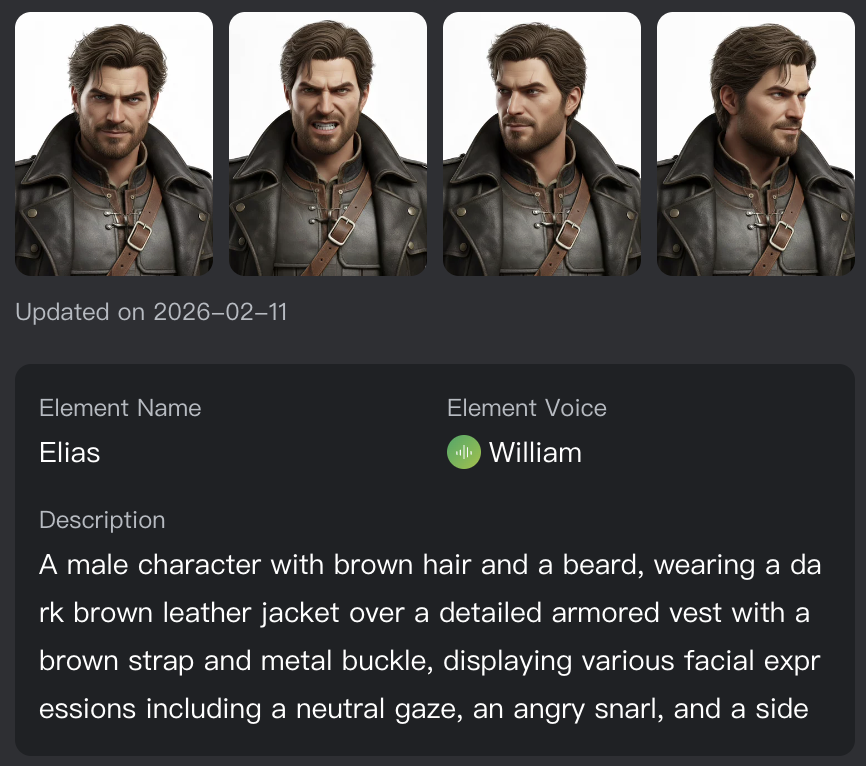

I used AI to generate a fantasy character. I generated a full-body image and then a few different poses, such as looking left, looking right, and making an angry expression.

I combined those images to create a character element I named Elias. I then generated an image for the first frame of the video and used subject binding to link the two.

This is the prompt I used:

Elias stands alone in front of the burning village. Elias is breathing heavily, his hands clenched into tight fists at his sides.

Elias looks at something just off-camera, something we cannot see. Elias says, in a low and dangerous voice, "I told you what would happen if you crossed that line."

Elias pauses for a second.

The camera zooms in close to his face. His face burns with anger, and he says, "I gave you my word, and I gave you a choice."

Note that the prompt doesn't use @ references, because I used only subject binding for this video, not Omni mode. Here's the result:

Example 2: Generating a comic sketch with Omni

Here, I tried to create a sitcom scene between friends. I created three characters, Alex, Jamie, and Sam, using AI. As before, I generated a few different poses for each of them.

I also generated an image for the location and created a scene element with it.

This is the final prompt I used. I described the shots in a text prompt instead of using the multi-shot functionality, because that feature limits a scene to six shots and this one has eight. It turns out Kling 3.0 handles this just as well.

Shot 1: Mid-shot, background @Image.

Shot 2: @Jamie and @Sam sit on the couch as @Alex rushes into the coffee shop and says, "I just liked my ex’s photo from 2016."

Shot 3: @Jamie turns to @Alex and says, "How bad?"

Shot 4: @Alex replies, "She’s with the guy she left me for."

Shot 5: Camera focuses on @Sam, and he replies, "Delete it, dude!"

Shot 6: @Alex replies, "I did, but what if she saw?"

Shot 7: @Sam says, "Then act confident, like her wedding photo."

Shot 8: @Jamie laughs, and @Alex says, "I need new friends..."

And this was the result:

We can see a few mistakes in the video:

- The video opens on an empty couch. This was probably my fault, because I didn’t specify in shot 1 that the characters should be on the couch. However, when I retried, the scene also started empty. I only got the expected result when I provided a first frame with the characters already sitting on the couch, but then it was hard to make Alex walk into the coffee shop.

- There's an extra character sitting on the couch.

Despite these issues, I found the result very impressive. The characters feel lively and authentic, and the scene flows quite well overall.

Example 3: Reusing a character in a multi-scene video

In this last example, I tried reusing the characters I had created in a different context. The goal was to see how consistent they remain between videos, and therefore whether creating multiple scenes with the same characters is viable. If the characters keep the same look and feel, I really believe Kling 3.0 could be used to generate longer-form content.

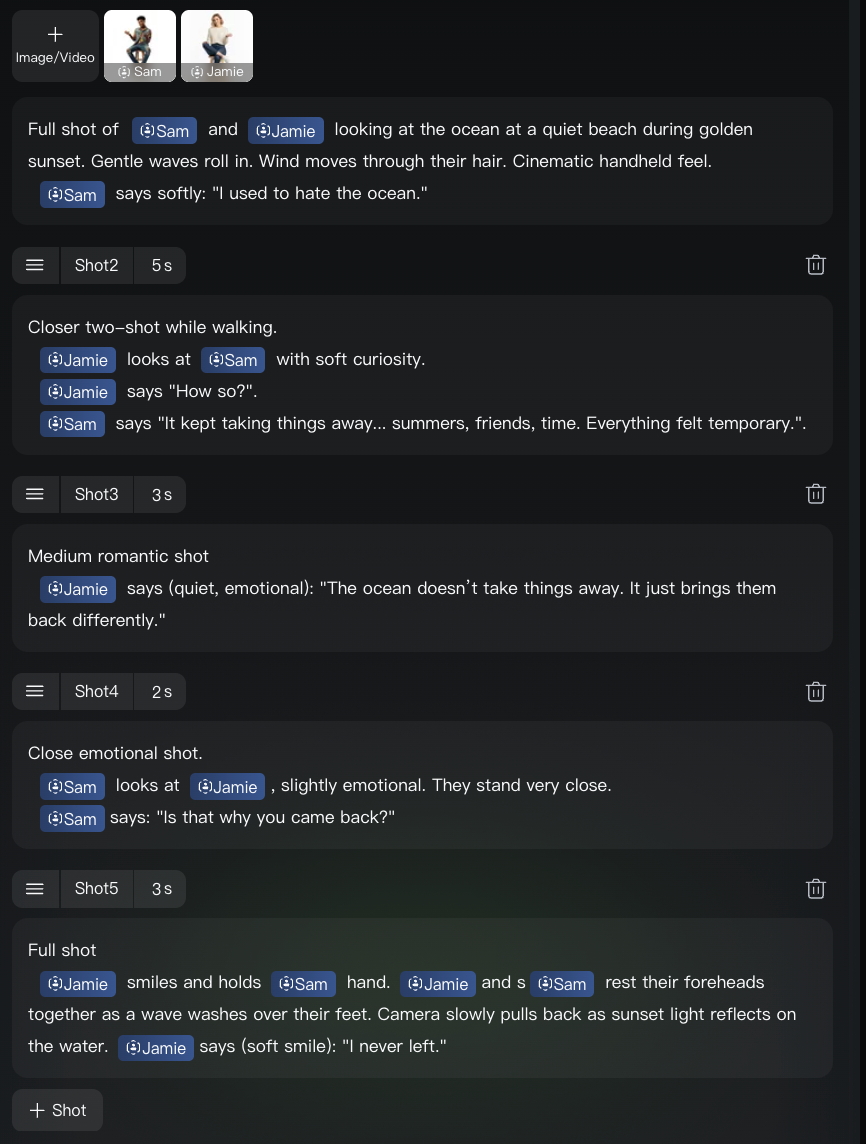

In this case, I used the multi-shot functionality to describe each shot. Here's the scene configuration:

This is the video it generated:

Again, I think the result is very close to what I wanted. Apart from a few pauses the model added for emotional effect, and the character not visibly speaking in the last shot, it's pretty well done.

Kling 3.0 vs Runway Gen-4.5 Comparison

Before Kling 3.0, the best AI video model I had tried was Runway Gen-4.5. To close this article, I compare the two using a few prompts from the article I wrote about Runway.

Physics understanding

This example tests how well each model understands physics using an elephant and a mouse on a seesaw. In the first case, the mouse sits on the seesaw and an elephant should drop onto it from the sky; in the second, the roles are reversed.

Both models seem to understand that the mouse is lighter than the elephant. Kling 3.0 adhered a little more closely to the prompt, which requires the falling animal to drop from the sky in both cases.

Character emotions

The second example tests how well each model can convey character emotions. The prompt asks for a video of someone receiving a very sad text message.

In this example, Kling 3.0 is the clear winner. The emotions feel real, while the Runway Gen-4.5 video shows strange tears and even some water coming out of the character’s mouth.

Complex fantasy scene

In this last example, we asked both models to generate a complex fantasy scene where the protagonist has a magic brush that can draw things and bring them to life. The protagonist is running away from the bad guys and uses the brush to escape a dead-end alley.

Neither model fully generates this scene, though Runway’s result is more accurate. However, both were generated from a single long prompt, and I think the scene would be achievable with Kling’s Omni features.

Conclusion

Kling 3.0 is the first AI video model that genuinely made me feel I could execute what’s in my head, not just approximate it.

With enough credits and a locked script, I’m confident it could carry full, consistent episodes, or even a film, while maintaining character identity, tone, and continuity across scenes. There’s still iteration and quality control involved, but it finally feels like normal production work rather than wrestling the model into compliance.

In my experiments, it didn’t land perfectly every time: now and then, it mixed up two characters or swapped their left-right positions between shots, and on rare occasions it gave a line to the wrong character. Overall, though, the first attempt at each scene was usually very close to the desired output.

That said, I still find Kling a bit expensive, especially for hobbyists like me. I’d love to push into longer-form content, but at its current pricing, the credit math makes it tough to justify experimentation and retakes.

The good news is that the trajectory is clear: quality is rising fast, efficiency is improving, and competition is heating up. I’m confident costs will drop and access will broaden, and we won’t have to wait long before AI video creation is both cheap and accurate enough for weekend projects and indie productions alike.

If you’re interested in sharpening your AI skills and getting ready for a world where AI is a core skill in the job market, check out our AI Fundamentals course.

Kling 3.0 FAQs

Can Kling 3.0 generate videos with dialogue and sound effects?

Yes, Kling 3.0 can generate synchronized dialogue, background sounds, and tonal variations directly from prompts. Users can specify how dialogue is spoken, including emotions, tone, and background audio cues.

What languages does Kling 3.0 support?

The model supports generating speech in the following languages: Chinese, English, Japanese, Korean, and Spanish.

Do you need images to use Kling 3.0?

No, Kling can generate videos using text prompts alone. However, providing reference images significantly improves character consistency and visual accuracy, especially when using subject binding or Omni mode.

Is Kling 3.0 suitable for long-form storytelling?

Kling 3.0 shows strong potential for long-form content because it supports character reuse, multi-scene consistency, and complex storytelling. However, generating longer productions may require significant credits and iterative refinement.

What are the main limitations of Kling 3.0?

While highly capable, Kling 3.0 can occasionally introduce small inconsistencies such as character swaps, timing pauses, or missed dialogue. Additionally, the credit-based pricing may limit experimentation for casual or hobbyist users.