
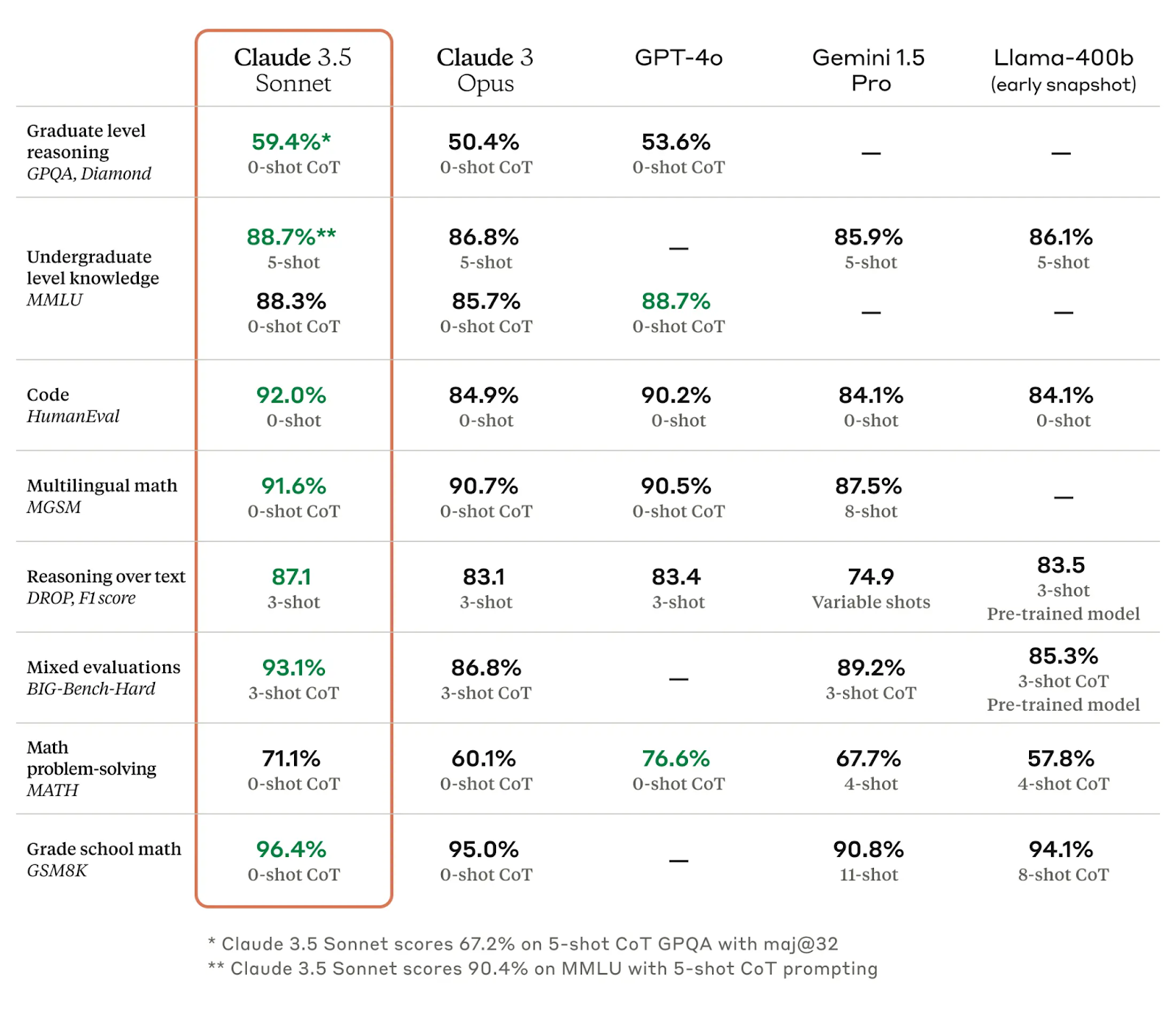

Anthropic has recently released Claude 3.5 Sonnet, a powerful model that outperformed GPT-4o and Gemini 1.5 Pro on several benchmarks reported by Anthropic.

Claude 3.5 Sonnet’s visual reasoning capabilities are especially impressive, and you may want to use them in your development workflow via Claude 3.5 Sonnet’s API.

In this article, I'll give you a step-by-step guide to getting started with Claude 3.5 Sonnet through Anthropic's API.

If you want to get an overview of Claude 3.5 Sonnet, I recommend this article on What Is Claude 3.5 Sonnet.

What Is Claude 3.5 Sonnet?

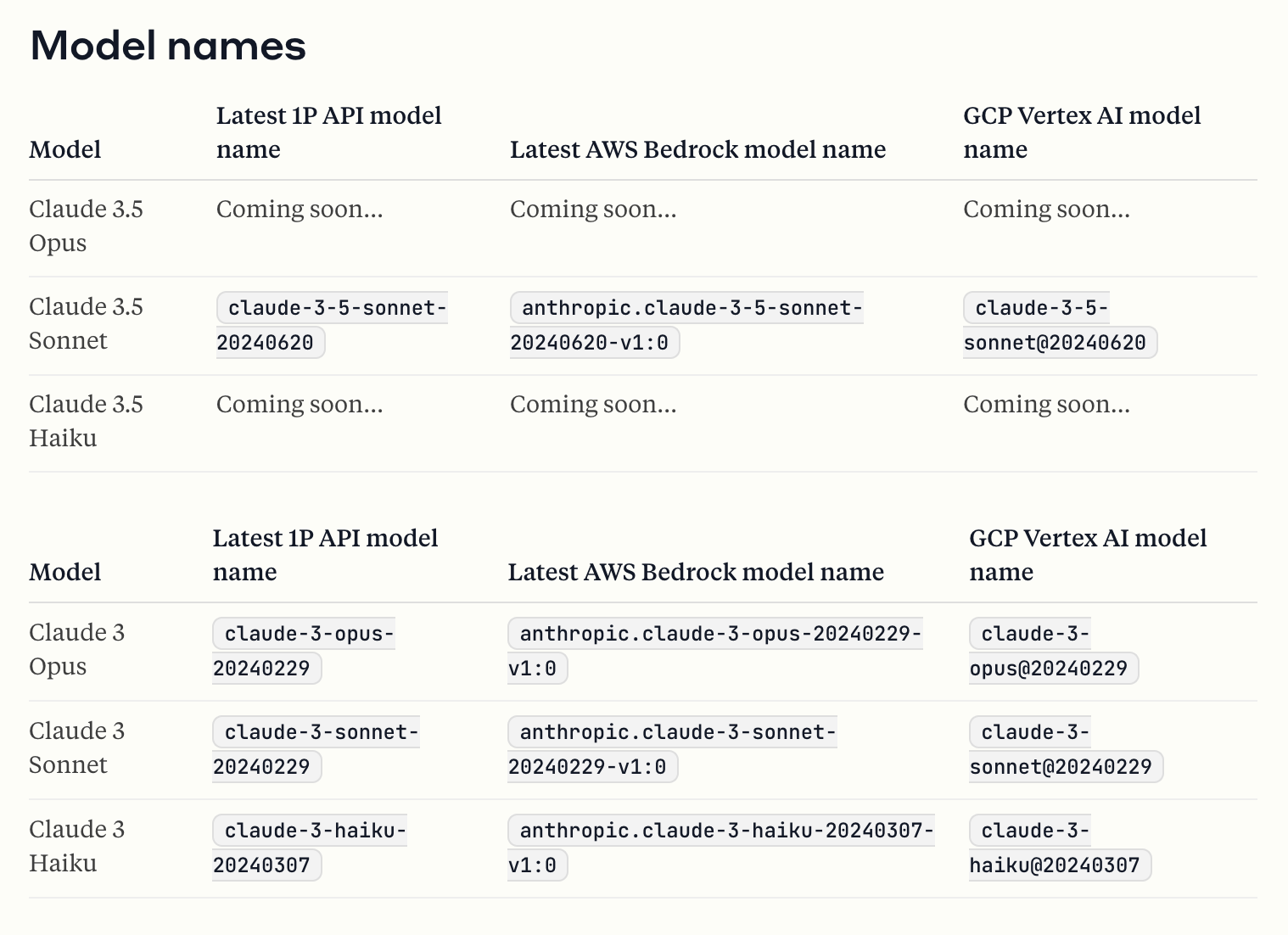

Claude 3.5 Sonnet is a large language model developed by Anthropic. It's part of the larger Claude 3.5 family, and Anthropic has announced plans to release Claude 3.5 Opus and Claude 3.5 Haiku later this year.

Claude 3.5 Sonnet shows notable improvements in image understanding. It has also performed strongly on benchmarks, particularly in coding and reasoning tasks, compared with models such as GPT-4o, Gemini 1.5 Pro, and Llama-400b.

Alongside the model, Anthropic introduced a new Claude.ai feature called Artifacts. Artifacts show generated content such as code snippets and documents in a dedicated window next to the conversation. You can learn more about Artifacts in this introductory article on Claude 3.5 Sonnet.

Claude 3.5 Sonnet API: How to Connect to the Anthropic API

To start using the Claude 3.5 Sonnet API, you need to sign up for an Anthropic API account and set up Anthropic's Python client. Let's take it step by step.

Obtaining API access

Step 1: Sign up

Visit the Anthropic Console and create an account. You'll need to provide basic information and verify your email.

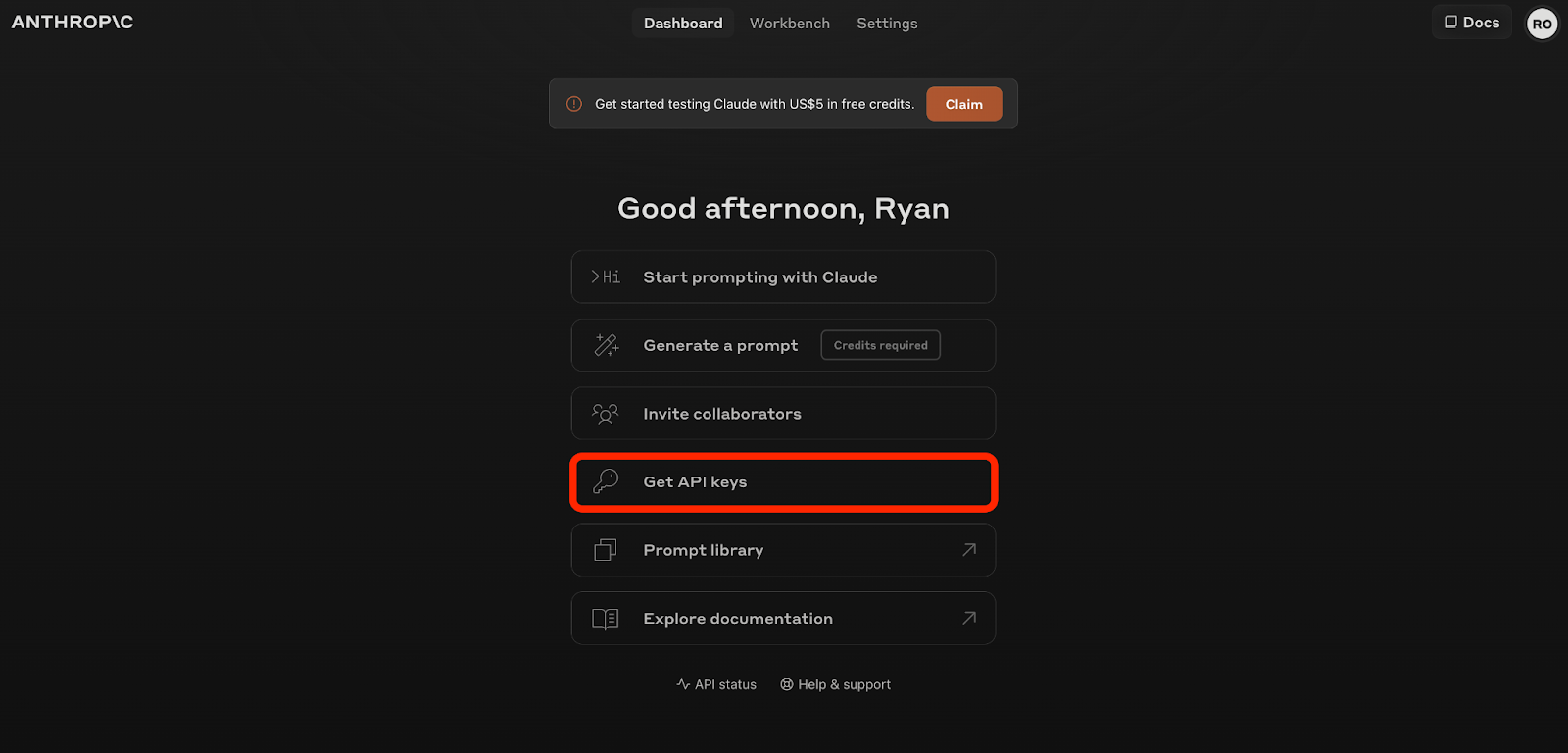

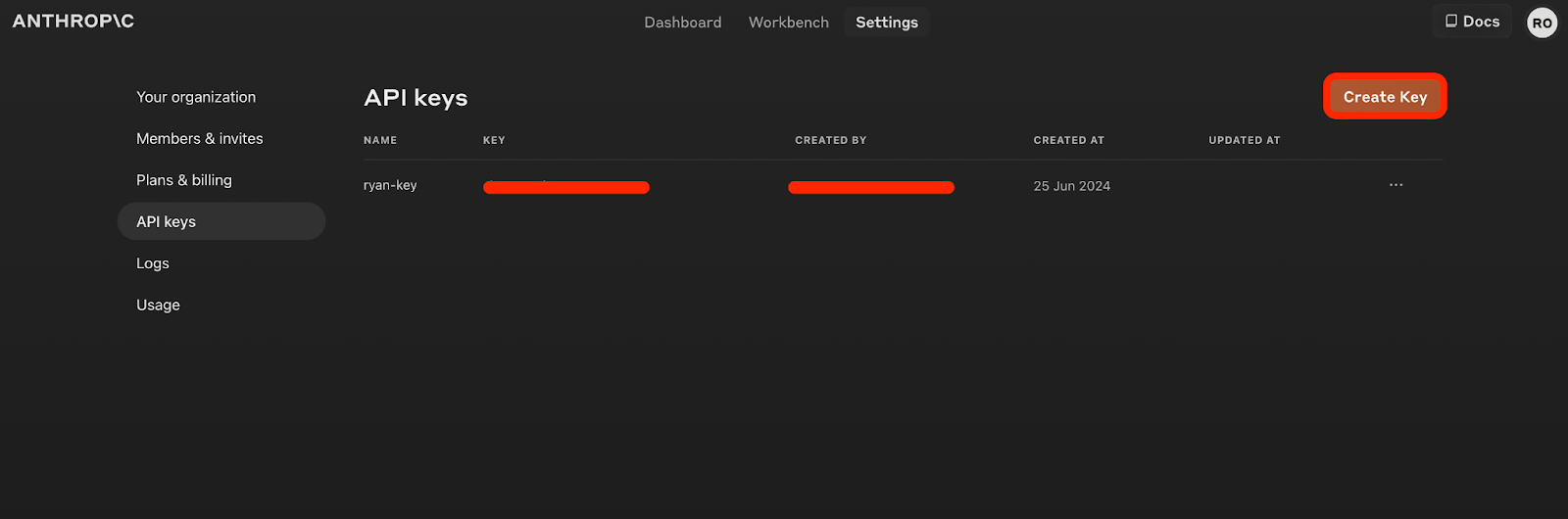

Step 2: API key

Once your account is set up, navigate to the Get API keys section and click Create Key to generate your API key.

Setting up your environment

To initialize Anthropic's client, first install the anthropic Python library:

```bash
pip install anthropic
```

Once the library is installed, you can initialize the client with your API key:

```python
import anthropic

# Create an instance of the Anthropic API client
client = anthropic.Anthropic(api_key="your_api_key_here")
```

With the anthropic library installed and the client initialized with your API key, let's start exploring Claude 3.5 Sonnet's capabilities.
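Hardcoding the key in your source code makes it easy to leak, for example by committing it to Git. If you call anthropic.Anthropic() without an api_key argument, the Python SDK reads the key from the ANTHROPIC_API_KEY environment variable instead. A common pattern is therefore to export the key in your shell and fail fast when it is missing. Below is a minimal sketch of that pattern. The helper require_api_key is hypothetical and is not part of the SDK.

```python
import os


def require_api_key(var_name: str = "ANTHROPIC_API_KEY") -> str:
    """Return the API key from the environment, or fail with a clear message.

    Hypothetical helper (not part of the anthropic SDK): it only checks that
    the variable the SDK reads by default is set and non-empty.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set; run `export {var_name}=...` in your shell first"
        )
    return key


# Usage, once the key is exported in your shell:
#   import anthropic
#   require_api_key()               # readable error if the key is missing
#   client = anthropic.Anthropic()  # the SDK picks up ANTHROPIC_API_KEY itself
```

This keeps the secret out of your code, and a misconfigured machine fails with a readable error at startup rather than an authentication error on the first request.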

Claude API: Messages API vs. Text Completions API

Anthropic's API gives you access to Claude 3.5 Sonnet through the Messages API, which is designed for rich, multi-turn interactions with text and image inputs.

Note that the Text Completions API is now considered legacy. While it provides basic completion capabilities, it lacks the advanced features and flexibility of the Messages API.

Users are encouraged to migrate to the Messages API for enhanced functionality and future support.
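When migrating, the main change is the input format. The legacy Text Completions API took one prompt string with alternating "\n\nHuman:" and "\n\nAssistant:" turns. The Messages API instead takes a list of role-tagged messages plus a separate system parameter. Below is a minimal sketch of a one-off conversion helper. It is hypothetical and not part of the SDK, and it assumes that any text before the first Human turn served as the system prompt.

```python
import re
from typing import Dict, List, Tuple

# Turn markers used by legacy Text Completions prompts
_TURN = re.compile(r"\n\n(Human|Assistant):")


def legacy_prompt_to_messages(prompt: str) -> Tuple[str, List[Dict[str, str]]]:
    """Split a legacy prompt string into (system, messages) for the Messages API.

    Hypothetical migration helper: text before the first turn marker becomes
    the system prompt, and empty turns (such as the trailing "Assistant:" the
    legacy API needed to request a reply) are dropped.
    """
    # With one capture group, re.split yields [preamble, role, text, role, text, ...]
    parts = _TURN.split(prompt)
    system = parts[0].strip()
    messages = []
    for speaker, text in zip(parts[1::2], parts[2::2]):
        role = "user" if speaker == "Human" else "assistant"
        text = text.strip()
        if text:
            messages.append({"role": role, "content": text})
    return system, messages
```

Pass the returned system string to client.messages.create only when it is non-empty, and pass the messages list unchanged.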

At the time of writing, Artifacts are not accessible via the API. To work with Artifacts, use the Claude.ai web interface, which supports editing, referencing, and downloading the content Claude 3.5 Sonnet generates.

Claude 3.5 Sonnet API: Messages API

The Messages API enables you to send a structured list of input messages with text or image content, allowing the model to generate the next message in the conversation.

This API supports both single queries and stateless multi-turn conversations.
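"Stateless" means the API does not remember earlier calls. To continue a conversation, your code must keep the history and resend all of it on every request. Below is a minimal sketch of that bookkeeping. It assumes a send function you supply that returns a response shaped like the API's JSON, or the SDK's response object. The Conversation class and the response_text helper are hypothetical names, not SDK features.

```python
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]


def _field(obj: Any, name: str) -> Any:
    # Works for raw JSON dicts and for the SDK's response objects alike
    return obj[name] if isinstance(obj, dict) else getattr(obj, name)


def response_text(response: Any) -> str:
    """Concatenate the text blocks of a Messages API response."""
    return "".join(
        _field(block, "text")
        for block in _field(response, "content")
        if _field(block, "type") == "text"
    )


class Conversation:
    """Client-side history for a stateless API: every call resends all turns."""

    def __init__(self, send: Callable[[List[Message]], Any]) -> None:
        # `send` receives the full message list and returns a response
        self.send = send
        self.history: List[Message] = []

    def ask(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        try:
            response = self.send(list(self.history))
        except Exception:
            # Drop the unanswered user turn so the history stays consistent
            self.history.pop()
            raise
        reply = response_text(response)
        self.history.append({"role": "assistant", "content": reply})
        return reply


# Real usage would wrap the SDK call, for example:
#   chat = Conversation(lambda msgs: client.messages.create(
#       model="claude-3-5-sonnet-20240620", max_tokens=512, messages=msgs))
#   chat.ask("Can you explain LLMs in plain English?")
```

Because the whole history is sent every time, long conversations cost more input tokens on each turn. Trimming or summarizing old turns is a common follow-up.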

To create a message, call the client.messages.create method from the anthropic Python library. Its required parameters are the model, the messages, and the maximum number of tokens to generate. Further optional settings control the output.
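Below is a minimal sketch of assembling those arguments in one place before calling client.messages.create(**request). The helper build_message_request is hypothetical. Its checks are deliberately simple client-side guards, not a copy of the API's own validation rules.

```python
from typing import Any, Dict, List

VALID_ROLES = {"user", "assistant"}


def build_message_request(
    model: str,
    messages: List[Dict[str, Any]],
    max_tokens: int,
    **optional: Any,
) -> Dict[str, Any]:
    """Assemble keyword arguments for client.messages.create (hypothetical helper).

    model, messages and max_tokens are always included; optional settings
    such as system or temperature are included only when they are not None.
    """
    if not messages:
        raise ValueError("messages must contain at least one message")
    for i, message in enumerate(messages):
        if message.get("role") not in VALID_ROLES:
            raise ValueError(f"message {i}: role must be 'user' or 'assistant'")
        if not message.get("content"):
            raise ValueError(f"message {i}: content must not be empty")
    if not isinstance(max_tokens, int) or max_tokens < 1:
        raise ValueError("max_tokens must be a positive integer")

    request: Dict[str, Any] = {
        "model": model,
        "messages": messages,
        "max_tokens": max_tokens,
    }
    # Leave out unset optional parameters so the API applies its defaults
    request.update({k: v for k, v in optional.items() if v is not None})
    return request
```

For example, build_message_request("claude-3-5-sonnet-20240620", [{"role": "user", "content": "Hello, world"}], 1024, system="Be brief.") returns a dict that can be unpacked straight into client.messages.create.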

Required parameters

Let’s explore the required parameters:

model: The model to use (e.g., "claude-3-5-sonnet-20240620").

messages: A list of input messages. Each message must have a role ("user" or "assistant") and content. The content can be a plain string or a list of content blocks, such as text and images.

max_tokens: The maximum number of tokens to generate before stopping. The model may stop earlier, for example when it reaches a stop sequence.

As of the date of writing this article, these are the models available through Anthropic's API:

Optional parameters

Optional parameters include:

temperature: Controls the randomness of the response. It ranges from 0.0 to 1.0 and defaults to 1.0. Use lower values for analytical tasks and higher values for creative ones.

stop_sequences: Custom text sequences that cause the model to stop generating.

stream: Whether to stream the response incrementally as server-sent events.

system: A system prompt providing context and instructions.

tools: Definitions of tools that the model may use.

top_k: Only sample from the top K options for each subsequent token. Used to remove "long tail" low-probability responses.

top_p: Use nucleus sampling, which samples only from the smallest set of tokens whose cumulative probability reaches the specified threshold.
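To make top_k and top_p concrete, here is a toy illustration of the textbook techniques they are named after, applied to a made-up next-token distribution. This is a conceptual sketch only, not Anthropic's server-side sampling code, and filter_candidates is a hypothetical name.

```python
from typing import Dict, Optional


def filter_candidates(
    probs: Dict[str, float],
    top_k: Optional[int] = None,
    top_p: Optional[float] = None,
) -> Dict[str, float]:
    """Apply top-k and then nucleus (top-p) truncation to a toy distribution."""
    # Rank candidate tokens from most to least likely
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    if top_k is not None:
        # Top-k: keep only the k most likely tokens
        ranked = ranked[:top_k]
    if top_p is not None:
        # Top-p: keep the smallest prefix whose cumulative probability reaches top_p
        kept, cumulative = [], 0.0
        for token, p in ranked:
            kept.append((token, p))
            cumulative += p
            if cumulative >= top_p:
                break
        ranked = kept
    if not ranked:
        raise ValueError("no candidate tokens left after filtering")
    # Renormalize so the surviving probabilities sum to 1 again
    total = sum(p for _, p in ranked)
    return {token: p / total for token, p in ranked}


probs = {"cat": 0.5, "dog": 0.3, "fish": 0.15, "axolotl": 0.05}
print(filter_candidates(probs, top_k=2))    # only "cat" and "dog" survive
print(filter_candidates(probs, top_p=0.9))  # "axolotl" is cut from the long tail
```

Both settings only remove unlikely candidates before sampling. Temperature, by contrast, reshapes the whole distribution, which is why adjusting temperature alone is often enough.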

Claude 3.5 Sonnet API: Use Cases

Now that we understand all the parameters involved, let's explore practical use cases of the Messages API using Python and the anthropic library:

Single message request

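This is how to make a single-message request:

```python
response = client.messages.create(
    model="claude-3-5-sonnet-20240620",
    max_tokens=1024,
    messages=[{"role": "user", "content": "Hello, world"}],
)

# The reply is a list of content blocks; the first one holds the text
print(response.content[0].text)
```

Multi-turn conversation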

Because the API is stateless, a multi-turn conversation means sending the earlier turns back in the messages list, alternating between the user and assistant roles:

```python
response = client.messages.create(
    model="claude-3-5-sonnet-20240620",
    max_tokens=512,
    messages=[
        {"role": "user", "content": "Hello there."},
        {"role": "assistant", "content": "Hi, I'm Claude. How can I help you?"},
        {"role": "user", "content": "Can you explain LLMs in plain English?"},
    ],
)
```

Including image content

Starting with Claude 3 models, you can include image content in your messages.
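An image goes into a content block whose source holds the base64-encoded bytes. The media_type in that block must match the image's real format. Below is a minimal sketch that detects the format from the file's leading "magic" bytes instead of trusting the file extension. It assumes the four formats the API accepts: JPEG, PNG, GIF, and WebP. The helper names are hypothetical.

```python
import base64
from typing import Any, Dict


def detect_media_type(data: bytes) -> str:
    """Guess the image format from its leading bytes (file signature)."""
    if data.startswith(b"\xff\xd8\xff"):
        return "image/jpeg"
    if data.startswith(b"\x89PNG\r\n\x1a\n"):
        return "image/png"
    if data.startswith((b"GIF87a", b"GIF89a")):
        return "image/gif"
    if data[:4] == b"RIFF" and data[8:12] == b"WEBP":
        return "image/webp"
    raise ValueError("unsupported image format (expected JPEG, PNG, GIF or WebP)")


def image_block(data: bytes) -> Dict[str, Any]:
    """Build a base64 image content block whose media_type matches the bytes."""
    return {
        "type": "image",
        "source": {
            "type": "base64",
            "media_type": detect_media_type(data),
            "data": base64.standard_b64encode(data).decode("utf-8"),
        },
    }


# Usage with a local file (the path is just an example):
#   with open("photo.png", "rb") as f:
#       content = [image_block(f.read()), {"type": "text", "text": "What is in this image?"}]
```

Detecting the format this way avoids request errors when, for example, a PNG has been saved with a .jpg extension.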

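```python
import base64

# Read a local JPEG and base64-encode it (replace "image.jpg" with your own file)
with open("image.jpg", "rb") as image_file:
    image_data = base64.standard_b64encode(image_file.read()).decode("utf-8")

response = client.messages.create(
    model="claude-3-5-sonnet-20240620",
    max_tokens=512,
    messages=[
        {
            "role": "user",
            "content": [
                {
                    "type": "image",
                    "source": {
                        "type": "base64",
                        "media_type": "image/jpeg",
                        "data": image_data,
                    },
                },
                {"type": "text", "text": "What is in this image?"},
            ],
        }
    ],
)
```

Using system prompts and stop sequences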

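The system parameter sets the model's role and instructions. The stop_sequences parameter makes it stop as soon as it generates one of the given strings:

```python
response = client.messages.create(
    model="claude-3-5-sonnet-20240620",
    max_tokens=1024,
    messages=[{"role": "user", "content": "Write a short story."}],
    system="You are a creative writing assistant.",
    stop_sequences=["The end."],
    temperature=0.9,
)

# stop_reason is "stop_sequence" if one of the stop sequences was hit
print(response.stop_reason, response.stop_sequence)
```

Tool definitions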

You can define tools for the model to use during interactions. This includes specifying the tool's name, description, and input schema.
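Defining a tool is only half of the loop, because Claude never runs your code itself. When it decides to use a tool, the response's stop_reason is "tool_use" and its content includes tool_use blocks. Your code then runs the matching function and sends the output back in tool_result blocks. Below is a minimal sketch of that dispatch step. It assumes the raw JSON response shape and uses hypothetical names: run_tool_calls, and a get_stock_price handler standing in for your own function.

```python
import json
from typing import Any, Callable, Dict, List


def run_tool_calls(
    response: Dict[str, Any],
    handlers: Dict[str, Callable[..., Any]],
) -> Dict[str, Any]:
    """Run every tool_use block in a response and build the follow-up user message."""
    results: List[Dict[str, Any]] = []
    for block in response["content"]:
        if block["type"] != "tool_use":
            continue  # skip text blocks such as "Let me check that."
        handler = handlers.get(block["name"])
        if handler is None:
            # Report unknown tools back to Claude instead of crashing
            results.append({
                "type": "tool_result",
                "tool_use_id": block["id"],
                "content": f"Unknown tool: {block['name']}",
                "is_error": True,
            })
            continue
        output = handler(**block["input"])
        results.append({
            "type": "tool_result",
            "tool_use_id": block["id"],  # links the result to the request
            "content": json.dumps(output),
        })
    return {"role": "user", "content": results}


# Next request, with the same tools:
#   messages.append({"role": "assistant", "content": response["content"]})
#   messages.append(run_tool_calls(response, {"get_stock_price": get_stock_price}))
```

Sending the assistant turn back unchanged, followed by the tool results, lets Claude see both its own tool request and the answer when it writes the final reply.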

```python
# Define the request parameters
model = "claude-3-5-sonnet-20240620"
messages = [{"role": "user", "content": "What's the S&P 500 at today?"}]
tools = [
    {
        "name": "get_stock_price",
        "description": "Get the current stock price for a given ticker symbol.",
        "input_schema": {
            "type": "object",
            "properties": {
                "ticker": {
                    "type": "string",
                    "description": "The stock ticker symbol, e.g., AAPL for Apple Inc.",
                }
            },
            "required": ["ticker"],
        },
    }
]

# Create a message with the defined parameters (max_tokens is required)
response = client.messages.create(
    model=model,
    max_tokens=1024,
    messages=messages,
    tools=tools,
)
```

Conclusion

In this guide, we've explored how to connect to Anthropic’s API in order to use the Claude 3.5 Sonnet model. We’ve covered key aspects such as setup, authentication, and using the Messages API for various tasks.

Whether you're building a chatbot, a content generator, or any other AI-powered application, Claude 3.5 Sonnet might be a good choice.

To learn more about Claude Sonnet and how it compares to ChatGPT, check out the articles below:

FAQs

What are the main differences between the legacy Text Completions API and the newer Messages API in Anthropic's API?

The Text Completions API provides basic text completion functionality. The Messages API offers more advanced features, such as multi-turn conversations, image inputs, system prompts, and tools. It is designed for richer, conversational interactions, while the Text Completions API is a simpler tool for basic text generation. Anthropic recommends using the Messages API for new projects and migrating existing projects from the Text Completions API.

Can I use Artifacts directly through the Claude 3.5 Sonnet API?

As of now, Artifacts are not directly accessible via the API. You can interact with Artifacts through the web interface on Claude.ai, which supports editing, referencing, and downloading content generated by Claude. However, Anthropic may add API support for Artifacts in the future.

Ryan is a lead data scientist specialising in building AI applications using LLMs. He is a PhD candidate in Natural Language Processing and Knowledge Graphs at Imperial College London, where he also completed his Master’s degree in Computer Science. Outside of data science, he writes a weekly Substack newsletter, The Limitless Playbook, where he shares one actionable idea from the world's top thinkers and occasionally writes about core AI concepts.