
In the recent past, computers functioned like efficient but literal librarians. If you searched for "giant robot battles," they would only find titles with those exact words. A perfect match like "Kaiju Mech Warfare" would be overlooked. Computers recognised words but missed the music—they lacked understanding, nuance, and context. This was the keyword search era, which served as our best option for many years.

Then came a revolution. Drawing inspiration from the human brain, artificial intelligence developed a new way of learning. Rather than simply memorising words, AI began comprehending relationships between them. It understood that "king" relates to "man" as "queen" relates to "woman." It recognised that "happy," "joyful," and "ecstatic" share an emotional territory, while "sombre" exists in a completely different realm.

How did AI accomplish this? By creating a map of meaning.

What is Vector Search? The Map of Meaning

Imagine a vast, multi-dimensional galaxy where every concept—a word, a sentence, an image, a song—is a star. In this galaxy, stars with similar meanings cluster together, while unrelated ones exist light-years apart.

A vector embedding is simply the coordinate of a star in this galaxy. It's a list of numbers (e.g., [0.12, -0.45, 0.89, ...]) that precisely locates a concept on this map of meaning.

Vector search is the revolutionary ability to navigate this map. When you ask, "a film about an orphaned boy who discovers he's a wizard," we don't hunt for those exact keywords. Instead, we:

- Find the coordinate (the vector) for your question on the map.

- Locate the nearest neighbouring stars (our movie plots).

The closest stars represent the most semantically similar results. This is how AI evolved from a literal librarian to a wise sage that understands what you actually mean.
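To make the map metaphor concrete, here is a minimal sketch of nearest-neighbour lookup by cosine similarity. The three-dimensional vectors and the titles are made up for illustration; real embeddings come from a model and have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy "stars": title -> hand-picked 3-D coordinate.
catalog = {
    "Young wizard at a school of magic": [0.9, 0.1, 0.0],
    "Giant robots fight sea monsters": [0.1, 0.9, 0.1],
    "Courtroom drama about a jury": [0.0, 0.1, 0.9],
}

def nearest(query_vector, items, k=1):
    """Return the k titles whose vectors are closest to query_vector."""
    ranked = sorted(
        items,
        key=lambda title: cosine_similarity(query_vector, items[title]),
        reverse=True,
    )
    return ranked[:k]

# A made-up query vector that points roughly toward the "wizard" star.
query = [0.8, 0.2, 0.1]
print(nearest(query, catalog))
```

A vector database does the same thing at scale, using an index so that it does not have to compare the query against every stored vector.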

Why is This So Important?

Searching by meaning rather than keywords powers modern AI applications across industries. This capability enables:

- Netflix to recommend unfamiliar movies that share a similar "vibe" with your viewing history.

- Spotify to curate playlists featuring songs that feel like your favourites, regardless of their genre or era.

- Chatbots and LLMs to grasp the intent behind your questions, delivering relevant, context-aware responses.

- E-commerce sites to display products visually similar to images you upload.

Vector search bridges human intent and digital data, fundamentally transforming how we interact with information. This technology makes digital systems feel less mechanical and more like intuitive collaborators.

Let’s Learn: Building Your First Vector Search Application With MongoDB

Welcome to the new frontier of search! MongoDB introduced Vector Search in Atlas. This lets you store and query vector embeddings (like those from text, images, or audio) directly inside MongoDB—no need for a separate vector database like Pinecone or Weaviate.

In this tutorial, we will build a simple but powerful shoe recommendation engine using Python and MongoDB Atlas. You'll learn how to transform text into meaningful numerical representations (vectors) and then use Atlas's built-in Vector Search to find products with similar descriptions.

What You Will Learn

- How to connect to a MongoDB Atlas cluster securely using Python's pymongo library.

- How to create and insert documents containing vector embeddings.

- How to generate vector embeddings from text using the sentence-transformers library.

- How to build a Vector Search index in the MongoDB Atlas UI.

- How to perform a semantic search query using the $vectorSearch aggregation stage.

Prerequisites

- A free MongoDB Atlas account

- Python 3.9+ installed locally

- Install dependencies:

pip install pymongo sentence-transformers numpy

Step 1: Create a MongoDB Atlas cluster

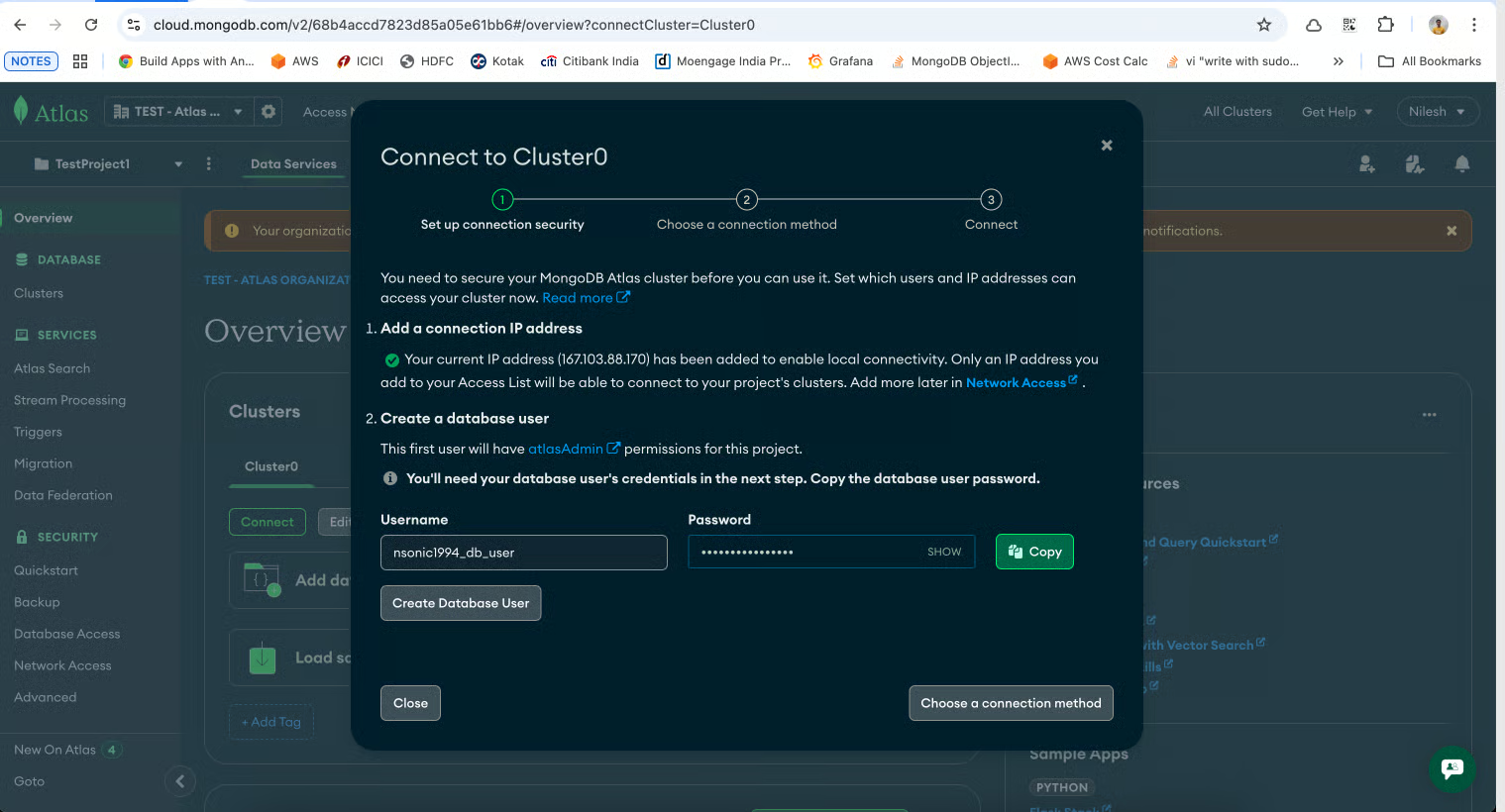

- Sign in to Atlas.

- Create a free cluster. Create a user and copy the username and password.

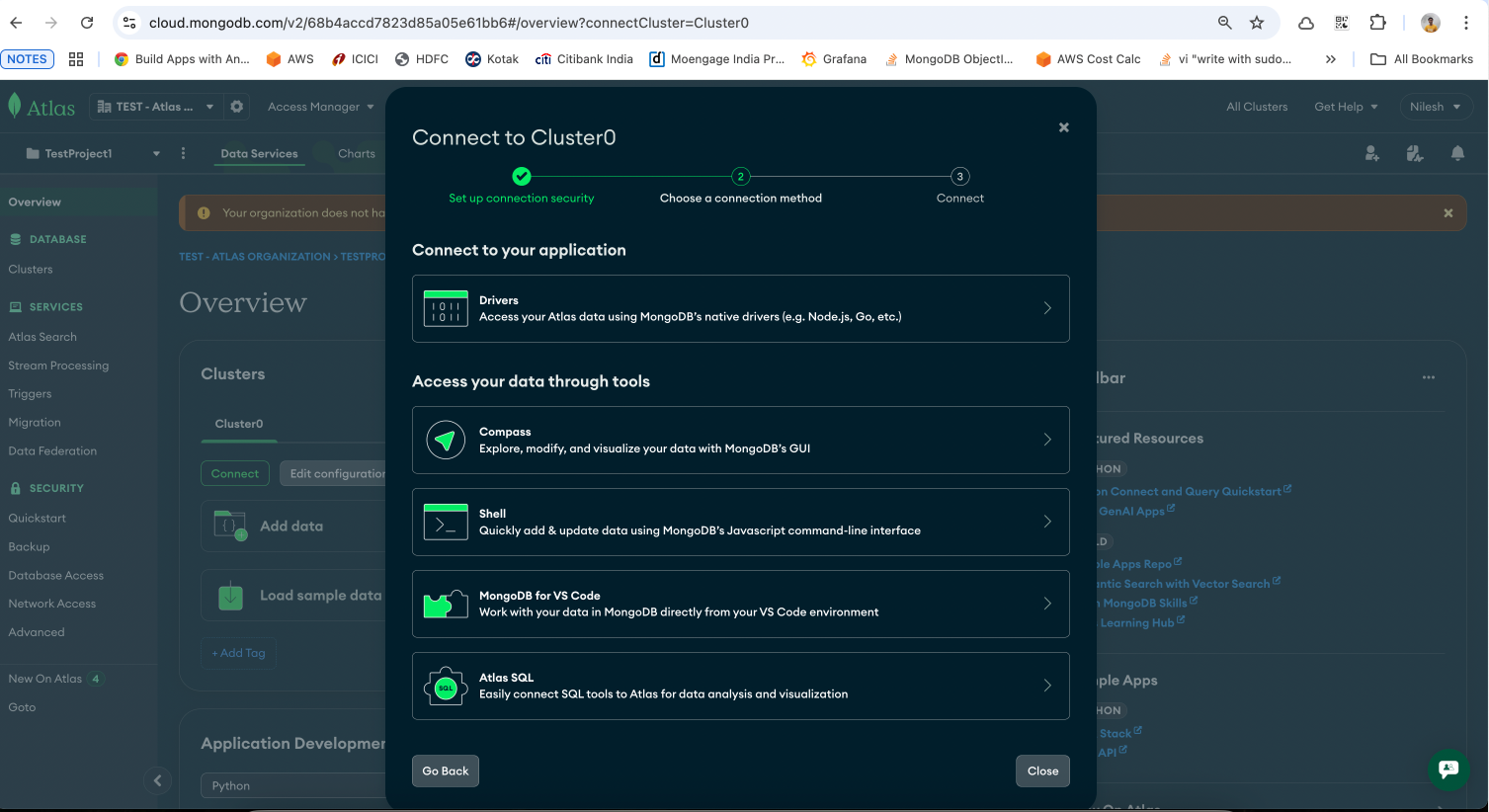

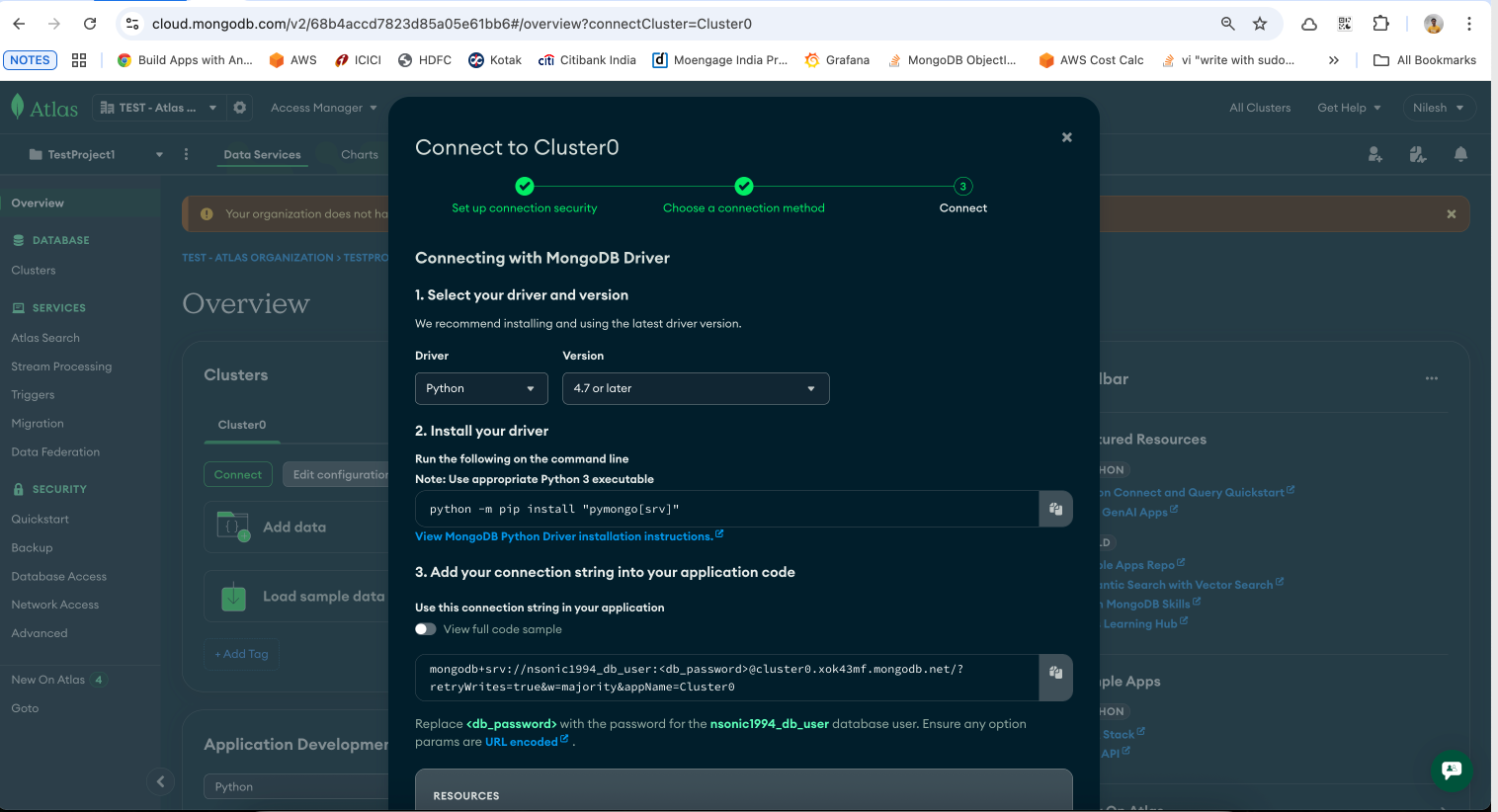

- After creating the user, click Choose a connection method. You will see the first screen below. Click on Drivers and go to the second screen. Select Python as your driver and the appropriate version. Copy the connection string.

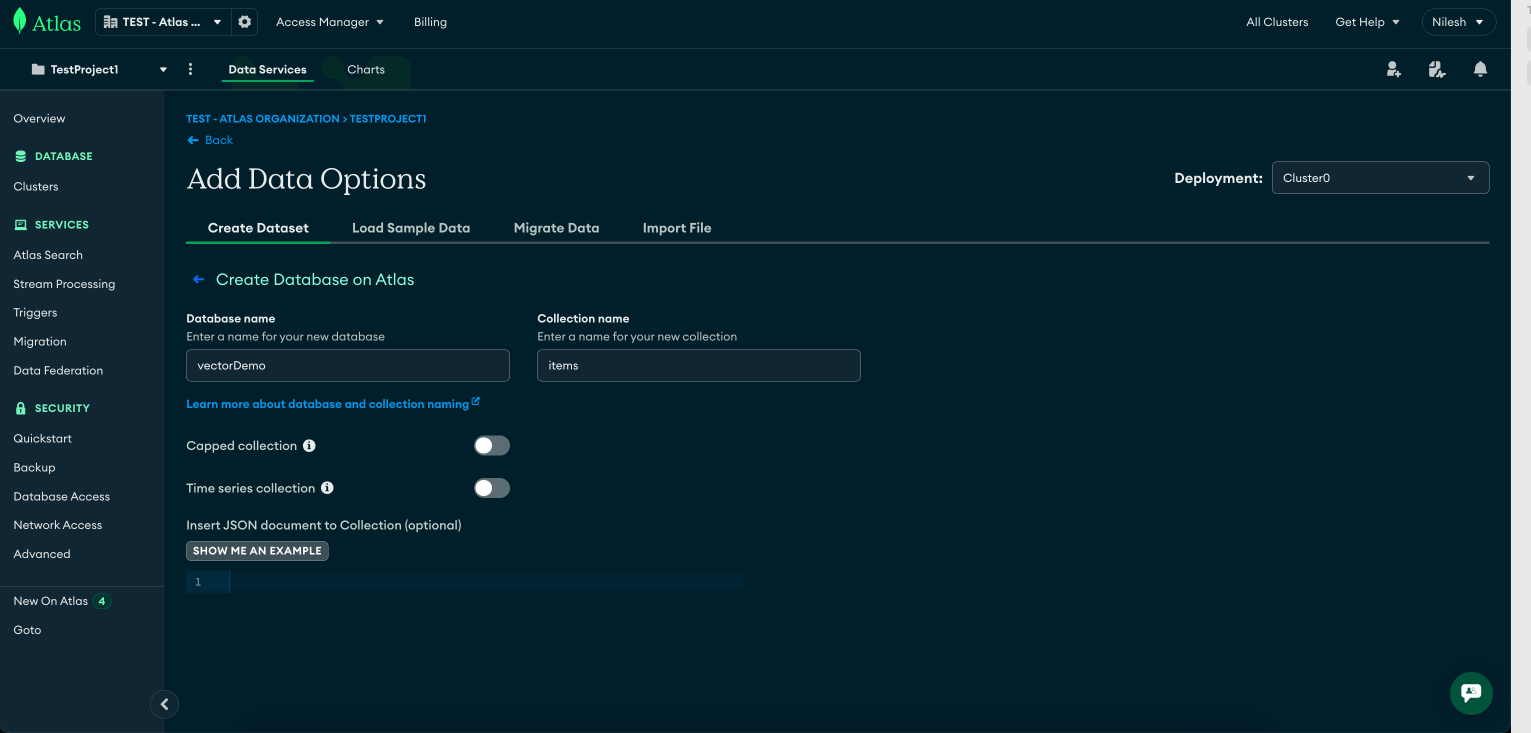

- Go to Database Deployments → Click Browse Collections → Create a new database:

  - Database name: vectorDemo

  - Collection name: items

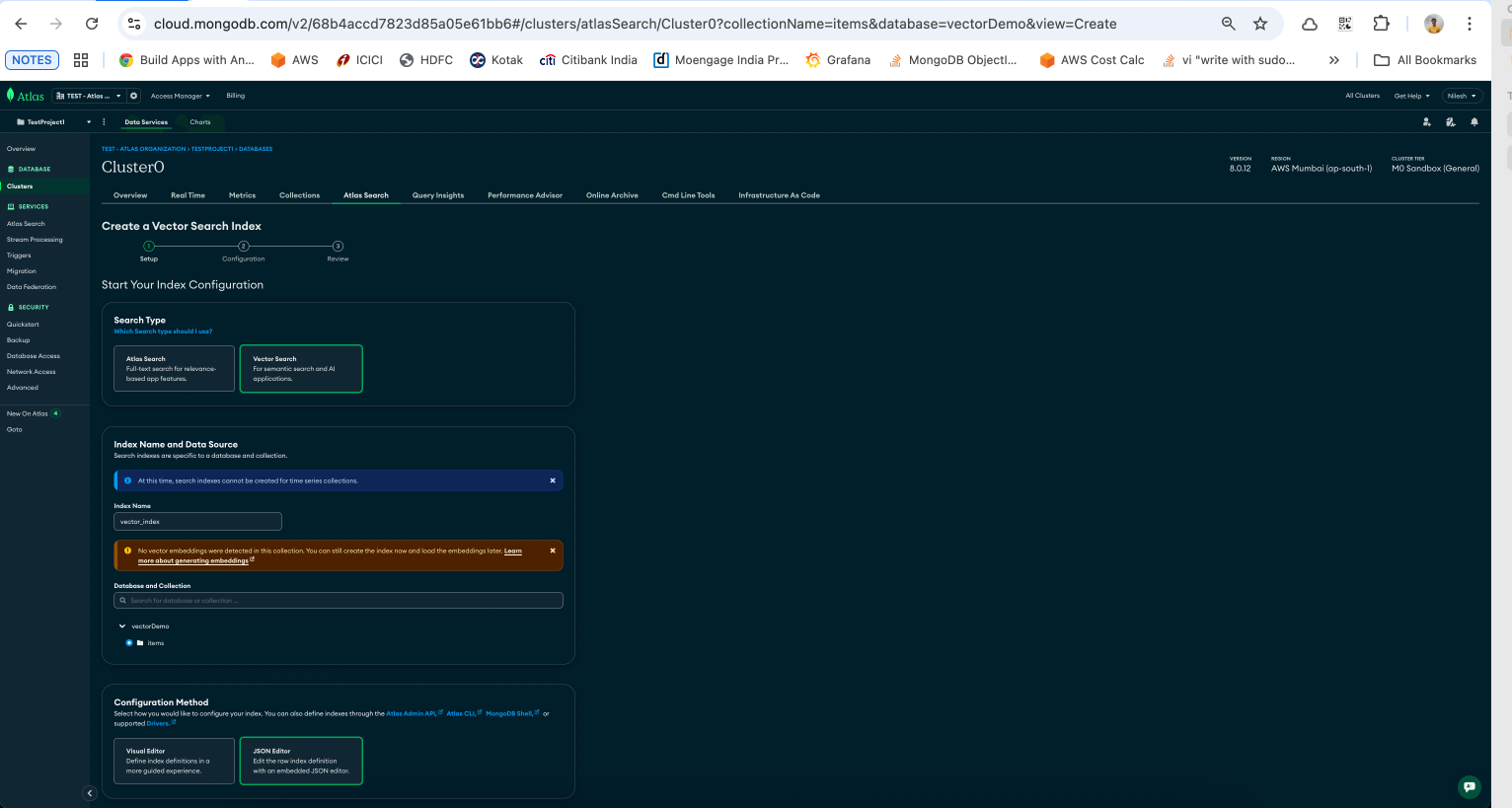

- Enable Vector Search:

  - In the MongoDB Atlas UI, navigate to Vector Search Indexes → Create a new index for items.

  - Name of index: “vector_index”

  - Use this definition:

{
  "fields": [
    {
      "type": "vector",
      "path": "embedding",
      "numDimensions": 768,
      "similarity": "cosine"
    }
  ]
}

Note: 768 is the embedding size we’ll use with the sentence-transformers model.
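Because numDimensions has to agree with the model, it can help to generate the definition from a single constant instead of typing the number in two places. The helper below is a sketch and not part of the Atlas tooling: both function names are hypothetical, and the JSON it prints is what you would paste into the Atlas index editor.

```python
import json

ALLOWED_SIMILARITIES = {"cosine", "dotProduct", "euclidean"}

def build_vector_index_definition(path, num_dimensions, similarity="cosine"):
    """Build the index definition dict used in this tutorial (hypothetical helper)."""
    if similarity not in ALLOWED_SIMILARITIES:
        raise ValueError(f"unsupported similarity: {similarity}")
    if num_dimensions <= 0:
        raise ValueError("num_dimensions must be positive")
    return {
        "fields": [
            {
                "type": "vector",
                "path": path,
                "numDimensions": num_dimensions,
                "similarity": similarity,
            }
        ]
    }

def check_vector_matches_index(vector, definition):
    """Raise if a vector's length differs from the index's numDimensions."""
    expected = definition["fields"][0]["numDimensions"]
    if len(vector) != expected:
        raise ValueError(f"vector has {len(vector)} values, index expects {expected}")

definition = build_vector_index_definition("embedding", 768)
print(json.dumps(definition, indent=2))

# A placeholder vector of the right length passes the check silently.
check_vector_matches_index([0.0] * 768, definition)
```

Running the check on one real embedding before inserting documents catches a model and index mismatch early.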

Understanding the index definition:

- "type": "vector": Marks this field as a vector field for Atlas to index.

- "path": "embedding": Crucially, this points to the field in our documents that contains the vectors.

- "numDimensions": 768: This must match the dimension of the vectors our model produces.

- "similarity": "cosine": The function used to measure the "closeness" of vectors. It is a common choice for text-based similarity.

Step 2: Connect Python to MongoDB Atlas

Get your MongoDB Atlas connection string:

- In MongoDB Atlas, click Connect → Drivers → Python.

- Replace <username>, <password>, and <cluster-url> in the connection string with your own values.
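If the username or password contains characters such as @, : or /, they need to be percent-encoded before they go into the connection string. A minimal sketch using only the standard library; the function name, the environment variable names and the example host are placeholders, not values that Atlas defines.

```python
import os
from urllib.parse import quote_plus

def build_atlas_uri(username, password, cluster_host, database="vectorDemo"):
    """Assemble a mongodb+srv URI with percent-encoded credentials."""
    user = quote_plus(username)
    pwd = quote_plus(password)
    return (
        f"mongodb+srv://{user}:{pwd}@{cluster_host}/{database}"
        "?retryWrites=true&w=majority"
    )

# Hypothetical variable names; the fallbacks are dummy values for a dry run.
uri = build_atlas_uri(
    os.environ.get("ATLAS_USER", "demo_user"),
    os.environ.get("ATLAS_PASSWORD", "p@ss:word/1"),
    os.environ.get("ATLAS_HOST", "cluster0.example.mongodb.net"),
)

# Print only the part after the credentials so the password never reaches the log.
print(uri.split("@")[-1])
```

Reading credentials from the environment also keeps them out of source control.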

from pymongo import MongoClient

# Replace with your Atlas connection string
uri = "mongodb+srv://<username>:<password>@<cluster-url>/vectorDemo?retryWrites=true&w=majority"

client = MongoClient(uri)
db = client["vectorDemo"]
collection = db["items"]

# MongoClient connects lazily, so ping the server to confirm the connection works
client.admin.command("ping")
print("Connected to MongoDB Atlas")

# Clear existing documents so the script can be re-run safely
collection.delete_many({})
print("Cleared collection.")

Step 3: Generate embeddings with Python

We’ll use SentenceTransformers to convert text into embeddings.

from sentence_transformers import SentenceTransformer

# Choose model: higher-quality for search -> "sentence-transformers/all-mpnet-base-v2" (768-d)
# If you need faster/smaller: "all-MiniLM-L6-v2" (384-d)
MODEL_NAME = "sentence-transformers/all-mpnet-base-v2"
# MODEL_NAME = "all-MiniLM-L6-v2"

# Note: if you use all-mpnet-base-v2, your Atlas vector index must use numDimensions: 768
# If you use all-MiniLM-L6-v2, index numDimensions: 384
model = SentenceTransformer(MODEL_NAME)

def embed_text(text: str) -> list[float]:
    # encode() returns a NumPy array; convert it to a plain list so pymongo can store it
    return model.encode(text).tolist()

Step 4: Insert sample documents with embeddings

Let’s store some product descriptions in MongoDB with their embeddings.

# Each product includes name, description, list of features, use_cases and tags.
products = [
    # Marathon / long-distance running (explicit!)
    {
        "name": "MarathonPro 3000",
        "description": "Designed specifically for marathon and ultra distance running: maximum cushioning, breathable knit upper, responsive midsole and engineered heel support for long-run comfort.",
        "features": ["marathon", "long-distance", "max cushioning", "breathable", "support"],
        "category": "shoes",
        "use_cases": ["marathon", "long-distance running", "road running"],
        "tags": ["running", "endurance", "comfort"]
    },
    {
        "name": "Enduro LongRun",
        "description": "Lightweight long-run trainer optimized for marathon pacing with added cushioning, stable platform, and enhanced forefoot energy return for hours of comfortable running.",
        "features": ["long-run", "lightweight", "energy return", "cushioning"],
        "category": "shoes",
        "use_cases": ["marathon", "road running"],
        "tags": ["running", "marathon", "comfort"]
    },
    # Road running / neutral
    {
        "name": "Red Road Runner",
        "description": "Neutral road running shoe for daily training: breathable mesh, moderate cushioning and responsive ride; suitable for tempo runs and long easy runs.",
        "features": ["road running", "breathable", "moderate cushioning"],
        "category": "shoes",
        "use_cases": ["training", "road running", "tempo runs"],
        "tags": ["running", "training"]
    },
    # Trail running (explicit trail)
    {
        "name": "TrailMaster RidgeGrip",
        "description": "Trail-running shoe with aggressive outsole, rock plate for protection, waterproof upper, designed for technical trails and rugged terrain.",
        "features": ["trail", "high grip", "rock plate", "waterproof"],
        "category": "shoes",
        "use_cases": ["trail running", "hiking", "off-road"],
        "tags": ["trail", "outdoors"]
    },
    # Tennis & court
    {
        "name": "CourtPro Tennis",
        "description": "Court shoe with lateral support, non-marking sole and reinforced toe; built for tennis footwork and short intense bursts, not for long-distance running.",
        "features": ["court", "lateral support", "non-marking sole"],
        "category": "shoes",
        "use_cases": ["tennis", "court sports"],
        "tags": ["tennis", "sport"]
    },
    # Formal
    {
        "name": "Oxford Leather Formal",
        "description": "Classic leather formal shoe for office and events: polished leather, sturdy sole, refined silhouette.",
        "features": ["formal", "leather", "office"],
        "category": "shoes",
        "use_cases": ["formal wear", "office"],
        "tags": ["formal", "leather"]
    },
    # Hiking
    {
        "name": "Alpine Trek Boots",
        "description": "High-ankle hiking boots with waterproof membrane, ankle support and durable lugged outsole for mountain treks.",
        "features": ["hiking", "waterproof", "ankle support", "lugged outsole"],
        "category": "shoes",
        "use_cases": ["hiking", "trekking", "outdoor"],
        "tags": ["outdoors", "hiking"]
    },
    # Casual sneakers
    {
        "name": "Classic Canvas Sneaker",
        "description": "Casual everyday canvas sneaker with rubber sole, comfortable fit for daily wear and walking.",
        "features": ["casual", "canvas", "everyday"],
        "category": "shoes",
        "use_cases": ["casual", "walking"],
        "tags": ["casual"]
    },
    # Cross-trainer
    {
        "name": "CrossFit Trainer",
        "description": "Stable cross-trainer shoe engineered for gym workouts, short sprints, and lateral movements; flexible yet supportive.",
        "features": ["cross-train", "stable", "gym"],
        "category": "shoes",
        "use_cases": ["gym", "cross-training"],
        "tags": ["training", "gym"]
    },
    # Cleats
    {
        "name": "Pro Soccer Cleats",
        "description": "Low-profile football/soccer cleats with studded outsole; optimized for grip on grass and agility.",
        "features": ["cleats", "studs", "agility"],
        "category": "shoes",
        "use_cases": ["soccer", "football"],
        "tags": ["sports", "cleats"]
    }
]

# ---------- Create 'canonical_text' to improve embedding quality for better scoring ----------
for p in products:
    # join features and others into one rich text blob
    features = ", ".join(p["features"])
    uses = ", ".join(p["use_cases"])
    tags = ", ".join(p["tags"])
    canonical = f"{p['name']}. {p['description']}. Features: {features}. Use cases: {uses}. Tags: {tags}."
    p["canonical_text"] = canonical

# ---------- Embed canonical_text ----------
print("Embedding items...")
for p in products:
    p["embedding"] = embed_text(p["canonical_text"])

# ---------- Insert into MongoDB ----------
collection.insert_many(products)

By using insert_many, we perform a single, efficient bulk write operation to our database.
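The embedding loop above calls the model once per product. For larger catalogues it is usually cheaper to embed in batches, since SentenceTransformer.encode also accepts a list of strings. The sketch below keeps the batching logic separate from the model by taking the encoder as a parameter. embed_in_batches and fake_encode are hypothetical names, and fake_encode only stands in for model.encode so the example runs without downloading a model.

```python
def embed_in_batches(texts, encode, batch_size=32):
    """Embed texts in chunks; encode maps a list of strings to a list of vectors."""
    embeddings = []
    for start in range(0, len(texts), batch_size):
        batch = texts[start:start + batch_size]
        # With the real model this would be: model.encode(batch).tolist()
        embeddings.extend(encode(batch))
    return embeddings

def fake_encode(batch):
    """Stand-in encoder: returns a 2-value vector per text (not a real embedding)."""
    return [[float(len(text)), float(text.count(" "))] for text in batch]

texts = ["trail shoe", "formal leather shoe", "marathon trainer"]
vectors = embed_in_batches(texts, fake_encode, batch_size=2)
print(len(vectors), vectors[0])
```

With the tutorial's model, the call would look like embed_in_batches(texts, lambda batch: model.encode(batch).tolist()).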

Step 5: Perform your first vector search

It's time for the final, most exciting step! We will use MongoDB's aggregation framework and the $vectorSearch stage to find the shoes that best match a natural-language query.

query_text = "25k long running best shoes"
query_embedding = embed_text(query_text)

pipeline = [
    {
        "$vectorSearch": {
            "index": "vector_index",  # Use the vector search index you created
            "path": "embedding",
            "queryVector": query_embedding,
            "numCandidates": 100,
            "limit": 3
        }
    },
    {
        "$project": {
            "name": 1,
            "description": 1,
            "score": {"$meta": "vectorSearchScore"}
        }
    }
]

results = collection.aggregate(pipeline)

print(f"\nSearch results for: '{query_text}'\n")
for r in results:
    print(f"- {r['name']} (score: {r['score']:.4f})")

Dissecting the $vectorSearch pipeline:

- $vectorSearch: This is the core stage. It compares our queryVector against the indexed embedding field, considering up to numCandidates nearest neighbours and returning the top limit matches.

- $project: This stage reshapes the output. We include name and description and, most importantly, add a score field using $meta: "vectorSearchScore". Fields that are not listed are dropped, except _id, which is returned unless you add "_id": 0 to the stage. This score, from 0 to 1, indicates how similar the result is to our query.
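For cosine similarity, the Atlas documentation describes the reported score as a rescaling of the raw cosine value, score = (1 + cosine) / 2, which is why it falls between 0 and 1. Assuming that formula, the raw cosine can be recovered as sketched below; the function names are just for illustration.

```python
def cosine_to_score(cosine):
    """Map a raw cosine in [-1, 1] to a score in [0, 1]."""
    return (1.0 + cosine) / 2.0

def score_to_cosine(score):
    """Invert the rescaling to get the raw cosine back."""
    return 2.0 * score - 1.0

print(round(score_to_cosine(0.7980), 4))  # 0.596
```

So a score of about 0.80 corresponds to a raw cosine of about 0.60, and a score of 0.5 means the two vectors are orthogonal.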

Expected output

If everything is set up correctly, you’ll see something like this:

Search results for: '25k long running best shoes'

- MarathonPro 3000 (score: 0.7980)

- Red Road Runner (score: 0.7479)

- Enduro LongRun (score: 0.7389)

Notice that “MarathonPro 3000” comes out on top, exactly what we’d expect.
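To try other queries without repeating the pipeline, the search can be wrapped in a small function. This is a sketch: semantic_search is a hypothetical helper that takes the collection and the embedding function as arguments, and the stub collection at the bottom exists only so the example runs without a database.

```python
def semantic_search(collection, embed, query_text, limit=3, num_candidates=100,
                    index_name="vector_index", path="embedding"):
    """Run a $vectorSearch query and return (name, score) pairs."""
    pipeline = [
        {
            "$vectorSearch": {
                "index": index_name,
                "path": path,
                "queryVector": embed(query_text),
                "numCandidates": num_candidates,
                "limit": limit,
            }
        },
        {
            "$project": {
                "_id": 0,
                "name": 1,
                "score": {"$meta": "vectorSearchScore"},
            }
        },
    ]
    return [(doc["name"], doc["score"]) for doc in collection.aggregate(pipeline)]


class StubCollection:
    """Stand-in for a pymongo collection; records the pipeline and returns canned rows."""

    def __init__(self, rows):
        self.rows = rows
        self.last_pipeline = None

    def aggregate(self, pipeline):
        self.last_pipeline = pipeline
        return iter(self.rows)


stub = StubCollection([{"name": "MarathonPro 3000", "score": 0.798}])
hits = semantic_search(stub, lambda text: [0.0] * 768, "shoes for a long run")
print(hits)
```

With the objects from this tutorial, the call would be semantic_search(collection, embed_text, "waterproof boots for mountain hikes").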

Congratulations! You've successfully built a vector search application from scratch. You've learned how to connect to a database, create and insert data, generate vector embeddings, and leverage the power of MongoDB Atlas to find information in a truly intelligent way. Welcome to the future of data interaction.

Conclusion

In this tutorial, you successfully learned how to:

- Connect to MongoDB Atlas using Python and pymongo, forming the foundation for database-driven applications.

- Create embeddings (text-only) from a realistic product catalog using high-quality models like all-mpnet-base-v2, then store them in MongoDB with proper normalisation.

- Build a rich, structured dataset using canonical_text (name, description, features, use cases, tags), which improves embedding relevancy.

- Perform vector similarity searches with MongoDB’s $vectorSearch aggregation stage to find semantically similar products based on natural-language queries.

Next Steps (Try Yourself)

- Store user reviews and build a semantic search engine.

- Experiment with different similarity metrics (dotProduct, euclidean).
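As a starting point for the similarity-metric exercise, this sketch computes the three metrics on a pair of made-up vectors. It shows the raw mathematical values only; Atlas rescales them into its own score, so the numbers will not match vectorSearchScore directly.

```python
import math

def dot_product(a, b):
    return sum(x * y for x, y in zip(a, b))

def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_similarity(a, b):
    norms = math.sqrt(dot_product(a, a)) * math.sqrt(dot_product(b, b))
    return dot_product(a, b) / norms

a = [3.0, 4.0]
b = [6.0, 8.0]  # same direction as a, twice the length

print(cosine_similarity(a, b))   # compares direction only
print(dot_product(a, b))         # sensitive to direction and magnitude
print(euclidean_distance(a, b))  # straight-line distance between the points
```

Cosine ignores the difference in length, while the other two do not. When embeddings are normalised to unit length, all three produce the same ranking.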

FAQs

Why is canonical_text richer than just the product description?

canonical_text includes features, use cases, and tags to provide better context for embeddings—helping the model capture user intent more accurately in vector search.
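As an illustration, the same idea can be written as a function. This variant is a sketch rather than the tutorial's exact code: build_canonical_text is a hypothetical name, and it strips the description's trailing period so the joined text does not contain a doubled full stop.

```python
def build_canonical_text(product):
    """Join name, description, features, use cases and tags into one string."""
    description = product["description"].rstrip(". ")
    parts = [
        product["name"],
        description,
        "Features: " + ", ".join(product["features"]),
        "Use cases: " + ", ".join(product["use_cases"]),
        "Tags: " + ", ".join(product["tags"]),
    ]
    return ". ".join(parts) + "."

sample = {
    "name": "Classic Canvas Sneaker",
    "description": "Casual everyday canvas sneaker with rubber sole.",
    "features": ["casual", "canvas"],
    "use_cases": ["walking"],
    "tags": ["casual"],
}
print(build_canonical_text(sample))
```

Changing the canonical text changes the embeddings, so the stored documents need to be re-embedded after any edit to this function.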

Why did I choose all-mpnet-base-v2 over MiniLM or CLIP?

all-mpnet-base-v2 (768-dim) offers stronger semantic representations for text-only similarity compared to lightweight (384-dim) models. CLIP excels in multimodal tasks but is less precise for nuanced text-only queries.

Can this approach scale to large datasets?

Yes! MongoDB Atlas supports vector indexes with millions of documents. For high-scale use, tuning query parameters (e.g., numCandidates) and applying any re-ranking only to the top-N results keeps performance strong.
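As a rough illustration of tuning numCandidates, the sketch below derives it from limit. The multiplier of 20 follows a rule of thumb given in the Atlas documentation, but treat it as an assumption to benchmark on your own data. The cap of 10,000 is the documented maximum at the time of writing, so verify it against the current documentation. The helper name is hypothetical.

```python
def vector_search_stage(query_vector, limit, candidates_per_result=20,
                        max_candidates=10_000, index_name="vector_index",
                        path="embedding"):
    """Build a $vectorSearch stage whose numCandidates scales with limit."""
    num_candidates = min(max(limit, limit * candidates_per_result), max_candidates)
    return {
        "$vectorSearch": {
            "index": index_name,
            "path": path,
            "queryVector": query_vector,
            "numCandidates": num_candidates,
            "limit": limit,
        }
    }

stage = vector_search_stage([0.0] * 768, limit=5)
print(stage["$vectorSearch"]["numCandidates"])  # 100
```

A larger numCandidates generally improves recall at the cost of latency, so it is worth measuring both when changing the multiplier.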

What if I want multimodal (text + image) search next?

You can upgrade to a multimodal embedding model (like CLIP or open-source alternatives), normalize embeddings, store them similarly, then use the same vector search + re-ranking strategy for mixed media search.

How do I know which model dimension to use?

Higher dimensions (e.g., 512, 768, 1024, 2048) can capture more semantic detail but require more storage and compute. Use smaller models (384) for speed or experimentation, and upgrade to 768 when precision matters.
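A back-of-the-envelope calculation shows the storage side of the trade-off. It counts only the raw vector values, at an assumed 4 bytes per value (32-bit floats). The actual footprint in MongoDB depends on how the vectors are encoded and indexed, so treat the output as a relative comparison rather than a sizing guide.

```python
def raw_vector_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Raw size of the vector values alone, ignoring any storage overhead."""
    return num_vectors * dimensions * bytes_per_value

for dims in (384, 768):
    gib = raw_vector_bytes(1_000_000, dims) / 1024 ** 3
    print(f"{dims} dimensions, 1M vectors: {gib:.2f} GiB")
```

Doubling the dimension doubles the raw size, which is the main reason to start with a smaller model while experimenting.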

Can I combine vector search with full-text search?

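Absolutely. In MongoDB Atlas you can run a full-text $search query (BM25-style relevance) and a $vectorSearch query against the same collection and merge the two ranked lists, for example with reciprocal rank fusion, for a rich hybrid search experience. The older knnBeta operator inside $search is deprecated in favour of $vectorSearch.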
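One common way to merge a keyword ranking with a vector ranking is reciprocal rank fusion (RRF), where each document scores the sum of 1 / (k + rank) over the lists it appears in. The sketch below does the merge in plain Python on two made-up result lists. k = 60 is a conventional default rather than anything this tutorial prescribes, and in practice the two lists would come from separate full-text and vector queries.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists of document ids; a higher fused score ranks first."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)

# Hypothetical rankings for the same query from the two search types.
keyword_hits = ["Enduro LongRun", "MarathonPro 3000", "Red Road Runner"]
vector_hits = ["MarathonPro 3000", "Red Road Runner", "Enduro LongRun"]

print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
```

RRF uses only rank positions, so the two result lists do not need comparable scores.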

Staff Software Engineer @ Uber