Track

PySpark is particularly useful when working with large datasets because it provides efficient methods to clean our dataset. In this article, we'll focus on a common cleaning task: how to remove columns from a DataFrame using PySpark’s methods .drop() and .select().

To learn more about PySpark, check out this Introduction to PySpark course.

Cloud Courses

Why Drop Columns in PySpark DataFrames?

Dropping columns is a common task in data preprocessing for various reasons. Removing unnecessary or irrelevant columns can significantly reduce the amount of data we need to process. This streamlines our dataset, making it easier to analyze and potentially improving the performance of our PySpark jobs.

Additionally, when preparing data for machine learning models, feature selection often involves dropping columns that don't contribute to the model's accuracy or might introduce noise. This step can lead to more efficient and effective models.

Finally, dropping columns can be a strategy to improve data quality. If a column contains a high percentage of missing values or erroneous data, removing it might be the best course of action.

How to Drop a Single Column From a PySpark DataFrame

Suppose we have a DataFrame df with five columns: player_name, player_position, team, minutes_played, and score. The column minutes_played has many missing values, so we want to drop it.

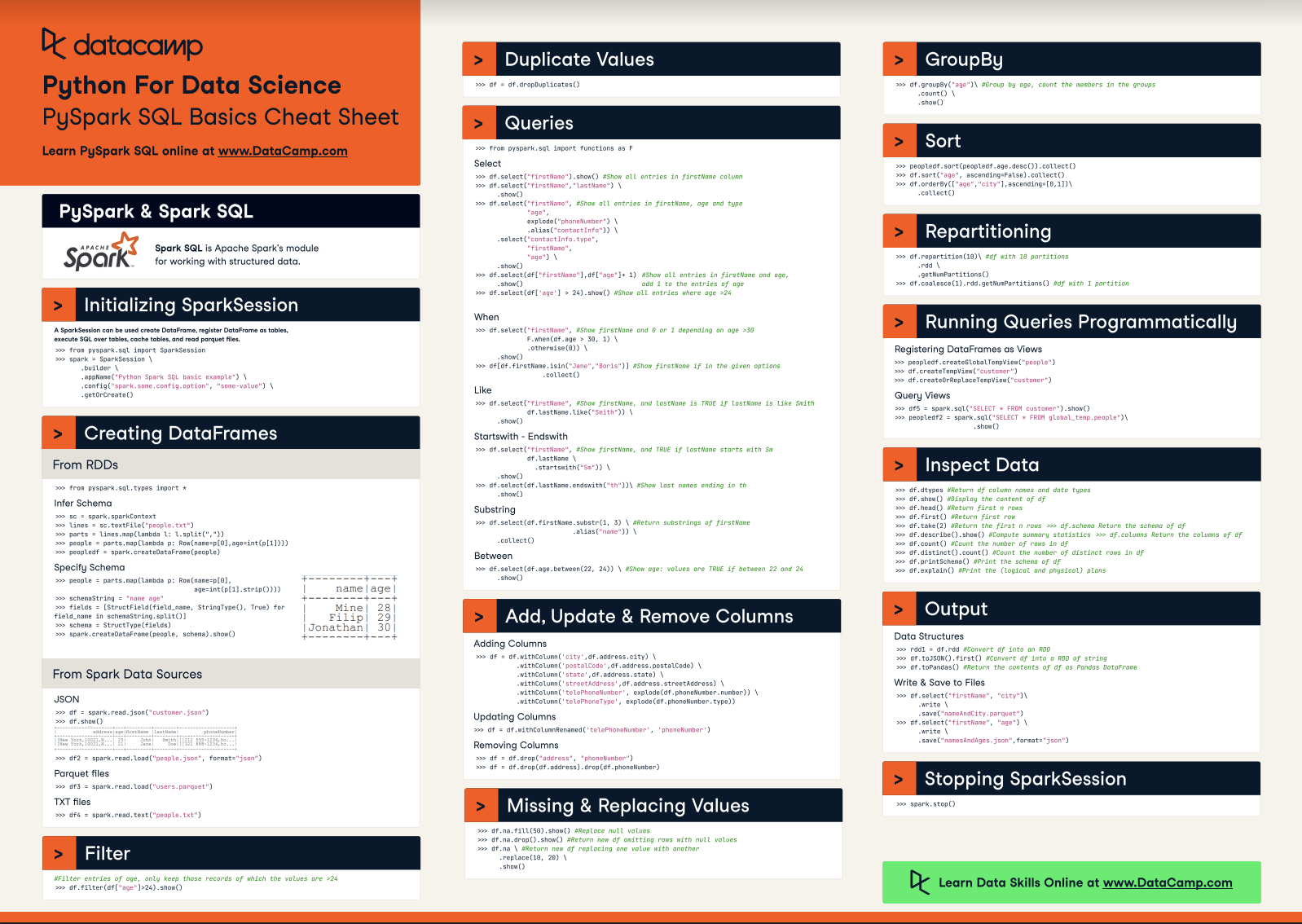

In PySpark, we can drop a single column from a DataFrame using the .drop() method.

The syntax is df.drop("column_name") where:

dfis the DataFrame from which we want to drop the columncolumn_nameis the column name to be dropped.

The df.drop() method returns a new DataFrame with the specified columns removed.

This is how we can drop a column:

df_dropped = df.drop("minutes_played")How to Drop Multiple Columns From a PySpark DataFrame

Let’s imagine we want to analyze our DataFrame df but don’t want to consider the team or the player's position—so we want to drop both columns. There’s more than one way to do this, so let’s explore our options.

To drop multiple columns from a PySpark DataFrame, we can pass a list of column names to the .drop() method. We can do this in two ways:

# Option 1: Passing the names as a list

df_dropped = df.drop(["team", "player_position"])

# Option 2: Passing the names as separate arguments

df_dropped = df.drop("team", "player_position")Alternatively, we can chain multiple .drop() calls. While technically functional, it's generally not considered the most efficient or elegant approach. Each call creates a new DataFrame, which can introduce overhead, especially when working with larger datasets.

df_dropped = df.drop("team").drop("player_position")Dropping Columns Conditionally

Instead of specifying which columns to drop, we can revert the method and select only those that meet a condition or requirement. In that way, our returned DataFrame will no longer contain the unwanted columns.

Using .select()

We can use the .select() method in PySpark along with a list comprehension to drop one or more columns based on certain criteria. The method is flexible, and we can use it from a simple condition to a complex one.

This method follows the syntax df.select([col for col in df.columns if condition]), where:

dfis the DataFrame you're working with.conditionis the criteria used to filter the columns you want to keep.

Let’s work again with our DataFrame df and select all the columns except the team column:

df_sel = df.select([col for col in df.columns if col != "team"])Complex conditions with .selectExpr()

If we're comfortable with SQL and need to apply more complex conditions when filtering columns, PySpark's .selectExpr() method offers a powerful solution. It allows us to use SQL-like expressions to select and manipulate columns directly within our PySpark code.

For instance, consider this example:

# Select specific columns and create a new 'FullMatch' column

df_sel = df.selectExpr("player_name", "player_position", "minutes_played >= 60 as FullMatch")Here, we're not just excluding a column. We're:

- Selecting the

player_nameandplayer_positioncolumns. - Creating a new column called

FullMatchthat indicates whether a player played a full match (60 minutes or more).

This flexibility makes .selectExpr() invaluable for scenarios where simple list comprehensions might not be sufficient.

Best Practices

When deciding to drop columns from our DataFrame, following some best practices is crucial to maintain data integrity, code readability, and efficiency.

1. Backup your data: Before making any modifications to your DataFrame, especially when dropping columns, it's wise to create a backup copy. This ensures that you can revert to the original data if needed.

df_backup = df.persist() # Cache the DataFrame to avoid recomputing it later2. Drop with inplace=False (default): By default, the .drop() method returns a new DataFrame without modifying the original. This is generally safer. If you're absolutely sure you want to modify the original DataFrame, you can use inplace=True, but exercise caution.

df_dropped = df.drop("column_to_drop", inplace=False) # Default behavior3. Document your decisions: If you're working on a collaborative project or anticipate revisiting your code in the future, it's helpful to add comments explaining why you chose to drop certain columns. This documentation can provide valuable context for yourself and others.

4. Consider renaming the columns: Instead of dropping columns, we should consider whether renaming them might be more suitable, particularly if the information contained could be valuable for future analysis. PySpark provides us with the .withColumnRenamed() method that helps us rename columns.

Conclusion

In this tutorial, we’ve learned how to drop single and multiple columns using the .drop() and .select() methods. We also described alternative methods to leverage SQL expressions if we require more complex selection techniques.

If you're looking to expand your skills and leverage PySpark's powerful techniques, consider taking a look at these courses and materials:

FAQs

How do I drop columns based on regex in PySpark?

To drop columns based on a regex pattern in PySpark, you can filter the column names using a list comprehension and the re module (for regular expressions), then pass the filtered list to the .drop() method.

How do I drop columns with the same name in PySpark?

PySpark doesn't allow duplicate column names within a DataFrame. If your data source has duplicate names, you'll likely encounter an error when creating the DataFrame. The best practice is to resolve the duplicate names at the source or during DataFrame creation.

How do I drop columns with only null values in PySpark?

To drop columns containing only null values in PySpark, we can first calculate the count of nulls in each column using count(when(col(c).isNull(), c)). Then, we identify columns where the null count equals the total row count of the DataFrame. Finally, we drop these columns using the .drop() method.

How do I drop columns conditionally in PySpark?

Use df.select([col for col in df.columns if condition]).