
As data pipelines grow in volume, velocity, and variety, skilled data engineers who can build effective and efficient ETL processes are becoming more important.

Data workflow management tools such as Apache NiFi and Apache Airflow offer data engineers effective solutions for managing data pipelines. Proper data workflow orchestration is a core component of making the ETL process run smoothly.

In this article, we will do an in-depth comparison between Apache NiFi and Airflow.

Why Are Data Workflow Management Tools Important?

Data workflow management tools such as Apache NiFi and Apache Airflow provide flexibility and scalability to the data pipeline. They allow data engineers to quickly understand the stability and health of their data workflows.

Each tool offers unique advantages in terms of deployment, integration with other data services, and intuitive interfaces.

What Is Apache NiFi?

Apache NiFi is an open-source tool designed to automate the flow of data between systems.

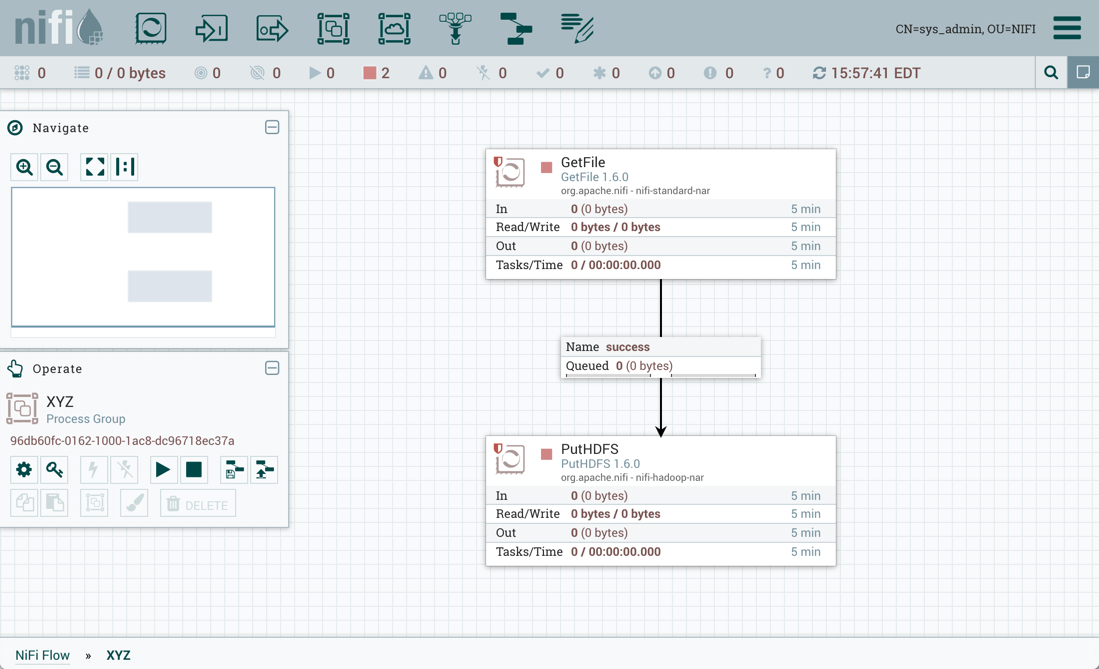

NiFi provides a user-friendly interface for designing data workflows and supports highly configurable directed graphs of data routing, transformation, and system mediation logic.

Users can create, schedule, and monitor data flows, ensuring data moves smoothly between different sources and destinations.

Apache NiFi uses a flow-based programming model, where data flows through a series of processors within a directed graph.

Users design and manage these data flows using a web-based interface by dragging and dropping processors, which perform tasks such as data ingestion, transformation, and routing.

Data is encapsulated in FlowFiles, which move between processors through connections that act as queues.
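Here is a toy Python model of the FlowFile-and-queue idea. It is a sketch built on simplifying assumptions, not NiFi's API (NiFi is a Java application configured through its UI). A `FlowFile` holds attributes and content, each "processor" is a plain function, and `deque` objects stand in for connections. The names `ingest`, `to_upper`, and `route` are hypothetical.

```python
from collections import deque
from dataclasses import dataclass

# Toy model of flow-based programming. NiFi itself is written in Java;
# these classes and functions are illustrative, not NiFi's API.

@dataclass
class FlowFile:
    attributes: dict  # key/value metadata, e.g. a filename
    content: bytes    # the data payload itself

def ingest(raw_records):
    """Source step: wrap each raw record in a FlowFile."""
    for i, record in enumerate(raw_records):
        yield FlowFile({"filename": f"record-{i}.txt"}, record)

def to_upper(ff):
    """Transform step: return a new FlowFile with uppercased content."""
    return FlowFile(dict(ff.attributes), ff.content.upper())

def route(ff):
    """Routing step: choose an outgoing relationship based on content."""
    return "errors" if b"ERROR" in ff.content else "success"

# Connections are queues that buffer FlowFiles between steps.
ingest_to_transform = deque(ingest([b"ok row", b"error row", b"another row"]))
transform_to_route = deque()
outputs = {"success": [], "errors": []}

while ingest_to_transform:
    transform_to_route.append(to_upper(ingest_to_transform.popleft()))

while transform_to_route:
    ff = transform_to_route.popleft()
    outputs[route(ff)].append(ff)

for relationship, flowfiles in outputs.items():
    print(relationship, [f.attributes["filename"] for f in flowfiles])
```

Routing sends each FlowFile to a named relationship (`success` or `errors`). This is similar in spirit to how NiFi processors route FlowFiles to relationships, which you then connect to downstream processors.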

NiFi is particularly useful for scenarios where data needs to be collected, transformed, and routed across different systems in a reliable and scalable manner. It’s often used in data integration, ETL (Extract, Transform, Load) processes, and real-time data processing.

Example of Apache NiFi interface. Image source

What Is Apache Airflow?

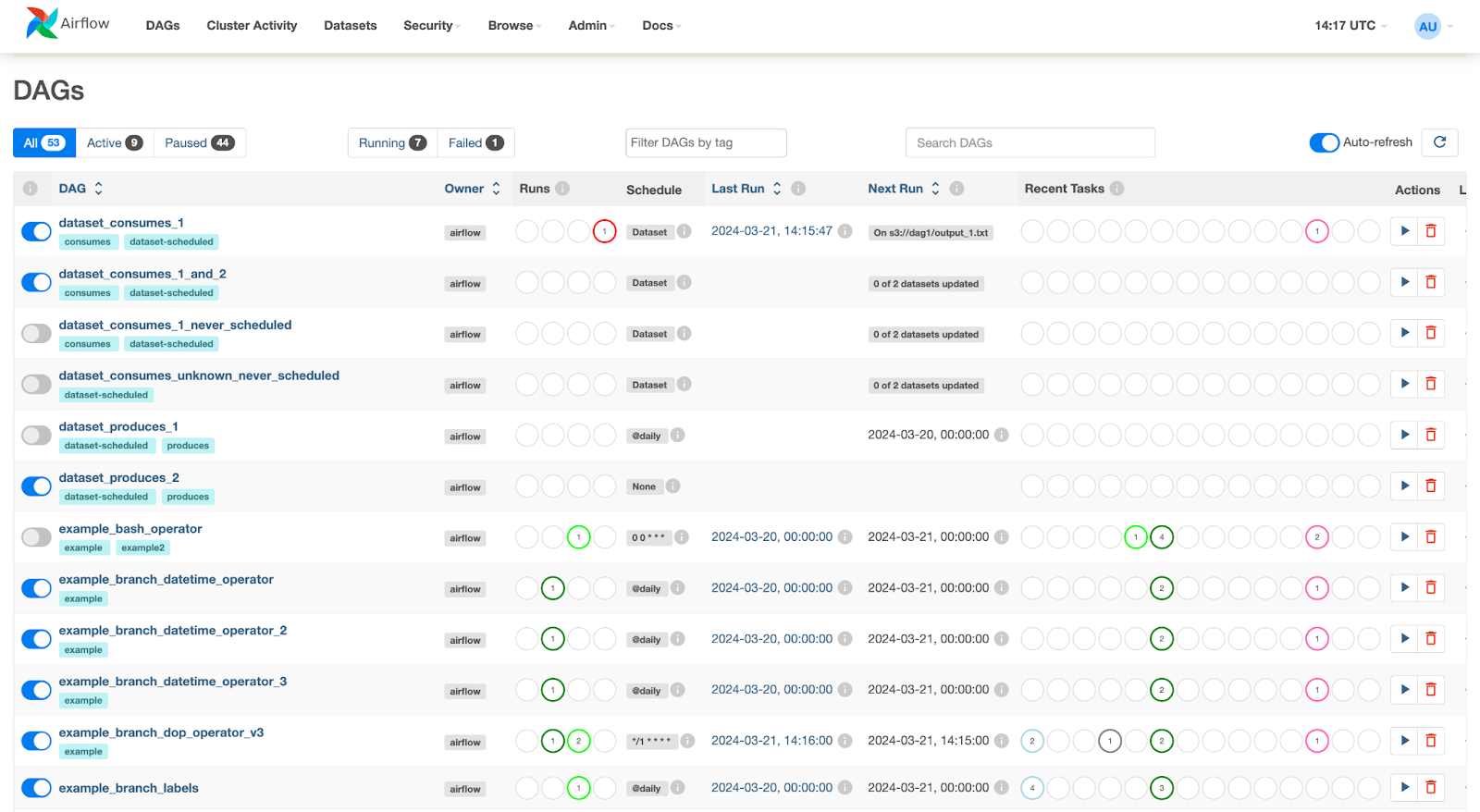

Apache Airflow utilizes DAGs (directed acyclic graphs) combined with operators written in Python for flexible data workflow management and the creation of ETL pipelines.

It simplifies workflow creation by abstracting the functions needed to connect to different data sources, giving data engineers a straightforward platform for data workflow management.

Under the hood, a scheduler tracks workflow and task state in a metadata database. It hands tasks that are ready to run to an executor, which runs them on one or more workers.
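The following toy sketch makes the scheduler/database/worker split concrete in plain Python, with no Airflow required. It rests on a few assumptions. A dictionary stands in for the metadata database. A loop plays the scheduler. A thread pool plays the workers. The task names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

# Toy sketch, not Airflow code: a dict stands in for the metadata database,
# a loop plays the scheduler, and a thread pool plays the workers.
# Task names are hypothetical.
upstream = {
    "extract": [],
    "transform": ["extract"],
    "validate": ["extract"],
    "load": ["transform", "validate"],
}
state = {task: "none" for task in upstream}  # the "metadata database"
run_log = []

def run_task(task):
    run_log.append(task)  # placeholder for the task's real work
    return task

with ThreadPoolExecutor(max_workers=2) as workers:
    while any(s != "success" for s in state.values()):
        # Scheduler: find tasks whose upstream tasks have all succeeded.
        ready = [t for t, ups in upstream.items()
                 if state[t] == "none" and all(state[u] == "success" for u in ups)]
        if not ready:
            raise RuntimeError("no runnable tasks; check for cycles or failures")
        for t in ready:
            state[t] = "queued"
        # Workers: run the queued tasks, then record each result in the state store.
        for finished in workers.map(run_task, ready):
            state[finished] = "success"

print(state)
```

Because the state lives in a separate store, the scheduler can always decide what to run next by reading it. This is the main reason Airflow keeps task state in a database rather than in the scheduler's memory.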

Through a simple web server interface, data engineers have a handy platform for triggering, monitoring, and halting the DAGs as necessary to fine-tune their data flow.

Example of Airflow interface. Image source

Apache NiFi vs Apache Airflow: Similarities

Both NiFi and Airflow are powerful tools capable of handling large volumes of data, managing ETL workflows, and offering flexibility regarding data connections. They share a few core functionalities that offer users similar environments.

User interface

Although Airflow workflows are written in Python, Airflow is monitored and managed mainly through a web-based user interface. Apache NiFi offers a similar browser-based GUI that lets data engineers observe all their workflows in one place.

Apache NiFi and Airflow have similar home pages that give high-level details about all pipelines, such as their run state (success, failure, running), number of active workflows, and failure messages.

These intuitive interfaces allow users of Apache NiFi and Airflow to understand the state of their data environment at a glance and quickly address any issues that may have arisen.

Flexibility

Both Apache NiFi and Airflow are highly flexible when it comes to their data connections. They can handle a wide variety of data files such as CSV, JSON, XML, Parquet, and more.

Additionally, they easily connect to various cloud data warehouses, such as Amazon Redshift, BigQuery, Azure Synapse, and Snowflake.

Apache NiFi’s modular design can be utilized to create unique data pipelines that perform critical ETL processes. Airflow’s Python script-based design allows users to leverage not only Airflow’s unique connectors but also Python’s technical advantages to perform more complex processing.

Data management

Both NiFi and Airflow are core components of data workflow management. They provide the essential framework for data engineers to perform data collection, processing, and routing. Each allows users to monitor their workflows and manipulate data en route.

Data engineers can easily transport data from location to location while making sure pipelines are running efficiently.

Mastering either Apache NiFi or Airflow is a core skill for any data engineer!

Apache NiFi vs Apache Airflow: Differences

While Apache NiFi and Airflow are very similar in terms of usage, they approach ETL and data pipelines differently. Each has a unique design that defines how it handles scalability, syntax, and monitoring.

Apache NiFi is geared toward ease of use and simplicity, whereas Airflow is geared more toward powerful scripting and Python backends.

Scalability

Apache NiFi scales easily through cluster and node management. Airflow recruits more resources as needed based on the number of parallel processes running.

As workloads become more complex, Apache NiFi can add cluster nodes or raise a processor's concurrent tasks to meet the extra computational demand. Airflow, however, runs tasks in the order set by each DAG's dependencies. Independent tasks can run in parallel up to the limits of its executor and workers, but dependent tasks run one after another, which can slow down the processing of complex data problems.
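A rough way to see why dependency order matters is to count scheduling rounds. The sketch below assumes every task takes one time unit and caps how many tasks may run at once. The cap loosely stands in for concurrency settings such as Airflow's `parallelism` and `max_active_tasks`, and the task names are hypothetical. It uses Python's standard `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter

def count_waves(dependencies, max_parallel):
    """Rounds needed if each task takes one time unit and at most
    `max_parallel` tasks run at once (a toy model of concurrency limits)."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waiting, waves = [], 0
    while sorter.is_active():
        waiting.extend(sorter.get_ready())  # tasks whose upstreams are done
        batch, waiting = waiting[:max_parallel], waiting[max_parallel:]
        sorter.done(*batch)                 # this round's tasks finish
        waves += 1
    return waves

# Hypothetical fan-out DAG: one extract, four independent transforms, one load.
fan_out = {
    "extract": [],
    "t1": ["extract"], "t2": ["extract"], "t3": ["extract"], "t4": ["extract"],
    "load": ["t1", "t2", "t3", "t4"],
}

for cap in (4, 2, 1):
    print(cap, count_waves(fan_out, cap))
```

With four parallel slots the fan-out finishes in three rounds, but `load` still waits for every transform. Lowering the cap to one turns the same DAG into six sequential rounds.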

Syntax

Apache NiFi uses a drag-and-drop GUI and needs minimal coding. NiFi makes the data pipeline accessible and straightforward for data engineers while still supporting complex flows.

Airflow, on the other hand, is code-first. Workflows are written in Python, and individual tasks often run SQL. To build data workflows, engineers code each step as a task in a DAG, which Airflow then schedules and runs, with progress visible in its web interface.
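The sketch below illustrates the code-first idea in plain Python rather than Airflow. Each step is a function, a dictionary declares which steps run first, and `graphlib` orders them (Python 3.9+). In Airflow you would express the same structure with operators or `@task`-decorated functions and set dependencies with `>>`. The step names and sample rows here are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Toy sketch, not Airflow's API: each step is a plain Python function, and
# `upstream` declares which steps must finish before it.
def extract():
    return [{"city": "Oslo", "temp_c": 4}, {"city": "Cairo", "temp_c": 31}]

def transform(rows):
    return [{**r, "temp_f": r["temp_c"] * 9 / 5 + 32} for r in rows]

def load(rows):
    return len(rows)  # stand-in for writing to a warehouse

steps = {"extract": extract, "transform": transform, "load": load}
upstream = {"extract": [], "transform": ["extract"], "load": ["transform"]}

def run_pipeline(steps, upstream):
    results = {}
    # static_order() yields each step only after all of its upstream steps.
    for name in TopologicalSorter(upstream).static_order():
        inputs = [results[u] for u in upstream[name]]
        results[name] = steps[name](*inputs)
    return results

results = run_pipeline(steps, upstream)
print(results["load"])  # 2

# A cycle is not a valid DAG, so ordering it fails.
cycle_rejected = False
try:
    list(TopologicalSorter({"a": ["b"], "b": ["a"]}).static_order())
except CycleError:
    cycle_rejected = True
print("cycle rejected:", cycle_rejected)
```

The acyclic requirement is what lets an orchestrator always find a valid run order. A workflow that loops back on itself has no first step.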

Monitoring

Apache NiFi has a robust monitoring dashboard built into the interface. It provides users with real-time metrics such as processor performance, number of FlowFiles, and computational resources being consumed. These come out of the box with Apache NiFi.

Out of the box, Airflow offers more basic monitoring: DAG and task states, task logs, and Gantt charts that show metrics such as task run time.

To obtain real-time metrics in Airflow, data engineers must configure it to emit metrics through StatsD or OpenTelemetry and collect them in a monitoring system such as Prometheus. This takes longer to set up but can provide reporting as detailed as NiFi's.
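For context on what that setup involves, here is a sketch of the StatsD wire format, `<name>:<value>|<type>`, which StatsD-compatible collectors accept over UDP. In recent Airflow 2.x releases, Airflow's own StatsD output is switched on in the `[metrics]` section of its configuration (`statsd_on`, `statsd_host`, `statsd_port`, `statsd_prefix`). The metric name below is hypothetical, and the helper functions are illustrative rather than part of Airflow.

```python
import socket

# Sketch of the StatsD line format. The metric name used below is hypothetical;
# Airflow emits its own metric names once StatsD is enabled in its config.
def statsd_line(name, value, metric_type, prefix="airflow"):
    if metric_type not in {"c", "g", "ms"}:  # counter, gauge, timer
        raise ValueError(f"unsupported StatsD type: {metric_type}")
    return f"{prefix}.{name}:{value}|{metric_type}"

def send_metric(line, host="127.0.0.1", port=8125):
    # UDP does not wait for an acknowledgement from the collector.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(line.encode("utf-8"), (host, port))

line = statsd_line("example_dag.load.duration", 1250, "ms")
print(line)  # airflow.example_dag.load.duration:1250|ms
```

Calling `send_metric(line)` would push the metric to a local StatsD agent. A tool such as Prometheus's StatsD exporter can then expose it for scraping and dashboards.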

Apache NiFi vs Apache Airflow: A Detailed Comparison

After reviewing the similarities and differences between these platforms, let’s compare them side by side and select a winner for each category.

User interface

Apache NiFi

NiFi provides a browser-based user interface that allows the visual creation and management of data flows. The user interface makes it easy to design, control, and monitor data flows without needing extensive coding skills.

Users can drag and drop processors onto a canvas, connect them, and configure their properties.

NiFi’s UI supports multiple helpful features. These include features for tracking data provenance, allowing users to see the history and transformations applied to each piece of data, which is crucial for auditing, debugging, and ensuring data integrity.
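To illustrate what provenance tracking records, here is a toy Python model in which every step that touches a record appends an event to its lineage. The event names mirror NiFi provenance event types (`RECEIVE`, `CONTENT_MODIFIED`, `SEND`). The `TrackedRecord` class and the processor names are hypothetical, and this is not how NiFi stores provenance internally.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Toy illustration of data provenance: each step appends an event to the
# record's lineage. Processor names are hypothetical.
@dataclass
class TrackedRecord:
    content: str
    lineage: list = field(default_factory=list)

    def record_event(self, event_type, processor, details=""):
        self.lineage.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "event": event_type,
            "processor": processor,
            "details": details,
        })

rec = TrackedRecord("  Alice,42 ")
rec.record_event("RECEIVE", "ReceiveOrders")
rec.content = rec.content.strip()
rec.record_event("CONTENT_MODIFIED", "CleanOrders", "stripped whitespace")
rec.record_event("SEND", "PublishOrders")

for event in rec.lineage:
    print(event["event"], event["processor"], event["details"])
```

Reading the lineage back in order answers the auditing question "where did this data come from, and what changed it?" without rerunning the flow.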

The UI also supports role-based access control, enabling administrators to define and manage user permissions and ensure only authorized users can access and modify data flows.

The UI even supports the integration and configuration of custom processors, which users can use to extend NiFi’s functionality.

Apache Airflow

Airflow’s intuitive web-server interface gives users a straightforward and minimalistic overview of their data workflows. The main page provides information on the status of current and previous runs.

Additionally, workflows can be turned on and off as needed, triggered, refreshed, and deleted.

When you select a workflow, Airflow offers several views of the DAG, such as the grid and graph views, which show the status of its individual components.

Data engineers can choose to retry or stop different components of the DAG. They can also select the components of each DAG and look at logs to diagnose any potential issues.

Winner

This is a tie. NiFi offers a more intuitive drag-and-drop interface that makes it well suited to a no-code approach.

Airflow offers powerful workflow management and debugging tools that are critical for any data engineer. Depending on the use case and implementation, both have their uses.

Scalability

Apache NiFi

NiFi can efficiently scale to handle various data processing workloads, from small-scale operations to large, enterprise-level data integration tasks.

NiFi can scale out through clusters of nodes, where multiple NiFi nodes work together as a single logical instance. Each node performs the same tasks, but each operates on a different section of the data. NiFi is also designed to scale up and down flexibly.

To scale up, you can raise the number of concurrent tasks on a processor to increase throughput. NiFi can also be scaled down enough to run on devices with limited hardware resources, such as edge devices.
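The two scaling ideas, several nodes each handling a slice of the data and several concurrent tasks per processor, can be sketched in plain Python. This is a toy model rather than NiFi configuration. The "nodes" are hypothetical partitions, and `concurrent_tasks` simply sizes a thread pool.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor

# Toy sketch, not NiFi configuration: each hypothetical "node" runs the same
# processing logic on its own partition of the data, and `concurrent_tasks`
# mimics raising a processor's number of concurrent tasks.
def partition(records, num_nodes):
    parts = [[] for _ in range(num_nodes)]
    for record in records:
        # crc32 is stable across runs, unlike Python's salted hash() for strings.
        parts[zlib.crc32(record.encode("utf-8")) % num_nodes].append(record)
    return parts

def process(record):
    return record.upper()  # the same task runs on every node

def run_node(records, concurrent_tasks):
    with ThreadPoolExecutor(max_workers=concurrent_tasks) as pool:
        return list(pool.map(process, records))

records = [f"event-{i}" for i in range(10)]
parts = partition(records, num_nodes=3)
# In a real cluster the nodes run at the same time on separate machines;
# here they simply run one after another.
results = [run_node(part, concurrent_tasks=2) for part in parts]
print([len(part) for part in parts])
```

Every record is processed exactly once, by whichever node owns its partition. Adding nodes spreads the partitions thinner, and adding concurrent tasks speeds up each node.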

This scalability makes NiFi a robust and flexible solution for managing data flows in diverse environments.

Apache Airflow

Airflow can be made to scale with data workflows. It runs pipelines in parallel but relies on the resources of the machines it runs on. Rather than scaling dynamically with computational demand, it scales with the number of tasks and DAGs running concurrently.

Airflow depends on the resources provided by the machine hosting the Airflow server. As data workflows increase, Airflow will require more memory and processing power.

Engineers often run Airflow in the cloud, where the cloud platform determines how its resources scale.

Winner

Apache NiFi makes it much easier to scale flexibly. Because you can add nodes to a cluster and increase a processor's concurrent tasks to meet demand, it can better manage changing workloads. In this category, NiFi is the better option.

Monitoring

Apache NiFi

NiFi offers comprehensive monitoring features that give users real-time insights into their data flows, ensuring efficient and reliable data processing.

The browser-based UI displays detailed metrics on processor performance, including the number of FlowFiles processed, data throughput, and processor status.

Users can configure alerts and notifications to be informed of any anomalies or failures in the data flow, facilitating proactive management and minimizing downtime.

Additionally, NiFi’s monitoring capabilities extend to system-level metrics, such as CPU usage, memory consumption, and disk space utilization, ensuring that resources are effectively managed.

Apache Airflow

As mentioned, Airflow offers easy high-level monitoring of all workflows simultaneously with its dashboard.

It offers email alerts, notifications, and logging to help you understand workflow issues. You can even track timing and performance through Gantt charts.
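Airflow exposes this behavior through task arguments such as `retries`, `retry_delay`, `email_on_failure`, and `on_failure_callback`. The toy sketch below shows the underlying retry-then-alert logic in plain Python without Airflow. The `alerts` list stands in for an email or chat channel, and all function and task names are hypothetical.

```python
import time

# Toy sketch of retry-then-alert logic, not Airflow code. `alerts` stands in
# for an email or chat notification channel.
alerts = []

def notify(task_name, error):
    alerts.append(f"{task_name} failed: {error}")

def run_with_retries(task_name, func, retries=2, retry_delay_s=0.0, on_failure=notify):
    """Try `func` once plus up to `retries` more times; alert if every attempt fails."""
    logs, last_error = [], None
    for attempt in range(1, retries + 2):
        try:
            result = func()
        except Exception as exc:
            last_error = exc
            logs.append(f"attempt {attempt}: failed ({exc})")
            if attempt <= retries:
                time.sleep(retry_delay_s)  # wait before the next try
            continue
        logs.append(f"attempt {attempt}: success")
        return result, logs
    on_failure(task_name, last_error)
    return None, logs

calls = {"n": 0}

def flaky_extract():
    calls["n"] += 1
    if calls["n"] < 2:  # fail on the first call only
        raise ConnectionError("source not reachable")
    return "extracted"

def broken_validate():
    raise ValueError("bad schema")

result, logs = run_with_retries("extract_orders", flaky_extract)
run_with_retries("validate_orders", broken_validate, retries=1)
print(logs)    # ['attempt 1: failed (source not reachable)', 'attempt 2: success']
print(alerts)  # ['validate_orders failed: bad schema']
```

Transient failures are absorbed by retries and show up only in the per-attempt logs. Persistent failures trigger the alert callback once all attempts are used up.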

Airflow allows users to view processes moving from step to step in real time and can offer post hoc analysis of workloads.

Detailed live monitoring requires sending metrics through StatsD or OpenTelemetry to a monitoring system such as Prometheus. Extracting these metrics from Airflow requires some extra work.

Winner

Apache NiFi wins on monitoring and logging thanks to its simplicity. It makes monitoring computational resources and anomalies fast and straightforward, so you can manage workflows in real time more easily than in Airflow.

Flexibility

Apache NiFi

NiFi offers remarkable flexibility, making it an adaptable solution for diverse data integration and processing needs.

Its modular architecture allows users to build complex data flows by connecting a wide array of prebuilt processors, each designed to handle specific tasks. Users can also develop custom processors to meet unique requirements.

NiFi supports various data formats and protocols, including JSON, XML, CSV, HTTP, and more, ensuring compatibility with different data sources and destinations.

NiFi’s real-time data flow management, coupled with its robust scheduling and prioritization features, allows for fine-grained control over data processing, ensuring timely and efficient data handling. This flexibility makes NiFi a versatile and powerful tool for managing data flows in various environments.
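Prioritization is configured per connection in NiFi, which offers several prioritizers, including one based on a `priority` attribute. The toy sketch below imitates that idea with Python's `heapq`. Lower values leave the queue first, and ties fall back to arrival order. The default priority of 10 and the integer conversion are hypothetical simplifications, so check NiFi's documentation for how its own prioritizers compare values.

```python
import heapq
from itertools import count

# Toy prioritized connection, loosely modeled on attribute-based
# prioritization; this is not NiFi code.
class PrioritizedQueue:
    def __init__(self):
        self._heap = []
        self._arrival = count()  # tie-breaker that preserves arrival order

    def put(self, flowfile):
        priority = int(flowfile.get("priority", 10))  # hypothetical default
        heapq.heappush(self._heap, (priority, next(self._arrival), flowfile))

    def get(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)

queue = PrioritizedQueue()
queue.put({"name": "daily_report", "priority": "5"})
queue.put({"name": "fraud_alert", "priority": "1"})
queue.put({"name": "log_batch"})
queue.put({"name": "audit_export", "priority": "5"})

order = [queue.get()["name"] for _ in range(len(queue))]
print(order)  # ['fraud_alert', 'daily_report', 'audit_export', 'log_batch']
```

The arrival counter matters: without it, two items with equal priority would be compared as dictionaries, which Python cannot order.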

Apache Airflow

With a broad offering of operators, Airflow is highly flexible regarding implementation. It connects easily to cloud providers and platforms such as AWS, GCP, Azure, and Snowflake.

You can also build custom operators for specific use cases and implement them directly into your DAG.
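In Airflow 2.x, a custom operator subclasses `BaseOperator` (from `airflow.models`), takes its settings in `__init__`, and does its work in `execute(self, context)`. The sketch below follows that pattern but uses a stand-in base class so it runs without Airflow installed. `ToyBaseOperator`, `CsvRowCountOperator`, and the sample data are hypothetical.

```python
import csv
import io

# Stand-in for Airflow's BaseOperator so this sketch runs without Airflow.
# A real custom operator would subclass Airflow's BaseOperator instead.
class ToyBaseOperator:
    def __init__(self, task_id):
        self.task_id = task_id

    def execute(self, context):
        raise NotImplementedError("subclasses implement the task's work here")

class CsvRowCountOperator(ToyBaseOperator):
    """Hypothetical custom operator: counts the data rows in a CSV string."""

    def __init__(self, task_id, csv_text, has_header=True):
        super().__init__(task_id)
        self.csv_text = csv_text
        self.has_header = has_header

    def execute(self, context):
        rows = list(csv.reader(io.StringIO(self.csv_text)))
        row_count = len(rows) - (1 if self.has_header and rows else 0)
        print(f"[{context['run_id']}] {self.task_id}: {row_count} rows")
        return row_count

op = CsvRowCountOperator("count_orders", "id,amount\n1,9.99\n2,5.00\n")
row_count = op.execute({"run_id": "manual_demo"})
```

Keeping configuration in the constructor and the work in `execute` means the same operator class can be reused across DAGs with different arguments.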

With a highly modular design, Airflow provides customizability to fit any data workflow.

Because Airflow tasks can run arbitrary Python code, Airflow comfortably handles a wide range of data formats, such as CSV and Parquet files.

Since it is built in Python, you can add custom ETL or management logic based on the flow of incoming data. Airflow is an extremely versatile tool for managing data workflows in different environments.

Winner

Although both tools offer wide compatibility across different platforms, data types, and pipeline designs, Apache Airflow provides greater flexibility by allowing users to leverage Python.

Users can also create custom classes and functions that allow Airflow to connect to non-standard data sources or perform complex data processing tasks.

Ultimately, Python gives Apache Airflow the edge in terms of flexibility.

Apache NiFi vs Apache Airflow: A Summary

| Category | Apache NiFi | Apache Airflow | Winner |
| --- | --- | --- | --- |
| User interface | Easy GUI-based interface for workflow construction and management | Web server UI for monitoring and workflow management | Tie |
| Scalability | Scales out across cluster nodes and up through concurrent tasks as workflow needs grow | Scales based on the number of concurrent processes and relies on server resources | Apache NiFi |
| Monitoring | Provides a wide variety of error monitoring and logging, built into the NiFi UI | Provides logging by default; requires external tools such as StatsD and Prometheus for detailed monitoring | Apache NiFi |
| Flexibility | Easily connects to a wide variety of data sources through its many processors | Supports custom connector and operator classes written in Python | Apache Airflow |

Overall, Apache NiFi offers advantages for data engineers who want speedy and convenient implementation. It can deliver results comparable to Apache Airflow's while minimizing technical complexity through an intuitive GUI that offers easy monitoring, flexibility, and dynamic scalability.

If the pipeline's needs are more intense, specific, or technical, writing custom DAGs in Airflow using Python gives you finer control and may be the better choice.

Final Thoughts

Both Apache NiFi and Airflow can be extremely powerful tools. While they are unique in their approaches to data workflow management, both offer advantages.

But remember, being a data engineer means mastering the concept of data workflow orchestration regardless of the tool. DataCamp offers multiple means of improving your data pipeline skills, including a certification pathway!


FAQs

Can I integrate Apache NiFi and Apache Airflow in the same data pipeline for enhanced functionality?

Yes, you can integrate Apache NiFi and Apache Airflow in a single data pipeline to leverage the strengths of both tools. NiFi can be used for real-time data ingestion, transformation, and routing, while Airflow can manage more complex data workflows and scheduling. By combining the two, you can create a robust and flexible data pipeline that handles both real-time and batch processing effectively.
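One possible way to connect the two is to have an Airflow task call NiFi's REST API, for example to start a NiFi process group before downstream batch steps run. The sketch below only builds the request, assuming NiFi's `PUT /nifi-api/flow/process-groups/{id}` endpoint with a JSON body containing `id` and `state`. The base URL and process-group ID are hypothetical placeholders. Check the REST API documentation for your NiFi version, and add authentication for secured clusters.

```python
import json
import urllib.request

# Sketch of a request an orchestration task might send to start a NiFi
# process group. URL and ID are hypothetical placeholders.
def build_start_request(base_url, process_group_id, state="RUNNING"):
    body = json.dumps({"id": process_group_id, "state": state}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/nifi-api/flow/process-groups/{process_group_id}",
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )

req = build_start_request("https://nifi.example.com:8443", "hypothetical-pg-id")
print(req.get_method(), req.full_url)
# To actually send it: urllib.request.urlopen(req)
```

Inside a DAG, a function like this could run as a Python task, letting Airflow own scheduling and retries while NiFi handles the continuous data movement.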

Is Apache NiFi or Apache Airflow more cost-effective for small to medium-sized businesses?

The cost-effectiveness of Apache NiFi vs. Apache Airflow depends on your specific needs and infrastructure. NiFi’s intuitive GUI may reduce development time and costs, especially for smaller teams. Airflow, while requiring more coding expertise, may offer greater flexibility and integration capabilities that can be beneficial for more complex workflows. Both are open-source, so direct software costs are low, but consider the learning curve and maintenance costs when making your decision.

What skill set does someone need to use Apache NiFi or Airflow?

A solid general understanding of data pipelines is a great start. For Airflow, a good knowledge of Python and a straightforward getting-started guide will let you get going quickly. NiFi will lean more on your understanding of data workflows than on coding.

What is the learning curve like for Apache NiFi compared to Apache Airflow?

Apache NiFi typically has a gentler learning curve due to its user-friendly, drag-and-drop interface that allows users to create workflows without extensive coding knowledge. On the other hand, Apache Airflow requires a good understanding of Python and a deeper technical background, which can make the learning curve steeper. However, for those already familiar with Python, Airflow’s learning curve may not be as challenging.

Are Apache NiFi and Apache Airflow suitable for managing machine learning workflows?

Both Apache NiFi and Apache Airflow can be used to manage machine learning workflows, but they serve different purposes. NiFi can handle the data ingestion and preprocessing steps efficiently, moving data from various sources into your machine learning pipeline. Airflow, with its robust scheduling and workflow management capabilities, is well-suited for orchestrating machine learning training and deployment processes. Combining both can provide a comprehensive solution for end-to-end machine learning workflows.

What are the data security features of Apache NiFi and Apache Airflow?

Apache NiFi provides several built-in security features, including encrypted data transfer, user authentication, and authorization with role-based access control (RBAC). It also supports data provenance, which helps track the lineage of data through the pipeline. Apache Airflow also supports RBAC, secure connections to data sources, and logging for auditing purposes. However, for enhanced security, especially in production environments, additional configurations and external tools may be necessary for both platforms.

I am a data scientist with experience in spatial analysis, machine learning, and data pipelines. I have worked with GCP, Hadoop, Hive, Snowflake, Airflow, and other data science/engineering processes.