
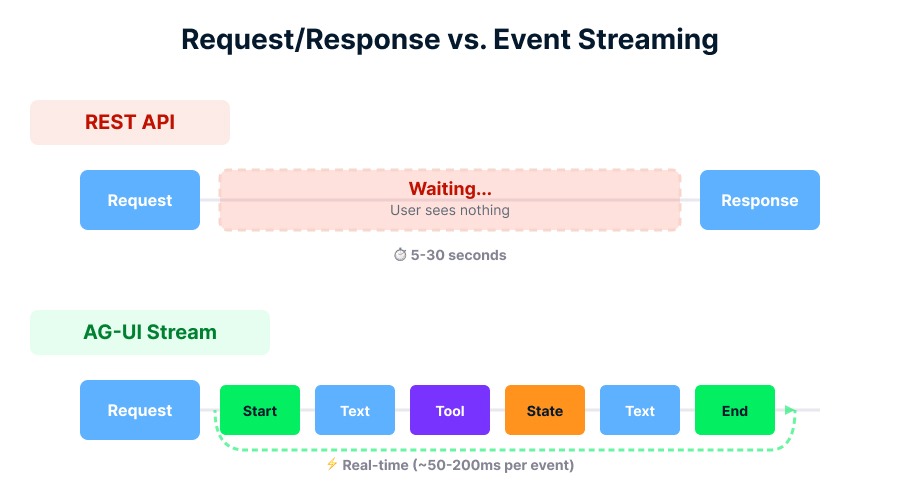

The shift from simple text-generation bots to autonomous agents has broken the traditional way we build software. In the past, you sent a request and got a response. But agent-capable models (like the new GPT-5.2-Codex, as one example) don't work like that. They reason, they plan, they use tools, and they might take minutes to finish a task. Along the way, they surface progress signals, request permissions, and may need to update a dashboard in real time.

Trying to force these fluid, long-running interactions into a standard REST API is like trying to stream a live video over email. The result is usually a mess of custom code that ties your frontend tightly to your backend, making it hard to switch tools or scale.

This guide introduces you to the Agent-User Interaction (AG-UI) Protocol, an open standard that is gaining traction and is designed to fix this "interaction silo." I will show you how AG-UI creates a universal language for agents and UIs, how it handles complex state, and how you can start using it to build robust agentic applications.

A note before we get started: the language of this article assumes some familiarity with backend/frontend web development and API design patterns.


Why Agentic Apps Need AG-UI

Before we look at the code, let me explain why we needed a new protocol in the first place. It comes down to the fundamental difference between a function and an agent.

The limitation of request/response

In traditional web development, interactions are transactional. You ask for a user profile, and the server sends it. It's a one-off exchange.

Agents, however, are unpredictable and take time. When you ask an agent to "debug why your CI pipeline suddenly started failing after a teammate's merge," it doesn't return a JSON object right away. Instead, it might:

- Pull the last 50 commit logs from GitHub (Action).

- Notice a new dependency was added without updating the lockfile (Observation).

- Cross-reference that package version against known CVEs (Reasoning).

- Ask if you want to roll back the merge or patch it forward (Human-in-the-loop).

I'll cover these in the "Core event types" section, but for now, just know that a standard HTTP request would likely time out waiting for all this to happen. You need a way to stream these partial updates so the user isn't staring at a frozen screen.

The specific needs of agents

Agents require a bi-directional flow of information that goes beyond text. They need to synchronize state.

Imagine an agent helping you write a report. It's not just chatting; it's editing a document. Both you and the agent might be typing at the same time. AG-UI handles this by treating the "document" as a shared state, using efficient updates to keep your screen and the agent's memory in sync without overwriting each other's work.

How Agents and UIs Communicate

AG-UI replaces the old Remote Procedure Call (RPC) model with an Event-Sourcing model. Instead of waiting for a final answer, the agent sends out a steady stream of events that describe what it's doing.

The event stream

Think of the AG-UI stream as a news ticker for your agent's brain. The client application (your frontend) subscribes to this ticker and updates the UI based on what it sees. This approach decouples the logic: your React frontend doesn't need to know how the agent is thinking; it just needs to know what to display.
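To make the "news ticker" idea concrete, here is a minimal sketch of event sourcing on the client. It assumes AG-UI-style TEXT_MESSAGE_START/CONTENT/END events that carry messageId and delta fields. The visible state is simply the result of folding every event received so far through a reducer. The reducer (reduceEvent), the state shape, and the status field are hypothetical.

```javascript
// Minimal event-sourcing sketch: UI state is derived only by folding events.
// Event fields (messageId, delta) follow AG-UI's TEXT_MESSAGE_* events; the
// state shape and the `status` field are hypothetical.
function reduceEvent(state, event) {
  switch (event.type) {
    case "TEXT_MESSAGE_START":
      return {
        ...state,
        status: "streaming",
        messages: [...state.messages, { id: event.messageId, text: "" }],
      };
    case "TEXT_MESSAGE_CONTENT":
      // Append the streamed chunk to the matching message
      return {
        ...state,
        messages: state.messages.map((m) =>
          m.id === event.messageId ? { ...m, text: m.text + event.delta } : m
        ),
      };
    case "TEXT_MESSAGE_END":
      return { ...state, status: "idle" };
    default:
      // Events this view doesn't care about leave the state unchanged
      return state;
  }
}

const initialState = { status: "idle", messages: [] };

const events = [
  { type: "TEXT_MESSAGE_START", messageId: "m1", role: "assistant" },
  { type: "TEXT_MESSAGE_CONTENT", messageId: "m1", delta: "Checking " },
  { type: "TEXT_MESSAGE_CONTENT", messageId: "m1", delta: "the lockfile..." },
  { type: "TEXT_MESSAGE_END", messageId: "m1" },
];

// Rendering "so far" is just reducing the events received so far
const midStream = events.slice(0, 2).reduce(reduceEvent, initialState);
const finalState = events.reduce(reduceEvent, initialState);
console.log(midStream.status, JSON.stringify(midStream.messages[0].text)); // streaming "Checking "
console.log(finalState.status, finalState.messages[0].text); // idle Checking the lockfile...
```

The state is derived only from the event sequence, so the same events always produce the same screen. That property is also what makes replay-based debugging possible.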

AG-UI avoids the waiting gap. Image by Author.

Core event types

The protocol defines standard event types that cover almost every interaction scenario. Here are the big ones you'll see:

- TEXT_MESSAGE_*: Events that stream generated text piece by piece. This typically involves _START, _CONTENT (the token stream), and _END events, which together create the familiar "typing" effect.

- TOOL_CALL: Signals that the agent wants to do something, like check the weather or query a database. Crucially, AG-UI can stream the arguments of the tool call, allowing your UI to pre-fill forms before the agent even finishes "speaking."

- STATE_DELTA: This is the heavy lifter for collaboration. Instead of resending the whole document every time a letter changes, the agent sends a tiny "diff" (like "add 'hello' at index 5").

- INTERRUPT: The safety valve. If an agent hits a sensitive action (like "Delete Database"), it emits an interrupt event. The flow pauses until the user sends back an approval event.
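Streaming tool-call arguments means the client receives JSON in fragments. The sketch below assumes the TOOL_CALL_START/ARGS/END split used by AG-UI, with toolCallId, toolCallName, and delta fields, and buffers the fragments per call. ToolCallAssembler and previewFields are hypothetical helpers. The preview is deliberately crude: it uses a regex and only recovers string fields whose closing quote has already arrived.

```javascript
// Sketch: assemble streamed tool-call arguments from TOOL_CALL_START / ARGS / END
// events (toolCallId, toolCallName, delta fields, as in AG-UI). Class and helper
// names are hypothetical.
class ToolCallAssembler {
  constructor() {
    this.calls = new Map(); // toolCallId -> { name, buffer, args }
  }

  handle(event) {
    if (event.type === "TOOL_CALL_START") {
      this.calls.set(event.toolCallId, { name: event.toolCallName, buffer: "", args: null });
    }
    const call = this.calls.get(event.toolCallId);
    if (!call) return undefined; // not a tool call we are tracking
    if (event.type === "TOOL_CALL_ARGS") call.buffer += event.delta;
    // The arguments are only guaranteed to be complete JSON once the call ends
    if (event.type === "TOOL_CALL_END") call.args = JSON.parse(call.buffer);
    return call;
  }
}

// Best-effort preview for pre-filling a form: returns string fields whose
// closing quote has already arrived. Crude on purpose; not a JSON parser.
function previewFields(partialJson) {
  const fields = {};
  const pattern = /"(\w+)"\s*:\s*"((?:[^"\\]|\\.)*)"/g;
  for (const [, key, rawValue] of partialJson.matchAll(pattern)) {
    fields[key] = JSON.parse(`"${rawValue}"`); // decode JSON escapes such as \n
  }
  return fields;
}

const assembler = new ToolCallAssembler();
assembler.handle({ type: "TOOL_CALL_START", toolCallId: "t1", toolCallName: "get_weather" });
const afterFirst = assembler.handle({ type: "TOOL_CALL_ARGS", toolCallId: "t1", delta: '{"city": "Par' });
const firstPreview = previewFields(afterFirst.buffer); // nothing complete yet
const afterSecond = assembler.handle({ type: "TOOL_CALL_ARGS", toolCallId: "t1", delta: 'is", "unit": "c' });
const secondPreview = previewFields(afterSecond.buffer); // city is complete, unit is not
assembler.handle({ type: "TOOL_CALL_ARGS", toolCallId: "t1", delta: 'elsius"}' });
const finished = assembler.handle({ type: "TOOL_CALL_END", toolCallId: "t1" });
console.log(finished.name, finished.args); // get_weather { city: 'Paris', unit: 'celsius' }
```

The full arguments are parsed only at TOOL_CALL_END. The preview is just enough to fill in a form field as soon as its value is complete.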

Multimodality and thinking steps

The protocol isn't limited to text. It handles multimodality, by which I mean streaming images, audio, and file attachments alongside the conversation. It also standardizes Thinking Steps, separating the agent's internal thoughts (reasoning traces) from the final public response. You might have noticed a similar pattern when using tools like ChatGPT, where progress indicators or intermediate steps are surfaced separately from the final response. This lets you decide whether to show users execution progress or simply the end result.
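Choosing between showing progress and showing only the final answer can be handled entirely in the view layer. This sketch assumes intermediate work arrives as STEP_STARTED/STEP_FINISHED events with a stepName field, and that the answer arrives as TEXT_MESSAGE_CONTENT deltas. The buildView function and its showSteps option are hypothetical.

```javascript
// Sketch: derive two views from one stream, execution progress and final answer.
// Assumes STEP_STARTED / STEP_FINISHED events with a `stepName` field and
// TEXT_MESSAGE_CONTENT events with a `delta`; buildView is hypothetical.
function buildView(events, { showSteps }) {
  const view = { steps: [], answer: "" };
  for (const event of events) {
    if (event.type === "TEXT_MESSAGE_CONTENT") {
      view.answer += event.delta; // the public response is always shown
    } else if (showSteps && event.type === "STEP_STARTED") {
      view.steps.push({ name: event.stepName, done: false });
    } else if (showSteps && event.type === "STEP_FINISHED") {
      const step = view.steps.find((s) => s.name === event.stepName && !s.done);
      if (step) step.done = true;
    }
  }
  return view;
}

const stream = [
  { type: "STEP_STARTED", stepName: "fetch_commit_logs" },
  { type: "STEP_FINISHED", stepName: "fetch_commit_logs" },
  { type: "STEP_STARTED", stepName: "check_cves" },
  { type: "TEXT_MESSAGE_CONTENT", messageId: "m1", delta: "The lockfile is stale." },
];

const verbose = buildView(stream, { showSteps: true }); // two steps: one done, one still running
const compact = buildView(stream, { showSteps: false }); // no steps, same answer
console.log(verbose.steps.length, compact.steps.length, compact.answer); // 2 0 The lockfile is stale.
```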

AG-UI Building Blocks

Now that you understand the flow, let's look at the specific features that make AG-UI powerful for developers.

Generative UI

Text is great, but sometimes a chart is better. Generative UI allows an agent to decide at runtime that it should show you a UI component instead of text.

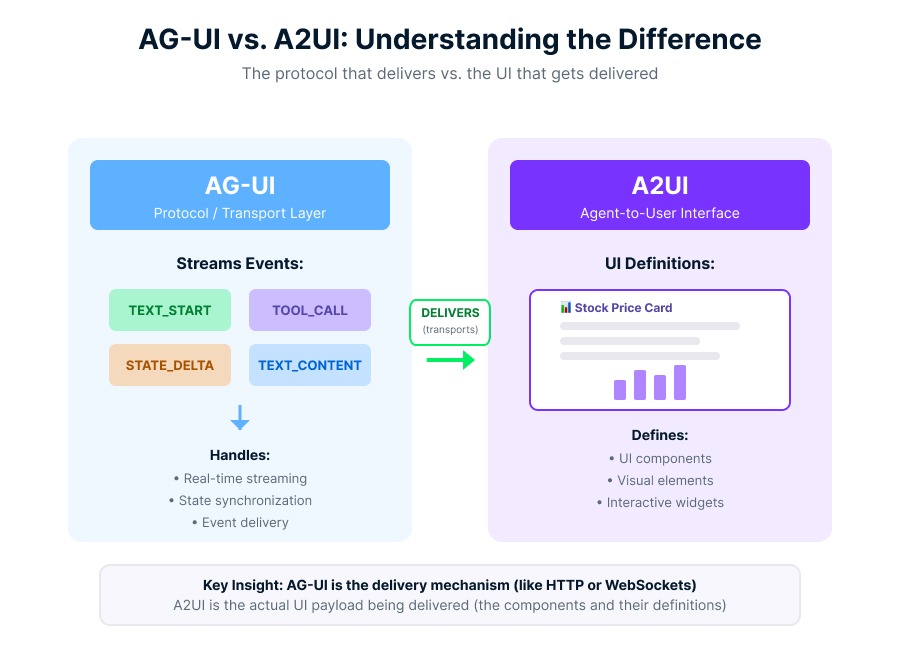

AG-UI streams events; A2UI defines components. Image by Author.

There is a distinction here that often trips people up: AG-UI is the delivery mechanism (transport layer), and A2UI (Agent-to-User Interface) is the UI definition (payload). An agent can use an AG-UI TOOL_CALL event to transport an A2UI payload: a declarative JSON tree that describes a UI component (like a "Stock Price Card"). Your frontend receives this and renders the actual chart.
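On the frontend, rendering a generative UI payload usually means walking a declarative tree and mapping each node to a component you already trust. The node schema used here ({ component, props, children }) is a hypothetical simplification, not the actual A2UI format. The catalog entries render to HTML strings only so the example runs outside a browser.

```javascript
// Sketch: render a declarative UI tree using only pre-registered components.
// The node schema { component, props, children } is a hypothetical
// simplification, NOT the real A2UI format. Components return HTML strings
// so the example runs outside a browser.
const escapeHtml = (value) =>
  String(value).replace(/[&<>"']/g, (ch) => ({
    "&": "&amp;", "<": "&lt;", ">": "&gt;", '"': "&quot;", "'": "&#39;",
  })[ch]);

const catalog = {
  Card: (props, children) => `<div class="card"><h3>${escapeHtml(props.title)}</h3>${children}</div>`,
  Metric: (props) => `<span class="metric">${escapeHtml(props.label)}: ${escapeHtml(props.value)}</span>`,
  Text: (props) => `<p>${escapeHtml(props.value)}</p>`,
};

function render(node) {
  // Only components the frontend registered itself can be rendered
  if (!node || !Object.hasOwn(catalog, node.component)) return "";
  const children = (node.children ?? []).map(render).join("");
  return catalog[node.component](node.props ?? {}, children);
}

const payload = {
  component: "Card",
  props: { title: "ACME Corp" },
  children: [
    { component: "Metric", props: { label: "Price", value: 42.5 } },
    { component: "Script", props: { src: "evil.js" } }, // unknown: dropped
  ],
};

console.log(render(payload));
// <div class="card"><h3>ACME Corp</h3><span class="metric">Price: 42.5</span></div>
```

The key design choice is the allow-list. The agent can only pick from components the frontend registered, and every value is escaped, so a payload can describe UI but cannot inject code.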

Shared state with JSON Patch

Handling shared state is tricky. If the agent sends a massive 5MB context object every second, your app will crawl.

AG-UI solves this with JSON Patch (RFC 6902). As I mentioned earlier regarding state synchronization, when the agent changes a value in its memory, it calculates the difference and sends a STATE_DELTA event.

```json
[
  {
    "op": "replace",
    "path": "/user/status",
    "value": "active"
  }
]
```

The client receives this lightweight instruction and applies it to its local state. This efficiency is what makes real-time collaboration with agents possible.
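Applying a STATE_DELTA comes down to walking each JSON Pointer path and performing the operation. Below is a minimal sketch that covers only the add, remove, and replace operations from RFC 6902; move, copy, and test are omitted. In production you would typically use an established JSON Patch library instead. The applyPatch function here is a hypothetical helper. It returns a new object and throws on an invalid path, so a bad patch leaves the previous state untouched.

```javascript
// Minimal sketch of RFC 6902 "add", "remove", and "replace" (no "move",
// "copy", or "test"). applyPatch is a hypothetical helper: it returns a new
// document and throws on a bad path, so the caller keeps its old state.
// It does not block keys like __proto__; see "State security" below.
function parsePointer(path) {
  if (path === "") return []; // "" points at the whole document
  if (!path.startsWith("/")) throw new Error(`Invalid JSON Pointer: ${path}`);
  // RFC 6901 escaping: "~1" means "/", "~0" means "~" (decode in that order)
  return path.slice(1).split("/").map((t) => t.replace(/~1/g, "/").replace(/~0/g, "~"));
}

function applyPatch(doc, operations) {
  let result = JSON.parse(JSON.stringify(doc)); // deep copy of plain JSON state
  for (const { op, path, value } of operations) {
    const tokens = parsePointer(path);
    if (tokens.length === 0) {
      if (op === "remove") throw new Error("Cannot remove the document root");
      result = value; // add/replace at the root swap the whole document
      continue;
    }
    const key = tokens.pop();
    let parent = result;
    for (const token of tokens) {
      if (parent === null || typeof parent !== "object" || !Object.hasOwn(parent, token)) {
        throw new Error(`Path not found: ${path}`);
      }
      parent = parent[token];
    }
    if (Array.isArray(parent)) {
      // "-" means "past the last element" (valid for "add" only)
      const index = key === "-" ? parent.length : /^(0|[1-9][0-9]*)$/.test(key) ? Number(key) : -1;
      const limit = op === "add" ? parent.length : parent.length - 1;
      if (index < 0 || index > limit) throw new Error(`Bad array index: ${path}`);
      if (op === "add") parent.splice(index, 0, value);
      else if (op === "replace") parent[index] = value;
      else if (op === "remove") parent.splice(index, 1);
      else throw new Error(`Unsupported op: ${op}`);
    } else if (parent !== null && typeof parent === "object") {
      if (op !== "add" && !Object.hasOwn(parent, key)) throw new Error(`Path not found: ${path}`);
      if (op === "add" || op === "replace") parent[key] = value;
      else if (op === "remove") delete parent[key];
      else throw new Error(`Unsupported op: ${op}`);
    } else {
      throw new Error(`Cannot apply ${op} inside a non-container: ${path}`);
    }
  }
  return result;
}

const state = { user: { status: "idle", tags: ["agent"] } };
const next = applyPatch(state, [
  { op: "replace", path: "/user/status", value: "active" },
  { op: "add", path: "/user/tags/-", value: "beta" },
]);
console.log(next.user.status, next.user.tags); // active [ 'agent', 'beta' ]
console.log(state.user.status); // idle (the original is untouched)
```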

AG-UI in the Protocol Landscape

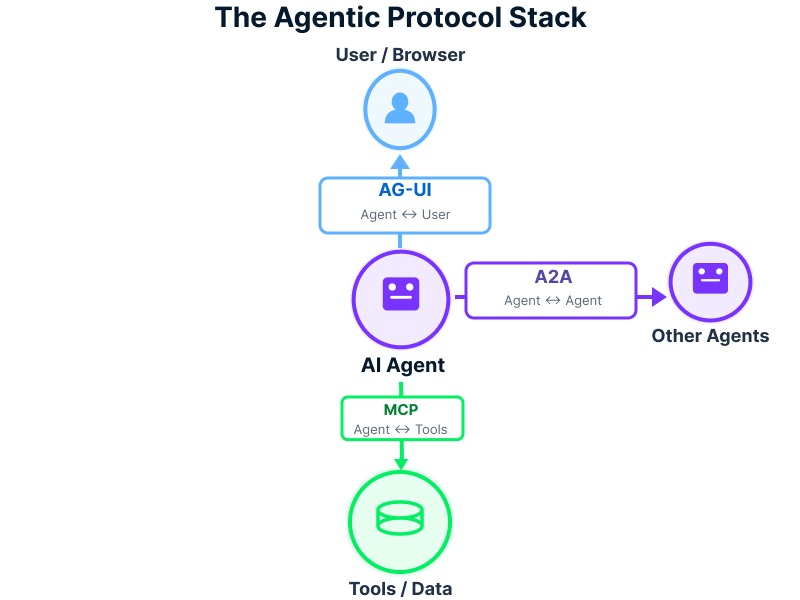

You might have heard of other protocols like MCP (Model Context Protocol). It's important to know where AG-UI fits in the stack so you don't use the wrong tool for the job.

The agentic stack

I like to think of it as a triangle of communication:

- MCP (Agent ↔ Tools): This connects the agent to the backend world: databases, GitHub repos, and internal APIs. It's about getting information.

- A2A (Agent ↔ Agent): This allows agents to talk to each other, delegating tasks to sub-agents.

- AG-UI (Agent ↔ User): This is the "Last Mile." It connects the agent's intelligence to the human user.

The Protocol Trinity connecting agents. Image by Author.

AG-UI doesn't care how the agent got the data (that's MCP's job, as I mentioned above); it only cares about how to show that data to you.

Implementing AG-UI

Let's see what this means in practice. Developers typically engage with AG-UI in one of three ways: building (1) agentic apps (connecting a frontend to an agent), (2) integrations (making a framework compatible), or (3) clients (creating new frontends). Most of you will be in the first category.

I'll walk through the code in a moment, but first, understand that you typically won't write the raw protocol handlers yourself; libraries handle the packaging. Still, understanding the flow is essential for debugging.

Establishing the event channel

Most implementations use Server-Sent Events (SSE) because they are simple, firewall-friendly, and well suited to one-way streaming from server to client. SSE keeps the setup lightweight compared to WebSockets, although AG-UI itself is transport-agnostic and can also run over other transports, including WebSockets.

In a typical setup (like with the Microsoft Agent Framework or CopilotKit), your server exposes an HTTP endpoint that responds with a text/event-stream body and keeps the connection open while the agent runs.
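On the server, an SSE stream is a long-lived HTTP response with the text/event-stream content type. Each event is written as a data: line followed by a blank line. Below is a minimal Node.js sketch with no framework. In it, runAgent is a hypothetical async generator standing in for your agent, and handleAgentRequest is an illustrative request handler.

```javascript
// Sketch of the server side of an SSE channel (Node.js, no framework).
// Each event is serialized as one SSE frame: "data: <json>\n\n".
// runAgent is a hypothetical stand-in for a real agent.
function encodeEvent(event) {
  return `data: ${JSON.stringify(event)}\n\n`;
}

async function* runAgent() {
  yield { type: "RUN_STARTED", threadId: "t1", runId: "r1" };
  yield { type: "TEXT_MESSAGE_START", messageId: "m1", role: "assistant" };
  yield { type: "TEXT_MESSAGE_CONTENT", messageId: "m1", delta: "Hello!" };
  yield { type: "TEXT_MESSAGE_END", messageId: "m1" };
  yield { type: "RUN_FINISHED", threadId: "t1", runId: "r1" };
}

async function handleAgentRequest(req, res) {
  res.writeHead(200, {
    "Content-Type": "text/event-stream",
    "Cache-Control": "no-cache",
    Connection: "keep-alive",
  });
  for await (const event of runAgent()) {
    res.write(encodeEvent(event)); // written to the socket as soon as it is produced
  }
  res.end(); // closing the response ends the stream
}
```

To try it, pass the handler to require("node:http").createServer(handleAgentRequest).listen(3000). Because JSON.stringify escapes newlines inside strings, each event fits on a single data: line.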

Simple event subscription

Here is a conceptual example of how a frontend client listens to the stream. Notice how we handle different event types to update the UI.

```javascript
// Connect to the AG-UI stream (the endpoint path is illustrative).
// updateChatLog, showToolIndicator, applyPatch, showApprovalModal, and
// localState are placeholders for your own UI code.
const eventSource = new EventSource('/api/agent/stream');

eventSource.onmessage = (event) => {
  const data = JSON.parse(event.data);

  switch (data.type) {
    case 'TEXT_MESSAGE_CONTENT':
      // Append the streamed text chunk (the event's `delta`) to the chat window
      updateChatLog(data.delta);
      break;

    case 'TOOL_CALL_START':
      // Show a "Processing..." indicator or the specific tool UI
      showToolIndicator(data.toolCallName);
      break;

    case 'STATE_DELTA':
      // Apply the JSON Patch operations (carried in `delta`) to our local state store
      applyPatch(localState, data.delta);
      break;

    case 'INTERRUPT':
      // Prompt the user for permission
      showApprovalModal(data.message);
      break;

    default:
      // Ignore event types this client doesn't handle (yet)
      break;
  }
};
```

This pattern hides the complexity of the agent's internal logic. Your frontend is just a "player" for the events the agent sends.
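One practical wrinkle is that the browser's EventSource API only issues GET requests, so it cannot send the conversation or run input in a request body. Many clients therefore POST the input with fetch and parse the SSE frames from the response body themselves. Here is a minimal sketch, assuming a hypothetical endpoint that accepts JSON and replies with text/event-stream. SSEParser and streamAgent are illustrative names. The parser handles only data: lines and ignores other SSE fields such as event:, id:, and retry:.

```javascript
// Sketch: incremental SSE parser plus a fetch-based client that can POST input.
// Names are hypothetical; only "data:" lines are handled.
class SSEParser {
  constructor(onEvent) {
    this.onEvent = onEvent;
    this.buffer = ""; // holds partial frames across network chunks
  }

  push(chunk) {
    this.buffer = (this.buffer + chunk).replace(/\r\n/g, "\n");
    let boundary;
    // A blank line ("\n\n") terminates each SSE frame
    while ((boundary = this.buffer.indexOf("\n\n")) !== -1) {
      const frame = this.buffer.slice(0, boundary);
      this.buffer = this.buffer.slice(boundary + 2);
      const data = frame
        .split("\n")
        .filter((line) => line.startsWith("data:"))
        .map((line) => line.slice(5).replace(/^ /, "")) // drop "data:" and one optional space
        .join("\n");
      if (data) this.onEvent(JSON.parse(data));
    }
  }
}

async function streamAgent(url, input, onEvent) {
  const response = await fetch(url, {
    method: "POST",
    headers: { "Content-Type": "application/json", Accept: "text/event-stream" },
    body: JSON.stringify(input),
  });
  if (!response.ok) throw new Error(`Agent request failed: ${response.status}`);
  const reader = response.body.getReader();
  const decoder = new TextDecoder();
  const parser = new SSEParser(onEvent);
  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    parser.push(decoder.decode(value, { stream: true }));
  }
}
```

The switch statement from the onmessage handler above can serve as the onEvent callback unchanged. The parser buffers partial input, so it copes with frames split across network chunks.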

Integrations and Ecosystem Support

You don't have to build all this from scratch. The ecosystem is maturing rapidly, with major platforms and frameworks converging on similar event-driven interaction patterns.

The "Golden Triangle" of tools

Microsoft describes a "Golden Triangle" for agent development:

- DevUI: For visualizing the agent's thought process (debugging).

- OpenTelemetry: For measuring performance and cost.

- AG-UI: For connecting to the user.

Supported frameworks

Beyond the major players, support is growing across the board:

- Microsoft Agent Framework: Has native middleware for ASP.NET Core (Microsoft.Agents.AI.Hosting.AGUI).

- CopilotKit: Acts as the "Browser" for AG-UI, providing a full React frontend that knows how to render these events out of the box.

- Other agent frameworks: Python frameworks like LangGraph, CrewAI, Pydantic AI, Agno, and LlamaIndex, as well as the TypeScript framework Mastra, can all be exposed as AG-UI endpoints.

- SDKs: While TypeScript and Python are dominant, SDKs are active or in development for Kotlin, Go, Dart, Java, and Rust.

Reliability, Debugging, and Security

Opening a direct line of communication between an AI and a browser introduces risks. Here is how to keep your application safe.

The trusted frontend server

Never let a client connect directly to your raw agent runtime. Always put a Trusted Frontend Server in between (the backend-for-frontend, or BFF, pattern). This server acts as a gatekeeper: it adds authentication, rate limiting, and input validation before passing the user's message to the agent.
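Here is a minimal sketch of the gatekeeping step. It assumes a hypothetical sessions map from bearer tokens to users and an arbitrary length limit. Rate limiting is left out to keep the example short.

```javascript
// Sketch of gatekeeping in a trusted frontend server (BFF). `sessions`
// (token -> user) and MAX_MESSAGE_LENGTH are hypothetical; rate limiting is
// omitted for brevity.
const MAX_MESSAGE_LENGTH = 4000;

function gatekeep(request, sessions) {
  // 1. Authentication: resolve the user from a server-side session
  const token = (request.headers.authorization ?? "").replace(/^Bearer /, "");
  const user = sessions.get(token);
  if (!user) return { status: 401, error: "Not authenticated" };

  // 2. Input validation: type and size checks before the agent sees anything
  const message = request.body?.message;
  if (typeof message !== "string" || message.trim() === "") {
    return { status: 400, error: "Message must be a non-empty string" };
  }
  if (message.length > MAX_MESSAGE_LENGTH) {
    return { status: 413, error: "Message too long" };
  }

  // 3. Forward only what the agent needs; identity comes from the session,
  //    never from fields the browser could forge
  return { status: 200, forward: { userId: user.id, message: message.trim() } };
}

const sessions = new Map([["token-abc", { id: "user-1" }]]);
console.log(gatekeep({ headers: {}, body: { message: "hi" } }, sessions).status); // 401
const accepted = gatekeep(
  { headers: { authorization: "Bearer token-abc" }, body: { message: " Roll back the merge ", userId: "admin" } },
  sessions
);
console.log(accepted.forward); // { userId: 'user-1', message: 'Roll back the merge' }
```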

Security considerations

Prompt injection

Malicious users might try to override your agent's instructions through prompt injection attacks. Your middleware should validate and sanitize inputs, but filtering alone can't fully prevent prompt injection, so also limit what the agent is allowed to do.

Unauthorized tool calls

Just because an agent can delete a file doesn't mean the current user should be allowed to. Your trusted server must filter the tools available to the agent based on the user's permissions.
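One way to enforce this is to derive the agent's tool list from the user's role on the server, then check again when a call arrives. The tools, roles, and helper names below are hypothetical.

```javascript
// Sketch: the server decides which tools the agent may see for this user.
// Tool names, roles, and ranks are hypothetical examples.
const TOOLS = [
  { name: "read_file", requiredRole: "viewer" },
  { name: "write_file", requiredRole: "editor" },
  { name: "delete_file", requiredRole: "admin" },
];
const ROLE_RANK = { viewer: 1, editor: 2, admin: 3 };

// Tools offered to the agent for this user's run
function toolsForUser(user, tools = TOOLS) {
  const rank = ROLE_RANK[user.role] ?? 0;
  return tools.filter((tool) => rank >= ROLE_RANK[tool.requiredRole]);
}

// Re-check at execution time too: the model might still emit a call to a
// tool it was never offered.
function authorizeToolCall(user, toolName, tools = TOOLS) {
  return toolsForUser(user, tools).some((tool) => tool.name === toolName);
}

console.log(toolsForUser({ role: "editor" }).map((t) => t.name)); // [ 'read_file', 'write_file' ]
console.log(authorizeToolCall({ role: "editor" }, "delete_file")); // false
```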

State security

Since state is shared via JSON patches, be wary of prototype pollution. Ensure your patching library forbids modifications to protected properties like __proto__.
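If you can't verify what your patching library does, a small pre-check can reject dangerous paths before any patch is applied. This sketch blocks the usual prototype-reaching keys in both the path and from fields; from is used by the RFC 6902 move and copy operations. The assertSafePatch helper is hypothetical. It complements a hardened patch library rather than replacing one.

```javascript
// Sketch: reject JSON Patch operations whose pointers touch keys that can
// reach an object's prototype. The blocked-key list is a common choice and
// is not complete protection on its own.
const FORBIDDEN_KEYS = new Set(["__proto__", "constructor", "prototype"]);

// Split a JSON Pointer into decoded tokens ("~1" -> "/", "~0" -> "~")
function decodePointer(path) {
  return path.split("/").slice(1).map((t) => t.replace(/~1/g, "/").replace(/~0/g, "~"));
}

function assertSafePatch(operations) {
  for (const op of operations) {
    for (const field of ["path", "from"]) {
      if (op[field] === undefined) continue;
      const bad = decodePointer(op[field]).find((token) => FORBIDDEN_KEYS.has(token));
      if (bad) throw new Error(`Blocked JSON Patch ${field} segment: ${bad}`);
    }
  }
  return operations; // safe to hand to the patching library
}

assertSafePatch([{ op: "replace", path: "/user/status", value: "active" }]); // passes
try {
  assertSafePatch([{ op: "add", path: "/__proto__/isAdmin", value: true }]);
} catch (err) {
  console.log(err.message); // Blocked JSON Patch path segment: __proto__
}
```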

Debugging with the Dojo

If your agent is acting weird, use the AG-UI Dojo. It's a test suite that lets you visualize the raw event stream. It helps you catch issues like malformed JSON patches or tool calls that are firing but not returning results.
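The same kinds of checks can be scripted against a recorded event log. The lintEvents helper below is hypothetical and not part of the Dojo. It flags tool calls that start but never end, and STATE_DELTA events whose payload isn't a JSON Patch array. It assumes AG-UI-style toolCallId and delta fields.

```javascript
// Sketch of a tiny event-log linter. Checks are illustrative, not an
// official validator; field names follow AG-UI-style events.
function lintEvents(events) {
  const problems = [];
  const openToolCalls = new Map(); // toolCallId -> index of its TOOL_CALL_START
  events.forEach((event, i) => {
    if (event.type === "TOOL_CALL_START") openToolCalls.set(event.toolCallId, i);
    if (event.type === "TOOL_CALL_END" && !openToolCalls.delete(event.toolCallId)) {
      problems.push(`#${i}: TOOL_CALL_END for unknown call ${event.toolCallId}`);
    }
    if (event.type === "STATE_DELTA") {
      const ok = Array.isArray(event.delta) &&
        event.delta.every((op) => op && typeof op.op === "string" && typeof op.path === "string");
      if (!ok) problems.push(`#${i}: malformed STATE_DELTA`);
    }
  });
  // Anything still open never received its TOOL_CALL_END
  for (const [id, i] of openToolCalls) {
    problems.push(`#${i}: tool call ${id} never finished`);
  }
  return problems;
}

const report = lintEvents([
  { type: "TOOL_CALL_START", toolCallId: "t1", toolCallName: "query_db" },
  { type: "STATE_DELTA", delta: { op: "replace" } }, // object, not an array
  { type: "TOOL_CALL_END", toolCallId: "t2" },
]);
report.forEach((line) => console.log(line));
// #1: malformed STATE_DELTA
// #2: TOOL_CALL_END for unknown call t2
// #0: tool call t1 never finished
```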

Conclusion

We've covered the architecture, the event types, and the implementation patterns of AG-UI.

Now, I recognize that the average solo developer or someone at a small company isn't realistically going to "adopt AG-UI" and suddenly "decouple their frontend from their backend." All this would require significant architectural decisions, buy-in from multiple teams, and resources most people don't have.

But understanding AG-UI matters even if you're not implementing it. When you're evaluating agent frameworks or deciding whether to build in-house or use a platform, knowing what "good" agent-UI communication looks like gives you better judgment. You'll recognize when a tool is forcing you into brittle patterns.

If you want to dive deeper into building these systems, check out our Introduction to AI Agents and Building Scalable Agentic Systems courses to take your skills to the next level.

I’m a data engineer and community builder who works across data pipelines, cloud, and AI tooling while writing practical, high-impact tutorials for DataCamp and emerging developers.

AG-UI FAQs

Does streaming really make the agent faster?

It doesn't make the AI think faster, but it makes it feel faster to the user. Seeing the first word appear in 200ms keeps people engaged, even if the full answer takes ten seconds. It is a psychological trick as much as a technical one.

What happens if I want to add voice support later?

You are already halfway there. Because AG-UI separates the content from the display, you can just plug in a Text-to-Speech service to read the TEXT_MESSAGE_* events as they arrive. The protocol handles the data flow; you just choose how to render it.

Is this hard to debug if something breaks?

Actually, it can be easier than traditional code. Since everything is an event, you can literally "replay" a bug. If a user reports a crash, you just grab their event log and run it through your dev environment to see exactly what happened.

Am I locked into a specific vendor with this?

No, and that is the best part. AG-UI is an open standard, not a product. As long as both backends expose AG-UI endpoints, you can switch from LangChain to Microsoft Semantic Kernel without rewriting your frontend connection logic.

How do I handle updates to the protocol?

The protocol uses semantic versioning. Most libraries are built to be forward-compatible, meaning if the server sends a new event type your client doesn't know, it will usually just ignore it rather than crashing.

Can I run AG-UI agents locally without a cloud backend?

Absolutely. AG-UI is just a communication pattern, not a service. You can run both the agent and the frontend on your laptop if you want. Some developers use this for offline demos or privacy-sensitive applications.

What if my server is slow and events take forever to arrive?

The beauty of streaming is that slow is better than frozen. Even if your agent takes 30 seconds to finish, the user sees progress every few hundred milliseconds. Compare that to a REST call where they stare at a spinner for 30 seconds and assume it crashed.

Can multiple users talk to the same agent at once?

Yes, but you need to handle session isolation. Each user gets their own event stream (usually tied to a session ID or WebSocket connection). The agent can serve hundreds of users simultaneously, just like a web server handles multiple HTTP requests.
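Here is a minimal sketch of that isolation, assuming a hypothetical SessionHub that keeps a separate set of subscribers per session ID. In a real server, each subscriber would write to a single SSE response or WebSocket.

```javascript
// Sketch: one channel per session so one user's events never reach another
// user's stream. SessionHub is hypothetical.
class SessionHub {
  constructor() {
    this.sessions = new Map(); // sessionId -> Set of send callbacks
  }

  subscribe(sessionId, send) {
    if (!this.sessions.has(sessionId)) this.sessions.set(sessionId, new Set());
    this.sessions.get(sessionId).add(send);
    // Return an unsubscribe function to call when the connection closes
    return () => {
      const subscribers = this.sessions.get(sessionId);
      if (!subscribers) return;
      subscribers.delete(send);
      if (subscribers.size === 0) this.sessions.delete(sessionId);
    };
  }

  publish(sessionId, event) {
    // Only subscribers of this session receive the event
    for (const send of this.sessions.get(sessionId) ?? []) send(event);
  }
}

const hub = new SessionHub();
const aliceInbox = [];
const bobInbox = [];
const closeAlice = hub.subscribe("session-alice", (e) => aliceInbox.push(e));
hub.subscribe("session-bob", (e) => bobInbox.push(e));

hub.publish("session-alice", { type: "TEXT_MESSAGE_CONTENT", messageId: "m1", delta: "Hi Alice" });
console.log(aliceInbox.length, bobInbox.length); // 1 0
closeAlice(); // Alice disconnects; her session entry is cleaned up
```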

How do I prevent someone from spamming my agent with requests?

Standard rate limiting applies here. Your trusted frontend server should throttle requests per user (e.g., max 10 messages per minute). Since AG-UI runs over HTTP or WebSockets, you can use the same tools you already use for API rate limiting.
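Here is a minimal sketch of a per-user sliding-window limiter that allows 10 messages per 60 seconds by default and assumes a single server process. RateLimiter and its injectable clock are hypothetical. Multi-instance deployments would usually keep the counters in a shared store instead.

```javascript
// Sketch: per-user sliding-window rate limiter (in-memory, single process).
class RateLimiter {
  constructor({ limit = 10, windowMs = 60_000, now = () => Date.now() } = {}) {
    this.limit = limit;
    this.windowMs = windowMs;
    this.now = now; // injectable clock, handy for tests
    this.hits = new Map(); // userId -> timestamps of recent allowed requests
  }

  allow(userId) {
    const t = this.now();
    // Keep only requests that are still inside the window
    const recent = (this.hits.get(userId) ?? []).filter((ts) => t - ts < this.windowMs);
    if (recent.length >= this.limit) {
      this.hits.set(userId, recent);
      return false;
    }
    recent.push(t);
    this.hits.set(userId, recent);
    return true;
  }
}

let clock = 0; // fake clock in milliseconds
const limiter = new RateLimiter({ limit: 10, windowMs: 60_000, now: () => clock });
const results = Array.from({ length: 11 }, () => limiter.allow("user-1"));
console.log(results.filter(Boolean).length); // 10 (the 11th request is rejected)
clock = 60_000; // one full window later
console.log(limiter.allow("user-1")); // true
```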

How do I write automated tests for an AG-UI agent?

You can mock the event stream. Record a real session's events to a file, then replay them in your test suite to verify your frontend handles them correctly. It is like snapshot testing, but for conversations instead of UI components.
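Here is a minimal sketch of record-and-replay, assuming events are stored as newline-delimited JSON. The reduceChat function is a hypothetical stand-in for your real UI reducer, and the inline fixture would normally live in a file.

```javascript
// Sketch: record events as newline-delimited JSON, replay them through a
// reducer, and compare the result with a stored snapshot.
function reduceChat(state, event) {
  if (event.type === "TEXT_MESSAGE_CONTENT") {
    return { ...state, text: state.text + event.delta };
  }
  if (event.type === "RUN_FINISHED") return { ...state, done: true };
  return state; // ignore event types this view does not use
}

const toNdjson = (events) => events.map((e) => JSON.stringify(e)).join("\n");
const fromNdjson = (text) => text.split("\n").filter(Boolean).map((line) => JSON.parse(line));

function replay(ndjson) {
  return fromNdjson(ndjson).reduce(reduceChat, { text: "", done: false });
}

// In a test runner you would load `recorded` from a fixture file instead
const recorded = toNdjson([
  { type: "RUN_STARTED", threadId: "t1", runId: "r1" },
  { type: "TEXT_MESSAGE_CONTENT", messageId: "m1", delta: "Rolled " },
  { type: "TEXT_MESSAGE_CONTENT", messageId: "m1", delta: "back." },
  { type: "RUN_FINISHED", threadId: "t1", runId: "r1" },
]);
const snapshot = { text: "Rolled back.", done: true };

console.log(JSON.stringify(replay(recorded)) === JSON.stringify(snapshot)); // true
```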