
AI agents are reshaping how tasks are automated, decisions are made, and software systems collaborate. But as organizations build more autonomous agents, the need for standardized communication across vendors and frameworks becomes essential. That’s where Agent2Agent (A2A) comes in.

A2A is an open, vendor-neutral protocol developed by Google to standardize collaboration among AI agents across different systems.

In this tutorial, I’ll walk you through how A2A works, its design principles, how it can power real-world, multi-agent workflows across domains, and how it differs from MCP.

What Is Agent2Agent (A2A)?

Agent2Agent (A2A) is an open protocol that enables interoperability between opaque (black-box) agents. It allows AI agents to:

- Discover each other

- Exchange structured tasks

- Stream responses

- Handle multi-turn conversations

- Operate across modalities like text, images, video, and data.

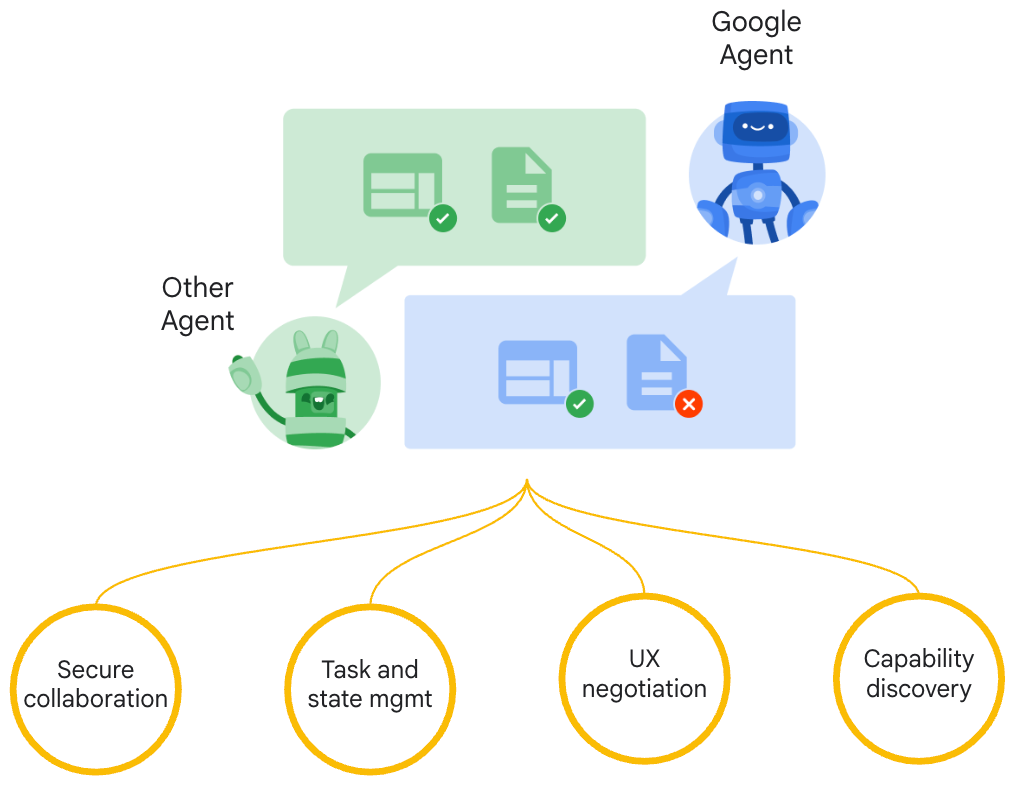

Let me try explaining the main idea of A2A by walking you through the diagram below:

Source: Google

In the image, two agents are represented: a Google Agent (in blue) and another agent (in green), likely from a third-party service. Both agents attempt to process two types of documents or tasks. The green agent succeeds at both, as indicated by the green check marks, while the Google agent succeeds with one and fails with the other.

This discrepancy highlights the need for negotiation and information sharing, central components of the A2A protocol.

Agent2Agent design principles

A2A is designed around five core principles that let agents accomplish tasks for end-users without sharing memory, thoughts, or tools:

- Embrace agentic capabilities: Agents collaborate without sharing memory, tools, or execution plans.

- Built on open standards: A2A uses HTTP, JSON-RPC, and SSE to ensure easy interoperability with existing tech stacks.

- Secure by default: It follows OpenAPI authentication schemes and provides enterprise-grade security.

- Supports long-running tasks: A2A is designed to handle background tasks, human-in-the-loop approvals, and streaming updates.

- Modality agnostic: It handles images, audio, PDFs, HTML, JSON, and other structured/unstructured formats.

These design principles make A2A the backbone of scalable, enterprise-ready, multi-agent ecosystems.

How Does A2A Work?

In this section, we will dive into the inner workings of the Agent2Agent protocol and understand the architecture and lifecycle of agent communication. We’ll explore the main actors involved, the transport and authentication layers, and discovery via Agent Cards.

Actors and protocols

The A2A protocol defines three core actors:

- User: The end-user initiating a task.

- Client agent: The requester that formulates and sends a task on behalf of the user.

- Remote agent: The recipient agent that performs the task and sends back results.

Getting back to our diagram example above, the blue agent is the client agent, and the green agent is the remote agent.

Agent cards and discovery

Agents publish their metadata and capabilities via a standardized JSON document called an Agent Card, usually hosted at /.well-known/agent.json. This card contains:

- Name, version, and hosting URL

- Description and service provider

- Supported input/output modalities and content types

- Authentication methods

- List of agent skills with tags and examples

The discovery can be handled through DNS, registries, marketplaces, or private catalogs.
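To make the card more concrete, here is a minimal Python sketch of what an Agent Card might contain and how a client agent could look for a matching skill in it. The field names loosely follow the bullets above rather than the full A2A schema, and the agent name, URL, and skill ID are hypothetical. The card is parsed from an in-memory string instead of being fetched from /.well-known/agent.json.

```python
import json

# Hypothetical Agent Card. Field names are illustrative and do not
# claim to reproduce the complete A2A Agent Card schema.
AGENT_CARD_JSON = """
{
  "name": "Hardware Diagnostic Agent",
  "version": "1.0.0",
  "url": "https://agents.example.com/hardware",
  "description": "Runs device health checks.",
  "provider": {"organization": "Example Corp"},
  "defaultInputModes": ["text"],
  "defaultOutputModes": ["text", "file"],
  "authentication": {"schemes": ["bearer"]},
  "skills": [
    {
      "id": "device-check",
      "name": "Device check",
      "tags": ["hardware", "diagnostics"],
      "examples": ["My laptop will not turn on"]
    }
  ]
}
"""


def find_skills(card: dict, tag: str) -> list:
    """Return the IDs of all skills in the card that carry the given tag."""
    return [s["id"] for s in card.get("skills", []) if tag in s.get("tags", [])]


card = json.loads(AGENT_CARD_JSON)
matching = find_skills(card, "diagnostics")
print(card["name"], "->", matching)
```

A client agent would typically run a check like this over several cards and pick the remote agent whose skills and modalities fit the task.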

Core communication objects

At the heart of A2A communication is the Task, i.e., a structured object that represents an atomic unit of work. Tasks have lifecycle states such as submitted, working, input-required, or completed.

Each Task includes:

- Messages: Conversational exchanges between the client agent and the remote agent.

- Artifacts: Immutable results created by the remote agent.

- Parts: Self-contained data blocks within messages or artifacts like plain text, file blobs, or JSON.
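One way to picture how these objects relate is with a few Python dataclasses. This is only a sketch: the class and field names are my own simplification of the concepts above, not the official A2A type definitions, and only the four lifecycle states mentioned in this section are modeled.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Any, List


class TaskState(str, Enum):
    """Lifecycle states named in this section (not an exhaustive list)."""
    SUBMITTED = "submitted"
    WORKING = "working"
    INPUT_REQUIRED = "input-required"
    COMPLETED = "completed"


@dataclass
class Part:
    kind: str      # e.g. "text", "file", or "data" (illustrative labels)
    content: Any   # the payload carried by this part


@dataclass
class Message:
    role: str          # "user" for the client side, "agent" for the remote side
    parts: List[Part]


@dataclass
class Artifact:
    name: str
    parts: List[Part]


@dataclass
class Task:
    id: str
    state: TaskState = TaskState.SUBMITTED
    messages: List[Message] = field(default_factory=list)
    artifacts: List[Artifact] = field(default_factory=list)


# Walk one task through a simple lifecycle.
task = Task(id="task-001")
task.messages.append(Message("user", [Part("text", "Schedule a laptop replacement.")]))
task.state = TaskState.WORKING
task.artifacts.append(Artifact("confirmation", [Part("data", {"status": "scheduled"})]))
task.state = TaskState.COMPLETED
print(task.state.value, len(task.messages), len(task.artifacts))
```

Note that both Messages and Artifacts are built from Parts, which is what lets a single task mix text, files, and structured data.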

A2A Workflow Example

Let’s understand how A2A works through an example workflow:

- User initiates a task: The user sends a request to a Client Agent, e.g., “Schedule a laptop replacement.”

- Client Agent discovers a Remote Agent: The client agent looks up other capable agents using an Agent Card hosted at a public or private URL. This JSON file includes skills, supported modalities, authentication, and service URL.

- Client Agent sends a Task: The client agent sends a `tasks/send` request to the selected Remote Agent, using JSON-RPC 2.0 over HTTP. The payload contains:

  - A Task ID

  - A Session ID (optional)

  - A Message with one or more Parts (e.g., text, file, JSON)

- Remote Agent responds: The remote agent processes the task and responds with:

  - Immediate results (via artifact)

  - Intermediate messages (via message)

  - A request for more information (setting state to input-required)

- Streaming and push updates (if async): If the task is long-running, then the remote agent may stream partial results back using Server-Sent Events (SSE). If the client disconnects, push notifications can be sent to a secure webhook provided by the client.

- Task state transitions: The task transitions through states: submitted → working → input-required → completed. At any point, the agent may emit updates or new artifacts for the client.

- Artifacts and messages: Messages are used for communication or clarification (e.g., “Please upload your receipts.”), while Artifacts carry final outputs such as a report PDF, an analysis summary, or a JSON list.

- Client Agent resumes or completes the task: If input is needed, the client agent collects it from the user and sends a follow-up `tasks/send`. Once the task is complete, the client may fetch all artifacts using `tasks/get`.
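The request and follow-up steps in this workflow can be sketched in Python as plain JSON-RPC 2.0 payloads. I am assuming the method name `tasks/send` from the initial A2A specification (later revisions of the spec may name it differently), and the exact parameter and response shapes here are simplified for illustration. Nothing is sent over the network, and the response is hand-written.

```python
import json
import uuid
from typing import Optional


def build_send_request(task_id: str, text: str, session_id: Optional[str] = None) -> dict:
    """Build a JSON-RPC 2.0 request carrying one text message for a task."""
    params = {
        "id": task_id,
        "message": {"role": "user", "parts": [{"type": "text", "text": text}]},
    }
    if session_id is not None:
        params["sessionId"] = session_id  # optional, as noted in the steps above
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),   # JSON-RPC request ID, distinct from the task ID
        "method": "tasks/send",    # assumed method name, see the note above
        "params": params,
    }


def needs_user_input(response: dict) -> bool:
    """Check whether the remote agent paused the task to ask for more input."""
    state = response.get("result", {}).get("status", {}).get("state")
    return state == "input-required"


request = build_send_request("task-001", "Schedule a laptop replacement.")
print(json.dumps(request, indent=2))

# Hand-written stand-in for what a remote agent might return.
fake_response = {
    "jsonrpc": "2.0",
    "id": request["id"],
    "result": {"id": "task-001", "status": {"state": "input-required"}},
}

follow_up = None
if needs_user_input(fake_response):
    # Same task ID, new message: this is the follow-up send from the last step.
    follow_up = build_send_request("task-001", "Any weekday morning works.")
```

Reusing the task ID in the follow-up is what ties the second message to the paused task instead of starting a new one.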

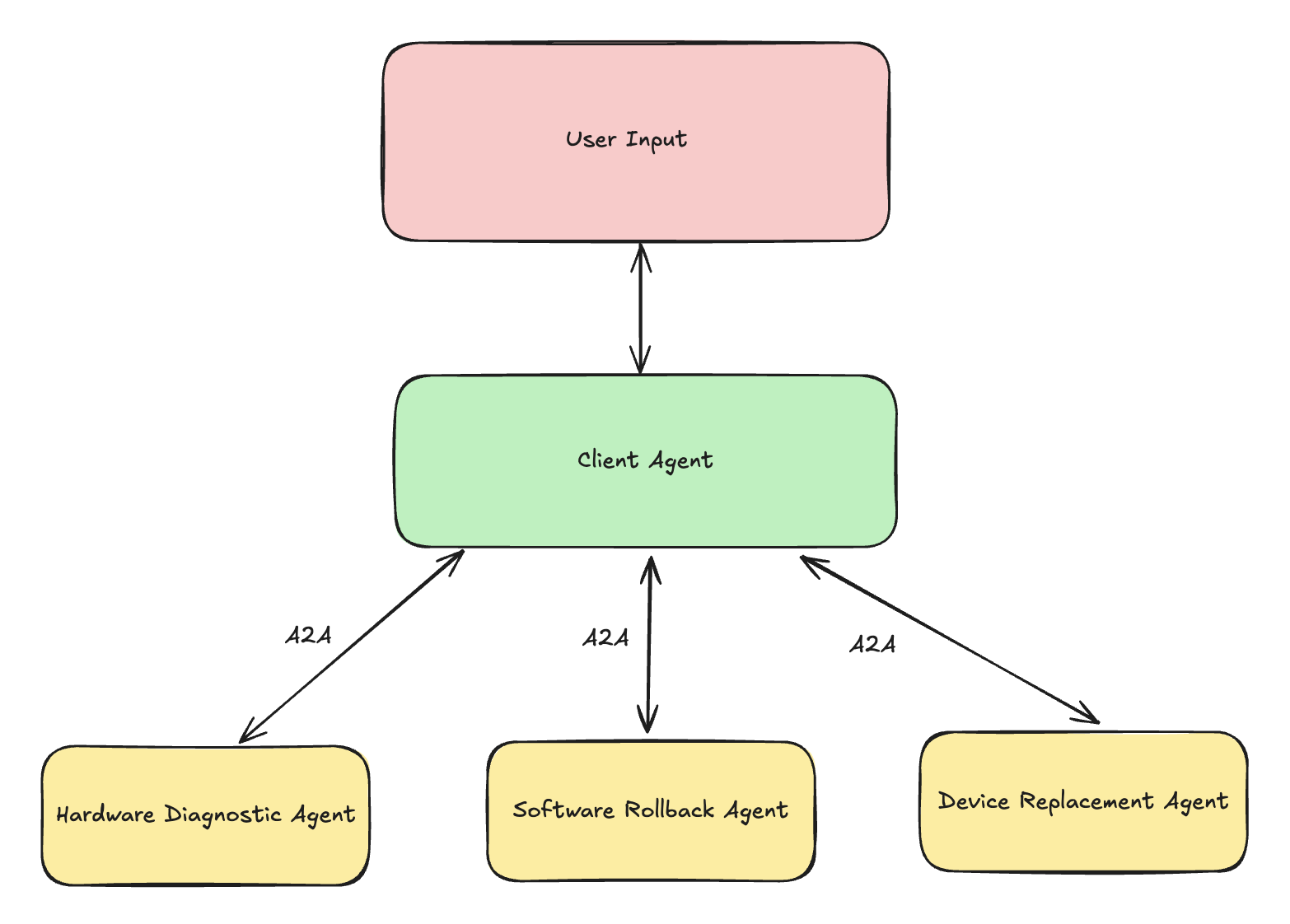
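For long-running tasks, the streamed updates arrive as Server-Sent Events, where each event is one or more `data:` lines followed by a blank line. Here is a minimal sketch of how a client might parse such a stream in Python. The event payloads are hypothetical and simplified, and the stream is a hard-coded string rather than a live HTTP connection.

```python
import json


def parse_sse(stream_text: str):
    """Yield the JSON payload of each SSE event found in the given text."""
    data_lines = []
    for line in stream_text.splitlines():
        if line.startswith("data:"):
            data_lines.append(line[len("data:"):].strip())
        elif line == "" and data_lines:
            # A blank line closes the current event.
            yield json.loads("\n".join(data_lines))
            data_lines = []
    if data_lines:
        # Handle a final event that was not followed by a blank line.
        yield json.loads("\n".join(data_lines))


# Hypothetical stream: a status update, a partial artifact, a final status.
SAMPLE_STREAM = (
    'data: {"id": "task-001", "status": {"state": "working"}}\n'
    "\n"
    'data: {"id": "task-001", "artifact": {"name": "log", "text": "Disk OK"}}\n'
    "\n"
    'data: {"id": "task-001", "status": {"state": "completed"}}\n'
    "\n"
)

events = list(parse_sse(SAMPLE_STREAM))
states = [e["status"]["state"] for e in events if "status" in e]
print(states)
```

In a real client, the same loop would run over the lines of an open HTTP response, and the push-notification webhook would take over if that connection dropped.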

A2A Example: IT Helpdesk Ticket Resolution

Imagine an enterprise IT help desk system that uses AI agents to autonomously manage and resolve support tickets. In this setup, a user agent receives a request like:

“My laptop isn’t turning on after the last software update.”

The user agent then orchestrates a resolution workflow by collaborating with multiple remote agents via A2A.

Here is how agents interact via A2A in the above scenario:

- The Client Agent sends a task to the Hardware Diagnostic Agent, asking for a device check.

- If the hardware is fine, it escalates to the Software Rollback Agent, which evaluates recent updates.

- If rollback fails, the Device Replacement Agent is engaged to initiate a hardware swap.

Each agent operates independently, exposes an Agent Card with its capabilities, and communicates asynchronously through structured Tasks. The agents may:

- Stream logs or diagnostics as artifacts

- Pause the flow if user input is needed

- Send push updates as they complete their part

This is a pure A2A use case, because:

- No structured tools like APIs or OCR engines are involved

- All coordination happens between opaque, collaborating agents

- Agents act like peers with individual decision logic and native modality handling
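The escalation logic in this scenario can be sketched as a simple chain in Python. Each function below is a stub standing in for a remote agent that would really be reached over A2A. The return values are hard-coded to follow the path described above (hardware is fine, rollback fails, replacement is scheduled), and all function names are hypothetical.

```python
from typing import Callable, List, Tuple

# Each stub returns (resolved, note). A real remote agent would be opaque
# and reached through A2A tasks; these are placeholders only.
AgentFn = Callable[[str], Tuple[bool, str]]


def hardware_diagnostic_agent(ticket: str) -> Tuple[bool, str]:
    return False, "Hardware check passed, so the fault lies elsewhere."


def software_rollback_agent(ticket: str) -> Tuple[bool, str]:
    return False, "Rollback of the last update failed."


def device_replacement_agent(ticket: str) -> Tuple[bool, str]:
    return True, "Replacement laptop scheduled."


ESCALATION_CHAIN: List[Tuple[str, AgentFn]] = [
    ("Hardware Diagnostic Agent", hardware_diagnostic_agent),
    ("Software Rollback Agent", software_rollback_agent),
    ("Device Replacement Agent", device_replacement_agent),
]


def resolve_ticket(ticket: str) -> List[str]:
    """Send the ticket down the chain until one agent resolves it."""
    log = []
    for name, agent in ESCALATION_CHAIN:
        resolved, note = agent(ticket)
        log.append(f"{name}: {note}")
        if resolved:
            break
    return log


log = resolve_ticket("My laptop isn't turning on after the last software update.")
for entry in log:
    print(entry)
```

The client agent only sees each remote agent's result, never its internal reasoning, which is the sense in which the agents stay opaque to one another.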

A2A vs MCP: When to Use What?

A2A and MCP solve different but complementary challenges in agentic systems. While A2A focuses on enabling agents to collaborate as autonomous peers, the Model Context Protocol (MCP) is geared towards connecting agents with structured tools and external resources.

Why do we need both A2A and MCP?

Protocols create standards for interoperability. In the agentic world, two types of interoperability are crucial:

- Tool interoperability (MCP): Agents calling structured functions, APIs, or tools.

- Agent interoperability (A2A): Agents collaborating in natural language or mixed modalities.

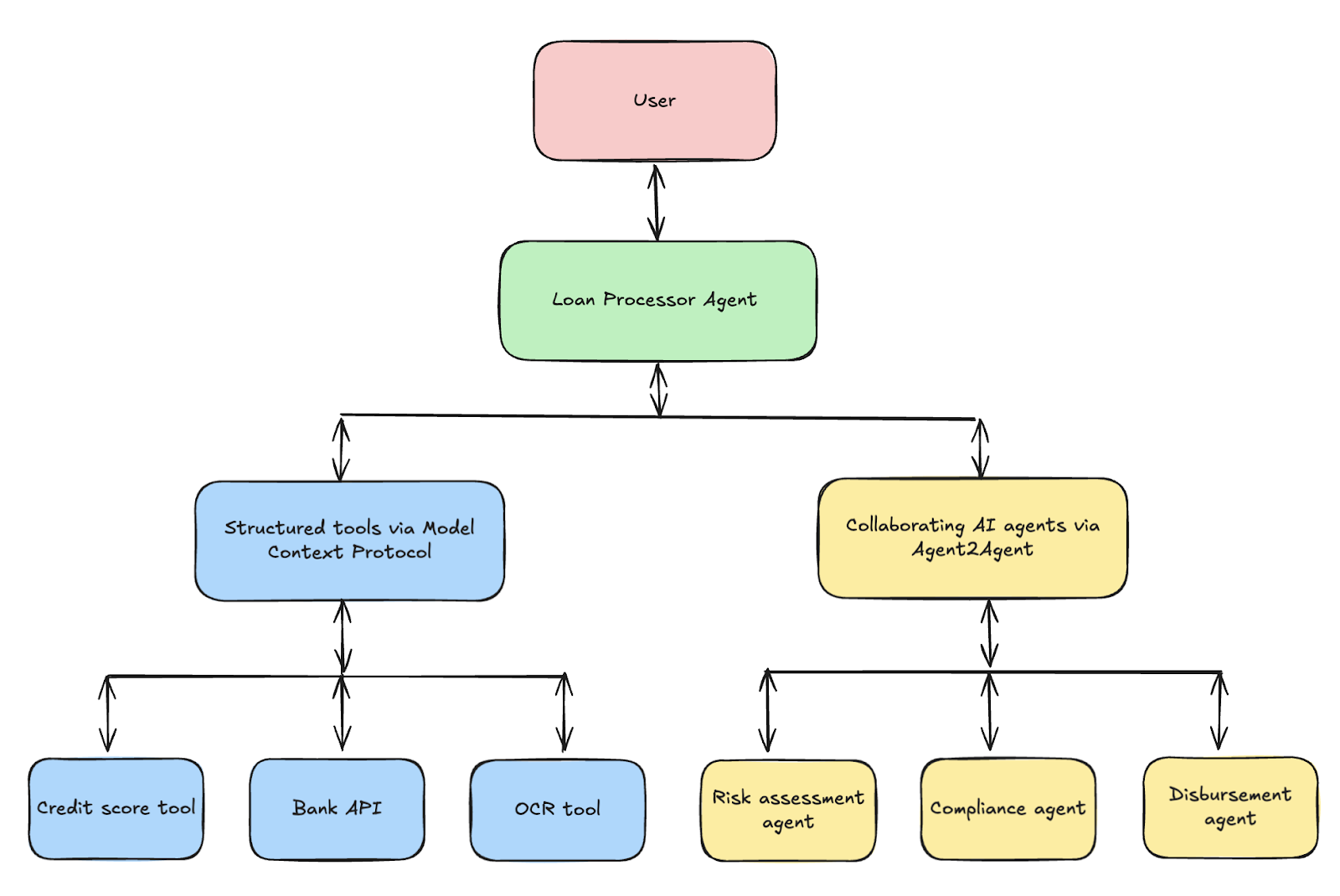

Example: Loan approval workflow

Imagine a multi-agent system that automates loan approvals for a financial institution.

Here is how A2A and MCP work together in this scenario:

Step 1: Preprocessing the application via tools and APIs (MCP)

A LoanProcessor agent receives a loan application from the user. It uses MCP to:

- Call a credit score API

- Retrieve the bank transaction history from a secure data source

- Validate uploaded documents using OCR

Step 2: Multi-agent coordination for final decision making (A2A)

Based on the structured data from step 1, the agent uses A2A to:

- Collaborate with a `RiskAssessmentAgent` to evaluate borrower risk

- Consult a `ComplianceAgent` to ensure the loan meets legal and regulatory requirements

- If approved, hand off to a `DisbursementAgent` to schedule the fund transfer.
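Here is a rough Python sketch of how the two steps fit together. The first group of functions stands in for tools the LoanProcessor would call through MCP, and the second group stands in for peer agents it would reach through A2A. Every function is a local stub, and the credit score, transaction values, and the 700 threshold are made-up values for illustration only.

```python
from typing import Dict, List

# --- Step 1: stand-ins for structured tools reached via MCP ---

def call_credit_score_tool(applicant_id: str) -> int:
    return 720  # made-up score


def fetch_transaction_history(applicant_id: str) -> List[float]:
    return [2500.0, -400.0, -1200.0]  # made-up transactions


def validate_documents_with_ocr(doc_names: List[str]) -> bool:
    return len(doc_names) > 0  # placeholder check, not real OCR


# --- Step 2: stand-ins for peer agents reached via A2A ---

def risk_assessment_agent(profile: Dict) -> str:
    # The 700 cutoff is an arbitrary assumption for this sketch.
    return "low" if profile["credit_score"] >= 700 else "high"


def compliance_agent(profile: Dict) -> bool:
    return profile["documents_valid"]


def disbursement_agent(applicant_id: str) -> str:
    return f"Transfer scheduled for {applicant_id}"


def process_loan(applicant_id: str, doc_names: List[str]) -> str:
    """Gather structured data with tools, then coordinate with agents."""
    profile = {
        "credit_score": call_credit_score_tool(applicant_id),
        "transactions": fetch_transaction_history(applicant_id),
        "documents_valid": validate_documents_with_ocr(doc_names),
    }
    if risk_assessment_agent(profile) != "low":
        return "Rejected: risk too high"
    if not compliance_agent(profile):
        return "Rejected: compliance check failed"
    return disbursement_agent(applicant_id)


decision = process_loan("applicant-42", ["id_card.pdf", "payslip.pdf"])
print(decision)
```

The split mirrors the article's point: tool calls return structured data with a fixed shape, while the agents each apply their own decision logic to the resulting profile.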

Conclusion

Agent2Agent (A2A) is the missing link in large-scale multi-agent systems. With clear agent boundaries, structured task-based communication, and built-in support for streaming and push, it enables modular, discoverable, and scalable agent ecosystems.

A2A is designed for the real-world needs of enterprises: it is secure, asynchronous, modality-flexible, and vendor-agnostic. Whether you're using Google's ADK, LangGraph, CrewAI, or your own framework, A2A helps your agents talk to other agents.

To learn more about AI agents, I recommend these blogs:

Building AI Agents with Google ADK

I am a Google Developers Expert in ML(Gen AI), a Kaggle 3x Expert, and a Women Techmakers Ambassador with 3+ years of experience in tech. I co-founded a health-tech startup in 2020 and am pursuing a master's in computer science at Georgia Tech, specializing in machine learning.