Course

One of the new features I really appreciate in ChatGPT is its ability to browse the internet to fulfill users’ requests when further information is needed. Nevertheless, I have encountered two issues so far:

- Browsing capabilities are only available to ChatGPT Plus users.

- Users can access the browsing capabilities through the user interface, but not when interacting with the model via the API.

It turns out that LangChain has addressed these issues by releasing the so-called LangChain Tools!

In this tutorial, we will learn how to use LangChain Tools to build our own GPT model with browsing capabilities. Here, browsing capabilities refers to allowing the model to consult external sources to extend its knowledge base and fulfill user requests more effectively than relying solely on the model’s pre-existing knowledge.

And the best part? LangChain Tools integrate seamlessly with Python and the OpenAI API!

Let’s get started!

Multi-Agent Systems with LangGraph

Scope and Objectives

This article covers the basics of LangChain Tools and how to use them in Python. We will be using a Jupyter Notebook with both the openai and langchain libraries installed. If you are first interested in learning about the LangChain framework, I really recommend you to check out the article Introduction to LangChain for Data Engineering & Data Applications.

In this tutorial, we will learn how to utilize four of the existing LangChain Tools to provide the default GPT model with a variety of external sources to consult while fulfilling user requests. Specifically, we will explore Tools that use the following sources:

- Two well-known search engines: Google SERP and DuckDuckGo search.

- Wikipedia as a comprehensive and widely recognized information database.

- YouTube sources for obtaining video suggestions from the model.

After setting up these Tools, we will have learned how to generally use any LangChain Tool, and how to combine them all in a Toolkit to provide the model with browsing capabilities.

Introduction to LangChain Tools

As we discussed in the introduction, LangChain Tools can enhance a model’s capabilities by enabling it to consult external sources when responding to a user’s prompt. These Tools are instrumental in a prompting technique known as ReAct Prompting. ReAct, an acronym for Reasoning and Acting, divides the model’s processing into two stages:

- Reasoning stage: In this phase, the model takes time to consider the best strategy for arriving at a response.

- Acting stage: Here, the model executes the plan formulated in the previous stage to obtain the actual response.

This process generates reasoning traces that can guide the model itself towards the correct response. This technique has been shown to help Large Language Models (LLMs) achieve more accurate and reliable responses, particularly in knowledge-intensive and decision-making tasks. This is partly because it allows the model additional time to ’think.’

LangChain Tools can further enhance the ReAct prompting technique by providing complementary information sources. In our approach, the reasoning stage involves the model deciding which tool to use based on the user’s query. Meanwhile, the action stage will enable the model to interface with the chosen external source to gather additional information.

If you are interested in an in-depth introduction to ReAct prompting with Langchain, the article ChatGPT ReAct Prompting is for you!

LangChain Elements for ReAct Prompting

There are two important elements within the LangChain framework to make use of this prompting technique: Agents and Tools.

The so-called agents are entities responsible for executing the actual reasoning and acting stages. They pass the results back to the model, enabling the LLM to generate a response with the additional information in natural language. There are various types of agents, each suited to different tasks you might want the LLM to perform. A detailed overview of the different types of agents is available in the official LangChain documentation on Agents.

Tools have been a significant focus of our discussion already. Technically, Tools are functions that an agent can call upon. LangChain offers a wide array of Tools such as Google utilities (such as Search, Drive, and Scholar), Wikipedia searches, the ArXiV database, a shell extension, and native ChatGPT plugins, among others. In this article, we will get to know four of them. Refer to the official LangChainFor for a comprehensive list of all available Tools.

Hands-On with LangChain Tools

Let’s start with the hands-on!

Setting up the OpenAI environment

As with any other project that utilizes GPT models, the first step would be to load the OpenAI API key into our environment. To do this, we will use the os and openai modules.

import openai

import os

os.environ["OPENAI_API_KEY"] = os.getenv("OPENAI_API_KEY")If you don’t have an OpenAI API key yet, this article, A Beginner’s Guide to the OpenAI API, is for you!

Because we will be using the LangChain framework, we will also load the actual gpt model from the langchain library:

from langchain.llms.openai import OpenAI

llm = OpenAI(model_name="gpt-3.5-turbo")In this case, we are using the gpt-3.5-turbo model as the no-cost option, but feel free to use any other model of your preference.

In the following section, we will learn how to set up our first Tool. We will use the DuckDuckGo search tool as an example, but you’ll see that all the Tools follow the same steps to get up and running!

Setting up our first LangChain Tool: DuckDuckGo

DuckDuckGo is a search engine that emphasizes protecting users’ privacy. Unlike some other search engines, DuckDuckGo doesn’t track its users’ search history, which means it doesn’t create or maintain a personal profile of its users.

It has a Python library that we need to install to use the search engine. It is installable via pip:

!pip install duckduckgo-searchIn addition, we need to import the DuckDuckGo Tool from the langchain library:

from langchain.tools import DuckDuckGoSearchRun

ddg_search = DuckDuckGoSearchRun()

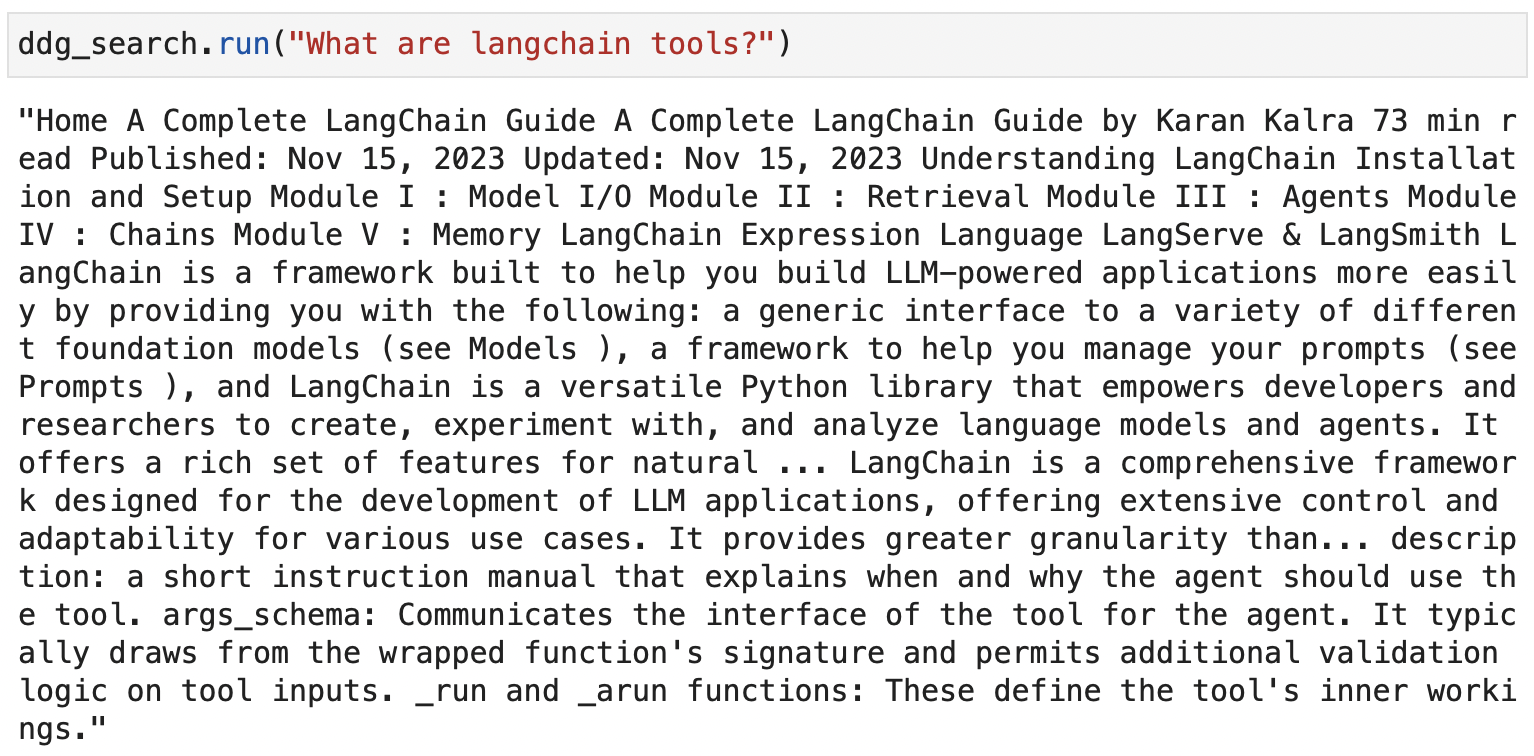

The method DuckDuckGoSearchRun() by itself already allows browsing the internet without interfacing with any LLM. Let’s see a simple example:

Screenshot from the ddg_search.run() execution for browsing the internet with DuckDuckGo

The idea behind Tools is to integrate this browsing capability into an LLM, so that we can get a coherent response in natural language, instead of retrieving just the raw piece of information. To do so, we need to embed this method into an instance of the langchain Tool class as follows:

from langchain.agents import Tool

tools = [

Tool(

name="DuckDuckGo Search",

func=ddg_search.run,

description="Useful to browse information from the Internet.",

)

]

Any tool needs a name (name) that the LangChain agent will use to refer to it, a function (func) that the agent can execute to consult the external sources (ddg_search.run as shown above) and a description (description) that can be used to provide information to the agent about when to use it.

For this example, we have selected the following description: ”Useful to browse information from the Internet”. There are also more optional parameters on the Tools definition that one can customize depending on their needs.

Exploring Other Browsing Tools

Once we have learned how to set up our first tool, setting up the rest should be quite straightforward since they all follow the same structure.

Google Serper Tool

Google Serper is a low-cost Google Search API that allows us to browse the Internet as DuckDuckGo.

For this tool, we need an API key that we can get by signing up for a free account in https://serper.dev/.

Screenshot from the main Google Serper API page.

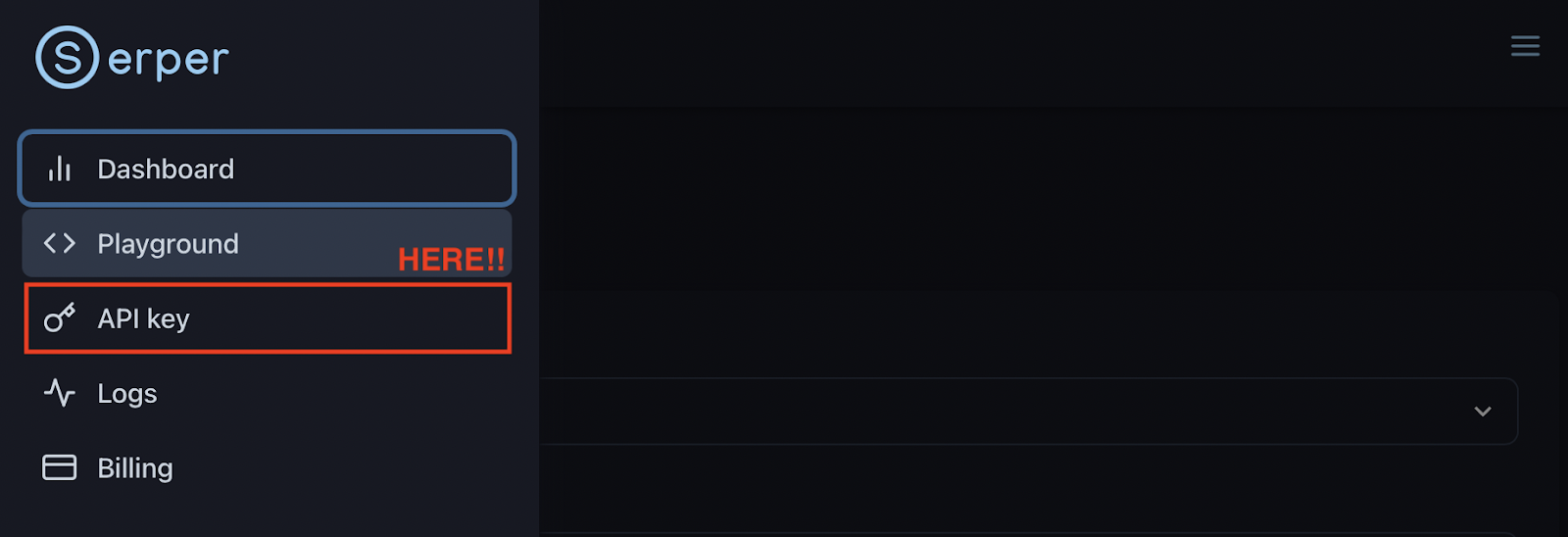

Once we are in our Serper account, we should be able to see the API key option in the left-side pannel:

Screenshot from an account page on Google Serper API. API key option on the left-side pannel.

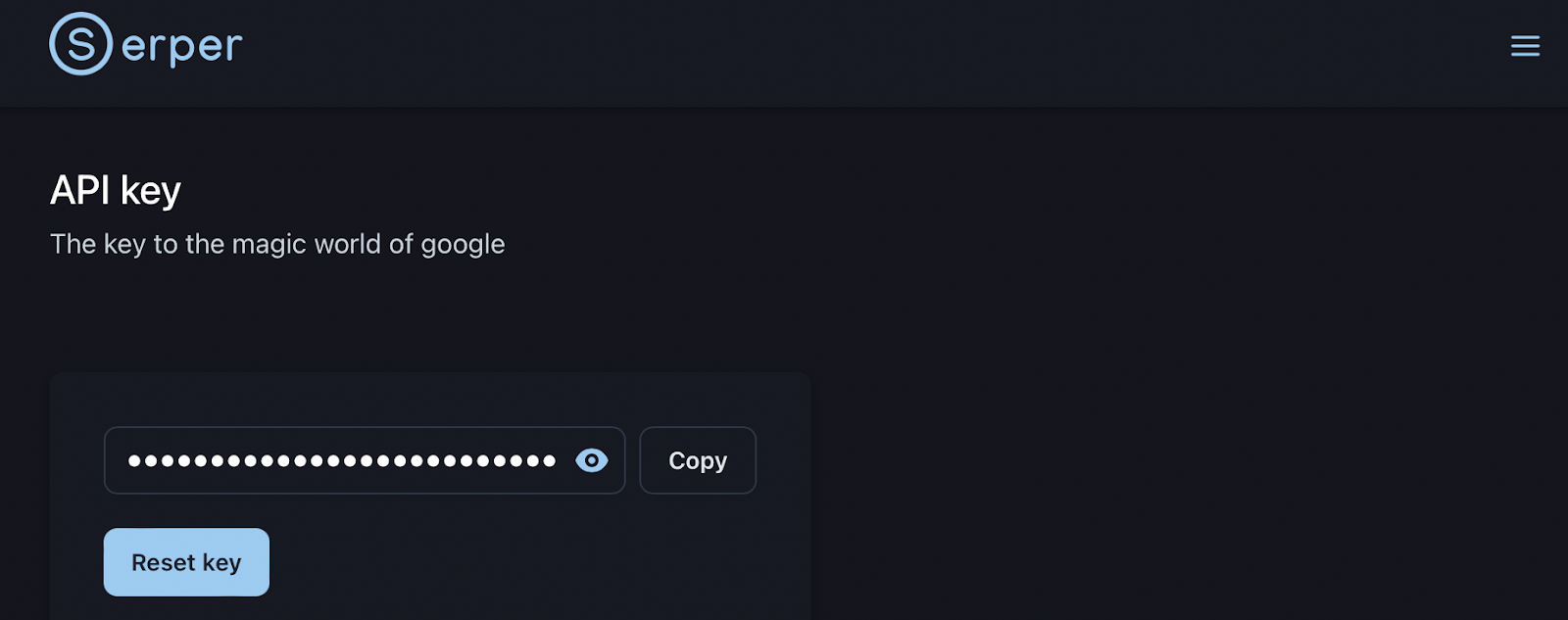

By clicking on it, we will get the API key that we can easily copy and integrate in our Python scripts:

Screenshot from the API key generation on the Google Serper page.

Going back to the code, we can easily import the key to our environment:

serper_api_key = os.environ["SERPER_API_KEY"]To use the Google Serper API, we need to first install the required Python library (pip install google-serp-api). In addition, we need to import the GoogleSerper Tool from the langchain library:

from langchain.utilities import GoogleSerperAPIWrapper

google_search = GoogleSerperAPIWrapper()As in the previous case, google_search.run() is also a method that will independently browse the internet. Nevertheless, again the idea is to integrate it as a LangChain tool as follows:

tools.append(

Tool(

name="Google Search",

func=google_search.run,

description="Useful to search in Google. Use by default.",

)

)

Note that in this case, we are appending a new tool to the tools list. Therefore, our tools list now contains both search engines.

Wikipedia Tool

Let’s complement our set of Tools with the well-known Wikipedia sources following the same process as with the previous Tools.

The first step is to install the wikipedia Python library via pip (pip install wikipedia), and load the required modules from the LangChain library. This tool actually needs two modules due to the Wikipedia API architecture:

from langchain.tools import WikipediaQueryRun

from langchain.utilities import WikipediaAPIWrapper

wikipedia = WikipediaQueryRun(api_wrapper=WikipediaAPIWrapper())Finally, we can append a new instance of the Tool class with a function running the wikipedia.run() method:

tools.append(

Tool(

name="Wikipedia Search",

func=wikipedia.run,

description="Useful when users request biographies or historical moments.",

)

)

YouTube Tool

Finally, I found it very interesting to integrate the YouTube sources so that the model has an additional capability to answer back in the form of videos, not only written text. Following the same procedure as before:

!pip install youtube

from langchain.tools import YouTubeSearchTool

youtube = YouTubeSearchTool()

tools.append(

Tool(

name="Youtube Search",

func=youtube.run,

description="Useful for when the user explicitly asks you to look on Youtube.",

)

)

Now we are ready to go!

We have built a toolkit (tools list) with the four Tools providing browsing capabilities to our model. Nevertheless, we haven’t interacted with the model so far.

Let’s see how it can make use of those sources!

Testing Browsing Capabilities

As we have already mentioned, the so-called agents are the entities responsible for using the Tools. Therefore, we also need to import them from the langchain library:

from langchain.agents import initialize_agentThe initialize_agent method will allow us to set up our agent:

agent = initialize_agent(

tools, llm, agent="zero-shot-react-description", verbose=True

)

As we can observe from the initialization, we need to provide the agent with the list of available Tools (tools), the LLM to use (llm), and the type of agent (agent=”zero-shot-react-description”). For this example, we are going to use the Zero-shot ReAct agent type as the most general-purpose action agent. In this case, we have also set verbose=True to observe the agent’s reasoning process.

Once the agent has been initialized, we can run any user prompt by using the agent.run() method.

Example 1: Biography

In principle, once we submit a user prompt, the agent will analyze the Tools descriptions and use the one that is best suited for the use case. In addition, the agent will still default to the other Tools if it does not find the appropriate information when using the selected tool.

Let’s run a first test asking for a biography. In this case, there is a suitable tool that we have configured for biographies and historical moments, so we expect the agent to select the configured tool:

agent.run(

"Harry Styles biography"

)

We can observe the reasoning process:

> Entering new AgentExecutor chain...

I should look for a biography of the singer

Action: Wikipedia Search

Action Input: Harry Styles biography

Observation:

Page: Harry Styles

Summary: Harry Edward Styles (born 1 February 1994) is an English singer. His musical career began in 2010 as part of One Direction, a boy band formed on the British music competition series The X Factor after each member of the band had been eliminated from the solo contest. They became one of the best-selling boy groups of all time before going on an indefinite hiatus in 2016.

Styles released his self-titled debut solo album through Columbia Records in 2017. It debuted at number one in the UK and the US and was one of the world's top-ten best-selling albums of the year, while its lead single, "Sign of the Times", topped the UK Singles Chart. Styles' second album, Fine Line (2019), debuted atop the US Billboard 200 with the biggest ever first-week sales by an English male artist, and was the most recent album to be included in Rolling Stone's "500 Greatest Albums of All Time" in 2020. Its fourth single, "Watermelon Sugar", topped the US Billboard Hot 100. Styles' third album, Harry's House (2022), broke several records and was widely acclaimed, receiving the Grammy Award for Album of the Year in 2023. Its lead single, "As It Was", became the number-one song of 2022 globally according to Billboard.

Styles has received various accolades, including six Brit Awards, three Grammy Awards, an Ivor Novello Award, and three American Music Awards. His film roles include Dunkirk (2017), Don't Worry Darling (2022), and My Policeman (2022). Aside from music and acting, Styles is known for his flamboyant fashion. He is the first man to appear solo on the cover of Vogue.

Page: Debbie Harry

Summary: Deborah Ann Harry (born Angela Trimble; July 1, 1945) is an American singer, songwriter and actress, best known as the lead vocalist of the band Blondie. Four of her songs with the band reached No. 1 on the US charts between 1979 and 1981.

Born in Miami, Florida, Harry was adopted as an infant and raised in Hawthorne, New Jersey. After college she worked various jobs—as a dancer, a Playboy Bunny, and a secretary (including at the BBC in New York)—before her breakthrough in the music industry. She co-formed Blondie in 1974 in New York City. The band released its eponymous debut studio album in 1976 and released three more studio albums between then and 1979, including Parallel Lines, which spawned six singles, including "Heart of Glass". Their fifth studio album, Autoamerican (1980), spawned such hits as a cover of "The Tide Is High", and "Rapture", which is considered the first rap song to chart at number one in the United States.Harry released her debut solo studio album, KooKoo, in 1981. During a Blondie hiatus, she embarked on an acting career, appearing in lead roles in the neo-noir Union City (1980) and in David Cronenberg's body horror film Videodrome (1983). She released her second solo studio album, 1986's Rockbird, and starred in John Waters's cult dance film Hairspray (1988). She released two more solo albums between then and 1993, before returning to film with roles in a John Carpenter-directed segment of the horror film Body Bags (1993), and in the drama Heavy (1995).

Blondie reunited in the late 1990s, releasing No Exit (1999), followed by The Curse of Blondie (2003). Harry continued to appear in independent films throughout the 2000s, including Deuces Wild (2002), My Life Without Me (2003) and Elegy (2008). With Blondie, she released the group's ninth studio album, Panic of Girls, in 2011, followed by Ghosts of Download (2014). The band's eleventh studio album, 2017's Pollinator, charted at number 4 in the United Kingdom.

Page: Harry Potter

Summary: Harry Potter is a series of seven fantasy novels written by British author J. K. Rowling. The novels chronicle the lives of a young wizard, Harry Potter, and his friends Hermione Granger and Ron Weasley, all of whom are students at Hogwarts School of Witchcraft and Wizardry. The main story arc concerns Harry's conflict with Lord Voldemort, a dark wizard who intends

Thought: I now know the final answer

Final Answer: Harry Styles is an English singer, songwriter and actor. He rose to fame as a member of the boy band One Direction, and later embarked on a solo career. He released his debut solo album, Harry Styles, in 2017, and his second album, Fine Line, in 2019. He has also had success in film, appearing in Dunkirk (2017), Don't Worry Darling (2022) and My Policeman (2022).

> Finished chain.

"Harry Styles is an English singer, songwriter and actor. He rose to fame as a member of the boy band One Direction, and later embarked on a solo career. He released his debut solo album, Harry Styles, in 2017, and his second album, Fine Line, in 2019. He has also had success in film, appearing in Dunkirk (2017), Don't Worry Darling (2022) and My Policeman (2022)."

We can see that once we execute the .run() method, the agent processes the user prompt and starts the reasoning phase, which concludes with the following outcome: "I should look for a biography of the singer". To do so, it designs an action plan that consists of using the Wikipedia Search tool – according to our tool definition – with "Harry Styles biography" as input. Then, the action plan starts, and the agent retrieves related information from the Wikipedia database, processes the findings, and generates a suitable answer in natural language.

Example 2: Random Browsing

Let’s now request a generic browsing task so that the agent doesn’t have a specific tool mentioned in the toolkit description:

agent.run(

"Yesterday's latest news in Spain"

)

Let’s observe the reasoning process in this case:

> Entering new AgentExecutor chain...

I should look for news from yesterday

Action: Google Search

Action Input: "Yesterday's news in Spain"

Observation: The latest breaking news, comment and features from The Independent. All the latest content about Spain from the BBC. Find all national and international information about spain. Select the subjects you want to know more about on euronews.com. Latest Spain news stories including accidents, crime and policing, politics, and Spanish sport news headlines. Latest news, essential insights and practical guides to life in Spain from The Local, Europe's leading independent voice. Missing: Yesterday's | Show results with:Yesterday's. Stay on top of Spain latest developments on the ground with Al Jazeera's fact-based news, exclusive video footage, photos and updated maps. Missing: Yesterday's | Show results with:Yesterday's. Spain · Shakira due to go on trial in Spain over tax fraud claims · The Observer view on Pedro Sánchez's election deal to take power: it undermines democracy. Missing: Yesterday's | Show results with:Yesterday's. Latest News · Hundreds leave Al-Shifa hospital as Israeli forces take control of facility · F1 fans file class-action suit over being forced to exit Las Vegas ... Missing: Yesterday's | Show results with:Yesterday's. Follow the latest Spain news stories and headlines. Get breaking news alerts when you download the ABC News App and subscribe to Spain notifications. Missing: Yesterday's | Show results with:Yesterday's. Get the latest breaking news and top news headlines at Reuters ... Spain Headlines. Shakira stands trial in Spain for alleged tax fraud. Shakira ... Missing: Yesterday's | Show results with:Yesterday's.

Thought: I found a lot of news sources with yesterday's news, so the final answer should be about that.

Final Answer: The latest news in Spain from yesterday is that Shakira is standing trial in Spain for alleged tax fraud, hundreds of people have left Al-Shifa hospital as Israeli forces take control, and F1 fans have filed a class-action suit over being forced to exit Las Vegas. Other news includes insights and practical guides to life in Spain, Pedro Sánchez's election deal to take power, and the top news headlines from Reuters.

> Finished chain.

"The latest news in Spain from yesterday is that Shakira is standing trial in Spain for alleged tax fraud, hundreds of people have left Al-Shifa hospital as Israeli forces take control, and F1 fans have filed a class-action suit over being forced to exit Las Vegas. Other news includes insights and practical guides to life in Spain, Pedro Sánchez's election deal to take power, and the top news headlines from Reuters."

In this case, the agent successfully defaults to the default Google Serper tool and finds the latest news in Spain. This browsing example is particularly interesting because we manage to ask a very current prompt to an LLM whose training data was cut in September 2021, yet we still get an up-to-date response.

Example 3: Video Retrieval

Finally, let’s assume we are interested in a video resource. Let’s see if the model can actually point us to it. We’ll turn off the verbose mode and observe the actual response from the model:

agent = initialize_agent(

tools, llm, agent="zero-shot-react-description"

)

agent.run(

"Can you point me to the Procrastination TED talk by Tim Urban?"

)

And, as expected, we successfully get the following response back:

'The TED Talk "Procrastination" by Tim Urban can be found at

https://www.youtube.com/watch?v=arj7oStGLkU'

It works! With these three examples, we have shown the different sources that our LLM can make use of to fulfill our requests.

If you are interested in incorporating more Tools to our Toolkit, I really recommend the article ReAct: 3 LangChain Tools To Enhance the Default GPT Capabilities.

Conclusion

In this tutorial, we have learned about the use of LangChain Tools to enhance browsing capabilities in LLMs. Central to LangChain is its ability to streamline the development of LLM-based applications, showcasing a suite of powerful features including built-in memories, complete chatbots, and the Tools explored in this article, among others.

While OpenAI is making strides in the realm of customized AI with the launch of its latest GPT models, I believe there is still significant room for growth and innovation.

In this regard, LangChain Tools hold a substantial advantage in this technological race for users who frequently interact with GPT models through Python and the OpenAI API. This stands in stark contrast to GPTs, which appear to be tailored for a non-coding audience. GPT users can easily configure a specialized instance from the ChatGPT user interface and access it as needed, offering user-friendliness but limited flexibility.

Moreover, the ability of LangChain Tools to check external information sources like Google or Wikipedia greatly enhances the model’s knowledge base. This presents a considerable advantage over GPTs, which support information uploads but on a smaller scale.

In conclusion, LangChain Tools are, in my opinion, the most powerful features for augmenting the model’s capabilities and customizing our own instances using widely available public data. Can you imagine now the power of combining Tools with our own private database?

New to the world of LLMs? Check out our Large Language Models Concepts course to get up to speed!

Andrea Valenzuela is currently working on the CMS experiment at the particle accelerator (CERN) in Geneva, Switzerland. With expertise in data engineering and analysis for the past six years, her duties include data analysis and software development. She is now working towards democratizing the learning of data-related technologies through the Medium publication ForCode'Sake.

She holds a BS in Engineering Physics from the Polytechnic University of Catalonia, as well as an MS in Intelligent Interactive Systems from Pompeu Fabra University. Her research experience includes professional work with previous OpenAI algorithms for image generation, such as Normalizing Flows.