
Isn’t building even a simple AI app a mess of vector stores, RAG tools, APIs, and debugging? Not anymore. Gemini 2.5 Pro comes with a long context window of 1 million tokens (with plans to double it) and the ability to load files directly without any RAG or vector store tools.

Gemini 2.5 Pro is a reasoning model that excels at coding, and its massive context window opens the door to real business value in code-focused applications. That’s why, in this blog, I’ll walk you through building a proper web app that processes source code and helps you optimize your coding projects in seconds.

I’ll jump straight into the code—if you’re only looking for an overview of the model, check out this intro blog on Gemini 2.5 Pro, and the latest model, Gemini 3. If you want to learn more about the new Computer Use feature, check out our full tutorial on Gemini 2.5 Computer Use.


Connecting to Gemini 2.5 Pro’s API

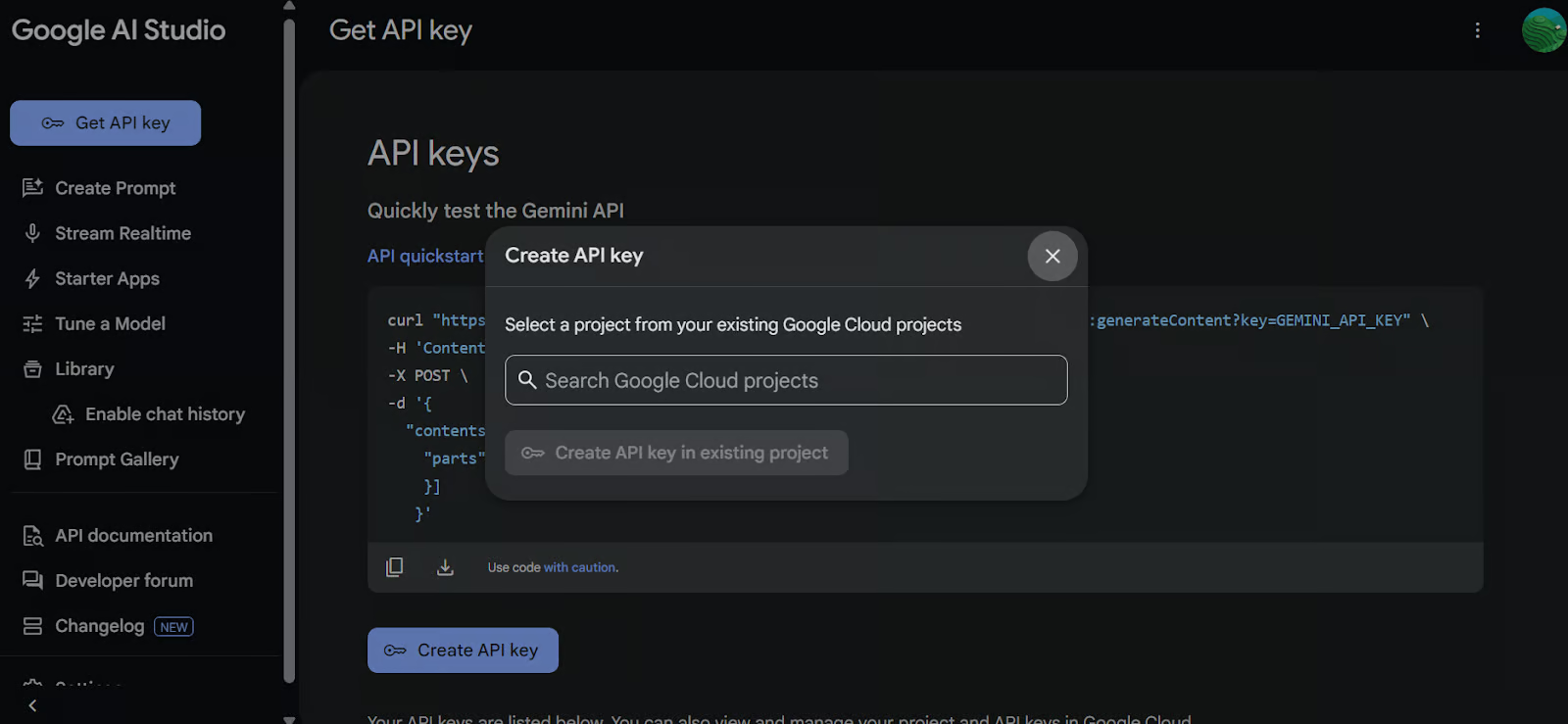

To access the Gemini 2.5 Pro model via the Google API, follow these steps:

1. Setting up

First, install the google-genai Python package. Run the following command in your terminal:

pip install google-genai

2. Generating an API key

Go to Google AI Studio and generate your API key. Then, set the API key as an environment variable in your system.
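A missing environment variable tends to surface later as a confusing authentication error, so it can help to fail early. Here is a minimal sketch of that check; the helper name `require_api_key` is my own and not part of the google-genai package, and the variable name `GEMINI_API_KEY` is simply the one used in the next step.

```python
import os


def require_api_key(name: str = "GEMINI_API_KEY", env=None) -> str:
    """Return the API key stored under `name`, or raise a clear error."""
    # `env` is injectable so the sketch can be tried without a real key.
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(
            f"{name} is not set. Export it in your shell before starting Python."
        )
    return key


# Usage with an explicit mapping (a placeholder value, not a real key).
print(require_api_key(env={"GEMINI_API_KEY": "demo-key"}))
```

In real use you would call `require_api_key()` with no arguments and pass the result to the client.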

3. Create the GenAI client

Use the API key to initialize the Google GenAI client. This client will allow you to interact with the Gemini 2.5 Pro model.

import os

from google import genai

from google.genai import types

from IPython.display import Markdown, HTML, Image, display

API_KEY = os.environ.get("GEMINI_API_KEY")

client = genai.Client(api_key=API_KEY)

4. Load the file

Load the Python file you want to work with and create a prompt for the model.

# Load the Python file as text

file_path = "secure_app.py"

with open(file_path, "r", encoding="utf-8") as file:
    doc_data = file.read()

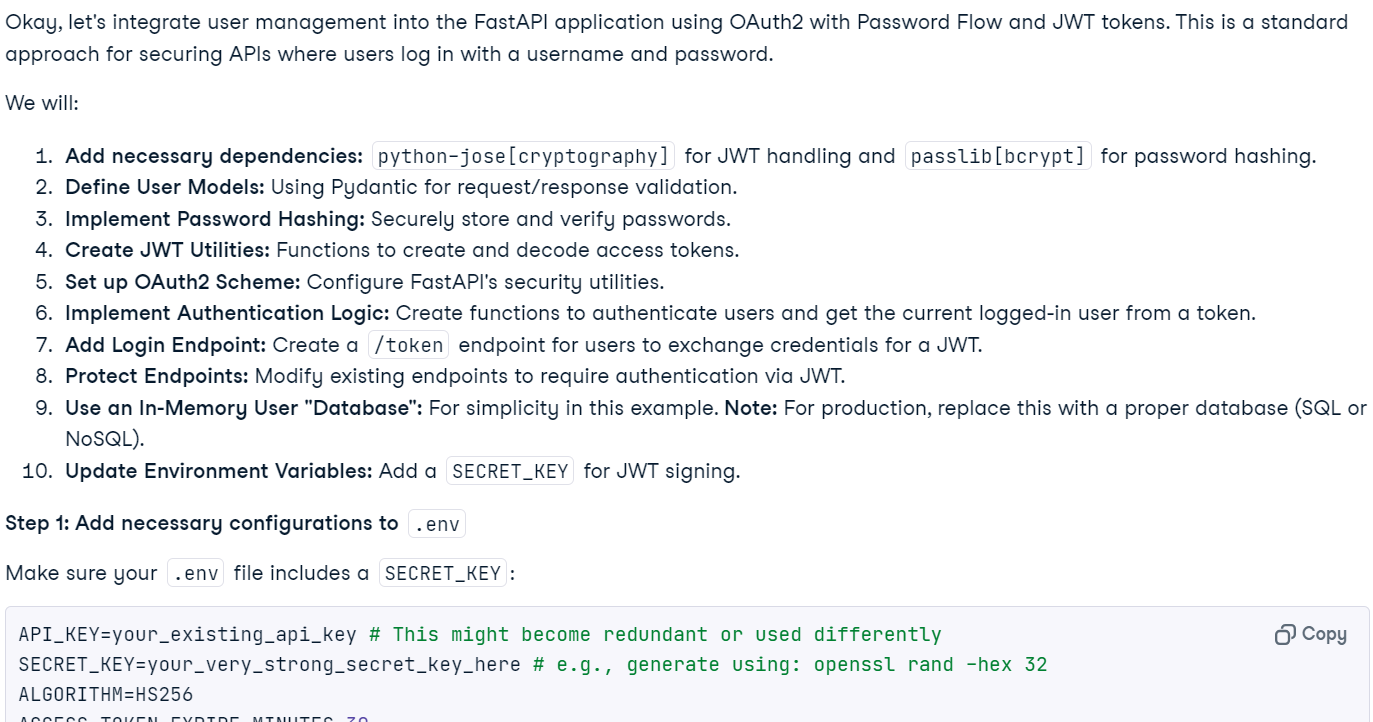

prompt = "Please integrate user management into the FastAPI application."

contents = [
    types.Part.from_bytes(
        data=doc_data.encode("utf-8"),
        mime_type="text/x-python",
    ),
    prompt,
]

5. Generate a response

Create a chat instance using the Gemini 2.5 Pro model (gemini-2.5-pro-exp-03-25) and provide it with the file’s content and the prompt. The model will analyze the code and generate a response.

chat = client.aio.chats.create(
    model="gemini-2.5-pro-exp-03-25",
    config=types.GenerateContentConfig(
        tools=[types.Tool(code_execution=types.ToolCodeExecution)]
    ),
)
# Top-level await works inside a notebook (IPython) cell
response = await chat.send_message(contents)
Markdown(response.text)

Within seconds, the context-aware response was generated.

Note: Free access to the model might not currently be available because of high load. Just wait a few minutes and try again.
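If you would rather not retry by hand, the "wait and try again" advice can be automated with exponential backoff. The sketch below is a generic, synchronous illustration and not part of the google-genai SDK: `call_with_retry` is a hypothetical helper, and catching a bare `Exception` is a simplification (in practice you would catch only the SDK's overload or rate-limit error).

```python
import time


def call_with_retry(fn, attempts: int = 4, base_delay: float = 2.0, sleep=time.sleep):
    """Call fn(); on failure wait base_delay * 2**i seconds, then try again."""
    for i in range(attempts):
        try:
            return fn()
        except Exception:  # assumption: narrow this to the SDK's error type
            if i == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2**i)


# Demo with a stand-in function that fails twice before succeeding.
calls = {"n": 0}


def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise RuntimeError("model overloaded")
    return "ok"


waits = []  # record the delays instead of actually sleeping
result = call_with_retry(flaky, sleep=waits.append)
print(result, waits)
```

With a synchronous client you could wrap the request in a lambda and pass it as `fn`; the async chat used above would need an `asyncio.sleep` variant of the same loop.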

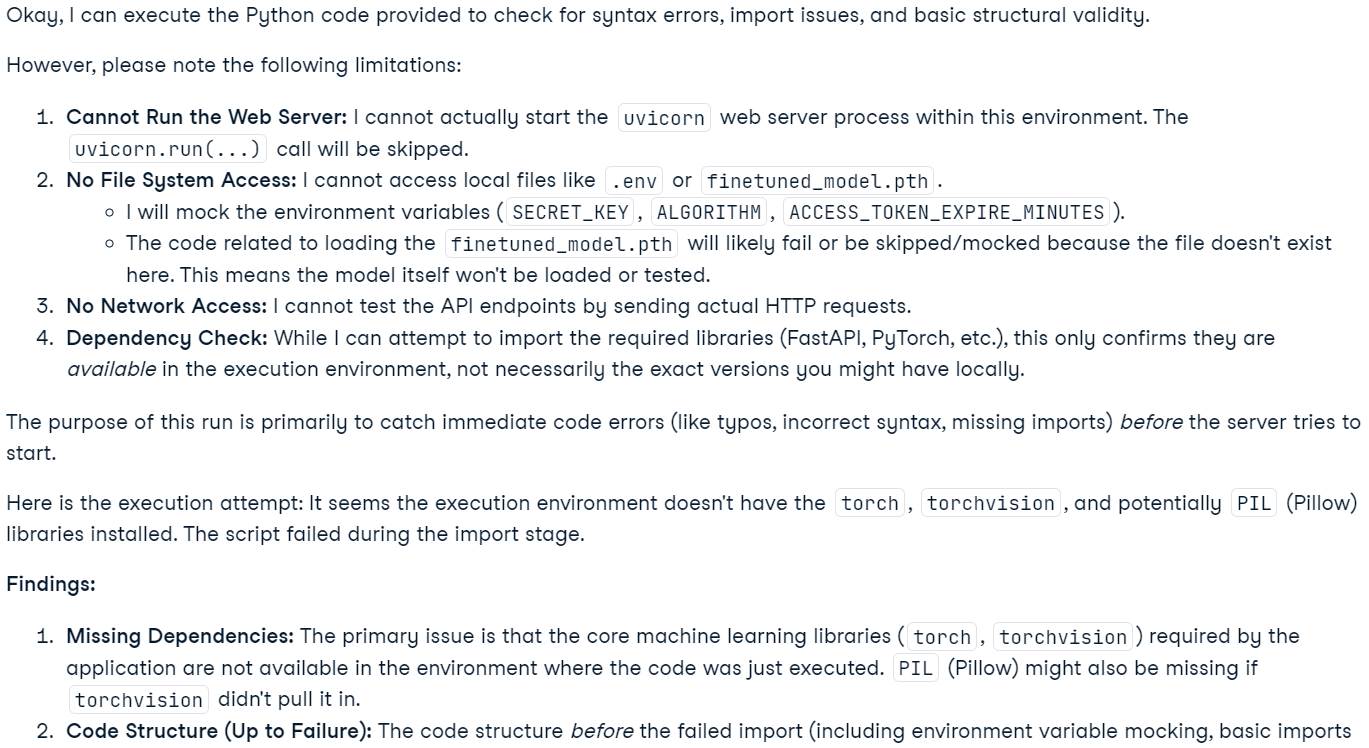

6. Code execution (Experimental)

You can also ask the model to execute the code.

response = await chat.send_message('Please run the code to ensure that everything is functioning properly.')

Markdown(response.text)

Note that this feature is experimental and has limitations. For example, the model cannot run web servers, access the file system, or perform network operations.

Note: The Gemini 2.5 Pro model includes advanced "thinking" capabilities. While these are visible in Google AI Studio, they are not included in the API output.

Building A Code Analysis App With Gemini 2.5 Pro

This application enables users to upload files, including multiple files or even a ZIP archive containing an entire project, to a chat-based interface. Users can ask questions about their project, troubleshoot issues, or improve their codebase. Unlike traditional AI code editors that struggle with large contexts due to their limitations, Gemini 2.5 Pro, with its long context window, can effectively analyze and resolve issues across an entire project.
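Even a 1-million-token window is finite, so a quick size check before sending a whole project can be useful. The sketch below is a rough estimate only: it assumes about 4 characters per token, which is a common rule of thumb and not an exact tokenizer, and the names `estimate_tokens`, `fits_in_context`, and the `reserve` margin are my own.

```python
from typing import Dict

CONTEXT_WINDOW_TOKENS = 1_000_000  # context size quoted in this article
CHARS_PER_TOKEN = 4  # assumption: rough rule of thumb, not a tokenizer


def estimate_tokens(files: Dict[str, str]) -> int:
    """Approximate the token count of a {filename: text} mapping."""
    total_chars = sum(len(name) + len(text) for name, text in files.items())
    return total_chars // CHARS_PER_TOKEN


def fits_in_context(files: Dict[str, str], reserve: int = 50_000) -> bool:
    """Leave `reserve` tokens free for the questions and the answers."""
    return estimate_tokens(files) + reserve <= CONTEXT_WINDOW_TOKENS


project = {"app.py": "x" * 8_000, "utils.py": "y" * 4_000}
print(estimate_tokens(project), fits_in_context(project))
```

For an exact figure you would ask the API to count tokens rather than rely on this heuristic.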

1. Installing the required libraries

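Install gradio for the UI and zipfile36 for handling ZIP files. Note that the code below imports Python's built-in zipfile module, so on a current Python 3 installation the zipfile36 backport is optional.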

pip install gradio==5.14.0

pip install zipfile36==0.1.3

2. Setting up client and UI

Import necessary Python packages, define global variables, initialize the GenAI client, set up UI constants, and create a list of supported file extensions.

import os

import zipfile

from typing import Dict, List, Optional, Union

import gradio as gr

from google import genai

from google.genai import types

# Retrieve API key for Google GenAI from the environment variables.

GOOGLE_API_KEY = os.environ.get("GOOGLE_API_KEY")

# Initialize the client so that it can be reused across functions.

CLIENT = genai.Client(api_key=GOOGLE_API_KEY)

# Global variables

EXTRACTED_FILES = {}

# Store chat sessions

CHAT_SESSIONS = {}

TITLE = """<h1 align="center">✨ Gemini Code Analysis</h1>"""

AVATAR_IMAGES = (None, "https://media.roboflow.com/spaces/gemini-icon.png")

# List of supported text extensions (alphabetically sorted)

TEXT_EXTENSIONS = [

".bat",

".c",

".cfg",

".conf",

".cpp",

".cs",

".css",

".go",

".h",

".html",

".ini",

".java",

".js",

".json",

".jsx",

".md",

".php",

".ps1",

".py",

".rb",

".rs",

".sh",

".toml",

".ts",

".tsx",

".txt",

".xml",

".yaml",

".yml",

]

3. Function: extract_text_from_zip

The extract_text_from_zip() function extracts text content from files inside a ZIP archive and returns it as a dictionary.

def extract_text_from_zip(zip_file_path: str) -> Dict[str, str]:
    text_contents = {}
    with zipfile.ZipFile(zip_file_path, "r") as zip_ref:
        for file_info in zip_ref.infolist():
            # Skip directories
            if file_info.filename.endswith("/"):
                continue
            # Skip binary files and focus on text files
            file_ext = os.path.splitext(file_info.filename)[1].lower()
            if file_ext in TEXT_EXTENSIONS:
                try:
                    with zip_ref.open(file_info) as file:
                        content = file.read().decode("utf-8", errors="replace")
                        text_contents[file_info.filename] = content
                except Exception as e:
                    text_contents[file_info.filename] = (
                        f"Error extracting file: {str(e)}"
                    )
    return text_contents

4. Function: extract_text_from_single_file

The extract_text_from_single_file() function extracts text content from a single file and returns it as a dictionary.
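Both extraction helpers read text with `errors="replace"`, which means a file that is not valid UTF-8 still loads instead of raising an exception. The short stand-alone demo below shows that behavior on a temporary file created just for this illustration: the invalid byte becomes the Unicode replacement character (U+FFFD).

```python
import os
import tempfile

# Write a file containing one byte (0xE9) that is not valid UTF-8 on its own.
with tempfile.NamedTemporaryFile("wb", suffix=".txt", delete=False) as tmp:
    tmp.write(b"caf\xe9 = 1\n")
    path = tmp.name

# Same open() arguments as the helper: undecodable bytes are replaced.
with open(path, "r", encoding="utf-8", errors="replace") as fh:
    text = fh.read()
os.remove(path)

print(repr(text))
```

The trade-off is that such a file reaches the model with a few substituted characters, which is usually preferable to dropping it from the analysis.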

def extract_text_from_single_file(file_path: str) -> Dict[str, str]:
    text_contents = {}
    filename = os.path.basename(file_path)
    file_ext = os.path.splitext(filename)[1].lower()
    if file_ext in TEXT_EXTENSIONS:
        try:
            with open(file_path, "r", encoding="utf-8", errors="replace") as file:
                content = file.read()
                text_contents[filename] = content
        except Exception as e:
            text_contents[filename] = f"Error reading file: {str(e)}"
    return text_contents

5. Function: upload_zip

The upload_zip() function processes uploaded files, either in ZIP format or text files, extracts the text content, and appends a message to the chat.

def upload_zip(files: Optional[List[str]], chatbot: List[Union[dict, gr.ChatMessage]]):
    global EXTRACTED_FILES
    # Nothing to do if the upload event carries no files
    if not files:
        return chatbot
    # Handle multiple file uploads
    if len(files) > 1:
        total_files_processed = 0
        total_files_extracted = 0
        file_types = set()
        # Process each file
        for file in files:
            filename = os.path.basename(file)
            file_ext = os.path.splitext(filename)[1].lower()
            # Process based on file type
            if file_ext == ".zip":
                extracted_files = extract_text_from_zip(file)
                file_types.add("zip")
            else:
                extracted_files = extract_text_from_single_file(file)
                file_types.add("text")
            if extracted_files:
                total_files_extracted += len(extracted_files)
                # Store the extracted content in the global variable
                EXTRACTED_FILES[filename] = extracted_files
            total_files_processed += 1
        # Create a summary message for multiple files
        file_types_str = (
            "files"
            if len(file_types) > 1
            else ("ZIP files" if "zip" in file_types else "text files")
        )
        # Create a list of uploaded file names
        file_list = "\n".join([f"- {os.path.basename(file)}" for file in files])
        chatbot.append(
            gr.ChatMessage(
                role="user",
                content=f"<p>📚 Multiple {file_types_str} uploaded ({total_files_processed} files)</p><p>Extracted {total_files_extracted} text file(s) in total</p><p>Uploaded files:</p><pre>{file_list}</pre>",
            )
        )
    # Handle single file upload (original behavior)
    elif len(files) == 1:
        file = files[0]
        filename = os.path.basename(file)
        file_ext = os.path.splitext(filename)[1].lower()
        # Process based on file type
        if file_ext == ".zip":
            extracted_files = extract_text_from_zip(file)
            file_type_msg = "📦 ZIP file"
        else:
            extracted_files = extract_text_from_single_file(file)
            file_type_msg = "📄 File"
        if not extracted_files:
            chatbot.append(
                gr.ChatMessage(
                    role="user",
                    content=f"<p>{file_type_msg} uploaded: {filename}, but no text content was found or the file format is not supported.</p>",
                )
            )
        else:
            file_list = "\n".join([f"- {name}" for name in extracted_files.keys()])
            chatbot.append(
                gr.ChatMessage(
                    role="user",
                    content=f"<p>{file_type_msg} uploaded: {filename}</p><p>Extracted {len(extracted_files)} text file(s):</p><pre>{file_list}</pre>",
                )
            )
            # Store the extracted content in the global variable
            EXTRACTED_FILES[filename] = extracted_files
    return chatbot

6. Function: user

The user() function appends a user's text input to the chatbot conversation history.

def user(text_prompt: str, chatbot: List[gr.ChatMessage]):
    if text_prompt:
        chatbot.append(gr.ChatMessage(role="user", content=text_prompt))
    return "", chatbot

7. Function: get_message_content

The get_message_content() function retrieves the content of a message, which can be either a dictionary or a Gradio chat message.

def get_message_content(msg):
    if isinstance(msg, dict):
        return msg.get("content", "")
    return msg.content

8. Function: send_to_gemini

The send_to_gemini() function sends the user's prompt to Gemini AI and streams the response back to the chatbot. If code files were uploaded, they would be included in the context.
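One detail is worth isolating before the full function: each uploaded file is sent with a "File: <name>" header and a MIME type chosen from its extension. The stand-alone sketch below illustrates that step with a lookup table. `MIME_BY_EXT` and `describe_file` are hypothetical names of mine; the app itself uses an if/elif chain that covers the same extensions.

```python
import os

# Extension-to-MIME pairs mirroring the ones used in send_to_gemini().
MIME_BY_EXT = {
    ".py": "text/x-python",
    ".js": "text/javascript",
    ".jsx": "text/javascript",
    ".ts": "text/typescript",
    ".tsx": "text/typescript",
    ".html": "text/html",
    ".css": "text/css",
    ".json": "application/json",
    ".xml": "application/xml",
}


def describe_file(filename: str, content: str):
    """Return (mime_type, payload_bytes) for one extracted file."""
    ext = os.path.splitext(filename)[1].lower()
    # Anything not listed falls back to plain text, as in the app.
    mime_type = MIME_BY_EXT.get(ext, "text/plain")
    # The header keeps the original file name visible to the model.
    payload = f"File: {filename}\n\n{content}".encode("utf-8")
    return mime_type, payload


print(describe_file("src/main.py", "print('hi')\n"))
```

A table like this is easier to extend than a chain of conditions, but both approaches produce the same pairs.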

def send_to_gemini(chatbot: List[Union[dict, gr.ChatMessage]]):
    global EXTRACTED_FILES, CHAT_SESSIONS
    # This function is a generator (it streams), so early exits must
    # yield the updated chatbot before returning.
    if len(chatbot) == 0:
        chatbot.append(
            gr.ChatMessage(
                role="assistant",
                content="Please enter a message to start the conversation.",
            )
        )
        yield chatbot
        return
    # Get the last user message as the prompt
    user_messages = [
        msg
        for msg in chatbot
        if (isinstance(msg, dict) and msg.get("role") == "user")
        or (hasattr(msg, "role") and msg.role == "user")
    ]
    if not user_messages:
        chatbot.append(
            gr.ChatMessage(
                role="assistant",
                content="Please enter a message to start the conversation.",
            )
        )
        yield chatbot
        return
    last_user_msg = user_messages[-1]
    prompt = get_message_content(last_user_msg)
    # Skip if the last message was about uploading a file (ZIP, single file, or multiple files).
    # "📚 Multiple" also matches the "Multiple ZIP files" and "Multiple text files" variants.
    if (
        "📦 ZIP file uploaded:" in prompt
        or "📄 File uploaded:" in prompt
        or "📚 Multiple" in prompt
    ):
        chatbot.append(
            gr.ChatMessage(
                role="assistant",
                content="What would you like to know about the code in the uploaded files?",
            )
        )
        yield chatbot
        return
    # Generate a unique session ID based on the extracted files or use a default key for no files
    if EXTRACTED_FILES:
        session_key = ",".join(sorted(EXTRACTED_FILES.keys()))
    else:
        session_key = "no_files"
    # Create a new chat session if one doesn't exist for this set of files
    if session_key not in CHAT_SESSIONS:
        # Create the chat session (no tools are configured here)
        CHAT_SESSIONS[session_key] = CLIENT.chats.create(
            model="gemini-2.5-pro-exp-03-25",
        )
        # Send all extracted files to the chat session first
        initial_contents = []
        for zip_name, files in EXTRACTED_FILES.items():
            for filename, content in files.items():
                file_ext = os.path.splitext(filename)[1].lower()
                mime_type = "text/plain"
                # Set appropriate mime type based on file extension
                if file_ext == ".py":
                    mime_type = "text/x-python"
                elif file_ext in [".js", ".jsx"]:
                    mime_type = "text/javascript"
                elif file_ext in [".ts", ".tsx"]:
                    mime_type = "text/typescript"
                elif file_ext == ".html":
                    mime_type = "text/html"
                elif file_ext == ".css":
                    mime_type = "text/css"
                elif file_ext in [".json", ".jsonl"]:
                    mime_type = "application/json"
                elif file_ext in [".xml", ".svg"]:
                    mime_type = "application/xml"
                # Create a header with the filename to preserve original file identity
                file_header = f"File: {filename}\n\n"
                file_content = file_header + content
                initial_contents.append(
                    types.Part.from_bytes(
                        data=file_content.encode("utf-8"),
                        mime_type=mime_type,
                    )
                )
        # Initialize the chat context with files if available
        if initial_contents:
            initial_contents.append(
                "I've uploaded these code files for you to analyze. I'll ask questions about them next."
            )
            # Use synchronous API instead of async
            CHAT_SESSIONS[session_key].send_message(initial_contents)
        # For sessions without files, we don't need to send an initial message
    # Append a placeholder for the assistant's response
    chatbot.append(gr.ChatMessage(role="assistant", content=""))
    # Send the user's prompt to the existing chat session using streaming API
    response = CHAT_SESSIONS[session_key].send_message_stream(prompt)
    # Process the response stream - text only (no images)
    output_text = ""
    for chunk in response:
        if chunk.candidates and chunk.candidates[0].content.parts:
            for part in chunk.candidates[0].content.parts:
                if part.text is not None:
                    # Append the new chunk of text
                    output_text += part.text
                    # Update the last assistant message with the current accumulated response
                    if isinstance(chatbot[-1], dict):
                        chatbot[-1]["content"] = output_text
                    else:
                        chatbot[-1].content = output_text
                    # Yield the updated chatbot to show streaming updates in the UI
                    yield chatbot

9. Function: reset_app

The reset_app() function resets the application by clearing the chat history and any uploaded files.

def reset_app(chatbot):
    global EXTRACTED_FILES, CHAT_SESSIONS
    # Clear the global variables
    EXTRACTED_FILES = {}
    CHAT_SESSIONS = {}
    # Reset the chatbot with a welcome message
    return [
        gr.ChatMessage(
            role="assistant",
            content="App has been reset. You can start a new conversation or upload new files.",
        )
    ]

10. Gradio UI components

Let’s define the Gradio components: chatbot, text input, upload button, and control buttons.

# Define the Gradio UI components
chatbot_component = gr.Chatbot(
    label="Gemini 2.5 Pro",
    type="messages",
    bubble_full_width=False,
    avatar_images=AVATAR_IMAGES,
    scale=2,
    height=350,
)
text_prompt_component = gr.Textbox(
    placeholder="Ask a question or upload code files to analyze...",
    show_label=False,
    autofocus=True,
    scale=28,
)
upload_zip_button_component = gr.UploadButton(
    label="Upload",
    file_count="multiple",
    file_types=[".zip"] + TEXT_EXTENSIONS,
    scale=1,
    min_width=80,
)
send_button_component = gr.Button(
    value="Send", variant="primary", scale=1, min_width=80
)
reset_button_component = gr.Button(
    value="Reset", variant="stop", scale=1, min_width=80
)
# Define input lists for button chaining
user_inputs = [text_prompt_component, chatbot_component]

11. Gradio app layout

Let’s structure the Gradio interface using rows and columns for a clean layout.

with gr.Blocks(theme=gr.themes.Ocean()) as demo:

gr.HTML(TITLE)

with gr.Column():

chatbot_component.render()

with gr.Row(equal_height=True):

text_prompt_component.render()

send_button_component.render()

upload_zip_button_component.render()

reset_button_component.render()

# When the Send button is clicked, first process the user text then send to Gemini

send_button_component.click(

fn=user,

inputs=user_inputs,

outputs=[text_prompt_component, chatbot_component],

queue=False,

).then(

fn=send_to_gemini,

inputs=[chatbot_component],

outputs=[chatbot_component],

api_name="send_to_gemini",

)

# Allow submission using the Enter key

text_prompt_component.submit(

fn=user,

inputs=user_inputs,

outputs=[text_prompt_component, chatbot_component],

queue=False,

).then(

fn=send_to_gemini,

inputs=[chatbot_component],

outputs=[chatbot_component],

api_name="send_to_gemini_submit",

)

# Handle ZIP file uploads

upload_zip_button_component.upload(

fn=upload_zip,

inputs=[upload_zip_button_component, chatbot_component],

outputs=[chatbot_component],

queue=False,

)

# Handle Reset button clicks

reset_button_component.click(

fn=reset_app,

inputs=[chatbot_component],

outputs=[chatbot_component],

queue=False,

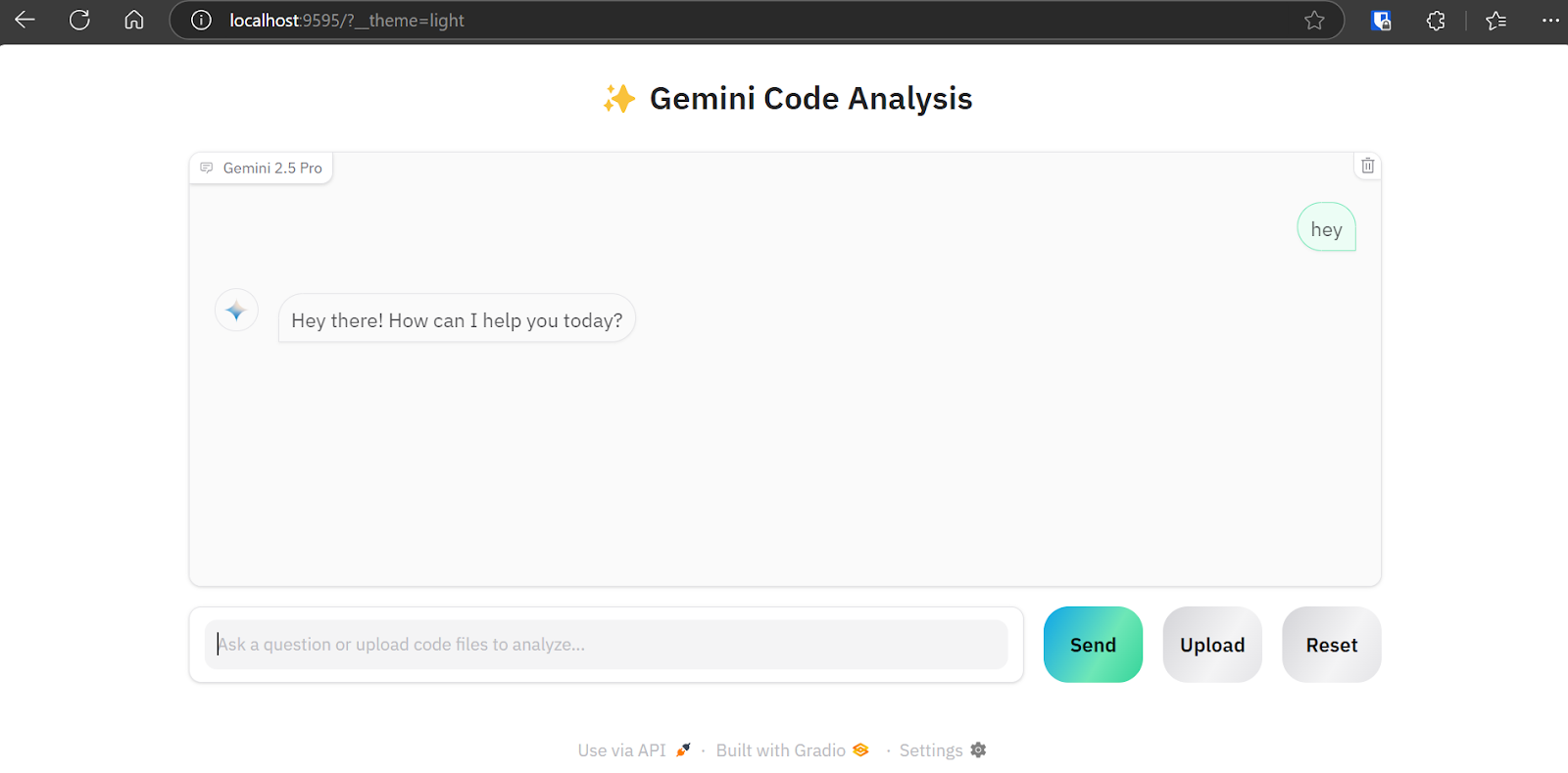

)12. Launching the app

Let’s launch the Gradio application locally with queuing enabled to handle multiple requests.

# Launch the demo interface

demo.queue(max_size=99, api_open=False).launch(
    debug=False,
    show_error=True,
    server_port=9595,
    server_name="localhost",
)

Testing the Gemini 2.5 Pro Code Analysis App

To launch the web application, combine all the code snippets above into a single main.py file (I uploaded it to GitHub so you can copy it easily). Run it with the following command:

python main.py

The web application will be available at http://localhost:9595/

You can copy the URL and paste it into your web browser.

As we can see, the web application features a chatbot interface. We can use it just like ChatGPT.

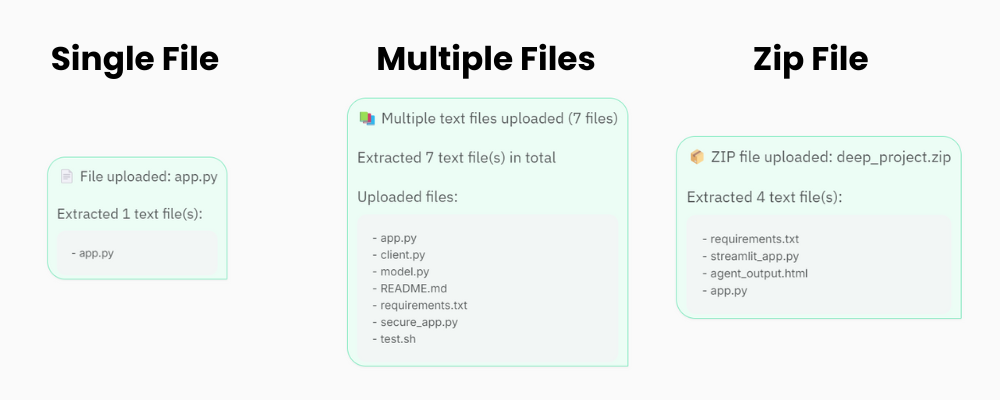

The “Upload” button supports single and multiple file uploads, as well as zip files for your project. So, don't worry if your project contains more than 20 files; the code analysis app will be able to process and send it to the Gemini 2.5 Pro API.
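To see what happens to a ZIP upload without starting the app, here is a stand-alone sketch of the same filtering idea: it builds a tiny archive in memory and keeps only the text files. The archive contents and the shortened extension set are made up for the demo; the app uses the full TEXT_EXTENSIONS list.

```python
import io
import os
import zipfile

TEXT_EXTENSIONS = {".py", ".md", ".txt"}  # shortened set, for the demo only

# Build a small ZIP archive in memory: a folder, two text files, one binary.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as zf:
    zf.writestr("project/", "")
    zf.writestr("project/main.py", "print('hello')\n")
    zf.writestr("project/README.md", "# Demo\n")
    zf.writestr("project/logo.png", b"\x89PNG\r\n")

extracted = {}
with zipfile.ZipFile(buffer, "r") as zf:
    for info in zf.infolist():
        if info.filename.endswith("/"):
            continue  # skip directory entries
        ext = os.path.splitext(info.filename)[1].lower()
        if ext in TEXT_EXTENSIONS:
            # Decode leniently so odd bytes do not abort the extraction.
            extracted[info.filename] = zf.read(info).decode("utf-8", errors="replace")

print(sorted(extracted))
```

The binary file and the folder entry are skipped, so only the two text files would be sent to the model.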

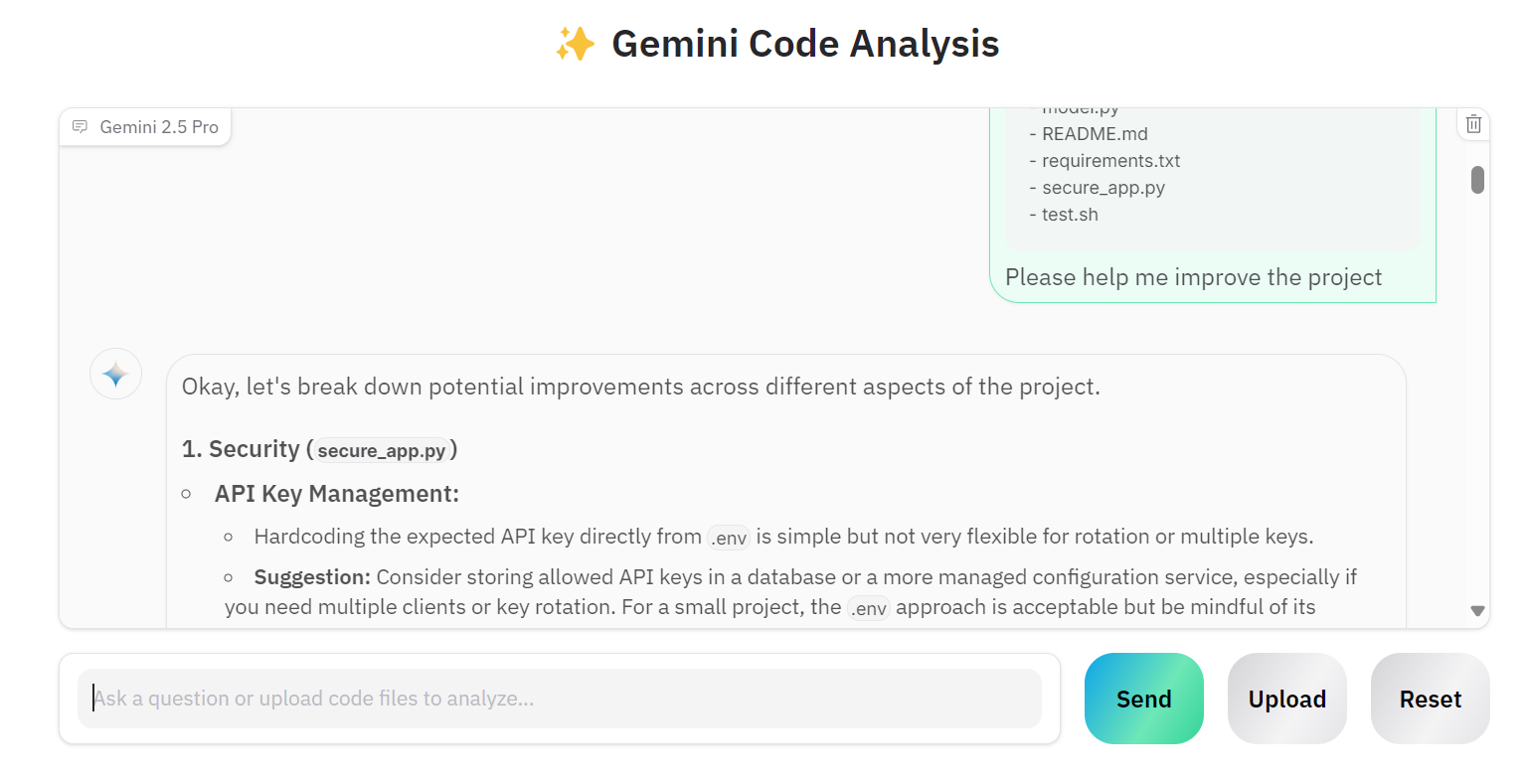

Let's upload multiple files and ask Gemini 2.5 Pro to improve our project.

As we can see, the model provides correct suggestions for improvements.

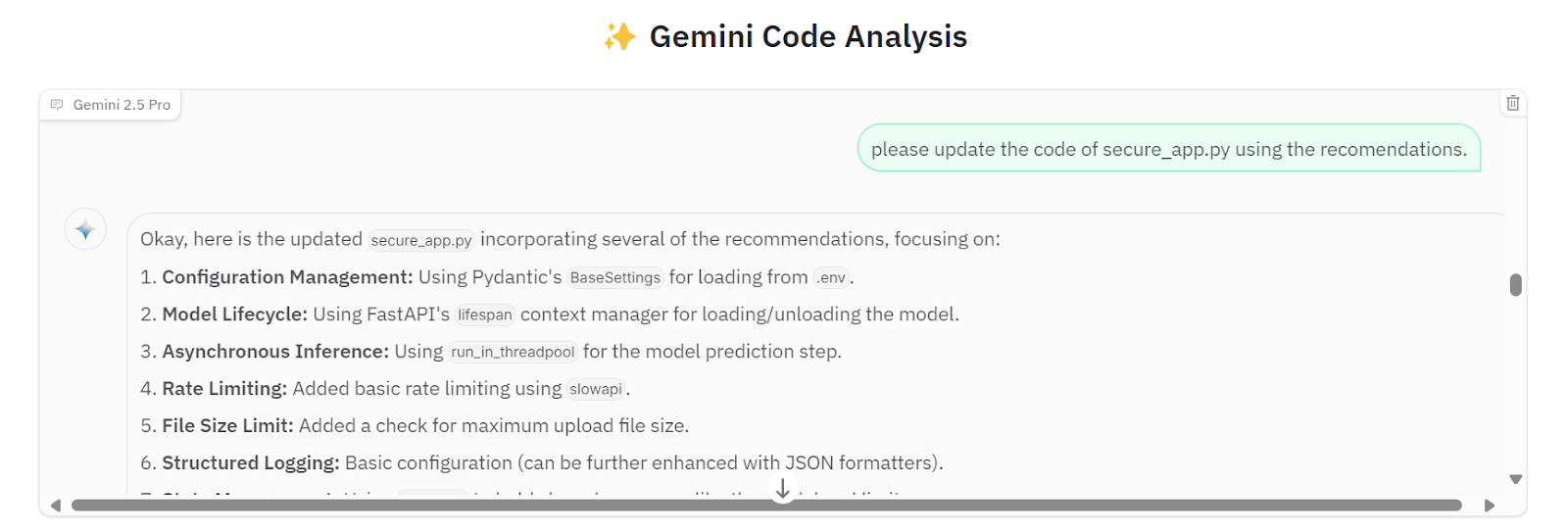

We can ask it to implement all the suggestions into the secure_app.py file.

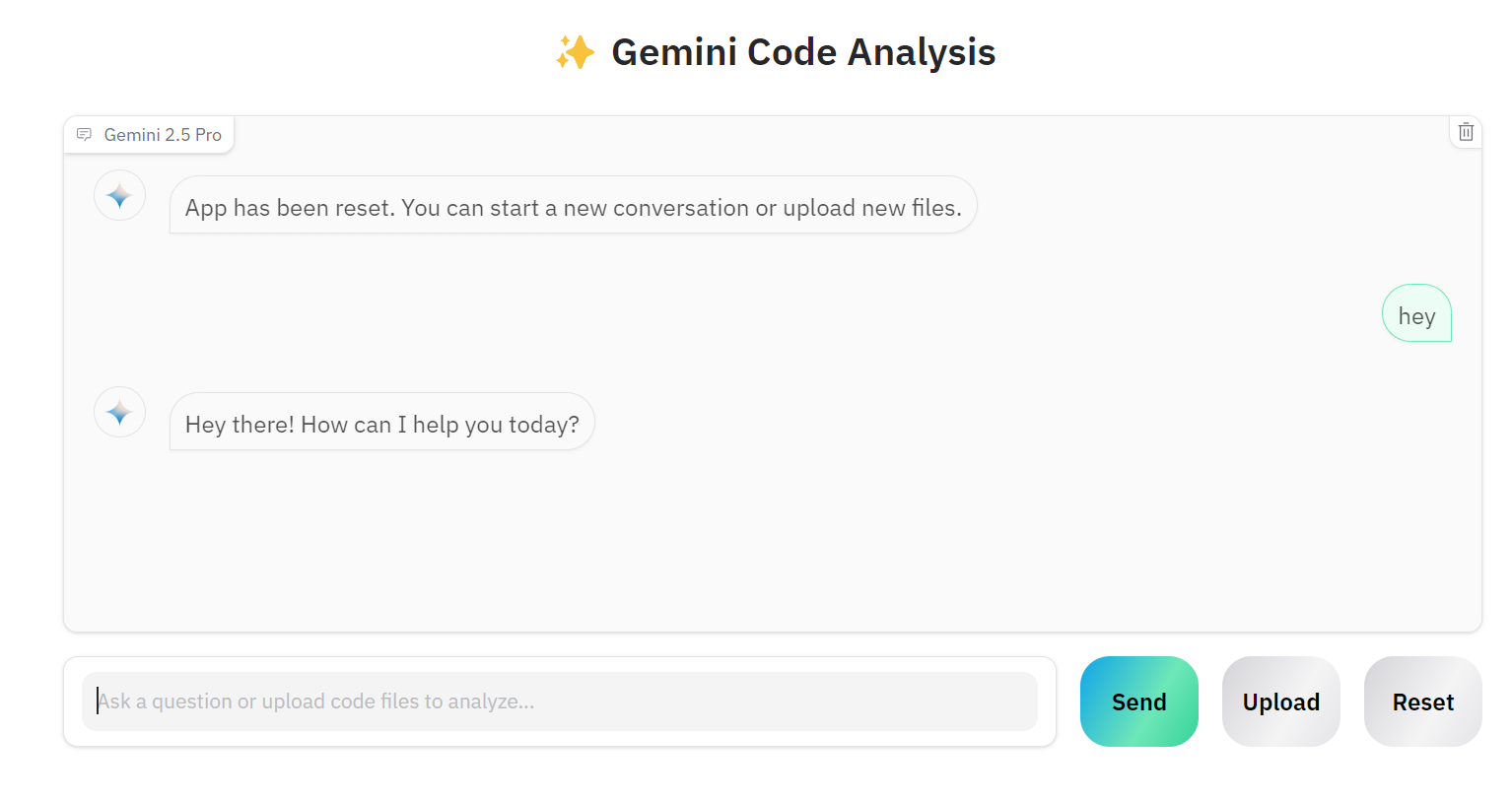

If you want to work on another project, you can click on the “Reset” button and start chatting about the new project.
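Resetting matters because the app caches one chat session per set of uploaded file names. The sketch below reproduces just that keying logic in isolation. A plain list stands in for the real chat object, and `session_key`, `get_session`, and `reset` are illustrative names of mine, not functions from the app.

```python
from typing import Dict

EXTRACTED_FILES: Dict[str, dict] = {}
CHAT_SESSIONS: Dict[str, list] = {}  # a list stands in for a real chat session


def session_key(extracted: Dict[str, dict]) -> str:
    """Sorted, comma-joined upload names, or a default key when empty."""
    return ",".join(sorted(extracted)) if extracted else "no_files"


def get_session(extracted: Dict[str, dict]) -> list:
    key = session_key(extracted)
    if key not in CHAT_SESSIONS:
        CHAT_SESSIONS[key] = []  # hypothetical stand-in for creating a chat
    return CHAT_SESSIONS[key]


def reset() -> None:
    EXTRACTED_FILES.clear()
    CHAT_SESSIONS.clear()


EXTRACTED_FILES["b.zip"] = {}
EXTRACTED_FILES["a.py"] = {}
first = get_session(EXTRACTED_FILES)
print(session_key(EXTRACTED_FILES))
```

One consequence of this design is that uploading an additional file mid-conversation changes the key, so the next question starts a fresh session with all files loaded again.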

The source code and configurations are available in the GitHub repository: kingabzpro/Gemini-2.5-Pro-Coding-App.

Conclusion

Building a proper AI application has become significantly easier. Instead of creating complex applications with tools like LangChain, integrating vector stores, optimizing prompts, or adding chains of thought, we can simply initialize the Gemini 2.5 Pro client via the Google API. The Gemini 2.5 Pro Chat API can handle various file types directly, allows follow-up questions, and provides highly accurate responses.

As a certified data scientist, I am passionate about leveraging cutting-edge technology to create innovative machine learning applications. With a strong background in speech recognition, data analysis and reporting, MLOps, conversational AI, and NLP, I have honed my skills in developing intelligent systems that can make a real impact. In addition to my technical expertise, I am also a skilled communicator with a talent for distilling complex concepts into clear and concise language. As a result, I have become a sought-after blogger on data science, sharing my insights and experiences with a growing community of fellow data professionals. Currently, I am focusing on content creation and editing, working with large language models to develop powerful and engaging content that can help businesses and individuals alike make the most of their data.