
Google just released Gemini 2.5 Pro, its most capable reasoning model so far and the first in the Gemini 2.5 family. (Update: As of Nov 18, 2025, Gemini 3 is live. You can read all about it in our Gemini 3 guide and our Gemini 3 API tutorial.)

In my opinion, its biggest strength is the massive 1 million token context window, with plans to expand to 2 million. Combining a reasoning model with that much context opens up real business value, especially given how limited AI adoption still is across most enterprises.

To put things in perspective: OpenAI’s o3-mini supports 200K tokens, Claude 3.7 Sonnet also caps at 200K, DeepSeek R1 tops out at 128K, and Grok 3 is the only other model currently matching Gemini at 1 million.
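Here’s a small Python sketch that shows what those numbers mean in practice: it checks which of these models could take a given input in a single request. It assumes “K” means thousands of tokens (some providers count 128K as 131,072). It also ignores any tokens reserved for the model’s output.

```python
# Context-window sizes (input tokens) as quoted in the comparison above.
# Assumption: "K" means thousands; some providers count 128K as 131,072.
CONTEXT_WINDOWS = {
    "Gemini 2.5 Pro": 1_000_000,
    "Grok 3": 1_000_000,
    "o3-mini": 200_000,
    "Claude 3.7 Sonnet": 200_000,
    "DeepSeek R1": 128_000,
}


def models_that_fit(input_tokens: int) -> list[str]:
    """Return the models whose context window can hold the input in one request."""
    return [name for name, window in CONTEXT_WINDOWS.items() if input_tokens <= window]


# A 150,000-token input rules out only the 128K model.
print(models_that_fit(150_000))
# A 300,000-token codebase fits only the 1M-token models.
print(models_that_fit(300_000))
```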

Since one of the most common AI use cases is code generation, a model that can reason through code and read a large codebase in a single pass, without needing retrieval-augmented generation (RAG), can bring significant business value. We already showed in a previous blog post how to process large documents without RAG using Gemini 2.0 Flash.
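Here’s a minimal Python sketch of the “no RAG” approach. It concatenates a repository’s source files into a single prompt, putting each file under a header with its path, and then estimates the prompt’s size. The `pack_codebase` and `estimate_tokens` helpers are hypothetical. The 4-characters-per-token ratio is a rough heuristic, not Gemini’s tokenizer; for exact numbers, use the Gemini API’s `countTokens` method.

```python
from pathlib import Path

# Rough heuristic, not a real tokenizer: about 4 characters per token.
CHARS_PER_TOKEN = 4


def pack_codebase(root: str, extensions: tuple[str, ...] = (".py",)) -> str:
    """Concatenate matching source files under `root` into one prompt string.

    Each file gets a header with its relative path so the model can refer to it.
    """
    base = Path(root)
    parts = []
    for path in sorted(base.rglob("*")):
        if path.is_file() and path.suffix in extensions:
            rel = path.relative_to(base).as_posix()
            text = path.read_text(encoding="utf-8", errors="replace")
            parts.append(f"=== FILE: {rel} ===\n{text}")
    return "\n\n".join(parts)


def estimate_tokens(text: str) -> int:
    """Ballpark token count using the heuristic above (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)
```

For example, `estimate_tokens(pack_codebase("my_project", (".py", ".js")))` gives a ballpark figure you can compare against a model’s context window before sending the prompt. Here, `my_project` is a placeholder path.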

In this post, I’ll walk through what Gemini 2.5 Pro offers, the kinds of inputs it supports, and how to access it. I’ll also run a few hands-on tests and look at how it compares on key benchmarks against Claude, DeepSeek, Grok, and OpenAI’s latest models.


What Is Gemini 2.5 Pro?

Gemini 2.5 Pro is the first model in Google’s Gemini 2.5 family. It’s currently labeled experimental and is available through the Gemini Advanced plan and Google AI Studio.

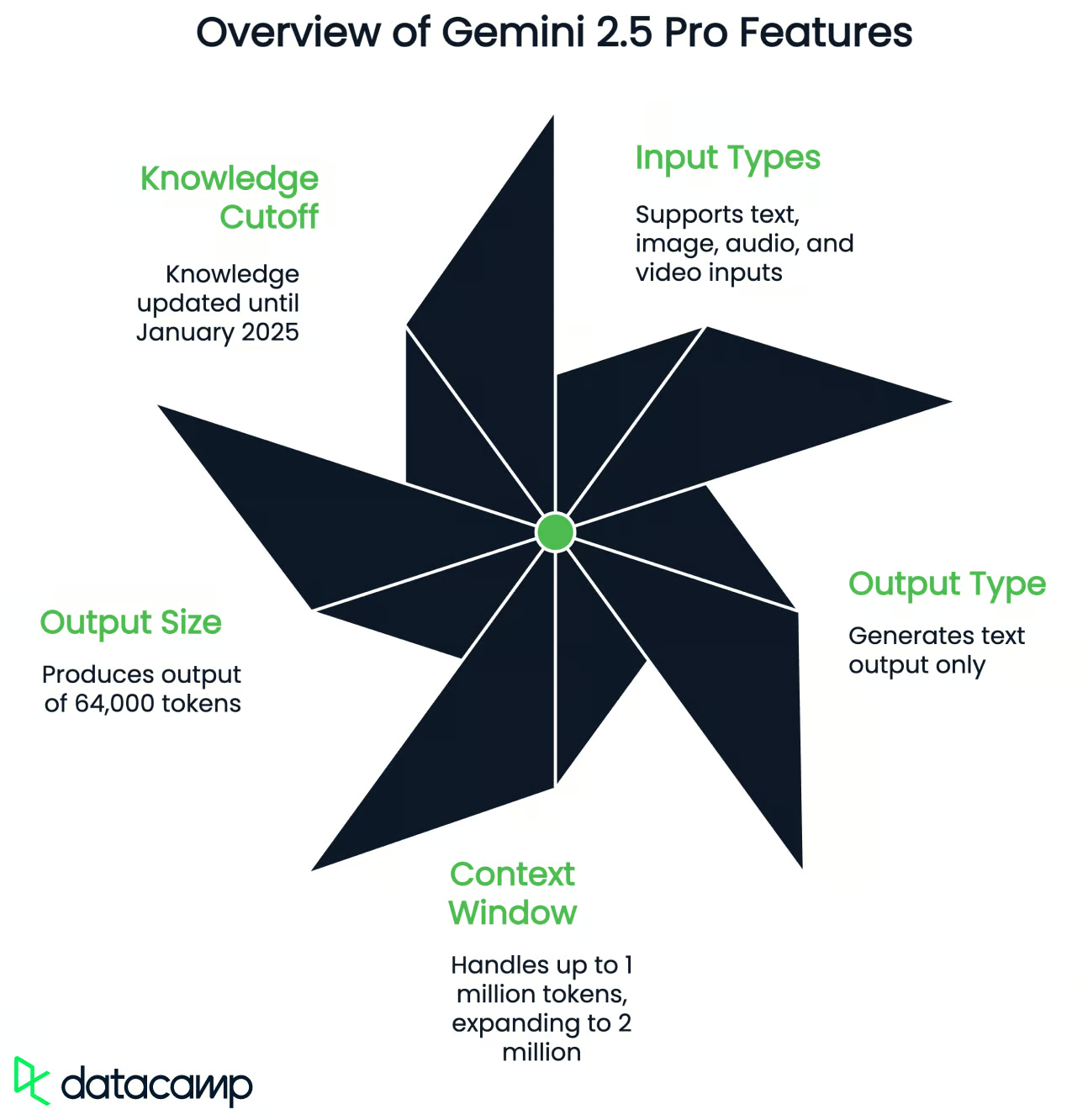

According to Google, it’s their best reasoning model yet, with improvements in tool use, multimodal input handling, and long-context performance. Here’s a quick overview of what it supports:

- Input types: Text, image, audio, and video

- Output type: Text only

- Context window: Up to 1 million tokens for input (planned expansion to 2 million)

- Output size: 64,000 tokens

- Knowledge cutoff: January 2025

Gemini 2.5 Pro supports tool use, meaning it can call external functions, generate structured output (like JSON), execute code, and use search. This allows the model to solve multi-step tasks, call APIs, or format responses for specific downstream systems.
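Here’s a minimal Python sketch of a function-call dispatcher, which is the part of tool use that lives in your application. It assumes the model’s reply has already been parsed into JSON with a tool `name` and an `args` object. This mirrors the name/arguments pair that a function call from the Gemini API carries. The `count_words` tool and the reply string are hypothetical, and the sketch makes no API request.

```python
import json


def count_words(text: str) -> int:
    """Hypothetical local tool the model is allowed to call."""
    return len(text.split())


# Registry mapping tool names the model may request to Python callables.
TOOLS = {"count_words": count_words}


def dispatch(function_call_json: str):
    """Run the tool named in a model's function-call message and return its result.

    Assumes the call looks like {"name": ..., "args": {...}}.
    """
    call = json.loads(function_call_json)
    tool = TOOLS.get(call["name"])
    if tool is None:
        raise ValueError(f"Model requested an unknown tool: {call['name']}")
    return tool(**call.get("args", {}))


# Mocked model reply; no API request is made here.
model_reply = '{"name": "count_words", "args": {"text": "call external functions from the model"}}'
print(dispatch(model_reply))  # 6
```

In a real loop, you’d send the tool’s result back to the model in a follow-up request so it can write the final answer. Keeping an explicit registry means the model can only trigger functions you chose to expose.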

Since it’s a reasoning model, Gemini 2.5 Pro is especially strong at coding, math, logic, and science. For most daily tasks, you can still use a generalist model like Gemini 2.0 Flash because it’s much faster.

Now, let's try out Gemini 2.5 Pro to see how well it performs.

Testing Gemini 2.5 Pro

p5.js game

First, I wanted to test out the dinosaur game that Google offered as an example, and I used the same prompt as in the demo video (I tried it in the Gemini app):

Make me a captivating endless runner game. Key instructions on the screen. p5js scene, no HTML. I like pixelated dinosaurs and interesting backgrounds.

Let’s see the result:

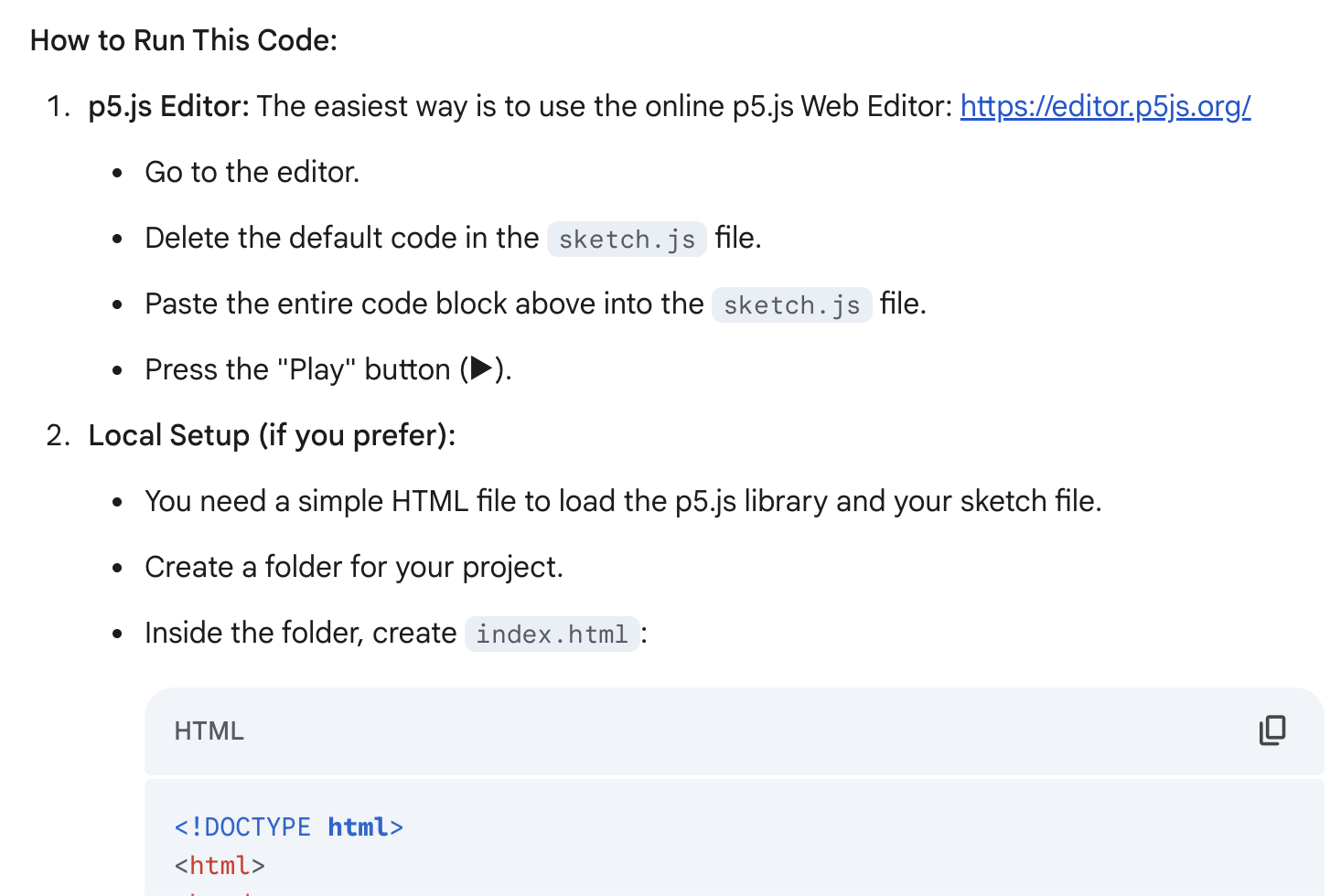

That’s pretty cool for just one prompt! The generation was fast (under 30 seconds). I also liked the detailed instructions on how to run the code, which offered me two ways to run it.

I didn’t like that the game started immediately after I ran the code, so I wanted to change this:

I don't like that the game starts immediately after I run the code. Add a starting screen where the user can be the one who starts the game (keep instructions on the screen)

Let’s see the result:

Exactly what I wanted! There are still so many things that I’d change, but the result is very good relative to my effort (two prompts) and my goal (just to build a prototype).
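The p5.js code that Gemini generated isn’t reproduced here. A start screen like this one is usually built as a small state machine, so here’s a language-agnostic sketch of that pattern in Python. It is not Gemini’s output, and the state names and the `next_state` function are hypothetical. In p5.js, the same checks would typically live in `draw()` and `keyPressed()`.

```python
from enum import Enum, auto


class GameState(Enum):
    START = auto()      # title screen with key instructions; nothing moves yet
    PLAYING = auto()    # the runner and obstacles are active
    GAME_OVER = auto()  # show the score and wait for a restart


def next_state(state: GameState, key_pressed: bool, collided: bool) -> GameState:
    """Decide the state for the next frame from player input and collisions."""
    if state is GameState.START and key_pressed:
        return GameState.PLAYING          # the player, not the code, starts the game
    if state is GameState.PLAYING and collided:
        return GameState.GAME_OVER
    if state is GameState.GAME_OVER and key_pressed:
        return GameState.PLAYING          # restart
    return state                          # otherwise stay in the current state


# Simulate four frames: idle, key press, running, collision.
state = GameState.START
for key_pressed, collided in [(False, False), (True, False), (False, False), (False, True)]:
    state = next_state(state, key_pressed, collided)
print(state)  # GameState.GAME_OVER
```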

Multimodal input (video and text)

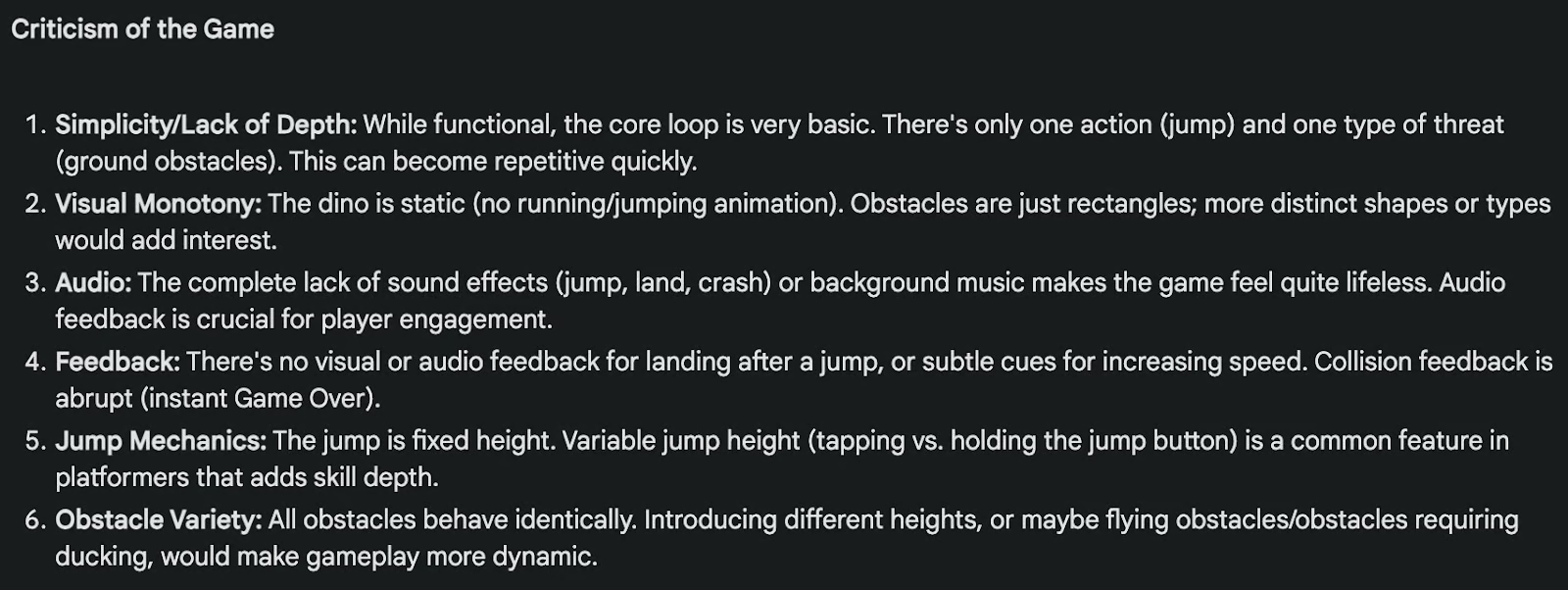

Next, I wanted to test Gemini 2.5 Pro’s multimodal capabilities. I uploaded the video above with the game and gave Gemini 2.5 Pro this prompt in Google AI Studio (I wasn’t able to add video as input in the Gemini app):

Analyze the game in the video, criticize both the game and the code I will give you below, and indicate what changes I could make to this game to make it better.

Code:

(truncated for readability)

The output was quite good! For readability, I’ll only show the criticism of the game here, which indirectly demonstrates a good understanding of both the video and the code.
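I used the AI Studio interface, but you can send the same kind of input through the API. Here’s a minimal Python sketch of a request body that pairs a video with text. It assumes two things: the REST JSON field names of the Gemini API’s `generateContent` method (`fileData`, `mimeType`, `fileUri`), and a video uploaded earlier through the Files API. The URI and the code string are placeholders, not values from my test.

```python
import json

VIDEO_REVIEW_PROMPT = (
    "Analyze the game in the video, criticize both the game and the code I will give you "
    "below, and indicate what changes I could make to this game to make it better."
)


def build_video_review_body(file_uri: str, code: str) -> dict:
    """Build a generateContent request body: one video part followed by two text parts."""
    return {
        "contents": [
            {
                "role": "user",
                "parts": [
                    # Reference to a video uploaded earlier (assumed: via the Files API).
                    {"fileData": {"mimeType": "video/mp4", "fileUri": file_uri}},
                    {"text": VIDEO_REVIEW_PROMPT},
                    {"text": "Code:\n" + code},
                ],
            }
        ]
    }


body = build_video_review_body(
    file_uri="FILE_URI_FROM_UPLOAD",     # placeholder, not a real URI
    code="<your p5.js code here>",       # placeholder for the truncated game code
)
print(json.dumps(body, indent=2))
```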

Processing large documents

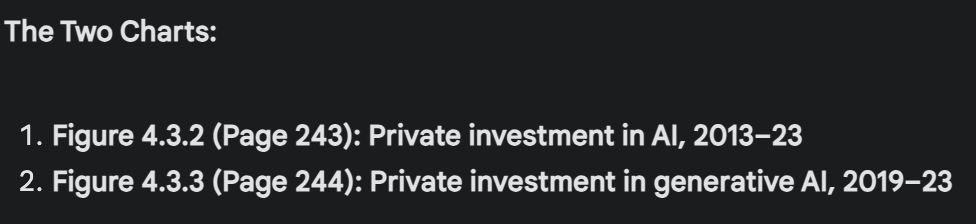

Finally, I wanted to test Gemini 2.5 Pro on a relatively large document, and I used Stanford’s Artificial Intelligence Index Report 2024. After uploading the 502-page document (129,517 tokens), I asked Gemini 2.5 Pro:

Pick two charts in this report that appear to show opposing or contradictory trends. Describe what each chart says, why the contradiction matters, and propose at least one explanation that reconciles the difference. Mention the page of the charts so I can double-check. If there's no such contradiction, don't try to artificially find one.

For some reason, Gemini 2.5 Pro couldn’t analyze the charts within the PDF in the Gemini app, so I switched to Google AI Studio, where it worked. Gemini 2.5 Pro found two charts on AI investment that show a contradictory trend: total private AI investment is declining even as private investment in generative AI is rising.

It correctly located both charts by page number (as I asked), figure number, and title. I recommend opening the PDF to double-check them yourself.

It summarized the contradictory trend very well: How can total private AI investment be going down when investment in its most hyped and visible subfield, generative AI, is exploding?

It also explained why we’re seeing this apparently contradictory trend.
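The numbers from this test help make the context-window math concrete. Here’s a quick Python calculation based on the report’s 502 pages and 129,517 tokens. It assumes the token density is roughly uniform across pages, which real documents only approximate.

```python
REPORT_PAGES = 502
REPORT_TOKENS = 129_517      # token count shown after uploading the report
CONTEXT_WINDOW = 1_000_000   # Gemini 2.5 Pro input limit at launch

tokens_per_page = REPORT_TOKENS / REPORT_PAGES
window_share = REPORT_TOKENS / CONTEXT_WINDOW
reports_that_fit = CONTEXT_WINDOW // REPORT_TOKENS
# Assumes every page is about as dense as this report's average page.
pages_that_fit = int(CONTEXT_WINDOW / tokens_per_page)

print(f"{tokens_per_page:.1f} tokens per page")          # 258.0 tokens per page
print(f"{window_share:.1%} of the context window")       # 13.0% of the context window
print(f"{reports_that_fit} reports of this size fit")    # 7 reports of this size fit
print(f"about {pages_that_fit} pages at this density")   # about 3875 pages at this density
```

In other words, this “large” document used only about an eighth of the window. That’s why no chunking was needed.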

Gemini 2.5 Pro Benchmarks

Google benchmarked Gemini 2.5 Pro against some of the top models available today, including Claude 3.7 Sonnet, OpenAI’s o3-mini, DeepSeek R1, and Grok 3. While performance varies by task, Gemini 2.5 Pro generally performs well across reasoning, coding, math, and long-context tasks.

| Category | Benchmark | Gemini 2.5 Pro | Closest competitors |
|---|---|---|---|
| Reasoning & general knowledge | Humanity’s Last Exam (no tools) | 18.8% | o3-mini (14%), Claude 3.7 Sonnet (8.9%), DeepSeek R1 (8.6%) |
| | GPQA Diamond (pass@1) | 84.0% | Grok 3 Beta (80.2%), o3-mini (79.7%), Claude 3.7 Sonnet (78.2%) |
| Math & logic | AIME 2024 (pass@1) | 92.0% | o3-mini (87.3%), Grok 3 Beta (83.9%) |
| | AIME 2025 (pass@1) | 86.7% | o3-mini (86.5%), Grok 3 Beta (77.3%) |
| Coding | LiveCodeBench v5 | 70.4% | o3-mini (74.1%), Grok 3 Beta (70.6%) |
| | Aider Polyglot (whole-file editing) | 74.0% | n/a |
| | SWE-bench Verified | 63.8% | Claude 3.7 Sonnet (70.3%) |
| Long context & multimodal | MRCR (128K context) | 91.5% | GPT-4.5 (48.8%), o3-mini (36.3%) |
| | MMMU (multimodal understanding; pass@1) | 81.7% | Grok 3 Beta (76.0%), Claude 3.7 Sonnet (75%) |

Source: Google
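To make “leads” and “trails” concrete, here’s a small Python sketch that computes Gemini 2.5 Pro’s margin, in percentage points, over the highest-scoring competitor listed for each benchmark in Google’s table. Aider Polyglot is left out because no competitor is listed. The margins are raw differences and don’t account for differences in evaluation setup between labs.

```python
# Scores in % from Google's table: (Gemini 2.5 Pro, (best listed competitor, its score)).
SCORES = {
    "Humanity's Last Exam": (18.8, ("o3-mini", 14.0)),
    "GPQA Diamond": (84.0, ("Grok 3 Beta", 80.2)),
    "AIME 2024": (92.0, ("o3-mini", 87.3)),
    "AIME 2025": (86.7, ("o3-mini", 86.5)),
    "LiveCodeBench v5": (70.4, ("o3-mini", 74.1)),
    "SWE-bench Verified": (63.8, ("Claude 3.7 Sonnet", 70.3)),
    "MRCR (128K)": (91.5, ("GPT-4.5", 48.8)),
    "MMMU": (81.7, ("Grok 3 Beta", 76.0)),
}


def margins(scores: dict) -> dict:
    """Percentage-point gap between Gemini 2.5 Pro and the best listed competitor."""
    return {
        bench: round(gemini - rival_score, 1)
        for bench, (gemini, (_rival, rival_score)) in scores.items()
    }


# Print from the largest deficit to the largest lead.
for bench, gap in sorted(margins(SCORES).items(), key=lambda item: item[1]):
    rival = SCORES[bench][1][0]
    print(f"{bench:<22} {gap:+5.1f} pts vs. {rival}")
```

Sorted this way, the pattern discussed below stands out: small deficits on two coding benchmarks, a near-tie on AIME 2025, and a very large lead on long-context MRCR.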

Reasoning and general knowledge

Gemini 2.5 Pro shows strong performance on benchmarks designed to test multi-step reasoning and real-world knowledge:

- Humanity’s Last Exam (no tools): Gemini 2.5 Pro scores 18.8%, ahead of o3-mini (14%) and well above Claude 3.7 (8.9%) and DeepSeek-R1 (8.6%). This test is designed to mimic expert-level exams across 100+ subjects.

- GPQA Diamond: A benchmark of graduate-level science questions in biology, physics, and chemistry, written to be hard to answer with a web search. Gemini 2.5 Pro leads with 84.0% (single attempt/pass@1), followed by Grok 3 Beta at 80.2%.

Math and logic

These are benchmarks where Gemini’s reasoning architecture seems to shine:

- AIME 2024: Gemini 2.5 Pro leads with 92.0% for single attempt/pass@1.

- AIME 2025: Gemini 2.5 Pro drops to 86.7% on the 2025 set of problems but still narrowly leads for a single attempt (pass@1), just 0.2 points ahead of o3-mini (86.5%).
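“pass@1” means the model gets a single attempt per problem. A common way to compute pass@k is the unbiased estimator introduced in OpenAI’s Codex paper (Chen et al., 2021): generate n samples per problem, count the c correct ones, and estimate 1 − C(n − c, k) / C(n, k). With k = 1, this reduces to c / n. The sketch below assumes that estimator; this article doesn’t say exactly how Google sampled its runs, and the results list is hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: samples generated, c: samples that were correct, k: attempts allowed.
    Computes 1 - C(n - c, k) / C(n, k): the chance that at least one of
    k samples drawn without replacement from the n is correct.
    """
    if n - c < k:
        return 1.0  # every size-k draw must include a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: list[tuple[int, int]], k: int = 1) -> float:
    """Average pass@k over problems, given (n, c) for each problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical results for three problems, 10 samples each.
results = [(10, 10), (10, 3), (10, 0)]
print(benchmark_score(results, k=1))
```

Averaging over several samples like this gives a more stable pass@1 than a single run, which matters when two models are separated by only a fraction of a point.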

Coding

On benchmarks that test code generation, debugging, and multi-file reasoning, Gemini performs well but doesn’t dominate:

- LiveCodeBench v5 (code generation): Gemini 2.5 Pro scores 70.4%, behind o3-mini (74.1%) and Grok 3 Beta (70.6%).

- Aider Polyglot (whole-file editing): Gemini reaches 74.0% on this code-editing benchmark, a solid result given that it spans multiple programming languages.

- SWE-bench Verified (agentic coding): Gemini scores 63.8%, placing it ahead of o3-mini and DeepSeek R1 but behind Claude 3.7 Sonnet (70.3%).

Long-context and multimodal tasks

This is where Gemini 2.5 Pro stands out most clearly:

- MRCR (multi-round coreference resolution, a long-context benchmark): Gemini 2.5 Pro hits 91.5% at a 128K context length, miles ahead of GPT-4.5 (48.8%) and o3-mini (36.3%).

- MMMU (multimodal understanding): Gemini 2.5 Pro leads the benchmark with a score of 81.7%.

How to Access Gemini 2.5 Pro

There are a few ways to try out Gemini 2.5 Pro, depending on whether you’re a casual user or building something more technical.

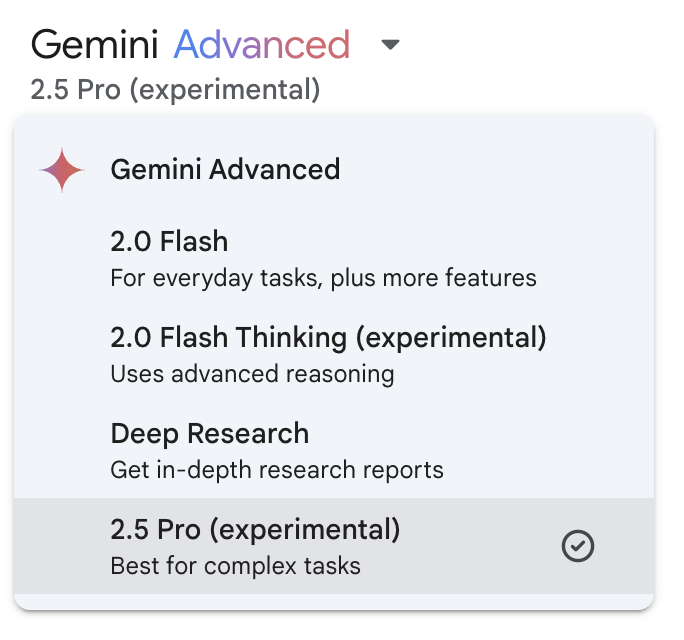

Gemini App

The easiest way to access Gemini 2.5 Pro is through the Gemini app (on mobile or web).

If you’re a Gemini Advanced subscriber, you’ll see Gemini 2.5 Pro listed in the model dropdown menu.

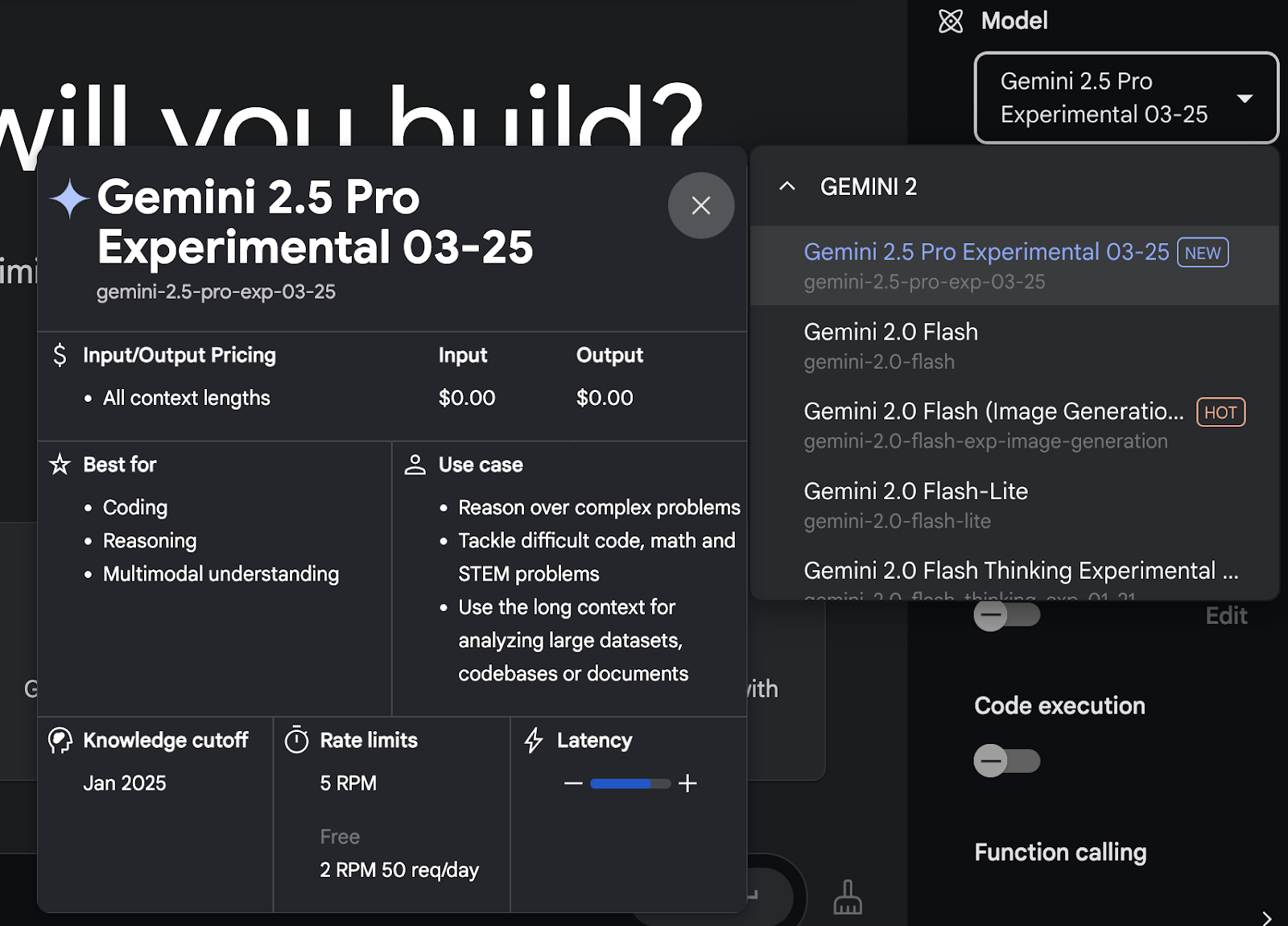

Google AI Studio

If you want more control over inputs, tool use, or multimodal prompts, I recommend using Google AI Studio.

This environment gives you access to Gemini 2.5 Pro for free (as of now) and supports text, image, video, and audio inputs. It also works better than the Gemini app for uploading files or testing tool use, especially when dealing with large documents or custom workflows.

After you create an account, you can select Gemini 2.5 Pro from the model dropdown menu.

Gemini 2.5 Pro API

For programmatic access, you can use the Gemini API, which supports Gemini 2.5 Pro.

This gives you more flexibility if you’re integrating the model into an application or workflow. You can call the model directly with tool use enabled, get structured responses, or process long documents in an automated way.
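Here’s a minimal Python sketch of a raw REST call built with only the standard library. It prepares the request without sending it. The endpoint shape (`v1beta/models/{model}:generateContent`), the `x-goog-api-key` header, and `generationConfig.responseMimeType` follow the public Gemini API as I understand it. The model ID `gemini-2.5-pro-exp-03-25` is an assumption based on the experimental release, so check the current model list before using it. `GEMINI_API_KEY` is just a conventional environment-variable name.

```python
import json
import os
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"
# Assumption: experimental model ID at launch; check the model list for the current name.
MODEL_ID = "gemini-2.5-pro-exp-03-25"


def build_request(prompt: str, api_key: str, json_output: bool = False) -> urllib.request.Request:
    """Prepare (but do not send) a generateContent call to the Gemini API."""
    body = {"contents": [{"role": "user", "parts": [{"text": prompt}]}]}
    if json_output:
        # Ask for structured JSON output instead of free-form text.
        body["generationConfig"] = {"responseMimeType": "application/json"}
    return urllib.request.Request(
        url=f"{API_ROOT}/models/{MODEL_ID}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


req = build_request(
    "Summarize the Gemini 2.5 Pro announcement in three points as a JSON array of strings.",
    api_key=os.environ.get("GEMINI_API_KEY", "YOUR_API_KEY"),
    json_output=True,
)
print(req.full_url)
```

Passing `req` to `urllib.request.urlopen` sends it, and the generated text is typically at `candidates[0].content.parts[0].text` in the JSON response. Google’s official SDKs wrap all of this if you’d rather not build requests by hand.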

You can find more technical information in Google’s Gemini API documentation.

Gemini 2.5 Pro on Vertex AI

Google says Gemini 2.5 Pro will also be available soon in Vertex AI, which is part of Google Cloud. The main difference between using the Gemini API directly and accessing it via Vertex AI is in infrastructure, scale, and integration.

If you’re just testing or building internal tools, AI Studio or the API should be enough. If you’re deploying something into production with strict performance or security requirements, Vertex AI will be the better fit once it launches support for Gemini 2.5 Pro.

Conclusion

It’s getting harder to be impressed by new model releases. Most launches follow the same pattern: some cherry-picked examples, some flashy benchmarks, and a lot of claims about being the best at everything. But Gemini 2.5 Pro actually gave me a few moments where I paused and thought, “Okay, this is really useful.”

The 1 million token context window changes how you can approach tasks that used to require extra work—especially anything involving long documents, messy codebases, or multi-step reasoning. I didn’t have to chunk inputs or set up a RAG pipeline. I just uploaded the file, asked my question, and got back something coherent and grounded in the source.

If the 2 million token context window rolls out soon, that alone could make it one of the most practical models for real-world work.

FAQs

What input types does Gemini 2.5 Pro support?

Gemini 2.5 Pro supports text, image, video, and audio inputs.

Where can I access Gemini 2.5 Pro?

Gemini 2.5 Pro is available through Google AI Studio and to Gemini Advanced subscribers via the Gemini app.

What are the primary use cases for Gemini 2.5 Pro?

Gemini 2.5 Pro excels in coding, complex reasoning tasks, and handling multimodal inputs.

Is Gemini 2.5 Pro suitable for real-time applications?

It’s powerful, but its experimental status calls for caution in real-time deployments until its stability is confirmed.

I’m an editor and writer covering AI blogs, tutorials, and news, ensuring everything fits a strong content strategy and SEO best practices. I’ve written data science courses on Python, statistics, probability, and data visualization. I’ve also published an award-winning novel and spend my free time on screenwriting and film directing.