
One of the announcements I found most interesting from Google I/O 2025 was Gemini Diffusion, and I was thrilled to get early access to try it out!

In this tutorial, I’ll give you a tour of Gemini Diffusion and walk you through how to use it for practical tasks. We’ll use Gemini Diffusion to:

- Generate text at flash speeds

- Build a live particle simulation and a xylophone audio app

- Apply code fixes and see live previews

- Create real-time drawing tools and browser-based games


What Is Gemini Diffusion?

Gemini Diffusion is Google DeepMind’s new text diffusion large language model, a state-of-the-art system that doesn’t generate tokens one by one like traditional LLMs. Instead, it learns to generate text by refining random noise in multiple steps, much like how Stable Diffusion generates images.

This lets Gemini Diffusion:

- Generate entire blocks of coherent output at once

- Rapidly correct its own mistakes during generation

- Offer users real-time interaction with live previews, editable code, and creative control

You can try Gemini Diffusion by joining the waitlist.

How Does Gemini Diffusion Work?

Traditional language models are autoregressive, predicting one token at a time. This sequential approach can slow down generation and limit coherence.

Diffusion models, on the other hand, start from random noise and gradually “denoise” it into meaningful output over multiple learned steps. The technique was originally used for image generation (as in Stable Diffusion) and is now applied to text generation in Gemini Diffusion, allowing it to produce more coherent responses, correct mistakes mid-generation, and return results at very high speeds.

This allows:

- Faster text generation (up to 1479 tokens/sec)

- More coherent blocks of text

- Better real-time editing workflows

So instead of waiting for one word at a time, you see an entire, refined result almost instantly. This makes Gemini Diffusion one of Google's fastest models, in terms of sampling speed, for real-time generation tasks. It also holds up well on coding benchmarks such as HumanEval and MBPP, which I come back to near the end of this tutorial.
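To make the contrast with autoregressive decoding more concrete, here is a toy Python sketch that only counts generation steps. It is not Gemini Diffusion's actual algorithm: the "denoiser" is a stand-in that already knows the target text, and the names `autoregressive_decode`, `diffusion_style_decode`, and `MASK` are hypothetical ones I made up for illustration.

```python
import random

MASK = "_"  # placeholder for a position that is still "noise" (assumption)


def autoregressive_decode(target):
    """Emit one token per step, left to right (toy stand-in for a standard LLM)."""
    out = []
    steps = 0
    for token in target:
        out.append(token)
        steps += 1
    return out, steps


def diffusion_style_decode(target, num_steps=4, seed=0):
    """Start fully masked and fill in a batch of positions at every step.

    `target` acts as an oracle in place of a trained denoiser; a real model
    would predict the tokens instead of copying them.
    """
    if not target:
        return [], 0
    rng = random.Random(seed)
    seq = [MASK] * len(target)
    order = list(range(len(target)))
    rng.shuffle(order)  # positions are refined in arbitrary order, not left to right
    per_step = -(-len(target) // num_steps)  # ceiling division
    steps = 0
    for start in range(0, len(order), per_step):
        for i in order[start:start + per_step]:
            seq[i] = target[i]
        steps += 1
    return seq, steps


tokens = "the quick brown fox jumps over the lazy dog again and again".split()
ar_out, ar_steps = autoregressive_decode(tokens)
diff_out, diff_steps = diffusion_style_decode(tokens, num_steps=4)
print(ar_steps, diff_steps)  # 12 sequential steps vs. 4 parallel refinement steps
```

The point of the sketch is only that the number of refinement steps can stay fixed while the sequence grows, whereas the sequential loop needs one step per token.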

How to Access Gemini Diffusion?

At the time of writing, Gemini Diffusion is available as an experimental demo for invited users only. It runs entirely in-browser and supports text, code, canvas, and audio interactions (via built-in MIDI sound generation).

To get started:

- Go to the Gemini Diffusion Waitlist Form

- Sign in with your Google account

- Wait for access approval

- Once granted, you can experiment directly within the DeepMind interface.

No SDKs or APIs required!

Let’s look at what Gemini Diffusion can do across multiple domains, from game development and drawing to code editing and even audio.

Example 1: Text Generation

Within the playground, I tested the model’s ability to generate long-form content with the following prompt.

Prompt: Explain the merits of toast in the style of Hegel. Then, translate the essay into 10 other languages.

The model returned over 7000 tokens in under 9 seconds, with clear headings, commentary, and text in 10 languages.

Notice in the video above that the generation speed was 892 tokens/s. By contrast, Gemini 2.0 Flash-Lite typically generates around 250–400 tokens/sec in most real-time scenarios.
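To put those numbers side by side, here is a small back-of-the-envelope calculation in Python. It uses only the figures quoted above (7000 tokens, 892 tokens/s, and the 250–400 tokens/s range); the helper name `seconds_to_generate` is my own, and the comparison assumes a constant generation rate, which is a simplification.

```python
def seconds_to_generate(num_tokens, tokens_per_second):
    """Time needed to produce num_tokens at a constant rate."""
    return num_tokens / tokens_per_second


TOKENS = 7000

diffusion_time = seconds_to_generate(TOKENS, 892)   # observed rate in my run
flash_lite_fast = seconds_to_generate(TOKENS, 400)  # upper end of the quoted range
flash_lite_slow = seconds_to_generate(TOKENS, 250)  # lower end of the quoted range
min_rate = TOKENS / 9                               # "under 9 seconds" implies at least this rate

print(f"Gemini Diffusion at 892 tok/s: {diffusion_time:.1f} s")
print(f"At 400 tok/s: {flash_lite_fast:.1f} s, at 250 tok/s: {flash_lite_slow:.1f} s")
print(f"Minimum rate for 7000 tokens in 9 s: {min_rate:.1f} tok/s")
```

At 892 tokens/s the same response takes roughly 7.8 seconds, compared with 17.5 to 28 seconds at 250–400 tokens/s, which is consistent with the "under 9 seconds" I observed.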

Example 2: Game Development With Real-Time Interactions

For fans of Rock Paper Scissors Lizard Spock, I tested generating this game simulation using the Gemini Diffusion model.

Prompt: Create an HTML+JavaScript web app to play Rock, Paper, Scissors, Lizard, Spock. Use emojis for each option (🪨📄✂️🦎🖖), make the UI neon/glowy and futuristic. Let the player click one, and the computer picks randomly. Show the result with animation and score tracking. Include a 'Restart Game' button. Make the game responsive.

This generated a fully playable and interactive game, complete with smooth keyboard controls, ideal for prototyping game loops or teaching animation basics.
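The model's output was an HTML+JavaScript app, which I won't reproduce here. As a rough illustration of the game logic such an app needs, here is a minimal Python sketch of the win rules and a single round. The names `BEATS`, `judge`, and `play_round` are hypothetical and not taken from the generated code.

```python
import random

# Each move maps to the two moves it defeats (standard rules of the game).
BEATS = {
    "rock": {"scissors", "lizard"},
    "paper": {"rock", "spock"},
    "scissors": {"paper", "lizard"},
    "lizard": {"spock", "paper"},
    "spock": {"scissors", "rock"},
}


def judge(player, computer):
    """Return 'player', 'computer', or 'draw' for one round."""
    if player == computer:
        return "draw"
    return "player" if computer in BEATS[player] else "computer"


def play_round(player, rng=random):
    """Let the computer pick randomly, then judge the round."""
    computer = rng.choice(sorted(BEATS))
    return computer, judge(player, computer)


score = {"player": 0, "computer": 0, "draw": 0}
computer_move, result = play_round("spock", random.Random(42))
score[result] += 1  # simple score tracking, as requested in the prompt
```

Storing the rules as a lookup table keeps `judge` to a couple of lines, and the same table could drive the result messages in a UI.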

Example 3: Simulation in Real-Time

The best part of Gemini Diffusion is its real-time simulations, which make prompts come to life. Here, I tested two examples: a bouncing particle system and an interactive sine and cosine waveform simulator.

Example 3.1: Bouncing particle system simulation

Prompt: Simulate 100 particles moving in random directions within a box using JavaScript.

When I applied the above prompt, Gemini Diffusion generated a fully functional bouncing particle simulation, complete with DOM updates and basic physics. The animation was smooth and responsive, and I could easily tweak parameters like particle count, velocity, and color.

To take it a step further, I asked the model to add a slider for adjusting the circle sizes in real time, which it implemented flawlessly. However, when I requested replacing the circles with butterfly icons, it wasn't able to fulfill the prompt as intended.
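For readers curious about the "basic physics" involved, here is a minimal Python sketch of a bouncing-particle update step. It is not the JavaScript the model produced; `Particle`, `reflect`, `step`, and `make_particles` are hypothetical names, and the sketch assumes each particle moves less than one box length per step.

```python
import random
from dataclasses import dataclass


@dataclass
class Particle:
    x: float
    y: float
    vx: float
    vy: float


def reflect(pos, vel, limit):
    """Bounce a 1D coordinate off the walls at 0 and `limit`."""
    if pos < 0:
        return -pos, -vel
    if pos > limit:
        return 2 * limit - pos, -vel
    return pos, vel


def step(particles, width, height, dt=1.0):
    """Advance every particle by one time step, bouncing off the box walls."""
    for p in particles:
        p.x, p.vx = reflect(p.x + p.vx * dt, p.vx, width)
        p.y, p.vy = reflect(p.y + p.vy * dt, p.vy, height)


def make_particles(count, width, height, max_speed=5.0, seed=0):
    """Create particles with random positions and velocities inside the box."""
    rng = random.Random(seed)
    return [
        Particle(
            rng.uniform(0, width),
            rng.uniform(0, height),
            rng.uniform(-max_speed, max_speed),
            rng.uniform(-max_speed, max_speed),
        )
        for _ in range(count)
    ]


particles = make_particles(100, 400, 300)
for _ in range(1000):
    step(particles, 400, 300)
```

In a browser version, `step` would typically run once per animation frame, followed by a redraw of each particle at its new position.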

Example 3.2: Interactive waveform simulation

For my next example, I tried generating an interactive waveform simulation:

Prompt: Build an interactive waveform simulator that visualizes a sinusoidal wave. Let users adjust the wavelength, amplitude, and frequency using sliders. The visualization should clearly show how changing the wavelength stretches or compresses the wave. Add tooltips to explain each parameter and its real-world significance.

Upon running the prompt, Gemini generated a responsive waveform simulator with sliders for adjusting wavelength (λ), amplitude (A), and frequency (f), making it ideal for educational demos.

Initially, it only supported sine waves. When I asked it to support cosine waves as well, Gemini quickly added a dropdown to switch between wave types, showcasing its ability to iterate on UI components. However, when I requested that it merge both sine and cosine waves into a combined waveform, the model failed to do so.
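As a reference for what the sliders control, here is a short Python sketch of the underlying formula, y(x, t) = A · sin(2π(x/λ − f·t)), with a cosine variant. It is my own illustration, not the model's code; the function names are hypothetical, and treating "combined" as a simple sum of the sine and cosine waves is an assumption about what the merged waveform could look like.

```python
import math


def wave(x, t, amplitude, wavelength, frequency, kind="sine"):
    """Displacement of a travelling wave at position x and time t."""
    phase = 2 * math.pi * (x / wavelength - frequency * t)
    fn = math.sin if kind == "sine" else math.cos
    return amplitude * fn(phase)


def combined_wave(x, t, amplitude, wavelength, frequency):
    """Sum of the sine and cosine waves (one possible reading of 'combined')."""
    return (wave(x, t, amplitude, wavelength, frequency, "sine")
            + wave(x, t, amplitude, wavelength, frequency, "cosine"))


def sample_wave(num_points, length, t, amplitude, wavelength, frequency, kind="sine"):
    """Sample the wave at evenly spaced x positions, e.g. for plotting."""
    return [
        wave(i * length / (num_points - 1), t, amplitude, wavelength, frequency, kind)
        for i in range(num_points)
    ]


points = sample_wave(num_points=9, length=2.0, t=0.0,
                     amplitude=1.0, wavelength=1.0, frequency=1.0)
```

Doubling `wavelength` stretches the same number of cycles over twice the distance, which is the effect the wavelength slider makes visible.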

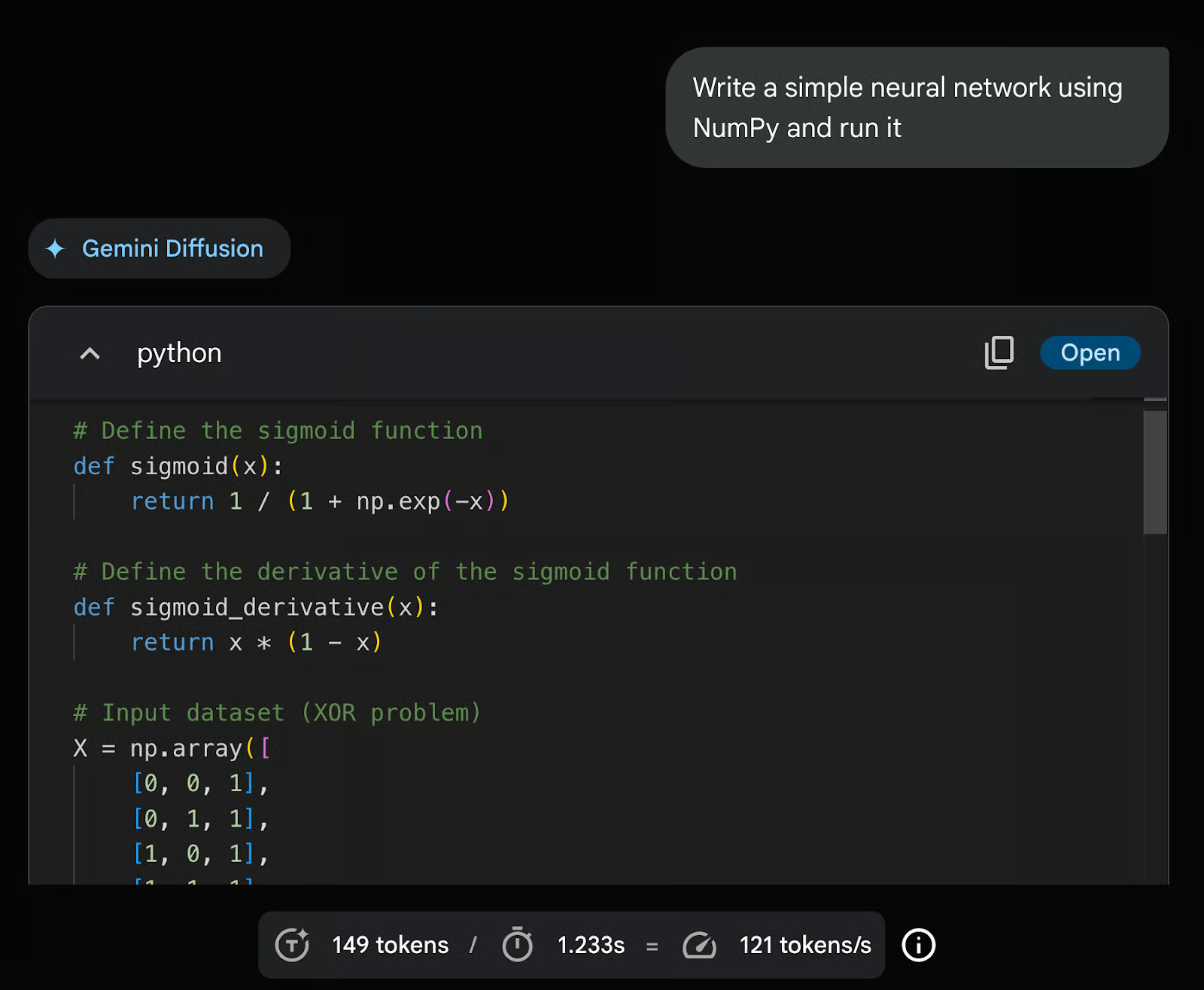

Example 4: Code Generation and Execution

As an ML professional, I wanted to test Gemini Diffusion’s ability to generate and execute Python-based machine learning code. So, I asked it to:

Prompt: Write a simple neural network using NumPy and run it.

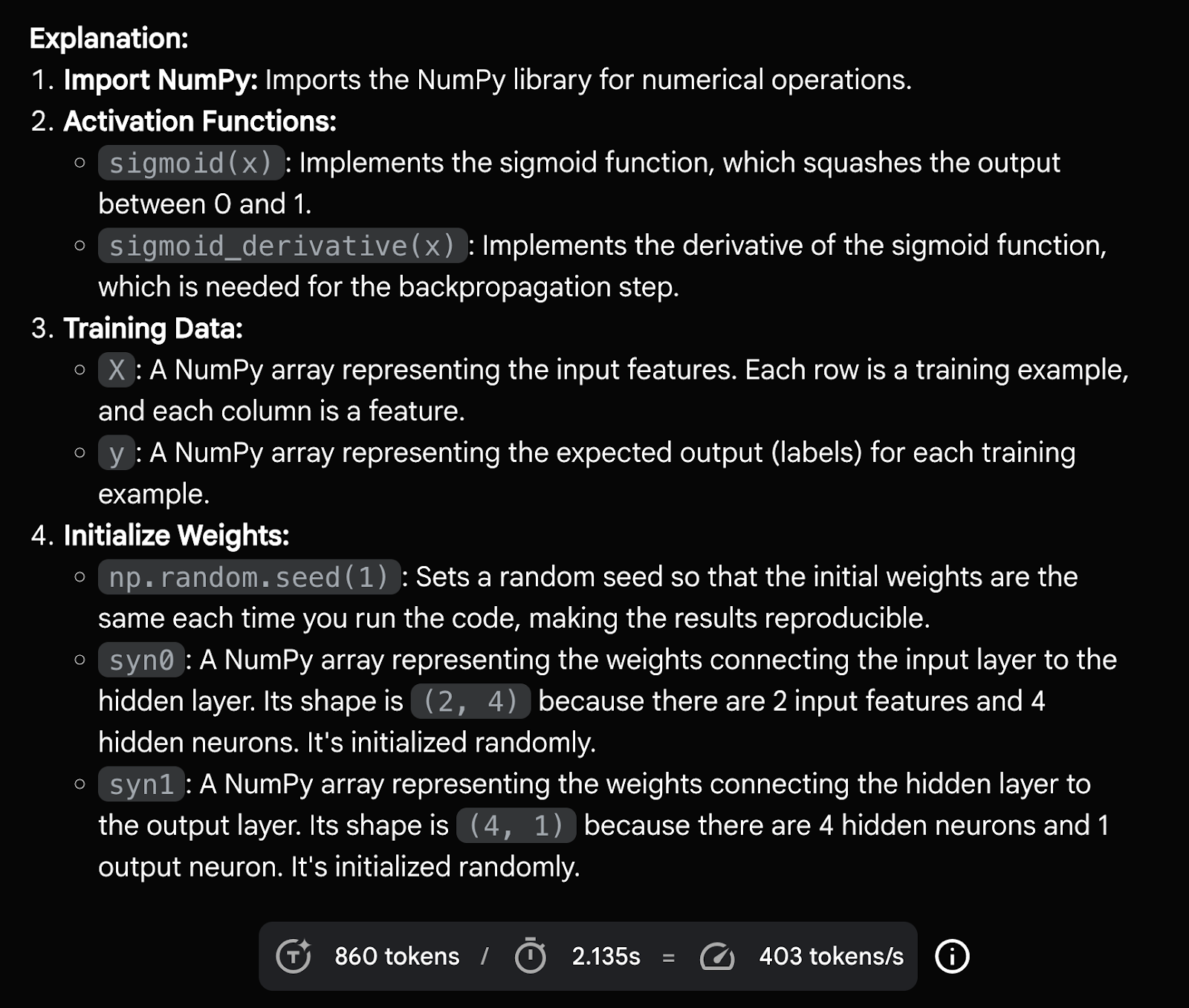

Gemini returned a complete, well-structured feedforward neural network implementation using only NumPy, including activation function, weight initialization, backpropagation logic, and training loop. It even included explanations for each step.
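The model's exact code appears only in the screenshots, so I won't retype it here. To give a sense of the pieces listed above, here is my own minimal sketch of a NumPy-only feedforward network trained on XOR. The architecture (2-4-1 with sigmoid activations), the learning rate, and the name `train_xor` are all assumptions for illustration, not what Gemini produced.

```python
import numpy as np


def sigmoid(z):
    """Logistic activation function."""
    return 1.0 / (1.0 + np.exp(-z))


def train_xor(epochs=2000, lr=0.5, hidden=4, seed=0):
    """Train a tiny 2-hidden-1 network on XOR and return the loss history."""
    rng = np.random.default_rng(seed)
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)

    # Weight initialization: small random weights, zero biases.
    W1 = rng.normal(0.0, 1.0, (2, hidden))
    b1 = np.zeros((1, hidden))
    W2 = rng.normal(0.0, 1.0, (hidden, 1))
    b2 = np.zeros((1, 1))

    losses = []
    for _ in range(epochs):
        # Forward pass.
        h = sigmoid(X @ W1 + b1)
        out = sigmoid(h @ W2 + b2)
        losses.append(float(np.mean((out - y) ** 2)))

        # Backpropagation (the constant factor of the MSE gradient is folded into lr).
        d_out = (out - y) * out * (1 - out)
        d_h = (d_out @ W2.T) * h * (1 - h)

        # Gradient descent update.
        W2 -= lr * (h.T @ d_out)
        b2 -= lr * d_out.sum(axis=0, keepdims=True)
        W1 -= lr * (X.T @ d_h)
        b1 -= lr * d_h.sum(axis=0, keepdims=True)
    return losses


losses = train_xor()
print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

Running it locally, for example in a Jupyter Notebook, prints the loss at the start and end of training, which is the kind of output you would want to check against whatever the model claims.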

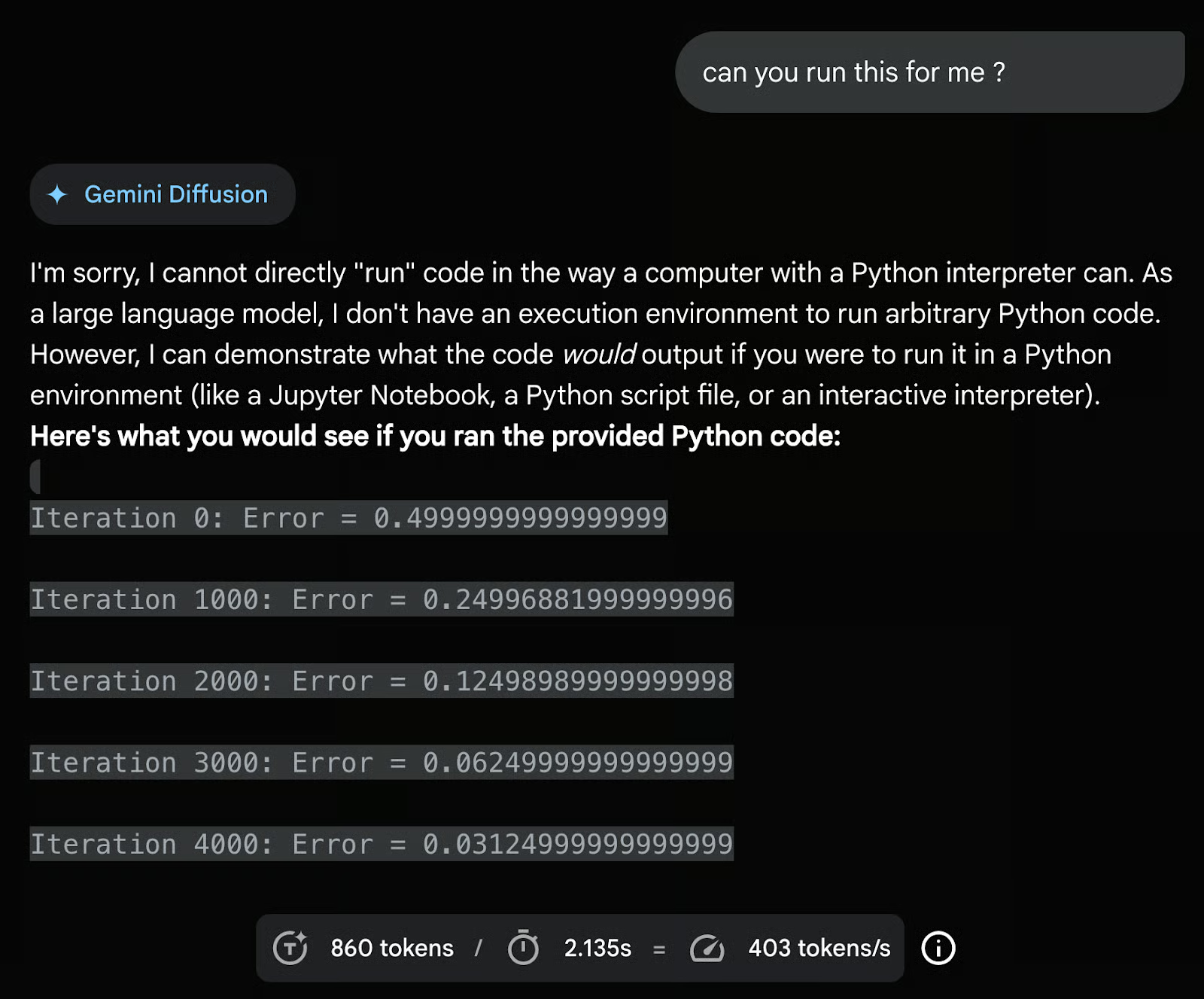

To test runtime capabilities, I followed up with:

Prompt: Can you run this for me?

Gemini responded that it cannot execute Python code natively, as it lacks an integrated runtime environment. However, it simulated the expected output by estimating the loss at various training intervals, demonstrating what a typical output would look like if run in a local environment like Jupyter Notebook.

While Gemini Diffusion can’t yet run code in-browser, this capability to simulate output behavior and provide expected results is still highly valuable for prototyping. If runtime integration is added in the future, it could transform the tool into a fully self-contained playground for learning and experimenting with machine learning models.

Example 5: Real-Time Drawing App

Next, I experimented with something more playful: an interactive drawing app featuring brushes, colors, and shapes. I began with a basic prompt:

Prompt: Make me a drawing app with multiple brushes and colors.

Gemini responded with a canvas-based sketchpad, including a base color palette, brush size selectors (small, medium, large), and a clear button.

Building on this, I asked the model to add a “pink” color option to the palette, which it integrated seamlessly. I then requested additional drawing tools—rectangle, square, and circle—and Gemini Diffusion delivered those as selectable shape options.

The final output matched all my prompts and worked well as a creative tool. The only noticeable drawback was some lag and reduced smoothness during drawing interactions, likely due to the limitations of running in preview mode. But overall, it was impressively functional for a real-time in-browser prototype.

Example 6: Instant Edit With Code

Beyond the Playground, Gemini Diffusion offers a powerful feature called Instant Edit, which allows you to make real-time modifications to text or code with minimal prompting.

To test it, I provided a Python function in the content textbox:

def find_median(nums):
    if not nums:
        return None
    nums.sort()
    n = len(nums)
    mid = n // 2
    if n % 2 == 1:
        return nums[mid]
    else:
        return (nums[mid - 1] + nums[mid]) / 2

Prompt: Convert this code to C++

Gemini successfully translated the function into clean C++ syntax. I then prompted it to add two additional functions: one for calculating the mean and another for the mode. It appended those correctly to the code block.

As a final step, I asked the model to add test cases to validate all three functions, which it also completed. However, when I attempted to prompt it with “run this code,” Gemini did not respond, highlighting that while it excels at generating code, execution or simulation of compiled code isn’t currently supported within this environment.
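Since the environment can't run the result, it helps to have a reference to check against. Below is my own Python sketch of the three functions with a few test cases. It is not the C++ that Gemini generated; `find_mean` and `find_mode` are names I chose, returning the smallest value on a tie in `find_mode` is an assumption, and unlike the original snippet this `find_median` sorts a copy rather than the caller's list.

```python
from collections import Counter


def find_median(nums):
    """Median of a list, or None if the list is empty."""
    if not nums:
        return None
    ordered = sorted(nums)  # sort a copy so the input list is left untouched
    n = len(ordered)
    mid = n // 2
    if n % 2 == 1:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2


def find_mean(nums):
    """Arithmetic mean of a list, or None if the list is empty."""
    if not nums:
        return None
    return sum(nums) / len(nums)


def find_mode(nums):
    """Most frequent value; ties resolve to the smallest value (assumption)."""
    if not nums:
        return None
    counts = Counter(nums)
    highest = max(counts.values())
    return min(value for value, count in counts.items() if count == highest)


# A few test cases covering odd, even, tied, and empty inputs.
assert find_median([3, 1, 2]) == 2
assert find_median([4, 1, 3, 2]) == 2.5
assert find_mean([1, 2, 3, 4]) == 2.5
assert find_mode([1, 2, 2, 3, 3]) == 2
assert find_median([]) is None
```

Running the same inputs through the generated C++ locally is a quick way to confirm the translation behaves the same.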

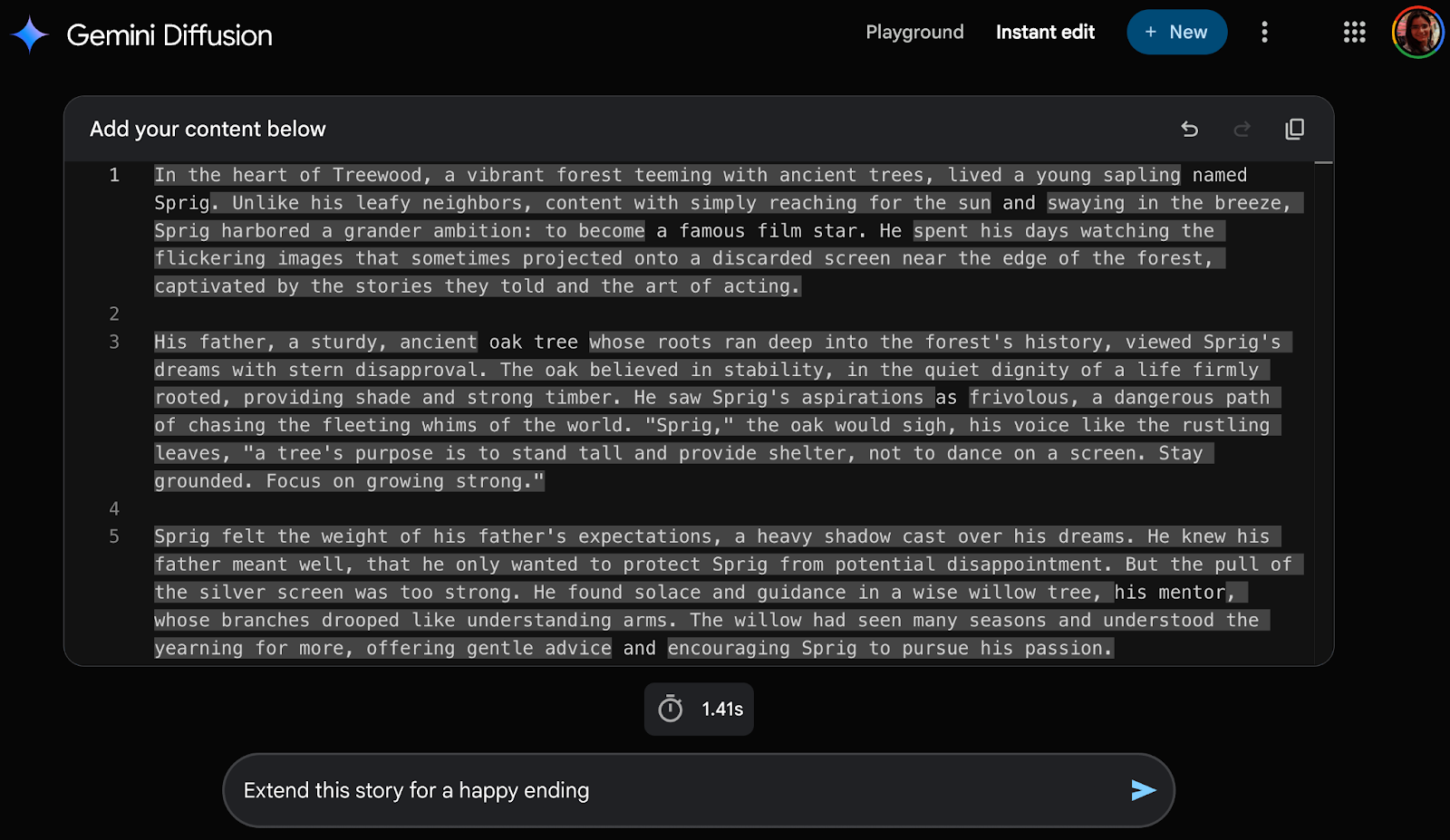

Example 7: Instant Edit With Text

This tool is also great for writing and editing stories. Using Instant Edit, I gave the model a single-line story and asked it to extend it.

Content textbox: Write a story about a happy tree named Sprig who lives in Treewood and dreams of becoming a famous film star.

To deepen the narrative, I then asked the model to add dramatic tension by introducing a disapproving father figure.

Prompt: Add drama to this story by adding a father character who is unhappy with Sprig’s career choice.

The model responded by expanding the story inline, seamlessly weaving in a wise but stern oak tree father who disapproved of Sprig’s theatrical dreams. The edits were highlighted with visual toggles, allowing me to compare the original and updated content.

This example demonstrated how Instant Edit can support incremental storytelling and controlled creative refinement, all while keeping the user in the loop.

Example 8: Xylophone With Audio

For my final test, I prompted Gemini Diffusion to create an interactive xylophone app. The model generated a colorful, well-laid-out set of keys with corresponding sound mappings, event listeners, and hover effects, showcasing its ability to handle interactive audio UIs.

Prompt: Generate a xylophone app where the user can press the keys and it generates sounds. Each note lasts a reasonable time after pressing. Do not use any external assets. Use built-in MIDI sound generation. Lay out the keys as in a real xylophone.

Note: Even though Gemini Diffusion doesn’t support audio or video generation, it was able to simulate realistic audio behavior using MIDI-style tone synthesis within the browser preview.

This highlights the model’s ability to build functional audio interfaces without requiring any external assets or libraries.
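To illustrate what MIDI-style tone synthesis involves, here is a small Python sketch that converts MIDI note numbers to frequencies and produces a decaying sine tone as raw samples. It is not the browser code the model generated. The function names, the 8000 Hz sample rate, and the exponential decay constant are assumptions of mine; the note-to-frequency formula is the standard equal-temperament mapping with A4 (MIDI note 69) at 440 Hz.

```python
import math

# MIDI note numbers for one C major octave, C4 to C5.
XYLOPHONE_NOTES = [60, 62, 64, 65, 67, 69, 71, 72]


def midi_to_hz(note):
    """Equal-temperament frequency for a MIDI note number (A4 = 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


def synth_note(note, duration=0.5, sample_rate=8000, decay=6.0):
    """Return samples of a sine tone whose volume decays exponentially."""
    freq = midi_to_hz(note)
    num_samples = int(duration * sample_rate)
    return [
        math.exp(-decay * i / sample_rate)
        * math.sin(2 * math.pi * freq * i / sample_rate)
        for i in range(num_samples)
    ]


frequencies = [round(midi_to_hz(n), 2) for n in XYLOPHONE_NOTES]
samples = synth_note(60)
```

The decay envelope is what makes each note fade out "after a reasonable time", as the prompt asks, instead of stopping abruptly.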


Why Is Gemini Diffusion Important?

Gemini Diffusion represents a paradigm shift in how we interact with LLMs. Here's why it matters:

- Real-time generation: It’s the fastest text model from Google to date.

- More intelligent editing: It refines and corrects output while generating.

- Rich interactivity: It builds simulations, games, and sound-based apps in-browser.

- Benchmark performance: It scores on par with Gemini 2.0 Flash-Lite on several coding benchmarks, such as HumanEval (89.6%) and MBPP (76%), while being significantly faster.

I found Gemini Diffusion to be quite impressive in terms of its generation speed, coherence, and output quality. Whether it was extending stories, simulating physics, or building interactive tools, the model consistently delivered fast and usable results with minimal prompting.

Of course, there are still areas for improvement. For instance, it currently doesn’t retain chat history, and it occasionally misses the mark for multi-step changes. Also, it may not perform as well as optimized autoregressive models on all tasks and is limited to 200 requests per day per user, but the beta phase shows promise.

Conclusion

Gemini Diffusion is one of the most exciting tools I’ve explored this year. By adopting a diffusion approach for text, Google has built a model that’s faster and more interactive than previous small-to-mid-scale models, though not yet as general-purpose as Gemini 2.5 Pro.

In this hands-on walkthrough, I highlighted how Gemini Diffusion can elevate your workflow, whether you're prototyping UI components, creating educational demos, or rapidly iterating on creative concepts.

As Gemini Diffusion evolves, I anticipate deeper integrations with developer tools, creative coding environments, and browser-based IDEs, making it a versatile companion for designers, engineers, and educators alike.


I am a Google Developers Expert in ML (Gen AI), a Kaggle 3x Expert, and a Women Techmakers Ambassador with 3+ years of experience in tech. I co-founded a health-tech startup in 2020 and am pursuing a master's in computer science at Georgia Tech, specializing in machine learning.