
This year was predicted to be the year of agentic AI, but to me, it feels more like the year of reasoning models. Google has just introduced Gemini 2.0 Flash Thinking Experimental to Gemini app users, expanding access beyond its previous availability in Google AI Studio, the Gemini API, and Vertex AI.

The 2.0 Flash Thinking model is designed to compete with other reasoning models from OpenAI’s o-series and DeepSeek’s R-series.

Rather than just providing answers, Flash Thinking focuses on showing its thought process—breaking down steps, evaluating alternatives, and making reasoning more transparent. My first impression is that the chat-based version of Gemini 2.0 Flash Thinking is noticeably faster than its OpenAI and DeepSeek counterparts.

In this blog, I’ll cover what Gemini 2.0 Flash Thinking Experimental is, how you can use it, its benchmarks, and how and why to use it alongside tools like Search, Maps, and YouTube.

If you want to get more hands-on with Gemini 2.0, I recommend this video by my colleague Adel Nehme:

What Is Gemini 2.0 Flash Thinking Experimental?

Google has introduced Gemini 2.0 Flash Thinking Experimental as a new AI model designed for advanced reasoning. Initially available through Google AI Studio, the Gemini API, and Vertex AI, it has now been rolled out to Gemini app users as well.

Unlike standard language models that prioritize generating fluent responses, Flash Thinking aims to break down its thought process, showing step-by-step reasoning, evaluating multiple options, and explaining its conclusions in a more structured way.

Gemini 2.0 Flash Thinking Experimental is a multimodal AI model, meaning it can process both text and images as input. This means we can use it for tasks that require visual context, such as interpreting diagrams, analyzing charts, or extracting insights from complex documents.

However, unlike some other multimodal models, Flash Thinking only produces text-based outputs, meaning it won’t generate images or visual data in response.
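To make the text-plus-image input concrete, here is a minimal sketch of how a request body with one prompt and one inline image could be assembled for the Gemini API's `generateContent` method. The helper name `build_multimodal_payload` is hypothetical, and the image bytes are a placeholder rather than a real chart; the sketch only builds the payload and does not send it.

```python
import base64
import json


def build_multimodal_payload(prompt: str, image_bytes: bytes,
                             mime_type: str = "image/png") -> dict:
    """Build a generateContent-style body with one text part and one inline image.

    Hypothetical helper for illustration; it does not contact the API.
    """
    # Inline image data is sent as base64-encoded text.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "contents": [
            {
                "parts": [
                    {"text": prompt},
                    {"inline_data": {"mime_type": mime_type, "data": encoded}},
                ]
            }
        ]
    }


if __name__ == "__main__":
    placeholder = b"\x89PNG\r\n\x1a\n"  # placeholder bytes, not a real image
    payload = build_multimodal_payload("Summarize the trend in this chart.", placeholder)
    print(json.dumps(payload, indent=2))
```

Whatever goes into the request, the response comes back as text only, so a chart question like the one above would be answered in prose.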

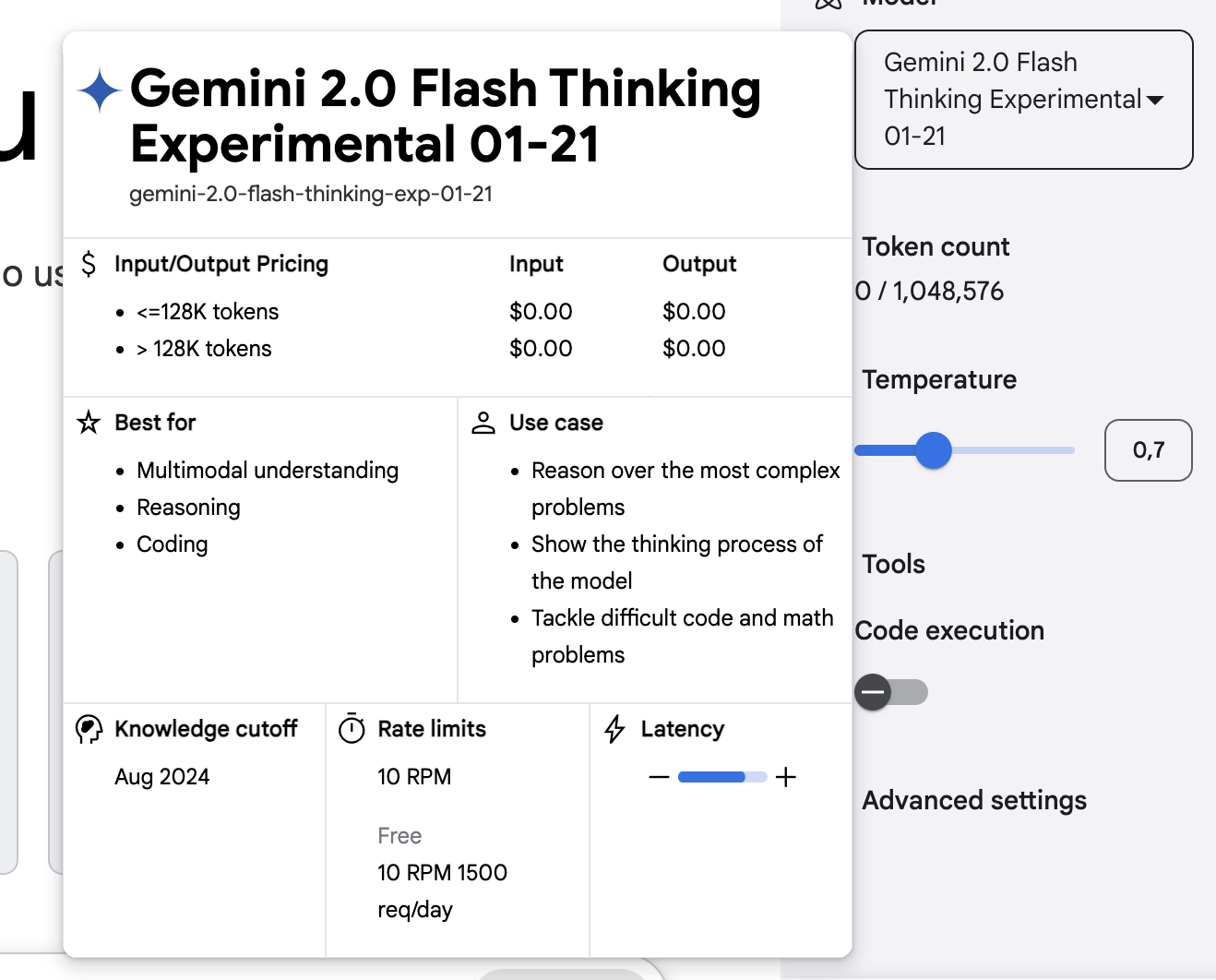

One of its standout features is its massive context window, supporting up to 1 million tokens for input and generating responses of up to 64,000 tokens. This makes it one of the most expansive AI models available for long-form reasoning, allowing it to analyze entire books, research papers, or extended conversations while maintaining coherence.

The large token limit ensures the model can track complex arguments over extended interactions, reducing the need for users to repeatedly reintroduce context.
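To get a feel for what a 1 million token input limit means in practice, here is a rough back-of-the-envelope sketch. It assumes about four characters per token, which is only a common rule of thumb for English text and not the model's actual tokenizer, so treat the numbers as order-of-magnitude estimates. The function names are hypothetical.

```python
INPUT_TOKEN_LIMIT = 1_000_000   # input limit stated in this article
OUTPUT_TOKEN_LIMIT = 64_000     # output limit stated in this article
CHARS_PER_TOKEN = 4             # assumption: rough heuristic, not the real tokenizer


def estimate_tokens(text: str) -> int:
    """Very rough token estimate using ceiling division on character count."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, limit: int = INPUT_TOKEN_LIMIT) -> bool:
    """Return True if the estimated token count is within the given limit."""
    return estimate_tokens(text) <= limit


if __name__ == "__main__":
    # Assumption: a 300-page book at roughly 2,000 characters per page.
    book = "a" * (300 * 2_000)
    print(estimate_tokens(book))   # 150000
    print(fits_in_context(book))   # True
```

Under these assumptions, a full-length book uses well under a fifth of the input window, which is why multi-document prompts are plausible with this model.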

One key limitation to keep in mind is that Flash Thinking has a knowledge cutoff of June 2024. This means it does not have built-in knowledge of events beyond that point.

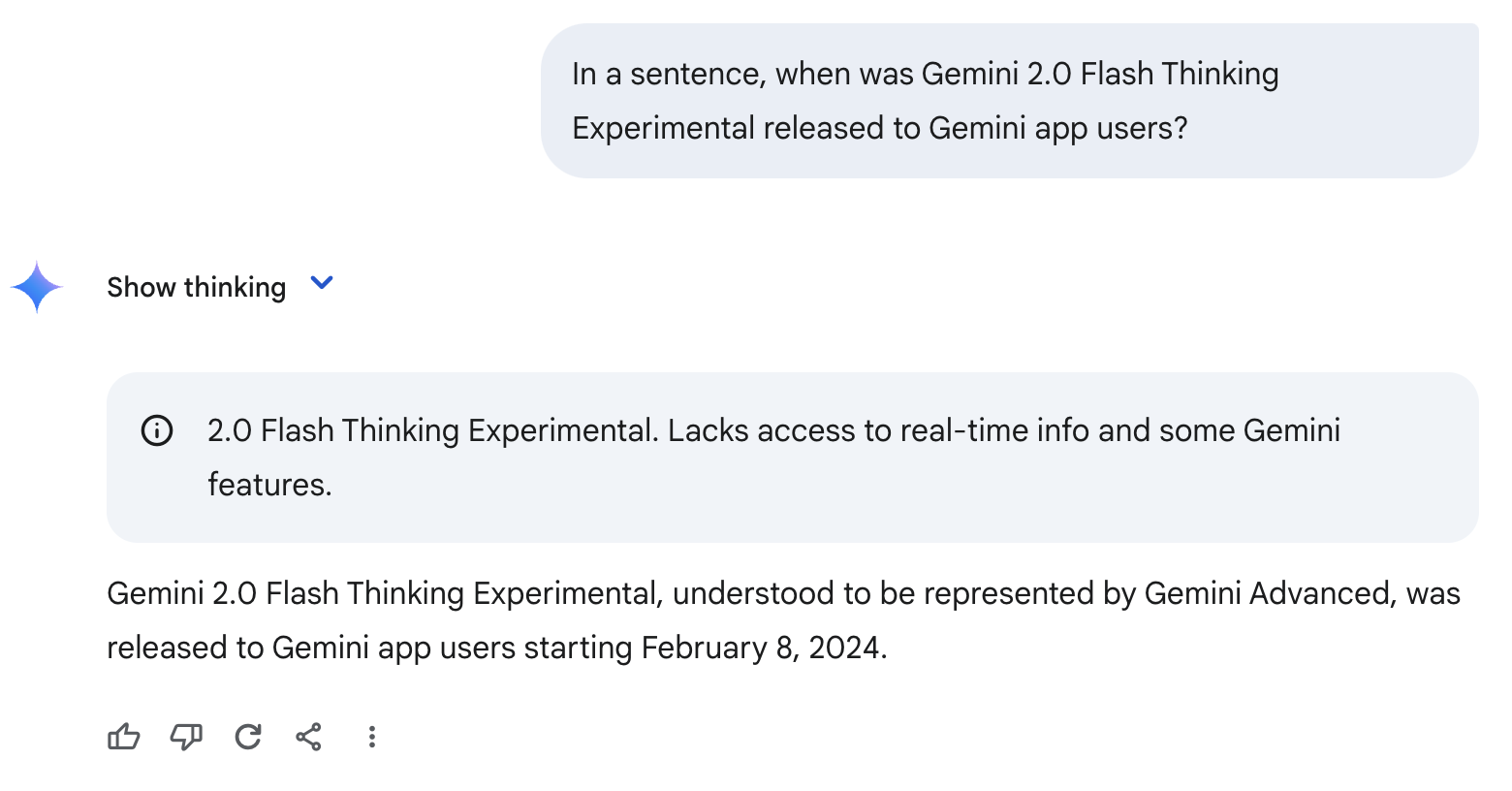

For this reason, it can hallucinate or make wrong assumptions, like in the example below. Because it has never heard about Gemini 2.0 Flash Thinking Experimental, it wrongly assumes that this model is the equivalent of Gemini Advanced and outputs a wrong date.

Gemini 2.0 Flash Thinking Experimental With Apps

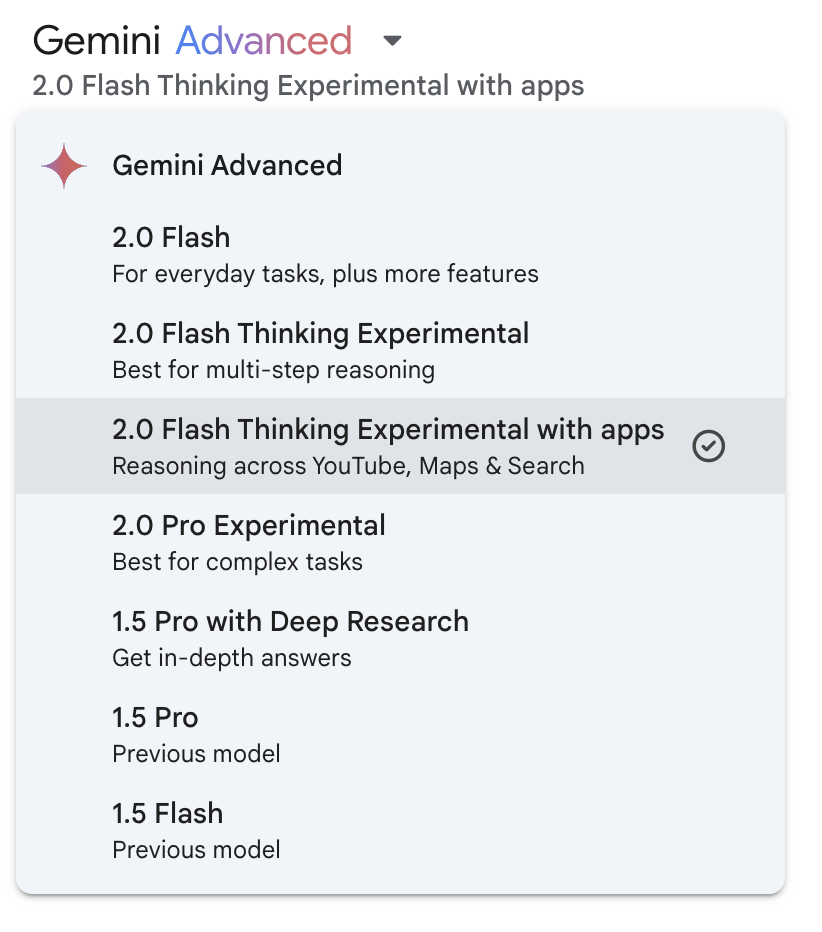

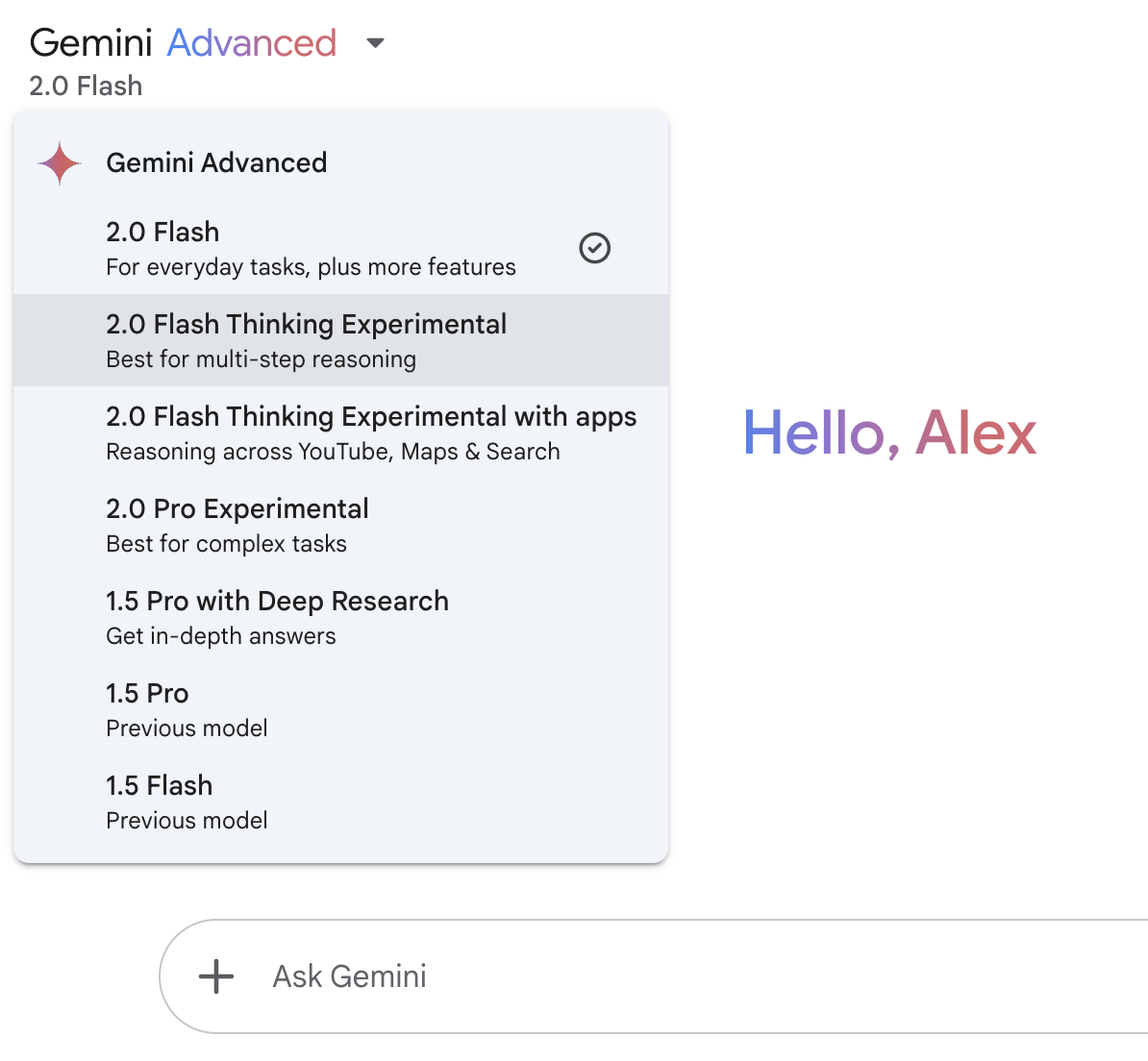

To address the knowledge cutoff mentioned above, Google has integrated YouTube, Maps, and Search functionality into Gemini 2.0 Flash Thinking Experimental. You can enable this functionality from the model drop-down menu:

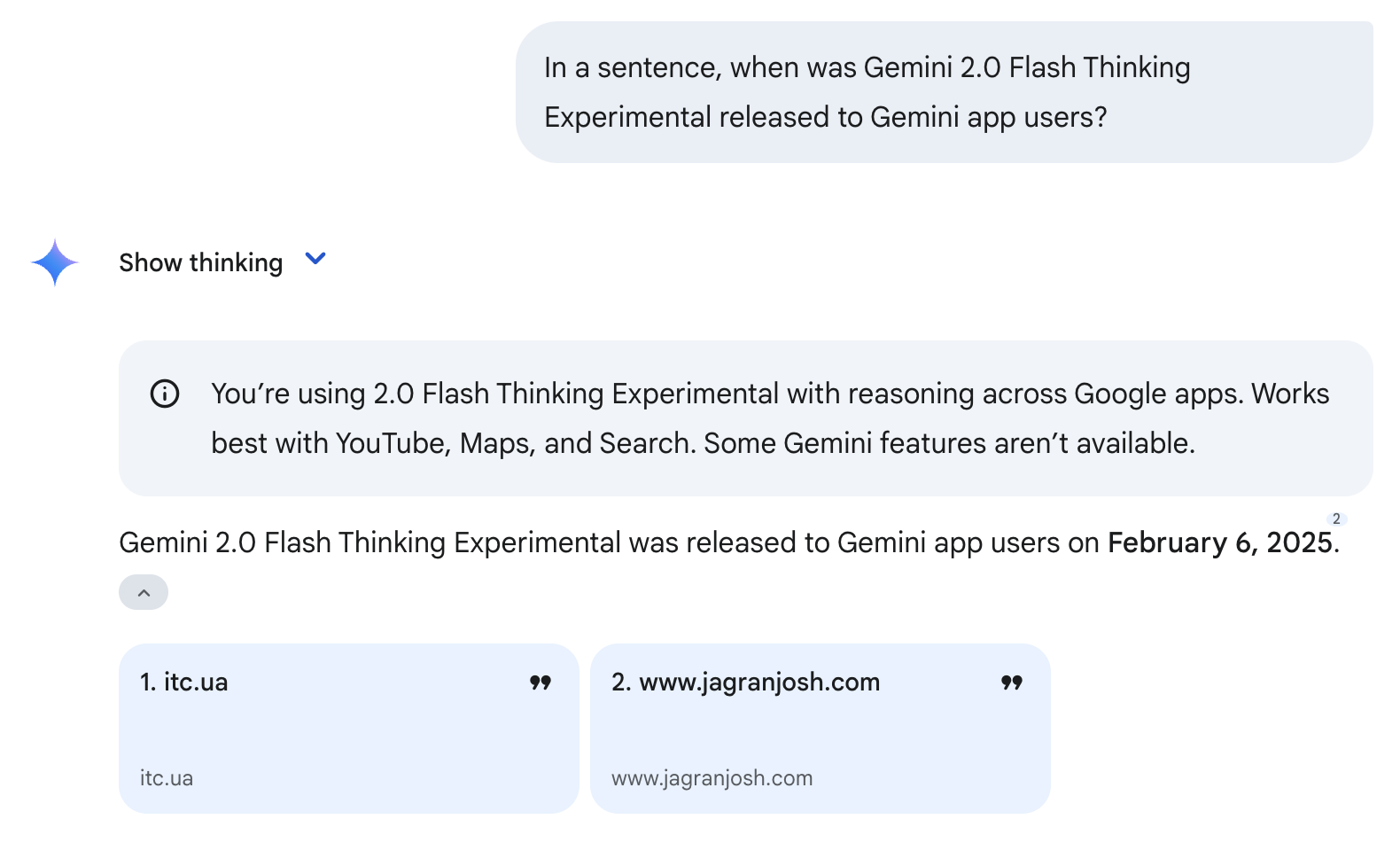

Note that using these tools doesn’t guarantee a correct answer. For instance, it still got the release date wrong, but it was much closer this time—the correct release date is February 5, 2025 (see example below). This happened because the model used the Search tool and sourced its information from two cited websites. Since these sites were published on February 6, 2025, the model wrongly inferred that was the release date of Gemini 2.0 Flash Thinking Experimental.

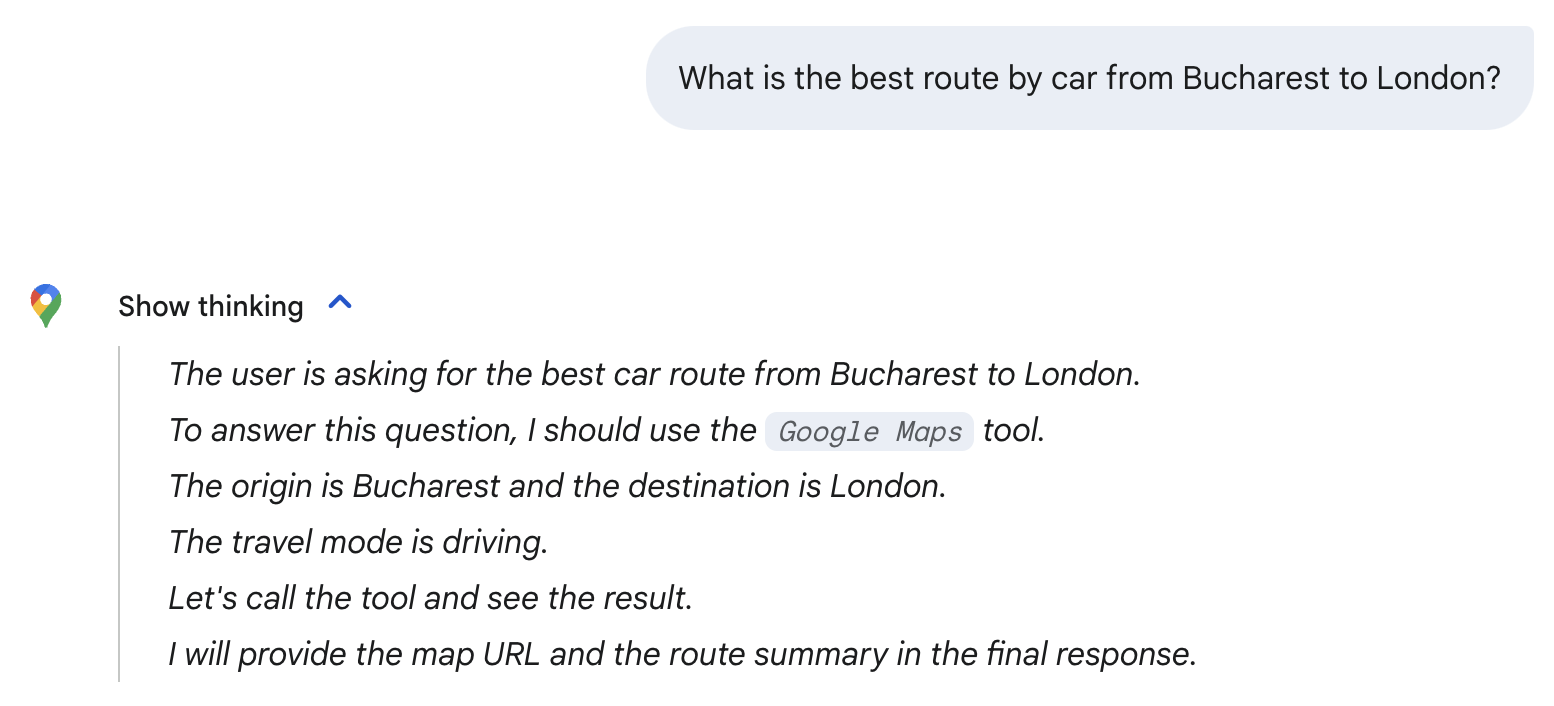

The model automatically selects the tools it needs based on the prompt. For instance, when I asked for the best driving route from Bucharest to London, the model automatically chose the Google Maps tool. Notice the Google Maps icon below and the mention of the Google Maps tool in its reasoning process.

Note that I’m only using these trivial questions to explore the model—for the question above, the simple 2.0 Flash model is enough. As we’ll see in the section below, Gemini 2.0 Flash Thinking Experimental is best used for advanced math, science, and multimodal reasoning.

Benchmarks: Gemini 2.0 Flash Thinking Experimental

Google’s Gemini 2.0 Flash Thinking Experimental has shown significant improvements in reasoning-based tasks, outperforming its predecessors in multiple key benchmarks (with a focus on mathematics, science, and multimodal reasoning).

| Benchmark | Gemini 1.5 Pro 002 | Gemini 2.0 Flash Exp | Gemini 2.0 Flash Thinking Exp 01-21 |
| --- | --- | --- | --- |
| AIME2024 (Math) | 19.3% | 35.5% | 73.3% |
| GPQA Diamond (Science) | 57.6% | 58.6% | 74.2% |
| MMMU (Multimodal reasoning) | 64.9% | 70.7% | 75.4% |

Source: Google

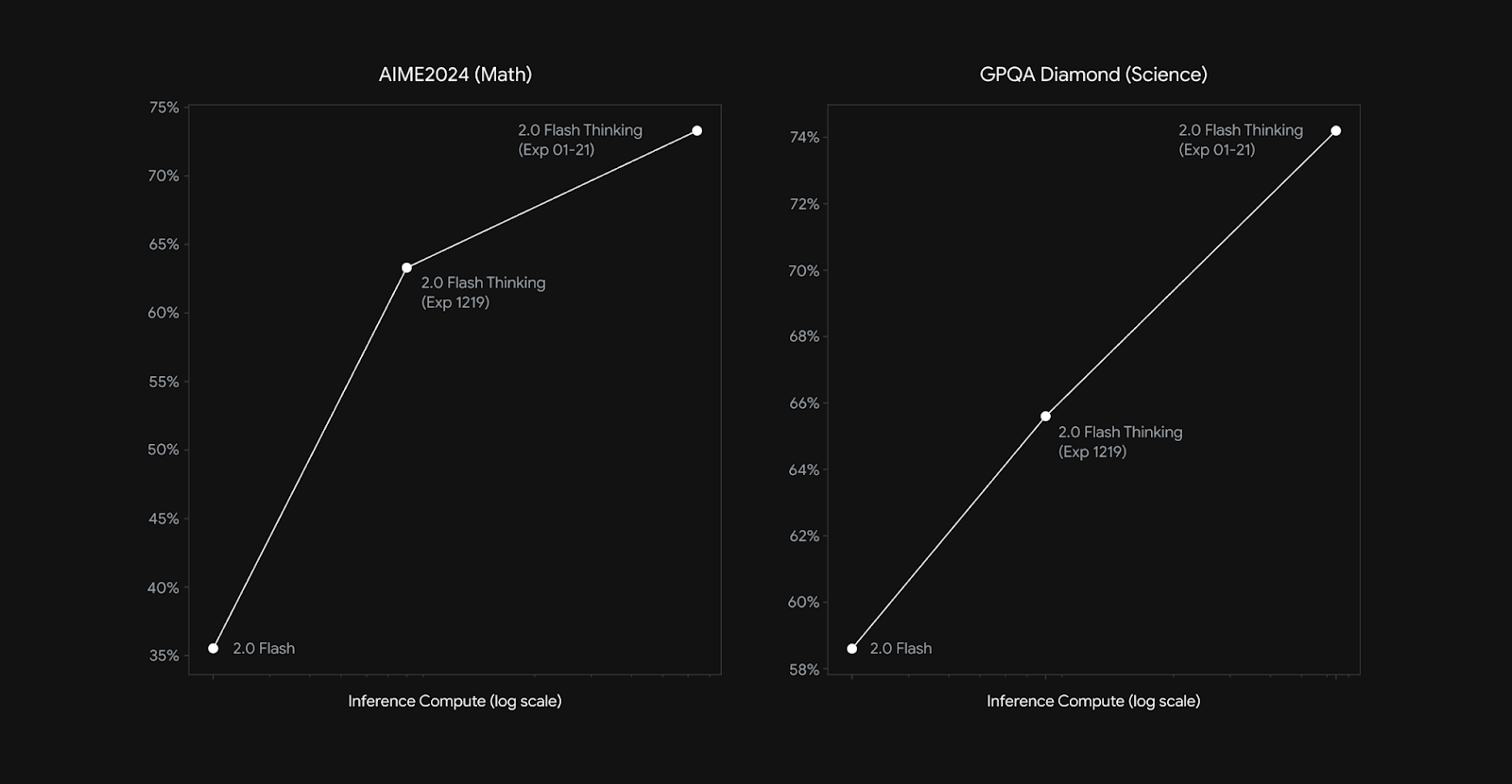

On the AIME2024 (math) benchmark, Gemini 2.0 Flash Thinking Experimental achieved 73.3%, a large increase from the 35.5% score of Gemini 2.0 Flash Experimental and the 19.3% of Gemini 1.5 Pro. For comparison, OpenAI’s o3-mini (high) scored 87.3% on this benchmark.

Similarly, on GPQA Diamond (science), the model reached 74.2%, up from the 58.6% of Gemini 2.0 Flash Experimental. For comparison, DeepSeek-R1 scored 71.5% (pass@1, meaning the score on the first attempt) on this benchmark, while OpenAI’s o1 scored 75.7% (pass@1), and o3-mini (high) scored 79.7%.

In MMMU (multimodal reasoning), which evaluates the model’s ability to integrate and interpret information across multiple modalities, Gemini 2.0 Flash Thinking Experimental scored 75.4%, again surpassing its earlier iterations.
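To put the size of these jumps side by side, the short sketch below computes the gain in percentage points for each benchmark, using only the Google-reported scores from the table in this section. The function name `point_gains` is my own.

```python
# Scores from the table in this section:
# (Gemini 1.5 Pro 002, Gemini 2.0 Flash Exp, Gemini 2.0 Flash Thinking Exp 01-21)
scores = {
    "AIME2024 (Math)": (19.3, 35.5, 73.3),
    "GPQA Diamond (Science)": (57.6, 58.6, 74.2),
    "MMMU (Multimodal reasoning)": (64.9, 70.7, 75.4),
}


def point_gains(row):
    """Return Flash Thinking's gain over 2.0 Flash Exp and over 1.5 Pro, in points."""
    pro, flash, thinking = row
    return round(thinking - flash, 1), round(thinking - pro, 1)


if __name__ == "__main__":
    for name, row in scores.items():
        vs_flash, vs_pro = point_gains(row)
        print(f"{name}: +{vs_flash} pts vs 2.0 Flash Exp, +{vs_pro} pts vs 1.5 Pro")
```

The gap is widest on math (+37.8 points over 2.0 Flash Experimental) and narrowest on multimodal reasoning (+4.7 points), which matches the idea that extra reasoning helps most on multi-step problems.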

Just as we’ve seen with other reasoning models, 2.0 Flash Thinking’s reasoning capabilities scale with increased inference compute (see graph below). Inference compute refers to the amount of computational power used after you give your prompt to the model.

Source: Google

How to Access Gemini 2.0 Flash Thinking Experimental

Google has made Gemini 2.0 Flash Thinking Experimental available through multiple platforms, including direct access for Gemini users.

Gemini Chat (Gemini App & Web)

The easiest way to access Gemini 2.0 Flash Thinking is through Google’s Gemini chat interface, available both in the Gemini web app and the Gemini mobile app.

At the time of publishing this article, access to 2.0 Flash Thinking is available for free to all users.

Use the drop-down menu in the upper left to select the model.

Google AI Studio

Google AI Studio is another way to use Flash Thinking. This web-based platform is aimed at more advanced users: it lets you experiment with the model’s reasoning capabilities, control parameters like temperature, test complex queries, and explore structured responses.

Gemini API

For developers looking to integrate Flash Thinking into their applications, Gemini 2.0 Flash Thinking is available via the Gemini API.
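As a rough illustration, here is a minimal sketch of calling the model over the Gemini API's REST interface using only the Python standard library. The model ID below is an assumption based on the "01-21" release named in the benchmark table, and experimental model IDs can be renamed or retired, so check the current model list before relying on it. The helper names `build_request` and `ask`, the temperature value, and the `GEMINI_API_KEY` environment variable are likewise my own choices.

```python
import json
import os
import urllib.request

# Assumption: model ID for the 01-21 experimental release; it may no longer be served.
MODEL_ID = "gemini-2.0-flash-thinking-exp-01-21"
API_ROOT = "https://generativelanguage.googleapis.com/v1beta"


def build_request(prompt, api_key, model=MODEL_ID, temperature=0.7):
    """Build (but do not send) a generateContent request for a text prompt."""
    url = f"{API_ROOT}/models/{model}:generateContent"
    body = {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {"temperature": temperature},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def ask(prompt, api_key):
    """Send the prompt and join the text parts of the first candidate."""
    with urllib.request.urlopen(build_request(prompt, api_key), timeout=60) as resp:
        data = json.load(resp)
    parts = data["candidates"][0]["content"]["parts"]
    return "".join(part.get("text", "") for part in parts)


if __name__ == "__main__":
    key = os.environ.get("GEMINI_API_KEY")  # hypothetical variable name
    if key:
        print(ask("How many primes are there below 50? Explain briefly.", key))
    else:
        print("Set GEMINI_API_KEY to send a real request.")
```

Keeping request construction separate from sending makes it easy to inspect the payload before spending any quota. In a real application, Google's official SDKs are likely the more convenient route.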

Conclusion

Gemini 2.0 Flash Thinking Experimental expands Google’s push into AI reasoning and is now available to Gemini app users. It stands out by explaining its thought process, structuring responses step by step, and using tools like Search, YouTube, and Maps.

While promising, Flash Thinking still faces challenges like occasional inaccuracies and over-reliance on sources. With OpenAI and DeepSeek also advancing reasoning models, it will be interesting to see how competition in this space evolves. I also recommend checking out our guide to the new Gemini 3 model.

FAQs

Can I fine-tune Gemini 2.0 Flash Thinking for my own use case?

No, Google has not yet provided fine-tuning options for Flash Thinking.

Can Gemini 2.0 Flash Thinking generate images?

No, while the model processes text and images as inputs, it only generates text-based responses. It does not produce visual content like images or diagrams.

What kind of questions should I use Flash Thinking for?

It excels at complex reasoning tasks, such as mathematical problem-solving, scientific analysis, and multimodal data interpretation. Day-to-day queries may not require Flash Thinking and can be handled by standard Gemini models.
