
In collaboration with several academic researchers and non-profit organizations, Stability AI, a startup founded in 2019, developed the Stable Diffusion model, which was first released in 2022.

Stable Diffusion is an open-source deep learning model designed to generate high-quality, detailed images from text descriptions. It can also modify existing images or enhance low-resolution images using text inputs. The model has continued to evolve, with the latest advancements improving its performance and capabilities.

In this article, we will explore how Stable Diffusion works and cover various methods for running it.

What is Stable Diffusion?

Stable Diffusion is an open-source deep learning model developed by Stability AI and first released in 2022. It generates detailed images from text descriptions, and it can also modify existing images or enhance low-resolution images under the guidance of a text prompt.

Initially trained on a vast dataset of 2.3 billion images, Stable Diffusion leverages the principles of generative modeling and diffusion processes. This allows it to create new, realistic images by learning patterns and structures from the training data. Its capabilities are comparable to other state-of-the-art models, making it a powerful tool for a wide range of applications in image generation and manipulation.

In February 2024, Stability AI announced Stable Diffusion 3 in an early preview, showcasing greatly improved performance, especially in multi-subject prompts, image quality, and spelling. The Stable Diffusion 3 suite ranges from 800 million to 8 billion parameters, so that users can trade off scalability against quality for different creative needs. In June 2024, Stability AI released Stable Diffusion 3 Medium, a 2 billion parameter model that offers strong detail, color, and photorealism while running efficiently on standard consumer GPUs.

Stable Diffusion 3 incorporates a new Multimodal Diffusion Transformer (MMDiT) architecture, which uses separate sets of weights for image and language representations. This innovation enhances text understanding and spelling capabilities compared to previous versions of the model. Based on human preference evaluations, Stable Diffusion 3 outperforms other leading text-to-image generation systems such as DALL·E 3, Midjourney v6, and Ideogram v1 in typography and prompt adherence.

Stability AI has published a comprehensive research paper detailing the underlying technology of Stable Diffusion 3, highlighting its advancements and superior performance. These enhancements make Stable Diffusion 3 a powerful tool for generating high-quality images from textual descriptions, with notable improvements in handling complex prompts and producing realistic outputs.

How Does Stable Diffusion Work?

Stable Diffusion belongs to a class of deep learning models known as diffusion models, which are themselves a kind of generative model. Generative models are designed to produce new data that resembles the data they were trained on, which lets them create realistic outputs based on learned patterns and structures.

Diffusion models are inspired by the concept of diffusion in physics, where particles spread out from areas of high concentration to low concentration over time. In the context of deep learning, diffusion models simulate this process in a high-dimensional data space. The model starts with random noise and iteratively refines this noise through a series of steps to generate coherent and high-quality images.

Generative modeling, a type of unsupervised learning, involves training models to discover and learn the patterns in input data automatically. Once trained, these models can generate new examples that resemble the original data. This ability makes generative models particularly useful for tasks such as image synthesis, data augmentation, and more.

If you'd like to know more about these models, consider taking our Deep Learning in Python course track.

The Diffusion Process

The diffusion process in Stable Diffusion involves two main phases: the forward diffusion process and the reverse denoising process.

1. Forward diffusion process:

This phase gradually adds noise to the training data (images) over many steps until the images become pure noise. The amount of noise added at each step follows a known schedule, which is what makes it possible to train a model to undo the process.

2. Reverse denoising process:

During this phase, the model learns to reverse the noise addition process. Starting from random noise, the model iteratively denoises the image through multiple steps, gradually reconstructing a coherent and high-quality image. This reverse process is guided by the patterns and structures learned from the training data.
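To make the two phases concrete, here is a minimal sketch in plain Python. It is a toy illustration, not Stable Diffusion's actual code: the linear noise schedule, the step count of 1000, and all function names are assumptions chosen for the example, and the "image" is just four numbers. The reverse step here uses the true noise, whereas a trained model would have to predict it.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances rising linearly (illustrative values)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_signal(betas):
    """alpha_bar[t]: share of the original signal variance left after step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, alpha_bar_t, rng):
    """Forward process in closed form: x_t = sqrt(a) * x0 + sqrt(1 - a) * noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar_t) * x + math.sqrt(1.0 - alpha_bar_t) * n
          for x, n in zip(x0, noise)]
    return xt, noise

def remove_noise(xt, noise, alpha_bar_t):
    """Invert add_noise when the noise is known; a trained model would predict it."""
    return [(x - math.sqrt(1.0 - alpha_bar_t) * n) / math.sqrt(alpha_bar_t)
            for x, n in zip(xt, noise)]

rng = random.Random(0)
pixels = [0.1, 0.5, 0.9, -0.3]            # stand-in for image values
alpha_bars = cumulative_signal(linear_beta_schedule(1000))

noisy, noise = add_noise(pixels, alpha_bars[499], rng)   # halfway through the schedule
recovered = remove_noise(noisy, noise, alpha_bars[499])
```

By the last step almost none of the original signal remains, which is why generation can start from pure random noise.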

Multimodal Diffusion Transformer (MMDiT) Architecture

Stable Diffusion 3 introduces a new architecture known as the Multimodal Diffusion Transformer (MMDiT). This architecture uses separate sets of weights for image and language representations, which improves the model's ability to interpret text prompts and to render legible text inside images. By processing image and text information along distinct pathways, MMDiT improves the coherence and accuracy of generated images, particularly for complex prompts and typography.
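The idea of separate weights per modality can be sketched with a toy attention step in plain Python. This is a simplified illustration under our own assumptions, not the Stable Diffusion 3 implementation: the token counts, the dimension of 4, the random weights, and every function name are hypothetical. Each modality is projected with its own weights, and the two sequences are then joined so that attention runs over both.

```python
import math
import random

def make_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def project(tokens, weight):
    """Multiply each token (a list of floats) by a weight matrix."""
    return [[sum(t[i] * weight[i][j] for i in range(len(t)))
             for j in range(len(weight[0]))] for t in tokens]

def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def joint_attention(image_tokens, text_tokens, image_weights, text_weights):
    """Project each modality with its own weights, then attend over both together."""
    queries, keys, values = [], [], []
    for tokens, weights in ((image_tokens, image_weights), (text_tokens, text_weights)):
        queries += project(tokens, weights["q"])
        keys += project(tokens, weights["k"])
        values += project(tokens, weights["v"])
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        attn = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in keys])
        outputs.append([sum(w * v[j] for w, v in zip(attn, values))
                        for j in range(len(values[0]))])
    n_img = len(image_tokens)
    return outputs[:n_img], outputs[n_img:]

rng = random.Random(0)
dim = 4
image_weights = {name: make_matrix(dim, dim, rng) for name in "qkv"}
text_weights = {name: make_matrix(dim, dim, rng) for name in "qkv"}
image_tokens = make_matrix(6, dim, rng)   # 6 hypothetical image patches
text_tokens = make_matrix(3, dim, rng)    # 3 hypothetical prompt tokens

image_out, text_out = joint_attention(image_tokens, text_tokens,
                                      image_weights, text_weights)
```

Because every image token attends to the text tokens as well, changing the prompt changes the image representation, even though the two modalities never share projection weights.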

Stable Diffusion Practical Applications

Stable Diffusion can be used for a variety of practical applications, including:

- Image generation: Creating new images from textual descriptions.

- Image modification: Altering existing images based on text prompts.

- Image enhancement: Improving the quality of low-resolution images.

These capabilities make Stable Diffusion a powerful tool for artists, designers, researchers, and anyone interested in exploring the potential of generative AI.


How To Run Stable Diffusion Online

If you want to start using the Stable Diffusion model immediately, you can run it online with the following tools.

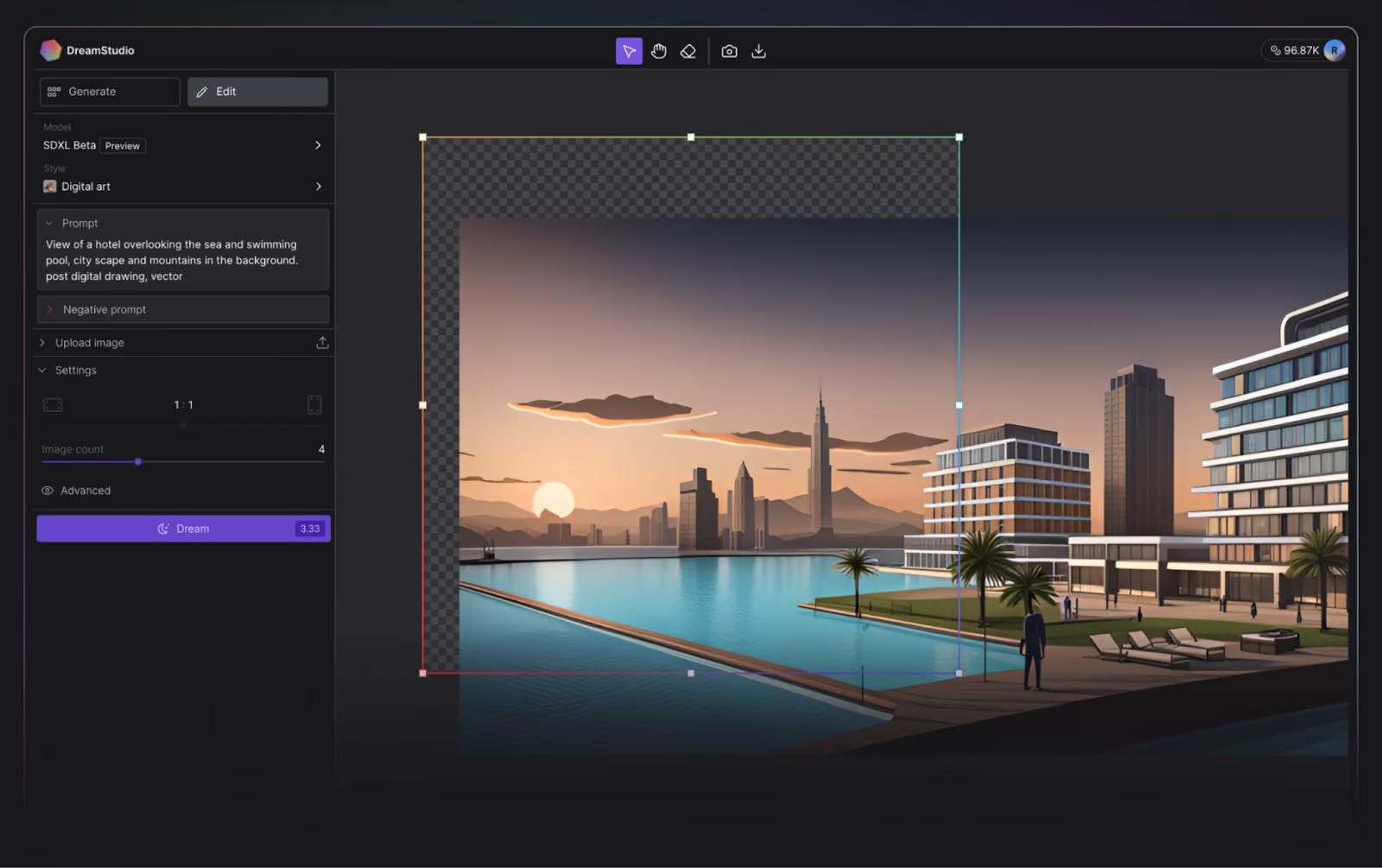

1. DreamStudio

Stability AI, the creators of Stable Diffusion, have made it extremely simple for curious parties to test their text-to-image model with their online tool, DreamStudio.

DreamStudio gives users access to the latest versions of the Stable Diffusion models and can generate an image in 15 seconds or less.

DreamStudio user interface. Image source: DreamStudio.

At the time of writing, new users receive 100 free credits to try out DreamStudio, which is enough for 500 images using the default settings! Additional credits can be purchased within the application for $10.00 per 1000 credits.
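The pricing above implies a per-image cost, which a few lines of Python can work out. The figures are the ones quoted in this tutorial and may have changed since; the function name is ours, and the result only holds for the default settings.

```python
FREE_CREDITS = 100               # free credits quoted above
IMAGES_FROM_FREE = 500           # images those credits cover at default settings
PRICE_PER_1000_CREDITS = 10.00   # US dollars

credits_per_image = FREE_CREDITS / IMAGES_FROM_FREE       # credits used per image
price_per_credit = PRICE_PER_1000_CREDITS / 1000          # dollars per credit
price_per_image = credits_per_image * price_per_credit    # dollars per image

def images_for_budget(dollars):
    """Approximate number of default-settings images a budget buys."""
    return round(dollars / price_per_image)
```

In other words, each default image uses about 0.2 credits, so a $10.00 purchase covers roughly 5000 images.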

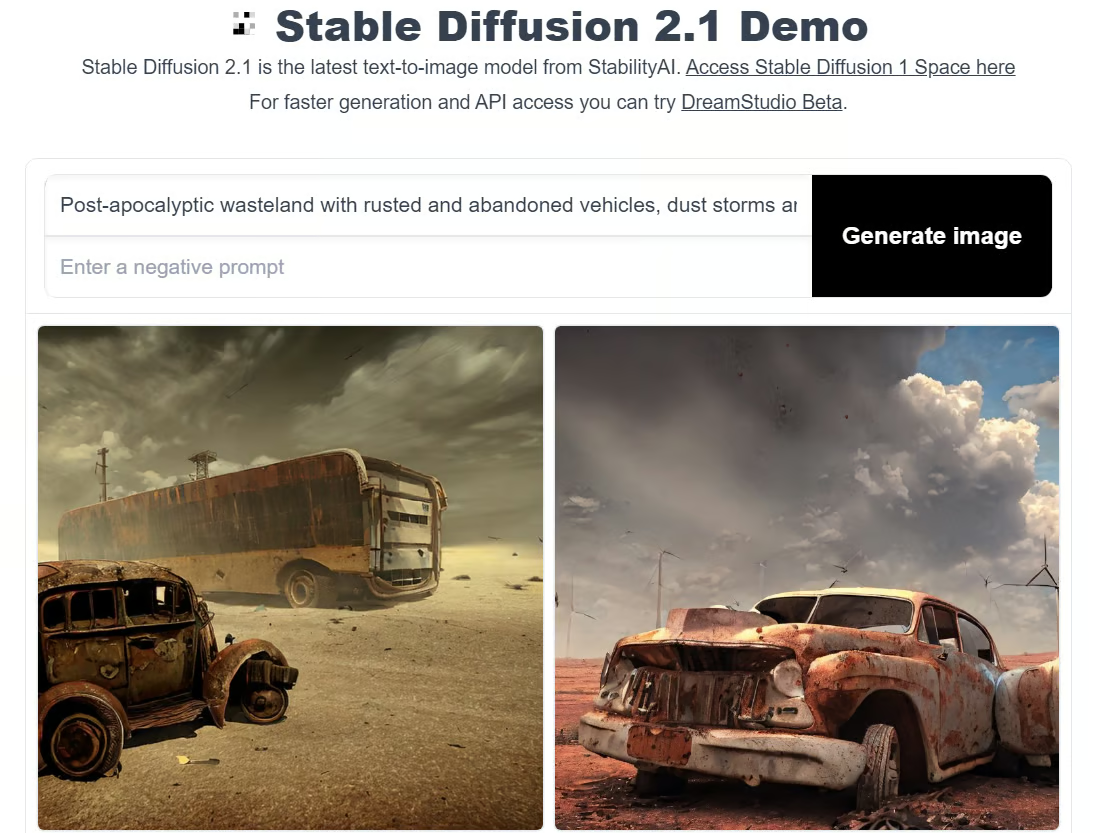

2. Hugging Face

Hugging Face is an AI community and platform that promotes open-source contributions. Though it’s best known for its transformer models, Hugging Face also provides access to the latest Stable Diffusion model, and like a true lover of open-source, it’s free.

To run Stable Diffusion on Hugging Face, you can try one of the demos, such as the Stable Diffusion 2.1 demo.

The tradeoff with Hugging Face is that you can’t customize properties as you can in DreamStudio, and it takes noticeably longer to generate an image.

Stable Diffusion demo in Hugging Face. Image by author.

How to Run Stable Diffusion Locally

But what if you want to experiment with Stable Diffusion on your local computer? We’ve got you covered.

Running Stable Diffusion locally lets you experiment with various text inputs to generate images that are more tailored to your requirements. You can also fine-tune the model on your own data to improve the results for your use case.

Disclaimer: You need a GPU to run Stable Diffusion locally at a practical speed.

Step 1: Install Python and Git

To run Stable Diffusion on your local computer, you will need Python 3.10.6. This can be installed from the official Python website. If you get stuck, check out our How to Install Python tutorial.

Check that the installation worked by opening the command prompt and running python --version. This should print the version of Python you’re using.

Disclaimer: The recommended version to run Stable Diffusion is Python 3.10.6. We recommend not proceeding without this version to avoid problems.
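If you prefer to check the version from inside Python, a small script along these lines can do it. This is just a convenience sketch: the function name and the three status labels are our own, and only the 3.10.6 recommendation comes from this tutorial.

```python
import sys

RECOMMENDED = (3, 10, 6)  # version recommended in this tutorial

def version_status(current=None):
    """Compare a (major, minor, micro) tuple against the recommended version."""
    current = tuple(current or sys.version_info[:3])
    if current == RECOMMENDED:
        return "ok"
    if current[:2] == RECOMMENDED[:2]:
        return "same minor release"
    return "different release"

# Report the interpreter that is running this script.
print(".".join(map(str, sys.version_info[:3])), "->", version_status())
```

Run it with the same python command you plan to use for the web UI, since several Python installations can coexist on one machine.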

Next, you must install the code repository management system Git. The Git Install Tutorial can help, and our Introduction to Git course can deepen your knowledge of Git.

Step 2: Create a GitHub and Hugging Face account

GitHub is a hosting service for software projects, where developers store their code, track changes, and collaborate with other developers. If you don’t have a GitHub account yet, now is a good time to create one. Check out GitHub and Git Tutorial for Beginners for assistance.

Hugging Face, on the other hand, is an AI community that advocates for open-source contributions. It is the hub for several AI models from various domains, including natural language processing, computer vision, and more. You’ll need an account to download the latest version of Stable Diffusion. We will get to this step later.

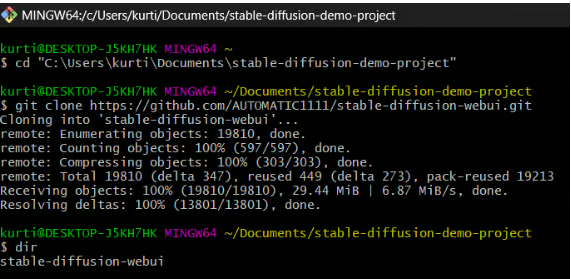

Step 3: Clone Stable Diffusion Web-UI

In this step, you will download the Stable Diffusion Web-UI to your local computer. While it's helpful to create a dedicated folder (e.g., stable-diffusion-demo-project) for this purpose, it's not mandatory.

1. Open Git Bash:

- Make sure you have Git Bash installed on your computer.

2. Navigate to your desired folder:

- Open Git Bash and use the cd command to navigate to the folder where you want to clone the Stable Diffusion Web-UI. For example:

cd path/to/your/folder

3. Clone the repository:

- Run the following command to clone the Stable Diffusion Web-UI repository:

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git

4. Verify the clone:

- If the command executes successfully, you should see a new folder named stable-diffusion-webui in your chosen directory.
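The clone and verify steps can also be scripted. The sketch below wraps the same git clone command with Python's subprocess module; the helper names are hypothetical, and it assumes Git is installed and on your PATH.

```python
import subprocess
from pathlib import Path

REPO_URL = "https://github.com/AUTOMATIC1111/stable-diffusion-webui.git"

def clone_command(repo_url=REPO_URL):
    """Build the same git command shown above as an argument list."""
    return ["git", "clone", repo_url]

def expected_folder(parent, repo_url=REPO_URL):
    """Folder git creates by default: the last URL segment without '.git'."""
    name = repo_url.rstrip("/").rsplit("/", 1)[-1]
    if name.endswith(".git"):
        name = name[: -len(".git")]
    return Path(parent) / name

def clone_and_verify(parent):
    """Run the clone inside `parent` and report whether the folder now exists."""
    subprocess.run(clone_command(), cwd=parent, check=True)
    return expected_folder(parent).is_dir()
```

Calling clone_and_verify("path/to/your/folder") would download the repository and return True once the stable-diffusion-webui folder is present.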

Note: You can find more specific instructions for your hardware and operating system in the Stable Diffusion web UI GitHub repository.

Step 4: Download the latest Stable Diffusion model

1. Log in to Hugging Face:

- Access your Hugging Face account.

2. Download the Stable Diffusion model:

- Find and download the Stable Diffusion model you wish to run from Hugging Face. These files are large, so the download may take a few minutes.

3. Locate the model folder:

- Navigate to the following folder on your computer:

stable-diffusion-webui\models\Stable-diffusion

4. Move the downloaded model:

- In the Stable-diffusion folder, you will see a text file named Put Stable Diffusion Checkpoints here.

- Move the downloaded Stable Diffusion model file into this folder.
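If you would rather move the file with a script than by hand, something like the following works. It is a small sketch using only the standard library; the function name is ours, and the folder layout is the one described in the steps above.

```python
import shutil
from pathlib import Path

def install_checkpoint(downloaded_file, webui_root):
    """Move a downloaded model file into <webui_root>/models/Stable-diffusion."""
    source = Path(downloaded_file)
    target_dir = Path(webui_root) / "models" / "Stable-diffusion"
    if not source.is_file():
        raise FileNotFoundError(f"No such model file: {source}")
    if not target_dir.is_dir():
        raise FileNotFoundError(f"Expected folder is missing: {target_dir}")
    destination = target_dir / source.name
    if destination.exists():
        raise FileExistsError(f"Already present: {destination}")
    shutil.move(str(source), str(destination))
    return destination
```

The function refuses to overwrite an existing file with the same name, so running it twice by accident will not replace a model you already installed.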

Step 5: Set up the Stable Diffusion web UI

In this step, you will install the necessary tools to run Stable Diffusion.

1. Open the Command Prompt or Terminal.

2. Navigate to the Stable Diffusion web UI folder:

- Use the cd command to navigate to the stable-diffusion-webui folder you cloned earlier. For example:

cd path/to/stable-diffusion-webui

3. Run the setup script:

- Once you are in the stable-diffusion-webui folder, run the following command:

webui-user.bat

This script will create a virtual environment and install all the required dependencies for running Stable Diffusion. The process may take about 10 minutes, so be patient.

Note: You can find more specific instructions for your hardware and operating system in the Stable Diffusion web UI GitHub repository.
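The webui-user.bat file used above is a Windows batch file. The repository also ships a webui.sh shell script for Linux and macOS, so the launcher to run depends on your operating system. The helper below is a hypothetical illustration of that choice, not part of the web UI itself.

```python
import platform

def launch_script(system=None):
    """Pick the launcher file shipped in the web UI repository for this OS."""
    system = system or platform.system()
    if system == "Windows":
        return "webui-user.bat"
    # Linux and macOS use the shell script instead of the batch file.
    return "webui.sh"

print("Launcher for this machine:", launch_script())
```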

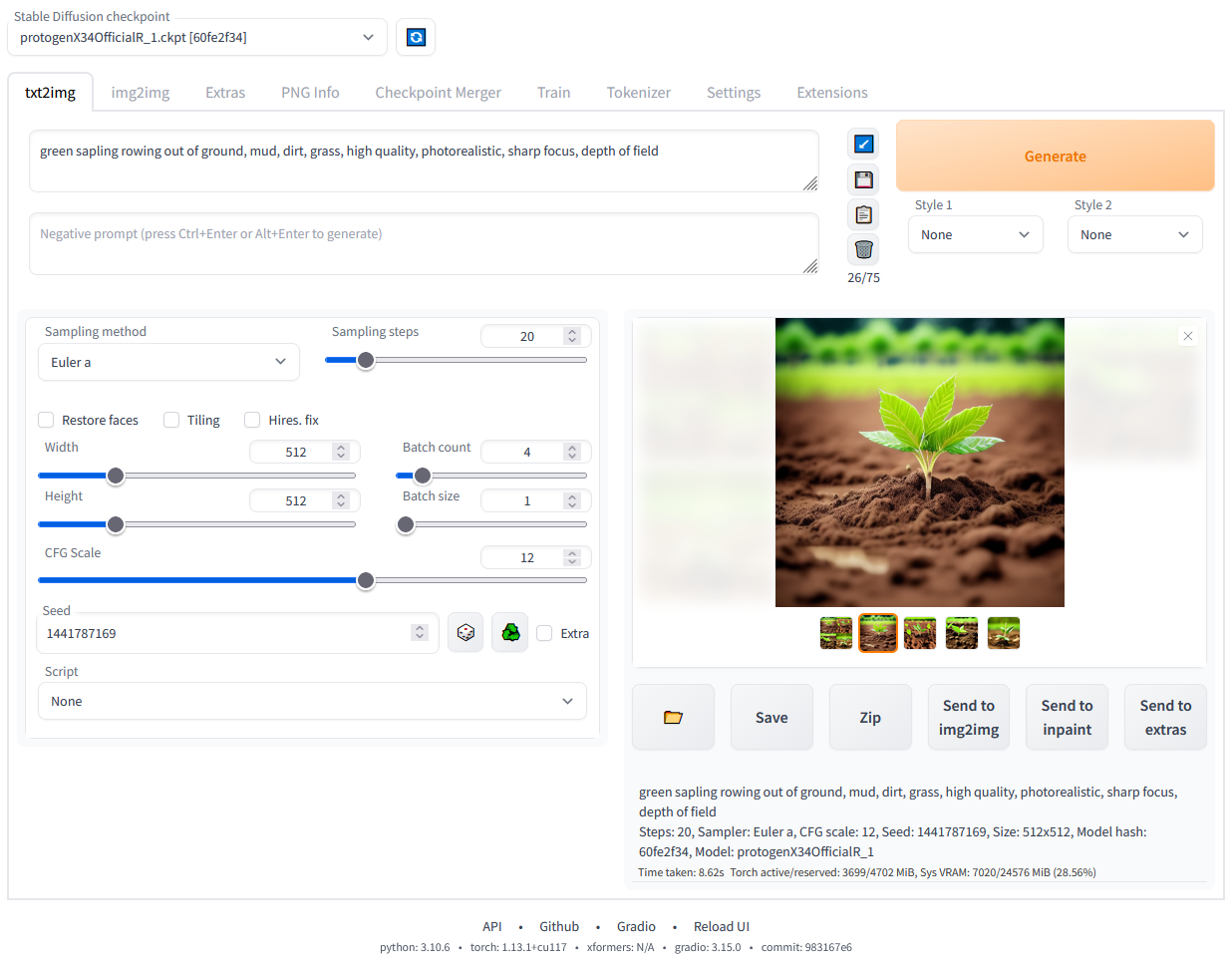

Step 6: Run Stable Diffusion locally

After the dependencies have been installed, a URL will appear in your command prompt: http://127.0.0.1:7860.

- Copy and paste this into your web browser to run the Stable Diffusion web UI.

- Now, you can start running prompts and generating images!

Stable Diffusion web UI running locally. Image by author.
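Besides the browser interface, the web UI can also accept prompts from a script. The sketch below assumes you started the web UI with its --api command-line option, which exposes a /sdapi/v1/txt2img endpoint at the same address. The helper names, the example settings, and the output file name are our own choices for illustration.

```python
import base64
import json
import urllib.request

BASE_URL = "http://127.0.0.1:7860"  # address printed by the web UI

def build_txt2img_request(prompt, steps=20, width=512, height=512, base_url=BASE_URL):
    """Prepare a POST request for the web UI's text-to-image endpoint."""
    payload = {"prompt": prompt, "steps": steps, "width": width, "height": height}
    return urllib.request.Request(
        f"{base_url}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def generate_image(prompt, output_path="output.png"):
    """Send the prompt to a running web UI and save the first returned image."""
    with urllib.request.urlopen(build_txt2img_request(prompt)) as response:
        result = json.load(response)
    # The response carries images as base64-encoded strings.
    with open(output_path, "wb") as handle:
        handle.write(base64.b64decode(result["images"][0]))
    return output_path
```

With the web UI running, generate_image("a watercolor painting of a lighthouse") would write the result to output.png in the current folder.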

Conclusion

Stable Diffusion represents a significant advancement in the field of generative AI. It offers the ability to generate high-quality, detailed images from textual descriptions. Whether you are looking to modify existing images, enhance low-resolution images, or create entirely new visuals, Stable Diffusion provides a powerful and versatile toolset.

With the release of Stable Diffusion 3 and Stable Diffusion 3 Medium, the model's capabilities have improved further, particularly in prompt adherence and typography.

Running Stable Diffusion locally or through various online platforms like DreamStudio and Hugging Face allows you to explore and leverage its full potential. Following the steps outlined in this guide, you can set up and start using Stable Diffusion to meet your creative and practical needs!

Learn More About Generative AI

Generative AI is a branch of deep learning that creates text, images, and other content based on the data it was trained on. Tools like Stable Diffusion, ChatGPT, and DALL-E are examples of how generative AI is transforming various industries by enabling new forms of creativity and innovation. As these technologies continue to evolve, they open up new possibilities for artists, developers, and researchers.


FAQs

Can I run Stable Diffusion on a computer without a dedicated GPU?

Running Stable Diffusion requires significant computational power, typically provided by a dedicated GPU. While it is theoretically possible to run it on a CPU, the process would be extremely slow and inefficient. For the best experience, it is recommended to use a machine with a powerful GPU that has at least 6GB of VRAM.
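On a machine with an NVIDIA GPU, you can check how much VRAM is available before installing anything. The sketch below calls the nvidia-smi command-line tool and compares the result with the 6GB guideline; the helper names are ours, and it simply reports no GPUs if nvidia-smi is not installed.

```python
import subprocess

MIN_VRAM_MIB = 6 * 1024  # the 6GB guideline mentioned above

def parse_gpu_memory(csv_text):
    """Parse 'name, memory_in_MiB' lines into (name, MiB) pairs."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, memory = line.rsplit(",", 1)
        gpus.append((name.strip(), int(memory.strip())))
    return gpus

def query_gpus():
    """Ask nvidia-smi for GPU names and total memory; empty list if unavailable."""
    command = ["nvidia-smi", "--query-gpu=name,memory.total",
               "--format=csv,noheader,nounits"]
    try:
        result = subprocess.run(command, capture_output=True, text=True, check=True)
    except (FileNotFoundError, subprocess.CalledProcessError):
        return []
    return parse_gpu_memory(result.stdout)

def meets_guideline(gpus):
    """True if at least one GPU has the recommended amount of VRAM."""
    return any(memory >= MIN_VRAM_MIB for _, memory in gpus)
```

For example, meets_guideline(query_gpus()) returns False on a machine without a suitable NVIDIA GPU.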

How can I fine-tune Stable Diffusion with my own dataset?

Fine-tuning Stable Diffusion involves retraining the model on your specific dataset to improve its performance for your use case. This process requires a good understanding of machine learning and access to a suitable computing environment. Generally, you will need to prepare your dataset, modify the training scripts to include your data, and then run the training process using a powerful GPU. Detailed instructions on fine-tuning models can often be found in the documentation of machine learning frameworks like PyTorch.

Check out our tutorial, Fine-tuning Stable Diffusion XL with DreamBooth and LoRA, for more information.

What are some common issues I might encounter when running Stable Diffusion locally, and how can I troubleshoot them?

Common issues include:

- Installation errors: Ensure all dependencies are correctly installed and compatible with your system.

- Out of memory errors: Reduce the image size or batch size if your GPU does not have enough VRAM.

- Slow performance: Verify that your GPU drivers are up to date and that your system is not running other intensive processes.

Consult the GitHub repository’s issues page and community forums for specific troubleshooting tips and solutions.

How can I optimize the performance of Stable Diffusion on my GPU?

To optimize Stable Diffusion’s performance on your GPU:

- Update drivers: Ensure your GPU drivers are up to date.

- Adjust settings: Reduce image resolution or batch size to fit within your GPU’s VRAM limits.

- Use optimized libraries: Utilize optimized libraries and frameworks such as CUDA and cuDNN for NVIDIA GPUs.

- Close background processes: Free up system resources by closing unnecessary background processes.

- Monitor performance: Use monitoring tools to track GPU usage and adjust settings accordingly for optimal performance.

Can I use Stable Diffusion to generate animations or videos?

While Stable Diffusion is primarily designed for generating still images, it is possible to create animations or videos by generating a sequence of images and combining them. This can be done by:

- Frame-by-frame generation: Create individual frames by varying the input prompt or seed slightly for each frame.

- Interpolation: Use techniques to interpolate between keyframes generated by Stable Diffusion.

- Video editing software: Combine the frames using video editing software to produce smooth animations or videos. Note that creating high-quality animations may require significant post-processing and expertise in video editing.
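One way to approach the interpolation step is to blend the starting noise of two keyframes and generate one image per blended vector. The sketch below shows spherical interpolation (slerp) in plain Python. It is an illustration under our own assumptions: the article does not prescribe this technique, the 16-value vectors are stand-ins for real latent noise, and the function names are hypothetical.

```python
import math
import random

def slerp(start, end, t):
    """Spherical interpolation between two vectors, with t in [0, 1]."""
    dot = sum(a * b for a, b in zip(start, end))
    norm = math.sqrt(sum(a * a for a in start)) * math.sqrt(sum(b * b for b in end))
    omega = math.acos(max(-1.0, min(1.0, dot / norm)))
    if omega < 1e-6:  # nearly parallel vectors: fall back to a straight line
        return [(1 - t) * a + t * b for a, b in zip(start, end)]
    w0 = math.sin((1 - t) * omega) / math.sin(omega)
    w1 = math.sin(t * omega) / math.sin(omega)
    return [w0 * a + w1 * b for a, b in zip(start, end)]

def keyframe_path(start, end, num_frames):
    """Evenly spaced in-between vectors, including both endpoints."""
    return [slerp(start, end, i / (num_frames - 1)) for i in range(num_frames)]

rng = random.Random(42)
noise_a = [rng.gauss(0, 1) for _ in range(16)]   # noise behind keyframe A
noise_b = [rng.gauss(0, 1) for _ in range(16)]   # noise behind keyframe B
frames = keyframe_path(noise_a, noise_b, num_frames=8)
```

The first and last entries of frames match the two keyframes, and the entries in between change gradually, which tends to give smoother transitions than picking an unrelated seed for every frame.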