Course

User-Defined Functions (UDFs) in PySpark offer Python developers a powerful way to handle unique tasks that built-in Spark functions simply cannot manage. If you're a data engineer, analyst, or scientist proficient in Python, understanding UDF concepts can enable you to effectively tackle complex, real-world data challenges.

This tutorial guides you through PySpark UDF concepts, practical implementations, and best practices in optimization, testing, debugging, and advanced usage patterns. By the end, you will be able to confidently write, optimize, and deploy efficient UDFs at scale.

If you are new to PySpark, I recommend first checking out our Getting Started with PySpark tutorial as what we cover here are advanced Spark concepts.

What are PySpark UDFs?

PySpark UDFs are custom Python functions integrated into Spark's distributed framework to operate on data stored in Spark DataFrames. Unlike built-in Spark functions, UDFs enable developers to apply complex, custom logic at the row or column level.

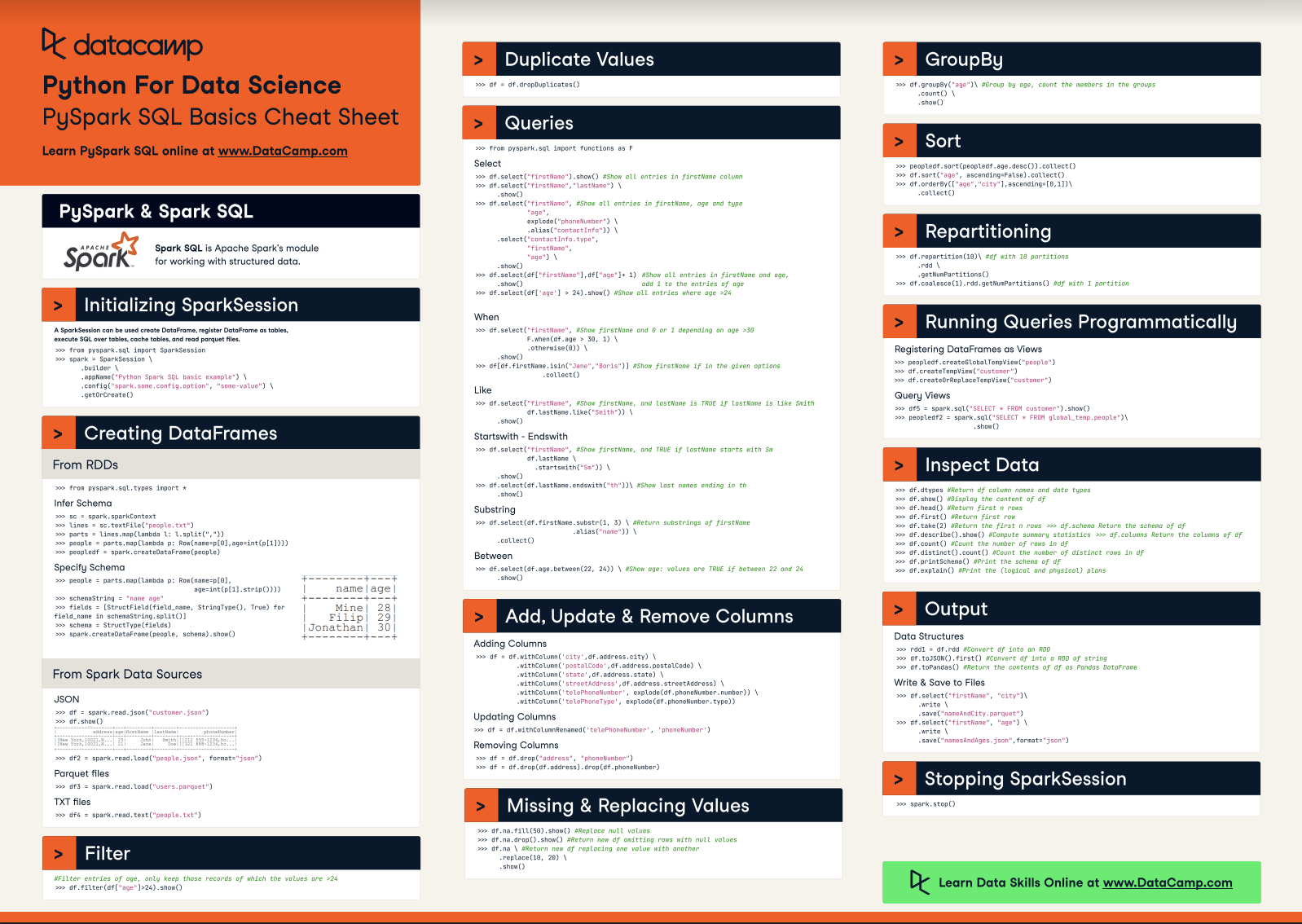

Our PySpark Cheat Sheet covers everything you need to know about Spark DataFrames, making it even easier for you to comprehend Spark UDFs.

When should you use PySpark UDFs?

Use UDFs when:

- You need logic that can’t be expressed using Spark’s built-in functions.

- Your transformation involves complex Python-native operations (e.g., regex manipulations, custom NLP logic).

- You’re okay trading performance for flexibility, especially during prototyping or for small to medium datasets.

Avoid UDFs when:

- Equivalent functionality exists in

pyspark.sql.functions, Spark’s native functions are faster, optimized, and can be pushed down to the execution engine. - You’re working with large datasets where performance is critical. UDFs introduce serialization overhead and break Spark’s ability to optimize execution plans.

- You can express your logic using SQL expressions, Spark SQL built-ins, or Pandas UDFs (for vectorized operations).

Strategic applications in data engineering

Here are the primary use cases for PySpark UDFs:

- Complex data transformations, such as advanced text parsing, data extraction, or string manipulation.

- Integration with third-party Python libraries, including popular machine learning frameworks like TensorFlow and XGBoost.

- Bridging legacy systems and supporting seamless schema evolution as data structures change.

UDFs simplify messy, real-world data engineering tasks, empowering teams to handle diverse requirements flexibly and effectively.

Let’s now discover how you can implement PySpark UDFs.

Implementing PySpark UDFs

This section outlines how to practically define and implement PySpark UDFs.

Standard UDF declaration methods

There are three common approaches for declaring UDFs in PySpark:

1. Lambda-based UDFs: Quick to define directly within DataFrame queries; best for simple operations.

Lambda-based UDF (Basic Python UDF) is best for quick and simple transformations. Avoid them for large-scale jobs where performance matters.

Here is an example:

from pyspark.sql.functions import udf

from pyspark.sql.types import StringType

uppercase = udf(lambda x: x.upper() if x else None, StringType())

df = spark.createDataFrame([("Ada",), (None,)], ["name"])

df.withColumn("upper_name", uppercase("name")).show()2. Decorated Python functions: Explicitly annotated using @pyspark.sql.functions.udf, supporting reusability and readability.

For example:

from pyspark.sql import functions as F

from pyspark.sql.types import IntegerType

@F.udf(returnType=IntegerType())

def str_length(s):

return len(s) if s else 0

df.withColumn("name_length", str_length("name")).show()3. SQL-registered UDFs: Registered directly in Spark SQL contexts, allowing their use within SQL queries.

from pyspark.sql.types import StringType

def reverse_string(s):

return s[::-1] if s else ""

spark.udf.register("reverse_udf", reverse_string, StringType())

df.createOrReplaceTempView("people")

spark.sql("""SELECT name, reverse_udf(name) AS reversed FROM people""").show()Each method has trade-offs: lambda UDFs are concise but limited, while function annotations favor readability, maintainability, and best practices.

Pandas UDFs allow vectorized operations on Arrow batches. They’re often faster than regular UDFs and integrate better with Spark’s execution engine.

Scalar Pandas UDF (element-wise, like map)

These are best suited for Fast, row-wise transformations on large datasets. For example:

from pyspark.sql.functions import pandas_udf

from pyspark.sql.types import IntegerType

import pandas as pd

@pandas_udf(IntegerType())

def pandas_strlen(s: pd.Series) -> pd.Series:

return s.str.len()

df.withColumn("name_len", pandas_strlen("name")).show()Grouped Map Pandas UDF

These are best for custom logic per group, similar to groupby().apply() in Pandas.

from pyspark.sql import functions as F

from pyspark.sql.types import StructType, StructField, StringType, DoubleType

import pandas as pd

schema = StructType([

StructField("group", StringType()),

StructField("avg_val", DoubleType())

])

@pandas_udf(schema, F.PandasUDFType.GROUPED_MAP)

def group_avg(pdf: pd.DataFrame) -> pd.DataFrame:

return pd.DataFrame({

"group": [pdf["group"].iloc[0]],

"avg_val": [pdf["value"].mean()]

})

df.groupBy("group").apply(group_avg).show()Pandas Aggregate UDF

This one performs Aggregations over groups, faster than grouped map. For example:

from pyspark.sql.functions import pandas_udf

from pyspark.sql.types import DoubleType

import pandas as pd

@pandas_udf(DoubleType(), functionType="grouped_agg")

def mean_udf(v: pd.Series) -> float:

return v.mean()

df.groupBy("category").agg(mean_udf("value").alias("mean_value")).show()Pandas Iterator UDF

Pandas Iterator UDF is best for large datasets requiring low-memory processing (batch-wise). For instance:

from pyspark.sql.functions import pandas_udf

from pyspark.sql.types import IntegerType

from typing import Iterator

import pandas as pd

@pandas_udf(IntegerType(), functionType="iterator")

def batch_sum(it: Iterator[pd.Series]) -> Iterator[pd.Series]:

for batch in it:

yield batch + 1

df.withColumn("incremented", batch_sum("id")).show()Type handling and null safety

Types and null values pose frequent challenges for PySpark UDFs. PySpark enforces strict type checking, often causing implicit type conversions or runtime issues. Moreover, Spark passes null values directly to UDFs, creating potential crashes if not handled explicitly.

Ensure robust UDFs with these strategies:

- Explicitly specify return types.

- Incorporate null checks (e.g., conditional statements) within your Python functions.

- Adopt defensive coding practices; simple null checks prevent frustrating runtime exceptions.

Optimizing UDF Performance

Performance often proves the Achilles' heel for standard UDFs due to their row-by-row execution model. Taking advantage of vectorized UDFs and Spark's optimization tools will significantly improve runtimes.

Vectorized UDFs with Pandas integration

Pandas UDFs introduce a vectorized approach to UDFs in PySpark by passing batches of data as Pandas Series to Python functions. This design significantly improves performance by reducing serialization overhead compared to standard, row-based UDFs.

Backed by Apache Arrow for zero-copy data transfer between the JVM and Python processes, Pandas UDFs enable efficient execution of operations at scale. They are particularly effective for intensive computations and complex string manipulations across millions of records.

We cover more details about data manipulation with PySpark in our Cleaning Data with PySpark course.

Additionally, Pandas UDFs allow seamless integration with the broader Python data science ecosystem, leveraging familiar tools and workflows.

|

UDF Type |

Execution Style |

Speed |

Spark Optimizations |

Best For |

Notes |

|

Standard UDF |

Row-by-row (Python) |

Slow |

Not optimized |

Simple logic, small datasets |

Easy to write, but costly |

|

Pandas Scalar UDF |

Vectorized (column-wise) |

Fast |

Arrow-backed |

Numeric ops, string transforms |

Use over standard UDFs whenever possible |

|

Pandas Grouped Map UDF |

Per group (Pandas DataFrame) |

Medium–Fast |

Arrow-backed |

Group-wise transformations |

Output schema must be defined manually |

|

Pandas Aggregate UDF |

Per group (Series input → scalar output) |

Fast |

Optimized |

Aggregations like mean, sum |

Simpler than grouped map |

|

Pandas Iterator UDF |

Batch iterator (streaming) |

Fast |

Optimized |

Processing large batches safely |

Lower memory footprint |

Arrow optimization techniques

Apache Arrow's columnar memory format allows zero-copy, efficient data transfer between Spark JVM and Python processes. By enabling Arrow (spark.sql.execution.arrow.pyspark.enabled=true) within your Spark configurations, data moves swiftly between JVM and Python environments, considerably speeding up UDF execution.

Execution plan optimization

Optimizing PySpark jobs involves understanding how to influence Spark's Catalyst optimizer. Advanced strategies include techniques like predicate pushdown, column pruning, and using broadcast join hints to improve query planning and execution efficiency.

To maximize performance, it's important to minimize the scope of UDF execution and favor built-in Spark SQL functions whenever possible. Strategic use of caching and careful plan crafting can further enhance job execution speed and resource utilization.

Performance optimization is one of the main questions you might encounter in a PySpark interview. Discover how to answer this and more Spark questions in our Top 36 PySpark Interview Questions and Answers for 2025 blog post.

Advanced Patterns and Anti-Patterns

Understanding proper and improper use patterns helps ensure stable and efficient UDF deployments.

Stateful UDF implementations

Stateful and nondeterministic UDFs present unique challenges in PySpark. These functions produce results that depend on external state or changing conditions, such as environment variables, system time, or session context.

While nondeterministic UDFs are sometimes necessary—for example, generating timestamps, tracking user sessions, or introducing randomness—they can complicate debugging, reproducibility, and optimization.

Implementing stateful UDFs requires careful design patterns: documenting behavior clearly, isolating side effects, and adding thorough logging to aid troubleshooting and ensure consistency across job runs.

When used thoughtfully, they can unlock powerful capabilities, but maintaining reliable data pipelines requires disciplined management. Our Big Data Fundamentals with PySpark course goes into more detail about how to handle big data in PySpark.

Common anti-patterns

Common anti-patterns in UDF usage can significantly degrade PySpark performance:

- Row-wise processing instead of batch processing: Applying UDFs to individual rows rather than using vectorized approaches like Pandas UDFs leads to major execution slowdowns.

- Nested DataFrame operations inside UDFs: Embedding DataFrame queries within UDFs causes excessive computation and hampers Spark's ability to optimize execution plans.

- Repeated inline UDF registration: Defining and registering UDFs multiple times inside queries adds unnecessary overhead; it's better to declare UDFs once and reuse them across jobs.

- Overusing custom Python logic for simple operations: Tasks like basic filtering, arithmetic, or straightforward transformations should favor Spark’s highly optimized built-in functions over custom UDFs.

Avoiding these pitfalls ensures better performance, easier optimization by Catalyst, and more maintainable PySpark code.

Debugging and Testing PySpark UDFs

Testing and debugging UDFs ensures reliability and robustness in production scenarios.

Exception handling patterns

Implementing structured error capture within UDFs is essential for building resilient and maintainable PySpark pipelines. Use try-except blocks inside UDFs to gracefully handle runtime surprises, such as null values, type mismatches, or division by zero errors.

Robust exception handling stabilizes pipelines against unexpected data and simplifies debugging by surfacing clear, actionable error messages. Properly captured and logged exceptions make UDF behavior more transparent, accelerating issue resolution and improving overall pipeline reliability.

Unit testing frameworks

Use PySpark’s built-in testing base class, pyspark.testing.utils.ReusedPySparkTestCase, along with frameworks like pytest, to write reliable unit tests for your UDFs. Structuring clear and focused tests ensures the correctness, stability, and maintainability of your UDF logic over time.

Best practices for testing UDFs include covering both typical and edge cases, validating outputs against known results, and isolating UDF behavior from external dependencies. Well-designed tests not only protect against regressions but also simplify future development and refactoring efforts.

Evolution and Future Directions

The PySpark ecosystem continues to evolve rapidly, introducing new capabilities that enhance UDFs even further.

Unity Catalog integration

Recent developments have integrated UDF registration into Unity Catalog, streamlining how UDFs are managed, discovered, and governed at scale. Unity Catalog enables centralized control over UDF lifecycle management, including registration, versioning, and access control, all critical for enterprise environments.

This integration enhances governance, enforces consistent security policies, and improves discoverability across teams, making UDFs easier to reuse, audit, and manage within large, complex data ecosystems.

GPU-accelerated UDFs

Frameworks like RAPIDS Accelerator enable GPU offloading for computationally intensive UDF tasks in PySpark, offering transformative performance improvements. By shifting heavy operations, such as numeric analysis, deep learning inference, and large-scale data modeling, to GPUs, RAPIDS can reduce execution times from hours to minutes in suitable workloads.

GPU acceleration is particularly beneficial for scenarios involving massive datasets, complex vectorized computations, and machine learning pipelines, dramatically expanding PySpark’s performance and scalability for modern data engineering tasks. Our Machine Learning with PySpark course dives deeper into these concepts.

Conclusion

PySpark UDFs are a powerful tool for extending Spark’s capabilities, enabling teams to tackle complex, customized data processing tasks that go beyond built-in functions. When applied correctly, they unlock flexibility and innovation in large-scale data pipelines.

However, optimizing UDF performance requires careful attention, avoiding common pitfalls like row-wise operations, managing exceptions gracefully, and leveraging techniques like Pandas UDF vectorization with Arrow integration.

Emerging advancements, such as GPU acceleration through frameworks like RAPIDS, are further expanding what’s possible with UDF-driven workflows. Whether you’re transforming messy real-world data or embedding advanced analytics into production systems, mastering UDF best practices is essential for building fast, efficient, and reliable data pipelines.

Learn the gritty details that data scientists are spending 70-80% of their time on, data wrangling and feature engineering, from our Feature Engineering with PySpark course.

PySpark UDF FAQs

When should I use a PySpark UDF instead of a built-in function?

You should use a PySpark UDF only when your transformation cannot be achieved using Spark’s built-in functions. Built-in functions are optimized and run faster than UDFs because they operate natively on the JVM without serialization overhead.

Why are Pandas UDFs faster than regular Python UDFs in PySpark?

Pandas UDFs (vectorized UDFs) are They are faster because they use Apache Arrow for efficient data serialization and process data in batches rather than row-by-row, which reduces the overhead of moving data between the JVM and Python interpreter.

Do I always need to specify a return type for a UDF in PySpark?

Yes, PySpark requires an explicit return data type when defining UDFs. The requirement ensures proper serialization between Java and Python and prevents runtime errors.

How do I enable Apache Arrow in my PySpark application?

You can enable Apache Arrow by setting the following configuration before running any UDFs:

spark.conf.set("spark.sql.execution.arrow.pyspark.enabled", "true")

What’s the best way to handle null values in a PySpark UDF?

Always include a conditional check for None (null) values in your UDF to avoid exceptions. For example: if product_name is None: return None.