
PySpark is the Python API for Apache Spark, designed for parallel and distributed data processing across clusters. Choosing the correct join operation for your needs affects job speed, resource consumption, and overall success.

Working with extensive datasets often means integrating data from several sources. Effectively combining this information is essential for accurate analysis and meaningful insights. PySpark joins provide powerful ways to combine separate datasets based on shared keys.

However, inefficient joins can severely impact your data processing speed and reliability when dealing with vast datasets containing millions or even billions of records across distributed clusters.

Have you experienced joins that run slowly or even fail on large-scale data? If so, this guide is for you. It will help intermediate data scientists, developers, and engineers gain mastery and confidence when performing PySpark joins.

If you’re looking for some hands-on exercises to familiarize yourself with PySpark, check out our Big Data with PySpark skill track.

What are PySpark Joins?

As you will discover in our Learn PySpark From Scratch blog post, one of PySpark's main features is the ability to merge large datasets. PySpark join operations are essential for combining large datasets based on shared columns, enabling efficient data integration, comparison, and analysis at scale.

They play a critical role in handling big data by helping uncover relationships and extract meaningful insights across distributed data sources.

PySpark offers several types of joins, such as inner joins, outer joins, left and right joins, semi joins, and anti joins, each serving different analytical purposes.

However, joins on large datasets can introduce challenges such as skewed data, shuffling overhead, and memory constraints, which makes selecting the right join strategy crucial for both performance and accuracy.

Fundamentals of PySpark Joins

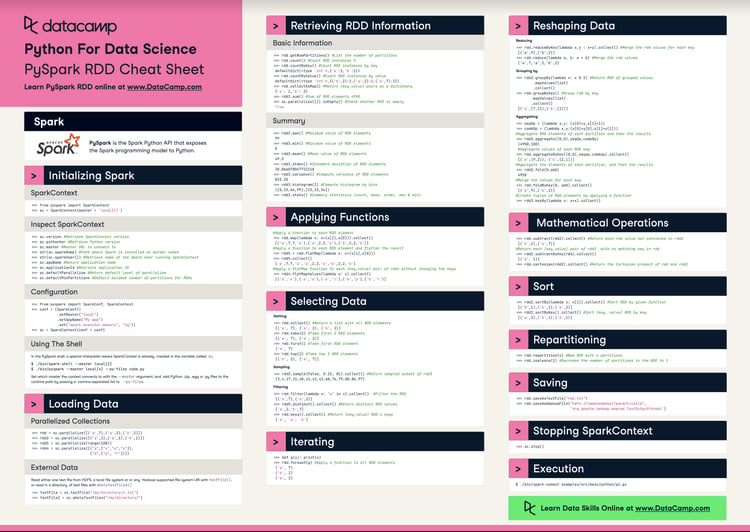

Understanding PySpark's internal operations is vital for efficiently joining large datasets, as we show in our Introduction to PySpark course. This section outlines the conceptual framework behind PySpark's join execution and the role of its optimizer.

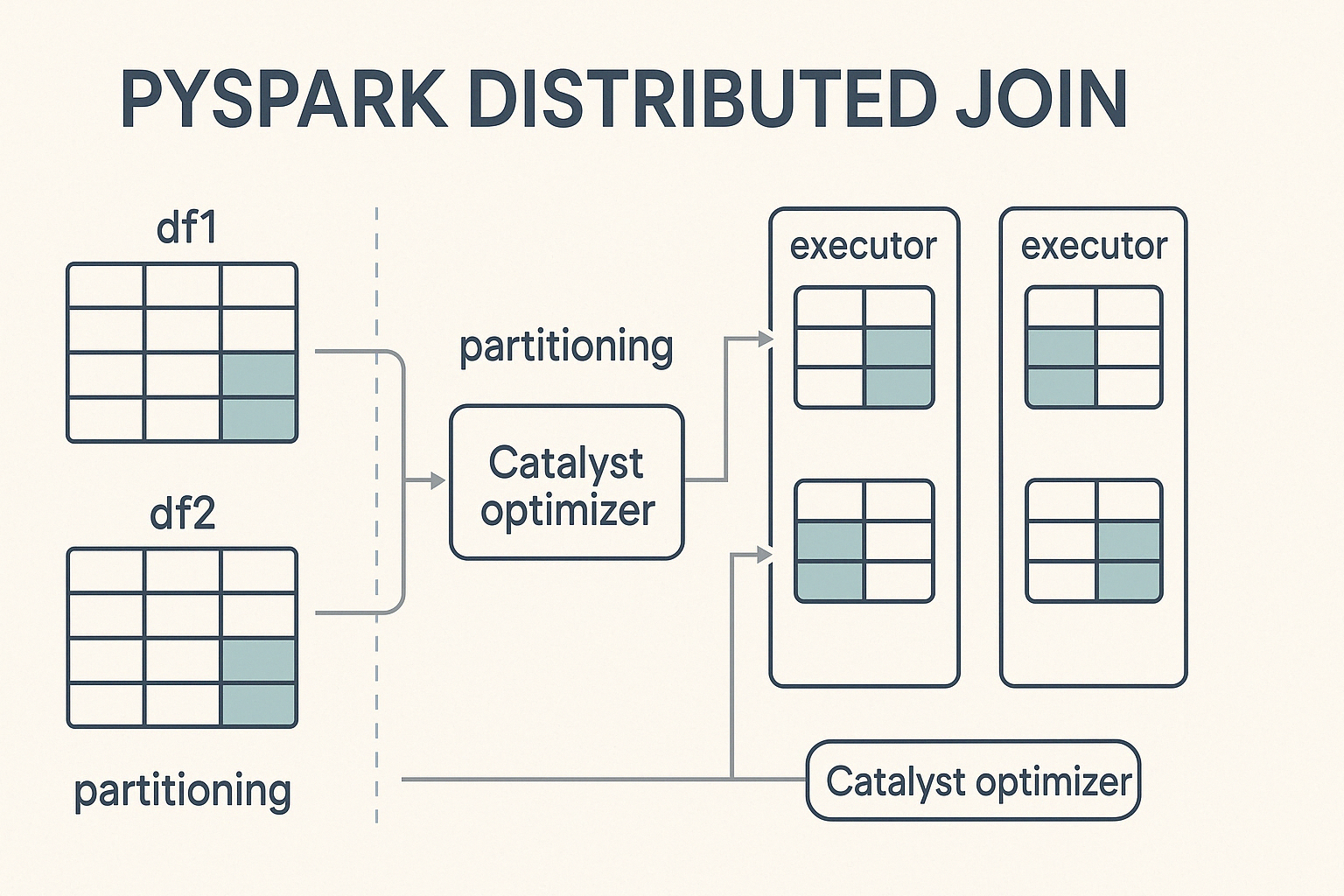

Conceptual framework

PySpark performs joins in a distributed computing environment, meaning it splits large datasets into smaller partitions and processes them in parallel across a cluster of machines. When a join is triggered, PySpark distributes the work among these nodes, aiming for balanced computation to speed up execution and avoid bottlenecks.

At the core of this process is Catalyst, Spark’s query optimizer. Catalyst determines how join operations are executed: it chooses a physical join strategy (for example, broadcast hash join or sort-merge join), decides where data must be shuffled, and applies optimizations such as predicate pushdown. This automated optimization removes much of the manual tuning, although skewed or very large data can still require the techniques covered later in this guide.

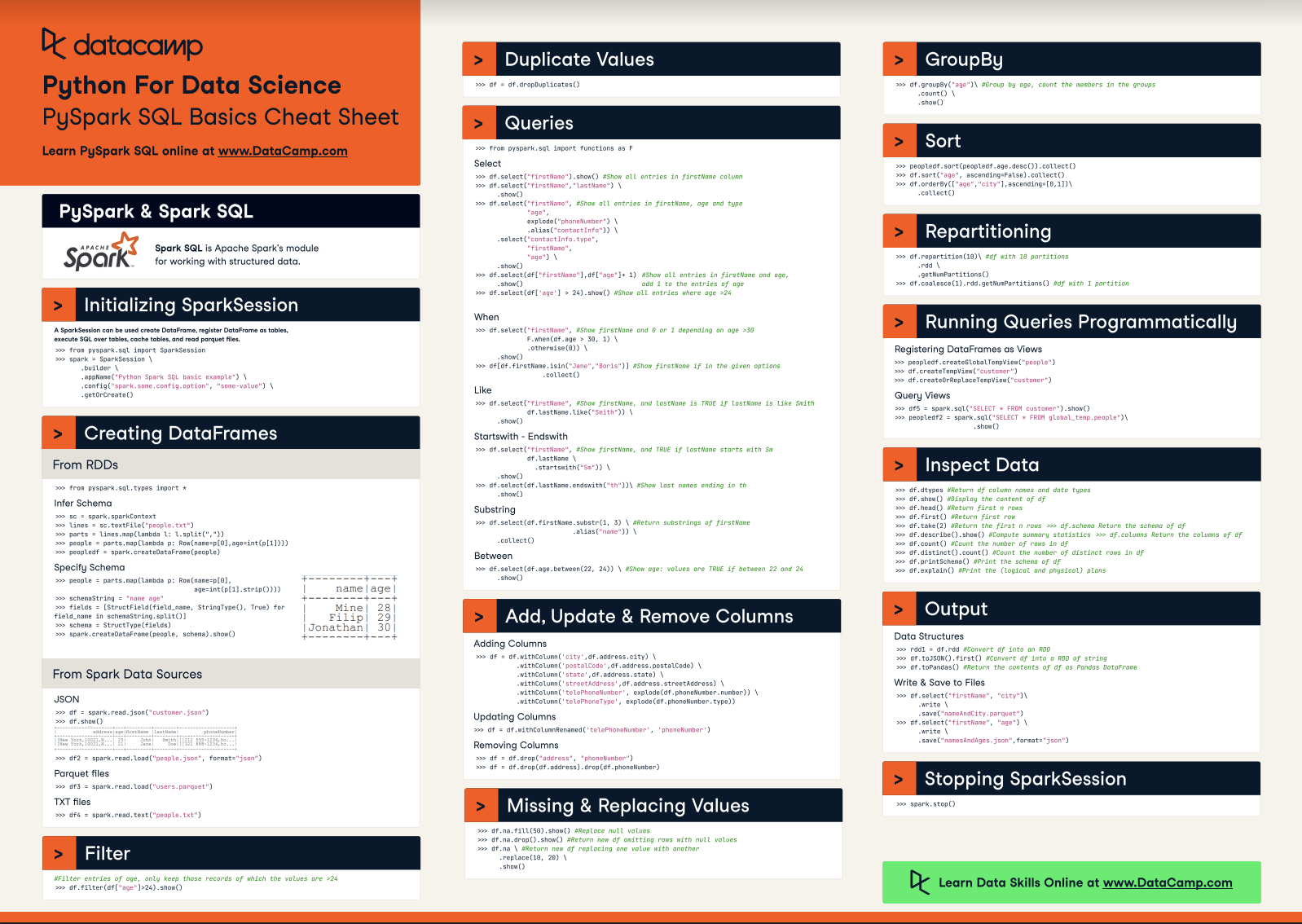

Core join syntax

To perform joins in PySpark, you follow a straightforward syntax that involves specifying the DataFrames, a join condition, and the type of join to execute.

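```python
from pyspark.sql import SparkSession

# Initialize Spark session
spark = SparkSession.builder.appName("PySpark Joins Example").getOrCreate()

# Two small example DataFrames that share a "key" column
df1 = spark.createDataFrame([(1, "a"), (2, "b")], ["key", "left_val"])
df2 = spark.createDataFrame([(1, "x"), (3, "y")], ["key", "right_val"])

# Perform a join
joined_df = df1.join(df2, on="key", how="inner")
```

In this example:

- df1 and df2 are the DataFrames to be joined.
- on="key" is the join condition. Rows are matched when the key column has the same value in both DataFrames, which is equivalent to the condition df1.key == df2.key.
- how="inner" specifies the join type. Depending on the desired result, it could also be "left", "right", "outer", "semi", "anti", or "cross".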

Follow our Getting Started with PySpark tutorial for instructions on how to install PySpark and run the above joins with no errors.

PySpark Join Types and Applications

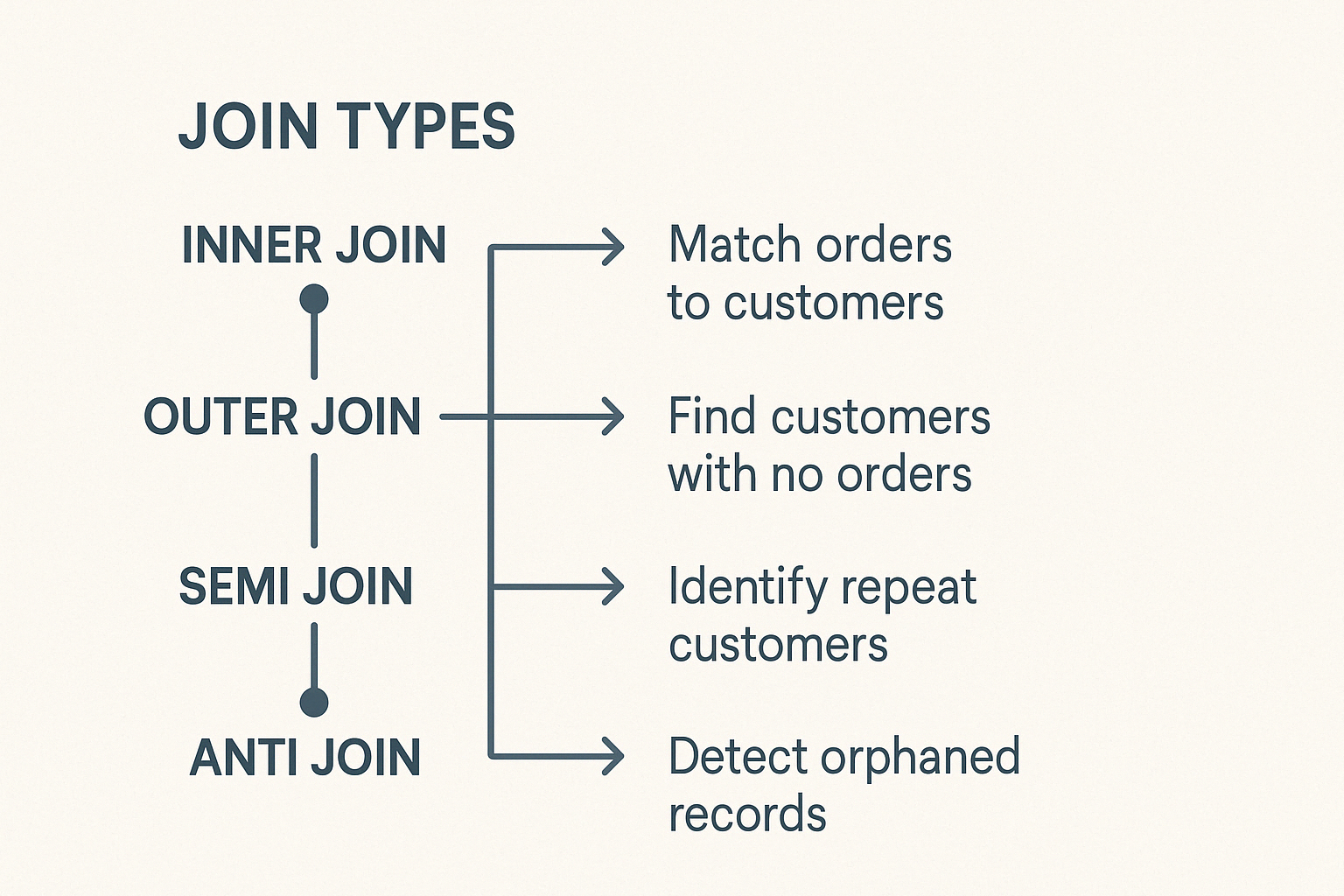

PySpark includes different kinds of joins, each suitable for distinct data tasks. Understanding these helps you select wisely.

Inner joins

The inner join combines rows from two DataFrames when matching keys exist in both. Rows without matching keys will be excluded. It is the most common and default join type in PySpark.

Typical usage scenarios include:

- Matching customer orders to customer profiles for accurate analytical reporting.

- Precisely comparing datasets from different sources.

Outer joins

PySpark supports left, right, and full outer joins.

The left outer join returns all rows from the left DataFrame and matching rows from the right DataFrame. When no matching key appears in the right DataFrame, null values fill the right-hand columns.

Use cases:

- Finding customers who made no purchases.

- Identifying missing information on records.

The right outer join mirrors the logic of the left join. It returns all rows from the right DataFrame and matching rows from the left DataFrame, filling missing matches from the left columns with nulls.

Example scenario:

- Checking product category assignments or labeling mistakes.

The full outer join returns all rows from both DataFrames, filling unmatched entries with nulls. It helps spot discrepancies or data gaps between two datasets.

Specialized joins: Semi and anti joins

Semi and anti joins serve specialized functions:

A semi join returns rows from the left DataFrame where matching keys exist in the right DataFrame. It doesn't add columns from the right DataFrame and avoids duplicates, making it ideal for existence checks.

Use case example:

- Selecting users who have at least one login record, without pulling in the login details.

An anti join returns rows from the left DataFrame that have no matching key in the right DataFrame.

Use case example:

- Finding orphaned records or validating data integrity.

Cross joins produce the Cartesian product: every row in one DataFrame is paired with every row in the other, so the output row count is the product of the two input row counts. Unless specifically desired, avoid cross joins, as they expand datasets drastically.

Use them cautiously for specific scenarios such as:

- Testing parameter combinations.

- Analyzing all possible pairings in experimental settings.

Optimization Techniques for Efficient PySpark Joins

Large-scale joins demand careful optimization. Consider the following techniques:

Broadcast join strategy

Broadcast joins improve performance by sending a full copy of the smaller DataFrame to every executor, so the larger DataFrame can be joined where it already sits instead of being shuffled across the network.

Syntax example:

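```python
from pyspark.sql.functions import broadcast

# large_df and small_df are existing DataFrames that share an "id" column
joined_df = large_df.join(broadcast(small_df), on="id", how="inner")
```

Be aware of your system’s memory limits. The broadcast table must fit in memory on the driver and on every executor, so broadcasting excessively large DataFrames can cause out-of-memory errors instead of speedups.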

Skew mitigation techniques

Data skew means data is not distributed evenly across partitions, which creates bottlenecks. Techniques to reduce skew include:

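- Key salting: append a random salt value to heavily skewed join keys on the large side, and replicate the matching rows on the other side once per salt value. A single hot key is then spread across several partitions while every row still finds its match.

- Adaptive skew handling: with Adaptive Query Execution (AQE) enabled (the default since Spark 3.2), Spark can detect skewed partitions at runtime and split them during sort-merge joins.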

Partitioning strategies

Efficient join performance in PySpark heavily depends on minimizing shuffle overhead, which occurs when data needs to be moved across nodes to satisfy join conditions. One of the most effective ways to reduce this overhead is by aligning partitions of the DataFrames being joined.

Best practices for partitioning

Keep these best practices in mind:

- Partition on the join key: To minimize unnecessary data movement, partition both DataFrames using the same column(s) used in the join condition.

- Avoid skewed columns: Don’t partition on columns with a few dominant values, as this leads to unbalanced workload distribution across nodes.

- Use repartition() or broadcast() wisely: Use repartition() for large datasets where uniform distribution is possible, and broadcast() for significantly smaller tables to avoid shuffling entirely.

- Monitor data size and distribution: Understanding the underlying characteristics of your data (e.g., cardinality and frequency distribution of join keys) helps you choose the most balanced partitioning strategy.

Advanced PySpark Join Strategies and Use Cases

PySpark includes specialized mechanisms for more demanding cases, enabling even greater efficiency:

Range joins

Range joins are used when matching rows based on numerical or time-based conditions, rather than exact equality. These joins are common in scenarios involving time-series data, such as log analysis, event tracking, or IoT sensor streams.

Best practices for efficient range joins include:

- Minimize shuffle overhead: Proper partitioning is essential to avoid costly data movement. When working with range joins, consider partitioning the data based on time windows or value ranges relevant to your use case.

- Sort data within partitions: Sorting data by the range key (e.g., timestamp) before performing the join can improve performance, especially when using operations like rangeBetween or between.

- Avoid wide range scans: Keep the join ranges as narrow as possible to reduce the number of comparisons and improve execution time.

- Leverage bucketing or window functions: These can help reduce shuffle operations and ensure better alignment when dealing with large datasets and overlapping ranges.

Example use cases include:

- Aligning marketing campaign data with customer interactions across time windows.

- Merging IoT or sensor data captured in sequential intervals for anomaly detection or trend analysis.

Streaming joins

Joining streaming data introduces unique challenges due to its continuous and often unpredictable nature. Key issues include late-arriving events, out-of-order data, and the need to manage state efficiently over time.

PySpark addresses these challenges using watermarks, which define a threshold for how long the system should wait for late data before discarding it. By setting a watermark on event-time columns, PySpark can drop buffered join state that is too old to produce new matches, which keeps memory usage bounded.

Watermarks matter most when joining two streaming sources. For stream-stream inner joins they let Spark clean up old state, and for stream-stream outer joins a watermark together with an event-time constraint is required. In a stream-static join, Spark does not buffer state for the static side, so watermarks play a smaller role there.

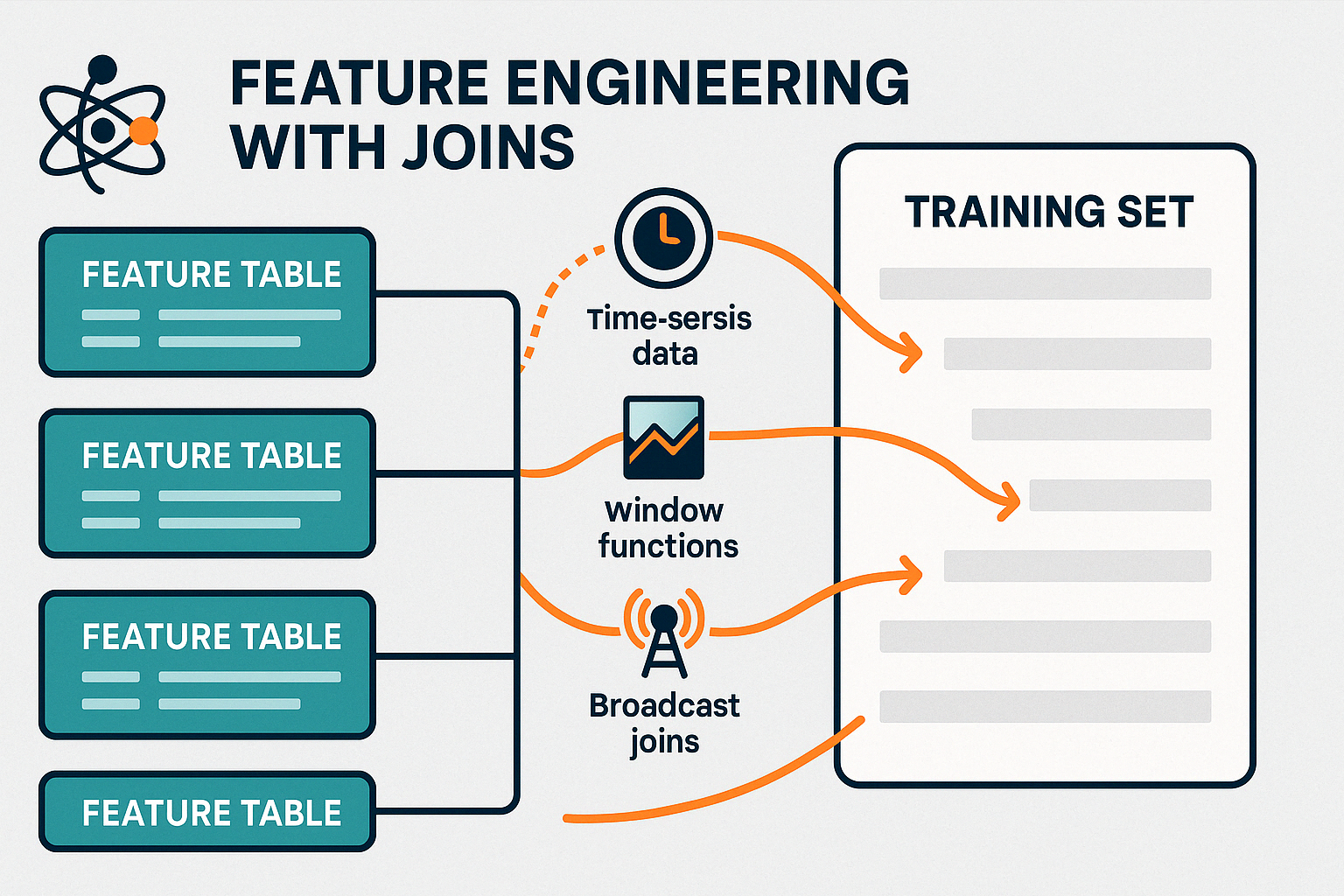

Machine Learning (ML) Feature Joins

Joins play a crucial role in preparing data for machine learning models, for example, when building models with Spark MLlib. Features are often spread across several tables, and consolidating them into a single, unified dataset is essential for accurate model training and evaluation.

Strategies for efficient feature joins in real-time systems include:

- Using broadcast joins for small, static feature tables to minimize shuffle and speed up real-time data enrichment.

- Pre-aggregating features using window functions or group operations before joining to reduce the volume of data in motion.

- Leveraging watermarks and event-time alignment when joining real-time streams to ensure timely and relevant feature updates.

- Persisting intermediate joins for frequently reused features to avoid redundant computations in downstream tasks.

By efficiently managing these joins, PySpark enables real-time feature engineering, supporting both online learning systems and up-to-date batch training pipelines.

You can learn how to make predictions from data with Apache Spark, using decision trees, logistic regression, linear regression, ensembles, and pipelines with our Machine Learning with PySpark course.

Best Practices and Common Challenges

Join operations in distributed computing require careful attention to avoid potential issues.

Efficient join operations can drastically improve overall pipeline speed and resource usage. Here are key practices to follow:

- Pre-join filtering: Remove irrelevant rows and drop unused columns before performing joins. This reduces the amount of data shuffled across the cluster.

- Smart join ordering: When chaining several joins, join the smaller, more selective, or broadcastable tables first to keep intermediate results small and avoid unnecessary computation.

- Memory and resource management: Monitor task execution through Spark’s UI or logs to detect skew or bottlenecks. Ensure that partitioning strategies align with cluster resources to avoid overloading individual nodes.

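- Repartition wisely: Use repartition() or coalesce() to adjust partition sizes for more balanced parallel processing. repartition() performs a full shuffle and can increase or decrease the number of partitions; coalesce() can only reduce it, but avoids a full shuffle.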

Anti-patterns to avoid

Even small missteps in join logic can lead to major issues in performance or data accuracy. Here are common pitfalls to watch out for:

- Incorrect or ambiguous join conditions: Poorly defined join conditions can lead to incorrect matches or duplicate rows. Always verify that your join keys are accurate, unique where necessary, and appropriately matched in data type.

- Unintentional cross joins: Cross joins multiply every row in one DataFrame with every row in another, often resulting in massive data explosions. Use them only when explicitly required and with a clear understanding of the output size.

- Forcing broadcast joins on large DataFrames: Broadcasting large tables can overwhelm memory and slow down performance. Let PySpark decide, or only manually broadcast when the DataFrame is small and fits comfortably in memory.

- Ignoring data skew: If one or more join keys are heavily skewed, it can overload a few partitions. Detect skew early and consider salting techniques or alternative strategies.

Even well-structured joins can encounter performance or execution problems.

Here are common issues and how to address them:

- Slow execution times: Often caused by data skew or imbalanced partitions. Check for highly repetitive join key values and consider repartitioning or applying salting techniques to distribute data more evenly.

- Join failures or unexpected results: Typically due to data type mismatches or null values in join keys. Ensure both sides of the join use the same data types, and remember that null keys never match in a standard equality join, so apply appropriate null handling.

- Out-of-memory errors: May occur when large datasets are joined without filtering or when broadcast joins are forced on large tables. Filter early and broadcast only small, static DataFrames.

Conclusion

PySpark joins are a cornerstone of large-scale data processing, enabling powerful and efficient analysis across massive datasets. When used correctly, they reveal meaningful relationships that drive deeper insights and smarter decisions.

While performance tuning can present challenges, such as handling data skew or optimizing partitioning, mastering these aspects is key to building robust data pipelines.

Whether you're joining real-time streams or consolidating features for machine learning, refining your join strategies can significantly improve execution speed, resource efficiency, and analytical precision.

To dive deeper, explore big data processing with PySpark, and work toward becoming a data engineering expert with our Big Data Fundamentals with PySpark skill track.

PySpark Joins FAQs

What is the most efficient join type to use in PySpark?

The most efficient join type depends on your data and goals. Inner joins are fast when matching data exists on both sides. For handling small reference tables, broadcast joins are often the most performant.

When should I use broadcast joins in PySpark?

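Use broadcast joins when you're joining a large DataFrame with a much smaller one (generally less than 10MB to 100MB depending on your cluster). Spark broadcasts automatically when it estimates a table to be smaller than spark.sql.autoBroadcastJoinThreshold, which defaults to 10MB. Broadcasting the smaller table avoids expensive shuffles.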

What causes data skew in joins, and how can I fix it?

Data skew happens when certain join keys have a disproportionately large number of records, causing some partitions to be overloaded. Salting (adding randomized values to the keys to distribute data more evenly) is an effective technique to mitigate this.

Is repartitioning always necessary before a join?

Not always. Repartitioning by the join key is itself a shuffle, so it pays off mainly when the two DataFrames originate from different sources or are partitioned differently and the repartitioned data is reused in several joins or aggregations on the same key.

Can PySpark handle streaming data joins in real time?

Yes. PySpark’s Structured Streaming API supports real-time joins using watermarks to handle late-arriving data and manage state efficiently.