It’s estimated that around 328.77 million terabytes of data are created daily. Every click and purchase generates data that can be processed into meaningful insights and predictions with the right tools.

However, we need a high-performance library to help us process that amount of data. That is where PySpark comes into play.

In this guide, we’ll explore how to learn PySpark from scratch. I’ll help you craft a learning plan, share my best tips for learning it effectively, and provide useful resources to help you find roles that require PySpark.

What Is PySpark?

PySpark is the combination of two powerful technologies: Python and Apache Spark.

Python is one of the most used programming languages in software development, particularly for data science and machine learning, mainly due to its easy-to-use and straightforward syntax.

On the other hand, Apache Spark is a framework that can handle large amounts of unstructured data. Spark was built using Scala, a language that gives us more control over it. However, Scala is not a popular programming language among data practitioners. So, PySpark was created to bridge this gap.

PySpark offers an API and a user-friendly interface for interacting with Spark. It uses Python's simplicity and flexibility to make big data processing accessible to a wider audience.

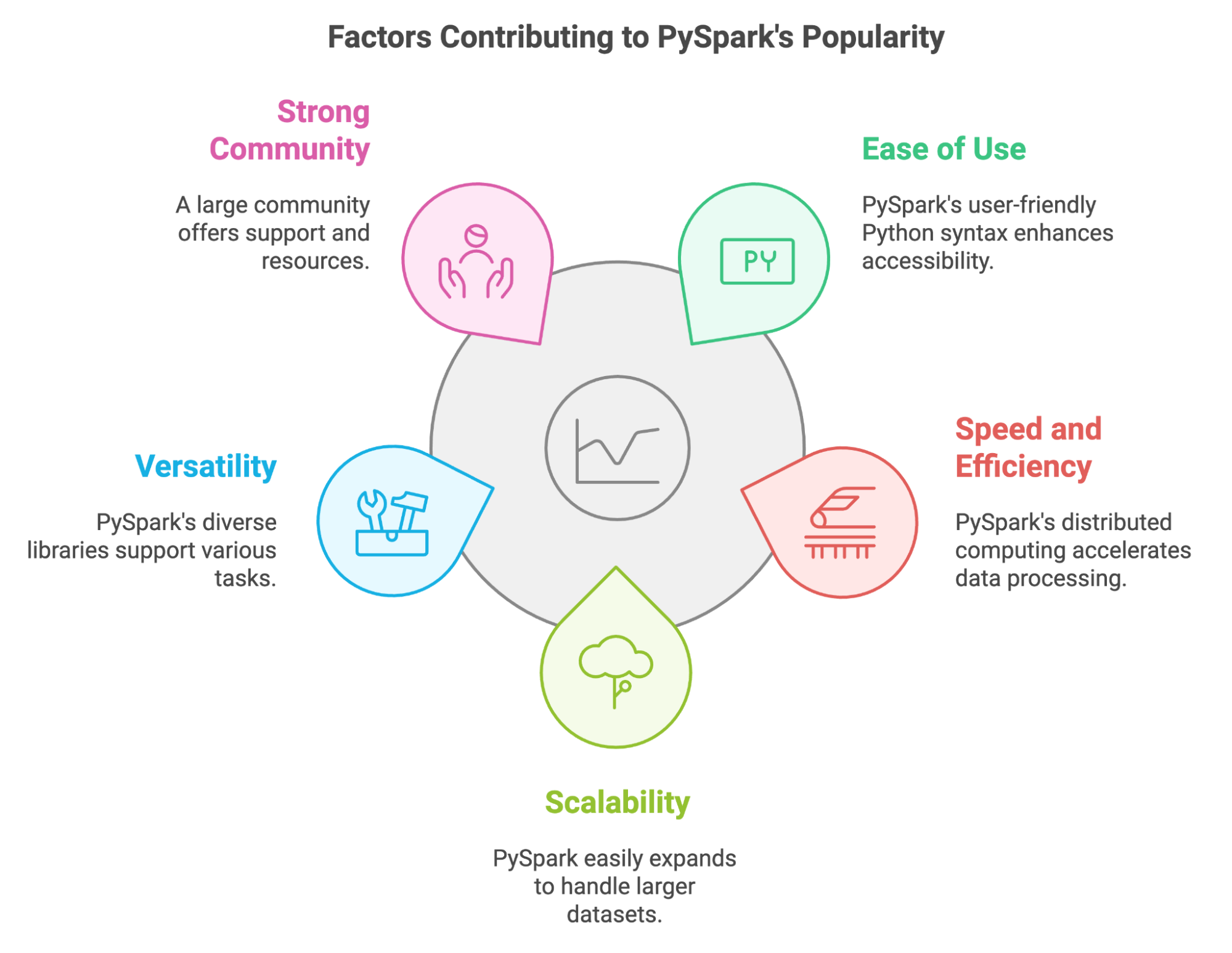

What makes PySpark popular?

In recent years, PySpark has become an important tool for data practitioners who need to process huge amounts of data. We can explain its popularity by several key factors:

- Ease of use: PySpark uses Python's familiar syntax, which makes it more accessible to data practitioners like us.

- Speed and efficiency: By distributing computations across clusters of machines, PySpark handles huge datasets at a high speed.

- Scalability: PySpark adapts to growing data volumes, allowing us to scale our applications by adding more computing resources.

- Versatility: It offers a wide ecosystem of libraries for different tasks, from data manipulation to machine learning.

- Strong community: We can rely on a large and active community to provide us with support and resources when we face issues and challenges.

PySpark also allows us to leverage existing Python skills and libraries. We can easily integrate it with popular tools like Pandas and Scikit-learn, and it lets us use various data sources.

Main features of PySpark

PySpark was created especially for big data and machine learning developments. But what features make it a powerful tool for handling huge amounts of data? Let’s have a look at them:

- Resilient distributed datasets (RDDs): These are the fundamental data structures behind PySpark. Because of them, data transformation, filtering, and aggregations can be done in parallel.

- DataFrames and SQL: In PySpark, DataFrames are a higher-level abstraction built on top of RDDs. We can use them with Spark SQL queries to perform data manipulation and analysis.

- Machine learning libraries: Using PySpark's MLlib library, we can build and use scalable machine learning models for tasks such as classification and regression.

- Support for different data formats: PySpark provides libraries and APIs to read, write, and process data in different formats such as CSV, JSON, Parquet, and Avro, among others.

- Fault tolerance: PySpark keeps track of the lineage of each RDD, that is, the sequence of transformations that produced it. If a node fails during execution, PySpark reconstructs the lost RDD partition from that lineage. So, there is little risk of data loss.

- In-memory processing: PySpark stores intermediate data in memory, which reduces the need for disk operations, and in turn, enhances the data processing performance.

- Streaming and real-time processing: We can leverage the Spark Streaming component to process real-time data streams and perform near-real-time analytics.

Why Is Learning PySpark so Useful?

The volume of data is only increasing. Today, data wrangling, data analysis, and machine learning tasks involve working with large amounts of data. We need to use powerful tools that process that data efficiently and promptly. PySpark is one of those tools.

PySpark has a variety of applications

We’ve already mentioned the strengths of PySpark, but let’s look at a few specific examples of where you can use them:

- Data ETL. PySpark's ability to clean and transform data efficiently is used for processing sensor data and production logs in manufacturing and logistics.

- Machine learning. The MLlib library is used to develop and deploy models for personalized recommendations, customer segmentation, and sales forecasting in e-commerce.

- Graph processing. The GraphFrames package, which works on top of PySpark DataFrames, is used to analyze social networks and understand relationships between users.

- Stream processing. PySpark's Structured Streaming API enables real-time processing of financial transactions to detect fraud.

- SQL data processing. PySpark's SQL interface makes it easier for healthcare researchers and analysts to query and analyze large genomic datasets.

There is a demand for skills in PySpark

With the rise of data science and machine learning and the increase in data available, there is a high demand for professionals with data manipulation skills. According to The State of Data & AI Literacy Report 2024, 80% of leaders value data analysis and manipulation skills.

Learning PySpark can open up a wide range of career opportunities. Over 800 job listings on Indeed, from data engineers to data scientists, highlight the demand for PySpark proficiency in data-related job postings.

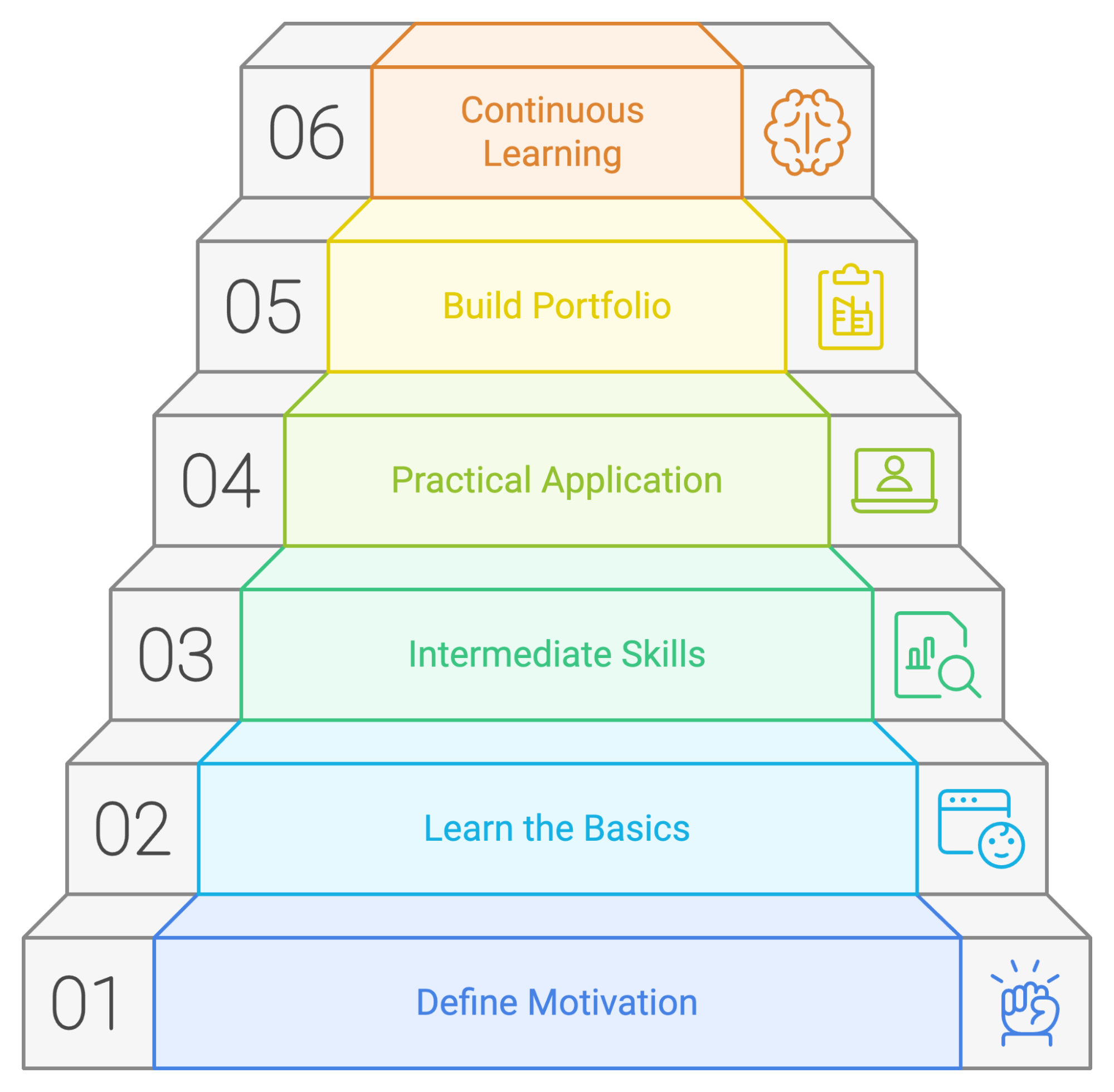

How to Learn PySpark from Scratch in 2026

If you learn PySpark methodically, you have a better chance of success. Let’s focus on a few principles you can use in your learning journey.

1. Understand why you’re learning PySpark

Before you learn technical details, define your motivation for learning PySpark. Ask yourself:

- What are my career goals?

  - Is PySpark a skill you need to advance in your current role or land a dream job?

  - Which opportunities are you expecting to open if you master PySpark?

- What problems am I trying to solve?

  - Do you struggle with processing large datasets that the current tools you know can't handle?

  - Do you need to perform complex data transformations or build advanced machine-learning models?

- What interests me?

  - Does the idea of building scalable data pipelines excite you?

  - Are you interested in big data and its potential to unlock insights?

- Do I have a specific project in mind that requires PySpark's capabilities?

  - Are you working on a personal project that involves large-scale data processing or analysis?

  - Does your company need PySpark expertise in an upcoming project?

2. Start with the basics of PySpark

After you identify your goals, master the basics of PySpark and understand how they work.

Python fundamentals

Because PySpark is built on top of Python, you must become familiar with Python before using PySpark. You should feel comfortable working with variables and functions. Also, it might be a good idea to be familiar with data manipulation libraries such as Pandas. DataCamp's Introduction to Python course and Data Manipulation with Pandas can help you get up to speed.

Installing PySpark and learning the basics

You need to install PySpark to start using it. You can install PySpark using pip or Conda, manually download it from the official website, or use DataLab to get started with PySpark in your browser.

If you want a full explanation of how to set up PySpark, check out this guide on how to install PySpark on Windows, Mac, and Linux.

PySpark DataFrames

The first concept you should learn is how PySpark DataFrames work. They are one of the key reasons why PySpark works so fast and efficiently. Understand how to create, transform (for example, by selecting and filtering), and manipulate them. The tutorial on how to start working with PySpark will help you with these concepts.

3. Master intermediate PySpark skills

Once you're comfortable with the basics, it's time to explore intermediate PySpark skills.

Spark SQL

One of the biggest advantages of PySpark is its ability to perform SQL-like queries to read and manipulate DataFrames, perform aggregations, and use window functions. Behind the scenes, PySpark uses Spark SQL. This introduction to Spark SQL in Python can help you with this skill.

Data wrangling and transformation

Working with data implies becoming proficient in cleaning, transforming, and preparing it for analysis. This includes handling missing values, managing different data types, and performing aggregations using PySpark. Take DataCamp's Cleaning Data with PySpark course to gain practical experience and master these skills.

Machine learning with MLlib

PySpark can also be used to develop and deploy machine learning models, thanks to its MLlib library. You should learn to perform feature engineering, model evaluation, and hyperparameter tuning using this library. DataCamp's Machine Learning with PySpark course provides a comprehensive introduction.

4. Learn PySpark by doing

Taking courses and practicing exercises using PySpark is an excellent way to get familiar with the technology. However, to get proficient in PySpark, you need to solve challenging and skill-building problems, such as those you’ll face on real-world projects. You can start by taking simple data analysis tasks and gradually move to more complex challenges.

Here are some ways to practice your skills:

- Participate in webinars and code-alongs. Check for upcoming DataCamp webinars and online events where you can follow along with PySpark tutorials and code examples. This will help you reinforce your understanding of concepts and gain familiarity with coding patterns.

- Develop independent projects. Identify datasets that interest you and apply your PySpark skills to analyze them. This could involve anything from analyzing social media trends to exploring financial market data.

- Contribute to open-source projects. Contribute to PySpark projects on platforms like GitHub to gain experience collaborating with others and working on real-world projects.

- Build a personal blog. Write about your PySpark projects, share your insights, and contribute to the PySpark community by creating a personal blog.

5. Build a portfolio of projects

As you keep moving in your PySpark learning journey, you will complete different projects. To showcase your PySpark skills and experience to potential employers, you should compile them into a portfolio. This portfolio should reflect your skills and interests and be tailored to the career or industry you're interested in.

Try to make your projects original and showcase your problem-solving skills. Include projects that demonstrate your proficiency in various aspects of PySpark, such as data wrangling, machine learning, and data visualization. Document your projects, providing context, methodology, code, and results. You can use DataLab, which is an online IDE that allows you to write code, analyze data collaboratively, and share your insights.

6. Keep challenging yourself

Learning PySpark is a continuous journey. Technology constantly evolves, and new features and applications are being developed regularly. PySpark is no exception.

Once you’ve mastered the fundamentals, you can look for more challenging tasks and projects such as performance optimization or graph processing. Specialize in the areas that are most relevant to your career goals and interests.

Keep up to date with the new developments and learn how to apply them to your current projects. Keep practicing, seek out new challenges and opportunities, and embrace the idea of making mistakes as a way to learn.

An Example PySpark Learning Plan

Even though each person has their own way of learning, it’s always a good idea to have a plan or guide to follow for learning a new tool. We’ve created a potential learning plan outlining where to focus your time and efforts if you’re just starting with PySpark.

Month 1: PySpark fundamentals

- Core concepts. Install PySpark and explore its syntax. Understand the core concepts of Apache Spark, its architecture, and how it enables distributed data processing.

- PySpark basics. Learn to set up your PySpark environment, create SparkContexts and SparkSessions, and explore basic data structures like RDDs and DataFrames.

- Data manipulation. Master essential PySpark operations for data manipulation, including filtering, sorting, grouping, aggregating, and joining datasets. You can complete the Cleaning Orders with PySpark project.

Month 2: PySpark for Data Analysis and SQL

- Working with different data formats. Learn to read and write data in various formats, including CSV, JSON, Parquet, and Avro, using PySpark.

- Spark SQL. Learn to use Spark SQL for querying and analyzing data with familiar SQL syntax. Explore concepts like DataFrames, Datasets, and SQL functions.

- Data visualization and feature engineering. Explore data visualization techniques in PySpark using libraries like Matplotlib and Seaborn to gain insights from your data. Learn how to wrangle data and perform feature engineering by taking the Feature Engineering with PySpark course.

Month 3-4: PySpark for Machine Learning and Advanced Topics

- MLlib introduction. Get started with PySpark's MLlib library for machine learning. Explore basic algorithms for classification, regression, and clustering. You can use the Machine Learning with PySpark course.

- Building ML pipelines. Learn to build and deploy machine learning pipelines in PySpark for efficient model training and evaluation.

- Develop a project. Work on developing a Demand Forecasting Model.

- Advanced concepts. Explore techniques for optimizing PySpark applications, including data partitioning, caching, and performance tuning.

Five Tips for Learning PySpark

I imagine that by now, you are ready to jump into learning PySpark and get your hands on a large dataset to practice your new skill. But before you do, let me highlight these tips that will help you navigate the path to PySpark proficiency.

1. Narrow your scope

PySpark is a tool that can have many different applications. To keep focus and achieve your goal, you should identify your area of interest. Do you want to focus on data analysis, data engineering, or machine learning? Taking a focused approach can help you gain the most relevant aspects and knowledge of PySpark for your chosen path.

2. Practice frequently and constantly

Consistency is key to mastering any new skill. You should set aside dedicated time to practice PySpark. Just a short amount of time every day will do. You don’t need to tackle complex concepts every day. You can review what you’ve learned or revisit a simple exercise to refactor it. Regular practice will reinforce your understanding of concepts and build your confidence in applying them.

3. Work on real projects

This is one of the key tips, and you will read it several times in this guide. Practicing exercises is great for gaining confidence. However, applying your PySpark skills to real-world projects is what will make you excel at it. Look for datasets that interest you and use PySpark to analyze them, extract insights, and solve problems.

Start with simple projects and questions and gradually take on more complex ones. This can range from reading and cleaning a real dataset to writing a complex query that performs aggregations or building a model that predicts the price of a house.

4. Engage in a community

Learning is often more effective when done collaboratively. Sharing your experiences and learning from others can accelerate your progress and provide valuable insights.

To exchange knowledge, ideas, and questions, you can join groups related to PySpark and attend meet-ups and conferences. Databricks, the company founded by the creators of Spark, has an active community forum where you can engage in discussion and ask questions about PySpark. Moreover, the Spark Summit, organized by Databricks, is the largest Spark conference.

5. Make mistakes

As with any other technology, learning PySpark is an iterative process. And learning from your mistakes is an essential part of the learning process. Don't be afraid to experiment, try different approaches, and learn from your errors. Try different functions and alternatives for aggregating the data, perform subqueries or nested queries, and observe the fast response that PySpark gives.

Best Ways to Learn PySpark

Let’s cover a few efficient methods of learning PySpark.

Take online courses

Online courses offer an excellent way to learn PySpark at your own speed. DataCamp offers PySpark courses for all levels, which together make up the Big Data with PySpark track. The courses cover everything from introductory concepts to machine learning topics and are designed with hands-on exercises.

Here are some of the PySpark-related courses on DataCamp:

- Feature Engineering with PySpark

- Machine Learning with PySpark

- Building Recommendation Engines with PySpark

- Big Data Fundamentals with PySpark

Follow online tutorials

Tutorials are another great way to learn PySpark, especially if you are new to the technology. They contain step-by-step instructions on how to perform specific tasks or understand certain concepts.

Check out PySpark cheat sheets

Cheat sheets come in handy when you need a quick reference guide on PySpark topics.

Complete PySpark projects

Learning PySpark requires hands-on practice. Completing projects confronts you with challenges that let you apply all the skills you’ve learned. As you start to take on more complex tasks, you’ll need to find solutions and research new alternatives to get the results you want, boosting your PySpark expertise.

Check out the PySpark projects available at DataCamp. They let you apply your data manipulation and machine learning model-building skills with PySpark.

Discover PySpark through books

Books are an excellent resource for learning PySpark. They offer in-depth knowledge and insights from experts alongside code snippets and explanations. Here are some of the most popular books on PySpark:

- Learning Spark, 2nd Edition, Jules S. Damji et al.

- PySpark Cookbook, Denny Lee

- Spark for Python Developers

Careers in PySpark

The demand for PySpark skills has increased across several data-related roles, from data analysts to big data engineers. If you’re getting ready for an interview, consider reviewing common PySpark interview questions.

Big data engineer

As a big data engineer, you are the architect of big data solutions, responsible for designing, building, and maintaining the infrastructure that handles large datasets. You will rely on PySpark to create scalable data pipelines, ensuring efficient data ingestion, processing, and storage.

You will require a strong understanding of distributed computing and cloud platforms and expertise in data warehousing and ETL processes.

- Key skills:

  - Proficiency in Python and PySpark, Java, and Scala

  - Understanding of data structures and algorithms

  - Proficiency in both SQL and NoSQL

  - Expertise in ETL processes and data pipeline building

  - Understanding of distributed systems

- Key tools used:

  - Apache Spark, Hadoop Ecosystem

  - Data Warehousing Tools (e.g. Snowflake, Redshift, or BigQuery)

  - Cloud Platforms (e.g. AWS, GCP, Databricks)

  - Workflow Orchestration Tools (e.g. Apache Airflow, Apache Kafka)

Data scientist

As a data scientist, you will use PySpark capabilities to perform data wrangling and manipulation and develop and deploy machine learning models. Your statistical knowledge and programming skills will help you develop models to contribute to the decision-making process.

- Key skills:

  - Strong knowledge of Python, PySpark, and SQL

  - Understanding of machine learning and AI concepts

  - Proficiency in statistical analysis, quantitative analytics, and predictive modeling

  - Data visualization and reporting techniques

  - Effective communication and presentation skills

- Key tools used:

  - Data analysis tools (e.g., pandas, NumPy)

  - Machine learning libraries (e.g., Scikit-learn)

  - Data visualization tools (e.g., Matplotlib, Tableau)

  - Big data frameworks (e.g., Airflow, Spark)

  - Command line tools (e.g., Git, Bash)

Machine learning engineer

As a machine learning engineer, you’ll use PySpark to prepare data, build machine learning models, and train and deploy them.

- Key skills:

  - Proficiency in Python, PySpark, and SQL

  - Deep understanding of machine learning algorithms

  - Knowledge of deep learning frameworks

  - Understanding of data structures, data modeling, and software architecture

- Key tools used:

  - Machine learning libraries and algorithms (e.g., Scikit-learn, TensorFlow)

  - Data science libraries (e.g., Pandas, NumPy)

  - Cloud platforms (e.g., AWS, Google Cloud Platform)

  - Version control systems (e.g., Git)

  - Deep learning frameworks (e.g., TensorFlow, Keras, PyTorch)

Data analyst

As a data analyst, you’ll use PySpark to explore and analyze large datasets, identify trends, and communicate your findings through reports and visualizations.

- Key skills:

  - Proficiency in Python, PySpark, and SQL

  - Strong knowledge of statistical analysis

  - Experience with business intelligence tools (e.g., Tableau, Power BI)

  - Understanding of data collection and data cleaning techniques

- Key tools used:

  - Data analysis tools (e.g., pandas, NumPy)

  - Business intelligence data tools (e.g., Tableau, Power BI)

  - SQL databases (e.g., MySQL, PostgreSQL)

| Role | What you do | Your key skills | Tools you use |
| --- | --- | --- | --- |
| Big Data Engineer | Designs, builds, and maintains the infrastructure for handling large datasets. | Python, PySpark, Java, and Scala, data structures, SQL and NoSQL, ETL, distributed systems | Apache Spark, Hadoop, Data Warehousing Tools, Cloud Platforms, Workflow Orchestration Tools |
| Data Scientist | Uncovers hidden patterns and extracts valuable insights from data. Applies statistical knowledge and programming skills to build models that aid in decision-making. | Python, PySpark, SQL, machine learning, AI concepts, statistical analysis, predictive modeling, data visualization, effective communication | Pandas, NumPy, Scikit-learn, Keras, Matplotlib, plotly, Airflow, Spark, Git |
| Machine Learning Engineer | Designs, develops, and deploys machine learning systems to make predictions using company data. | Python, PySpark, and SQL, machine learning algorithms, deep learning, data structures, data modeling, and software architecture | Scikit-learn, TensorFlow, Keras, PyTorch, Pandas, NumPy, AWS, Google Cloud Platform, Git |
| Data Analyst | Bridges the gap between raw data and actionable business insights. Communicate findings through reports and visualizations. | Python, PySpark, and SQL, statistical analysis, data visualization, data collection and data cleaning techniques | Pandas, NumPy, Tableau, PowerBI, MySQL, PostgreSQL |

How to Find a Job That Uses PySpark

A degree can be a great asset when starting a career that uses PySpark, but it's not the only pathway. Nowadays, an increasing number of professionals are starting to work in data-related roles through alternative routes, including transitioning from other fields. With dedication, consistent learning, and a proactive approach, you can land your dream job that uses PySpark.

Keep learning about the field

Stay updated with the latest developments in PySpark. Follow influential professionals who are involved with PySpark on social media, read PySpark-related blogs, and listen to PySpark-related podcasts.

For example, Apache Spark was created by Matei Zaharia, who is also CTO at Databricks, a platform built on top of Apache Spark. By following people like him, you'll gain insights into trending topics, emerging technologies, and the future direction of PySpark.

You should also check out industry events, whether it’s webinars at DataCamp, data science and AI conferences, or networking events.

Develop a portfolio

You need to stand out from other candidates. One good way to do it is to build a strong portfolio that showcases your skills and completed projects. You can leave a good impression on hiring managers by addressing real-world challenges.

Your portfolio should contain diverse projects that reflect your PySpark expertise and its various applications. Check this guide on how to craft an impressive data science portfolio.

Develop an effective resume

In recent years, there has been an increase in people transitioning to data science and data-related roles. Hiring managers have to review hundreds of resumes and distinguish great candidates. In many cases, your resume also passes through an Applicant Tracking System (ATS), automated software used by many companies to review resumes and discard those that don't meet specific criteria. So, you should build a great resume to impress both the ATS and recruiters.

Prepare for the interview

If your resume makes it through the selection process and you get noticed by the hiring manager, the next step is to prepare for a technical interview. To be ready, you can check this article on top questions asked for PySpark interviews.

Conclusion

Learning PySpark can open doors for better opportunities and career outcomes. The path to learning PySpark is rewarding but requires consistency and hands-on practice. Experimenting and solving challenges using this tool can accelerate your learning process and provide you with real-world examples to showcase when you are looking for jobs.

FAQs

What are the main features of PySpark?

PySpark provides a user-friendly Python API for leveraging Spark, enabling speed, scalability, and support for SQL, machine learning, and stream processing for large datasets.

Why is the demand for PySpark skills growing?

PySpark's ease of use, scalability, and versatility for big data processing and machine learning are driving the growing demand for these skills.

What are the key points to consider when learning PySpark?

Focus on Python fundamentals, Spark's core concepts, and data manipulation techniques, and explore advanced topics like Spark SQL and MLlib.

What are some ways to learn PySpark?

Take online courses and follow tutorials, work on real-world datasets, use cheat sheets, and discover PySpark through books.

What are some of the roles that use PySpark?

Some of the roles that use PySpark are: big data engineer, machine learning engineer, data scientist, and data analyst.