
PySpark DataFrames are central when you build scalable pipelines in Spark. One key thing to remember is that DataFrames are immutable: once you have one, you can't change it directly, and every transformation gives you a new DataFrame. This is where PySpark withColumn() comes in. It helps you add, update, or alter columns, but since DataFrames don't change in place, you always assign the result to a variable, often reusing the same name.

In this tutorial, I’ll walk you through how to use withColumn() to shape and tweak your datasets, whether you’re building features, cleaning up types, or adding logic.

What is withColumn() in PySpark?

In a nutshell, withColumn() returns a fresh DataFrame with an added or replaced column. Because DataFrames don't mutate, you must assign that return value: df = df.withColumn(...).

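Under the hood, every call to withColumn() adds a projection to the logical plan. That's fine if you're doing just one or two, but chaining many (for example, calling it inside a loop) bloats the plan. Spark has to analyze and optimize that growing plan on the driver, which can make jobs noticeably slower, and very long chains can even end in a stack overflow.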

New to PySpark? You can master the fundamentals to handle big data with ease, by learning to process, query, and optimize massive datasets for powerful analytics in our Introduction to PySpark course.

Core Uses of PySpark withColumn()

Let’s walk through the main ways you’ll use withColumn():

Adding a constant column

Say you want to add a fixed date or a flag. Use lit() from pyspark.sql.functions, which wraps a constant Python value in a Column. For instance:

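```python
from pyspark.sql.functions import lit

# lit() turns the Python string into a literal Column (a string column, not a DateType)
df = df.withColumn("ingest_date", lit("2025-07-29"))
```

Creating a column from existing data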

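Maybe you want a derived value: combining strings or computing a total. For strings, use a string function such as concat_ws():

```python
from pyspark.sql.functions import col, concat_ws

# Column + Column means numeric addition in Spark, so join strings with concat_ws()
df = df.withColumn("full_name", concat_ws(" ", col("first_name"), col("last_name")))
```

Arithmetic or expression-based transformations also fit here.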

Overwriting an existing column

If you already have a column and want to change it, withColumn() just replaces it:

```python
df = df.withColumn("age", col("age").cast("integer"))
```

No need to drop and re-add.

Casting data types

Changing a column's type is straightforward:

```python
df = df.withColumn("price", col("price").cast("decimal(10,2)"))
```

I find that this is particularly handy when reading loose JSON or CSV where types arrive as strings.

If you're looking for more on what PySpark is and how to use it, with examples, I recommend our Getting Started with PySpark tutorial.

Conditional Logic and when() Expressions

There are times when you need to do more than simple arithmetic. Maybe you're building a status column based on a score, or flagging entries based on a mix of rules. This is where when() from pyspark.sql.functions steps in. Think of it like an IF statement: chain several when() calls to cover multiple paths, and finish with otherwise() to supply the default value.

Here’s how it looks:

```python
from pyspark.sql.functions import col, when

df = df.withColumn(
    "grade",
    when(col("score") >= 90, "A")
    .when(col("score") >= 80, "B")
    .when(col("score") >= 70, "C")
    .otherwise("F"),
)
```

It reads almost like plain English: "If the score is at least 90, then A. Else if it's at least 80, then B. Keep going… and if none of those match, give it an F." Conditions are checked in order, so the first match wins. Spark compiles the whole chain into a single CASE WHEN expression that the optimizer understands. No Python loops, no row-by-row apply() calls, just one native expression in the execution plan.

This comes in handy when you want to avoid switching to SQL or cluttering your code with UDFs.

You can learn how to manipulate data and create machine learning feature sets in Spark using SQL in Python from our Introduction to Spark SQL in Python tutorial.

Transforming Columns with Built-ins and User-Defined Functions

You'll often need to format or restructure columns: convert strings to uppercase, split them into parts, concatenate values, and so on. PySpark has a rich library of built-in functions that work directly inside withColumn().

Here’s an example:

```python
from pyspark.sql.functions import col, concat_ws, split, upper

df = df.withColumn("full_caps", upper(col("name")))
df = df.withColumn("city_state", concat_ws(", ", col("city"), col("state")))
# split() returns an array column; getItem(0) takes its first element
df = df.withColumn("first_word", split(col("description"), " ").getItem(0))
```

Now, built-ins are great: fast, native, and optimized. But sometimes you've got a one-off rule that doesn't fit in any box. That's when user-defined functions (UDFs) come in.

Using a UDF

Let’s say you want to compute the length of a string and label it:

```python
from pyspark.sql.functions import col, udf
from pyspark.sql.types import StringType

def label_length(x):
    # Null values arrive as None, and len(None) would raise, so guard first
    if x is None:
        return None
    return "short" if len(x) < 5 else "long"

label_udf = udf(label_length, StringType())

df = df.withColumn("name_length_label", label_udf(col("name")))
```

Simple enough. But be cautious: UDFs come with overhead. Spark has to serialize rows out of the JVM, send them to a Python worker process, run your function, and ship the results back, and the optimizer can't see inside the function. That's fine when needed, but for high-volume tasks, prefer built-ins or SQL expressions where possible.

Learn how to create, optimize, and use PySpark UDFs, including Pandas UDFs, to handle custom data transformations efficiently and improve Spark performance from our How to Use PySpark UDFs and Pandas UDFs Effectively tutorial.

Performance Considerations and Advanced Practices

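At some point, every PySpark user runs into this: you keep stacking withColumn() calls and your pipeline slows to a crawl. The reason? Each call adds another projection to the logical plan, and because Spark analyzes the plan every time you create a new DataFrame, a long chain means repeatedly analyzing and optimizing an ever-larger plan on the driver.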

If you’re adding just one or two columns, that’s fine. But if you're chaining five, six, or more, you should start thinking differently.

Use select() when adding many columns

Rather than calling withColumn() repeatedly, build a new column list using select():

```python
df = df.select(
    "*",
    (col("salary") * 0.1).alias("bonus"),
    (col("age") + 5).alias("age_plus_five"),
)
```

This approach adds both columns in a single projection, so the plan grows by one step instead of one per column.

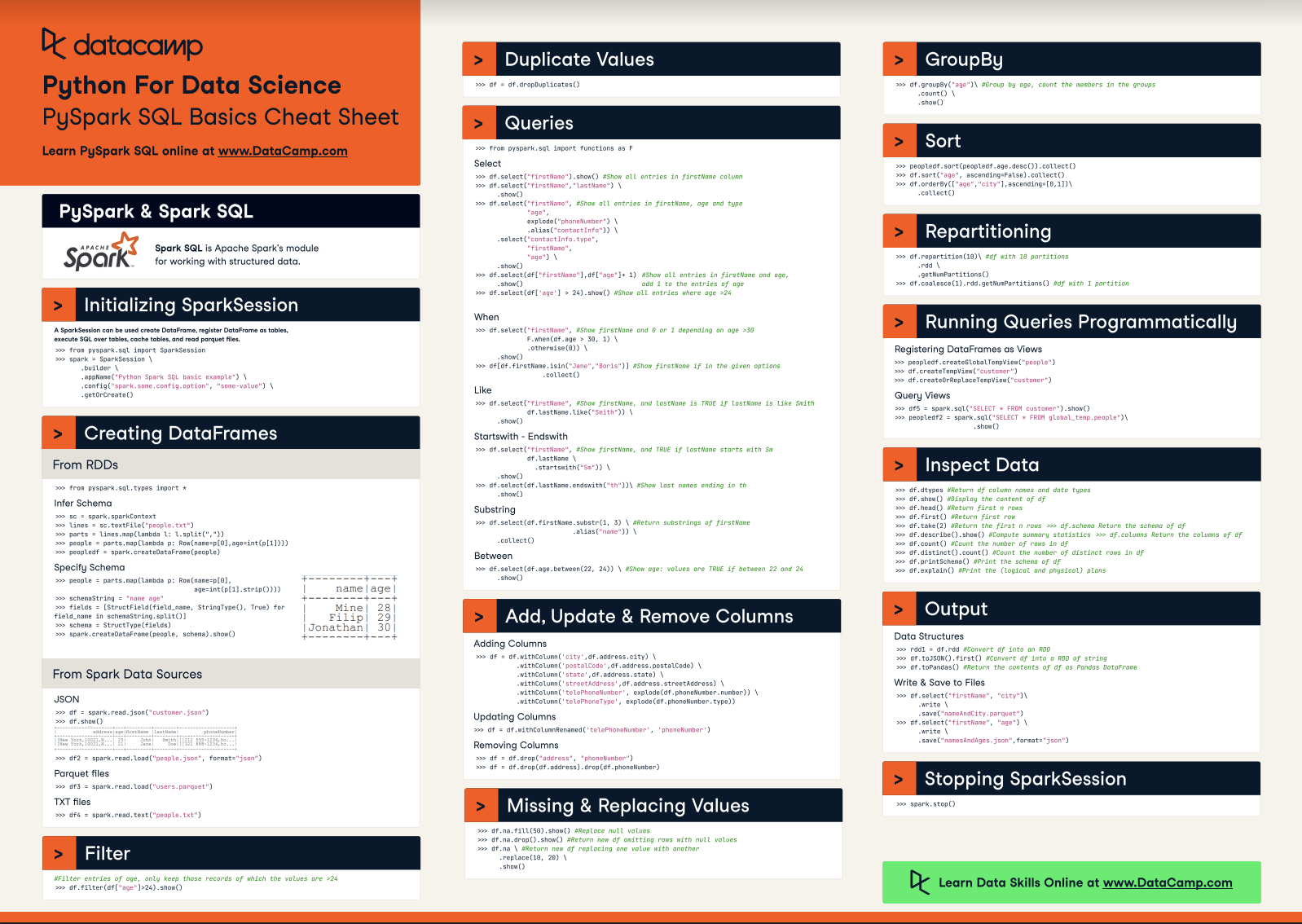

Learn more about select() and other methods in our PySpark Cheat Sheet: Spark in Python tutorial.

What about withColumns()?

Introduced in Spark 3.3, withColumns() lets you add or replace multiple columns in one pass instead of repeated calls. It takes a dictionary that maps column names to Column expressions:

```python
df = df.withColumns({
    "bonus": col("salary") * 0.1,
    "age_plus_five": col("age") + 5,
})
```

Not everyone's caught up to Spark 3.3+ yet, but if you are, use this. It's cleaner, adds all the columns in a single projection, and avoids the “death by chaining” problem.

Complete PySpark withColumn() Example Walkthrough

Say you’re working on user activity data for a subscription-based platform. Your raw DataFrame looks something like this:

```python
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("withColumn-demo").getOrCreate()

data = [
    ("Alice", "NY", 24, 129.99),
    ("Bob", "CA", 31, 199.95),
    ("Charlie", "TX", 45, 0.0),
    ("Diana", "WA", 17, 19.99),
]

columns = ["name", "state", "age", "purchase_amount"]

df = spark.createDataFrame(data, columns)
```

You've got names, states, ages, and how much they've spent. Pretty basic. But in the real world, that's never enough. So here's what we'll do next:

- Add a constant column for ingestion date.

- Create a new column showing whether the user is an adult.

- Format purchase_amount to two decimal places.

- Categorize users by spend level.

- Apply a custom function to label users.

- Use withColumns() to wrap up extra values in a cleaner way.

Preparing for your next interview? The Top 36 PySpark Interview Questions and Answers for 2025 article provides a comprehensive guide to PySpark interview questions and answers, covering topics from foundational concepts to advanced techniques and optimization strategies.

Step 1: Add a constant ingestion date

It’s good practice to track when the data is entered into your system.

```python
from pyspark.sql.functions import lit

df = df.withColumn("ingest_date", lit("2025-07-29"))
```

Step 2: Flag adults vs. minors

You could’ve used just col("age") >= 18, but wrapping it with when() gives you full control if the logic ever gets more complicated.

```python
from pyspark.sql.functions import col, when

df = df.withColumn(
    "is_adult",
    when(col("age") >= 18, True).otherwise(False),
)
```

Step 3: Format purchase_amount

Casting types is one of the most frequent cleanup jobs you'll do, especially when reading from CSV or JSON.

```python
df = df.withColumn("purchase_amount", col("purchase_amount").cast("decimal(10,2)"))
```

Step 4: Categorize spending level

Let’s say you want three groups: “none”, “low”, and “high”.

```python
df = df.withColumn(
    "spend_category",
    when(col("purchase_amount") == 0, "none")
    .when(col("purchase_amount") < 100, "low")
    .otherwise("high"),
)
```

This helps you segment users without running a separate query.

Step 5: Label users using a UDF

Now for a made-up rule. Let’s say you label a person based on their name length.

```python
from pyspark.sql.functions import udf
from pyspark.sql.types import StringType

def user_label(name):
    return "simple" if len(name) <= 4 else "complex"

label_udf = udf(user_label, StringType())

df = df.withColumn("label", label_udf(col("name")))
```

Step 6: Add multiple extra columns in one go

Maybe you want a few more columns: age in months and a welcome message. (This step needs Spark 3.3+ for withColumns().)

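```python
from pyspark.sql.functions import concat

df = df.withColumns({
    "age_in_months": col("age") * 12,
    # Join strings with concat(); + would attempt numeric addition
    "welcome_msg": concat(col("name"), lit(", welcome aboard!")),
})
```

Much cleaner than calling withColumn() twice.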

Here’s how the final DataFrame looks when you show it:

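```python
df.show(truncate=False)
```

```
+-------+-----+---+---------------+-----------+--------+--------------+-------+-------------+------------------------+
|name   |state|age|purchase_amount|ingest_date|is_adult|spend_category|label  |age_in_months|welcome_msg             |
+-------+-----+---+---------------+-----------+--------+--------------+-------+-------------+------------------------+
|Alice  |NY   |24 |129.99         |2025-07-29 |true    |high          |complex|288          |Alice, welcome aboard!  |
|Bob    |CA   |31 |199.95         |2025-07-29 |true    |high          |simple |372          |Bob, welcome aboard!    |
|Charlie|TX   |45 |0.00           |2025-07-29 |true    |none          |complex|540          |Charlie, welcome aboard!|
|Diana  |WA   |17 |19.99          |2025-07-29 |false   |low           |complex|204          |Diana, welcome aboard!  |
+-------+-----+---+---------------+-----------+--------+--------------+-------+-------------+------------------------+
```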

This kind of transformation stack is common in feature engineering, reporting, or cleaning up third-party feeds.

Learn the fundamentals of working with big data with PySpark from our Big Data Fundamentals with PySpark course.

withColumn() Best Practices and Pitfalls

PySpark's withColumn() might look simple, but it can trip up even seasoned engineers if you're not careful. Here’s the kind of stuff that can quietly mess up your pipeline, along with a few habits that can save you from nasty surprises.

Always reassign the result

This one's basic, but it still catches people off guard: withColumn() doesn't modify your original DataFrame. It gives you a new one. If you forget to reassign it, your change is gone.

```python
df.withColumn("new_col", lit(1))       # Result is thrown away; df is unchanged
df = df.withColumn("new_col", lit(1))  # This works
```

Watch out for accidental overwrites

Let’s say your DataFrame has a column named status. You run this:

```python
df = df.withColumn("Status", lit("Active"))
```

Looks harmless, right? But Spark resolves column names case-insensitively by default (spark.sql.caseSensitive is false). That means "Status" matches your existing status column, and withColumn() replaces it, possibly without you noticing.

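One fix is to always check df.columns before and after. Or, if your pipeline can tolerate it, turn on case sensitivity (Spark's own description of this setting discourages enabling it, so treat it as a last resort):

```python
spark.conf.set("spark.sql.caseSensitive", "true")
```

Don't use Python literals in expressions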

This one’s easy to forget. When adding constants, avoid raw Python values. Always wrap them with lit().

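```python
df = df.withColumn("region", "US")       # Bad: raises an error
df = df.withColumn("region", lit("US"))  # Good
```

Why? Because withColumn() expects a Column expression, not a raw value. Pass a plain string or number and PySpark raises an error as soon as you make the call; the exact exception type and message vary by version. (Functions such as when() and otherwise() do accept plain Python values and wrap them for you, which is why the grading example didn't need lit().)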

Handle exceptions outside of withColumn()

People sometimes get creative and wrap entire withColumn() blocks inside try/except. It’s better to isolate risky parts (like UDFs or data reads) and catch exceptions there. Keep your transformation layer clean and predictable.

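```python
from pyspark.sql.functions import col, udf
from pyspark.sql.types import StringType

def to_lower(x):
    if x is None:        # Expected gap in the data: handle it explicitly
        return None
    if not x.strip():    # Truly bad input: raise so the job fails loudly
        raise ValueError("Empty input")
    return x.lower()

to_lower_udf = udf(to_lower, StringType())
df = df.withColumn("name_lower", to_lower_udf(col("name")))
```

Wrapping the def (or the withColumn() call) in try/except wouldn't catch that ValueError anyway: the function body only runs later, on the executors, when an action triggers the job. Let Spark fail early; don't hide errors behind nested try blocks.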

Learn more about exceptions in Python from our Exception & Error Handling in Python tutorial.

Favor built-ins over UDFs

Sure, UDFs give you power. But they come with trade-offs: slower performance, harder debugging, and less optimization. If there’s a built-in function that does the job, use it.

This:

```python
df = df.withColumn("upper_name", upper(col("name")))
```

is typically much faster than this:

```python
df = df.withColumn(
    "upper_name",
    udf(lambda x: x.upper() if x is not None else None, StringType())(col("name")),
)
```

When Not to Use withColumn()

Despite how flexible withColumn() is, there are moments when it's not the right tool for the job.

You're reshaping a lot of columns at once

If you find yourself calling withColumn() 10 times in a row, it's time to switch gears. Use select() instead and write your transformations as part of a new projection.

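```python
df = df.select(
    col("name"),
    col("age"),
    (col("salary") * 0.15).alias("bonus"),
    (col("score") + 10).alias("adjusted_score"),
)
```

It's clearer, it performs better, and it makes Spark's optimizer work for you instead of against you. Just remember that select() returns only the columns you list; add "*" if you want to keep the rest.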

You want to write SQL-style logic

If your team leans heavily on SQL, and you’ve already registered the DataFrame as a temporary view, it’s often cleaner to just run a SQL query.

```python
df.createOrReplaceTempView("users")

df2 = spark.sql("""
    SELECT name, age,
           CASE WHEN age >= 18 THEN true ELSE false END AS is_adult
    FROM users
""")
```

This can be easier for SQL-savvy analysts or teams working across both Spark and traditional databases.

Build your SQL skills with interactive courses, tracks, and projects curated by real-world experts using our SQL Courses.

You're already on Spark 3.3+

If you're using Spark 3.3 or newer and need to add multiple columns, withColumns() is your friend. It's not just convenient: it adds all the columns in a single projection instead of one per call, which keeps the logical plan small.

Learn to implement distributed data management and machine learning in Spark using the PySpark package from our Foundations of PySpark course.

Conclusion

PySpark’s withColumn() function is one of the most versatile tools in your data transformation arsenal, giving you the ability to add, modify, and engineer features directly within a DataFrame-centric workflow. From casting types and injecting constants to embedding complex logic with conditionals and UDFs, withColumn() helps you reshape messy data into production-ready pipelines.

But with that power comes responsibility. Overusing withColumn() in long chains can silently degrade performance by bloating the logical plan, making your Spark jobs harder to optimize and debug. That’s why knowing when to reach for select(), withColumns(), or even SQL can mean the difference between a job that crawls and one that scales.

With Spark constantly evolving, especially with features like withColumns() in Spark 3.3+, understanding the internals and performance trade-offs of each method is key to writing cleaner, faster, and more maintainable code.

Master the techniques behind large-scale column transformations, avoid the pitfalls of plan inflation, and learn how the pros streamline feature pipelines in our Feature Engineering with PySpark course.

PySpark withColumn() FAQs

Why is my Spark job slow when I use withColumn() a lot?

When you chain multiple withColumn() calls, Spark adds each one as a separate step in the logical execution plan. Over time, this can turn into a bloated plan that’s harder to optimize and slower to run. Instead of stacking ten withColumn() calls, try building your new columns inside a single select() or use withColumns() to add several columns in one go.

Can I use withColumn() to remove a column?

No, withColumn() only adds or replaces columns, it doesn’t delete them. If you want to drop a column, use drop() instead. You can also use select() to keep only the columns you need.

Why do I get an error when I try to use a string or number in withColumn()?

That usually happens when you pass a raw Python value instead of wrapping it with lit(). withColumn() expects a Spark Column expression. Here's the right way:

```python
from pyspark.sql.functions import lit

df = df.withColumn("new_col", lit(42))
```