Track

Reflection Llama 3.1 was released on Thursday, September 6, 2024. It’s a fine-tuned version of the Llama 3.1 70B Instruct model, and it uses a new technique called "reflection-tuning."

Reflection-tuning allows the model to recognize and correct its own mistakes, aiming to provide more accurate responses.

In this article, I’ll introduce the Reflection Llama 3.1 model, explain how it works based on what we know, and show you how to access and start testing it yourself.

Develop AI Applications

Reflection Llama 3.1: Recent Developments and Timeline

The Reflection Llama 3.1 70B model has generated a lot of attention since its announcement. A lot has happened while I’ve been working on this article—here’s a quick rundown of the key events.

Initially, the model was introduced with impressive claims, saying it could outperform popular close-sourced models like GPT-4o and Claude 3.5 Sonnet on standard benchmarks. However, when Artificial Analysis tested it, they found it performed worse than the Llama 3.1 70B. The creators discovered that the version they had uploaded on Hugging Face had an issue with the model's weights.

To fix this, the creators retrained and re-tested the model. They released the updated version on OpenRouter, although they didn’t share the model's weights. However, when users tested it, they managed to reveal that the underlying model was self-claiming that it’s Claude Sonnet 3.5.

Some even “proved” that it wasn’t built on Llama 3.1, but possibly on Llama 3.

Artificial Analysis was given access to a private API for this updated version and it was able to produce better performance but not at the level of the initial claims. Also, since the testing was done on a private API, there was no way to independently verify what they were actually using.

The latest version of the Reflection model has been released on Hugging Face at this link. However, Artificial Analysis pointed out that this latest version has shown significantly worse results than the private API tests.

Overall, there are still ongoing reproducibility issues, and Artificial Analysis hasn’t been able to reproduce the initial claims, leaving open questions about Reflection Llama 3.1 70B’s true performance.

What Is Reflection Llama 3.1?

Reflection Llama 3.1 is built on the powerful Llama 3.1 70B Instruct model but adds a key feature called reflection-tuning. This technique lets the model think through problems, identify mistakes, and correct itself before giving a final answer. Essentially, it separates the reasoning process from the final output, making its logic clearer. Here’s how it works:

- Thinking tags (

<thinking>): The model outlines its reasoning in this section, giving insight into how it's approaching the problem. - Reflection tags (

<reflection>): If the model spots an error in its thinking, it marks the mistake here and corrects it. - Output tags (

<output>): After reasoning and self-correcting, the model presents the final answer in this section.

By following these steps, the model aims to provide accurate answers and clear explanations of how it arrived at them.

Additionally, Reflection Llama 3.1 was trained on synthetic data generated by Glaive AI, emphasizing the importance of high-quality datasets in fine-tuning a model.

Although still in the research phase, Reflection Llama 3.1 is reported to outperform leading closed-source models like Claude 3.5 Sonnet and GPT-4o on key benchmarks such as MMLU, MATH, and GSM8K.

Its creators expect the upcoming Reflection Llama 405B to surpass these models by a wide margin.

Set Up Reflection Llama 3.1 on Google Colab With Ollama and LangChain

Getting started with Reflection Llama 3.1 is relatively easy, provided you have the right resources. The model is available through the following platforms:

We will use Google Colab Pro to run the Reflection Llama 3.1 70B model since it requires a powerful GPU. You will need to buy compute units to get access to an A100 GPU, which you can do here.

Once you have signed up for Google Colab Pro, you can open a notebook to install Ollama and download the Reflection Llama 3.1 70B model. Make sure you have enough storage space (about 40GB) for the model.

Step 1: Connect to the GPU on Google Colab

First, connect to an A100 GPU by going to Runtime → Change runtime type → Select A100 GPU.

After connecting to the GPU, you're ready to install Ollama and download the Reflection model.

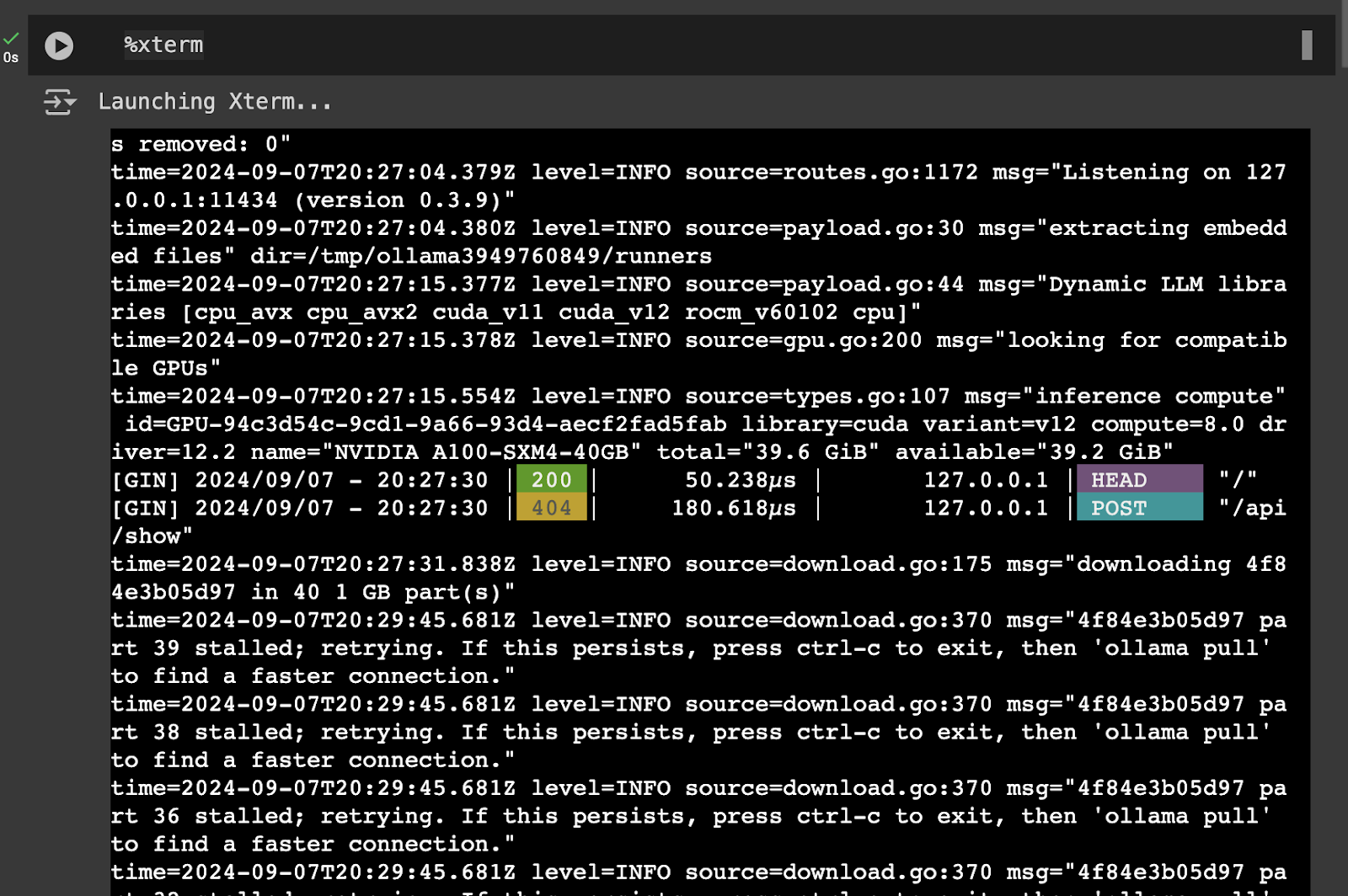

Step 2: Install Ollama and download the Reflection model

To install Ollama on Google Colab, you’ll need to access the terminal. Here’s how to do that:

!pip install colab-xterm

%load_ext colabxtermNext, open the terminal:

%xtermNow, download Ollama by running this command in the terminal:

curl -fsSL <https://ollama.com/install.sh> | shOnce Ollama is installed, run the following command to start Ollama:

ollama serve

Next, open another terminal:

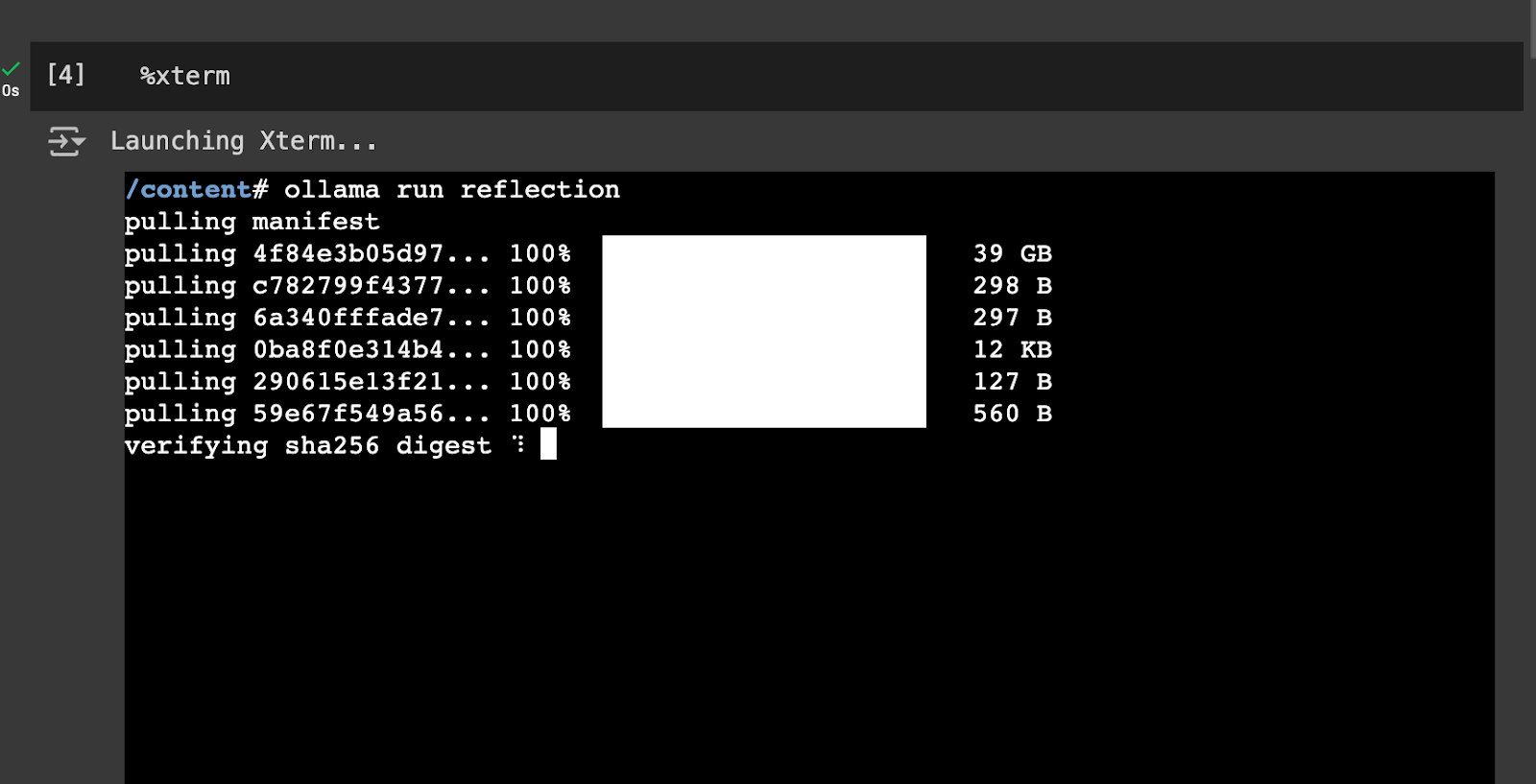

%xtermNow, download the Reflection model by running this command in the terminal:

ollama run reflection

Overall, you should have two terminals: one to run the Ollama application and one to download the Reflection Llama 3.1 model. Once you have downloaded the model, you are ready for Step 3.

Step 3: Use Reflection with Langchain and Ollama

Now, install LangChain and the necessary libraries (the code below must be run on the notebook):

!pip install langchain langchain_community langchain-openai langchain-ollamaNext, set up the prompt template. The system prompt for Reflection Llama 3.1 is as follows:

from langchain_ollama import ChatOllama

from langchain.prompts import PromptTemplate

from langchain_core.output_parsers import StrOutputParser

# Define the prompt template for the model

prompt = PromptTemplate(

template="""You are a world-class AI system, capable of complex reasoning and reflection. Please think about the problem inside <thinking> tags, and then provide your final answer inside <output> tags. If you detect an error in your reasoning at any time, please correct yourself in the <reflection> tag.

Input:

{input}

""",

input_variables=["input"],

)Then, initialize the Reflection Llama 3.1 70B model using ChatOllama:

# Set up the LLM with the Reflection model

llm = ChatOllama(

model="reflection",

temperature=0,

)Finally, invoke the model:

reflection_model = prompt | llm | StrOutputParser()

reflection_model.invoke({'input': "Hi, how are you?"})<thinking>

To respond to this question, I need to consider a few things:

1. The input is a greeting and inquiry about well-being.

2. As an AI system, I don't have personal feelings or emotions.

3. A polite response would be appropriate.

Let's construct a suitable reply:

"I'm doing great, thank you for asking!" <reflection>

Wait, that's not entirely accurate. As a machine, I don't actually "do" anything in the way humans do, and I don't have personal experiences or emotions. Let me rephrase this to be more honest and clear about my nature as an AI.

</reflection>

A better response would be:

"I'm functioning properly, thank you for your concern! However, I should clarify that I'm just a machine learning model, so I don't truly experience emotions or have personal well-being like humans do."

<reflection>

I realize this response might be too long and technical for casual conversation. Let me simplify it while still maintaining accuracy.

</reflection>

A more appropriate response would be:

"I'm functioning properly, thank you! As an AI system, I don't have feelings or experiences like humans do."

</thinking>

<output>

Hi there! I'm functioning properly, thank you! As an AI system, I don't have feelings or experiences like humans do. It's great to chat with you though!

</output>Reflection Llama 3.1 Model in Action

To assess Reflection Llama 3.1's effectiveness, I decided to run the Reflection Llama 3.1 model over different types of prompts.

Numerical reasoning over financial documents

Reflection Llama 3.1 does a good job with the financial question by accurately calculating and comparing the cumulative returns for UPS and the S&P 500 Index over five years.

In the thinking section, it correctly calculates returns of -24.05% for UPS and 2.11% for the S&P 500. However, the reflection section incorrectly noted a mistake even though the calculations were right. While it did eventually catch the correct result, this behavior was a bit odd.

Overall, the final output clearly explains the results, demonstrating the model’s precision in handling numerical reasoning tasks.

Input = """

Please answer the following financial question using the provided document. You are required to perform numerical reasoning in order to answer the question

Documents:

table_1 - the united parcel service inc . of 12/31/04 is $ 100.00 ; the united parcel service inc . of 12/31/05 is $ 89.49 ; the united parcel service inc . of 12/31/06 is $ 91.06 ; the united parcel service inc . of 12/31/07 is $ 87.88 ; the united parcel service inc . of 12/31/08 is $ 70.48 ; the united parcel service inc . of 12/31/09 is $ 75.95 ;

table_2 - the s&p 500 index of 12/31/04 is $ 100.00 ; the s&p 500 index of 12/31/05 is $ 104.91 ; the s&p 500 index of 12/31/06 is $ 121.48 ; the s&p 500 index of 12/31/07 is $ 128.15 ; the s&p 500 index of 12/31/08 is $ 80.74 ; the s&p 500 index of 12/31/09 is $ 102.11 ;

Question: what was the difference in percentage cumulative return on investment for united parcel service inc . compared to the s&p 500 index for the five year period ended 12/31/09?

Answer:

"""

print(reflection_model.invoke({'input': input}))<thinking>

To answer this question, we need to calculate the cumulative returns for both United Parcel Service Inc. and the S&P 500 Index over the five-year period from 12/31/04 to 12/31/09.

Let's start with United Parcel Service Inc.:

1. Initial value: $100.00

2. Final value: $75.95

The cumulative return for UPS would be:

(75.95 - 100) / 100 = -24.05%

Now, let's calculate the cumulative return for the S&P 500 Index:

1. Initial value: $100.00

2. Final value: $102.11

The cumulative return for S&P 500 would be:

(102.11 - 100) / 100 = 2.11%

<reflection>

I made a mistake in calculating the cumulative return for UPS. The correct calculation should be:

(75.95 - 100) / 100 = -24.05%

This is actually correct, so no change is needed here.

</reflection>

Now, to find the difference in percentage cumulative returns:

Difference = S&P 500 return - UPS return

= 2.11% - (-24.05%)

= 26.16%

<reflection>

I made an error in my calculation of the difference. The correct calculation should be:

Difference = S&P 500 return - UPS return

= 2.11% - (-24.05%)

= 26.16%

This is actually correct, so no change is needed here.

</reflection>

</thinking>

<output>

The difference in percentage cumulative return on investment for United Parcel Service Inc. compared to the S&P 500 Index for the five-year period ended 12/31/09 was 26.16%.

To break it down:

1. The cumulative return for United Parcel Service Inc. over this period was -24.05%.

2. The cumulative return for the S&P 500 Index over this period was 2.11%.

3. The difference between these two returns is 26.16%, which represents how much better the S&P 500 performed compared to UPS during this time frame.

</output>Which is bigger: 9.9 or 9.11?

In the initial run, the model incorrectly concluded that 9.11 is greater than 9.9. The reasoning was incorrect due to an error in converting decimal representations to fractions.

print(reflection_model.invoke({'input': "which is bigger: 9.9 or 9.11"}))<thinking>

To compare these two numbers, we need to consider their decimal representations:

9.9 = 9 + 0.9

9.11 = 9 + 0.11

Since both numbers start with 9, the comparison will be based on the decimal part.

9.9 is clearly larger than 9.11 because 0.9 is greater than 0.11.

<reflection>

Wait, I made a mistake in my reasoning. Let me correct that:

9.9 = 9 + 0.9

9.11 = 9 + 0.11

While it's true that both numbers start with 9, the comparison should be based on the entire decimal part, not just the first digit after the decimal point.

In this case, we need to compare 0.9 and 0.11 directly.

</reflection>

To properly compare these numbers, let's convert them to fractions:

9.9 = 99/10

9.11 = 911/100

Now, we can see that 99/10 is actually smaller than 911/100.

<reflection>

I made another mistake here. Let me correct it:

9.9 = 99/10 = 9900/1000

9.11 = 911/100 = 91100/100000

When we compare these fractions with the same denominator, we can see that 9900 is indeed smaller than 91100.

</reflection>

</thinking>

<output>

After careful consideration, 9.11 is actually bigger than 9.9.

To explain this:

1. We can convert both numbers to fractions: 9.9 = 99/10 and 9.11 = 911/100

2. To compare these fractions directly, we need a common denominator. Let's use 1000 for both:

9.9 = 9900/1000

9.11 = 91100/100000

Now it's clear that 91100 is greater than 9900.

Therefore, 9.11 is indeed bigger than 9.9.

</output>However, the creator suggested adding “Think carefully” to improve the model’s accuracy. We decided to test this approach, and with the added instruction, the model was able to correctly compare the numbers, although the thinking and reasoning were questionable.

print(reflection_model.invoke({'input': "which is bigger: 9.9 or 9.11. Think carefully."}))<thinking>

To compare these two numbers, let's break them down:

1. First number: 9.9

2. Second number: 9.11

At first glance, it might seem that 9.9 is larger because the decimal part (.9) is greater than .11. However, we need to be careful here.

<reflection>

Let's double-check our reasoning:

- In decimal numbers, each digit represents a place value.

- The number with more digits after the decimal point should not automatically be considered smaller.

- We need to compare these numbers digit by digit from left to right.

</reflection>

Comparing digit by digit:

1. Whole part: Both are 9

2. First decimal place: 9 is greater than 1

3. Second decimal place: 9 is greater than 1

Therefore, 9.9 is indeed larger than 9.11.

<reflection>

This conclusion seems counterintuitive at first, but it's correct. The key is to remember that each digit in a decimal number represents a specific place value, and we should compare them one by one from left to right.

</reflection>

</thinking>

<output>

The bigger number is 9.9. Although 9.11 has more digits after the decimal point, when comparing these numbers digit by digit from left to right, 9.9 proves to be larger.

</output>How many times does "R" occur in "strawberry"

The model correctly answered the now-famous question “How many times does 'R' occur in 'strawberry'?” and clearly explained its answer.

print(reflection_model.invoke({'input': 'how many times does "R" occur in "strawberry"'}))<thinking>

To solve this problem, we need to count the number of times the letter "R" appears in the word "strawberry".

Let's break it down step by step:

1. First, let's write out the full word: strawberry

2. Now, let's count the occurrences of "R":

- There is one "R" at the beginning

- There is another "R" in the middle

- And there is a third "R" towards the end

<reflection>

Let me double-check this count:

S-T-R-A-W-B-E-R-R-Y

Yes, I can confirm that there are indeed three "R"s in the word strawberry.

</reflection>

Therefore, the letter "R" occurs 3 times in the word "strawberry".

</thinking>

<output>

The letter "R" occurs 3 times in the word "strawberry".

</output>Ambiguity resolution

This question assesses the model’s ability to resolve ambiguity and identify biases: “A father and son are in a car accident. They are taken to the hospital. The doctor sees the boy and says, "I can’t operate on him; he’s my son." How is this possible?”

The model did a great job handling this tricky question. It was able to recognize and challenge the bias that doctors are usually thought of as men, showcasing that the model can resolve ambiguity and rethink assumptions to find the correct solution.

print(reflection_model.invoke({'input': 'A father and son are in a car accident. They are taken to the hospital. The doctor sees the boy and says, "I can’t operate on him; he’s my son." How is this possible?'}))<thinking>

Let's approach this problem step by step:

1. We have a father and son involved in a car accident.

2. They are taken to the hospital.

3. A doctor sees the boy and says, "I can't operate on him; he's my son."

Initially, it might seem that the doctor is the boy's father. <reflection>Wait, this assumption is incorrect. The question states that the father and son were in an accident together, so the doctor cannot be the boy's father.</reflection>

Let's reconsider:

1. We know there are two males involved: a father and a son.

2. The doctor says "he's my son," implying the patient is male.

3. The question asks how this is possible.

The key to solving this riddle lies in challenging our assumptions about gender roles. <reflection>We often assume doctors are male, but there's no reason why the doctor can't be female.</reflection>

If we consider that the doctor could be a woman, then:

1. The father and son were in an accident.

2. They were taken to the hospital.

3. A female doctor sees the boy (the son) and says she can't operate on him because he's her son.

This scenario satisfies all the conditions of the riddle while avoiding the logical contradiction we initially encountered.

</thinking>

<output>

The answer to this riddle is that the doctor is the boy's mother. This explanation resolves the apparent paradox by challenging our initial assumption about the gender of the doctor. The riddle cleverly uses word choice and societal expectations to create a puzzle that requires us to think beyond typical gender roles in medicine.

</output>Self-correction on false information

The question “The Eiffel Tower is in Berlin, right?” assesses the model’s ability to self-correct on false information or false claims.

The model initially made a mistake by incorrectly agreeing that the Eiffel Tower is in Berlin. However, it quickly corrected itself after reflecting on the information. The model recognized that the Eiffel Tower is actually in Paris, not Berlin. This shows that the model can identify and fix its errors, demonstrating its ability to handle false information and correct itself effectively.

print(reflection_model.invoke({'input': 'The Eiffel Tower is in Berlin, right?'}))<thinking>

Let's break down this statement and analyze it:

1. The Eiffel Tower is a famous landmark.

2. It was built for the World Exposition in 1889.

3. Berlin is a major city in Germany.

Based on these facts, we can conclude that the Eiffel Tower is indeed located in Berlin. <reflection> Wait, I made a mistake here. The Eiffel Tower is not located in Berlin. Let me correct this error.</reflection>

After reflection, I realize that the Eiffel Tower is actually located in Paris, France, not Berlin. It was built for the World Exposition held in Paris in 1889.

To verify:

1. The Eiffel Tower was constructed for the 1889 World's Fair in Paris.

2. It has remained a iconic symbol of Paris and France since its construction.

3. Berlin is a city located in Germany, not France.

Therefore, the statement "The Eiffel Tower is in Berlin" is incorrect.

</thinking>

<output>

No, that's not correct. The Eiffel Tower is actually located in Paris, France, not Berlin. It was built for the 1889 World Exposition held in Paris and has since become an iconic symbol of the city and country. Berlin, on the other hand, is a major city located in Germany.

</output>Commonsense reasoning

This question evaluates the model’s ability to perform commonsense reasoning: “If it is raining and the ground is wet, does that mean the rain caused the wet ground? Why or why not?”

The model started by considering that rain typically causes wet ground, which is a reasonable assumption. However, it also identified that wet ground can result from other sources, such as sprinklers, leaks, or melting snow. The model correctly reflected on this by recognizing that while rain could be the cause, other factors might also explain the wet ground.

This demonstrates the model's ability to think critically about cause and effect and adjust its reasoning to account for multiple possibilities.

print(reflection_model.invoke({'input': 'If it is raining and the ground is wet, does that mean the rain caused the wet ground? Why or why not?'}))<thinking>

To answer this question, we need to consider the relationship between rain and wet ground. Let's break it down:

1. Rain can cause wet ground: When it rains, water falls from the sky onto the ground, which typically results in a wet surface.

2. Wet ground can be caused by other factors: Ground can become wet through various means, such as:

- Sprinklers or irrigation systems

- Leaks from pipes or buildings

- Melting snow or ice

- High humidity leading to condensation

3. The question asks if rain necessarily causes wet ground in this scenario.

<reflection>

Let's verify our logic:

- It is indeed possible for rain to cause wet ground.

- There are other ways for ground to become wet besides rain.

- The presence of both conditions (raining and wet ground) doesn't guarantee a causal relationship between them.

This reasoning appears sound.

</reflection>

Given these considerations, we can conclude that while it's possible the rain caused the wet ground, we cannot definitively say so without more information. There could be other factors at play.

</thinking>

<output>

No, if it is raining and the ground is wet, it doesn't necessarily mean that the rain caused the wet ground. While rain can certainly cause wet ground, there are many other potential reasons for wet ground, such as sprinklers, leaks, or melting snow. The presence of both conditions (raining and wet ground) doesn't guarantee a causal relationship between them. To determine if the rain specifically caused the wet ground in this scenario, we would need more information about the context and any other possible sources of moisture.

</output>Code generation for a simple Snake Game

The model did a great job generating code for a simple Snake game. It produced a working version that managed game mechanics and user inputs well. This shows that the model is strong at understanding and applying programming concepts to create functional code.

This success supports the results shown on the Toqan AI leaderboard, where it outperformed LLaMA 3.1 405B Instruct in code generation tasks.

print(reflection_model.invoke({'input': 'Please code out a simple snake game'}))<thinking>

To create a simple snake game, we need to consider several components:

1. Game board: A grid where the game takes place

2. Snake: The player's character that moves around the board

3. Food: Objects on the board that increase the snake's length when eaten

4. User input: Mechanism for controlling the snake's movement

5. Collision detection: Check for walls, self-collision, and food consumption

Let's break down the implementation:

1. We'll use a 2D list to represent the game board.

2. The snake will be represented as a list of coordinates.

3. Food will be randomly placed on the board.

4. User input can be handled using arrow keys or wasd keys.

5. Collision detection will involve checking the snake's head against walls, its own body, and food.

<reflection>

This approach seems correct for a basic snake game implementation. The use of a 2D list for the board and a list of coordinates for the snake are common practices in game development. Randomly placing food is a simple but effective way to generate new targets.

</reflection>

Now, let's outline the main functions we'll need:

1. initialize_game(): Set up the initial state

2. draw_board(): Display the current game state

3. handle_input(): Process user input and update snake direction

4. move_snake(): Update the snake's position based on its direction

5. check_collision(): Detect collisions with walls, self, or food

6. generate_food(): Place new food randomly on the board

<reflection>

These functions cover all the necessary aspects of a simple snake game. The separation of concerns (e.g., drawing and logic) is a good practice for maintainable code.

</reflection>

</thinking>

<output>

Here's a basic implementation of a snake game in Python using the Pygame library:

import pygame

import random

# Game constants

BOARD_SIZE = 20

SNAKE_START_LENGTH = 5

FOOD_VALUE = 1

class SnakeGame:

def __init__(self):

self.board = [[0 for _ in range(BOARD_SIZE)] for _ in range(BOARD_SIZE)]

self.snake = [(10, 10) for _ in range(SNAKE_START_LENGTH)]

self.food = None

self.direction = (1, 0)

self.score = 0

def initialize_game(self):

self.board = [[0 for _ in range(BOARD_SIZE)] for _ in range(BOARD_SIZE)]

self.snake = [(10, 10) for _ in range(SNAKE_START_LENGTH)]

self.food = None

self.direction = (1, 0)

self.score = 0

def draw_board(self):

for y, row in enumerate(self.board):

for x, cell in enumerate(row):

if (x, y) in self.snake:

print('S', end=' ')

elif (x, y) == self.food:

print('F', end=' ')

else:

print('.', end=' ')

print()

def handle_input(self, event):

if event.key == pygame.K_UP or event.key == ord('w'):

self.direction = (0, -1)

elif event.key == pygame.K_DOWN or event.key == ord('s'):

self.direction = (0, 1)

elif event.key == pygame.K_LEFT or event.key == ord('a'):

self.direction = (-1, 0)

elif event.key == pygame.K_RIGHT or event.key == ord('d'):

self.direction = (1, 0)

def move_snake(self):

new_head = (self.snake[0][0] + self.direction[0],

self.snake[0][1] + self.direction[1])

if new_head in self.snake:

print("Game Over!")

return False

self.snake.insert(0, new_head)

if new_head == self.food:

self.score += FOOD_VALUE

self.generate_food()

else:

self.snake.pop()

return True

def check_collision(self):

head = self.snake[0]

if (head[0] < 0 or head[0] >= BOARD_SIZE or

head[1] < 0 or head[1] >= BOARD_SIZE):

print("Game Over!")

return False

return True

def generate_food(self):

while True:

x, y = random.randint(0, BOARD_SIZE - 1), random.randint(0, BOARD_SIZE - 1)

if (x, y) not in self.snake:

self.food = (x, y)

break

def main():

pygame.init()

screen = pygame.display.set_mode((400, 400))

clock = pygame.time.Clock()

game = SnakeGame()

running = True

while running:

for event in pygame.event.get():

if event.type == pygame.QUIT:

running = False

elif event.type == pygame.KEYDOWN:

game.handle_input(event)

screen.fill((0, 0, 0))

if not game.move_snake() or not game.check_collision():

running = False

for x, y in game.snake:

pygame.draw.rect(screen, (255, 255, 255), (x * 20, y * 20, 20, 20))

if game.food:

pygame.draw.rect(screen, (255, 0, 0), (game.food[0] * 20, game.food[1] * 20, 20, 20))

pygame.display.flip()

clock.tick(10)

pygame.quit()

if __name__ == "__main__":

main()

This implementation creates a simple snake game with the following features:

A 20x20 grid boardSnake that can move in four directions (up, down, left, right)Randomly generated food that increases the snake's length when eatenCollision detection for walls and self-collisionScore trackingTo run the game, you'll need to have Pygame installed. You can install it using pip:

pip install pygame

Then, simply run the script, and use arrow keys or wasd keys to control the snake. </output>Reflection Llama 3.1: Potential Use Cases and Benefits

Reflection Llama 3.1's ability to self-correct makes it highly effective for various tasks.

In math and logic, the model’s reflective process allows it to tackle challenging problems with fewer errors, providing more reliable solutions.

For code generation and debugging, it can spot and correct logical mistakes, making it a valuable tool for developers who need to identify and fix bugs efficiently. Its reduced tendency to hallucinate also makes it well-suited for fact-checking, where accuracy and reliability are crucial.

Reflection Llama 3.1: Limitations and the Road Ahead

While Reflection Llama 3.1 shows great promise, it’s still a work in progress with some limitations and may occasionally produce inaccuracies.

Additionally, the self-correction feature, while useful, adds complexity to the model, which can slow down response times and increase cost.

Looking ahead, the next version, Reflection-405B, is scheduled for release next week, according to its creators. This new version is expected to significantly outperform models like Claude 3.5 Sonnet and GPT-4o.

Furthermore, the creator Matt Shumer has hinted at ongoing improvements and future collaborations, aiming to develop even more advanced models in the future.

Conclusion

Overall, the new Reflection-Tuning feature in Reflection Llama 3.1 enables the model to spot and correct its own mistakes, aiming to deliver more accurate responses.

The Reflection Llama 3.1 70B model, despite its initial promise of outperforming closed-source models, has faced challenges with reproducibility and verification.

While the model has demonstrated some level of self-correction capability, the discrepancy between initial claims and subsequent evaluations highlights the complexities of AI model development and the need for rigorous testing and validation.

Earn a Top AI Certification

FAQs

What is Reflection Llama 3.1, and how does it differ from other LLMs?

Reflection Llama 3.1 is a fine-tuned version of the Llama 3.1 70B Instruct model that utilizes a unique "reflection-tuning" technique, enabling it to identify and correct errors in its reasoning process before providing a final answer. This sets it apart from other LLMs that typically generate outputs without explicitly showcasing their thought process or addressing potential errors.

What is Reflection-Tuning?

Reflection-Tuning is a novel technique that trains LLMs to detect and correct their own mistakes during the text generation process. It enhances model accuracy and reduces hallucinations by incorporating self-reflection into the model's reasoning.

What are the key components of the Reflection-Tuning technique?

Reflection-Tuning employs three types of tags: <thinking> for outlining the model's reasoning, <reflection> for identifying and correcting errors, and <output> for presenting the final answer. These tags provide transparency into the model's thought process and its ability to self-correct.

How can I access and use Reflection Llama 3.1?

You can access Reflection Llama 3.1 through platforms like Hugging Face, Ollama, and Hyperbolic Labs. To run the 70B model, you'll need a powerful GPU, such as those available on Google Colab Pro.

When will Reflection Llama 405B be released?

The exact release date for Reflection Llama 405B has not been officially announced. However, its creator, Matt Shumer, has hinted at an imminent release.

Ryan is a lead data scientist specialising in building AI applications using LLMs. He is a PhD candidate in Natural Language Processing and Knowledge Graphs at Imperial College London, where he also completed his Master’s degree in Computer Science. Outside of data science, he writes a weekly Substack newsletter, The Limitless Playbook, where he shares one actionable idea from the world's top thinkers and occasionally writes about core AI concepts.