Runway ML specializes in generative AI models for video and images. They recently released Runway Aleph, which promises to empower users to transform their visual projects with remarkable ease and precision.

I used Aleph to create the video below for my friend's 50th birthday. In this article, I walk through the process step by step while exploring whether Runway Aleph delivers on its promise.

What Is Runway Aleph?

Runway Aleph is a versatile AI video model that takes video creation a step further: it accepts a video as input and edits it according to a text prompt.

Key features include:

- Add, remove, and alter objects within a scene with fine-grained control.

- Generate different perspectives or angles of a video.

- Adjust the overall style and lighting to match any desired aesthetic.

- Extend a video by generating the next scene.

You can see many examples in Runway’s official announcement.

How To Access Runway Aleph?

Runway Aleph is available starting with Runway's Standard plan at $15 a month. Even though it is a monthly subscription, it doesn't grant unlimited use.

The Standard plan comes with 625 credits, and each second of Aleph video costs 15 credits, so roughly 41 seconds of video (625 / 15 ≈ 41.7) can be edited each month.
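The credit arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function name `aleph_budget` is hypothetical, and it assumes that credits are spent in whole seconds and that each edit covers a 5-second clip. Runway's actual billing rules may differ.

```python
def aleph_budget(credits, credits_per_second=15, clip_seconds=5):
    """Return (whole seconds, whole clips) that a credit balance can cover.

    Assumptions (not confirmed by Runway): billing is per whole second,
    and every edit is a clip of `clip_seconds` seconds.
    """
    if credits < 0 or credits_per_second <= 0 or clip_seconds <= 0:
        raise ValueError("credits must be >= 0 and rates must be positive")
    seconds = credits // credits_per_second
    clips = credits // (credits_per_second * clip_seconds)
    return seconds, clips


# Standard plan figures quoted in the article: 625 credits, 15 credits per second.
seconds, clips = aleph_budget(625)
print(f"{seconds} seconds of video, or {clips} five-second clips")
```

Under these assumptions, 625 credits cover 41 whole seconds, or eight 5-second edits, which leaves very little room for retries.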

There is also an Unlimited plan that includes 2250 credits and provides unlimited usage in explore mode. In explore mode, generations are queued and only run when they reach the front of the queue, so videos can take longer to generate.

I generated almost all the videos here in explore mode, and I found that wait times varied a lot depending on the time of day. Most of the time, there was no wait at all.

How to Use Runway Aleph?

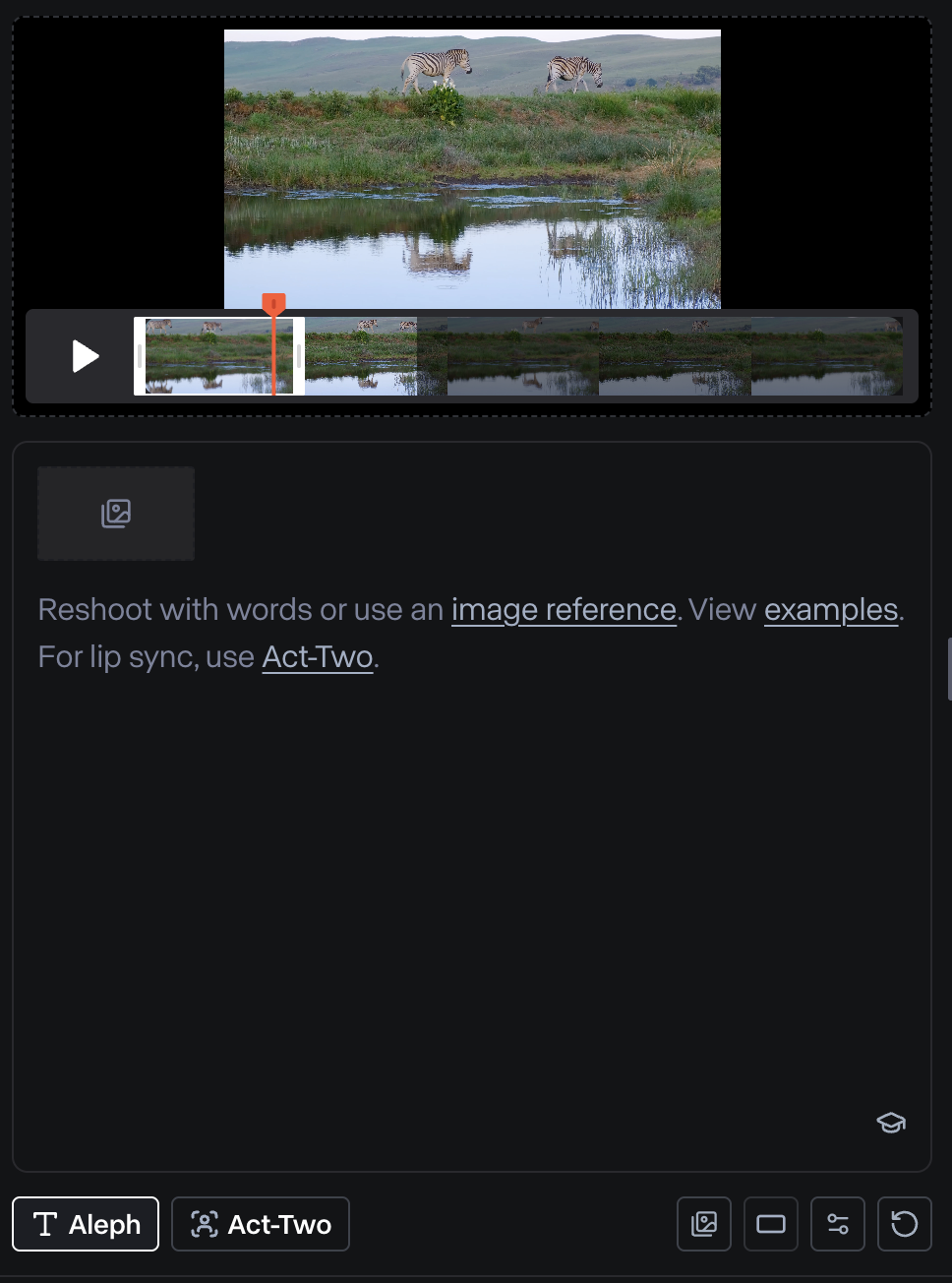

To use Runway Aleph, we need to have a video as input. Once a video is selected, we can provide a text prompt to edit or transform the video.

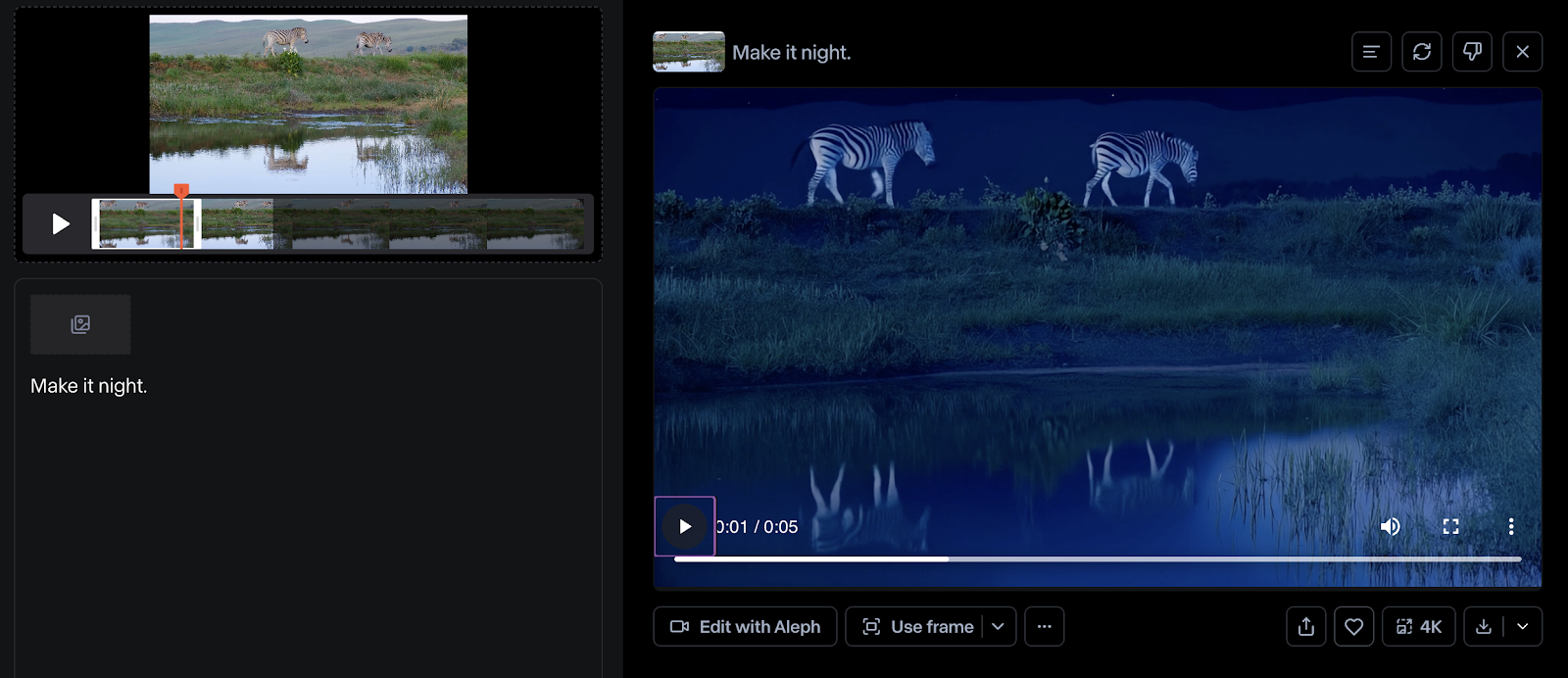

We can, for example, ask Aleph to make it night:

Note that if the video is longer than 5 seconds, a 5-second section of the video will be used instead. This section can be selected by dragging the window under the video input.

Planning The Video

When I got access to Runway Aleph, I had just been asked to record a video wishing my friend a happy birthday. So, I thought I'd see if Aleph could be used to make a video from start to finish. I felt that the process taught me a lot about its capabilities and especially about its limitations.

This experiment will not only use Aleph's video editing capabilities but also Runway's Gen-4 video generation capabilities.

For the sounds, I used free sound effects from Pixabay, while the voices were generated using text-to-speech models from OpenAI.

My idea was to do something with references to John Wick, one of my friend's favorite movies. Since I didn't have any footage, I had to create everything based on photos of us and freely available videos on the internet.

I wanted to keep it simple, so I went for a trailer-like video with a narrator. Not having characters speaking is much simpler when dealing with AI-generated videos.

My script was something along the lines of:

- Set the scene in Taipei (where we live)

- Introduce the two characters

- The two characters meet at "The Continental" hotel (a movie reference)

- I gift him a box containing a marker (another movie reference)

Creating the characters

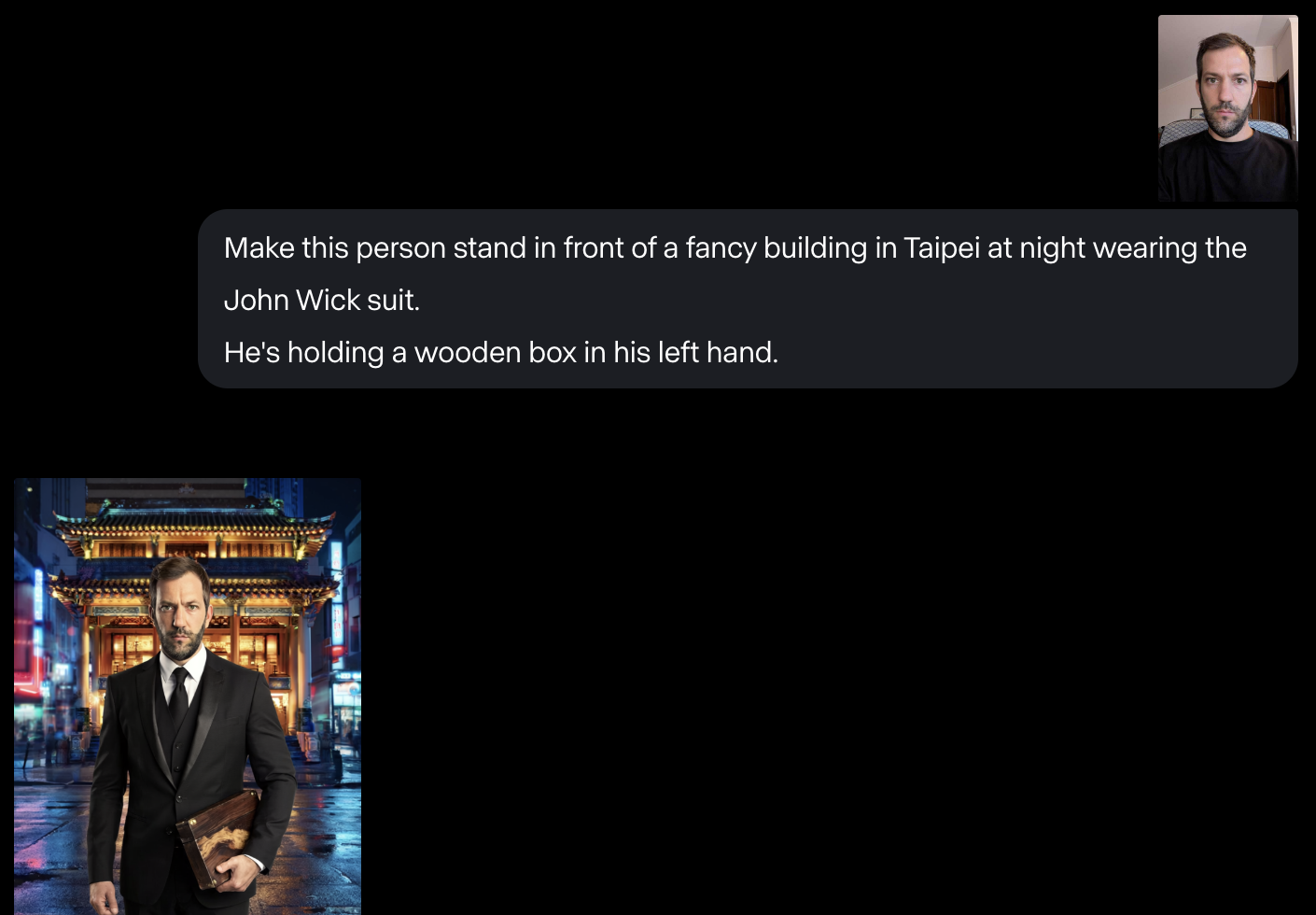

To generate my character, I took a selfie and used Runway chat mode to generate a version of me wearing a suit and holding a wooden box.

For my friend, I couldn't get Runway to generate what I wanted, so I ended up doing it semi-manually using Photoshop Firefly.

While generating these, Runway refused several times to generate the images. I think AI image generators in general make it hard to generate specific people for safety reasons, so keep that in mind if you want specific people as characters.

Scene 1: Taipei 101 with the moon

The opening scene with the moon timelapse isn't AI-generated. It's a free-to-use video from Timo Volz that I found on Pexels.com.

I tried modifying it using Runway Aleph, but it failed. I thought making it a drone shot with the drone flying above the building could be a cool edit, but Aleph gave me this instead:

"Make this video into a drone shot overflying the building."

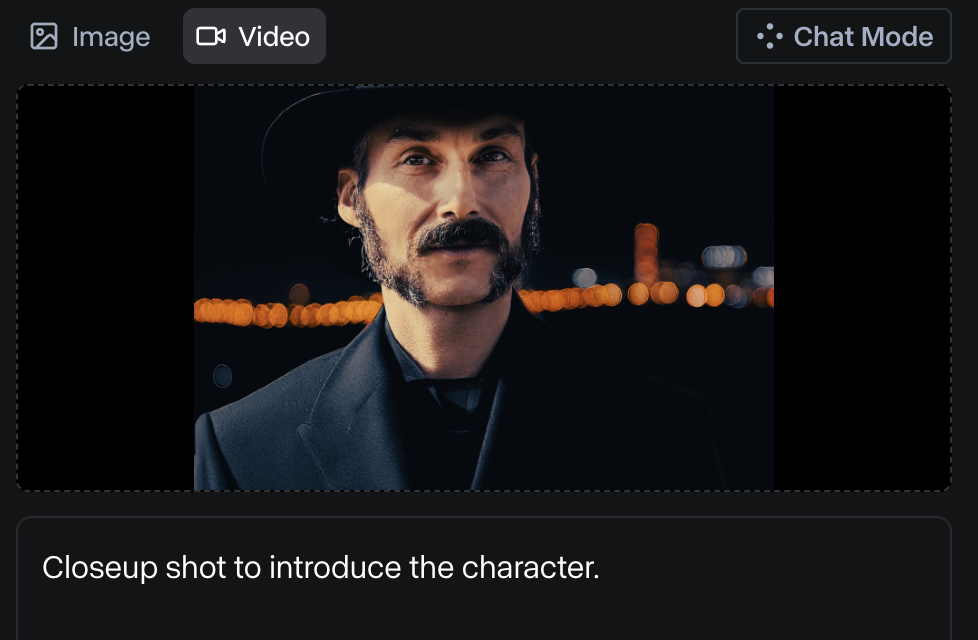

Scene 2: Close-up of my friend

For the second scene, I provided the photo I had generated using Photoshop and asked for a close-up shot to introduce the character.

I liked the result, but I wanted to introduce some mystery before revealing the character. So I asked it to generate another shot with the prompt: "Close-up shot to introduce the character. Make it start facing back and then slowly turn to the camera."

Here are the results:

In the second example, Runway Aleph didn't comply with the prompt. However, that shot is the one I ended up using. I cut the first half in video editing software so that the character's face starts hidden by the hat and only then gets revealed.

Scene 3: Walking down a dark alley

This scene was generated in two steps:

1. Starting from the photo of the character, I generated an image of the character facing away in a dark alley.

2. I turned that image into a video with the text prompt: "The man with the hat walks down the alley, away from the camera until he disappears in the distance. Add mist to make the scene more mysterious."

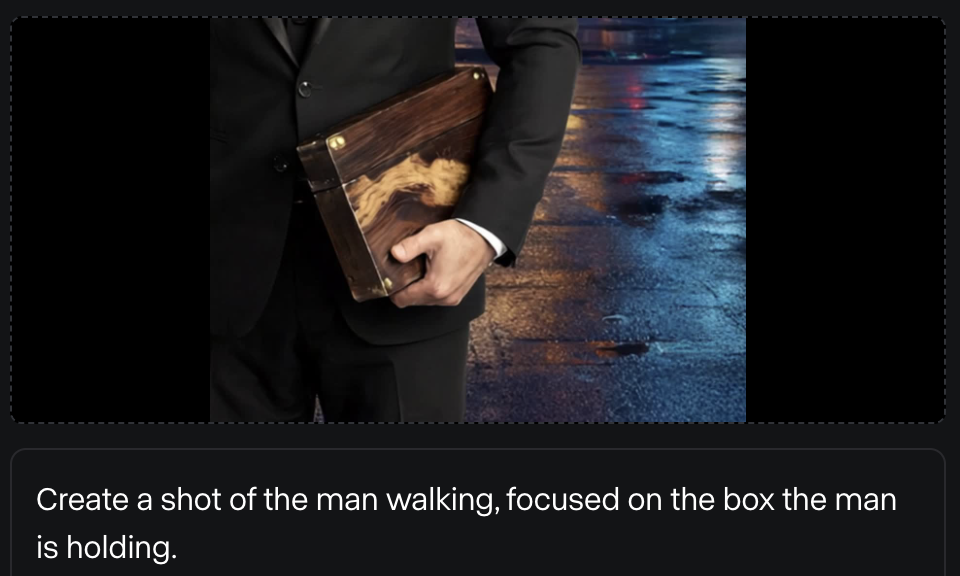

Scene 4: Me walking with the box

For this scene, I used the image of myself I created above. I had to insist quite a bit to get Aleph to generate a video of me walking. The prompt was simple: "The person walks to the building in the background."

None of the four videos did what I asked. I ended up using the fourth one because it was the only usable one.

I also wanted to add some mist to the video to make it look more mysterious, so I used Aleph's video editing capabilities for that. This, on the other hand, worked quite well.

For the second part, the close-up shot of the box, I first tried to have Aleph edit the video using the prompt: "Make a close-up shot focusing on the hand holding the box." However, that didn't work at all.

Instead, I took a screenshot of the frame I wanted to animate and used it, along with a prompt, to get the shot I wanted.

Finally, for the shot of me walking away, Runway originally generated an alley with neon lights that looked similar to the one from a previous scene. To make it look different, I asked Aleph to edit the video and remove the neon lights, which worked very well.

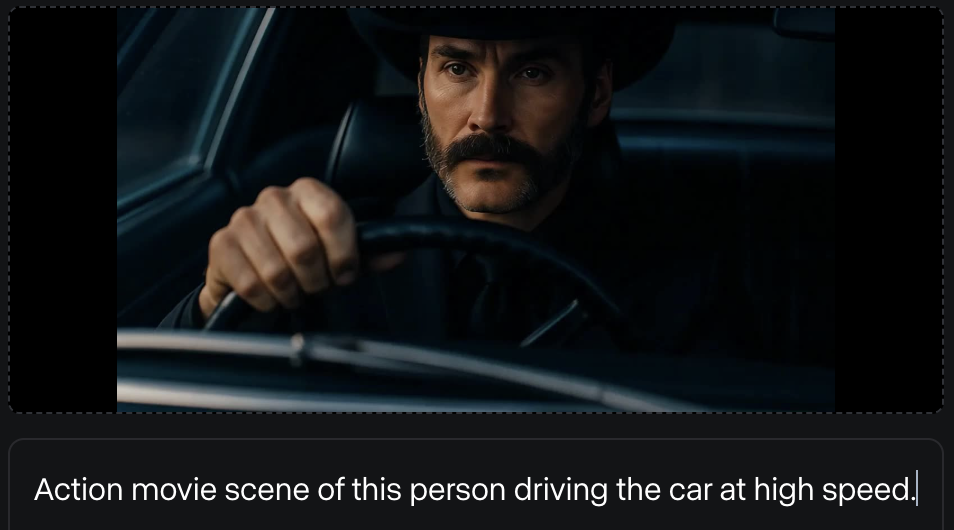

Scene 5: Driving the car

This scene was generated in the same way as the third scene, by generating a still image and then transforming it into a video.

However, the video didn't quite turn out as I wanted:

When using Runway Aleph, I often find that only a couple of seconds of a video are usable before it gets weird. I still managed to make something out of it by:

- Reversing the video.

- Keeping only the part of the video that focuses on the driver.
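The article doesn't say which editing software was used for these two steps. As one possible way to reproduce them, here is a minimal sketch that builds an ffmpeg command to keep a time range of a clip and play it backwards. The file names and timestamps are hypothetical, and the sketch assumes "keeping only the part" means a cut in time rather than a spatial crop. It relies on ffmpeg's `trim`, `setpts`, and `reverse` video filters and only builds the command, so ffmpeg is not needed to try it.

```python
import shlex


def build_reverse_trim_command(src, dst, start_s, end_s):
    """Build an ffmpeg command that keeps [start_s, end_s] of src and reverses it.

    The paths and timestamps passed in are placeholders chosen by the caller.
    """
    if not 0 <= start_s < end_s:
        raise ValueError("expected 0 <= start_s < end_s")
    # trim keeps the chosen range, setpts resets timestamps to start at zero,
    # and reverse plays the remaining frames backwards.
    filters = f"trim=start={start_s}:end={end_s},setpts=PTS-STARTPTS,reverse"
    # -an drops any audio track, since sound is added separately afterwards.
    return ["ffmpeg", "-i", src, "-vf", filters, "-an", dst]


# Hypothetical example: keep seconds 1.0 to 3.0 of the driving clip.
cmd = build_reverse_trim_command("driving.mp4", "driving_reversed.mp4", 1.0, 3.0)
print(shlex.join(cmd))
```

To actually run the command, pass the list to `subprocess.run(cmd, check=True)` on a machine with ffmpeg installed. The `reverse` filter buffers the whole clip in memory, which is fine for clips of a few seconds like these.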

Scene 6: Traffic timelapse

This scene is a free-to-use video from Timo Volz taken from Pexels. I tried modifying the style but with little success.

Here's the prompt I used:

"Change the style of this video to a neo-noir action aesthetic inspired by John Wick—cinematic, high-contrast lighting, rain-soaked neon city streets in deep blues, purples, and reds; elegant yet dangerous atmosphere; sleek modern interiors; refined but gritty mood."

However, the result was unusable:

I did other styling experiments on other videos, and none of them worked out.

Ending Scenes

The final three scenes were also generated by turning images into videos.

- This image is of a real building in Taipei, and I added the neon sign using Photoshop.

- The image of me walking down the hallway with the box was generated using Runway by asking it to place me in a fancy hotel with a carpet with a "C". The image didn't turn out great, as the box looks more like a suitcase, but I didn't manage to fix it.

- The final image was taken from a John Wick gift box, and the note was added using Photoshop.

Ending text

The ending text was fully generated using Runway. I used chat mode to ask it to generate the text "Chapter 50" as the title of a movie in the style of John Wick. It generated this image:

Then, in chat mode, it asked if I wanted the text to be animated, and I said yes. Here's the result:

I wanted the letters to also be animated, so I used Aleph to edit the video. The result isn't what I asked for, but I still liked it and managed to use it.

Here's what I asked for: "The camera flies through the zero in the '50' text, fading into a black screen."

Here's the final result:

Further Experiments With Runway Aleph

Next scene generation

I tried to extend portions of the video by using Aleph to generate the next scene for some of my scenes. At first, I didn't specify what I wanted the next scene to be; I just asked it to generate the next scene.

In terms of action, the generated scene is consistent with the previous scene, but there is a significant loss in the consistency of the character. It doesn't look like the same person.

To test this further, I tried to specify that I wanted the next scene to have the character hop on a motorcycle. Then I used the result and asked for yet another scene of the character riding away on the motorcycle.

Here's the result:

Aleph struggled with the fact that the character was carrying a box. We can also see some strange artifacts in the video.

Altering a video

To make the video, I successfully used Aleph's video editing capabilities to remove street neon signs and add mist to a scene. However, when I tried to change the aesthetic of one of the videos, it failed.

Out of curiosity, I tested making it rain in one of the scenes to see how it would cope with that, and it worked quite well on the first attempt.

In the following example, I asked it to add fire to the back wheel of a motorcycle. It did successfully add fire, but not where it was supposed to:

Here's a final example where I asked it to add a giant wave in an existing video:

Conclusion

Creating videos with Runway Aleph is certainly within reach, but the process isn't as simple as typing a text prompt and getting a seamless video back. It takes creativity and patience: the technology is innovative, but it still needs guidance and adjustments to bring a vision to life.

More often than not, I found myself adapting my initial ideas to the results produced by Runway Aleph. Rather than directing each scene purely through prompts, it became a collaborative effort, where the tool's unique interpretations often influenced the direction I ultimately took.

The examples showcased in Runway Aleph's announcement likely represent its best outcomes. The model can certainly produce impressive results, but getting there often takes multiple attempts and a willingness to refine and iterate on the output.

Overall, Runway Aleph is an exciting and enjoyable tool for hobbyists, making possible videos that might otherwise remain just a concept. I enjoyed using it to create the birthday video. Still, the experience showed that there is a way to go before the tool can reliably turn every idea into a polished video.