
OpenAI has introduced its next generation of audio models focused on enhancing speech-to-text and text-to-speech capabilities. These latest models promise improved accuracy, especially in complex situations like accents or noisy settings, and offer more customizable voice interactions.

In this tutorial, I will explain step-by-step how to use these new OpenAI audio models to build a voice-to-voice AI assistant. Our goal is to develop an AI assistant that can understand spoken input and reply with a naturally synthesized voice tailored to specific needs.


The OpenAI Audio API

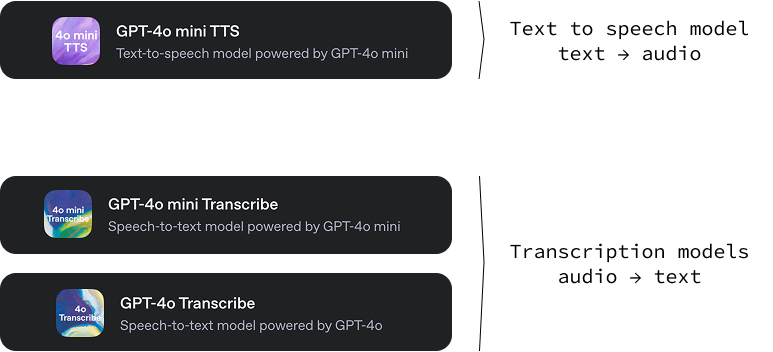

This new release from OpenAI includes three models:

- gpt-4o-mini-tts: A text-to-speech model that generates audio from text in a range of voices and tones. A neat feature of this model is that we can steer how the voice sounds with plain-text instructions. This allows a high level of customization and makes it possible to create unique, tailored voice experiences. You can try it out on OpenAI.fm.

- gpt-4o-transcribe and gpt-4o-mini-transcribe: Two speech-to-text models designed to convert spoken language into written text. Their main function is to provide accurate and reliable transcriptions of audio. According to OpenAI, these models achieve a lower word error rate (WER) than previous models, which means they make fewer mistakes when recognizing spoken words.

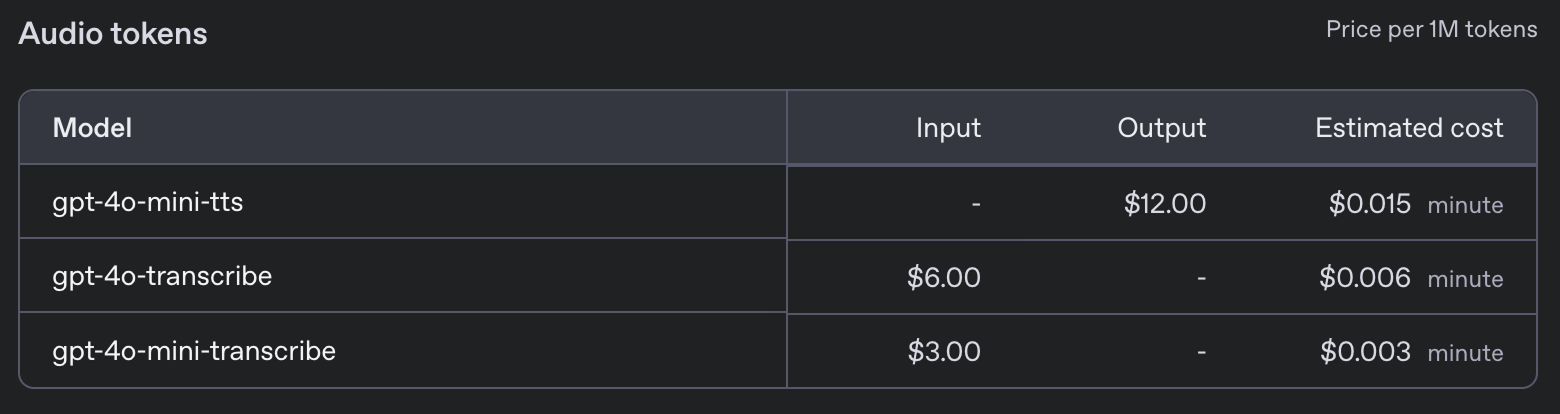

These new models come with the following pricing:

Voice Assistant Project

In this tutorial, I'll guide you through building an AI voice assistant that runs right in your terminal. It works much like a popular text-based AI chatbot, except that it interacts entirely through spoken language. Imagine speaking directly to your computer, asking any question you have, and hearing a spoken response within seconds.

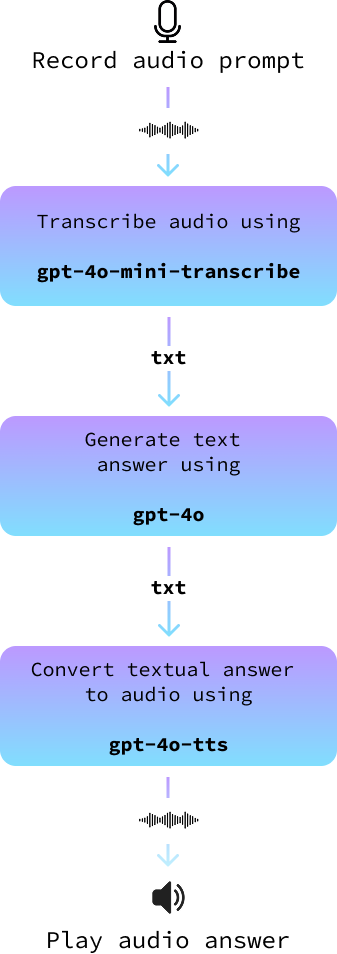

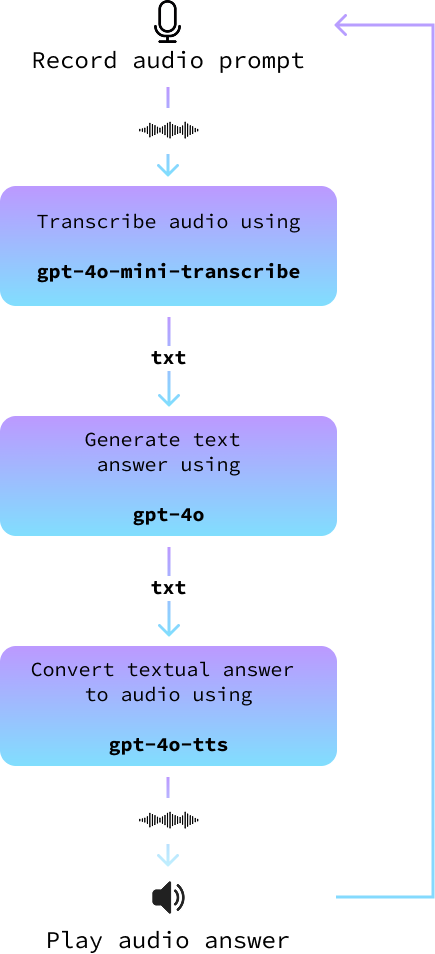

Our project uses a simple architecture. We begin by capturing your spoken prompt with the microphone. Once it is recorded, we convert the audio into text with one of the new speech-to-text models.

This text is then fed into a large language model to generate a response. Finally, we convert the text response back into audio, allowing the assistant to “speak” the answer back to you. Keeping these steps separate lets us tune each one for accuracy and responsiveness.
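The four stages can be sketched as a loop over pluggable async functions. This is a minimal sketch and not the project's final code. The names run_assistant and max_turns, and the four stage callables, are hypothetical placeholders. The rest of this tutorial builds real versions of each stage.

```python
import asyncio
from typing import Awaitable, Callable, List, Tuple

# Hypothetical stage signatures; each stage is an async function.
Record = Callable[[], Awaitable[str]]         # returns the path of the recorded audio
Transcribe = Callable[[str], Awaitable[str]]  # audio path -> transcript
Answer = Callable[[str], Awaitable[str]]      # transcript -> answer text
Speak = Callable[[str], Awaitable[None]]      # answer text -> plays the audio


async def run_assistant(record: Record, transcribe: Transcribe, answer: Answer,
                        speak: Speak, max_turns: int) -> List[Tuple[str, str]]:
    """Run record -> transcribe -> answer -> speak for max_turns turns."""
    history = []
    for _ in range(max_turns):
        audio_path = await record()            # 1. Capture the spoken prompt
        prompt = await transcribe(audio_path)  # 2. Speech to text
        reply = await answer(prompt)           # 3. Text to text with an LLM
        await speak(reply)                     # 4. Text to speech
        history.append((prompt, reply))
    return history


if __name__ == "__main__":
    # Fake stages so the control flow can run without an API key.
    async def fake_record():
        return "prompt.wav"

    async def fake_transcribe(path):
        return f"What is in {path}?"

    async def fake_answer(prompt):
        return f"You asked: {prompt}"

    async def fake_speak(text):
        print("[speaking]", text)

    asyncio.run(run_assistant(fake_record, fake_transcribe, fake_answer, fake_speak, max_turns=1))
```

Passing the stages in as arguments is what makes the modular design useful. For example, switching from gpt-4o-mini-transcribe to gpt-4o-transcribe, or from one chat model to another, only changes the function you pass in.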

Although OpenAI offers a dedicated Realtime API that handles the entire speech-to-speech loop in a single API, we will take a different approach. The Realtime API is impressive and well suited to developers who want a quick integration, but it is often more expensive and offers less flexibility.

By building our project from separate components for each step, we gain more control over how the assistant behaves. We can choose the model for each step and optimize for what matters to us, whether that is accuracy, speed, or the tone of the responses. The result is a voice assistant tailored to the specific requirements of a project.

All the code we develop here is available in this GitHub repository.

Python Setup

To get started, we'll first set up a new Anaconda environment named audio-demo. Conda environments let us create an isolated space for each project, where we can install specific package versions without conflicts. Execute the following commands in your command-line interface:

```bash
conda create -n audio-demo -y python=3.9
conda activate audio-demo
pip install openai
pip install numpy
pip install python-dotenv
pip install sounddevice
pip install scipy
```

Let's break down what each command and package does:

- Creating the environment:
  - conda create -n audio-demo -y python=3.9: This command creates a new environment called audio-demo with Python 3.9. The -y flag automatically confirms the package installation without prompting.
- Activating the environment:
  - conda activate audio-demo: Activates the newly created audio-demo environment so we can work within it.
- Installing packages:
  - pip install openai: The official OpenAI Python library, which provides easy access to OpenAI's models and APIs.
  - pip install numpy: NumPy is a library essential for numerical computing.
  - pip install python-dotenv: Loads environment variables from a .env file, which makes configuration management easier and safer.
  - pip install sounddevice: Records and plays sound with simple functions, which makes it ideal for handling audio input and output in Python.
  - pip install scipy: SciPy builds on NumPy and provides additional functionality for scientific and technical computing, such as signal processing. In our case, we use it to save the recording as a WAV file.

With our audio-demo environment set up, we are ready to start working on our AI assistant that can process audio inputs. This structured setup helps us maintain a clean development space, ensuring all dependencies are in place for our project.

OpenAI API Key Setup

To use the OpenAI API, we need an API key. Go to their API key page and generate an API key by clicking the "Generate new secret key" button. Copy the key, create a file named .env, and paste it there with the following format:

```
OPENAI_API_KEY=<paste_your_api_key_here>
```

Text to Audio Example

Let's walk through the steps to create a Python script that uses OpenAI's text-to-audio capabilities to transform text into speech with a personalized touch. We write our code in a file named text_to_audio.py in the same folder as the .env file.

Import required libraries

First, we need to import the necessary libraries that will make up our script:

```python
import asyncio
from openai import AsyncOpenAI
from openai.helpers import LocalAudioPlayer
from dotenv import load_dotenv
```

Let’s quickly walk through what each of these imports does:

- asyncio: Python's library for writing asynchronous code, which we need to work with the streaming responses of the async OpenAI client.
- AsyncOpenAI: The asynchronous client from the OpenAI library, used to call OpenAI's APIs without blocking.
- LocalAudioPlayer: A helper from the OpenAI library that plays audio on our local machine.
- load_dotenv: Loads environment variables from the .env file, which is where we store sensitive information like our API key.

Load environment variables

Next, we load our API key from the .env file using the load_dotenv function:

```python
load_dotenv()
```

This makes the API key from .env available to the script as an environment variable, so we never have to hardcode it in the source.

Initialize OpenAI

We create an instance of AsyncOpenAI to start interacting with the OpenAI API. By default, the client reads the key from the OPENAI_API_KEY environment variable:

```python
openai = AsyncOpenAI()
```

Write the main function

Now we define our main function, text_to_audio(), which will use OpenAI's text-to-audio feature to process the input and play the resulting audio:

```python
async def text_to_audio(text, tone_and_style_instructions):
    # Request speech synthesis and receive the audio as a stream
    async with openai.audio.speech.with_streaming_response.create(
        model="gpt-4o-mini-tts",
        voice="coral",
        input=text,
        instructions=tone_and_style_instructions,
        response_format="pcm",
    ) as response:
        # Play the audio locally as it arrives
        await LocalAudioPlayer().play(response)
```

Let’s quickly explain what we did above:

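- We set the model and voice parameters to control the speech synthesis: the model is gpt-4o-mini-tts and the voice is "coral".
- The input parameter holds the text to speak, and the instructions parameter carries our tone and style guidance.
- The response_format is set to "pcm", which returns raw audio samples without a file header. This avoids decoding overhead and suits streaming playback.
- LocalAudioPlayer then plays the audio response generated by the API.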
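Because response_format="pcm" returns raw samples with no header, you need to wrap them in a WAV container if you want to save the audio instead of playing it. The sketch below uses Python's standard wave module. It assumes the PCM format that OpenAI documents for this endpoint: 24 kHz, 16-bit signed little-endian, mono. Check the API reference in case that changes. The function name pcm_to_wav is our own.

```python
import wave

# Assumed PCM output format of the speech endpoint: 24 kHz, 16-bit, mono.
SAMPLE_RATE = 24_000
SAMPLE_WIDTH_BYTES = 2  # 16-bit samples
CHANNELS = 1


def pcm_to_wav(pcm_bytes: bytes, path: str, sample_rate: int = SAMPLE_RATE) -> float:
    """Write raw PCM bytes to a WAV file and return its duration in seconds."""
    with wave.open(path, "wb") as wav_file:
        wav_file.setnchannels(CHANNELS)
        wav_file.setsampwidth(SAMPLE_WIDTH_BYTES)
        wav_file.setframerate(sample_rate)
        wav_file.writeframes(pcm_bytes)
    # One frame holds one sample per channel.
    n_frames = len(pcm_bytes) // (SAMPLE_WIDTH_BYTES * CHANNELS)
    return n_frames / sample_rate
```

You could use it, for example, on the bytes collected from a non-streaming speech request made with response_format="pcm".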

Execute the function

We complete the script with the following lines to ensure the text_to_audio() function runs when we execute the script:

```python
if __name__ == "__main__":
    asyncio.run(text_to_audio("Hello world!", "Enthusiastic voice."))
```

This code block checks whether the script is being run directly. If it is, it executes the text_to_audio() function with asyncio.run(), which runs the asynchronous code.

With these steps, our script is ready to convert text input into speech using OpenAI's text-to-audio service. This setup allows us to experiment with different inputs and styles, bringing text to life through sound.

We can run the script using the command:

```bash
python text_to_audio.py
```

The complete code can be found here.

Audio Transcription From a File

In this section, let's explore how to transcribe an audio file into text using OpenAI’s new transcription models. The script handles the audio file asynchronously, so it doesn't block while waiting for the API. We'll implement this script in a file named audio_to_text.py.

The imports and initial setup are the same as before, except that we don't need to import the LocalAudioPlayer here. Here's how we can write a function that transcribes an audio file:

```python
async def transcribe_audio(audio_filename="audio.wav"):
    # Open the file in a worker thread so the event loop isn't blocked
    audio_file = await asyncio.to_thread(open, audio_filename, "rb")
    stream = await openai.audio.transcriptions.create(
        model="gpt-4o-mini-transcribe",
        file=audio_file,
        response_format="text",
        stream=True,
    )
    transcript = ""
    # Print and accumulate each piece of text as it arrives
    async for event in stream:
        if event.type == "transcript.text.delta":
            print(event.delta, end="", flush=True)
            transcript += event.delta
    print()
    audio_file.close()
    return transcript
```

Let’s break down what happens here:

- Opening the audio file:
  - audio_file = await asyncio.to_thread(open, audio_filename, "rb"): This line opens the audio file in binary read mode ("rb"). asyncio.to_thread() runs the file-opening call in a separate thread, so it doesn't block the rest of the program.
- Creating a transcription stream:
  - stream = await openai.audio.transcriptions.create(...): This line calls the transcription API.
  - We set the model parameter to gpt-4o-mini-transcribe, a model designed specifically for transcription.
  - The file parameter holds our opened audio file.
  - response_format="text" tells the API to return the transcription as plain text.
  - stream=True makes the API stream the transcription. Each piece of text is returned as soon as it is ready, instead of waiting for the full transcript.
- Processing the transcription stream:
  - async for event in stream: Loops over the events of the transcription stream as they arrive.
  - if event.type == "transcript.text.delta": Handles only events of type transcript.text.delta, each of which carries a new piece of the transcription.
  - print(event.delta, end="", flush=True): Prints each incremental piece as it becomes available, so the output appears in real time. The next line appends it to transcript.
- Closing the audio file:
  - audio_file.close(): After the transcription completes, it's good practice to close the audio file to free up system resources.
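The event-handling loop can be moved into a small helper, which also makes it testable without calling the API. Like the loop above, this sketch assumes that delta events have type "transcript.text.delta" and a delta attribute. OpenAI's streaming transcription documentation also describes a final "transcript.text.done" event whose text attribute holds the full transcript. The helper assumes that shape and prefers it when present. The name collect_transcript and the fake events are illustrative.

```python
import asyncio
from types import SimpleNamespace
from typing import Any, AsyncIterable


async def collect_transcript(stream: AsyncIterable[Any], echo: bool = False) -> str:
    """Accumulate transcript deltas; prefer the final 'done' text if one arrives."""
    transcript = ""
    async for event in stream:
        if event.type == "transcript.text.delta":
            transcript += event.delta
            if echo:
                print(event.delta, end="", flush=True)
        elif event.type == "transcript.text.done":
            # Assumed final event carrying the complete transcript.
            transcript = event.text
    if echo:
        print()
    return transcript


async def fake_stream():
    """Stand-in for the API stream, yielding objects shaped like its events."""
    for piece in ["Hello", ", ", "world"]:
        yield SimpleNamespace(type="transcript.text.delta", delta=piece)
    yield SimpleNamespace(type="transcript.text.done", text="Hello, world")


if __name__ == "__main__":
    print(asyncio.run(collect_transcript(fake_stream())))  # Hello, world
```

Inside transcribe_audio(), you could then replace the loop with transcript = await collect_transcript(stream, echo=True).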

By calling transcribe_audio(), we can convert an audio file into text and receive the result as a stream, giving immediate feedback. This setup suits applications that need fast transcription or that work with long audio files.

You can try it by placing an audio file in the same folder as the script, replacing audio.wav with the name of your audio file, and running the command:

```bash
python audio_to_text.py
```

The complete code can be found here.

Audio Transcription from Microphone

Because our goal is to create a voice assistant, we need to record the user's audio prompt into an audio file.

We will create a new file named audio_recorder.py with a function called record_audio. This function captures sound from the microphone and saves it as an audio file. We won't go into much detail about how it works because it is not the main focus of this article:

```python
import sounddevice as sd
import numpy as np
import scipy.io.wavfile as wavfile

SAMPLE_RATE = 44100  # Sample rate in Hz

def record_audio(filename="output.wav"):
    print("[INFO: Recording... Press <Enter> to stop]")
    audio_data = []  # List that collects the recorded audio blocks

    def callback(indata, frames, time, status):
        # Called by sounddevice for each block of recorded samples
        audio_data.append(indata.copy())

    with sd.InputStream(samplerate=SAMPLE_RATE, channels=1, callback=callback, dtype="int16"):
        input()  # Wait for the user to press Enter to stop recording

    print("[INFO: Recording complete]")
    print()
    audio_data = np.concatenate(audio_data)  # Join the blocks into a single array
    wavfile.write(filename, SAMPLE_RATE, audio_data)
    return audio_data
```

When we call this function, it starts recording from the user's microphone. It waits until the user presses "Enter", saves the audio to a WAV file with the given filename (output.wav by default), and returns the recorded samples as a NumPy array.
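Before sending a recording to the transcription API, it can help to confirm what was actually saved. This sketch uses only the standard wave module, and describe_wav is a hypothetical helper that is not part of the tutorial's code. A file written by record_audio() should report 1 channel, 2-byte (int16) samples, and a 44,100 Hz frame rate.

```python
import wave


def describe_wav(path: str) -> dict:
    """Return basic format info and the duration of a PCM WAV file."""
    with wave.open(path, "rb") as wav_file:
        frames = wav_file.getnframes()
        rate = wav_file.getframerate()
        return {
            "channels": wav_file.getnchannels(),
            "sample_width_bytes": wav_file.getsampwidth(),
            "frame_rate": rate,
            "duration_s": frames / rate,  # frames divided by frames per second
        }
```

For example, describe_wav("output.wav") after a recording shows how many seconds were captured.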

To test this, we can combine this function with the transcription function above to transcribe a message spoken by the user. Here's how we can create a new file named record_and_transcribe.py to implement this:

```python
import asyncio
from audio_to_text import transcribe_audio
from audio_recorder import record_audio

async def main():
    record_audio("prompt.wav")
    await transcribe_audio("prompt.wav")

if __name__ == "__main__":
    asyncio.run(main())
```

You can try running it with the command python record_and_transcribe.py. The script records what you say until you press "Enter" and then transcribes it.

Building an Audio Assistant

In this section, we put it all together to build an audio assistant. We implement it in a new file called audio_assistant.py by following these steps:

- Record the user's audio prompt using the record_audio() function.
- Convert the audio prompt to text with the transcribe_audio() function.
- Use a regular text-to-text model like gpt-4o to generate an answer.
- Convert the textual answer to audio using the text_to_audio() function.
- Repeat until the user exits.

The following diagram illustrates this:

I encourage you to try building it yourself before reading further.

First, we import the functions we implemented before and initialize the OpenAI client.

```python
# Import the functions we created
from text_to_audio import text_to_audio
from audio_to_text import transcribe_audio
from audio_recorder import record_audio

# Import other dependencies and initialize OpenAI
import asyncio
from openai import AsyncOpenAI
from dotenv import load_dotenv

load_dotenv()
openai = AsyncOpenAI()
```

Then, we need a function to generate the answer. It uses the regular OpenAI chat completions API with gpt-4o or any other text-to-text model. If you're new to this, you might want to check this GPT-4o API tutorial.

Here's an async implementation of this function:

```python
async def get_answer(prompt):
    # Request a streamed chat completion for the transcribed prompt
    stream = await openai.chat.completions.create(
        model="gpt-4o",
        messages=[
            {"role": "user", "content": prompt}
        ],
        stream=True,
    )
    answer = ""
    # Print each text chunk as it arrives and accumulate the full answer
    async for chunk in stream:
        content = chunk.choices[0].delta.content
        if content is not None:
            answer += content
            print(content, end="", flush=True)
    print("\n\n")
    return answer
```

To implement the main loop, we follow the steps outlined above:

```python
async def main(tone_and_style_instructions):
    # Greet the user once at startup
    await text_to_audio("Hello, how can I help you today?", tone_and_style_instructions)
    while True:
        record_audio("prompt.wav")                     # 1. Record the spoken prompt
        prompt = await transcribe_audio("prompt.wav")  # 2. Speech to text
        print()
        answer = await get_answer(prompt)              # 3. Generate a text answer
        await text_to_audio(answer, tone_and_style_instructions)  # 4. Speak the answer
```

Finally, we run the main loop when the script is executed:

```python
if __name__ == "__main__":
    tone_and_style_instructions = "Enthusiastic voice."
    asyncio.run(main(tone_and_style_instructions))
```

Here's a demo of it in action:

The complete code can be found here.
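As written, the while True loop in main() never ends on its own. One lightweight option is to check the transcript for an exit phrase before calling get_answer(). This is an assumption, not part of the original project. The helper name is_exit_command and the phrase set are hypothetical.

```python
import re

# Hypothetical phrases that end the conversation.
EXIT_PHRASES = {"goodbye", "stop the conversation", "exit", "quit"}


def is_exit_command(transcript: str) -> bool:
    """Return True if the whole transcript is one of the exit phrases."""
    # Lowercase, drop punctuation the transcription model may add, trim whitespace.
    normalized = re.sub(r"[^\w\s]", "", transcript.lower()).strip()
    return normalized in EXIT_PHRASES
```

In main(), you could check the prompt right after transcribe_audio() and break out of the loop when is_exit_command(prompt) returns True. Matching the whole transcript, rather than searching for a substring, avoids stopping on sentences like "Don't exit yet."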

Further Improvements

If we use the assistant with complex tone and style instructions, we may notice a mismatch between the words and the tone. For example, consider the following instructions for an "Emo Teenager" voice taken from the OpenAI website:

```python
tone_and_style_instructions = """
Tone: Sarcastic, disinterested, and melancholic, with a hint of passive-aggressiveness.
Emotion: Apathy mixed with reluctant engagement.
Delivery: Monotone with occasional sighs, drawn-out words, and subtle disdain, evoking a classic emo teenager attitude.
"""
```

The voice will match this style, but the text generated by the get_answer() function doesn't take these instructions into account, which can make the result feel inconsistent. Here's an example:

To overcome this, we can pair the user message in the get_answer() function with a system prompt indicating that the generated text should follow the tone and style instructions.

For this, we provide the tone_and_style_instructions as the second argument of the get_answer() function and modify the chat request by adding a system message:

```python
async def get_answer(prompt, tone_and_style_instructions):
    stream = await openai.chat.completions.create(
        model="gpt-4o",
        messages=[
            {
                # System message asking the model to write in the requested style
                "role": "system",
                "content": f"""
                The text you generate is being used in a text-to-voice model.
                Make sure your answer matches the guidelines {tone_and_style_instructions}
                """
            },
            {"role": "user", "content": prompt}
        ],
        stream=True,
    )
    answer = ""
    async for chunk in stream:
        content = chunk.choices[0].delta.content
        if content is not None:
            answer += content
            print(content, end="", flush=True)
    print("\n\n")
    return answer
```

With main() updated to call get_answer(prompt, tone_and_style_instructions), here’s how the model answers now:

As you can see, the text that it generates now matches the tone instructions. The complete code can be found here.
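Both versions of get_answer() accumulate the streamed chunks in the same way, skipping chunks whose delta.content is None (the final chunk of a stream typically carries no text). The sketch below moves that accumulation into a helper so it can be run against fake chunks shaped like chunk.choices[0].delta.content. It also skips chunks with an empty choices list, which can appear when usage reporting is enabled. The names collect_answer and fake_chunk are illustrative and are not part of the OpenAI SDK.

```python
import asyncio
from types import SimpleNamespace
from typing import Any, AsyncIterable


async def collect_answer(stream: AsyncIterable[Any]) -> str:
    """Join the text deltas of a streamed chat completion."""
    parts = []
    async for chunk in stream:
        if not chunk.choices:  # e.g. a usage-only chunk carries no choices
            continue
        content = chunk.choices[0].delta.content
        if content is not None:  # the final chunk's delta typically has no content
            parts.append(content)
    return "".join(parts)


def fake_chunk(content):
    """Build an object shaped like a streamed chat completion chunk."""
    delta = SimpleNamespace(content=content)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


async def fake_stream():
    for content in ["", "Hi", " there", None]:
        yield fake_chunk(content)
    yield SimpleNamespace(choices=[])


if __name__ == "__main__":
    print(asyncio.run(collect_answer(fake_stream())))  # Hi there
```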

Building an Assistant with the Agents SDK

In the previous example, we called the speech-to-text and text-to-speech models ourselves to build a voice assistant. This taught us how to use the new models from OpenAI’s audio API explicitly.

However, if the goal is simply to build a voice assistant, there’s an easier way: the OpenAI Agents SDK. It now includes voice support that handles the speech-to-text-to-speech workflow we’ve implemented here automatically.

If it’s your first time using the Agents SDK, you may want to take a look at this tutorial on OpenAI Agents SDK.

Before we start, we need to install one more dependency:

```bash
pip install 'openai-agents[voice]'
```

With that out of the way, we start by importing everything we need to run an agent with a voice pipeline:

```python
from dotenv import load_dotenv
load_dotenv()

import asyncio
from agents import Agent
from agents.voice import (
    AudioInput,
    SingleAgentVoiceWorkflow,
    VoicePipeline,
    VoicePipelineConfig,
    TTSModelSettings,
)
from audio_recorder import record_audio
from audio_player import AudioPlayer
```

The AudioPlayer doesn’t come from any package. It’s imported from a local file and contains a simple class that plays audio in real time. We need it because the VoicePipeline delivers the audio chunk by chunk, and we play back each chunk as we receive it. Here’s the content of the audio_player.py file:

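```python
import numpy as np
import sounddevice as sd

class AudioPlayer:
    def __enter__(self):
        # The pipeline's audio output is 16-bit mono at 24 kHz
        self.stream = sd.OutputStream(samplerate=24000, channels=1, dtype=np.int16)
        self.stream.start()
        return self

    def __exit__(self, tp, val, tb):
        # stop() waits for pending buffers to play; close() alone would discard them
        self.stream.stop()
        self.stream.close()

    def add_audio(self, audio_data):
        # Write one chunk of samples to the output device
        self.stream.write(audio_data)
```

The next step is to create an agent: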

```python
agent = Agent(
    name="Voice Assistant",
    instructions="You're a helpful assistant speaking to a human.",
    model="gpt-4o-mini",
)
```

Here’s a description of the parameters we used:

- name: This can be anything we want.
- instructions: These instructions define what the agent is supposed to be. They work as the system prompt.
- model: The model that is used to generate answers.

The agent plays the same role as the get_answer() function we implemented previously. Think of it as the part of the pipeline that provides an answer to a text prompt.

Next, we define the pipeline. This is where we specify all the configurations related to the voice:

```python
pipeline = VoicePipeline(
    workflow=SingleAgentVoiceWorkflow(agent),
    stt_model="gpt-4o-mini-transcribe",
    tts_model="gpt-4o-mini-tts",
    config=VoicePipelineConfig(
        tts_settings=TTSModelSettings(
            voice="coral",
            instructions="""
            Speak in an enthusiastic voice.
            """
        )
    )
)
```

Here’s a breakdown of some of the parameters:

- stt_model: The model used to convert speech into text.
- tts_model: The model used to convert text into speech.
- config: The pipeline configuration. Here we use it to specify the voice we want the tts_model to use, as well as the speech instructions.

Finally, we run the main loop, similarly to what we did before:

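```python
async def main():
    while True:
        # record_audio() records at 44.1 kHz, so pass that rate to AudioInput,
        # which otherwise assumes 24 kHz audio
        audio_input = AudioInput(buffer=record_audio(), frame_rate=44100)
        result = await pipeline.run(audio_input)
        # Play the synthesized reply chunk by chunk as it streams in
        with AudioPlayer() as player:
            async for event in result.stream():
                if event.type == "voice_stream_event_audio":
                    player.add_audio(event.data)

if __name__ == "__main__":
    asyncio.run(main())
```

You can find a complete implementation here.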

Adding tools

Note that this loop runs forever because we didn’t specify a stop condition, so you’ll have to kill the process manually to stop it. One way to implement stopping is to give the agent a tool.

Tools are functions that we provide to the agent so that it can execute them for us. In this case, we could provide a function that stops the script. The agent uses the function’s name and docstring to decide whether it should call it.

```python
import sys
from agents import function_tool

@function_tool
def stop_conversation():
    """Stop the conversation."""
    sys.exit()
```

Then, we provide the tool to the agent:

```python
agent = Agent(
    # …
    tools=[stop_conversation],  # Add this line when creating the agent
)
```

With this implementation, if we say, “I would like to stop the conversation,” the agent will understand that it needs to call the stop_conversation() function. Note that this implementation will not exit gracefully because of the way the stop_conversation() function is implemented.

Check this file if you want a complete script.
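Exiting from inside the tool ends the process in the middle of the agent's run. A gentler pattern is to have the tool set a flag and let the main loop finish its current turn and return. This is sketched here as an assumption, not as the SDK's prescribed approach. In the real script, you would decorate stop_conversation with @function_tool as above and keep the Agent and VoicePipeline code. Here, a hypothetical run_turn callable stands in for one record, pipeline.run, and playback cycle, so the control flow can run on its own.

```python
import asyncio
import threading

# Set by the tool, read by the main loop. threading.Event is safe whether the
# tool runs on the event loop thread or in a worker thread.
stop_requested = threading.Event()


def stop_conversation() -> str:
    """Stop the conversation."""
    # In the real script, decorate this with @function_tool from agents.
    stop_requested.set()
    return "Ending the conversation."


async def main(run_turn) -> int:
    """Run conversation turns until the tool requests a stop; return the turn count."""
    turns = 0
    while not stop_requested.is_set():
        # Hypothetical: record audio, await pipeline.run(...), and play the reply.
        await run_turn()
        turns += 1
    return turns
```

Because the flag is only checked between turns, the agent's spoken goodbye finishes playing and the AudioPlayer context closes normally before the script returns.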

Using multiple agents

A neat feature of the Agents SDK is that we can configure multiple agents to work together. Here’s an example adapted from the OpenAI documentation:

```python
from agents.extensions.handoff_prompt import prompt_with_handoff_instructions

spanish_agent = Agent(
    name="Spanish voice assistant",
    handoff_description="A spanish speaking agent.",
    instructions=prompt_with_handoff_instructions(
        "You're speaking to a human, so be polite and concise. Speak in Spanish.",
    ),
    model="gpt-4o-mini",
)

agent = Agent(
    name="Voice Assistant",
    instructions=prompt_with_handoff_instructions("""
        You're speaking to a human, so be polite and concise.
        If the user speaks in Spanish, handoff to the spanish agent.
    """),
    model="gpt-4o-mini",
    handoffs=[spanish_agent],  # Agents this agent is allowed to hand off to
)
```

In this example, we define a second agent designed to speak Spanish and register it in the first agent's handoffs list. The first agent's instructions, wrapped with prompt_with_handoff_instructions, tell it when to hand off. When the user speaks Spanish, the first agent hands off to the Spanish agent, which continues the conversation.

The full script is available here.

Conclusion

By using the advanced capabilities of OpenAI's latest audio models, we have created a system that can effectively transcribe spoken language into text and generate human-like speech from textual responses. This project demonstrates not only the potential of current technology but also how accessible these tools have become for developers interested in creating custom AI solutions.