What is Pandas AI?

Pandas AI is a Python library that uses generative AI models to supercharge pandas capabilities. It was created to complement the pandas library, a widely used tool for data analysis and manipulation.

Users can summarize pandas DataFrames using natural language. You can also use it to plot complex visualizations, manipulate DataFrames, and generate business insights.

Image from Pandas-AI

Pandas AI is beginner-friendly: even someone with little technical background can use it to perform complex data analytics tasks, which helps them analyze data faster and draw meaningful conclusions.

Getting Started with Pandas AI

In this section, we will learn how to install and set up Pandas AI for data analysis.

First, we will install Pandas AI using pip.

```bash
pip install pandasai
```

Optional install: we can also install the Google PaLM dependency with the following command.

```bash
# The quotes stop shells such as zsh from treating [google] as a glob pattern
pip install "pandasai[google]"
```

Second, we need to obtain an OpenAI API key and store it as an environment variable by following the tutorial on Using GPT-3.5 and GPT-4 via the OpenAI API in Python.
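The setup code reads the key with `os.environ["OPENAI_API_KEY"]`, which fails with a bare `KeyError` if the variable was never exported. A small helper like the one below gives a clearer message before any LLM wrapper is created. The name `get_openai_key` is our own illustration, not part of PandasAI.

```python
import os


def get_openai_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in `var_name`, failing loudly if it is missing."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        # Fail early with a readable message instead of a KeyError later on.
        raise RuntimeError(
            f"{var_name} is not set; export it in your shell before starting Python."
        )
    return key
```

With this in place, `openai_api_key = get_openai_key()` can stand in for the direct dictionary lookup.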

Finally, we import the essential classes, pass the OpenAI key to the LLM wrapper, and instantiate a PandasAI object. We will use this object to run prompts on one or more pandas DataFrames.

```python
import os

from pandasai import PandasAI
from pandasai.llm.openai import OpenAI

# Read the key stored as an environment variable in the previous step
openai_api_key = os.environ["OPENAI_API_KEY"]

llm = OpenAI(api_token=openai_api_key)
pandas_ai = PandasAI(llm)
```

In addition to OpenAI GPT-3.5, you can use the LLM wrappers for Google PaLM (Bison) or for open-source models hosted on Hugging Face, such as StarCoder and Falcon.

```python
from pandasai.llm.starcoder import Starcoder
from pandasai.llm.falcon import Falcon
from pandasai.llm.google_palm import GooglePalm

# Pick ONE of the wrappers below

# Google PaLM
llm = GooglePalm(api_token="YOUR_Google_API_KEY")

# StarCoder (Hugging Face)
llm = Starcoder(api_token="YOUR_HF_API_KEY")

# Falcon (Hugging Face)
llm = Falcon(api_token="YOUR_HF_API_KEY")

# Then pass the chosen wrapper to PandasAI as before
pandas_ai = PandasAI(llm)
```

You can also set up a .env file and skip passing `api_token`. To do so, add your API keys to the .env file using the following template:

```
HUGGINGFACE_API_KEY=
OPENAI_API_KEY=
```
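PandasAI loads this file for you. To see what that involves, or to load the same keys into your own scripts, here is a minimal standard-library sketch. The `parse_env` and `load_env` names are hypothetical; in real projects the `python-dotenv` package's `load_dotenv()` is the usual choice.

```python
import os


def parse_env(text: str) -> dict:
    """Parse KEY=VALUE lines, skipping blanks, comments, and empty values."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip().strip('"').strip("'")
        if value:  # an unfilled placeholder such as "OPENAI_API_KEY=" is ignored
            values[key.strip()] = value
    return values


def load_env(path: str = ".env") -> dict:
    """Copy keys from a .env file into os.environ without overwriting existing ones."""
    with open(path, encoding="utf-8") as fh:
        values = parse_env(fh.read())
    for key, value in values.items():
        os.environ.setdefault(key, value)
    return values
```

Using `setdefault` means a key already exported in your shell takes precedence over the file.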

Basic Uses of Pandas AI

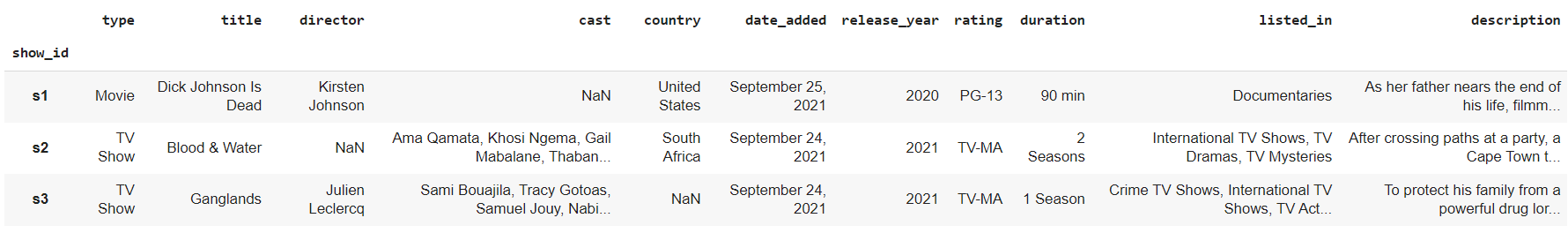

In this basic use case, we will load the Netflix Movie Data using the pandas library. The dataset consists of more than 8,500 movies and TV shows available on Netflix.

```python
import pandas as pd

df = pd.read_csv("netflix_dataset.csv", index_col=0)
df.head(3)
```

Follow our Python pandas tutorial to learn everything you can do with the pandas Python library.

By passing a DataFrame and a prompt, we can get Pandas AI to analyze and manipulate the dataset. In our case, we will prompt Pandas AI to display the records of the five longest-duration movies.

```python
pandas_ai.run(df, prompt='What are 5 longest duration movies?')
```

As we can see, the longest-duration movie is Black Mirror: Bandersnatch, at 312 minutes.
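Behind the scenes, the LLM writes ordinary pandas code. We don't know exactly what it generated here, but a hand-written equivalent would look roughly like the sketch below. Because the Netflix CSV isn't reproduced in this post, the sketch uses a small made-up DataFrame whose column names (`title`, `type`, `duration`) are assumptions; adjust them to match your file, and convert `duration` to a number first if it is stored as text.

```python
import pandas as pd

# Hypothetical stand-in for the Netflix data; real column names may differ.
df = pd.DataFrame({
    "title": ["Title A", "Title B", "Title C", "Title D", "Title E", "Title F", "Show G"],
    "type": ["Movie", "Movie", "Movie", "Movie", "Movie", "Movie", "TV Show"],
    "duration": [95, 312, 140, 88, 201, 120, 3],  # assumed numeric minutes for movies
})

# Keep movies only, then take the five rows with the largest duration.
movies = df[df["type"] == "Movie"]
top5 = movies.nlargest(5, "duration")
print(top5)

# Asking only for the names corresponds to selecting the title column.
print(top5["title"].tolist())
```

Filtering on `type` first matters: without it, TV shows could compete with movies in the same column even though their durations are measured differently.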

Let’s ask it to display only the names of the five longest-duration movies.

```python
pandas_ai.run(df, prompt='List the names of the 5 longest duration movies.')
```

```
['Black Mirror: Bandersnatch', 'Headspace: Unwind Your Mind', 'The School of Mischief', 'No Longer kids', 'Lock Your Girls In']
```

Note: to further protect your privacy, you can instantiate PandasAI with `enforce_privacy=True`. PandasAI then sends only the column names to the LLM, not the sample rows from the head of your dataset.
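To make the privacy difference concrete, the sketch below contrasts the two kinds of context such a tool could attach to a prompt. It is a hypothetical illustration in plain pandas, not PandasAI's actual prompt-building code, and `context_for_llm` is a made-up name: with privacy off, a few sample rows travel with the prompt; with privacy on, only the column names do.

```python
import pandas as pd


def context_for_llm(df: pd.DataFrame, enforce_privacy: bool) -> str:
    """Hypothetical helper: build the data description sent alongside a prompt."""
    if enforce_privacy:
        # Only the column names leave your machine.
        return "Columns: " + ", ".join(map(str, df.columns))
    # Otherwise a small sample of real rows is included as well.
    return df.head(3).to_csv(index=False)


df = pd.DataFrame({"title": ["Title A", "Title B"], "duration": [95, 312]})
print(context_for_llm(df, enforce_privacy=True))  # Columns: title, duration
print(context_for_llm(df, enforce_privacy=False))
```

The trade-off is that without sample values the LLM has less to go on, so prompts that depend on value formats may need to be more explicit.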

We can even ask Pandas AI to perform complex tasks like grouping, sorting, and combining.

```python
pandas_ai.run(df, prompt='What is the average duration of tv shows based on country? Make sure the output is sorted.')
```

```
country
Denmark, Singapore, Canada, United States    10.0
United States, Mexico, Colombia               7.0
Canada, United States, France                 5.5
United Kingdom, Ireland                       5.0
Canada, United Kingdom                        5.0
...
Spain, Cuba                                   1.0
Germany, France, Russia                       1.0
```

Note: some technical prompts might not work, especially when you ask it to group columns.
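A hand-written equivalent of this prompt is a filter, a `groupby`, and a sort. The sketch again uses a made-up DataFrame with assumed `type`, `country`, and `duration` columns; for TV shows, `duration` is taken to be a season count, which appears consistent with the small values in the output above.

```python
import pandas as pd

# Hypothetical data: for TV shows, duration is assumed to be a season count.
df = pd.DataFrame({
    "type": ["TV Show", "TV Show", "TV Show", "Movie", "TV Show"],
    "country": ["Spain", "Canada", "Spain", "Canada", "Canada"],
    "duration": [1, 4, 3, 110, 2],
})

# Keep TV shows, average duration per country, largest average first.
avg_seasons = (
    df[df["type"] == "TV Show"]
    .groupby("country")["duration"]
    .mean()
    .sort_values(ascending=False)
)
print(avg_seasons)
```

In the real output, `country` holds combined strings such as "Canada, United Kingdom", so each combination forms its own group. Splitting the column with `str.split(", ")` and `explode` before grouping would give per-country averages instead.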

Advanced Uses of Pandas AI

An advanced use case for Pandas AI is generating complex data visualizations and business analyses from multiple DataFrames.

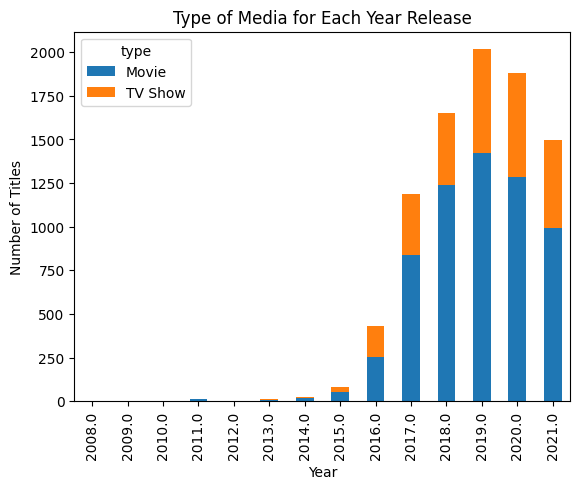

In the first example, we write a prompt to generate a bar chart showing the number of titles per release year, split by type (movie or TV show).

```python
pandas_ai.run(df, prompt='Plot the bar chart of type of media for each year release, using different colors.')
```

Note: You can save any charts generated by Pandas AI by setting the save_charts parameter to True. For example, PandasAI(llm, save_charts=True). The charts will be saved in the ./pandasai/exports/charts directory.
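The chart itself is drawn by plotting code the LLM writes. The counts behind it can be reproduced with `pd.crosstab`, which is handy for checking the figure. The sketch uses made-up data with assumed `release_year` and `type` columns.

```python
import pandas as pd

# Hypothetical sample; the real dataset's column names may differ.
df = pd.DataFrame({
    "release_year": [2019, 2019, 2020, 2020, 2020, 2021],
    "type": ["Movie", "TV Show", "Movie", "Movie", "TV Show", "TV Show"],
})

# Rows are years, columns are media types, cells are title counts.
counts = pd.crosstab(df["release_year"], df["type"])
print(counts)

# counts.plot(kind="bar")  # needs matplotlib; one colored bar per type per year
```

Years with no titles of a given type appear as 0 rather than being dropped, so every year gets one bar per type.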

In the second example, we create three DataFrames and ask Pandas AI a question that needs all three to answer.

To answer it, Pandas AI has to relate df1 and df2 through the "store" column and df2 and df3 through the "location" column, then process the combined data. It returns a result within a few seconds, much faster than working out these relationships by hand.

```python
# DataFrame 1: sales per store
df1 = pd.DataFrame({
    'sales': [100, 200, 300],
    'store': ['Walmart', 'Target', 'Walmart'],
})

# DataFrame 2: revenue per store and location
df2 = pd.DataFrame({
    'revenue': [400, 500, 600],
    'store': ['Walmart', 'Target', 'Walmart'],
    'location': ['North', 'South', 'West'],
})

# DataFrame 3: profit and headcount per location
df3 = pd.DataFrame({
    'profit': [700, 800, 900],
    'location': ['North', 'South', 'West'],
    'employees': [20, 25, 30],
})

pandas_ai.run([df1, df2, df3], prompt='How many employees work at Walmart?')
```

```
50
```

You can learn to perform complex data analysis tasks like this yourself by taking a Data Manipulation with pandas course.
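Answers from an LLM are worth checking by hand, and this example shows why. The sketch below is our own verification in plain pandas, not the code PandasAI generated. Chaining both joins and summing naively double-counts: df1 and df2 each contain two Walmart rows, so the store join produces four Walmart rows. Counting each Walmart location once gives the expected 50.

```python
import pandas as pd

df1 = pd.DataFrame({"sales": [100, 200, 300], "store": ["Walmart", "Target", "Walmart"]})
df2 = pd.DataFrame({
    "revenue": [400, 500, 600],
    "store": ["Walmart", "Target", "Walmart"],
    "location": ["North", "South", "West"],
})
df3 = pd.DataFrame({
    "profit": [700, 800, 900],
    "location": ["North", "South", "West"],
    "employees": [20, 25, 30],
})

# Naive: chain both joins, then sum. Every Walmart location is counted twice.
naive = df1.merge(df2, on="store").merge(df3, on="location")
naive_total = naive.loc[naive["store"] == "Walmart", "employees"].sum()

# Correct: employees belong to locations, so count each Walmart location once.
walmart_locations = df2.loc[df2["store"] == "Walmart", "location"].unique()
employees = df3.loc[df3["location"].isin(walmart_locations), "employees"].sum()

print(naive_total, employees)  # 100 50
```

The key design choice is joining at the right grain: headcount lives at the location level, so df1's per-store sales rows should not be allowed to multiply it.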

Pandas AI Command Line Interface (CLI)

The Pandas AI CLI is an experimental tool. To install it, clone the repository and move into the project directory.

```bash
git clone https://github.com/gventuri/pandas-ai.git
cd pandas-ai
```

After that, we use Poetry to create and activate a virtual environment. Run these commands in a terminal rather than a notebook: a `!poetry shell` cell would start a subshell that does not stay active for later cells.

```bash
poetry shell
```

Note: if Poetry is not installed on your system, you can install it using `curl -sSL https://install.python-poetry.org | python3 -`.

Use the following command to install the dependencies inside the activated environment.

```bash
poetry install
```

Finally, use the Pandas AI CLI tool, `pai`, from the same terminal. You must provide a dataset, a model name, and a prompt. If no token is provided, `pai` will read it from the .env file.

```bash
pai -d "netflix_dataset.csv" -m "openai" -p "What are 5 longest duration movies?"
```

- `-d, --dataset`: The file path to the dataset.
- `-t, --token`: Your Hugging Face or OpenAI API token.
- `-m, --model`: The LLM to use. Options are `openai`, `open-assistant`, `starcoder`, `falcon`, `azure-openai`, or `google-palm`.
- `-p, --prompt`: The prompt for PandasAI to execute.
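If you want to call the CLI from a Python script, for example to run the same prompt against several files, you can assemble the argument list from the flags documented above and pass it to `subprocess.run`. The `build_pai_command` and `run_pai` helpers are hypothetical, and the sketch assumes the `pai` executable is on your PATH (for example, inside the activated Poetry environment).

```python
import subprocess
from typing import List, Optional

# Model names as listed in the CLI options above; newer releases may differ.
SUPPORTED_MODELS = {"openai", "open-assistant", "starcoder", "falcon", "azure-openai", "google-palm"}


def build_pai_command(dataset: str, model: str, prompt: str,
                      token: Optional[str] = None) -> List[str]:
    """Assemble a pai invocation; a list of arguments avoids shell-quoting issues."""
    if model not in SUPPORTED_MODELS:
        raise ValueError(f"Unknown model {model!r}; expected one of {sorted(SUPPORTED_MODELS)}")
    cmd = ["pai", "-d", dataset, "-m", model, "-p", prompt]
    if token is not None:
        cmd += ["-t", token]  # without -t, pai falls back to the .env file
    return cmd


def run_pai(dataset: str, model: str, prompt: str, token: Optional[str] = None) -> str:
    """Run pai and return its standard output (requires pai on PATH)."""
    result = subprocess.run(
        build_pai_command(dataset, model, prompt, token),
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

For example, `run_pai("netflix_dataset.csv", "openai", "What are 5 longest duration movies?")` mirrors the terminal command above. Passing a list instead of a single string means prompts containing quotes or spaces need no escaping.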

Read the Pandas AI documentation to learn about more functions and features that can simplify your workflow.

Conclusion

Pandas AI has the potential to revolutionize data analysis by leveraging large language models to generate insights from datasets.

While data scientists typically spend significant time cleaning, exploring, and visualizing data, Pandas AI automates many of these repetitive tasks.

However, like all AI tools, Pandas AI still has limitations and cannot completely replace humans. The analyzed results often require human verification to ensure accuracy and identify any edge cases.

In this post, we learned how to install, set up, and use Pandas AI for data analysis. We utilized Pandas AI to perform data analysis tasks, generate data visualizations, and leverage multiple dataframes to gain business insights. If you want to improve your prompts to get better results, consider completing an Introduction to ChatGPT course or referencing the ChatGPT Cheat Sheet for Data Science.

As a certified data scientist, I am passionate about leveraging cutting-edge technology to create innovative machine learning applications. With a strong background in speech recognition, data analysis and reporting, MLOps, conversational AI, and NLP, I have honed my skills in developing intelligent systems that can make a real impact. In addition to my technical expertise, I am also a skilled communicator with a talent for distilling complex concepts into clear and concise language. As a result, I have become a sought-after blogger on data science, sharing my insights and experiences with a growing community of fellow data professionals. Currently, I am focusing on content creation and editing, working with large language models to develop powerful and engaging content that can help businesses and individuals alike make the most of their data.