
What is Edge Computing?

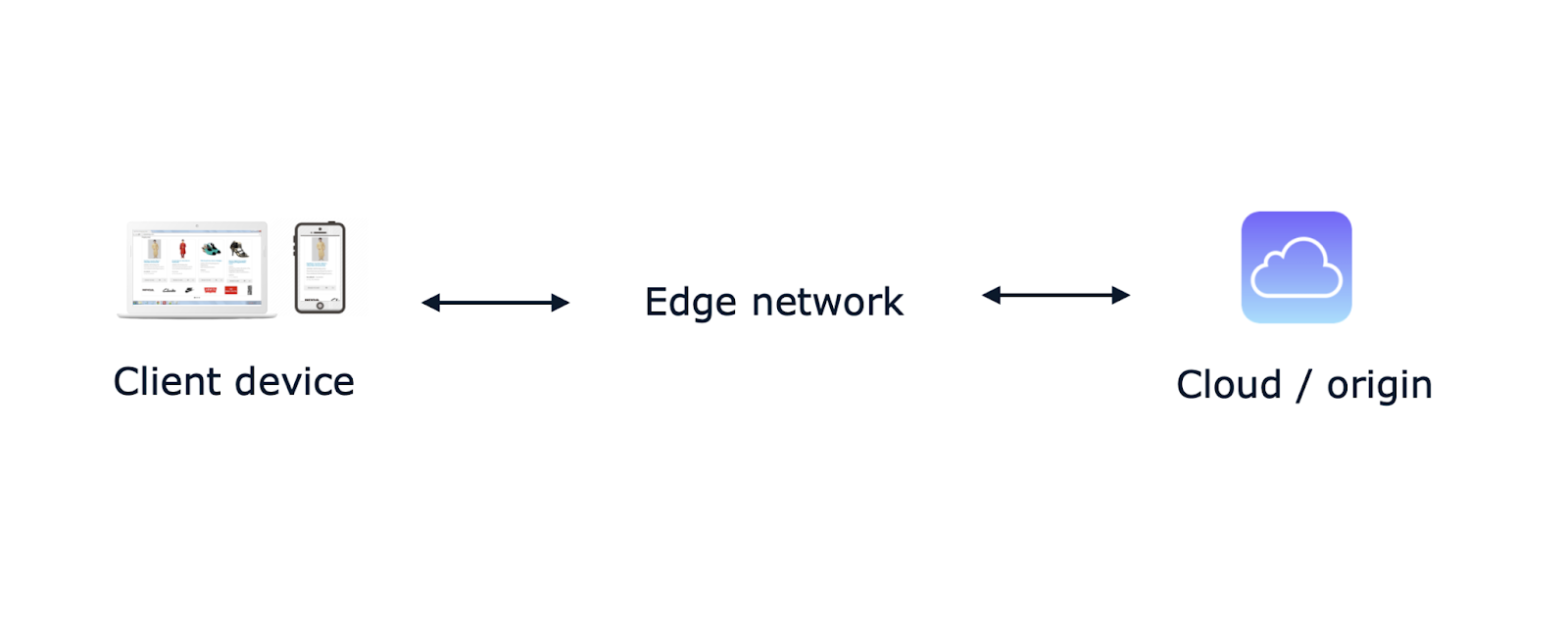

There are many ways to define edge computing, but the core concept is quite simple. Edge computing moves computation and data storage closer to the user to reduce latency compared to centralized data centers.

This leads to different architectural designs based on the type of application you’re building. In fact, edge computing is a broad term that encompasses many technological areas.

For example, in industrial or manufacturing use cases, computation is pushed to IoT devices and edge gateways to aggregate or preprocess data before pushing it to the cloud. In other industries, such as healthcare, retail, or automotive, you’ll find similar applications that push business-critical compute logic to wearable devices, smart cameras, or autonomous vehicles. In all these use cases, computation must happen quickly at the edge, avoiding delays from network round trips to distant data centers.
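A quick back-of-the-envelope calculation shows why distance matters. The sketch below computes the physical lower bound on round-trip time. It assumes signals in optical fiber travel at roughly 200,000 km/s (about two-thirds of the speed of light in a vacuum). The 9,000 km and 50 km distances are hypothetical, and real round trips add routing detours, queuing, and processing time on top of this floor.

```python
# Physical lower bound on network round-trip time (RTT).
# Assumption: light in optical fiber covers ~200 km per millisecond
# (~200,000 km/s, about two-thirds of its speed in a vacuum).
FIBER_KM_PER_MS = 200.0


def min_rtt_ms(distance_km: float) -> float:
    """Theoretical minimum round-trip time in milliseconds over fiber."""
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    # There and back again, at fiber speed, with zero processing time.
    return 2 * distance_km / FIBER_KM_PER_MS


if __name__ == "__main__":
    # Hypothetical distances: a far-away cloud region vs. a nearby edge server.
    for label, km in [("distant data center", 9_000), ("nearby edge server", 50)]:
        print(f"{label}: at least {min_rtt_ms(km):.1f} ms per round trip")
```

A single page load often needs several sequential round trips (for example, TCP and TLS handshakes before the first request), so this floor is paid more than once. That is the gap edge servers aim to close.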

A bit of web and cloud computing history

In this article, we’ll focus on CDN-based edge computing, which is the type of edge you care about if you use web and cloud services on a daily basis to build web applications.

To understand CDN-based edge computing and its purpose, we need to explore the evolution of the web and the cloud over the years. This context is essential because edge computing is key to that evolution.

Let’s have a quick look at how the web was born and how it led to the cloud:

- 1960s: ARPANET, the precursor of the Internet, is launched, connecting 4 computers in the USA, mainly for research purposes between universities.

- 1970s: The network grows to roughly 200 nodes, including the first nodes outside the USA, in the UK and elsewhere in Europe. Queen Elizabeth II sends her first email on March 26, 1976.

- 1980s: The network grows rapidly to nearly 100,000 nodes, helped by the first personal computers, and forms the basis of the modern Internet.

- 1990s: The World Wide Web (WWW) is born, along with the first widely popular graphical web browser (Mosaic) and the first e-commerce and web companies such as Amazon, Google, and eBay, reaching almost 250M users by 1999.

- 2000s: Faster broadband connections enable richer content, and 3G (later 4G) networks bring it to early smartphones. Major social media platforms emerge (MySpace, LinkedIn, Facebook, YouTube, Twitter).

The mid-2000s are also when the cloud was born. Let’s review a few specific milestones from the last 20 years:

- 2006: Amazon Web Services (AWS) is launched, becoming the first major public cloud platform, with services such as SQS, S3, and EC2.

- 2008: Google Cloud is launched with the preview of Google App Engine (then Google Cloud Storage and BigQuery in 2010).

- 2010: Windows Azure is launched (then renamed to Microsoft Azure in 2014).

- 2013: Docker is launched, popularizing container-based computing.

- 2014: Kubernetes is announced (then reaching 1.0 and joining CNCF in 2015).

- 2015: AWS Lambda becomes generally available (after being announced in late 2014), and serverless computing is born, followed within a couple of years by Azure Functions, Google Cloud Functions, and many others.

So, what does the cloud look like today?

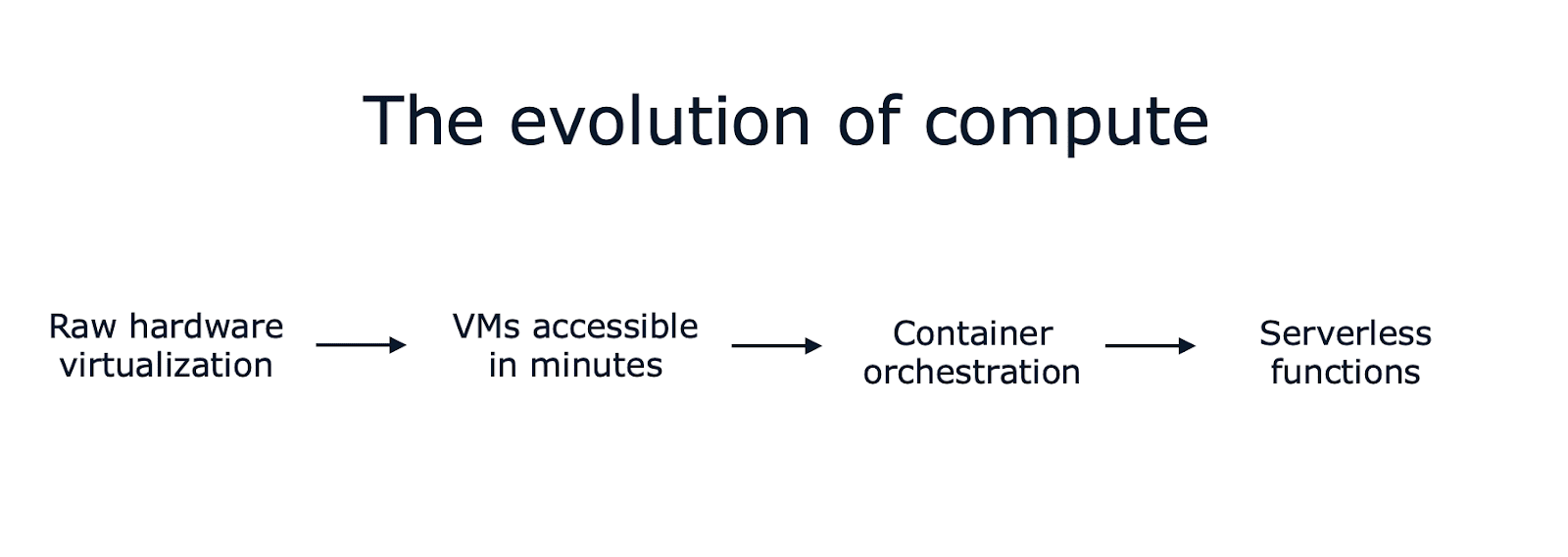

In under a decade, web application infrastructure shifted from raw hardware to virtual machines provisioned within minutes via API, and then all the way to container orchestration and serverless functions that spin up in seconds (or even tens of milliseconds), with per-second (or even per-millisecond) billing and an incredible amount of built-in automation.

And that’s just for the computing side of things. There are literally hundreds of managed services when you look at storage, networking, databases, analytics, and so on. Managed databases are especially interesting because you can find plenty of options (including your favorite open-source engines), and they often automate the most tedious parts of operating a database in production.

Some argue that cloud services have made building web applications easier and faster than ever, while others feel it was simpler and more straightforward 10 or 20 years ago. The reality is quite subjective and depends on what you’re building and what your business goals and priorities are.

What else has changed in the last 10 years?

Back to our quick historical analysis, something else happened—almost silently—in the last decade or so. Content delivery networks underwent a similar evolution, leading to what I previously called “CDN-based edge computing.”

A content delivery network (CDN) is a network of proxy servers distributed worldwide to improve website performance. In simple terms, a CDN helps content owners deliver content to Internet users by accelerating transfers and caching content across hundreds of edge servers. Positioned in front of your website, a CDN can also shield the origin from DDoS and other attacks.
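The caching half of that definition can be sketched in a few lines: an edge node answers repeated requests from a local cache and contacts the origin only on a miss or after an entry expires. This is a simplified single-node model with a hypothetical `fetch_from_origin` callback and a fixed TTL; real CDNs honor `Cache-Control` headers, cache keys, invalidation, and much more.

```python
import time
from typing import Callable


class EdgeCache:
    """Minimal TTL cache placed in front of an origin fetch function."""

    def __init__(
        self,
        fetch_from_origin: Callable[[str], bytes],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        # path -> (expiry timestamp, cached body)
        self._entries: dict[str, tuple[float, bytes]] = {}
        self.origin_requests = 0  # how many times the origin was contacted

    def get(self, path: str) -> bytes:
        now = self._clock()
        entry = self._entries.get(path)
        if entry is not None and now < entry[0]:
            return entry[1]  # cache hit: served from the edge, origin untouched
        # Miss or expired entry: fetch from the origin and cache the result.
        body = self._fetch(path)
        self.origin_requests += 1
        self._entries[path] = (now + self._ttl, body)
        return body


if __name__ == "__main__":
    # Hypothetical origin that just echoes the requested path.
    cache = EdgeCache(lambda path: f"content of {path}".encode(), ttl_seconds=60)
    for _ in range(1_000):
        cache.get("/index.html")
    print(cache.origin_requests)  # 1: the other 999 requests were cache hits
```

The injectable `clock` is only there to make expiry easy to test; in practice the default monotonic clock is what you want.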

But that’s not the entire picture anymore. Let’s look at how they have also evolved over the last 10 years.


The Evolution of Content Delivery Networks (CDNs)

Initially focused on caching and protecting origin servers, CDNs began evolving with the rise of serverless computing. A new idea became popular very quickly: developers could define an atomic “function” that runs on-demand in response to an event, such as a new image upload or a new database record, without having to own and maintain the underlying physical or virtual infrastructure.
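To make the on-demand “function” idea concrete, here is a toy, platform-agnostic dispatcher: handlers are registered per event type and run only when a matching event arrives. The `on` and `dispatch` helpers, the event names, and the event shape are all hypothetical; each real platform defines its own handler signature and wiring.

```python
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], Any]
_handlers: dict[str, list[Handler]] = {}


def on(event_type: str) -> Callable[[Handler], Handler]:
    """Register a function to run whenever an event of `event_type` arrives."""
    def register(fn: Handler) -> Handler:
        _handlers.setdefault(event_type, []).append(fn)
        return fn
    return register


def dispatch(event: dict[str, Any]) -> list[Any]:
    """Invoke every handler registered for the event's type (none if unknown)."""
    return [fn(event) for fn in _handlers.get(event["type"], [])]


@on("image.uploaded")
def make_thumbnail(event: dict[str, Any]) -> str:
    # Hypothetical work: a real function would resize the stored image.
    return f"thumbnail queued for {event['key']}"


if __name__ == "__main__":
    print(dispatch({"type": "image.uploaded", "key": "photos/cat.jpg"}))
    print(dispatch({"type": "db.record_created", "id": 42}))  # no handler: []
```

With serverless, the provider owns this dispatch loop and the machines it runs on; you only ship the handler.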

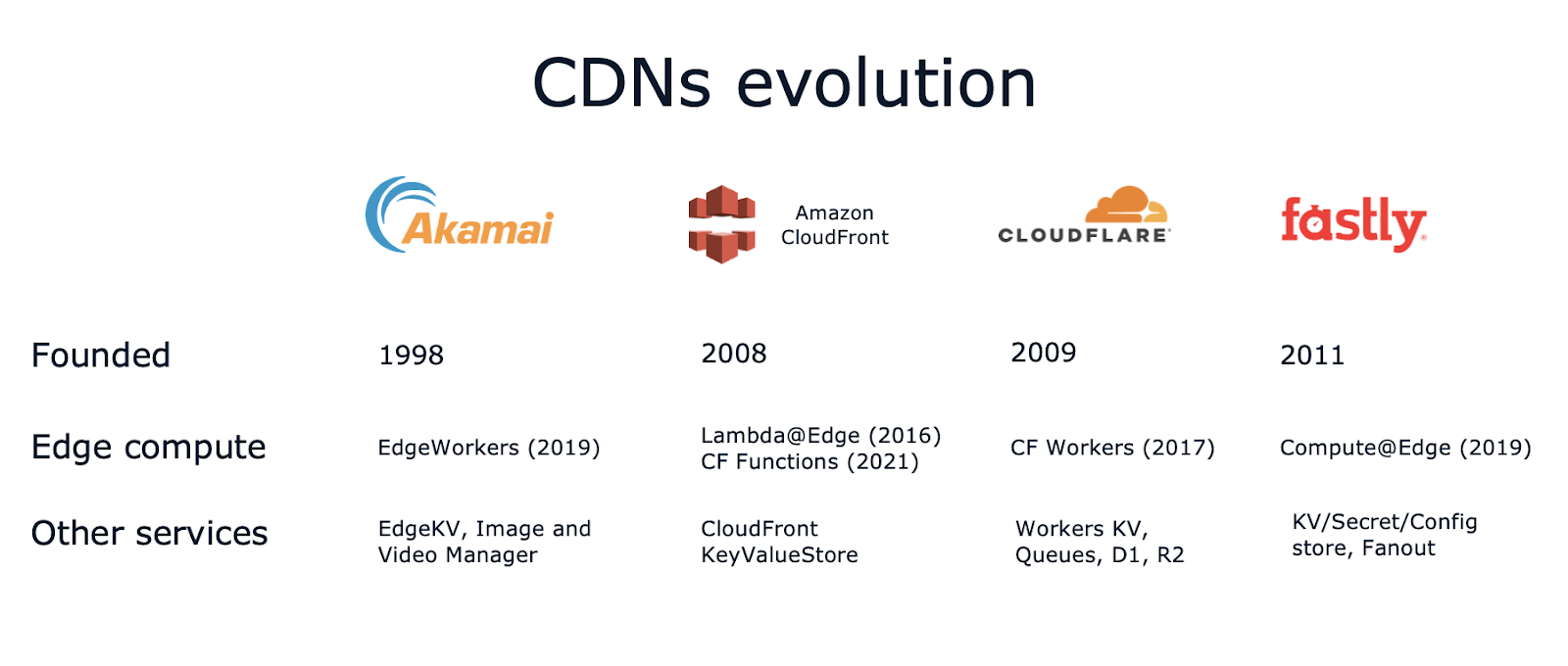

AWS Lambda was the first major example of this new paradigm (announced in late 2014 and generally available in 2015). AWS Lambda@Edge followed in 2016, allowing you to adopt the same approach with functions running on Amazon CloudFront, AWS’s CDN service.

Popular CDNs followed suit, introducing services such as Cloudflare Workers in 2017, Fastly Compute@Edge in 2019, and Akamai EdgeWorkers in 2019.

And they didn’t stop there! As we said at the beginning of this article, edge computing isn’t just about bringing compute capabilities to the edge. In most cases, you also need some sort of data storage. So CDNs kept evolving and shipped new edge services such as key-value stores, object storage, SQL databases, image resizing, AI inference, and more!

For example:

- Cloudflare’s development platform includes edge services such as Workers KV for key-value storage, D1 for relational SQL databases with point-in-time recovery, R2 for object storage with no egress fees, and Queues for asynchronous task execution.

- Similarly, on Fastly you find KV Store for key-value pairs (with separate Config Store and Secret Store products for configuration data and secrets), and Fanout for bi-directional asynchronous pub/sub at the edge.

- And you guessed it, Akamai offers EdgeKV.

- Amazon CloudFront introduced its CloudFront KeyValueStore in 2023.

Today, CDNs offer services that you’d normally expect to run only in a cloud region (or data center), from serverless functions to key-value stores to object storage, queues, and relational databases. Coincidentally, queues, object storage, and compute capacity were the very first cloud services announced back in 2006, now debuting at the edge in a globally distributed fashion.

Under the hood, these new edge compute platforms use different technologies and support different programming languages. For instance, Cloudflare uses Chrome’s V8 engine, which initially supported only JavaScript but began supporting WebAssembly in 2018. Fastly, on the other hand, chose WebAssembly from the beginning to sandbox its environment and avoid some of the startup latency limitations of V8. This allows Fastly to support many programming languages (some officially, others through community SDKs), such as TypeScript, Rust, Go, .NET, Ruby, and Swift.

Let’s take a quick detour into WebAssembly.

WebAssembly at the edge

WebAssembly (or Wasm for short) might be the most interesting development in this space. Since it shipped in all major browsers in 2017 and became a W3C Recommendation in 2019, a lot of folks have talked about it for running Wasm binaries in the browser, but there is also a lot of potential for server-side execution, including at the edge.

Wasm allows you to write business logic in various supported languages and compile it into a binary file that runs on Wasm runtimes like Bytecode Alliance’s wasmtime.

Together with WASI (WebAssembly System Interface), which is being standardized within the W3C WebAssembly Community Group, it allows you to compose software written in different languages through a standardized interface.

If this reminds you of something very popular in the cloud world, check out this 2019 tweet from Docker founder Solomon Hykes:

If WASM+WASI existed in 2008, we wouldn't have needed to create Docker. That's how important it is. WebAssembly on the server is the future of computing. A standardized system interface was the missing link. Let's hope WASI is up to the task!

So, the expectations for Wasm + WASI are very high. I highly encourage you to keep an eye on the Bytecode Alliance and its efforts to create secure and open compilers, runtimes, and tooling for WebAssembly. The nonprofit organization was founded in 2019 by Mozilla, Fastly, Intel, and Red Hat. Today, its members include other cloud and edge players such as Amazon, Arm, Cisco, Docker, Fermyon, Microsoft, Nginx, Shopify, Siemens, and VMware.

Edge and Cloud Computing Comparison

Now, back to our exploration of edge computing.

One of the most notable differences from cloud computing is that the edge is globally distributed by design. Your code and data natively run on hundreds of edge servers. That’s a big difference compared to cloud architectures, which typically start as centralized applications running in a single data center (or across 2-3 availability zones within a cloud region).

While many applications can easily run in a centralized way, if you’re building a website or product with a global audience, your architecture will almost certainly involve some sort of caching and content distribution. With edge computing, that happens automatically: your edge app is globally distributed with a click (or a CLI command) and runs as close as possible to your users for optimized latency.
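Conceptually, “as close as possible to your users” means serving each request from the nearest point of presence (PoP). The sketch below picks one by great-circle (haversine) distance from a hypothetical list of four PoPs with approximate city coordinates. Treat it as a mental model only: real CDNs steer traffic with anycast or DNS and weigh network conditions, not just geography.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0

# Hypothetical PoP locations: approximate city coordinates (lat, lon) in degrees.
POPS = {
    "Frankfurt": (50.11, 8.68),
    "Virginia": (38.95, -77.45),
    "Singapore": (1.35, 103.82),
    "Sao Paulo": (-23.55, -46.63),
}


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance in kilometers between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    # Clamp to guard against tiny floating-point overshoot above 1.
    return 2 * EARTH_RADIUS_KM * asin(min(1.0, sqrt(h)))


def nearest_pop(user: tuple[float, float]) -> str:
    """Return the name of the PoP geographically closest to the user."""
    return min(POPS, key=lambda name: haversine_km(user, POPS[name]))


if __name__ == "__main__":
    paris = (48.86, 2.35)
    print(nearest_pop(paris))  # Frankfurt
```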

So here comes the rhetorical question.

Do you still need the cloud?

In other words, is the cloud still the best option for designing and building well-architected, scalable applications in 2024?

Well, yes!

The reality is that, for most use cases, the edge still needs some kind of origin to depend on. The origin is where your core business logic runs, whether it’s your website backend or other complex workloads that need to remain centralized (for convenience, technical limitations, or cost).

Let’s have a look at which workloads are likely to remain in the cloud (for now):

- Backend APIs + RDBMS - Typically the core of your origin and production databases (there are at least 10 different database services on AWS alone).

- Massive storage - The cloud provides ways to reduce costs via cold storage.

- Data analytics - Your typical data warehouse, data lake, and data pipelines need a centralized data repository to run queries and build reports.

- AI/ML - Training efficiently and cost-effectively requires massive datasets (while inference can already run at the edge).

- HPC/Simulations - High-performance computing is distributed by design but usually within the same data center or region to reduce network overhead.

- CI/CD pipelines - These could run anywhere, but it’s probably more convenient and cheaper to use the cloud (including the pipelines to deploy your edge services).

To summarize, the cloud excels at general-purpose services, supporting almost every possible workload type out there, with virtually infinite storage and horizontal scalability (within a region or across a small number of regions for resiliency and disaster recovery).

Conversely, the edge simplifies global deployments, enhances security, and enables caching for cloud origins while supporting latency-optimized features without adding complexity to your main application.

In other words, cloud computing and edge computing complement each other. They will continue to coexist and solve different technical problems.

Cost-aware architectures

Another interesting angle to consider is cost. As often happens with managed services, the tradeoff is between using an off-the-shelf solution and designing, building, and maintaining your own globally distributed solution.

Since the edge is optimized for global content distribution, it often reduces egress costs. However, edge data storage must be distributed across hundreds of edge servers, which makes storage and data operations look more expensive than regional cloud services that typically replicate data across only 2-3 availability zones.

Let’s look at some numbers, focusing on key-value storage for 3 edge services (Cloudflare Workers KV, Fastly KV, CloudFront KeyValueStore) and 2 cloud services (Redis serverless on AWS and DynamoDB on-demand on AWS):

| | Cloudflare KV | Fastly KV | CloudFront KeyValueStore | Redis serverless on AWS | DynamoDB on-demand on AWS |
|---|---|---|---|---|---|
| 1M writes | $5 | $6.25 | $1,000 | $0.0038 \* | $0.76 |
| 1M reads | $0.50 | $0.50 | $0.03 | $0.0038 \* | $0.15 |
| 1 GB storage per month | $0.50 | $0.50 | $0 | $100 | $0.30 \*\* |

\* for each KB

\*\* the first 25 GB are free

A few interesting differences and observations:

- Cloudflare doesn’t charge for data transfer, but delete operations are billed like write operations.

- Fastly doesn’t charge for delete operations, but you’re limited to 250k writes and 5M reads per month (unless you pay for an add-on).

- CloudFront KeyValueStore seems crazy optimized for reads, but writes are incredibly expensive compared to the other options.

- Redis looks pretty cheap, but keep in mind that the price is $0.0038 per million operations for each KB, so if you’re reading or writing 5KB items, it will be 5x more expensive (which is still pretty cheap).

- DynamoDB is not just a key-value store, but it can definitely be used that way, and its reads and writes still look several times cheaper than those of Cloudflare KV and Fastly KV.
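To see how these list prices play out for a concrete workload, the sketch below plugs the table’s numbers into a monthly estimate. The workload (1M writes, 5M reads, 1 GB stored, 1 KB items) is hypothetical. The model ignores free tiers (including DynamoDB’s 25 GB), included quotas, delete operations, and data-transfer charges, and, following the table’s footnote, only the Redis prices scale with item size.

```python
from typing import NamedTuple


class Pricing(NamedTuple):
    per_million_writes: float  # USD per 1M writes
    per_million_reads: float   # USD per 1M reads
    per_gb_month: float        # USD per GB stored per month
    per_kb: bool               # True if operation prices scale with item size


# Numbers copied from the comparison table; free tiers and quotas ignored.
PRICES = {
    "Cloudflare KV": Pricing(5.00, 0.50, 0.50, False),
    "Fastly KV": Pricing(6.25, 0.50, 0.50, False),
    "CloudFront KeyValueStore": Pricing(1000.00, 0.03, 0.00, False),
    "Redis serverless on AWS": Pricing(0.0038, 0.0038, 100.00, True),
    "DynamoDB on-demand on AWS": Pricing(0.76, 0.15, 0.30, False),
}


def monthly_cost(p: Pricing, writes_m: float, reads_m: float,
                 storage_gb: float, item_kb: float = 1.0) -> float:
    """Estimate a monthly bill in USD; writes_m and reads_m are in millions."""
    size_factor = item_kb if p.per_kb else 1.0
    operations = (writes_m * p.per_million_writes + reads_m * p.per_million_reads) * size_factor
    return operations + storage_gb * p.per_gb_month


if __name__ == "__main__":
    # Hypothetical workload: 1M writes, 5M reads, 1 GB stored, 1 KB items.
    for name, pricing in PRICES.items():
        cost = monthly_cost(pricing, writes_m=1, reads_m=5, storage_gb=1)
        print(f"{name:<26} ${cost:,.2f}")
```

Under these assumptions DynamoDB comes out cheapest, Redis is dominated by its storage price, and CloudFront KeyValueStore by its write price. A read-heavy workload with very few writes would rank the services quite differently, so it pays to plug in your own numbers.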

Although cloud services may seem cheaper, it’s crucial to note that they don’t operate on a global scale by default. To replicate data across regions, you can enable DynamoDB Global Tables or Amazon ElastiCache Global Datastore (the latter isn’t compatible with ElastiCache Serverless, so you’ll also need to manage multiple clusters).

For example, if you enable DynamoDB Global Tables in 10 regions, the write and storage costs are multiplied by 10, so they become $7.60 per 1M writes and $3 for 1GB/month. That’s already more expensive than Cloudflare KV and Fastly KV, and only for 10 regions (compared to hundreds of edge servers).
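The Global Tables arithmetic above can be sketched as follows. It uses the same simplification as the text (every replica pays the single-region write and storage price from the table) and ignores the 25 GB free tier and cross-region data transfer, so the real Global Tables bill may differ. The helper names are hypothetical.

```python
import math


def replicated_prices(per_million_writes: float, per_gb_month: float,
                      regions: int) -> tuple[float, float]:
    """Write and storage prices after replicating data to `regions` regions.

    Simplification from the text: each replica pays the single-region price,
    so both figures grow linearly with the number of regions.
    """
    if regions < 1:
        raise ValueError("need at least one region")
    return per_million_writes * regions, per_gb_month * regions


def regions_to_exceed(cloud_price: float, edge_price: float) -> int:
    """Smallest region count at which the replicated cloud price exceeds the edge price."""
    return math.floor(edge_price / cloud_price) + 1


if __name__ == "__main__":
    for n in (1, 3, 10):
        writes, storage = replicated_prices(0.76, 0.30, n)
        print(f"{n:>2} regions: ${writes:.2f} per 1M writes, ${storage:.2f} per GB-month")
    # Compared with Cloudflare KV's $5 per 1M writes and $0.50 per GB-month:
    print(regions_to_exceed(0.76, 5.00), regions_to_exceed(0.30, 0.50))  # 7 2
```

Under this simplification, DynamoDB’s write price passes Cloudflare KV’s at 7 regions and its storage price at just 2.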

Real-world Use Cases for Edge Computing

So, what can actually be done at the edge? There are many interesting use cases, and I’m hopeful this article will help you determine which one makes sense for your product.

A few examples:

- Authentication: Implementing edge-based CAPTCHA, passwordless authentication, or JWT validation can reduce the origin load by performing stateless operations at the edge that don’t require database access or other origin resources.

- Data collection: Integrate with external data sources or data destinations, offloading client-side and server-side tracking to the edge to avoid heavy SDKs in the browsers or performance bottlenecks on the server.

- Geo-based enrichment: Enhance your website with ‘near you’ content stored at the edge, implement localized redirects (/en -> /fr), geofencing, or per-country throttling using geolocation APIs.

- Content personalization: Store and display recently viewed articles or recommended products at the edge, implement paywalls for premium content, or use A/B testing to provide personalized, contextualized content while minimizing complexity at the origin.

- SEO optimizations: Manipulate HTTP headers and HTML responses directly at the edge to implement custom caching policies, content stitching, or ad-hoc compression algorithms.

- Security: Integrate custom WAFs (Web Application Firewalls) or implement secret headers and custom access control logic.

Or you could design an entire product or suite of products that operates almost entirely at the edge—exactly what we’re doing at Edgee.

Takeaways

I hope this article clarified that CDNs are no longer limited to caching and DDoS protection - they now provide a wealth of valuable services for developers. The edge is a new way to build apps or integrate new features while keeping the origin fast and straightforward.

WebAssembly has a lot of potential, not only for the browser but also for server-side and edge binary execution.

The cloud is here to stay, and the edge complements its limitations by enabling the creation of globally distributed applications with optimized latency and cost. My personal recommendation is to design and build cost-aware architectures, as it’s a key skill for becoming a more effective developer or architect.


FAQs

Who invented or coined the term "edge computing"?

The concept of edge computing emerged in the late 1990s and early 2000s, as the need for distributed computing solutions began to grow with the advent of the internet and mobile technology. Though its exact origin as a coined phrase isn't definitively attributed to a single individual or organization, it has evolved over time to include various use cases, such as IoT, 5G, CDNs, and autonomous systems.

How does edge computing impact energy efficiency?

Edge computing can improve energy efficiency by reducing the need to transmit large amounts of data to and from centralized cloud servers. Local processing minimizes network traffic and lowers energy consumption. However, running numerous edge devices globally can also lead to higher cumulative power usage, depending on the scale and efficiency of the devices.

What industries are adopting edge computing the fastest, and why?

Industries like manufacturing, healthcare, automotive, and retail are rapidly adopting edge computing. Manufacturing benefits from real-time monitoring and predictive maintenance on factory floors. Healthcare uses edge for quick diagnostics on wearable devices. Automotive industries leverage it for autonomous vehicle operations, and retail applies it for personalized shopping experiences.

How does WebAssembly (Wasm) enhance edge computing?

WebAssembly (Wasm) enhances edge computing by enabling developers to run lightweight, secure, and high-performance applications on edge devices. It supports multiple programming languages, allowing flexibility in development. Its fast startup time and compatibility with edge platforms like Cloudflare Workers or Fastly make Wasm an ideal choice for latency-sensitive applications.

What are the security challenges of edge computing compared to cloud computing?

Edge computing introduces unique security challenges because data is processed closer to users on distributed devices, which increases potential attack surfaces. Ensuring data encryption, secure device authentication, and regular software updates are critical to mitigating risks. Unlike cloud systems with centralized security controls, edge environments require more decentralized and adaptive measures.

Alex is a software engineer passionate about web technologies and music. He began working on web projects and sharing his experiences in 2011. His passion for programming spans different languages such as Python and JavaScript, as well as the open-source world and startups. After spending 6 years helping developers and companies adopt cloud technologies, Alex returned to the startup life to help businesses adopt edge computing technologies and services.