A data science project has many components, and deploying it successfully requires collaboration across all of them. With so many connected parts, an agile approach is necessary for both development and deployment, because it allows organizations to learn fast and iterate quickly. In a recent DataCamp webinar, Brian Campbell, Engineering Manager, Internal Engineering at Lucid Software, discussed best practices for successfully deploying data science projects.

Early Steps to Allow for Agile Development

Brian explains that two key steps can be taken early in a project to allow for parallel progress on both the development and deployment of a model: working with baseline models and creating a prototype. While these approaches complicate timelines, they lead to significantly faster and better results.

Work with Baseline Models

A helpful step toward successfully shipping machine learning projects is creating a baseline model. A baseline model produces the same kind of output as the final model, but it is built before most of the development work and may be based on heuristics or random data. This baseline gives future iterations a target to beat.

As the team develops new models, it can compare their results against the baseline to see whether each iteration is an improvement. The gap between the baseline and the desired state informs project requirements and helps teams identify the most valuable data and solutions for the problem.
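As a minimal sketch of comparing a later iteration against a baseline, the example below uses a majority-class heuristic as the baseline and a simple keyword rule as a stand-in for a later model. The task, the data, and the names `majority_baseline`, `keyword_candidate`, and `accuracy` are all hypothetical and are not taken from the webinar.

```python
from collections import Counter


def majority_baseline(train_labels):
    """Heuristic baseline: always predict the most common training label."""
    most_common_label = Counter(train_labels).most_common(1)[0][0]
    return lambda item: most_common_label


def keyword_candidate(text):
    """Hypothetical later iteration: a simple keyword rule."""
    return "feature" if "add" in text.split() else "bug"


def accuracy(predict, items, labels):
    """Fraction of items whose predicted label matches the true label."""
    correct = sum(predict(item) == label for item, label in zip(items, labels))
    return correct / len(labels)


# Toy, made-up data used purely for illustration.
train_labels = ["bug", "bug", "feature", "bug"]
test_items = ["app crashes on save", "add dark mode", "login fails", "crash on export"]
test_labels = ["bug", "feature", "bug", "bug"]

baseline = majority_baseline(train_labels)
baseline_score = accuracy(baseline, test_items, test_labels)
candidate_score = accuracy(keyword_candidate, test_items, test_labels)

# The baseline score is the target each new iteration has to beat.
print(f"baseline={baseline_score:.2f} candidate={candidate_score:.2f}")
```

Because both models are scored by the same function on the same data, the difference between the two numbers is a direct measure of what the new iteration adds over the baseline.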

Work with Prototypes

Prototypes are models that ingest the same inputs and produce outputs in the same format as the final model will. A prototype can be informed by the baseline model. Once the prototype is ready, those in charge of deployment can begin integrating the model into the product or process it is intended for. The prototype enables parallel progress: data scientists improve the model while the implementation team works on how the model will be packaged for customers or within a business process.

Prototypes only work when the final model accepts the same inputs and returns the same output format as the prototype. Thus, it is necessary to understand the available data and intended output format before creating the prototype. This requires some knowledge about the project beyond the initial problem formulation, and strong collaboration with problem experts.
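One way to keep the prototype and the final model interchangeable is to write the agreed input and output format down as a shared interface and check every model against it. The sketch below is an assumption about how that could look: the `Clusterer` protocol, the `PrototypeClusterer` class, and the `check_contract` helper are hypothetical names, and the contract itself (a dictionary mapping category names to lists of notes) is chosen only for illustration.

```python
from typing import Protocol


class Clusterer(Protocol):
    """Hypothetical contract shared by the prototype and the final model."""

    def cluster(self, notes: list[str]) -> dict[str, list[str]]:
        ...


class PrototypeClusterer:
    """Placeholder model: puts every note into a single category."""

    def cluster(self, notes: list[str]) -> dict[str, list[str]]:
        return {"Uncategorized": list(notes)}


def check_contract(model: Clusterer, notes: list[str]) -> bool:
    """Return True if the output has the agreed shape and places every note once."""
    result = model.cluster(notes)
    if not isinstance(result, dict):
        return False
    for name, group in result.items():
        if not isinstance(name, str) or not isinstance(group, list):
            return False
    placed = sorted(note for group in result.values() for note in group)
    return placed == sorted(notes)


sample_notes = ["faster page load", "dark mode", "export to PDF"]
print(check_contract(PrototypeClusterer(), sample_notes))
```

The deployment team can build against `PrototypeClusterer` right away, and the same `check_contract` call can later be run against each improved model before it replaces the prototype.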

Real-World Use-Case of Baselines and Prototypes

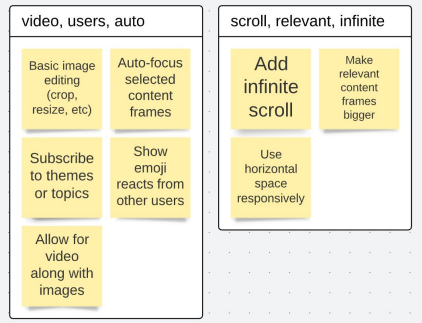

Brian discusses how his team at Lucid used baselines and prototypes to accelerate the development of a clustering model for a sticky note product used by product, design, and engineering teams. These teams often run design thinking sessions where a facilitator needs to manually cluster sticky notes of similar types under a given category. The system's goal was to reduce the time between brainstorming and discussion by automatically clustering ideas into categories and removing duplicates.

The baseline model took in random ideas and generated random categories for them. Then, they created a prototype of this model to enable the implementation experts to begin working early in the development process. Over time, they improved the baseline model by leveraging natural language processing and machine learning to cluster similar sticky notes together.
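To make the progression concrete, here is a rough sketch of a random baseline next to a slightly smarter grouping based on word overlap. This is not Lucid's implementation, whose details the webinar recap does not give. The function names, the Jaccard word-overlap measure, the greedy grouping strategy, the `threshold` value, and the sample notes are all assumptions made for illustration.

```python
import random


def random_baseline(notes, categories, seed=0):
    """Baseline: assign each note to a randomly chosen category."""
    rng = random.Random(seed)
    clusters = {name: [] for name in categories}
    for note in notes:
        clusters[rng.choice(categories)].append(note)
    return clusters


def jaccard(a, b):
    """Word-set overlap between two notes, from 0.0 to 1.0."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    union = words_a | words_b
    return len(words_a & words_b) / len(union) if union else 0.0


def overlap_clusters(notes, threshold=0.3):
    """Greedy grouping: a note joins the first cluster whose first note
    overlaps with it enough; otherwise it starts a new cluster."""
    clusters = []
    for note in notes:
        for group in clusters:
            if jaccard(note, group[0]) >= threshold:
                group.append(note)
                break
        else:
            clusters.append([note])
    return clusters


# Made-up sticky notes, purely for illustration.
notes = ["faster page load", "page load is slow", "dark mode", "add dark mode theme"]

baseline_result = random_baseline(notes, ["Group A", "Group B"])
improved_result = overlap_clusters(notes)
print(improved_result)
```

The random baseline places every note somewhere but ignores its content, while the overlap-based version groups notes that share words. A production system would likely replace the word-overlap measure with a proper natural language processing model.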

Communication Is Important with These Models

The first iteration of this project ran into problems because the deployment team and the data scientists working on the model did not collaborate consistently. This lack of teamwork led to model performance issues, inaccurate testing, and a poor product experience. As a result, the teams could not realize the benefits of developing the model in parallel.

When they relaunched the project and collaborated effectively, they solved these issues and set realistic expectations. Collaboration was made possible by having a prototype model that let the deployment team work in parallel with the data science team developing the model.

To understand the lessons learned from the project and how to navigate complex data science projects effectively, make sure to tune in to the on-demand webinar.