Over the last few years, organizations have significantly increased their use of AI, and the technology has become markedly more effective. Its applications range from predictive analytics that make business operations more efficient and accurate, to machine vision that lets systems interact directly with the physical world, to natural language processing (NLP) that lets people interact with technology through language. Accenture reports that 84% of surveyed executives believe they need to leverage AI to achieve their growth objectives.

The rapid growth of a relatively new and still-maturing technology brings significant responsibilities and ethical concerns. Capgemini found that 70% of customers expect their AI interactions to be transparent and fair. Regulators are also beginning to tighten rules around AI transparency and fairness: the European Union recently proposed a set of AI regulations that aim to minimize the harmful impacts of AI systems, and the FTC announced plans to go after companies selling biased algorithms (MIT Tech Review).

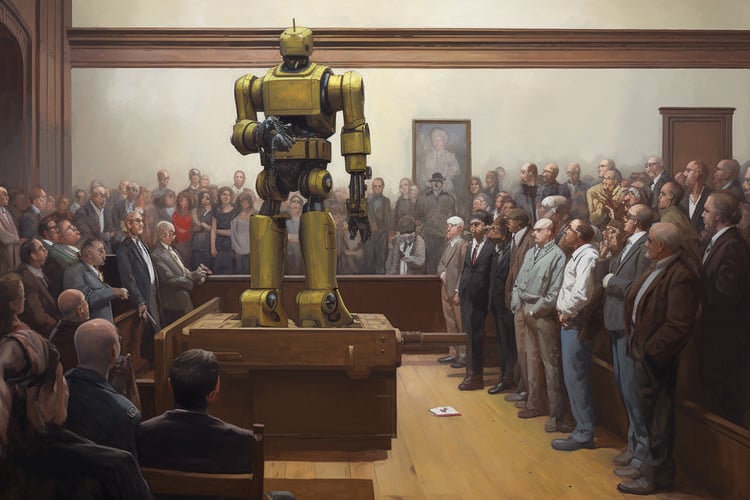

These growing ethical concerns are justified, because AI is increasingly used in highly sensitive settings such as healthcare, the judicial system, and finance. Without careful tuning and an understanding of the potential ethical risks, AI can reproduce and amplify historical bias and discrimination. For example, ProPublica investigated COMPAS, an algorithm used to inform sentencing decisions by predicting the likelihood that a defendant will re-offend. They found that black defendants were significantly more likely to receive false positives (being labeled likely to re-offend when they did not), while white defendants were more likely to receive false negatives (being labeled unlikely to re-offend when they did).

Similarly, OpenAI’s GPT-3, a transformer language model with 175 billion parameters, has shown significant bias when generating sentences that mention religion. The model has also shown bias toward other groups, including by gender and race, as discussed in the original paper.

As AI becomes more ubiquitous, ensuring its responsible use is crucial. If we are to trust these systems to inform important and sensitive decisions, they must not be biased or reproduce historical stereotypes.

What is Algorithmic Bias?

Algorithmic bias appears regularly in the news: hiring decisions with a gender bias favoring men, online advertisements that reproduce redlining (a form of housing discrimination), and healthcare algorithms that encode racial bias. In each of these examples, the model treats one group differently from another.

Many metrics exist for formally defining algorithmic bias, but the basic idea is that an algorithm treats groups in the data differently. The characteristics that define the groups we are concerned about, such as race, gender, religion, pregnancy, or veteran status, are called protected or sensitive characteristics. When analyzing an algorithm, it’s necessary to understand which groups are most vulnerable to its potential harmful impacts.

There are two categories of metrics to determine the bias and fairness of an algorithm: fairness by representation and fairness by error.

Fairness by Representation

These metrics evaluate the model’s outcomes for different groups. For example, for a hiring algorithm, they could compare the proportion of men who are called back for an interview with the proportion of women.
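As a rough illustration, a fairness-by-representation check can be sketched by comparing selection rates (the share of positive outcomes) across groups. The function names and the toy callback data below are hypothetical. The disparate impact ratio is one common summary; the US "four-fifths rule" of thumb flags ratios below 0.8.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (e.g. interview callbacks) in each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest selection rate (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(predictions, groups):
    """Lowest selection rate divided by the highest (1 means parity)."""
    rates = selection_rates(predictions, groups)
    return min(rates.values()) / max(rates.values())


# Hypothetical hiring model output: 1 = called back for an interview.
callbacks = [1, 1, 1, 0, 1, 0, 0, 0]
applicant_gender = ["men"] * 4 + ["women"] * 4

print(selection_rates(callbacks, applicant_gender))  # {'men': 0.75, 'women': 0.25}
print(demographic_parity_difference(callbacks, applicant_gender))  # 0.5
print(disparate_impact_ratio(callbacks, applicant_gender))
```

Here women are called back at one third the rate of men, well below the four-fifths threshold.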

Fairness by Error

Fairness-by-error metrics compare the model’s error rates across groups. For example, in the COMPAS case described above, the false-positive rate for black defendants was significantly higher than for white defendants, while the false-negative rate for white defendants was significantly higher than for black defendants.
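Fairness-by-error metrics can be sketched by computing a confusion matrix per group. In this hypothetical sketch, a label of 1 means the person re-offended and a prediction of 1 means the model flagged them as high risk; the data is made up for illustration and is not ProPublica’s.

```python
def error_rates_by_group(y_true, y_pred, groups):
    """Return {group: (false_positive_rate, false_negative_rate)} for binary labels."""
    counts = {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts.setdefault(group, {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
        if truth == 1 and pred == 1:
            c["tp"] += 1
        elif truth == 0 and pred == 1:
            c["fp"] += 1  # flagged high risk, did not re-offend
        elif truth == 0 and pred == 0:
            c["tn"] += 1
        else:
            c["fn"] += 1  # flagged low risk, did re-offend
    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]
        positives = c["tp"] + c["fn"]
        fpr = c["fp"] / negatives if negatives else float("nan")
        fnr = c["fn"] / positives if positives else float("nan")
        rates[group] = (fpr, fnr)
    return rates


# Hypothetical toy data for two groups, A and B.
y_true = [0, 0, 0, 0, 1, 1] * 2
y_pred = [1, 1, 0, 0, 1, 1] + [1, 0, 0, 0, 0, 1]
groups = ["A"] * 6 + ["B"] * 6

print(error_rates_by_group(y_true, y_pred, groups))
# {'A': (0.5, 0.0), 'B': (0.25, 0.5)}
```

Group A suffers more false positives and group B more false negatives, the same pattern described for COMPAS. Requiring both gaps to be near zero corresponds to the equalized odds criterion.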

Causes of Algorithmic Bias

Bias in an algorithm is primarily caused by the underlying data. Simple models like linear regression and more complex transformer models are not biased on their own; they produce outputs based on the data they are trained on. Below are five causes of bias in data.

- Skewed dataset: Groups may be represented in very different proportions, so the model sees far fewer training examples for some groups than for others, which can lead to biased outputs.

- Tainted examples: The labels may be unreliable, or the data may encode historical bias.

- Limited features: The collected features may be unreliable or uninformative for specific groups because of poor data collection practices.

- Sample size: AI algorithms struggle to perform well with small datasets, so groups with few samples may receive less accurate predictions.

- Proxy features: Some features can leak information about protected variables, even if the protected variable itself is not included in the data. Research has shown that variables like zip code, sports activity, and the university a person attended can all indirectly inform a model about protected variables, which can lead to algorithmic bias.
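Two of these causes, skewed datasets and proxy features, can be screened for with simple counts. The sketch below is a hypothetical heuristic, not a standard metric: it reports each group’s share of the data, then checks how accurately a single categorical feature (here, made-up zip codes) predicts the protected group when we guess the most common group for each feature value. Accuracy far above the majority-group baseline suggests the feature may be acting as a proxy.

```python
from collections import Counter, defaultdict


def group_shares(groups):
    """Fraction of the dataset belonging to each group (reveals skew)."""
    counts = Counter(groups)
    return {group: count / len(groups) for group, count in counts.items()}


def proxy_accuracy(feature_values, groups):
    """Accuracy of guessing the protected group from one categorical feature
    by predicting the most common group for each feature value."""
    groups_by_value = defaultdict(Counter)
    for value, group in zip(feature_values, groups):
        groups_by_value[value][group] += 1
    correct = sum(c.most_common(1)[0][1] for c in groups_by_value.values())
    return correct / len(groups)


# Hypothetical zip codes and protected-group labels.
zip_codes = ["z1", "z1", "z1", "z2", "z2", "z2", "z3", "z3"]
protected = ["a", "a", "a", "b", "b", "b", "a", "b"]

shares = group_shares(protected)
baseline = max(shares.values())  # accuracy of always guessing the largest group
print(shares)  # {'a': 0.5, 'b': 0.5}
print(baseline, proxy_accuracy(zip_codes, protected))  # 0.5 0.875
```

Because it is measured on the same data it summarizes, this check overstates leakage for features with many unique values; evaluating on a held-out split would be more reliable.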

What Mitigation Techniques Can Be Used?

We now have a formal understanding of what algorithmic bias is and what causes it. There are two main avenues for mitigating it: technical methods and AI governance. A combination of the two will most likely be the most effective way to reduce bias in an algorithm.

Technical Mitigation Techniques

Technical methods fall into three approaches: pre-processing techniques that reduce bias in the original data before training, in-processing methods that mitigate bias during model training, and post-processing algorithms that reduce bias by modifying the model’s predictions.

The introduction of this paper describes four common pre-processing techniques from the literature:

- Suppression: Remove the variables that correlate most strongly with the sensitive variable.

- Massaging: Relabel a carefully selected set of observations, chosen with a ranker, to remove discrimination from the input data.

- Reweighting: Assign a weight to each tuple in the training data to remove discrimination.

- Sampling: Sample from each group to get a more balanced representation, using either uniform sampling or preferential sampling, which duplicates or skips observations.
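Reweighting is simple enough to sketch directly. The sketch below follows the reweighing scheme associated with Kamiran and Calders: each example with group s and label y gets weight P(S=s)P(Y=y)/P(S=s, Y=y), so that group and label look statistically independent in the weighted data. The function names and the toy hiring data are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    weights = []
    for group, label in zip(groups, labels):
        # Count this (group, label) cell would have if group and label were independent.
        expected = group_counts[group] * label_counts[label] / n
        weights.append(expected / joint_counts[(group, label)])
    return weights


def weighted_positive_rate(groups, labels, weights, target_group):
    """Weighted share of positive labels within one group."""
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == target_group and y == 1)
    total = sum(w for g, w in zip(groups, weights) if g == target_group)
    return positive / total


# Hypothetical hiring data: 1 = hired.
groups = ["m", "m", "m", "m", "f", "f", "f", "f"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

# Unweighted, 75% of men and 25% of women are positive; weighted, both are 50%.
print(round(weighted_positive_rate(groups, labels, weights, "m"), 3))  # 0.5
print(round(weighted_positive_rate(groups, labels, weights, "f"), 3))  # 0.5
```

The weights leave the data itself unchanged and can be passed to any learner that supports per-example weights, for instance through the `sample_weight` argument of scikit-learn’s `LogisticRegression.fit`.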

Other approaches, such as this paper’s probabilistic formulation and this paper’s two “repair procedures,” also provide good ways to remove bias at the pre-processing stage.

In-processing techniques remove bias during training. They typically add fairness constraints to the optimization problem, steering the model toward fairer results. Examples of in-processing methods include this paper on fairness constraints and this one on fairness-aware classification.
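To make the idea of a fairness term in the optimization problem concrete, here is a minimal sketch that assumes a soft penalty rather than the hard constraints used in the papers above: logistic regression trained by gradient descent on the usual log loss plus `lam` times the squared gap in average predicted score between two groups (a demographic-parity penalty). The function names, the penalty form, and the toy data are illustrative assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, X):
    return [sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) for x in X]


def score_gap(w, b, X, groups):
    """Mean predicted score of group 'a' minus that of group 'b'."""
    p = predict(w, b, X)
    scores_a = [pi for pi, g in zip(p, groups) if g == "a"]
    scores_b = [pi for pi, g in zip(p, groups) if g == "b"]
    return sum(scores_a) / len(scores_a) - sum(scores_b) / len(scores_b)


def mean_slope(p, X, idx, j=None):
    """Average d(score)/d(parameter) over rows idx; j=None means the bias term."""
    return sum(p[i] * (1 - p[i]) * (1.0 if j is None else X[i][j]) for i in idx) / len(idx)


def train_fair_logreg(X, y, groups, lam=0.0, lr=0.1, epochs=2000):
    """Batch gradient descent on mean log loss + lam * score_gap**2."""
    n, d = len(X), len(X[0])
    idx_a = [i for i, g in enumerate(groups) if g == "a"]
    idx_b = [i for i, g in enumerate(groups) if g == "b"]
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        p = predict(w, b, X)
        # Gradient of the mean log loss.
        grad_w = [sum((p[i] - y[i]) * X[i][j] for i in range(n)) / n for j in range(d)]
        grad_b = sum(p[i] - y[i] for i in range(n)) / n
        # Gradient of the penalty: d(gap**2) = 2 * gap * d(gap).
        gap = sum(p[i] for i in idx_a) / len(idx_a) - sum(p[i] for i in idx_b) / len(idx_b)
        for j in range(d):
            grad_w[j] += 2 * lam * gap * (mean_slope(p, X, idx_a, j) - mean_slope(p, X, idx_b, j))
        grad_b += 2 * lam * gap * (mean_slope(p, X, idx_a) - mean_slope(p, X, idx_b))
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Hypothetical data: the single feature is strongly tied to group membership.
X = [[1.0], [0.9], [0.8], [0.7], [0.3], [0.2], [0.1], [0.0]]
y = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a"] * 4 + ["b"] * 4

w0, b0 = train_fair_logreg(X, y, groups, lam=0.0)
w1, b1 = train_fair_logreg(X, y, groups, lam=5.0)
gap_base = score_gap(w0, b0, X, groups)
gap_fair = score_gap(w1, b1, X, groups)
print(gap_base, gap_fair)  # the penalized model should show a smaller gap
```

Raising `lam` trades some predictive accuracy for a smaller gap between groups.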

Finally, post-processing algorithms modify model outputs to reduce bias. These algorithms either change predictions for privileged or unprivileged groups, as in Pleiss et al. and Hardt et al., or adjust predictions that fall close to the decision boundary (where the model is least certain about the result), as in this paper on decision theory.
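The boundary-based approach can be sketched in the spirit of reject option classification. Predictions whose score falls within a margin of the decision threshold are treated as uncertain and receive the favorable outcome for the unprivileged group and the unfavorable outcome for the privileged group, while confident predictions are left unchanged. This sketch assumes a single threshold of 0.5 and a hypothetical `margin` parameter; the group names and scores are made up.

```python
def reject_option_classify(scores, groups, unprivileged, threshold=0.5, margin=0.1):
    """Post-process scores: resolve uncertain predictions in favor of the
    unprivileged group and against the privileged group."""
    labels = []
    for score, group in zip(scores, groups):
        if abs(score - threshold) <= margin:
            # Uncertain region near the decision boundary.
            labels.append(1 if group == unprivileged else 0)
        else:
            # Confident prediction: keep the ordinary thresholded decision.
            labels.append(1 if score > threshold else 0)
    return labels


# Hypothetical model scores; 1 = favorable outcome.
scores = [0.9, 0.65, 0.55, 0.3, 0.8, 0.45, 0.3, 0.1]
groups = ["privileged"] * 4 + ["unprivileged"] * 4

baseline = [1 if s > 0.5 else 0 for s in scores]
adjusted = reject_option_classify(scores, groups, unprivileged="unprivileged")
print(baseline)  # [1, 1, 1, 0, 1, 0, 0, 0]
print(adjusted)  # [1, 1, 0, 0, 1, 1, 0, 0]
```

The favorable-outcome rate moves from 75% versus 25% to 50% for both groups. In practice, the margin would typically be tuned on validation data to balance a fairness metric against accuracy.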

Whichever technique is used, it is essential to discuss with all involved stakeholders the trade-offs in accuracy and the risks of changing the underlying data or model results. These techniques can produce predictions of outcomes that have not been seen historically or that do not appear in the data.

Governance and Accountability

There are many benefits to using AI in organizations. However, as seen throughout this blog post, the ethical risks posed by AI systems create organizational risks such as reputational damage, which can lead to lost revenue. No firm wants a headline saying it used a biased algorithm to make business decisions that harmed a group of customers or employees.

Proper AI governance is conducted through comprehensive risk management. The specific use case for the AI decision needs to be identified, along with an impact assessment for each stakeholder that covers the potential monetary and human risks. Once the magnitude of each risk has been accurately assessed for each stakeholder, the likelihood of each outcome should be estimated.

AI governance best practices include comprehensive checklists for stakeholders, automated and manual testing, compliance testing against business standards, detailed reports, and assertions from the individuals and groups directly responsible for the model. For more details, download our white paper.

Responsible AI Relies on Data Literacy

Scaling responsible AI requires developing data literacy across the organization. Successful AI governance involves multiple stakeholders engaging with and adopting a single framework. All involved stakeholders need to understand the scope of projects, how they are deployed and governed, and their impact and potential risks. Accenture argues that data literacy builds trust and enables responsible AI by bringing in diverse perspectives, which lead to consistently better outcomes.

By increasing baseline data literacy in an organization, subject matter experts who do not consistently interact with AI systems can include their expertise in these systems and communicate with technical stakeholders more effectively. This improved communication leads to more trust throughout the organization for all stakeholders involved.

The important aspects of data literacy for promoting responsible AI in an organization include an understanding of the following areas: the data science and machine learning workflow, different data roles, the flow of data through an organization, the distinction between various types of AI systems, and evaluation metrics for machine learning models.

Increase Baseline Data Literacy to Scale Responsible AI

Promoting responsible AI requires training all stakeholders in an organization. Avoiding the risks associated with algorithmic bias is necessary for an organization to take full advantage of the many benefits of AI in business contexts. Data literacy is key to successful communication between stakeholders, trust within the organization, and the exploration of diverse solutions for responsible AI. The EU is allocating €1.1 trillion to its Skills Agenda, with a heavy emphasis on AI skills and data literacy for regulators, demonstrating the importance of these skills in organizations. Many programs teach the fundamentals of data literacy. For more details on how data literacy can fuel responsible AI, check out our latest white paper.