Kurs

NVIDIA announced a "pandas Accelerator Mode" for their cuDF package, letting you write high-performance pandas code for data manipulation in Python. It is another step towards the dream of writing human-readable Python code that runs quickly, even on big datasets.

In this post, we unpack how it can help you, how to get started, and what the alternatives are.

Towards the Dream of High-Performance pandas Code

The problem with pandas

pandas is the most popular data manipulation package for Python, with 144 million downloads last month. A large part of pandas's popularity comes from its ease of use and extensive set of features for data manipulation.

Unfortunately, pandas's extensive development history—it was first made publicly available in 2011—means that it predates many innovations in high-performance computing (HPC). That means that pandas code runs too slowly to be useful for large datasets.

Many attempts at making pandas faster

There have been many attempts to solve this problem. The goal is to be able to write the same pandas code that millions of users are familiar with but have it run more quickly. Some high-performance pandas alternatives include Polars, which speeds things up by rewriting the backend in Rust; PySpark, which provides a Python interface to the Spark HPC platform; Vaex, which uses out-of-memory computation; and DuckDB, which performs computation inside an analytics-optimized database.

Check out these tutorials, which discuss some of the alternatives in more detail:

- High Performance Data Manipulation in Python: pandas 2.0 vs. polars

- Benchmarking High-Performance pandas Alternatives

- An Introduction to DuckDB: What is It and Why Should You Use It?

NVIDIA's solution for faster pandas

NVIDIA has been developing a suite of tools for high-performance data science called RAPIDS. This toolbox includes cuDF, NVIDIA's Python package for high-performance pandas code. (The name combines CUDA, NVIDIA's low-level toolkit for building GPU-enabled applications, and DataFrame, the pandas object for storing analytics data. The latter inspired the name for DataCamp's DataFramed podcast.)

cuDF's trick for speeding up data manipulation code is to make the code run on a GPU rather than a CPU. While originally designed for computations to display graphics, GPUs are incredibly effective at data science computations.

cuDF Had Problems

Although cuDF has been very successful at letting you run pandas code faster, it had several issues that have prevented widespread adoption.

Not all of pandas is supported

One large blocker is that cuDF only implements about 60% of the Pandas API. That is, only about 60% of all the possible code that you could write in pandas can be run in cuDF. The 60% of code that can run is, naturally, the most common 60% of code that most people will want to run. That means that for day-to-day analyses, cuDF code should be fine. However, if you want to do something a little unusual, you would run into problems with cuDF.

A GPU was required for development and testing

cuDF only supports running code on GPUs. That means that you need a GPU when developing the code and when testing it. This is often infeasible if you want to run code locally on a laptop and expensive if you want to run your code in the cloud.

Interacting with other Python packages required processor swapping

Another issue is that the vast majority of Python packages aren't GPU-enabled. That means that for any analysis that uses other packages—for example, more or less any machine learning workflow—you'd have to work out how to move computation from GPU to CPU and back again.

The existing solution to the lack of GPUs was tedious

Together, these three problems meant that you would have to write two versions of your code: one that would run if GPUs were available and cuDF could compute everything and one where no GPU was available.

Most data scientists want to focus more on getting insights from data rather than on such code details. This has historically made using cuDF problematic for many data science tasks.

How pandas Accelerator Mode Improves cuDF

The solution to these problems that pandas Accelerator Mode provides is that you only need to write one line of code to enable GPU support, and then you can write standard pandas code.

When GPU computation is supported (there's an NVIDIA GPU available, and cuDF knows how to run the pandas code), your code will run on the GPU. In cases where this is not possible, cuDF automatically switches to running on the CPU. You don't need to write two versions of your code, and you don't need to manually handle switching between GPU and CPU.

Is pandas Accelerator Mode Faster Than the Alternatives?

Image copyright NVIDIA.

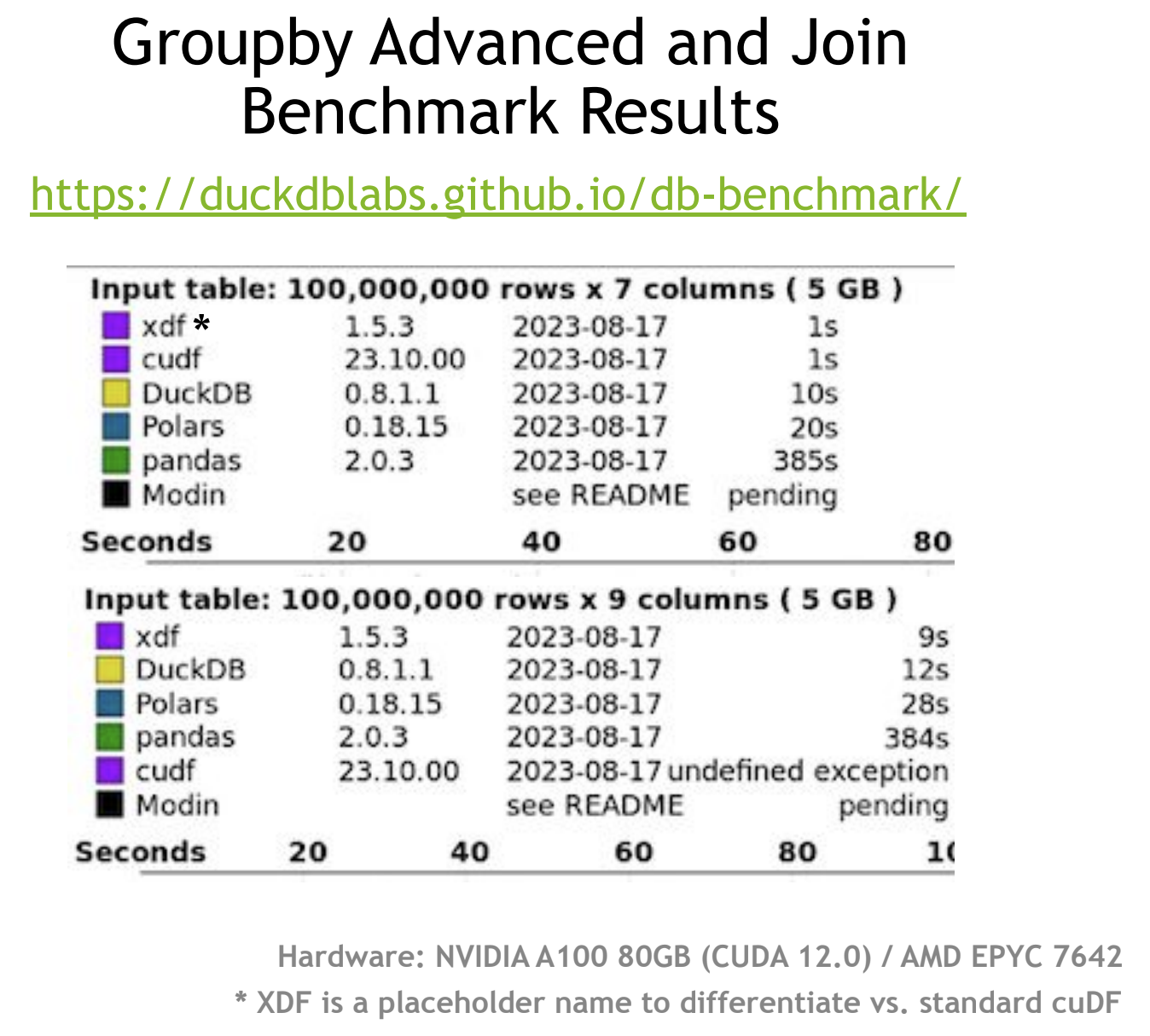

NVIDIA tested pandas Accelerator Mode against other high-performance Python data manipulation tools on the DuckDB Database-like ops benchmark. This suite of data manipulation challenges provides a measure for how well a technology can perform tasks like providing grouped summary statistics and table joins on big datasets.

According to NVIDIA, pandas Accelerator Mode (denoted xdf in the image) comes first place in the benchmark. This is notable because the standard version of cuDF currently fails the join test since it cannot perform all the required operations on GPU. (You'd need to mix cuDF with another tool to make everything work.)

One thing to note is that NVIDIA tested this on the top-end NVIDIA A100 80GB GPU, and the CPU that was used is not specified. Your performance will vary depending on your hardware setup, your dataset, and the calculations you want to perform.

How can I use pandas Accelerator Mode?

Let’s look at how you can start using Accelerator Mode in pandas:

How to install the latest cuDF

The latest version of cuDF, which includes pandas Accelerator Mode, is called cudf-cu11. It is available via a standard pip install, with the proviso that you currently have to get it from the NVIDIA PyPi repository.

Run this code to install the package.

pip install cudf-cu11 index-url=https://pypi.nvidia.comIf you are running inside a Juypter notebook, prefix the code with an exclamation mark

!pip install cudf-cu11 index-url=https://pypi.nvidia.comEnabling pandas Accelerator Mode in a Jupyter notebook

To enable pandas Accelerator Mode in a Jupyter notebook, add the following line of code in a cell near the start of your notebook.

%load_ext cudf.pandasEnabling pandas Accelerator Mode from a terminal

To enable pandas Accelerator Mode from a terminal, replace the standard command to run a Python script

python script.py

with

python -m cudf.pandas script.pyWhat else do I need to do?

Simply enable a GPU, then write and run your pandas code as usual.

Can I Profile pandas Accelerator Mode code?

-

In a notebook,

add %%cudf.pandas.profileto the cell you want to profile. -

You get stats (by function call or line by line) on the number of GPU calls and CPU calls + time spent on each PU.

If you are using cuDF, then it is likely that how long your code takes to run is important to you. In order to optimize this, you need to be able to measure it. The technique for measuring the time that code takes to run is called profiling. pandas Accelerator Mode provides a profiling tool for code run in Jupyter notebooks.

To profile the code in a cell, add the following line to the start of the cell.

%%cudf.pandas.profileWhen you run the code, you will get statistics, either by function call or line-by-line, on how much time was spent computing on GPU and on CPU.

Is All pandas Code Supported?

At the time of the announcement, all pandas code was supported with two exceptions: pandas 2.0 DataFrames that are built on Apache Arrow were not yet supported, though support was in development. Secondly, compiled pandas code generated by Numba or Cython is not fully supported.

In either case, the code that is not supported by cuDF will run on a CPU.

Keep Learning

If you are interested in how data scientists use cuDF, listen to this episode of DataFramed: Becoming a Kaggle Grandmaster with Jean-Francois Puget, a Distinguished Engineer at NVIDIA and Kaggle Grandmaster.

You can learn how to work with big data in Python using PySpark in the Big Data with PySpark skill track.

Lastly, make sure to stay up to date with the latest developments from NVIDIA, which announced GPU Acceleration in scikit-learn, UMAP, and HDBSCAN. Read and learn how to make your Python machine learning libraries run on GPUs.

Richie helps individuals and organizations get better at using data and AI. He's been a data scientist since before it was called data science, and has written two books and created many DataCamp courses on the subject. He is a host of the DataFramed podcast, and runs DataCamp's webinar program.