OpenAI has released GPT-4o mini, a more accessible version of the powerful GPT-4o. This new model aims to balance performance with cost-efficiency, addressing the needs of businesses and developers who want powerful AI solutions at a lower price point.

In 2024, the narrative around AI seems to be shifting from bigger and better models to more cost-effective options, especially for B2B applications. Interest is also moving from cloud-based AI toward local AI, which makes smaller models more important.

Since GPT-3.5, OpenAI has lacked a strong candidate in this space. GPT-4o mini changes that by making powerful AI accessible and affordable enough to integrate into almost any app or website.

In this article, we’ll explore the key features of GPT-4o mini, how it compares to other similar LLMs, and what this launch means for AI developments.

What Is GPT-4o Mini?

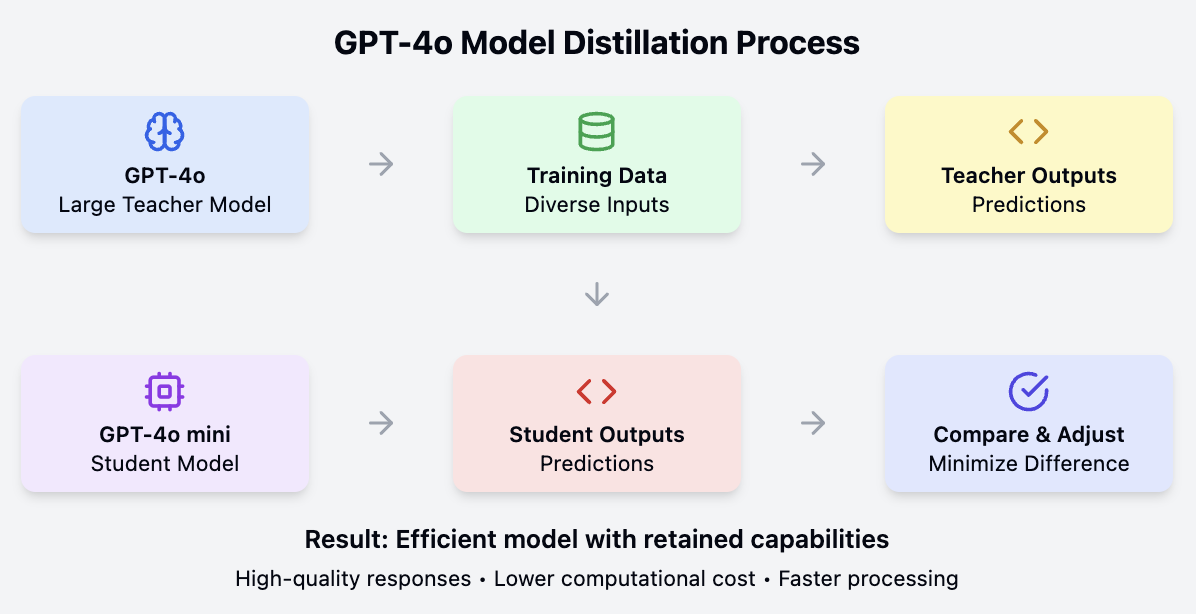

GPT-4o mini is derived from the larger GPT-4o model through a distillation process. This process involves training a smaller model to mimic the behavior and performance of the larger, more complex model, resulting in a cost-efficient yet highly capable version of the original.

Key features

- Large context window: GPT-4o mini retains the 128k token context window of GPT-4o, enabling it to handle lengthy texts effectively. This is ideal for applications that need extensive context, such as analyzing large documents or maintaining conversation history.

- Multimodal capabilities: The model processes both text and image inputs, with future support planned for video and audio inputs and outputs. This versatility makes it suitable for various applications, from text analysis to image recognition.

- Reduced cost: GPT-4o mini is much more affordable than its predecessors. It costs $0.15 per million input tokens and $0.60 per million output tokens, significantly cheaper than the GPT-4o model, which is priced at $5.00 per million input tokens and $15.00 per million output tokens. Compared to GPT-3.5 Turbo, GPT-4o mini is over 60% cheaper.

- Enhanced safety: The model includes the same safety features as GPT-4o and is the first in the API to use the instruction hierarchy method. This improves its resistance to jailbreaks, prompt injections, and system prompt extractions, making it safer to use in various applications.
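To make the pricing difference concrete, here is a minimal sketch that estimates the bill for a given token volume using the per-million-token prices quoted above. The `estimate_cost` helper and the example workload are hypothetical and only for illustration; actual prices may change, so check OpenAI's pricing page before relying on these numbers.

```python
# Prices in USD per one million tokens, taken from the figures quoted in this article.
PRICES_PER_MILLION = {
    "gpt-4o-mini": {"input": 0.15, "output": 0.60},
    "gpt-4o": {"input": 5.00, "output": 15.00},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for the given token counts."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical workload: 2 million input tokens and 500,000 output tokens.
mini_cost = estimate_cost("gpt-4o-mini", 2_000_000, 500_000)
full_cost = estimate_cost("gpt-4o", 2_000_000, 500_000)

print(f"GPT-4o mini: ${mini_cost:.2f}")
print(f"GPT-4o:      ${full_cost:.2f}")
```

Because both input and output prices differ by a large factor, the gap widens quickly as token volume grows.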

GPT-4o mini competition

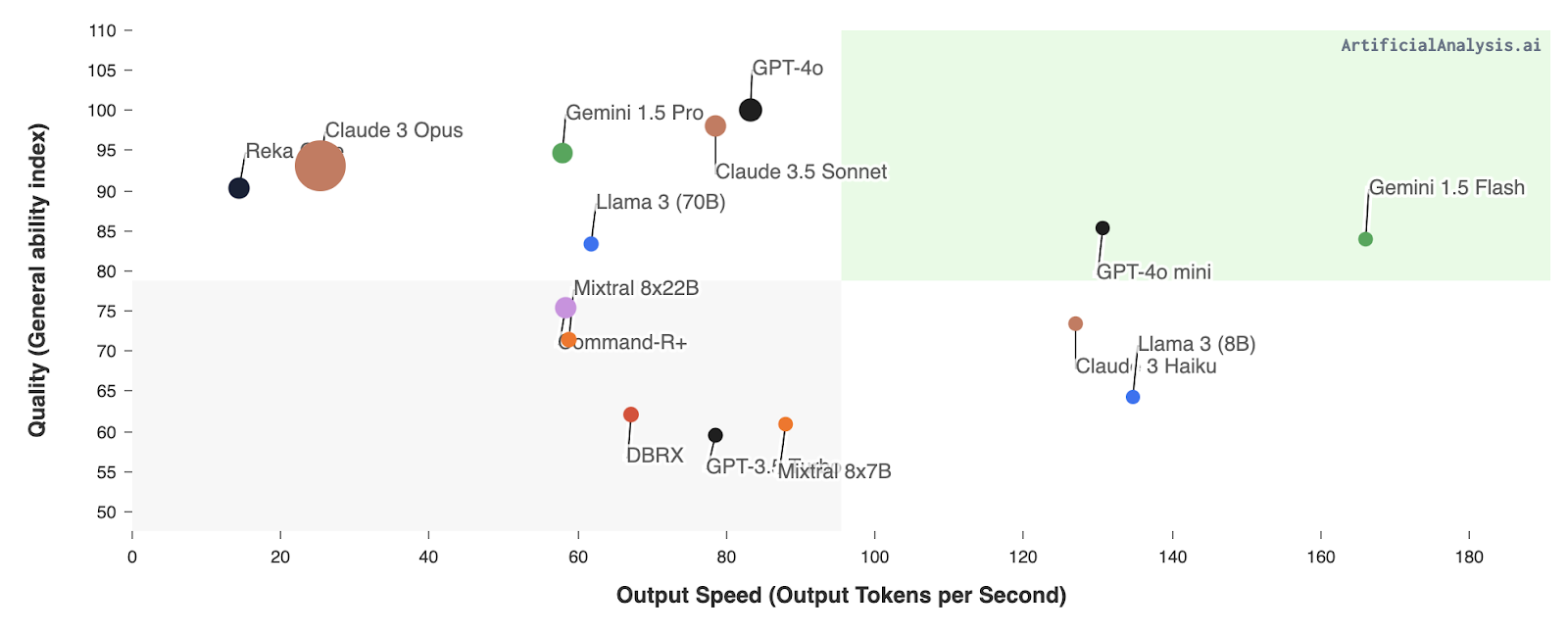

GPT-4o mini competes with models like Llama 3 8B, Gemini 1.5 Flash, and Claude 3 Haiku, as well as OpenAI's own GPT-3.5 Turbo. These models offer similar functionality but often cost more or score lower on quality benchmarks.

- Gemini 1.5 Flash: Although Gemini 1.5 Flash has a slightly higher output speed, GPT-4o mini excels in quality, making it a more balanced choice for applications needing both speed and high accuracy.

- Claude 3 Haiku and Llama 3 (8B): GPT-4o mini outperforms these models in both quality and output speed, showcasing its efficiency and effectiveness.

- GPT-3.5 Turbo: GPT-4o mini outperforms GPT-3.5 Turbo in output speed and overall quality and offers vision capabilities that GPT-3.5 Turbo lacks.

Source: Artificial Analysis

How GPT-4o Mini Works: The Mechanics of Distillation

GPT-4o mini achieves its balance of performance and efficiency through a process known as model distillation. In essence, this involves training a smaller, more streamlined model (the "student") to mimic the behavior and knowledge of a larger, more complex model (the "teacher").

The larger model, in this case, GPT-4o, has been pre-trained on vast amounts of data and possesses a deep understanding of language patterns, semantics, and even reasoning abilities. However, its sheer size makes it computationally expensive and less suitable for certain applications.

Model distillation addresses this by transferring the knowledge and capabilities of the larger GPT-4o model to the smaller GPT-4o mini. This is typically done by having the smaller model learn to predict the outputs of the larger model on a diverse set of input data. Through this process, GPT-4o mini effectively "distills" the most important knowledge and skills from its larger counterpart.

The result is a model that, while smaller and more efficient, retains much of the performance and capabilities of the original. GPT-4o mini can handle complex language tasks, understand context, and generate high-quality responses, all while consuming fewer computational resources. This makes it a practical and affordable solution for a wide range of applications, especially those where speed and cost-efficiency are important.
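OpenAI has not published the training recipe for GPT-4o mini, so the following is only a sketch of the classic soft-label distillation objective, not the method actually used. It assumes the student is trained to match the teacher's temperature-softened output distribution by minimizing a KL divergence. The function names and the toy logits are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, softened by the given temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Toy logits over a three-token vocabulary (illustrative values only).
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]   # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]     # prefers a different token

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

The loss is near zero when the student's distribution matches the teacher's and grows as they diverge. In practice it is usually combined with a standard cross-entropy loss on ground-truth labels, which this sketch omits.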

GPT-4o Mini Performance

GPT-4o mini showcases impressive performance across various benchmarks. I've created Claude Artifacts for each benchmark to explain what each LLM benchmark is and what it measures.

Reasoning tasks

For reasoning tasks, GPT-4o mini was evaluated on the following benchmarks:

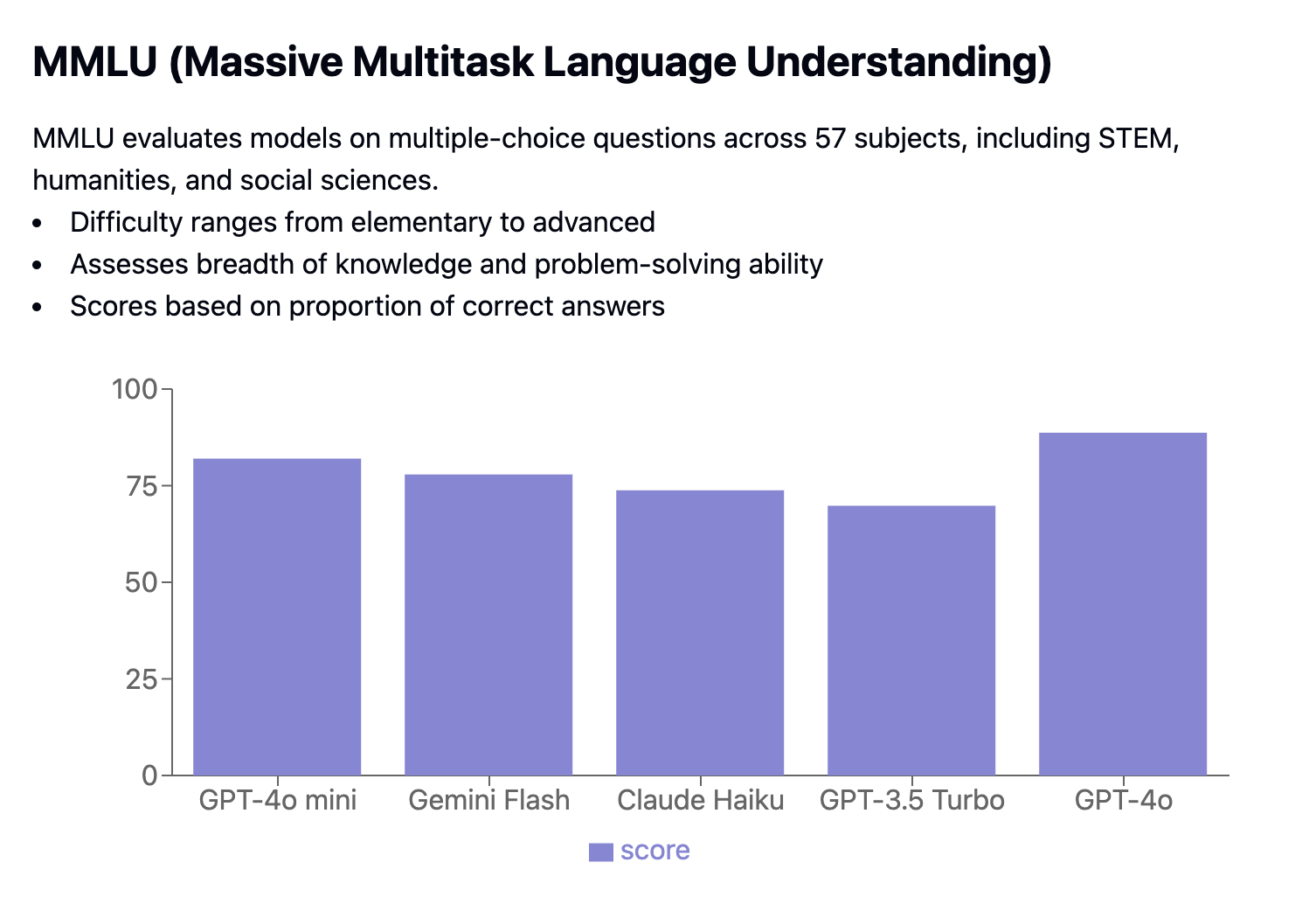

MMLU (Massive Multitask Language Understanding) is a benchmark that tests models with multiple-choice questions across 57 different subjects, including STEM, humanities, and social sciences. The questions vary in difficulty from basic to advanced. It is scored by accuracy, and an answer counts only if it exactly matches the correct choice. GPT-4o mini scored 82.0%, surpassing competitors like Gemini Flash (77.9%) and Claude Haiku (73.8%).

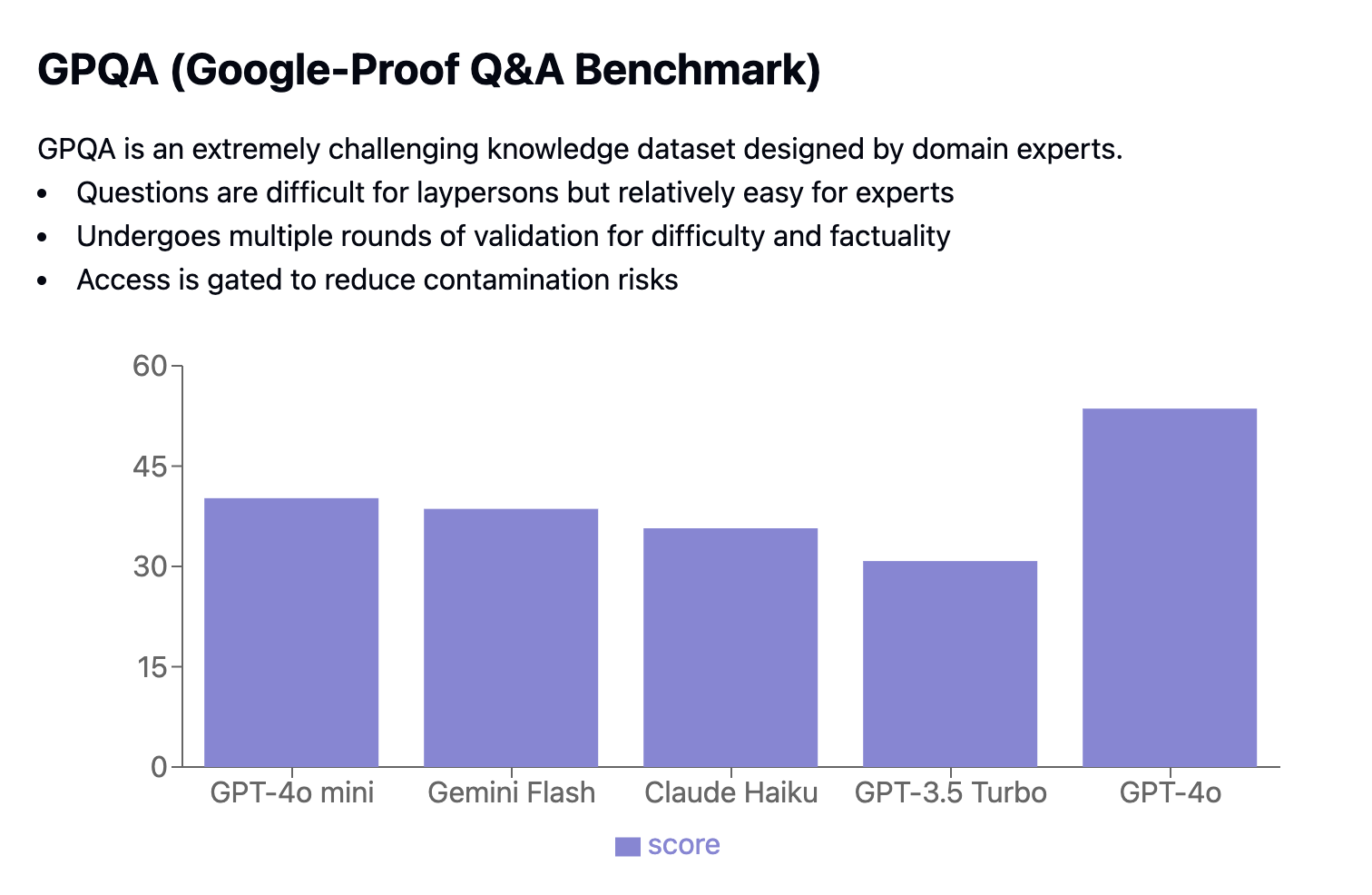

GPQA (Google-Proof Q&A Benchmark) is a tough dataset with questions crafted by experts to challenge non-experts while being manageable for specialists. The questions are carefully validated for both difficulty and accuracy through multiple rounds to reduce contamination risks.

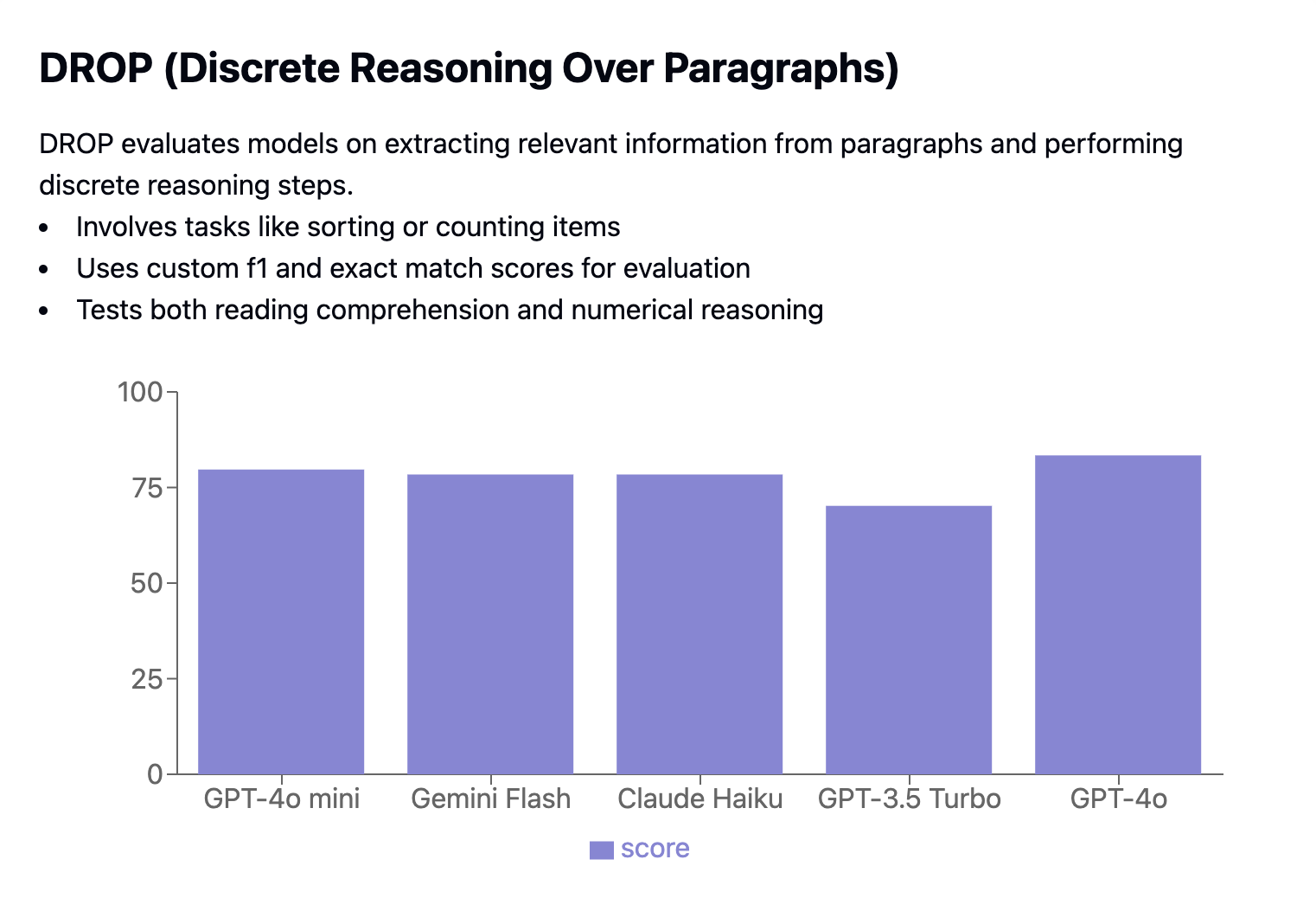

DROP (Discrete Reasoning Over Paragraphs) tests how well models can extract relevant information from paragraphs and perform reasoning tasks like sorting or counting. Performance is evaluated using custom F1 and exact match scores.
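As a rough illustration of the two metrics, the sketch below computes exact match and a simplified token-overlap F1 between a predicted answer and a reference answer. This is an assumption-laden simplification: the official DROP scorer applies additional normalization and special handling for numbers and multi-span answers, none of which is reproduced here.

```python
from collections import Counter


def exact_match(prediction: str, reference: str) -> float:
    """Return 1.0 if the answers match after trimming and lowercasing, else 0.0."""
    return float(prediction.strip().lower() == reference.strip().lower())


def token_f1(prediction: str, reference: str) -> float:
    """Simplified bag-of-tokens F1 between a prediction and a reference answer."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    # Multiset intersection counts each shared token at most as often as it appears in both.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("4 touchdowns", "4 touchdowns"))
print(token_f1("4 touchdowns scored", "4 touchdowns"))
```

F1 gives partial credit when a prediction contains the right answer plus extra words, whereas exact match does not.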

Math and coding proficiency

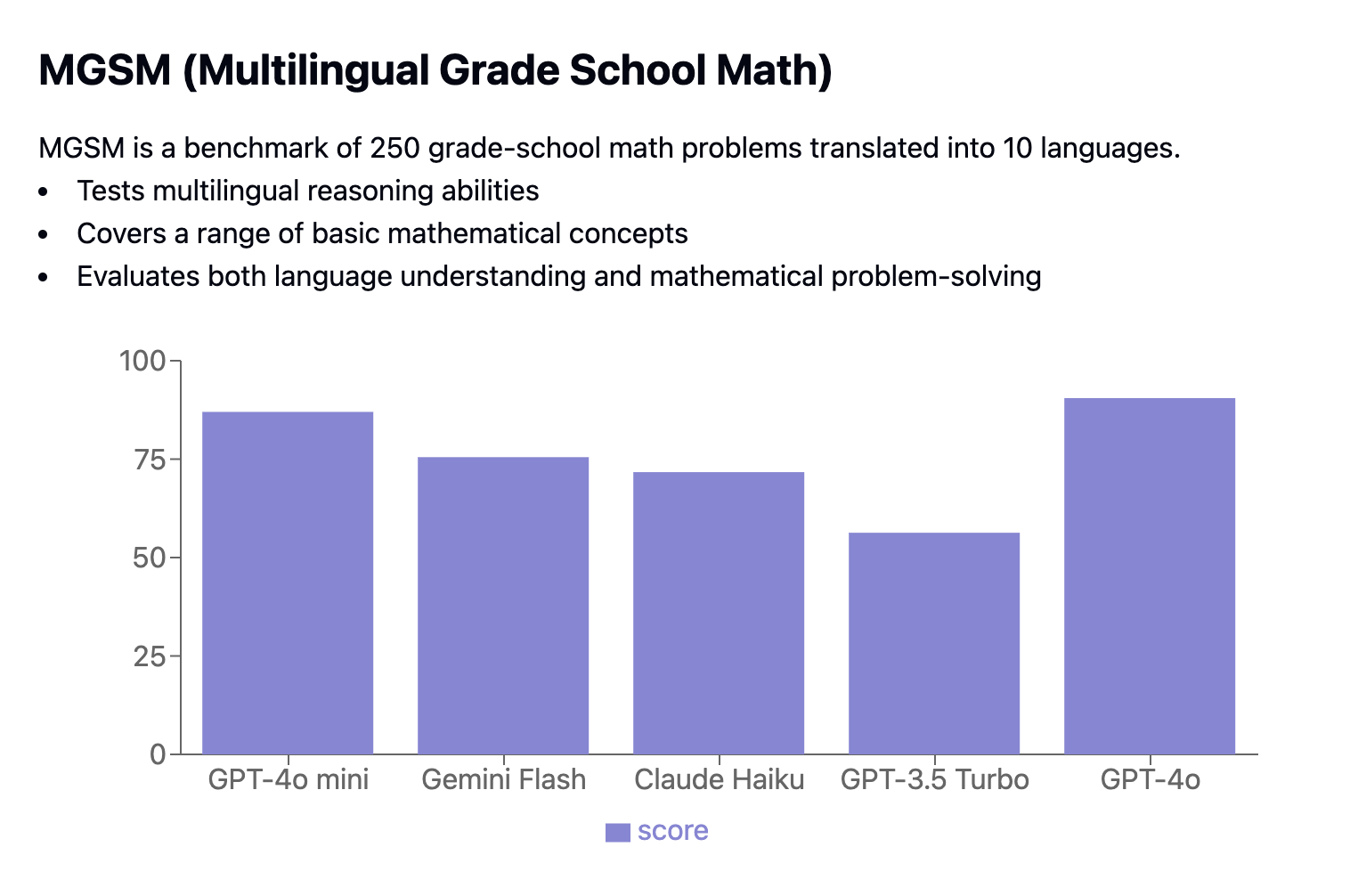

The MGSM (Multilingual Grade School Math) benchmark includes 250 grade-school math problems translated into 10 languages, testing multilingual reasoning abilities.

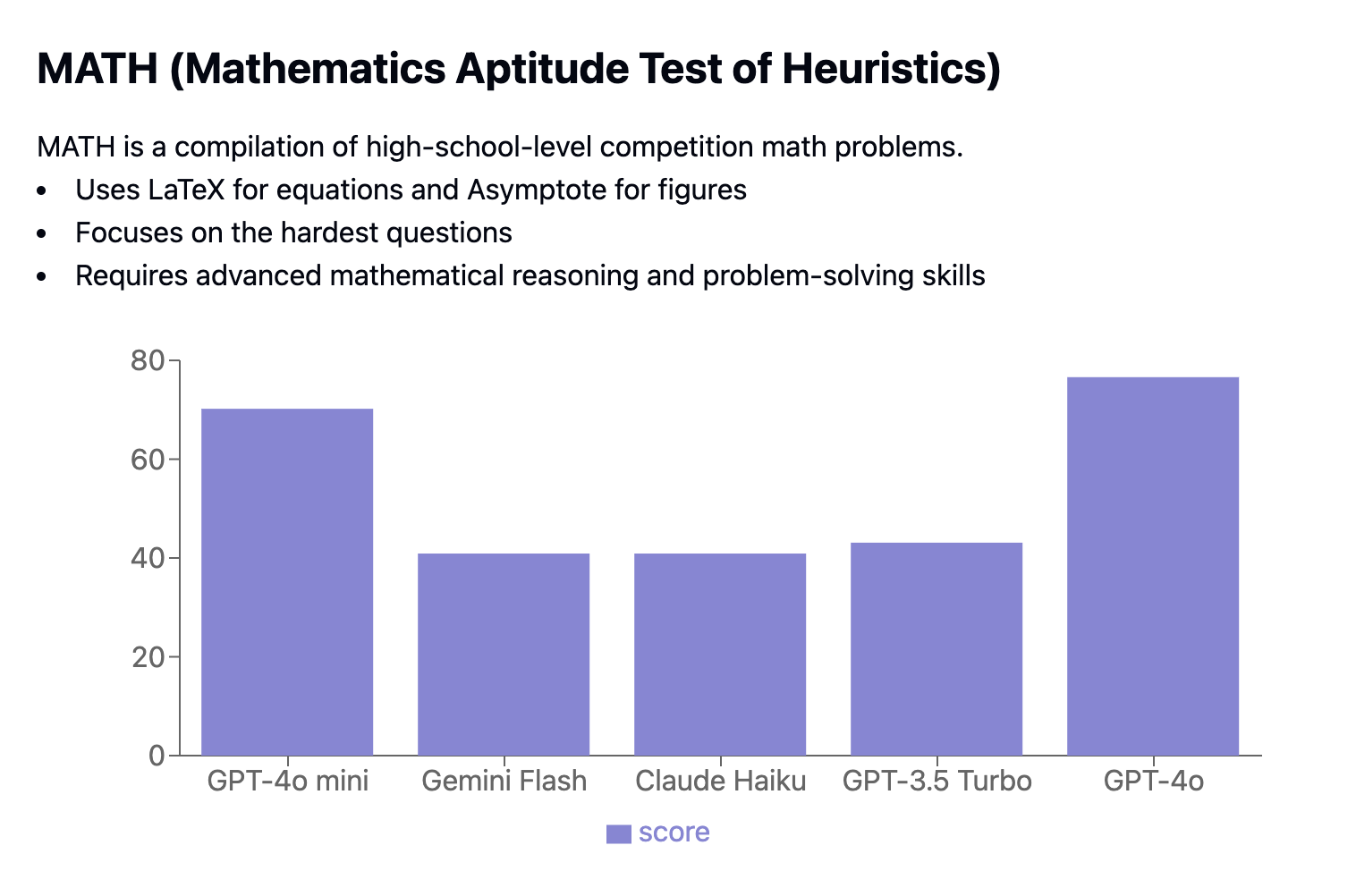

The Mathematics Aptitude Test of Heuristics (MATH) features high-school-level competition problems. It evaluates models on their ability to solve complex math problems formatted in LaTeX and Asymptote, focusing on the most challenging questions.

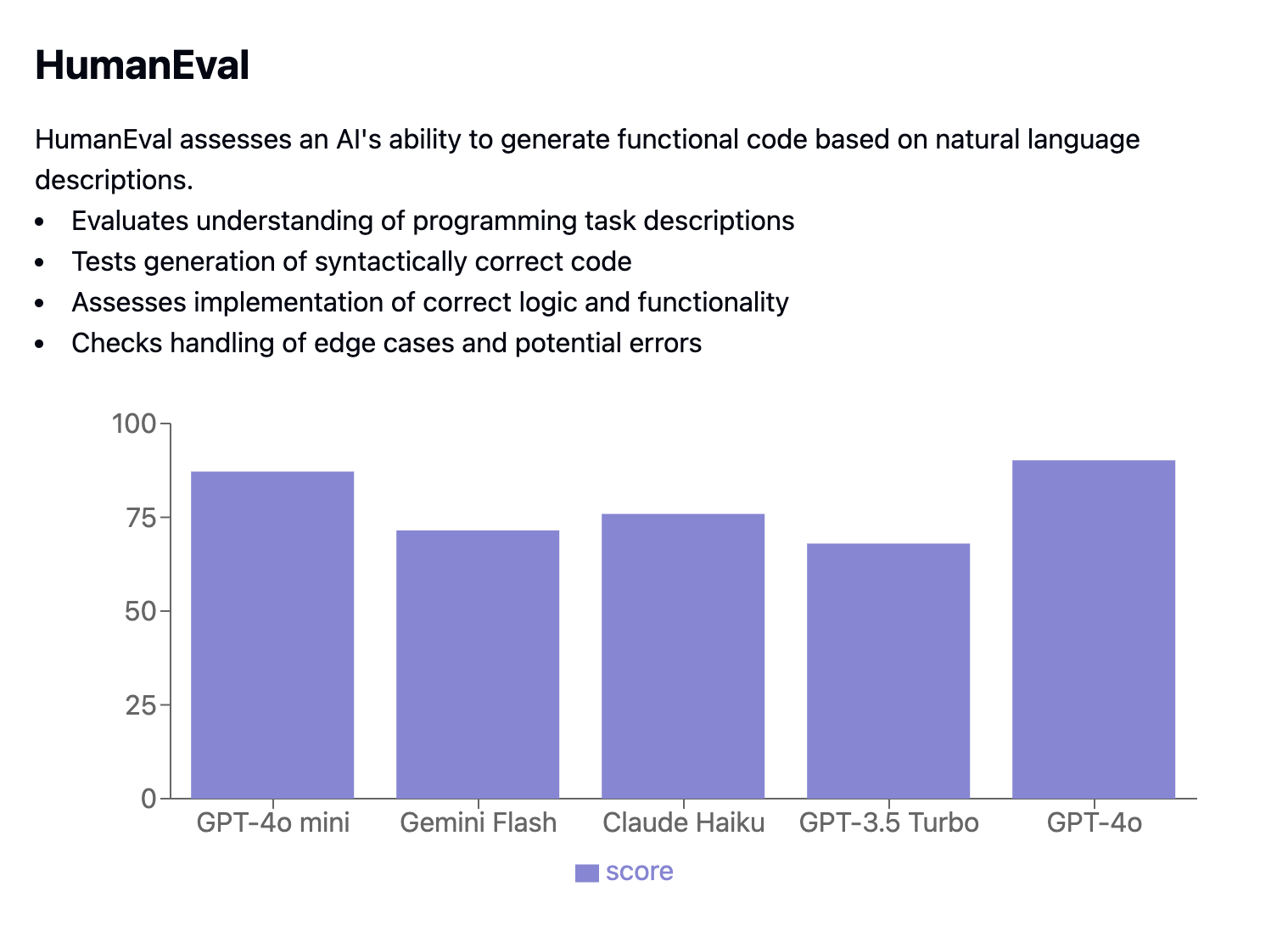

The HumanEval benchmark measures code generation performance by checking whether the generated code passes specific unit tests. It uses the pass@k metric: the probability that at least one of k sampled solutions for a coding problem passes the tests.
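The pass@k metric is commonly computed with the unbiased estimator introduced alongside HumanEval: generate n samples per problem, count the c samples that pass, and estimate the chance that a random subset of k samples contains at least one passing solution. The sketch below implements that formula for a single problem; the function name and example counts are illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem from n samples, c of which passed."""
    if not 0 <= c <= n:
        raise ValueError("c must be between 0 and n")
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    if n - c < k:
        # Fewer than k failing samples, so every subset of size k has a pass.
        return 1.0
    # 1 minus the probability that all k drawn samples are failures.
    return 1.0 - comb(n - c, k) / comb(n, k)


# Illustrative counts: 10 samples generated, 3 passed the unit tests.
print(pass_at_k(n=10, c=3, k=1))
print(pass_at_k(n=10, c=3, k=5))
```

The benchmark score is the mean of this value over all problems. With k=1 it reduces to the plain fraction of passing samples.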

Multimodal reasoning

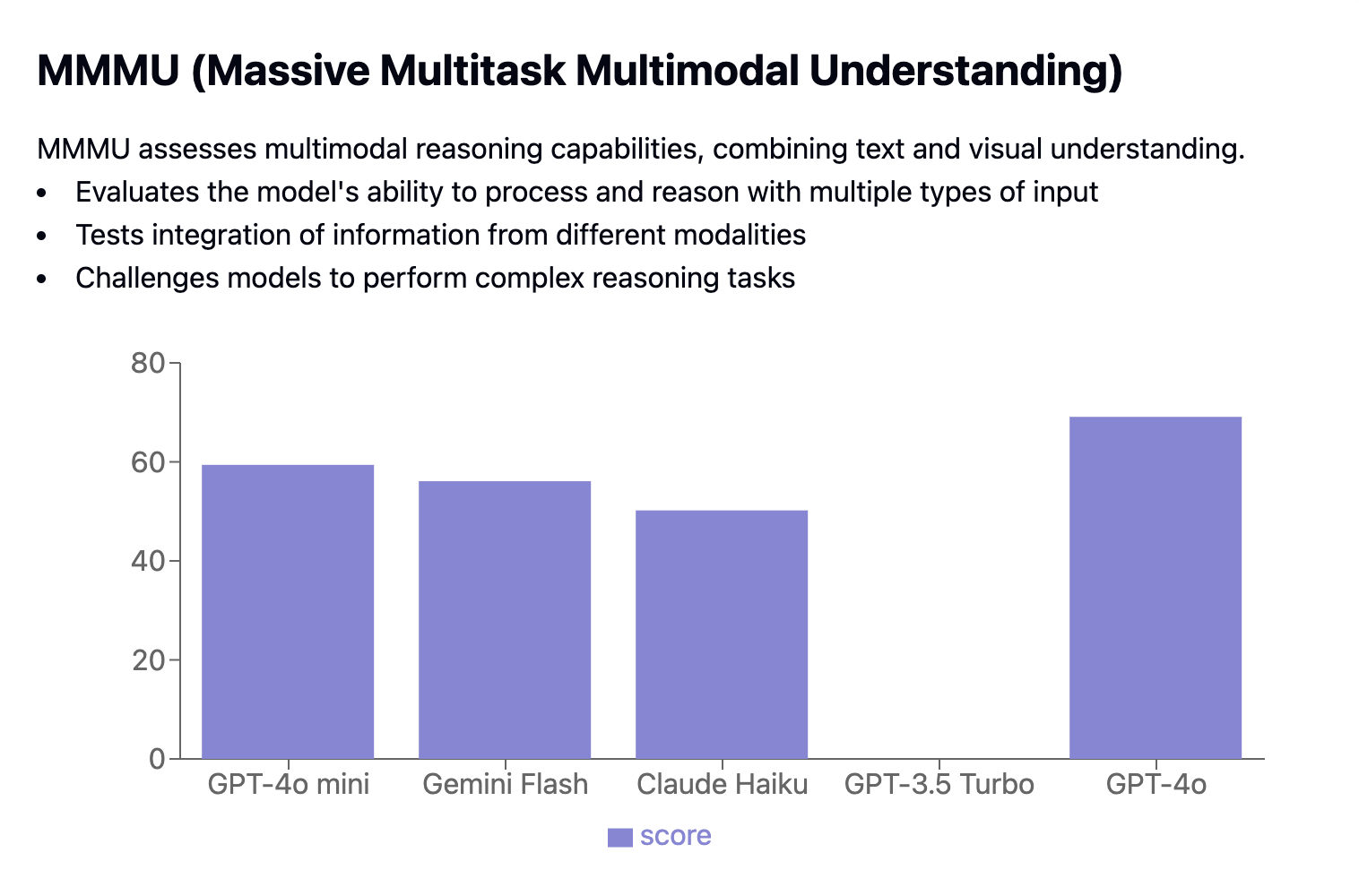

The MMMU (Massive Multi-discipline Multimodal Understanding) benchmark tests a model’s ability to reason over combined text and image inputs. It features roughly 11,500 college-level questions drawn from exams, quizzes, and textbooks, spanning six core disciplines and 30 subjects, with images such as charts, diagrams, and tables. Models are scored by accuracy.

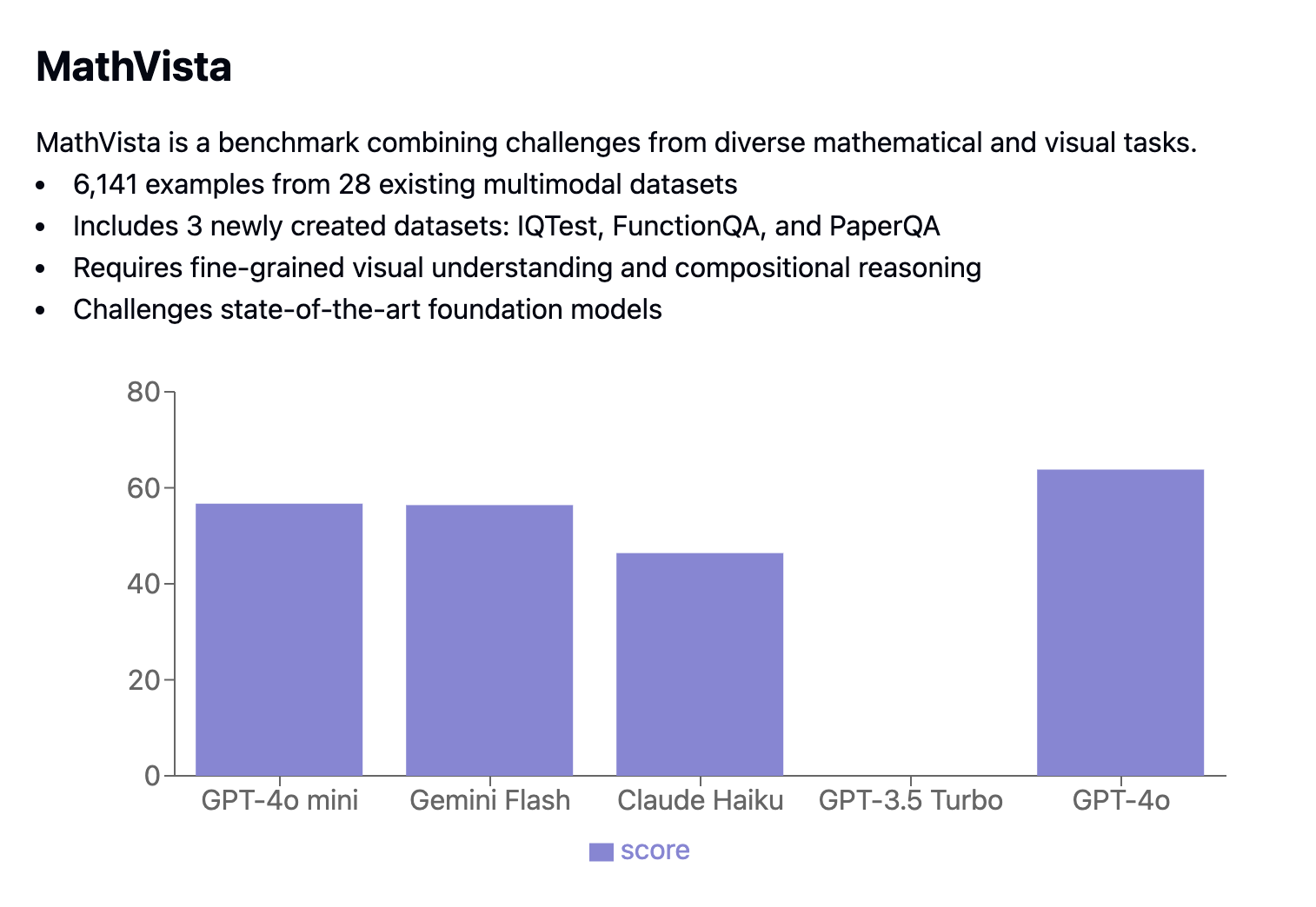

The MathVista benchmark combines mathematical and visual tasks, featuring 6,141 examples drawn from 28 existing multimodal datasets and 3 newly created datasets (IQTest, FunctionQA, and PaperQA). It challenges models with tasks that require advanced visual understanding and complex compositional reasoning.

Use Cases for GPT-4o Mini

GPT-4o mini’s small size, low cost, and strong performance make it perfect for use on personal devices, quick prototyping, and in resource-limited settings. Plus, its real-time response capability improves interactive applications. Here’s how GPT-4o mini can be used effectively:

| Use Case Category | Benefits | Example Applications |
| --- | --- | --- |
| On-Device Use | Smaller size allows for local processing on laptops, smartphones, and edge servers, reducing latency and improving privacy. | Language learning apps, personal assistants, offline translation tools |
| Rapid Prototyping | Faster iteration and lower costs enable experimentation and refinement before scaling to larger models. | Testing new chatbot ideas, developing AI-powered prototypes, experimenting with different AI features in a cost-effective way |
| Real-Time Applications | Quick response time enhances interactive experiences. | Chatbots, virtual assistants, real-time language translation, interactive storytelling in games and virtual reality |
| Educational Use | Affordable and accessible for educational institutions, providing hands-on experience with AI. | AI-powered tutoring systems, language learning platforms, coding practice tools |

Accessing GPT-4o Mini

You can use GPT-4o mini via the OpenAI API, which includes options like the Assistants API, Chat Completions API, and Batch API. Here’s a simple guide to using GPT-4o mini with the OpenAI API.

First, you'll need to authenticate using your API key (replace your_api_key_here with your actual key). Once you’re set up, you can start generating text with GPT-4o mini:

    from openai import OpenAI

    MODEL = "gpt-4o-mini"

    # Set the API key
    client = OpenAI(api_key="your_api_key_here")

    # Send a system prompt and a user message to the Chat Completions API
    completion = client.chat.completions.create(
        model=MODEL,
        messages=[
            {"role": "system", "content": "You are a helpful assistant that helps me with my math homework!"},
            {"role": "user", "content": "Hello! Could you solve 20 x 5?"},
        ],
    )

    # Print the text of the model's reply
    print(completion.choices[0].message.content)

For more details on setting up and using the OpenAI API, check out the GPT-4o API tutorial.
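Since GPT-4o mini also accepts image inputs, the same Chat Completions call can carry an image alongside the text. The sketch below only builds the message payload, so it runs without a network connection or API key. The `build_image_messages` helper and the example URL are hypothetical; the content-part structure follows the format the Chat Completions API uses for vision inputs.

```python
def build_image_messages(question: str, image_url: str) -> list:
    """Build a single user message containing a text part and an image part."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }
    ]


# Placeholder question and URL, for illustration only.
messages = build_image_messages(
    "What is shown in this chart?",
    "https://example.com/chart.png",
)
print(messages)

# With an authenticated client, the payload would be sent like this:
# completion = client.chat.completions.create(model="gpt-4o-mini", messages=messages)
```

Keeping payload construction separate from the API call makes the request easy to inspect and test before spending any tokens.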

Conclusion

GPT-4o mini stands out as a powerful and cost-effective AI model, achieving a notable balance between performance and affordability.

Its distillation from the larger GPT-4o model, combined with its large context window, multimodal capabilities, and enhanced safety features, makes it a versatile and accessible option for a wide range of applications.

As the demand for efficient and affordable AI solutions continues to grow, GPT-4o mini is well-positioned to play a significant role in democratizing AI technology.

FAQs

What is the key difference between GPT-4o and GPT-4o Mini?

The main difference lies in their size and cost. GPT-4o is a larger, more powerful model, but it comes with a higher price tag. GPT-4o mini is a distilled version of GPT-4o, which makes it smaller, more affordable, and faster for certain tasks.

Can GPT-4o Mini process images, video, and audio?

Currently, GPT-4o mini supports text and image inputs, with support for video and audio planned for the future.

How does GPT-4o Mini's performance compare to other models?

GPT-4o mini outperforms several similar models, including Llama 3 (8B), Claude 3 Haiku, and GPT-3.5 Turbo, in both quality and speed. While Gemini 1.5 Flash has a slight edge in output speed, GPT-4o mini excels in overall quality.

Is GPT-4o Mini suitable for real-time applications?

Yes, its fast processing and lower latency make it ideal for real-time applications like chatbots, virtual assistants, and interactive gaming experiences.

How can I access GPT-4o Mini?

You can access GPT-4o mini through the OpenAI API, which offers different options like the Assistants API, Chat Completions API, and Batch API.

Ryan is a lead data scientist specialising in building AI applications using LLMs. He is a PhD candidate in Natural Language Processing and Knowledge Graphs at Imperial College London, where he also completed his Master’s degree in Computer Science. Outside of data science, he writes a weekly Substack newsletter, The Limitless Playbook, where he shares one actionable idea from the world's top thinkers and occasionally writes about core AI concepts.