
Retrieval-augmented generation (RAG) enhances large language models by fetching relevant documents from an external source to support text generation. However, RAG is not perfect: it can still produce misleading content if the retrieved documents are inaccurate or irrelevant.

Corrective retrieval-augmented generation (CRAG) was proposed to address these problems. CRAG adds a step that checks and refines the retrieved information before it is used to generate text. This makes language models more accurate and reduces the chance of generating misleading content.

In this article, I will introduce CRAG and walk you through a step-by-step implementation using LangGraph.

What is corrective RAG (CRAG)?

Corrective retrieval-augmented generation (CRAG) is an enhanced version of RAG that aims to make language models more accurate.

Traditional RAG simply uses the retrieved documents to help generate text. CRAG goes further by actively checking and refining those documents to make sure they are relevant and accurate. This helps reduce errors and hallucinations, where the model produces incorrect or misleading information.

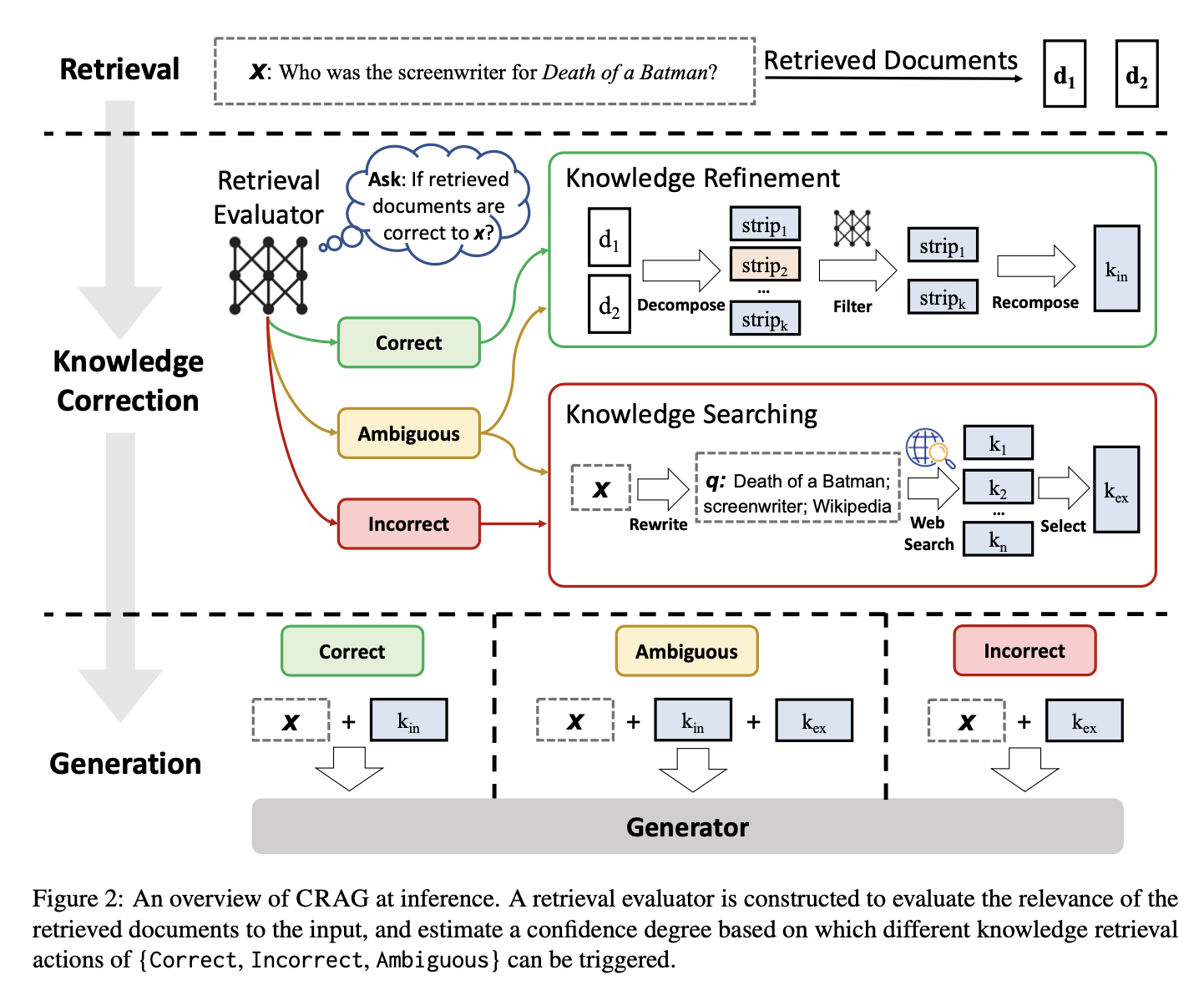

Source: Shi-Qi Yan et al., 2024

The CRAG framework works through a few key steps that involve a retrieval evaluator and specific corrective actions.

For any input query, a standard retriever first pulls a set of documents from a knowledge base. A retrieval evaluator then reviews these documents to determine how relevant each one is to the query.

In CRAG, the retrieval evaluator is a fine-tuned T5-large model. The evaluator assigns a confidence score to each document. Based on these scores, the retrieval falls into one of three confidence levels:

- Correct: If at least one document scores above the upper threshold, the retrieval is considered correct. The system then applies a knowledge refinement process. It uses a decompose-then-recompose algorithm to extract the most important and relevant knowledge strips while filtering irrelevant or noisy data out of the documents. This ensures that only the most accurate and relevant information is kept for generation.

- Incorrect: If all documents score below a lower threshold, the retrieval is marked as incorrect. In this case, CRAG discards all the retrieved documents and runs a web search instead, gathering new and potentially more accurate external knowledge. This step extends retrieval beyond a static or limited knowledge base to the large and dynamic body of information on the web, which increases the likelihood of retrieving relevant and accurate data.

- Ambiguous: When the scores fall between the two thresholds, the retrieved documents give mixed signals and the retrieval is considered ambiguous. In this case, CRAG combines both strategies: it refines the information from the initially retrieved documents and adds knowledge obtained through a web search.

Once one of these actions has run, the refined knowledge is used to generate the final answer.
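The three-way decision above can be sketched as a small Python function. This is an illustrative sketch, not the paper's code. It assumes each retrieved document already has a confidence score between 0 and 1. The names `decide_action` and `ACTION_SOURCES`, and the `UPPER` and `LOWER` values, are hypothetical placeholders and not the thresholds used in the paper.

```python
from typing import Dict, List

# Hypothetical thresholds, assuming confidence scores in [0, 1].
UPPER = 0.7
LOWER = 0.3

# Knowledge sources each action draws on, following the description above.
ACTION_SOURCES: Dict[str, List[str]] = {
    "correct": ["refined retrieved documents"],
    "incorrect": ["web search results"],
    "ambiguous": ["refined retrieved documents", "web search results"],
}


def decide_action(scores: List[float], upper: float = UPPER, lower: float = LOWER) -> str:
    """Map per-document confidence scores to one of CRAG's three actions."""
    if not scores:
        raise ValueError("at least one retrieved document is required")
    if any(score > upper for score in scores):
        # At least one document is confidently relevant.
        return "correct"
    if all(score < lower for score in scores):
        # Every document is confidently irrelevant.
        return "incorrect"
    # Everything in between counts as mixed evidence.
    return "ambiguous"


for scores in ([0.9, 0.2, 0.1], [0.1, 0.2], [0.5, 0.2]):
    action = decide_action(scores)
    print(scores, "->", action, ACTION_SOURCES[action])
```

The order of the checks matters. A single confidently relevant document is enough to trigger the correct action, even when the other documents score low.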

CRAG vs. traditional RAG

CRAG brings several important improvements over traditional RAG. One of its biggest advantages is the ability to correct errors in the retrieved information. The retrieval evaluator helps identify when information is wrong or irrelevant, so it can be corrected before it affects the final output. As a result, CRAG provides more accurate and reliable information, with fewer errors and less misinformation.

CRAG also stands out in making sure information is both relevant and accurate. Traditional RAG may only check relevance scores. CRAG goes further and refines the documents so that they are accurate as well as relevant. It filters out irrelevant details and focuses on the most important points, so the generated text is grounded in accurate information.
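The decompose-then-recompose refinement mentioned earlier can be illustrated with a toy version. In this sketch, each document is split into sentence-level strips and every strip is scored. Only strips at or above a threshold are stitched back together, in their original order. The `keyword_overlap` scorer is a deliberately simple, hypothetical stand-in for CRAG's fine-tuned T5 evaluator, and the 0.4 threshold is arbitrary.

```python
import re
from typing import Callable, List


def decompose(document: str) -> List[str]:
    """Split a document into sentence-level knowledge strips (naive splitter)."""
    strips = re.split(r"(?<=[.!?])\s+", document.strip())
    return [strip for strip in strips if strip]


def keyword_overlap(question: str, strip: str) -> float:
    """Toy relevance score: share of question words that also appear in the strip."""
    question_words = set(re.findall(r"\w+", question.lower()))
    strip_words = set(re.findall(r"\w+", strip.lower()))
    if not question_words:
        return 0.0
    return len(question_words & strip_words) / len(question_words)


def refine(
    question: str,
    documents: List[str],
    scorer: Callable[[str, str], float] = keyword_overlap,
    threshold: float = 0.4,
) -> str:
    """Decompose every document, keep strips scoring >= threshold, recompose in order."""
    kept = [
        strip
        for document in documents
        for strip in decompose(document)
        if scorer(question, strip) >= threshold
    ]
    return " ".join(kept)


docs = [
    "The Bagel Method maps core needs. Subscribe to the newsletter! "
    "The method uses inner and outer circles."
]
print(refine("What is the bagel method?", docs))
```

The `scorer` parameter is where a stronger relevance model would plug in. The decompose, filter, and recompose structure stays the same.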

Implementing CRAG with LangGraph

This section is a step-by-step guide to implementing CRAG with LangGraph. You will learn how to set up your environment, build a basic vector store as the knowledge base, and configure the core components CRAG needs: the retrieval evaluator, the question rewriter, and the web search tool.

We will also build a LangGraph workflow that brings all these pieces together. The workflow shows how CRAG can handle different kinds of queries to produce more accurate and reliable results.

Step 1: Setup and installation

First, install the required packages. This step prepares the environment for running the CRAG pipeline.

```bash
pip install langchain_community tiktoken langchain-openai langchainhub chromadb langchain langgraph tavily-python
```

Next, set your API keys for Tavily and OpenAI:

```python
import os

# Replace the placeholders with your own keys
os.environ["TAVILY_API_KEY"] = "YOUR_TAVILY_API_KEY"
os.environ["OPENAI_API_KEY"] = "YOUR_OPENAI_API_KEY"
```

Step 2: Set up a proxy knowledge base

To run RAG, we first need a knowledge base filled with documents. In this step, we collect a few sample documents from a Substack newsletter and use them to build a vector store, which acts as our proxy knowledge base. The vector store lets us find documents that are relevant to the user's queries.

We start by loading documents from the given URLs and splitting them into smaller chunks with a text splitter. These chunks are then embedded with OpenAIEmbeddings and stored in a vector database (Chroma) for efficient retrieval.
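Here is a dependency-free toy version of the load, split, embed, and retrieve sequence, so you can see it work without API keys. It is only an analogy, and all of its names are hypothetical:

- `split_into_chunks` counts words rather than tiktoken tokens.
- `embed` builds a bag-of-words vector instead of calling OpenAIEmbeddings.
- `ToyVectorStore` ranks chunks by cosine similarity in memory instead of using Chroma.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def split_into_chunks(text: str, chunk_size: int, chunk_overlap: int = 0) -> List[str]:
    """Split text into chunks of at most chunk_size words, overlapping by chunk_overlap words."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must satisfy 0 <= chunk_overlap < chunk_size")
    words = text.split()
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """In-memory stand-in for a vector database such as Chroma."""

    def __init__(self, chunks: List[str]):
        self.items: List[Tuple[str, Counter]] = [(chunk, embed(chunk)) for chunk in chunks]

    def search(self, query: str, k: int = 4) -> List[str]:
        """Return the k chunks most similar to the query."""
        query_vector = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(query_vector, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


text = (
    "bagel method inner circle outer circle "
    "productivity books share ideas fill your cup first"
)
chunks = split_into_chunks(text, chunk_size=6)
store = ToyVectorStore(chunks)
print(chunks)
print(store.search("what is the bagel method", k=1))
```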

```python
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_community.document_loaders import WebBaseLoader
from langchain_community.vectorstores import Chroma
from langchain_openai import OpenAIEmbeddings

# Sample newsletter posts that make up the knowledge base
urls = [
    "https://ryanocm.substack.com/p/mystery-gift-box-049-law-1-fill-your",
    "https://ryanocm.substack.com/p/105-the-bagel-method-in-relationships",
    "https://ryanocm.substack.com/p/098-i-have-read-100-productivity",
]

# Load each page, then flatten the per-URL lists into one list of Documents
docs = [WebBaseLoader(url).load() for url in urls]
docs_list = [item for sublist in docs for item in sublist]

# Split into chunks of about 250 tokens (counted with tiktoken), without overlap
text_splitter = RecursiveCharacterTextSplitter.from_tiktoken_encoder(
    chunk_size=250, chunk_overlap=0
)
doc_splits = text_splitter.split_documents(docs_list)

# Add to vectorDB
vectorstore = Chroma.from_documents(
    documents=doc_splits,
    collection_name="rag-chroma",
    embedding=OpenAIEmbeddings(),
)
retriever = vectorstore.as_retriever()
```

Step 3: Set up a RAG chain

In this step, we set up a basic RAG chain that takes a user's question and a set of documents and generates an answer.

The RAG chain uses a predefined prompt and a language model (GPT-4o mini) to write answers based on the retrieved documents. An output parser (StrOutputParser) extracts the generated text as a plain string.
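The `prompt | llm | parser` syntax composes steps so that each step's output becomes the next step's input. The toy `Step` class below imitates that idea with plain functions. It is not how LangChain implements runnables, and `Step`, `Doc`, and `fake_llm` are hypothetical: `fake_llm` echoes the first line of the prompt instead of calling a model. The sketch also shows what `format_docs` does, joining the retrieved chunks into one context string before they go into the prompt.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


class Step:
    """Hypothetical stand-in for a LangChain runnable: wraps a function and supports `|`."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # `a | b` builds a new step that feeds a's output into b.
        return Step(lambda value: other.fn(self.fn(value)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


@dataclass
class Doc:
    """Minimal stand-in for a LangChain Document."""
    page_content: str


def format_docs(docs: List[Doc]) -> str:
    # Same idea as the tutorial's helper: join chunk texts with blank lines.
    return "\n\n".join(doc.page_content for doc in docs)


TEMPLATE = "Question: {question}\nContext: {context}\nAnswer:"

prompt = Step(lambda inputs: TEMPLATE.format(**inputs))
# Placeholder "model": returns a message-like dict holding the prompt's first line.
fake_llm = Step(lambda text: {"content": text.splitlines()[0]})
parser = Step(lambda message: message["content"])
chain = prompt | fake_llm | parser

inputs = {
    "question": "what is the bagel method",
    "context": format_docs([Doc("Inner circle: non-negotiables."), Doc("Outer circle: areas of flexibility.")]),
}
print(prompt.invoke(inputs))
print(chain.invoke(inputs))
```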

```python
### Generate

from langchain import hub
from langchain_core.output_parsers import StrOutputParser
from langchain_openai import ChatOpenAI

# Prompt
rag_prompt = hub.pull("rlm/rag-prompt")

# LLM
rag_llm = ChatOpenAI(model="gpt-4o-mini", temperature=0)

# Post-processing: join the chunk texts into a single context string
def format_docs(docs):
    return "\n\n".join(doc.page_content for doc in docs)

# Chain
rag_chain = rag_prompt | rag_llm | StrOutputParser()

print(rag_prompt.messages[0].prompt.template)
```

You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, just say that you don't know. Use three sentences maximum and keep the answer concise.

Question: {question}

Context: {context}

Answer:

```python
# Retrieve documents for a sample question, then generate an answer from them
question = "what is the bagel method"
docs = retriever.invoke(question)
generation = rag_chain.invoke({"context": format_docs(docs), "question": question})

print("Question: %s" % question)
print("----")
print("Documents:\n")
print("\n\n".join(["- %s" % x.page_content for x in docs]))
print("----")
print("Final answer: %s" % generation)
```

Question: what is the bagel method

----

Documents:

- the book was called The Bagel Method.The Bagel Method is designed to help partners be on the same team when dealing with differences and trying to find a compromise.The idea behind the method is that, to truly compromise, we need to figure out a way to include both partners’ dreams and core needs; things that are super important to us that giving up on them is too much.Let’s dive into the bagel 😜🚀 If you are new here…Hi, I’m Ryan 👋 I am passionate about lifestyle gamification and I am obsesssssssss with learning things that can help me live a happy and fulfilling life.And so, with The Limitless Playbook newsletter, I will share with you 1 actionable idea from the world's top thinkers every Sunday So visit us weekly for highly actionable insights :)…or even better, subscribe below and have all these information send straight to your inbox every Sunday 🥳Subscribe🥯

- #105 | The Bagel Method in Relationships 🥯

- The Limitless Playbook 🧬SubscribeSign inShare this post#105 | The Bagel Method in Relationships 🥯ryanocm.substack.comCopy linkFacebookEmailNoteOther#105 | The Bagel Method in Relationships 🥯A collection of the best hidden gems, mental models, and frameworks from the world’s top thinkers; to help you become 1% better and live a happier life ❤️Ryan Ong Feb 25, 2024Share this post#105 | The Bagel Method in Relationships 🥯ryanocm.substack.comCopy linkFacebookEmailNoteOtherShareHello curious minds 🧠I recently finished the book Fight Right: How Successful Couples Turn Conflict into Connection and oh my days, I love every chapter of it!There were many repeating concepts but this time, it was applied in the context of conflicts in relationships. As usual with the Gottman’s books, I highlighted the hell out of the entire book 😄One of the cool exercises in

- The Bagel MethodThe Bagel Method involves mapping out your core needs and areas of flexibility so that you and your partner understand what's important and where there's room for flexibility.It’s called The Bagel Method because, just like a bagel, it has both the inner and outer circles representing your needs.Here are the steps:In the inner circle, list all the aspects of an issue that you can’t give in on. These are your non-negotiables that are usually very closely related to your core needs and dreams.In the outer circle, list all the aspects of an issue that you are able to compromise on IF you are able to have what’s in your inner circle.Now, talk to your partners about your inner and outer circle. Ask each other:Why are the things in your inner circle so important to you?How can I support your core needs here?Tell me more about your areas of flexibility. What does it look like to be flexible?Compare both your “bagel” of needsWhat do we agree on?What feelings do we have in common?What shared goals do we have?How might we accomplish these goals

----

Final answer: The Bagel Method is a relationship strategy that helps partners identify their core needs and areas where they can be flexible. It involves mapping out non-negotiables in the inner circle and compromise areas in the outer circle, facilitating open communication about each partner's priorities. This method aims to foster understanding and collaboration in resolving differences.

Step 4: Set up a retrieval evaluator

To improve the accuracy of the generated content, we set up a retrieval evaluator. This component checks how relevant each retrieved document is, so that only the most useful information is used.

The retrieval evaluator is built from a prompt and a language model. It decides whether each document is relevant and filters out irrelevant content before an answer is generated.
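`with_structured_output` has the model return arguments that match the `RetrievalEvaluator` schema, then parses them into an instance of that class. The sketch below shows roughly what that parsing step does, using a plain dataclass instead of Pydantic. `RetrievalGrade` and `parse_grade` are hypothetical names. The case and whitespace normalization is an extra safeguard; the tutorial's code compares the field against the exact string "yes".

```python
import json
from dataclasses import dataclass


@dataclass
class RetrievalGrade:
    """Plain-dataclass mirror of the tutorial's RetrievalEvaluator schema."""
    binary_score: str


def parse_grade(raw_arguments: str) -> RetrievalGrade:
    """Validate a JSON payload such as '{"binary_score": "yes"}' against the schema."""
    data = json.loads(raw_arguments)
    if "binary_score" not in data:
        raise ValueError("missing required field 'binary_score'")
    # Normalize case and whitespace so " Yes " and "yes" are treated the same.
    score = str(data["binary_score"]).strip().lower()
    if score not in {"yes", "no"}:
        raise ValueError(f"binary_score must be 'yes' or 'no', got {score!r}")
    return RetrievalGrade(binary_score=score)


for payload in ['{"binary_score": "yes"}', '{"binary_score": " No "}']:
    print(parse_grade(payload))
```

With Pydantic, you could annotate the field as `Literal["yes", "no"]` instead of `str`. The schema would then reject any other value on its own.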

```python
### Retrieval Evaluator

from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI
# Import from pydantic directly; langchain_core.pydantic_v1 is deprecated in recent releases
from pydantic import BaseModel, Field

# Data model
class RetrievalEvaluator(BaseModel):
    """Classify retrieved documents based on how relevant they are to the user's question."""

    binary_score: str = Field(
        description="Documents are relevant to the question, 'yes' or 'no'"
    )

# LLM with function call
retrieval_evaluator_llm = ChatOpenAI(model="gpt-4o-mini", temperature=0)
structured_llm_evaluator = retrieval_evaluator_llm.with_structured_output(RetrievalEvaluator)

# Prompt
system = """You are a document retrieval evaluator that's responsible for checking the relevancy of a retrieved document to the user's question. \n
If the document contains keyword(s) or semantic meaning related to the question, grade it as relevant. \n
Output a binary score 'yes' or 'no' to indicate whether the document is relevant to the question."""

retrieval_evaluator_prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system),
        ("human", "Retrieved document: \n\n {document} \n\n User question: {question}"),
    ]
)

retrieval_grader = retrieval_evaluator_prompt | structured_llm_evaluator
```

Step 5: Set up a question rewriter

Next, we add a question rewriter that makes users' queries clearer and more specific, which improves the search.

The rewriter refines the original query so the search is more focused, which leads to better and more relevant results.

```python
### Question Re-writer

from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI

# LLM
question_rewriter_llm = ChatOpenAI(model="gpt-4o-mini", temperature=0)

# Prompt
system = """You are a question re-writer that converts an input question to a better version that is optimized \n
for web search. Look at the input and try to reason about the underlying semantic intent / meaning."""

re_write_prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system),
        (
            "human",
            "Here is the initial question: \n\n {question} \n Formulate an improved question.",
        ),
    ]
)

question_rewriter = re_write_prompt | question_rewriter_llm | StrOutputParser()
```

Step 6: Initialize the Tavily web search tool

If the knowledge base does not contain enough information, CRAG falls back on web search to fill the gaps, which widens the range of possible information sources. In this step, we use the Tavily API to search the web for additional documents.

```python
### Search

from langchain_community.tools.tavily_search import TavilySearchResults

# Return at most 3 results per query
web_search_tool = TavilySearchResults(max_results=3)
```

Step 7: Set up the LangGraph workflow

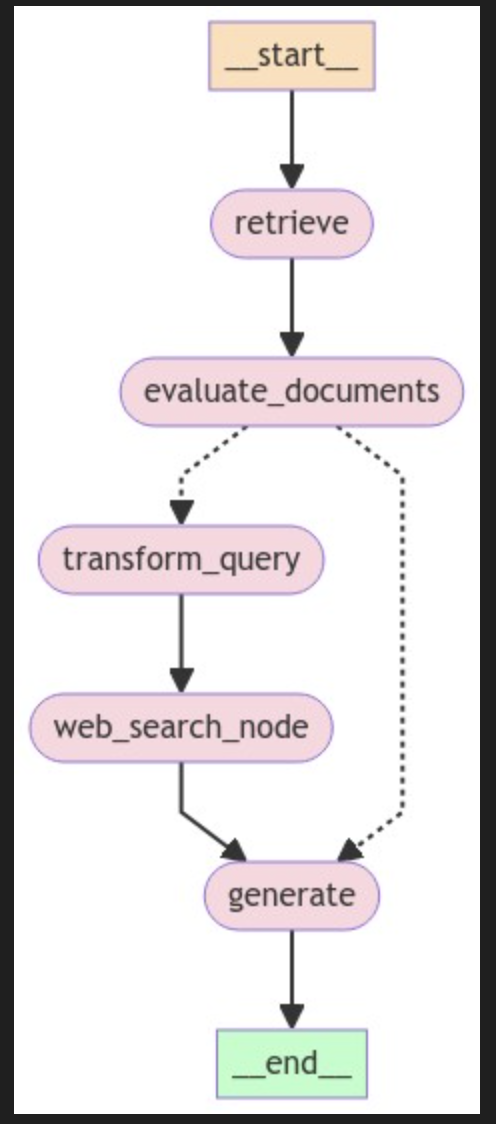

To build the CRAG workflow with LangGraph, follow these three main steps:

- Define the graph state

- Define the function nodes

- Connect all the function nodes

Define the graph state

Create a shared state that stores data as it moves between nodes during the workflow. This state holds all the variables, such as the user's question, the retrieved documents, and the generated answer.

```python
from typing import List

from langchain_core.documents import Document
from typing_extensions import TypedDict


class GraphState(TypedDict):
    """
    Represents the state of our graph.

    Attributes:
        question: question
        generation: LLM generation
        web_search: whether to add search ("Yes" or "No")
        documents: list of documents
    """

    question: str
    generation: str
    web_search: str
    documents: List[Document]
```

Define the function nodes

In the LangGraph workflow, each function node handles one task in the CRAG pipeline: retrieving documents, generating answers, evaluating relevance, transforming queries, or searching the web. Here is a breakdown of each function:

The retrieve function finds documents in the knowledge base that are relevant to the user's question. It uses a retriever object, which is typically backed by a vector store built from preprocessed documents. The function reads the user's question from the current state, uses the retriever to fetch relevant documents, and adds those documents to the state.

```python
from langchain_core.documents import Document


def retrieve(state):
    """
    Retrieve documents

    Args:
        state (dict): The current graph state

    Returns:
        state (dict): New key added to state, documents, that contains retrieved documents
    """
    print("---RETRIEVE---")
    question = state["question"]

    # Retrieval
    documents = retriever.invoke(question)
    return {"documents": documents, "question": question}
```

The generate function answers the user's question using the retrieved documents. It relies on the RAG chain, which combines a prompt with a language model. The function runs the retrieved documents and the user's question through the RAG chain and adds the answer to the state.

```python
def generate(state):
    """
    Generate answer

    Args:
        state (dict): The current graph state

    Returns:
        state (dict): New key added to state, generation, that contains LLM generation
    """
    print("---GENERATE---")
    question = state["question"]
    documents = state["documents"]

    # RAG generation: pass the documents to the prompt as one formatted string
    generation = rag_chain.invoke({"context": format_docs(documents), "question": question})
    return {"documents": documents, "question": question, "generation": generation}
```

The evaluate_documents function uses the retrieval evaluator to check how relevant each retrieved document is to the user's question, so that only useful information reaches the final answer. It grades each document and drops the ones that are not relevant. It also sets a web_search flag in the state. The flag is "Yes" when 70% or fewer of the retrieved documents are relevant, which signals that a web search is needed.

```python
def evaluate_documents(state):
    """
    Determines whether the retrieved documents are relevant to the question.

    Args:
        state (dict): The current graph state

    Returns:
        state (dict): Updates documents key with only filtered relevant documents
    """
    print("---CHECK DOCUMENT RELEVANCE TO QUESTION---")
    question = state["question"]
    documents = state["documents"]

    # Score each doc
    filtered_docs = []
    web_search = "No"
    for d in documents:
        score = retrieval_grader.invoke(
            {"question": question, "document": d.page_content}
        )
        grade = score.binary_score.strip().lower()
        if grade == "yes":
            print("---GRADE: DOCUMENT RELEVANT---")
            filtered_docs.append(d)
        else:
            print("---GRADE: DOCUMENT NOT RELEVANT---")

    # Fall back to web search when 70% or fewer of the documents are relevant,
    # or when nothing was retrieved (which would otherwise divide by zero)
    if not documents or len(filtered_docs) / len(documents) <= 0.7:
        web_search = "Yes"
    return {"documents": filtered_docs, "question": question, "web_search": web_search}
```

The transform_query function rewrites the user's question to get better search results, especially when the original query did not surface relevant documents. It uses the question rewriter to make the question clearer and more specific. A better-phrased question raises the chances of finding useful documents; in this workflow, the rewritten question feeds the web search that follows.

```python
def transform_query(state):
    """
    Transform the query to produce a better question.

    Args:
        state (dict): The current graph state

    Returns:
        state (dict): Updates question key with a re-phrased question
    """
    print("---TRANSFORM QUERY---")
    question = state["question"]
    documents = state["documents"]

    # Re-write question
    better_question = question_rewriter.invoke({"question": question})
    return {"documents": documents, "question": better_question}
```

The web_search function looks up additional information online using the rewritten query. It runs when the knowledge base does not have enough information. The function calls the Tavily web search tool, merges the results into a single document, and appends that document to the documents already in the state.

```python
def web_search(state):
    """
    Web search based on the re-phrased question.

    Args:
        state (dict): The current graph state

    Returns:
        state (dict): Updates documents key with appended web results
    """
    print("---WEB SEARCH---")
    question = state["question"]
    documents = state["documents"]

    # Web search
    docs = web_search_tool.invoke({"query": question})
    # Merge the text of all results into a single Document
    web_results = "\n".join([d["content"] for d in docs])
    web_results = Document(page_content=web_results)
    # Build a new list instead of mutating the list held in the state
    documents = documents + [web_results]
    return {"documents": documents, "question": question}
```

The decide_to_generate function decides what happens next: generate an answer from the current documents, or rewrite the query and search the web. It bases this choice on the web_search flag set by evaluate_documents.

```python
def decide_to_generate(state):
    """
    Determines whether to generate an answer, or re-generate a question.

    Args:
        state (dict): The current graph state

    Returns:
        str: Binary decision for next node to call
    """
    print("---ASSESS GRADED DOCUMENTS---")
    web_search = state["web_search"]

    if web_search == "Yes":
        # Too few documents passed the relevance check,
        # so we re-write the query and search the web
        print("---DECISION: NOT ENOUGH RELEVANT DOCUMENTS, TRANSFORM QUERY---")
        return "transform_query"
    else:
        # We have relevant documents, so generate answer
        print("---DECISION: GENERATE---")
        return "generate"
```

Connect all the function nodes

With all the function nodes defined, we can link them in a LangGraph workflow to build the CRAG pipeline. The nodes are connected with edges that control how information and decisions flow, so each step runs based on the outcome of the previous one.
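Here is a toy executor in plain Python that shows what this wiring does at run time. It is not LangGraph. It only imitates the behavior this workflow relies on in the default case, without custom reducers:

- Each node returns a partial update whose keys overwrite the matching keys in the shared state.
- A fixed edge names the next node.
- A conditional edge calls a routing function and looks up its return value in a mapping.

`run_graph`, `TOY_END`, and the `toy_*` nodes are hypothetical. The nodes fake retrieval and grading so the example runs offline, and their names avoid clashing with the real nodes above.

```python
from typing import Any, Callable, Dict, List, Tuple

State = Dict[str, Any]
Node = Callable[[State], State]
Router = Callable[[State], str]
TOY_END = "toy_end"  # sentinel name chosen for this sketch


def run_graph(
    nodes: Dict[str, Node],
    edges: Dict[str, str],
    conditional: Dict[str, Tuple[Router, Dict[str, str]]],
    start: str,
    state: State,
    max_steps: int = 20,
) -> Tuple[State, List[str]]:
    """Run nodes from `start` until TOY_END, merging each node's partial update into the state."""
    current: str = start
    visited: List[str] = []
    for _ in range(max_steps):
        if current == TOY_END:
            return state, visited
        visited.append(current)
        # Keys returned by the node overwrite existing ones; all other keys are kept.
        state = {**state, **nodes[current](state)}
        if current in conditional:
            router, mapping = conditional[current]
            current = mapping[router(state)]
        else:
            current = edges[current]
    raise RuntimeError("graph did not reach TOY_END within max_steps")


# Toy nodes mirroring the CRAG workflow; no LLM or network calls.
def toy_retrieve(state: State) -> State:
    return {"documents": ["bagel method: inner and outer circles", "subscribe below"]}


def toy_evaluate(state: State) -> State:
    docs = state["documents"]
    kept = [d for d in docs if "bagel" in d]
    ratio = len(kept) / len(docs) if docs else 0.0
    return {"documents": kept, "web_search": "Yes" if ratio <= 0.7 else "No"}


def toy_transform_query(state: State) -> State:
    return {"question": state["question"] + " (rewritten)"}


def toy_web_search(state: State) -> State:
    return {"documents": state["documents"] + ["web result"]}


def toy_generate(state: State) -> State:
    return {"generation": f"answer from {len(state['documents'])} documents"}


def toy_decide(state: State) -> str:
    return "transform_query" if state["web_search"] == "Yes" else "generate"


final_state, path = run_graph(
    nodes={
        "retrieve": toy_retrieve,
        "evaluate_documents": toy_evaluate,
        "transform_query": toy_transform_query,
        "web_search_node": toy_web_search,
        "generate": toy_generate,
    },
    edges={
        "retrieve": "evaluate_documents",
        "transform_query": "web_search_node",
        "web_search_node": "generate",
        "generate": TOY_END,
    },
    conditional={
        "evaluate_documents": (
            toy_decide,
            {"transform_query": "transform_query", "generate": "generate"},
        ),
    },
    start="retrieve",
    state={"question": "what is the bagel method"},
)
print(path)
print(final_state["generation"])
```

Here only one of the two toy documents survives grading (ratio 0.5), so the run takes the rewrite-and-search branch before generating.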

```python
from langgraph.graph import END, START, StateGraph

workflow = StateGraph(GraphState)

# Define the nodes
workflow.add_node("retrieve", retrieve)  # retrieve
workflow.add_node("evaluate_documents", evaluate_documents)  # evaluate documents
workflow.add_node("generate", generate)  # generate
workflow.add_node("transform_query", transform_query)  # transform_query
workflow.add_node("web_search_node", web_search)  # web search

# Build graph
workflow.add_edge(START, "retrieve")
workflow.add_edge("retrieve", "evaluate_documents")
workflow.add_conditional_edges(
    "evaluate_documents",
    decide_to_generate,
    {
        "transform_query": "transform_query",
        "generate": "generate",
    },
)
workflow.add_edge("transform_query", "web_search_node")
workflow.add_edge("web_search_node", "generate")
workflow.add_edge("generate", END)

# Compile
app = workflow.compile()
```

```python
from IPython.display import Image, display

try:
    display(Image(app.get_graph(xray=True).draw_mermaid_png()))
except Exception:
    # This requires some extra dependencies and is optional
    pass
```

Step 8: Test the workflow

To test the setup, we run the workflow on sample queries and watch how it retrieves information, evaluates document relevance, and generates answers.

The first query checks whether CRAG can find the answer in its knowledge base.
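As the output below shows, each item that `app.stream` yields in this example is a dict mapping a node name to the update that node returned. The hypothetical helper `summarize_stream` relies only on that shape to collect the execution path and the final answer. It is exercised here on made-up stream items rather than a live run.

```python
from typing import Any, Dict, Iterable, List, Optional, Tuple


def summarize_stream(chunks: Iterable[Dict[str, Dict[str, Any]]]) -> Tuple[List[str], Optional[str]]:
    """Collect node names in execution order and the last 'generation' value seen."""
    path: List[str] = []
    generation: Optional[str] = None
    for chunk in chunks:
        for node_name, update in chunk.items():
            path.append(node_name)
            if "generation" in update:
                generation = update["generation"]
    return path, generation


# Hypothetical stream items shaped like the output below: {node_name: node_update}
fake_stream = [
    {"retrieve": {"question": "What's the bagel method?", "documents": []}},
    {"evaluate_documents": {"web_search": "No"}},
    {"generate": {"generation": "placeholder answer"}},
]
path, answer = summarize_stream(fake_stream)
print(path)
print(answer)
```

With the compiled graph, the same helper could be called as `summarize_stream(app.stream(inputs))`.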

```python
from pprint import pprint

# Run
inputs = {"question": "What's the bagel method?"}
for output in app.stream(inputs):
    for key, value in output.items():
        # Node
        pprint(f"Node '{key}':")
        # Optional: print what the node returned
        pprint(value, indent=2, width=80, depth=None)
    pprint("\n---\n")

# Final generation
pprint(value["generation"])
```

---RETRIEVE---

"Node 'retrieve':"

{ 'documents': [ Document(page_content="the book was called The Bagel Method.The Bagel Method is designed to help partners be on the same team when dealing with differences and trying to find a compromise.The idea behind the method is that, to truly compromise, we need to figure out a way to include both partners’ dreams and core needs; things that are super important to us that giving up on them is too much.Let’s dive into the bagel 😜🚀 If you are new here…Hi, I’m Ryan 👋 I am passionate about lifestyle gamification and I am obsesssssssss with learning things that can help me live a happy and fulfilling life.And so, with The Limitless Playbook newsletter, I will share with you 1 actionable idea from the world's top thinkers every Sunday So visit us weekly for highly actionable insights :)…or even better, subscribe below and have all these information send straight to your inbox every Sunday 🥳Subscribe🥯", metadata={'description': 'A collection of the best hidden gems, mental models, and frameworks from the world’s top thinkers; to help you become 1% better and live a happier life ❤️', 'language': 'en', 'source': 'https://ryanocm.substack.com/p/105-the-bagel-method-in-relationships', 'title': '#105 | The Bagel Method in Relationships 🥯'}),

Document(page_content='#105 | The Bagel Method in Relationships 🥯', metadata={'description': 'A collection of the best hidden gems, mental models, and frameworks from the world’s top thinkers; to help you become 1% better and live a happier life ❤️', 'language': 'en', 'source': 'https://ryanocm.substack.com/p/105-the-bagel-method-in-relationships', 'title': '#105 | The Bagel Method in Relationships 🥯'}),

Document(page_content='The Limitless Playbook 🧬SubscribeSign inShare this post#105 | The Bagel Method in Relationships 🥯ryanocm.substack.comCopy linkFacebookEmailNoteOther#105 | The Bagel Method in Relationships 🥯A collection of the best hidden gems, mental models, and frameworks from the world’s top thinkers; to help you become 1% better and live a happier life ❤️Ryan Ong Feb 25, 2024Share this post#105 | The Bagel Method in Relationships 🥯ryanocm.substack.comCopy linkFacebookEmailNoteOtherShareHello curious minds 🧠I recently finished the book Fight Right: How Successful Couples Turn Conflict into Connection and oh my days, I love every chapter of it!There were many repeating concepts but this time, it was applied in the context of conflicts in relationships. As usual with the Gottman’s books, I highlighted the hell out of the entire book 😄One of the cool exercises in', metadata={'description': 'A collection of the best hidden gems, mental models, and frameworks from the world’s top thinkers; to help you become 1% better and live a happier life ❤️', 'language': 'en', 'source': 'https://ryanocm.substack.com/p/105-the-bagel-method-in-relationships', 'title': '#105 | The Bagel Method in Relationships 🥯'}),

Document(page_content="The Bagel MethodThe Bagel Method involves mapping out your core needs and areas of flexibility so that you and your partner understand what's important and where there's room for flexibility.It’s called The Bagel Method because, just like a bagel, it has both the inner and outer circles representing your needs.Here are the steps:In the inner circle, list all the aspects of an issue that you can’t give in on. These are your non-negotiables that are usually very closely related to your core needs and dreams.In the outer circle, list all the aspects of an issue that you are able to compromise on IF you are able to have what’s in your inner circle.Now, talk to your partners about your inner and outer circle. Ask each other:Why are the things in your inner circle so important to you?How can I support your core needs here?Tell me more about your areas of flexibility. What does it look like to be flexible?Compare both your “bagel” of needsWhat do we agree on?What feelings do we have in common?What shared goals do we have?How might we accomplish these goals", metadata={'description': 'A collection of the best hidden gems, mental models, and frameworks from the world’s top thinkers; to help you become 1% better and live a happier life ❤️', 'language': 'en', 'source': 'https://ryanocm.substack.com/p/105-the-bagel-method-in-relationships', 'title': '#105 | The Bagel Method in Relationships 🥯'})],

'question': "What's the bagel method?"}

'\n---\n'

---CHECK DOCUMENT RELEVANCE TO QUESTION---

---GRADE: DOCUMENT RELEVANT---

---GRADE: DOCUMENT RELEVANT---

---GRADE: DOCUMENT NOT RELEVANT---

---GRADE: DOCUMENT RELEVANT---

---ASSESS GRADED DOCUMENTS---

---DECISION: GENERATE---

"Node 'evaluate_documents':"

{ 'documents': [ Document(page_content="the book was called The Bagel Method.The Bagel Method is designed to help partners be on the same team when dealing with differences and trying to find a compromise.The idea behind the method is that, to truly compromise, we need to figure out a way to include both partners’ dreams and core needs; things that are super important to us that giving up on them is too much.Let’s dive into the bagel 😜🚀 If you are new here…Hi, I’m Ryan 👋 I am passionate about lifestyle gamification and I am obsesssssssss with learning things that can help me live a happy and fulfilling life.And so, with The Limitless Playbook newsletter, I will share with you 1 actionable idea from the world's top thinkers every Sunday So visit us weekly for highly actionable insights :)…or even better, subscribe below and have all these information send straight to your inbox every Sunday 🥳Subscribe🥯", metadata={'description': 'A collection of the best hidden gems, mental models, and frameworks from the world’s top thinkers; to help you become 1% better and live a happier life ❤️', 'language': 'en', 'source': 'https://ryanocm.substack.com/p/105-the-bagel-method-in-relationships', 'title': '#105 | The Bagel Method in Relationships 🥯'}),

Document(page_content='#105 | The Bagel Method in Relationships 🥯', metadata={'description': 'A collection of the best hidden gems, mental models, and frameworks from the world’s top thinkers; to help you become 1% better and live a happier life ❤️', 'language': 'en', 'source': 'https://ryanocm.substack.com/p/105-the-bagel-method-in-relationships', 'title': '#105 | The Bagel Method in Relationships 🥯'}),

Document(page_content="The Bagel MethodThe Bagel Method involves mapping out your core needs and areas of flexibility so that you and your partner understand what's important and where there's room for flexibility.It’s called The Bagel Method because, just like a bagel, it has both the inner and outer circles representing your needs.Here are the steps:In the inner circle, list all the aspects of an issue that you can’t give in on. These are your non-negotiables that are usually very closely related to your core needs and dreams.In the outer circle, list all the aspects of an issue that you are able to compromise on IF you are able to have what’s in your inner circle.Now, talk to your partners about your inner and outer circle. Ask each other:Why are the things in your inner circle so important to you?How can I support your core needs here?Tell me more about your areas of flexibility. What does it look like to be flexible?Compare both your “bagel” of needsWhat do we agree on?What feelings do we have in common?What shared goals do we have?How might we accomplish these goals", metadata={'description': 'A collection of the best hidden gems, mental models, and frameworks from the world’s top thinkers; to help you become 1% better and live a happier life ❤️', 'language': 'en', 'source': 'https://ryanocm.substack.com/p/105-the-bagel-method-in-relationships', 'title': '#105 | The Bagel Method in Relationships 🥯'})],

'question': "What's the bagel method?",

'web_search': 'No'}

'\n---\n'

---GENERATE---

"Node 'generate':"

{ 'documents': [ Document(page_content="the book was called The Bagel Method.The Bagel Method is designed to help partners be on the same team when dealing with differences and trying to find a compromise.The idea behind the method is that, to truly compromise, we need to figure out a way to include both partners’ dreams and core needs; things that are super important to us that giving up on them is too much.Let’s dive into the bagel 😜🚀 If you are new here…Hi, I’m Ryan 👋 I am passionate about lifestyle gamification and I am obsesssssssss with learning things that can help me live a happy and fulfilling life.And so, with The Limitless Playbook newsletter, I will share with you 1 actionable idea from the world's top thinkers every Sunday So visit us weekly for highly actionable insights :)…or even better, subscribe below and have all these information send straight to your inbox every Sunday 🥳Subscribe🥯", metadata={'description': 'A collection of the best hidden gems, mental models, and frameworks from the world’s top thinkers; to help you become 1% better and live a happier life ❤️', 'language': 'en', 'source': 'https://ryanocm.substack.com/p/105-the-bagel-method-in-relationships', 'title': '#105 | The Bagel Method in Relationships 🥯'}),

Document(page_content='#105 | The Bagel Method in Relationships 🥯', metadata={'description': 'A collection of the best hidden gems, mental models, and frameworks from the world’s top thinkers; to help you become 1% better and live a happier life', 'language': 'en', 'source': 'https://ryanocm.substack.com/p/105-the-bagel-method-in-relationships', 'title': '#105 | The Bagel Method in Relationships 🥯'}),

Document(page_content="The Bagel MethodThe Bagel Method involves mapping out your core needs and areas of flexibility so that you and your partner understand what's important and where there's room for flexibility.It’s called The Bagel Method because, just like a bagel, it has both the inner and outer circles representing your needs.Here are the steps:In the inner circle, list all the aspects of an issue that you can’t give in on. These are your non-negotiables that are usually very closely related to your core needs and dreams.In the outer circle, list all the aspects of an issue that you are able to compromise on IF you are able to have what’s in your inner circle.Now, talk to your partners about your inner and outer circle. Ask each other:Why are the things in your inner circle so important to you?How can I support your core needs here?Tell me more about your areas of flexibility. What does it look like to be flexible?Compare both your “bagel” of needsWhat do we agree on?What feelings do we have in common?What shared goals do we have?How might we accomplish these goals", metadata={'description': 'A collection of the best hidden gems, mental models, and frameworks from the world’s top thinkers; to help you become 1% better and live a happier life', 'language': 'en', 'source': 'https://ryanocm.substack.com/p/105-the-bagel-method-in-relationships', 'title': '#105 | The Bagel Method in Relationships 🥯'})],

'generation': 'The Bagel Method is a framework designed to help partners '

'navigate differences and find compromises by mapping out '

'their core needs and areas of flexibility. It involves '

'creating two circles: the inner circle for non-negotiable '

'needs and the outer circle for aspects where compromise is '

'possible. This method encourages open communication about '

"each partner's priorities and shared goals.",

'question': "What's the bagel method?"}

'\n---\n'

('The Bagel Method is a framework designed to help partners navigate '

'differences and find compromises by mapping out their core needs and areas '

'of flexibility. It involves creating two circles: the inner circle for '

'non-negotiable needs and the outer circle for aspects where compromise is '

"possible. This method encourages open communication about each partner's "

'priorities and shared goals.')

The second query tests CRAG's ability to search the web for additional information when the knowledge base does not contain the relevant documents.
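Both runs depend on the routing decision made right after grading. The first run kept its Bagel Method documents and went straight to `generate`. Here, every retrieved document will be graded irrelevant, so `web_search` is set to `"Yes"` and the query is rewritten before the graph searches the web.

The following is a minimal, framework-free sketch of that routing in plain Python. It is not the article's LangGraph code, and it rests on these assumptions:

- `GraphState` only mirrors the keys visible in the logs.
- `keyword_grader` is a hypothetical stand-in for the LLM grader.
- Web search fires only when no document survives grading. This rule is inferred from the `ALL DOCUMENTS ARE NOT RELEVANT` decision message.

```python
from typing import Callable, List, TypedDict


class GraphState(TypedDict, total=False):
    # Mirrors the state keys printed in the logs (assumed, not the article's definition)
    question: str
    documents: List[str]
    web_search: str
    generation: str


def evaluate_documents(state: GraphState, grade: Callable[[str, str], bool]) -> GraphState:
    """Keep only the documents the grader marks as relevant."""
    question = state["question"]
    relevant = [doc for doc in state["documents"] if grade(question, doc)]
    # Assumption: web search is flagged only when no document survives grading
    return {"question": question, "documents": relevant, "web_search": "No" if relevant else "Yes"}


def decide_to_generate(state: GraphState) -> str:
    """Return the next node's name, as a conditional edge's routing function would."""
    return "transform_query" if state["web_search"] == "Yes" else "generate"


def keyword_grader(question: str, doc: str) -> bool:
    """Hypothetical grader: relevant if any question word longer than 4 chars appears in the doc."""
    words = [w.strip("?.,").lower() for w in question.split() if len(w) > 4]
    return any(w in doc.lower() for w in words)


state: GraphState = {
    "question": "What is prompt engineering?",
    "documents": [
        "Fill your Five Buckets in the Right Order",
        "This site requires JavaScript to run correctly.",
    ],
}
graded = evaluate_documents(state, keyword_grader)
print(graded["web_search"], decide_to_generate(graded))  # prints: Yes transform_query
```

In a LangGraph version, a function like `decide_to_generate` would be the routing function attached to a conditional edge. Its return value names the next node to run.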

```python
from pprint import pprint

# Run (app is the compiled CRAG graph built earlier in this article)
inputs = {"question": "What is prompt engineering?"}
for output in app.stream(inputs):
    for key, value in output.items():
        # Node
        pprint(f"Node '{key}':")
        # Optional: print full state at each node
        pprint(value, indent=2, width=80, depth=None)
    pprint("\n---\n")

# Final generation
pprint(value["generation"])
```

---RETRIEVE---

"Node 'retrieve':"

{ 'documents': [ Document(page_content='Mystery Gift Box #049 | Law 1: Fill your Five Buckets in the Right Order (The Diary of a CEO)', metadata={'description': "The best hidden gems I've found; interesting ideas and concepts, thought-provoking questions, mind-blowing books/podcasts, cool animes/films, and other mysteries ❤️", 'language': 'en', 'source': 'https://ryanocm.substack.com/p/mystery-gift-box-049-law-1-fill-your', 'title': 'Mystery Gift Box #049 | Law 1: Fill your Five Buckets in the Right Order (The Diary of a CEO)'}),

Document(page_content='🐦\xa0Twitter, 👨🏻‍💻\xa0LinkedIn, 🌐\xa0Personal Website, and 📸\xa0InstagramShare this postMystery Gift Box #049 | Law 1: Fill your Five Buckets in the Right Order (The Diary of a CEO)ryanocm.substack.comCopy linkFacebookEmailNoteOtherSharePreviousNextCommentsTopLatestDiscussionsNo postsReady for more?Subscribe© 2024 Ryan Ong Privacy ∙ Terms ∙ Collection notice Start WritingGet the appSubstack is the home for great cultureShareCopy linkFacebookEmailNoteOther', metadata={'description': "The best hidden gems I've found; interesting ideas and concepts, thought-provoking questions, mind-blowing books/podcasts, cool animes/films, and other mysteries ❤️", 'language': 'en', 'source': 'https://ryanocm.substack.com/p/mystery-gift-box-049-law-1-fill-your', 'title': 'Mystery Gift Box #049 | Law 1: Fill your Five Buckets in the Right Order (The Diary of a CEO)'}),

Document(page_content='skills are the foundation of which you build your life and career and it’s truly yours to own; you can lose your network, resources, and reputation but you will never lose your knowledge and skills.Never try to skip the first two buckets. If you try to jump straight to network, resources, and / or reputation bucket, you might “succeed” in the short-run but in the long run, your lack of knowledge and skill will catch on to you.There is no skipping the first two buckets of knowledge and skills if you’re playing long-term sustainable results. Any attempt to do so is equivalent to building your house on sand.💥 Key takeawayFocus on using your knowledge and skills to create lots of values in the world and the world will reward you with growing network (people will come to you), resources (people will pay for your services), and reputation (people will know what you are capable of).⛰ 4-4-4 Exploration ProjectEach month, I would explore one new thing; a skill, a subject, or an experience.January 2023: Writing and Storytelling (Subject)', metadata={'description': "The best hidden gems I've found; interesting ideas and concepts, thought-provoking questions, mind-blowing books/podcasts, cool animes/films, and other mysteries ❤️", 'language': 'en', 'source': 'https://ryanocm.substack.com/p/mystery-gift-box-049-law-1-fill-your', 'title': 'Mystery Gift Box #049 | Law 1: Fill your Five Buckets in the Right Order (The Diary of a CEO)'}),

Document(page_content='This site requires JavaScript to run correctly. Please turn on JavaScript or unblock scripts', metadata={'description': 'A collection of the best hidden gems, mental models, and frameworks from the world’s top thinkers; to help you become 1% better and live a happier life', 'language': 'en', 'source': 'https://ryanocm.substack.com/p/105-the-bagel-method-in-relationships', 'title': '#105 | The Bagel Method in Relationships 🥯'})],

'question': 'What is prompt engineering?'}

'\n---\n'

---CHECK DOCUMENT RELEVANCE TO QUESTION---

---GRADE: DOCUMENT NOT RELEVANT---

---GRADE: DOCUMENT NOT RELEVANT---

---GRADE: DOCUMENT NOT RELEVANT---

---GRADE: DOCUMENT NOT RELEVANT---

---ASSESS GRADED DOCUMENTS---

---DECISION: ALL DOCUMENTS ARE NOT RELEVANT TO QUESTION, TRANSFORM QUERY---

"Node 'evaluate_documents':"

{ 'documents': [],

'question': 'What is prompt engineering?',

'web_search': 'Yes'}

'\n---\n'

---TRANSFORM QUERY---

"Node 'transform_query':"

{ 'documents': [],

'question': 'What is the concept of prompt engineering and how is it applied '

'in artificial intelligence?'}

'\n---\n'

---WEB SEARCH---

"Node 'web_search_node':"

{ 'documents': [ Document(page_content="Prompt engineering is the method to ask generative AI to produce what the individual needs. There are two main principles of building a successful prompt for any AI, specificity and iteration. The box below includes one example of a framework that can be applied when prompting any generative AI.\nPrompt Engineering is the process of designing and refining text inputs (prompts) to achieve specific application objectives with AI models. Think of it as a two-step journey: Designing the Initial Prompt: Creating the initial input for the model to achieve the desired result. Refining the Prompt: Continuously adjusting the prompt to enhance ...\nPrompt engineering is the process of designing and refining the inputs given to language models, like those in AI, to achieve desired outputs more effectively. It involves creatively crafting prompts that guide the model in generating responses that are accurate, relevant, and aligned with the user's intentions.. For students and researchers in higher education, mastering prompt engineering is ...\nJune 25, 2024. Prompt engineering means writing precise instructions for AI models. These instructions are different from coding because they use natural language. And today, everybody does it—from software developers to artists and content creators. Prompt engineering can help you improve productivity and save time by automating repetitive ...\nMaster Prompt Engineering - The (AI) Prompt\nAI Takes Wall Street by Storm: C3.ai's Strong Forecast Sparks a Surge in AI Stocks\nAsk Me Anything (AMA) Prompting\nHow Self-Critique Improves Logic and Reasoning in LLMs Like ChatGPT\nOptimizing Large Language Models to Maximize Performance\nThe Black Box Problem: Opaque Inner Workings of Large Language Models\nHow to Evaluate Large Language Models for Business Tasks\nIntroduction to the AI Prompt Development Process\nSubscribe to new posts\nThe Official Source For Everything Prompt Engineering & Generative AI Defining Prompt Engineering\nGiven that the prompt is the singular input channel to large language models, prompt engineering can be defined as:\nPrompt Engineering can be thought of as any process that contributes to the development of a well-crafted prompt to generate quality, useful outputs from an AI system.\n A Simplified Approach to Defining Prompt Engineering\nThe Prompt is the Sole Input\nWhen interacting with Generative AI Models such as large language models (LLMs), the prompt is the only thing that gets input into the AI system. Its applications cut across diverse sectors, from healthcare and education to business, securing its place as a cornerstone of our interactions with AI.\nExploration of Essential Prompt Engineering Techniques and Concepts\nIn the rapidly evolving landscape of Artificial Intelligence (AI), mastering key techniques of Prompt Engineering has become increasingly vital. The key concepts of Prompt Engineering include prompts and prompting the AI, training the AI, developing and maintaining a prompt library, and testing, evaluation, and categorization.\n")],

'question': 'What is the concept of prompt engineering and how is it applied '

'in artificial intelligence?'}

'\n---\n'

---GENERATE---

"Node 'generate':"

{ 'documents': [ Document(page_content="Prompt engineering is the method to ask generative AI to produce what the individual needs. There are two main principles of building a successful prompt for any AI, specificity and iteration. The box below includes one example of a framework that can be applied when prompting any generative AI.\nPrompt Engineering is the process of designing and refining text inputs (prompts) to achieve specific application objectives with AI models. Think of it as a two-step journey: Designing the Initial Prompt: Creating the initial input for the model to achieve the desired result. Refining the Prompt: Continuously adjusting the prompt to enhance ...\nPrompt engineering is the process of designing and refining the inputs given to language models, like those in AI, to achieve desired outputs more effectively. It involves creatively crafting prompts that guide the model in generating responses that are accurate, relevant, and aligned with the user's intentions.. For students and researchers in higher education, mastering prompt engineering is ...\nJune 25, 2024. Prompt engineering means writing precise instructions for AI models. These instructions are different from coding because they use natural language. And today, everybody does it—from software developers to artists and content creators. Prompt engineering can help you improve productivity and save time by automating repetitive ...\nMaster Prompt Engineering - The (AI) Prompt\nAI Takes Wall Street by Storm: C3.ai's Strong Forecast Sparks a Surge in AI Stocks\nAsk Me Anything (AMA) Prompting\nHow Self-Critique Improves Logic and Reasoning in LLMs Like ChatGPT\nOptimizing Large Language Models to Maximize Performance\nThe Black Box Problem: Opaque Inner Workings of Large Language Models\nHow to Evaluate Large Language Models for Business Tasks\nIntroduction to the AI Prompt Development Process\nSubscribe to new posts\nThe Official Source For Everything Prompt Engineering & Generative AI Defining Prompt Engineering\nGiven that the prompt is the singular input channel to large language models, prompt engineering can be defined as:\nPrompt Engineering can be thought of as any process that contributes to the development of a well-crafted prompt to generate quality, useful outputs from an AI system.\n A Simplified Approach to Defining Prompt Engineering\nThe Prompt is the Sole Input\nWhen interacting with Generative AI Models such as large language models (LLMs), the prompt is the only thing that gets input into the AI system. Its applications cut across diverse sectors, from healthcare and education to business, securing its place as a cornerstone of our interactions with AI.\nExploration of Essential Prompt Engineering Techniques and Concepts\nIn the rapidly evolving landscape of Artificial Intelligence (AI), mastering key techniques of Prompt Engineering has become increasingly vital. The key concepts of Prompt Engineering include prompts and prompting the AI, training the AI, developing and maintaining a prompt library, and testing, evaluation, and categorization.\n")],

'generation': 'Prompt engineering is the process of designing and refining '

'text inputs to guide AI models in generating desired outputs. '

'It focuses on specificity and iteration to create effective '

'prompts that align with user intentions. This technique is '

'widely applicable across various sectors, enhancing '

'productivity and automating tasks.',

'question': 'What is the concept of prompt engineering and how is it applied '

'in artificial intelligence?'}

'\n---\n'

('Prompt engineering is the process of designing and refining text inputs to '

'guide AI models in generating desired outputs. It focuses on specificity and '

'iteration to create effective prompts that align with user intentions. This '

'technique is widely applicable across various sectors, enhancing '

'productivity and automating tasks.')

The limitations of CRAG

Although CRAG improves on traditional RAG, it has some limitations that deserve attention.

One of the main issues is CRAG's dependence on the quality of the retrieval evaluator. This evaluator decides whether the retrieved documents are relevant and accurate. However, training and fine-tuning the evaluator can be demanding, since it requires large amounts of high-quality data and significant computing power. Keeping the evaluator up to date with new types of queries and data sources adds further complexity and cost.
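The paper turns the evaluator's scores into an action using an upper and a lower threshold. With those two thresholds, the three confidence levels described earlier reduce to a few comparisons. A small shift in the evaluator's calibration can therefore flip the action it triggers.

Below is a minimal sketch, assuming scores in [0, 1]. The threshold values `upper=0.6` and `lower=0.2` are hypothetical and are not taken from the paper.

```python
from enum import Enum
from typing import Sequence


class Action(Enum):
    CORRECT = "correct"      # refine the retrieved documents
    INCORRECT = "incorrect"  # discard them and search the web instead
    AMBIGUOUS = "ambiguous"  # combine refined documents with web results


def trigger_action(scores: Sequence[float], upper: float = 0.6, lower: float = 0.2) -> Action:
    """Map per-document evaluator scores to one of CRAG's three actions.

    The default thresholds are illustrative assumptions, not the paper's values.
    """
    # Correct: at least one document scores above the upper threshold
    if any(score > upper for score in scores):
        return Action.CORRECT
    # Incorrect: every document scores below the lower threshold
    if all(score < lower for score in scores):
        return Action.INCORRECT
    # Ambiguous: everything in between
    return Action.AMBIGUOUS


# The same two documents, scored by two slightly differently calibrated evaluators
print(trigger_action([0.65, 0.10]).name)  # CORRECT
print(trigger_action([0.55, 0.10]).name)  # AMBIGUOUS
```

A 0.1 drop in a single score is enough to move the pipeline from trusting the retrieved documents to also querying the web. This is why the evaluator's quality matters so much.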

Another limitation is CRAG's use of web search to find or replace incorrect or ambiguous documents. While this approach can provide more up-to-date and diverse information, it also risks introducing biased or unreliable data. The quality of web content varies widely, and ranking that content to surface the most accurate information can be challenging. As a result, even a well-trained evaluator cannot completely prevent low-quality or biased information from being included.
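The CRAG paper describes running web results through the same decompose-then-recompose knowledge refinement used for retrieved documents. This drops irrelevant fragments at sentence level, such as the headline list in the web search result above.

The sketch below illustrates that idea under these assumptions:

- A naive regex sentence splitter and a hypothetical word-overlap scorer stand in for the paper's trained evaluator.
- The input text is adapted from the log above.

The sketch also shows why the limitation remains: a sentence that is on-topic but wrong or biased would pass the filter just as easily.

```python
import re
from typing import Callable


def refine(document: str, question: str,
           score: Callable[[str, str], float], threshold: float = 0.5) -> str:
    """Decompose a document into sentence strips, filter them, and recompose the rest."""
    # Decompose: naive split after ., ! or ? followed by whitespace
    strips = [s.strip() for s in re.split(r"(?<=[.!?])\s+", document) if s.strip()]
    # Filter: keep strips whose relevance score reaches the threshold
    kept = [s for s in strips if score(question, s) >= threshold]
    # Recompose: join the surviving strips in their original order
    return " ".join(kept)


def overlap_score(question: str, strip: str) -> float:
    """Hypothetical scorer: fraction of question words (longer than 3 chars) found in the strip."""
    words = {w.strip("?.,").lower() for w in question.split() if len(w) > 3}
    if not words:
        return 0.0
    return sum(w in strip.lower() for w in words) / len(words)


web_text = (
    "Prompt engineering means writing precise instructions for AI models. "
    "AI Takes Wall Street by Storm: C3.ai's Strong Forecast Sparks a Surge in AI Stocks. "
    "Subscribe to new posts."
)
print(refine(web_text, "What is prompt engineering?", overlap_score))
# Prompt engineering means writing precise instructions for AI models.
```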

These challenges show that CRAG still needs further research and development.

Conclusion

Overall, CRAG improves on traditional RAG systems by adding steps that check and refine the retrieved information, making language models more accurate and reliable. This makes CRAG a useful tool for many different applications.

To learn more about CRAG, read the original paper by Shi-Qi Yan et al. (2024).

If you're looking for more learning resources on RAG, I recommend these blogs:


Ryan is a lead data scientist specializing in building AI applications with LLMs. He is a PhD candidate in Natural Language Processing and Knowledge Graphs at Imperial College London, where he also completed his Master's degree in Computer Science. Outside of data science, he writes a weekly Substack newsletter, The Limitless Playbook, where he shares one actionable idea from the world's top thinkers and occasionally writes about core AI concepts.