
Imagine you are managing terabytes of customer transaction data, and your existing system is buckling under the pressure.

You need a solution that scales on demand, optimizes costs, and integrates with your existing AWS setup. Amazon Elastic MapReduce (EMR) can help with this. When I first started working with big data, I faced the same challenge—until I discovered EMR.

In this guide, we will go through everything from setting up an EMR cluster to executing workloads, optimizing performance, ensuring security, troubleshooting issues, and managing costs.

What is Amazon EMR?

Amazon EMR is a fully managed cluster-based service that simplifies big data processing by providing automated provisioning, scaling, and configuration of open-source frameworks.

It allows you to analyze vast amounts of structured and unstructured data without the hassle of manually managing on-premises clusters.

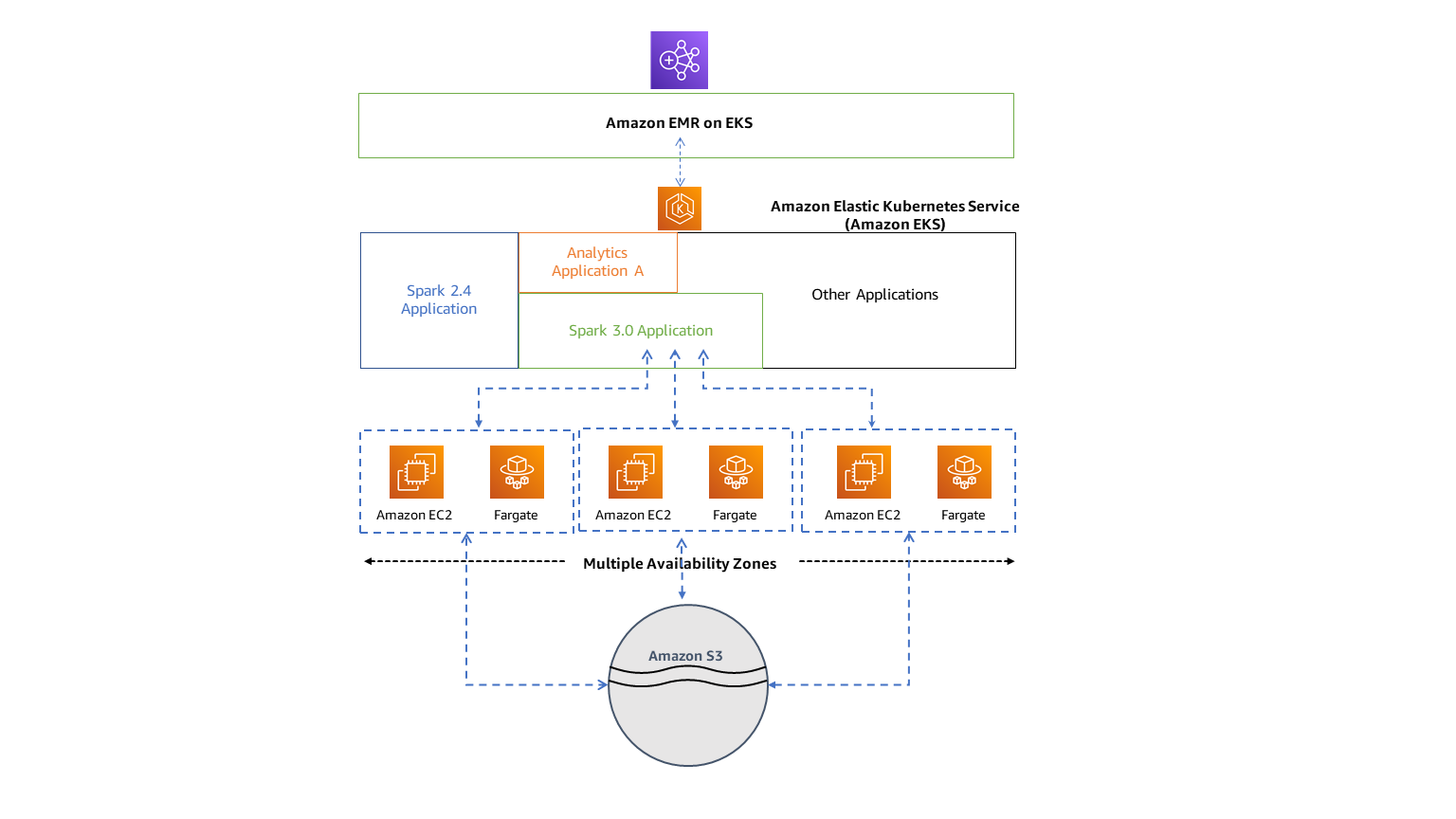

The image below shows how Amazon EMR on EKS works with other AWS services, providing a visual representation of its integration and workflow.

A diagram illustrating how Amazon EMR integrates with Amazon EKS and other AWS services for big data processing. Source: AWS Docs

If you are new to AWS, I recommend checking out this Introduction to AWS course to build foundational knowledge.

Some of the key features of Amazon EMR include:

- Scalability: EMR allows you to dynamically add or remove instances based on workload requirements.

- Cost efficiency: Leveraging Spot Instances and auto-scaling allows you to optimize your compute costs.

- Integration with AWS services: EMR integrates seamlessly with services like Amazon S3 (for data storage), AWS Lambda (for serverless computing), Amazon RDS (for relational databases), and Amazon CloudWatch (for monitoring).

- Support for popular frameworks: EMR supports Apache Spark, Hadoop, Hive, Pig, Presto, and more, which enables you to work with familiar big data tools.

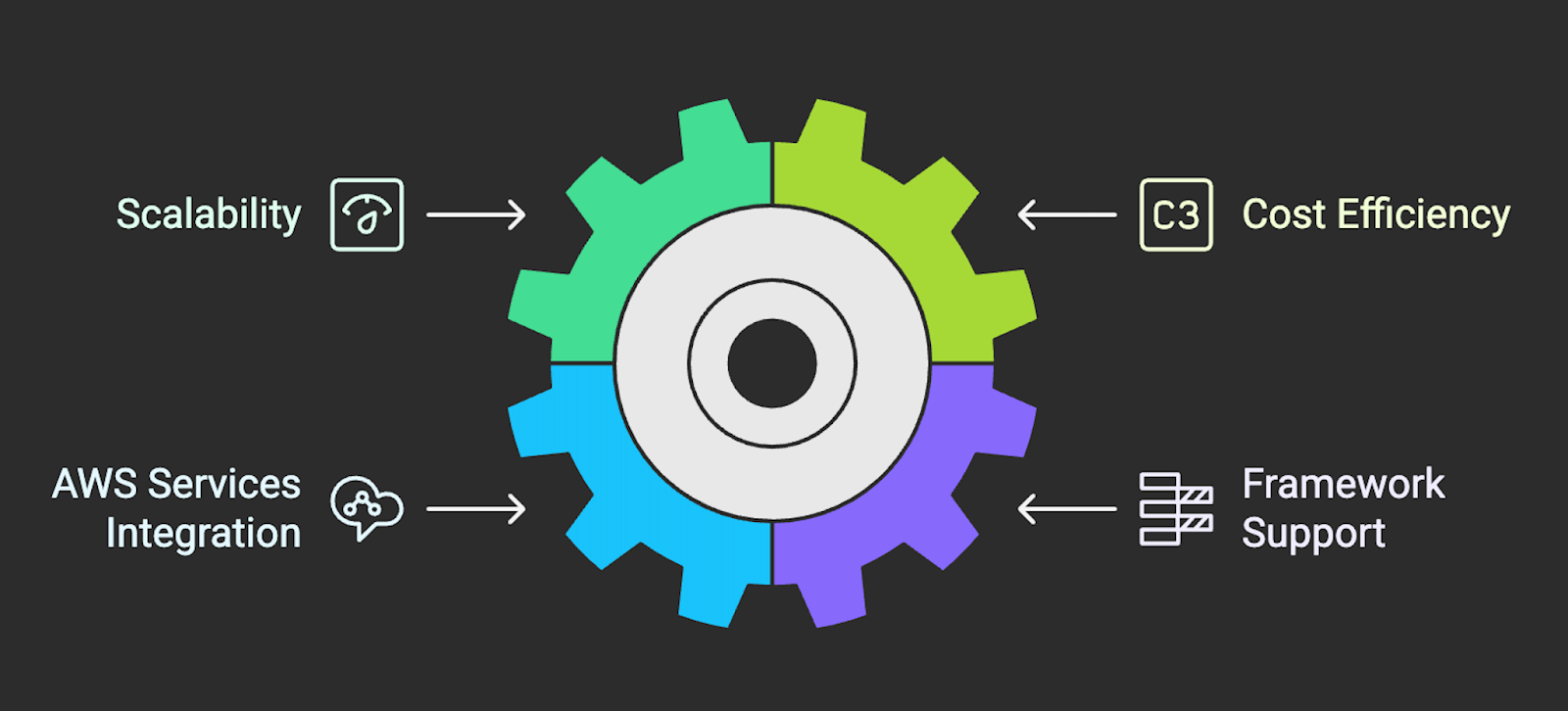

Diagram listing the key features of Amazon EMR. Image created using Napkin AI


Automating cluster management and scaling allows you to reduce operational complexity and focus on data processing and analytics.

If you want to better understand AWS storage services like Amazon S3 before proceeding with Amazon EMR, check out this AWS Storage Tutorial.

Setting Up an Amazon EMR Cluster

To set up an Amazon EMR cluster, you will need to access the EMR service, create the cluster, and configure it to suit your workload.

Creating an EMR cluster

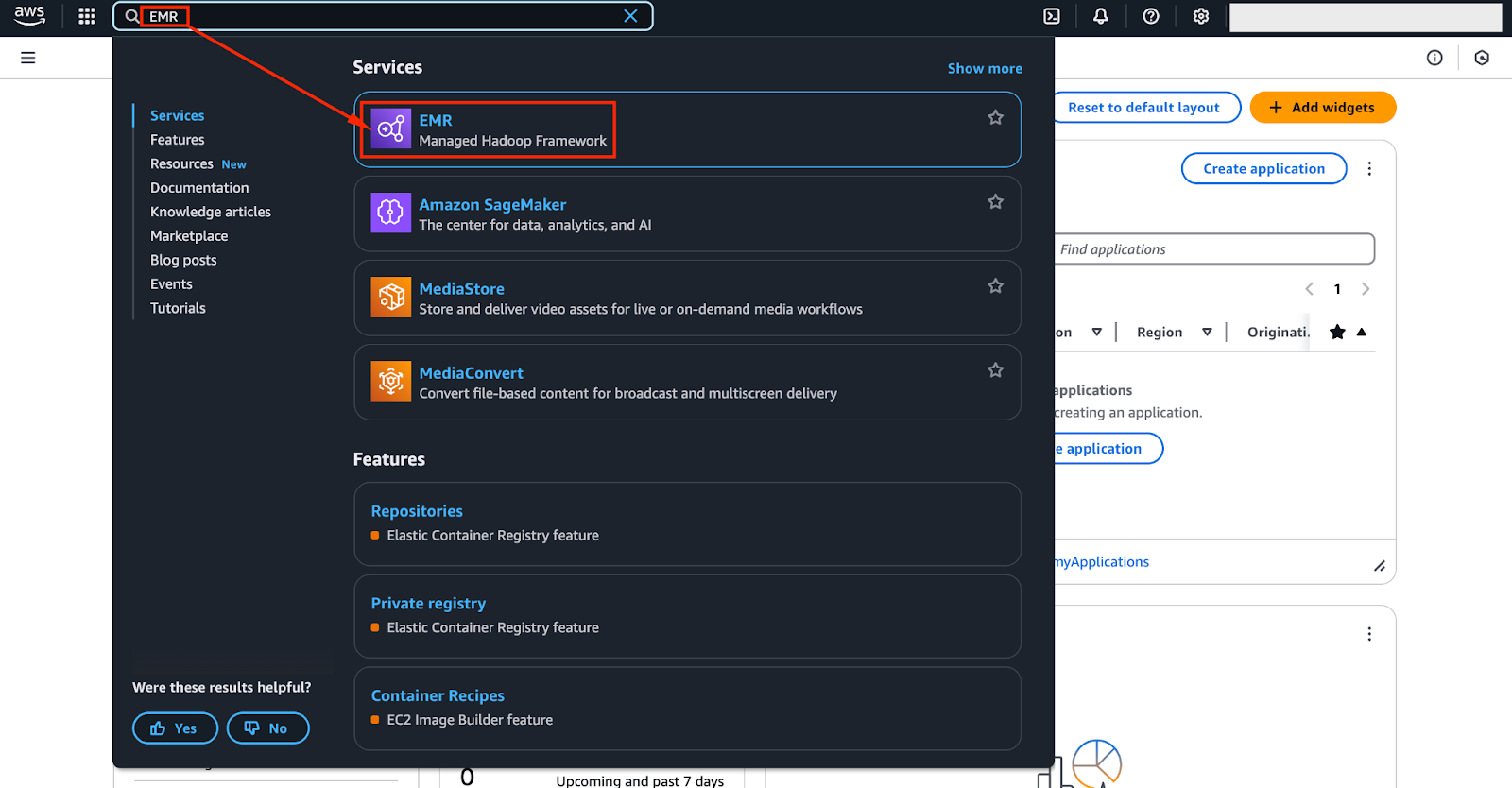

To get started, log in to the AWS Management Console and navigate to the EMR service.

You can do this by searching for "EMR" in the AWS Management Console’s search panel - as shown in the image below.

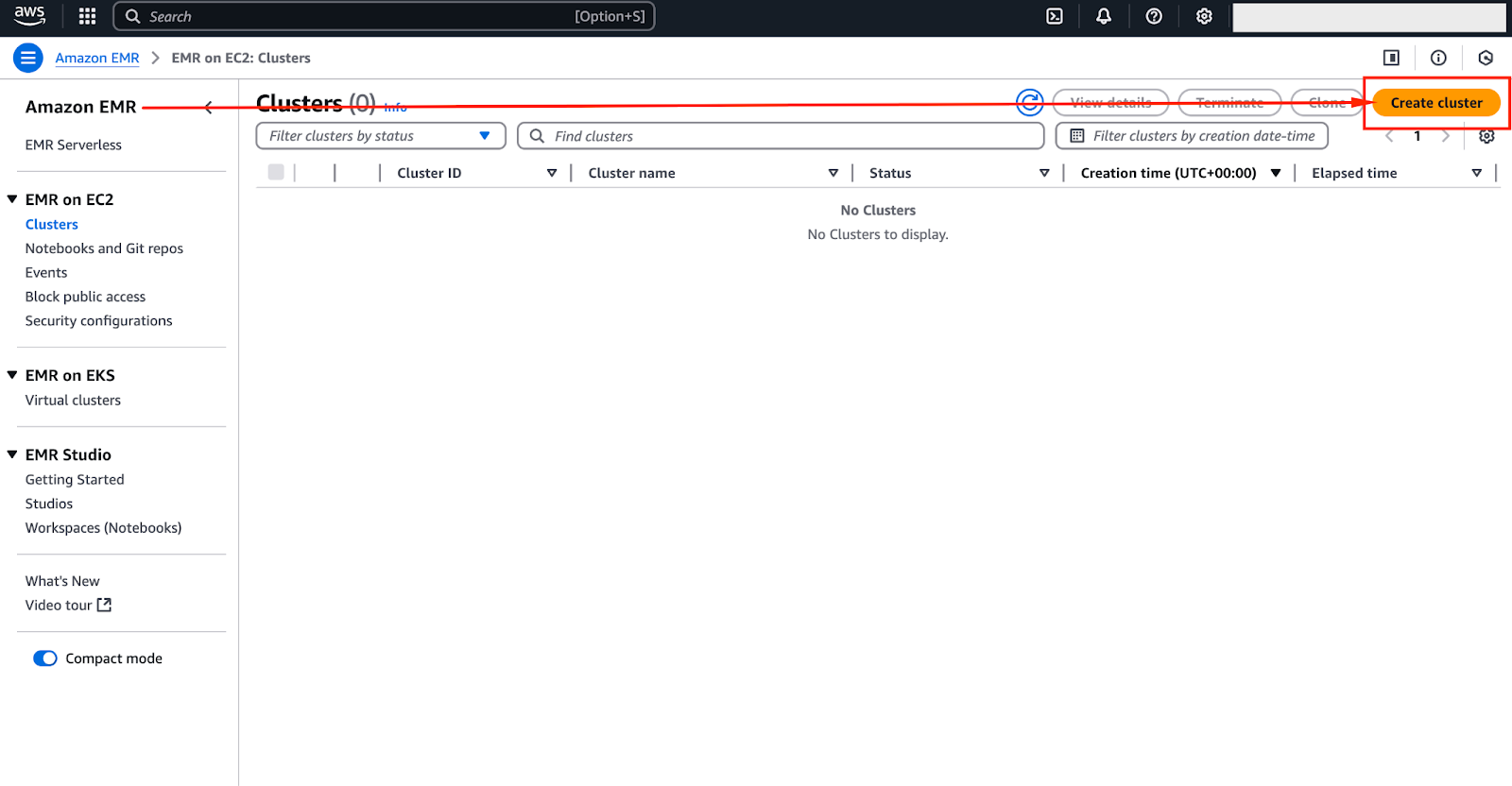

Once you are there, click on “Create Cluster” - as shown in the image below.

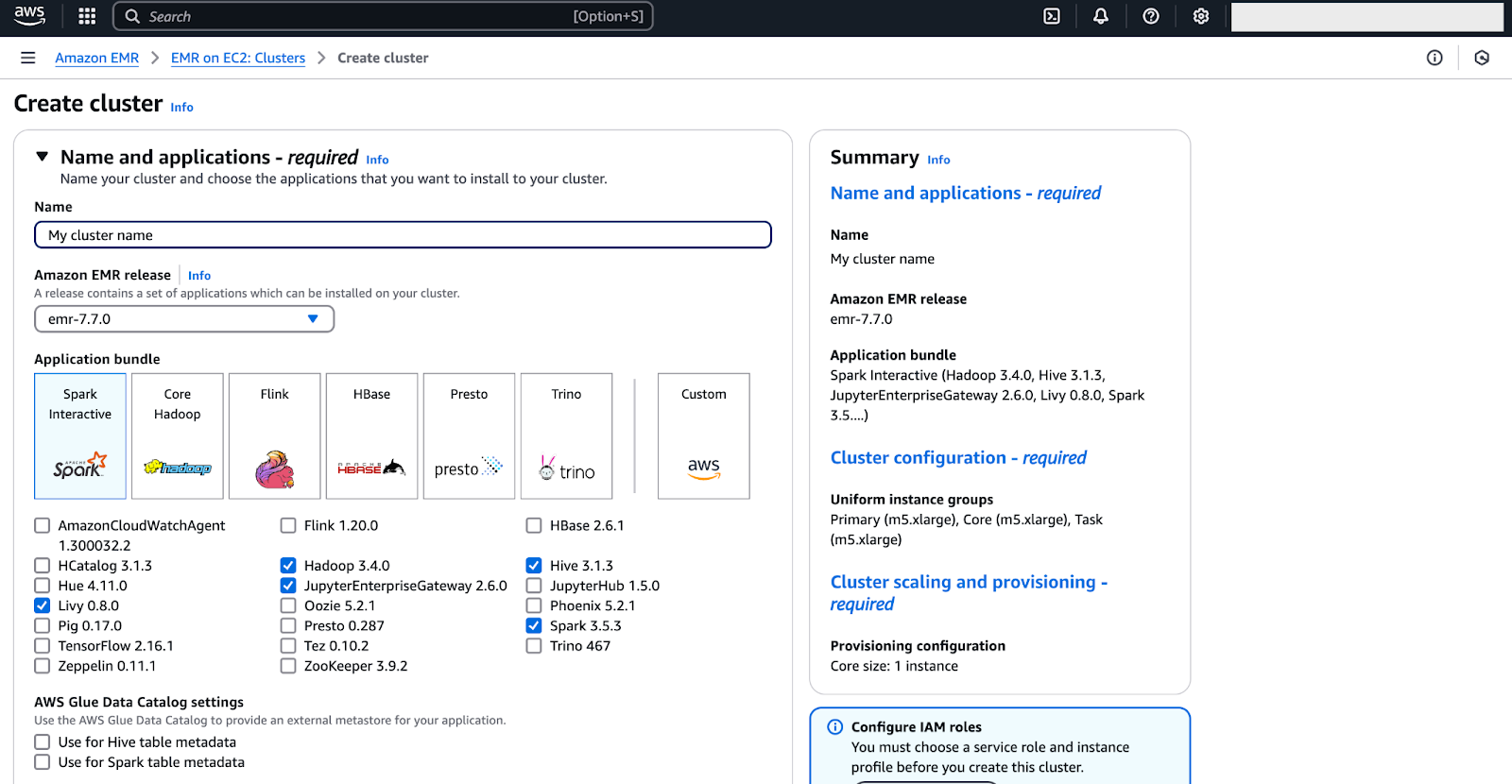

The image below provides a screenshot of the current EMR interface—while it may evolve over time, the core settings will remain similar.

To configure your Amazon EMR cluster, you will need to adjust several settings to align with your specific workload requirements. Follow the instructions below:

01: Select a big data framework

EMR provides multiple frameworks, such as Apache Spark for in-memory processing, Hadoop for distributed storage and processing, and Presto for interactive SQL queries.

Choose the framework that best suits your use case.

02: Choose an instance type

The instance type impacts performance and cost. A common choice is m5.xlarge, as it offers a balance between computing power and affordability.

For memory-intensive workloads, use r5.xlarge, while c5.xlarge is better suited for compute-intensive tasks.

03: Configure cluster nodes

EMR clusters consist of three types of nodes:

- Master node: This manages the cluster and coordinates processing jobs. (Typically, a single instance).

- Core nodes: These perform data processing and store HDFS data. (Minimum one, and they are scalable as needed).

- Task nodes: These are optional nodes that handle additional workloads without storing data. The number of core and task nodes should be chosen based on the workload size.

04: Configure security and access

Set up EC2 key pairs for SSH access and configure IAM roles to control permissions.

Consider enabling Kerberos authentication or integrating with AWS Lake Formation for enhanced security.

05: Set up networking and storage

Define VPC settings, enable auto-scaling to dynamically adjust cluster size, and specify Amazon S3 as the primary storage location (s3://your-bucket-name/).

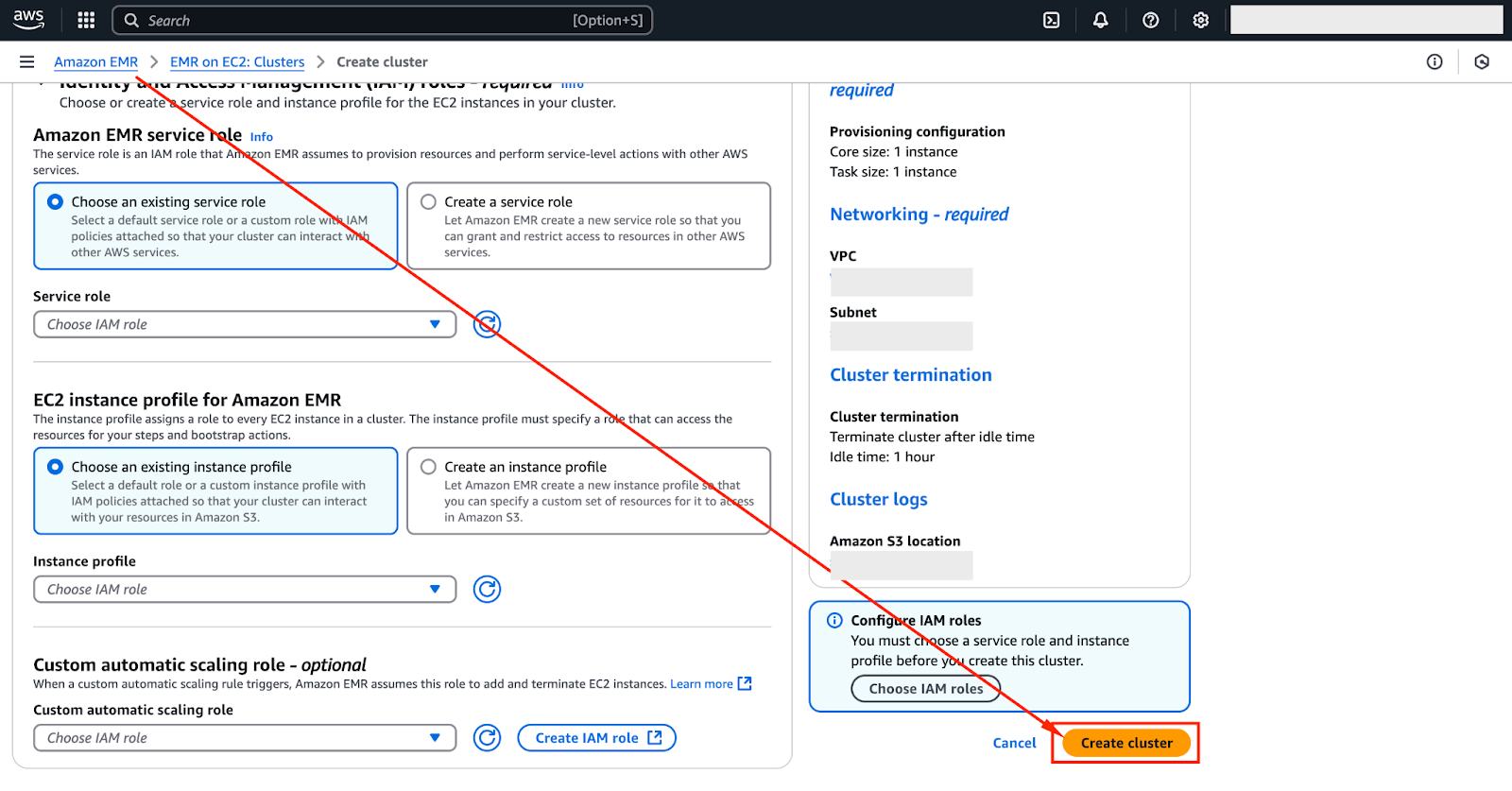

06: Launch the cluster

After reviewing the configuration, click "Create Cluster" to launch it.

The cluster will take a few minutes to initialize before becoming available.

The image below highlights the interface where you can create the cluster.
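The console steps above can also be written down as a request payload. The sketch below builds a dictionary in the shape that boto3's `run_job_flow` call accepts. The cluster name, release label, key pair name, bucket, and instance counts are placeholder assumptions, and the role names assume the default EMR roles already exist in your account.

```python
import json


def build_cluster_request(bucket: str, key_name: str, core_count: int = 2) -> dict:
    """Return run_job_flow-style parameters for a small Spark cluster."""
    return {
        "Name": "example-spark-cluster",   # hypothetical cluster name
        "ReleaseLabel": "emr-6.15.0",      # assumed release; choose a current one
        "Applications": [{"Name": "Spark"}, {"Name": "Hive"}],
        "LogUri": f"s3://{bucket}/logs/",
        "Instances": {
            "InstanceGroups": [
                # One node that manages the cluster
                {"Name": "Primary", "InstanceRole": "MASTER",
                 "InstanceType": "m5.xlarge", "InstanceCount": 1},
                # Core nodes process data and store HDFS blocks
                {"Name": "Core", "InstanceRole": "CORE",
                 "InstanceType": "m5.xlarge", "InstanceCount": core_count},
            ],
            "Ec2KeyName": key_name,        # EC2 key pair used for SSH access
            "KeepJobFlowAliveWhenNoSteps": True,
        },
        "JobFlowRole": "EMR_EC2_DefaultRole",
        "ServiceRole": "EMR_DefaultRole",
    }


if __name__ == "__main__":
    request = build_cluster_request("your-bucket-name", "my-key-pair")
    print(json.dumps(request, indent=2))
    # With boto3 installed and credentials configured, this could be sent as:
    #   boto3.client("emr").run_job_flow(**request)
```

Keeping the request in code makes it easier to review and recreate the same cluster later, instead of clicking through the console each time.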

Configuring applications and dependencies

When you create the cluster, you can tailor it by selecting pre-installed applications and configuring bootstrap actions, both of which are defined at launch time:

- Pre-installed applications: Choose from a range of applications, such as Hive for SQL-based querying, Pig for high-level scripting, and HBase for NoSQL database support.

- Bootstrap actions: You can customize the cluster by installing additional libraries, modifying system settings, or preparing datasets. Example bootstrap actions include:

- Installing Python libraries (pip install pandas numpy)

- Configuring logging settings

- Tuning JVM settings for Hadoop/Spark

Proper configuration ensures that your EMR cluster is fully optimized for its workload, reducing processing time and operational costs.
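As a rough illustration of the customizations described above, the sketch below builds a bootstrap action entry and a Spark configuration entry in the shape used by the EMR API (`BootstrapActions` and `Configurations`). The script path `bootstrap/install_libs.sh` is hypothetical: it is assumed to be a small shell script you upload yourself that runs pip with the arguments it receives.

```python
def build_customizations(bucket: str, executor_memory: str = "4g") -> dict:
    """Return bootstrap and configuration entries for a cluster request."""
    return {
        "BootstrapActions": [
            {
                "Name": "install-python-libs",
                "ScriptBootstrapAction": {
                    # Hypothetical script stored in your own bucket
                    "Path": f"s3://{bucket}/bootstrap/install_libs.sh",
                    "Args": ["pandas", "numpy"],
                },
            }
        ],
        "Configurations": [
            {
                # Properties here are written to spark-defaults.conf
                "Classification": "spark-defaults",
                "Properties": {"spark.executor.memory": executor_memory},
            }
        ],
    }


if __name__ == "__main__":
    extras = build_customizations("your-bucket-name")
    print(extras["BootstrapActions"][0]["ScriptBootstrapAction"]["Path"])
```

These two keys can be merged into the same request used to create the cluster.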

Working with Amazon EMR

Once your EMR cluster is set up and configured, the next step is to start working with your data.

Uploading data to Amazon EMR

Amazon EMR primarily relies on Amazon S3 to store input datasets and save output results.

Unlike HDFS, which stores data within the cluster, S3 offers durability, scalability, and cost-efficiency, which makes it the preferred choice for managing data in EMR.

To upload data to Amazon S3, follow these steps:

01: Navigate to the S3 console

Log in to the AWS Management Console and open the Amazon S3 service.

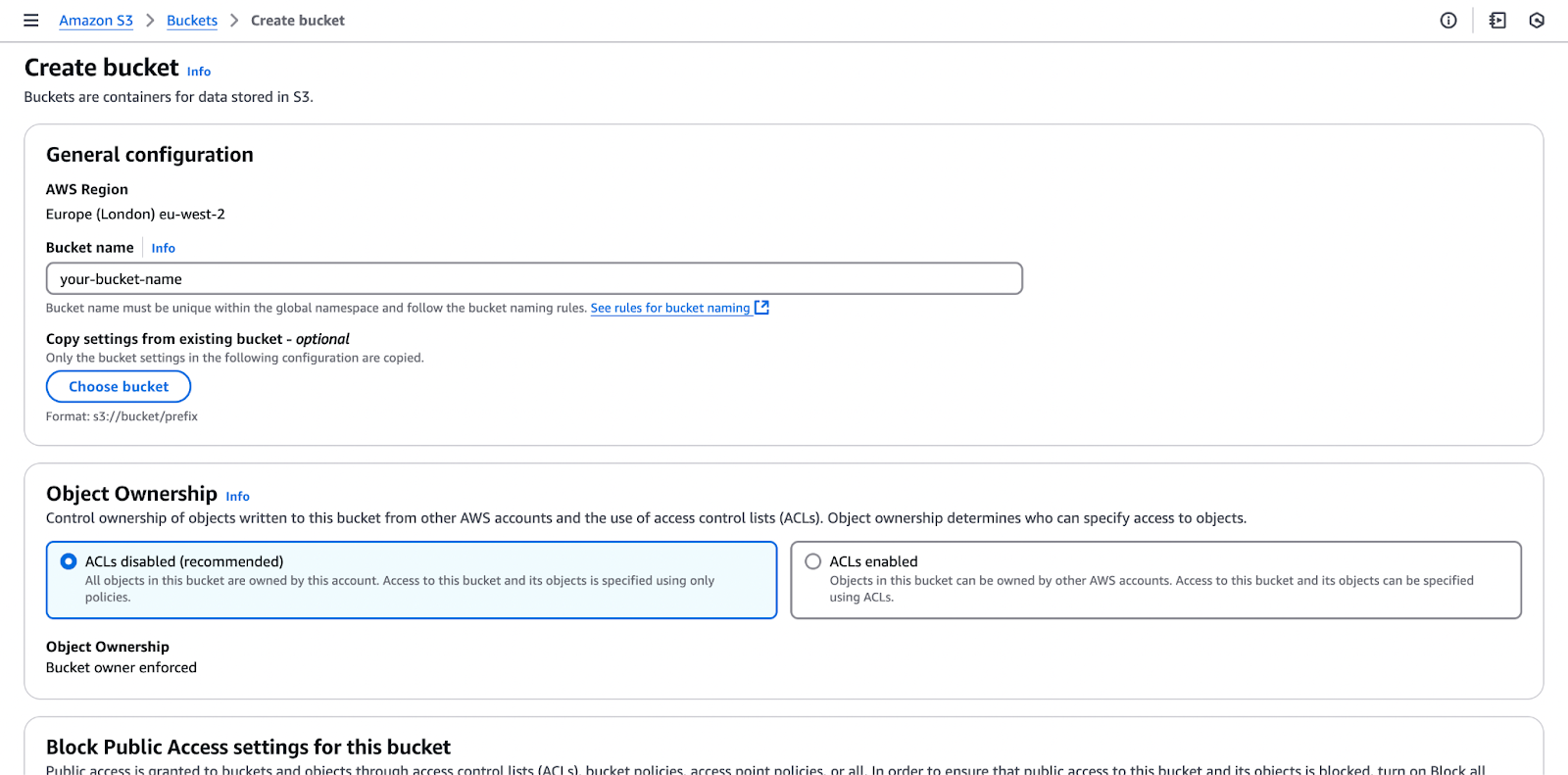

02: Create or select a bucket

Choose an existing bucket or create a new one. Make sure the bucket is in the same region as your EMR cluster to minimize latency.

The image below shows the process of creating an Amazon S3 bucket.

When creating a bucket, remember that the bucket name must be globally unique and comply with AWS naming conventions. Make sure that you choose a name that reflects your use case while adhering to these requirements.
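To make the naming requirements more concrete, here is a small sketch that checks a candidate name against a subset of the S3 bucket naming rules: 3 to 63 characters, only lowercase letters, digits, dots, and hyphens, starting and ending with a letter or digit, no adjacent dots, and not formatted like an IP address. It does not cover every rule and cannot check global uniqueness, so treat it as a quick pre-check only.

```python
import re

# First and last characters must be a lowercase letter or digit; 3-63 chars total
_BUCKET_PATTERN = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
_IP_PATTERN = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def is_valid_bucket_name(name: str) -> bool:
    """Check a name against a subset of the S3 bucket naming rules."""
    if not _BUCKET_PATTERN.fullmatch(name):
        return False
    if ".." in name:                 # adjacent periods are not allowed
        return False
    if _IP_PATTERN.fullmatch(name):  # names must not look like an IP address
        return False
    return True


if __name__ == "__main__":
    for candidate in ["your-bucket-name", "My_Bucket", "192.168.1.1"]:
        print(candidate, is_valid_bucket_name(candidate))
```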

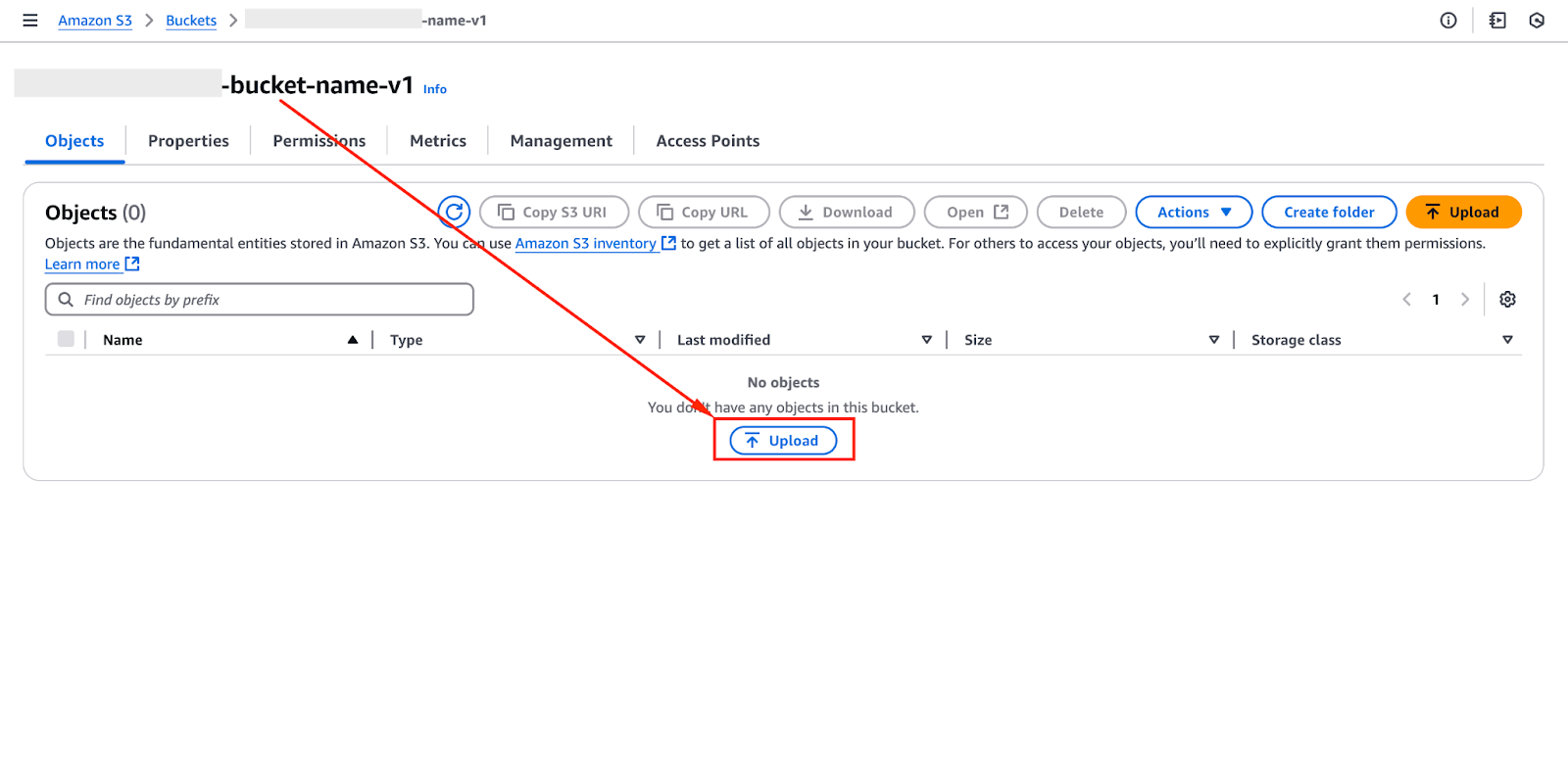

03: Upload data files

Click "Upload", select the required files, and define access permissions.

The image below highlights the interface where you can upload files to an Amazon S3 bucket.

Alternatively, you can use the AWS CLI to upload files programmatically.

Before doing so, you will need to ensure that the AWS CLI is installed and configured with the appropriate credentials by running:

    aws configure

This will prompt you to enter your AWS Access Key ID, Secret Access Key, region, and output format to authenticate your session. You can skip the above step if the AWS CLI is already configured.

Once configured, you can upload files using the following command:

    aws s3 cp local_file.csv s3://your-bucket-name/data/

04: Access data from EMR

Once uploaded, data can be accessed from EMR using an S3 path: s3://your-bucket-name/data/

Applications like Spark, Hadoop, and Hive can then process the data directly from S3.

Alternative data storage options

- HDFS (Hadoop Distributed File System): This is used for temporary storage during processing, but data is lost when the cluster terminates.

- Amazon DynamoDB: This is a NoSQL database used for storage and real-time data retrieval.

- AWS Glue Data Catalog: This is used to organize and manage metadata for datasets stored in S3.

Running Spark or Hadoop jobs

After uploading data, you can process it using Apache Spark, Hadoop, or other big data frameworks.

EMR allows job execution through the AWS CLI, EMR console, or direct SSH access.

Running a Spark job

To submit a Spark job, SSH into the cluster and use the spark-submit command:

    spark-submit --deploy-mode cluster s3://your-bucket-name/scripts/sample_job.py

Alternatively, you can submit jobs through the EMR "Steps" feature, which allows job automation without manually accessing the cluster.
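For the Steps route, a step definition is essentially the same spark-submit command wrapped in a small structure. The sketch below builds one in the shape accepted by boto3's `add_job_flow_steps`, using `command-runner.jar` to run the command on the cluster. The step name and the failure behavior (`CONTINUE`) are illustrative assumptions.

```python
def build_spark_step(script_uri: str, name: str = "sample-spark-job") -> dict:
    """Wrap a spark-submit invocation as an EMR step definition."""
    return {
        "Name": name,
        # Keep the cluster running if this step fails (an assumed choice)
        "ActionOnFailure": "CONTINUE",
        "HadoopJarStep": {
            "Jar": "command-runner.jar",
            "Args": ["spark-submit", "--deploy-mode", "cluster", script_uri],
        },
    }


if __name__ == "__main__":
    step = build_spark_step("s3://your-bucket-name/scripts/sample_job.py")
    print(step)
    # With boto3 installed and credentials configured, this could be sent as:
    #   boto3.client("emr").add_job_flow_steps(JobFlowId="<your-cluster-id>", Steps=[step])
```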

To explore foundational concepts before running Spark jobs on EMR, the Big Data Fundamentals with PySpark course offers a great starting point. If your workflow includes preparing messy datasets, you might find the Cleaning Data with PySpark course particularly useful.

Running a Hadoop MapReduce job

For Hadoop jobs, use the command-line interface:

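    aws s3 cp s3://your-bucket-name/jars/sample_job.jar .
    hadoop jar sample_job.jar input_dir output_dir

The hadoop jar command expects the JAR on the local filesystem, so the first command copies it from S3 to the node where you run the job. Hadoop jobs can also be managed using AWS Step Functions to automate workflows.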

Effective security and access control are crucial when running big data jobs.

As Spark and Hadoop jobs interact with sensitive data, Amazon EMR integrates with Apache Ranger to enforce fine-grained access control and permissions.

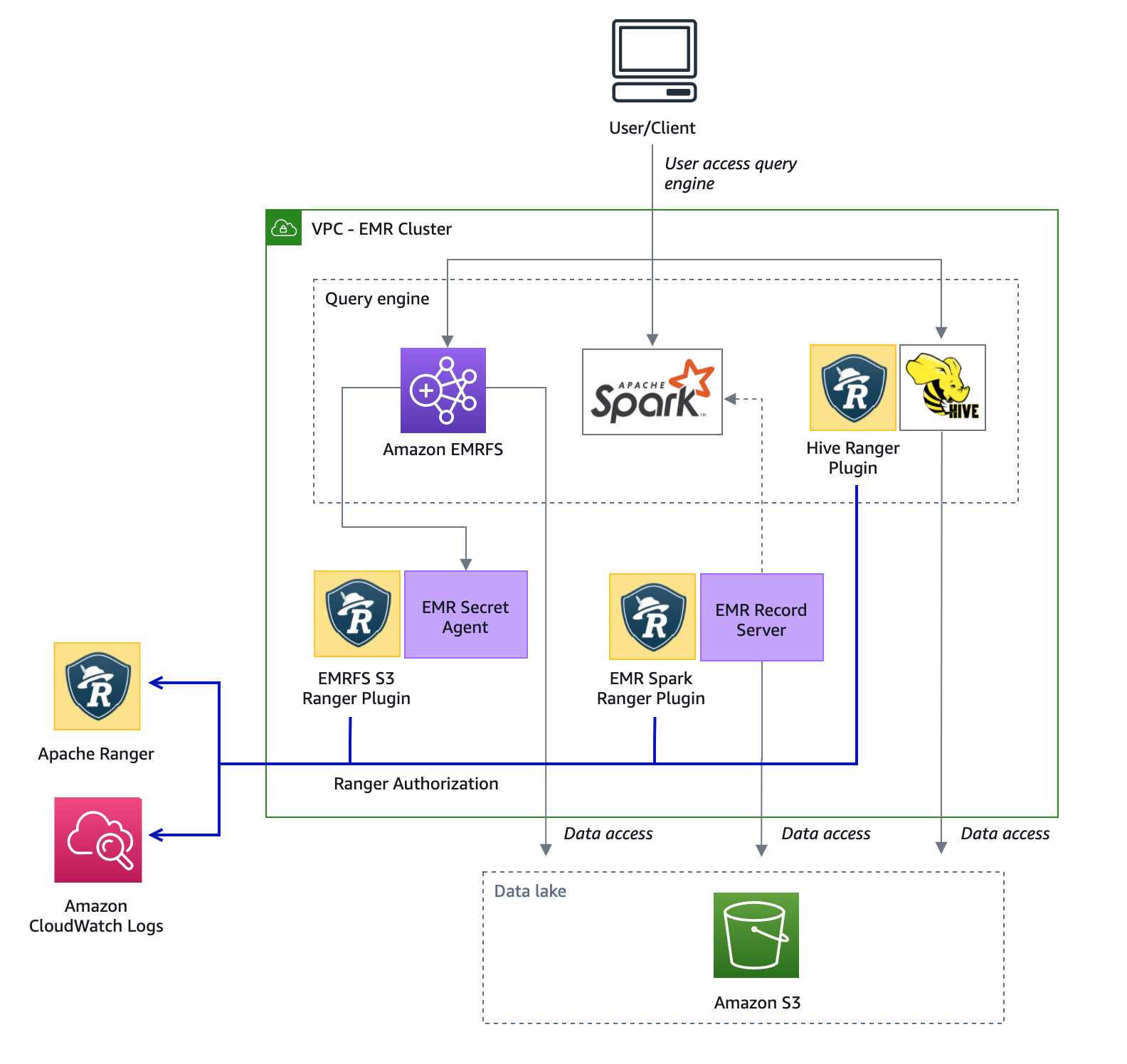

The image below illustrates an example architecture of this integration, highlighting how security policies are applied across EMR clusters.

Diagram illustrating how Apache Ranger enforces security policies across Amazon EMR clusters. Source: AWS Docs


Monitoring cluster performance

To ensure efficient processing, monitor your EMR cluster using Amazon CloudWatch, Ganglia, and the Spark UI.

These tools provide real-time insights into resource utilization, job progress, and potential bottlenecks.

Key monitoring tools

- Amazon CloudWatch: Tracks CPU utilization, memory usage, disk I/O, and network activity. You can set CloudWatch alarms to notify you of performance issues.

- EMR logs: Access system logs in Amazon S3 for debugging job failures. Logs can be enabled under the "Cluster Logging" section during cluster creation.

- Ganglia: Provides detailed visualizations of cluster performance through a web interface hosted on the primary node, on EMR releases where Ganglia is available and installed as a cluster application.

- Spark UI: If you are running Spark jobs, use the Spark Web UI to inspect job execution plans, stage dependencies, and resource consumption.
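As an example of the CloudWatch alarms mentioned above, the sketch below builds the parameters for an alarm that fires when available YARN memory stays low. It uses the `AWS/ElasticMapReduce` namespace and the `YARNMemoryAvailablePercentage` metric. The alarm name, the 15% threshold, the evaluation window, and the SNS topic are assumptions to adjust for your workload.

```python
def build_low_memory_alarm(cluster_id: str, sns_topic_arn: str,
                           threshold_pct: float = 15.0) -> dict:
    """Return put_metric_alarm-style parameters for a low YARN memory alarm."""
    return {
        "AlarmName": f"emr-{cluster_id}-low-yarn-memory",  # hypothetical name
        "Namespace": "AWS/ElasticMapReduce",
        "MetricName": "YARNMemoryAvailablePercentage",
        "Dimensions": [{"Name": "JobFlowId", "Value": cluster_id}],
        "Statistic": "Average",
        "Period": 300,            # seconds per data point
        "EvaluationPeriods": 2,   # alarm after two consecutive low periods
        "Threshold": threshold_pct,
        "ComparisonOperator": "LessThanThreshold",
        "AlarmActions": [sns_topic_arn],
    }


if __name__ == "__main__":
    params = build_low_memory_alarm(
        "j-EXAMPLE", "arn:aws:sns:us-east-1:123456789012:emr-alerts")
    print(params["AlarmName"])
    # With boto3 installed and credentials configured, this could be sent as:
    #   boto3.client("cloudwatch").put_metric_alarm(**params)
```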

Best practices for performance optimization

- Enable auto-scaling: Automatically add or remove cluster nodes based on workload demand.

- Use spot instances: Reduce costs by using EC2 Spot Instances for task nodes.

- Tune Spark and Hadoop configurations: Adjust memory settings (spark.executor.memory), parallelism (spark.default.parallelism), and Hadoop block size for optimal performance.

You can efficiently process large datasets while keeping your EMR cluster cost-effective and high-performing by following these steps.
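To show how the Spark settings above fit together, here is a minimal sketch that derives a starting value for spark.default.parallelism from the executor layout and turns it into spark-submit arguments. The factor of two tasks per core follows the general guidance in the Spark tuning documentation (2 to 3 tasks per CPU core). The executor counts and memory size are assumptions, not recommendations for any specific cluster.

```python
def spark_submit_args(script_uri: str, num_executors: int, cores_per_executor: int,
                      executor_memory: str = "4g", tasks_per_core: int = 2) -> list:
    """Build spark-submit arguments with explicit memory and parallelism."""
    # Starting point: a few tasks for every core available to the job
    parallelism = num_executors * cores_per_executor * tasks_per_core
    return [
        "spark-submit", "--deploy-mode", "cluster",
        "--conf", f"spark.executor.memory={executor_memory}",
        "--conf", f"spark.default.parallelism={parallelism}",
        script_uri,
    ]


if __name__ == "__main__":
    args = spark_submit_args("s3://your-bucket-name/scripts/sample_job.py",
                             num_executors=4, cores_per_executor=4)
    print(" ".join(args))
```

Treat the computed value as a first guess and adjust it after checking stage and task timings in the Spark UI.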


Scaling Amazon EMR Clusters

As your data processing needs evolve, you may need to adjust your EMR cluster's resources to maintain performance and cost efficiency.

EMR offers manual and automated scaling options, allowing you to modify instance counts based on workload demands.

In addition, leveraging Spot Instances can help optimize costs while ensuring scalability.

Manual scaling

Manual scaling allows you to increase or decrease the number of instances in your cluster based on real-time workload demands.

This adjustment can be made through the EMR console, the AWS CLI, or the EMR API.

- Using the EMR console: Navigate to your cluster, select "Resize", and specify the desired instance count.

- Using the AWS CLI: Run the following command to modify the cluster size:

    aws emr modify-instance-groups --cluster-id <your-cluster-id> --instance-groups InstanceGroupId=<your-instance-group-id>,InstanceCount=<new-instance-count>

- Using the EMR API: You can use the ModifyInstanceGroups API to dynamically adjust the number of instances.

Manual scaling is best suited for predictable workloads where you can anticipate the resource needs in advance.

For example, I used manual scaling when I knew my data processing job would have steady demand, which allowed me to adjust the instance count based on expected workload and ensured optimal resource utilization.

Auto-scaling in Amazon EMR

Auto-scaling in EMR dynamically adjusts the number of instances in response to workload changes, ensuring efficient resource utilization while keeping costs under control.

Auto-scaling policies define when to add or remove instances based on specific metrics such as CPU utilization, YARN memory usage, or task queue length.

Key auto-scaling configurations:

- Scale-out policy: Adds instances when the workload increases, ensuring timely processing of jobs.

- Scale-in policy: Reduces instances when the demand decreases, preventing unnecessary costs.

- Cooldown periods: Prevents excessive scaling actions within short time intervals.

To enable auto-scaling, configure an Auto-Scaling Policy via the AWS Management Console, CLI, or API.

An example of an AWS CLI command to set an auto-scaling policy is:

    aws emr put-auto-scaling-policy --cluster-id <your-cluster-id> --instance-group-id <your-instance-group-id> --auto-scaling-policy file://policy.json

Auto-scaling is particularly beneficial for variable workloads, such as streaming analytics, batch processing, and machine learning tasks that experience fluctuating resource requirements.

For instance, I used auto-scaling when running a machine learning model that experienced unpredictable spikes in traffic. The system automatically scaled up during peak times and scaled down when demand dropped, which optimized both costs and performance.
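The policy.json file passed to put-auto-scaling-policy combines capacity limits with scale-out and scale-in rules. The sketch below generates one such policy based on available YARN memory. The capacity limits, thresholds, cooldown, and rule names are illustrative assumptions, and you would tune them against your own metrics.

```python
import json


def build_rule(name: str, comparison: str, threshold: float, adjustment: int,
               cooldown: int = 300) -> dict:
    """Build one scaling rule triggered by YARNMemoryAvailablePercentage."""
    return {
        "Name": name,
        "Action": {
            "SimpleScalingPolicyConfiguration": {
                "AdjustmentType": "CHANGE_IN_CAPACITY",
                "ScalingAdjustment": adjustment,  # +N adds nodes, -N removes them
                "CoolDown": cooldown,             # seconds to wait between actions
            }
        },
        "Trigger": {
            "CloudWatchAlarmDefinition": {
                "ComparisonOperator": comparison,
                "EvaluationPeriods": 1,
                "MetricName": "YARNMemoryAvailablePercentage",
                "Namespace": "AWS/ElasticMapReduce",
                "Period": 300,
                "Statistic": "AVERAGE",
                "Threshold": threshold,
                "Unit": "PERCENT",
                "Dimensions": [{"Key": "JobFlowId", "Value": "${emr.clusterId}"}],
            }
        },
    }


def build_auto_scaling_policy(min_capacity: int = 2, max_capacity: int = 10) -> dict:
    """Return a policy with one scale-out rule and one scale-in rule."""
    return {
        "Constraints": {"MinCapacity": min_capacity, "MaxCapacity": max_capacity},
        "Rules": [
            build_rule("ScaleOutOnLowMemory", "LESS_THAN", 15.0, 1),
            build_rule("ScaleInOnHighMemory", "GREATER_THAN", 75.0, -1),
        ],
    }


if __name__ == "__main__":
    # Redirect this output to policy.json to use it with the CLI
    print(json.dumps(build_auto_scaling_policy(), indent=2))
```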

Spot instances for cost optimization

Amazon EC2 Spot Instances provide a cost-effective way to run EMR clusters by utilizing spare EC2 capacity at significantly reduced rates.

These instances are ideal if you have fault-tolerant workloads, such as big data processing and machine learning.

Benefits of using Spot Instances in EMR:

- Cost savings: Spot Instances can be up to 90% cheaper than On-Demand instances.

- Scalability: You can dynamically add more compute power at a lower cost.

- Hybrid instance types: EMR allows mixing Spot, On-Demand, and Reserved Instances to balance cost and reliability.

However, Spot Instances may be interrupted if AWS reclaims capacity. To mitigate this:

- Use Instance Fleets instead of Instance Groups to mix Spot and On-Demand instances dynamically.

- Implement checkpointing in workloads to recover from potential interruptions.

- Set up diversified Spot Requests across multiple Availability Zones and instance types to increase stability.

To configure Spot Instances in EMR, use the following AWS CLI command:

    aws emr create-cluster --instance-fleets file://instance-fleet-config.json

Combining manual scaling, auto-scaling, and Spot Instances can help you optimize your EMR clusters for performance, cost-efficiency, and reliability.
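An instance fleet configuration file like instance-fleet-config.json lists one fleet per node role, with target capacities and candidate instance types. The sketch below generates a simple version: an On-Demand primary fleet and a core fleet that mixes Spot and On-Demand capacity across two instance types. The fleet names, capacities, instance types, and the two-hour Spot timeout are assumptions for illustration.

```python
import json


def build_instance_fleets(spot_capacity: int = 2, on_demand_capacity: int = 1) -> list:
    """Return a two-fleet configuration: On-Demand primary, mixed core."""
    return [
        {
            "Name": "PrimaryFleet",
            "InstanceFleetType": "MASTER",
            "TargetOnDemandCapacity": 1,
            "InstanceTypeConfigs": [{"InstanceType": "m5.xlarge"}],
        },
        {
            "Name": "CoreFleet",
            "InstanceFleetType": "CORE",
            "TargetOnDemandCapacity": on_demand_capacity,
            "TargetSpotCapacity": spot_capacity,
            "LaunchSpecifications": {
                "SpotSpecification": {
                    # Fall back to On-Demand if Spot capacity is not fulfilled in time
                    "TimeoutDurationMinutes": 120,
                    "TimeoutAction": "SWITCH_TO_ON_DEMAND",
                }
            },
            # Several instance types give EMR more Spot pools to draw from
            "InstanceTypeConfigs": [
                {"InstanceType": "m5.xlarge", "WeightedCapacity": 1},
                {"InstanceType": "r5.xlarge", "WeightedCapacity": 1},
            ],
        },
    ]


if __name__ == "__main__":
    # Redirect this output to instance-fleet-config.json to use it with the CLI
    print(json.dumps(build_instance_fleets(), indent=2))
```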

Security and Access Control in Amazon EMR

Misconfigured IAM roles in EMR can expose sensitive data to unintended users. Therefore, you should always use the principle of least privilege and restrict SSH access to only trusted IPs.

AWS provides robust security features, including IAM roles for access control, encryption for data protection, and best practices to safeguard your environment.

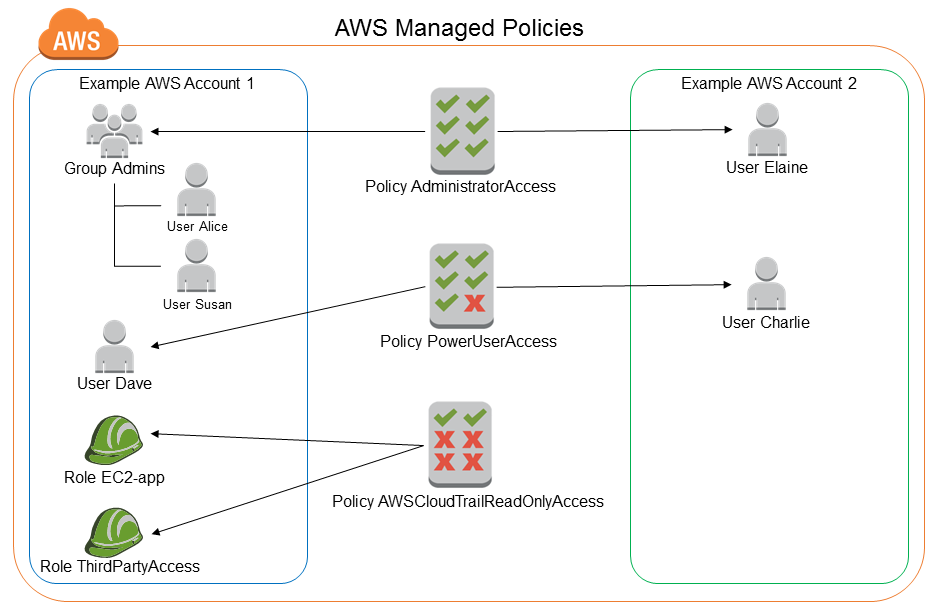

IAM roles and policies

AWS Identity and Access Management (IAM) controls access to EMR clusters and related resources.

When creating a cluster, you must assign IAM roles that grant permissions to interact with S3, DynamoDB, and other AWS services.

Defining least-privilege policies ensures security by limiting access to only necessary resources.

The image below shows managed policies and how they could be used with EMR.

Screenshot of AWS IAM policy settings showing how permissions are assigned. Source: AWS Docs
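To illustrate what a least-privilege policy might look like, the sketch below generates an IAM policy document that only allows listing and reading objects under a single prefix of one bucket. The bucket name and the `data/` prefix are placeholders, and a real cluster role would typically need further permissions (for example, writing logs and output), so this is a starting point and not a complete role policy.

```python
import json


def build_s3_read_policy(bucket: str, prefix: str = "data/") -> dict:
    """Return an IAM policy allowing read-only access to one S3 prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is granted on the bucket, limited to the prefix
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}*"]}},
            },
            {
                # Object reads are granted only under the prefix
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_s3_read_policy("your-bucket-name"), indent=2))
```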

Data encryption and security best practices

Amazon EMR supports encryption for data at rest using Amazon S3 server-side encryption (SSE) or AWS Key Management Service (KMS). Data in transit can be secured using SSL/TLS protocols.
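In EMR, these encryption options are usually captured in a security configuration that is attached to the cluster at creation. The sketch below builds the JSON for at-rest encryption of S3 data only, switching between SSE-S3 and SSE-KMS depending on whether a KMS key ARN is supplied. In-transit encryption is left disabled here because it also requires certificate settings that are outside this example. The key ARN shown is a placeholder.

```python
import json
from typing import Optional


def build_security_configuration(kms_key_arn: Optional[str] = None) -> dict:
    """Return an EMR security configuration enabling S3 at-rest encryption."""
    if kms_key_arn:
        s3_encryption = {"EncryptionMode": "SSE-KMS", "AwsKmsKey": kms_key_arn}
    else:
        s3_encryption = {"EncryptionMode": "SSE-S3"}
    return {
        "EncryptionConfiguration": {
            "EnableInTransitEncryption": False,  # needs certificate config to enable
            "EnableAtRestEncryption": True,
            "AtRestEncryptionConfiguration": {
                "S3EncryptionConfiguration": s3_encryption
            },
        }
    }


if __name__ == "__main__":
    config = build_security_configuration()
    print(json.dumps(config, indent=2))
    # The JSON could then be registered with:
    #   aws emr create-security-configuration --name <name> --security-configuration <json>
```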

Best security practices include using multi-factor authentication (MFA) for accessing the AWS console, restricting SSH access, and managing API keys securely.

If you want to learn more about AWS security, have a look at the AWS Security and Cost Management course.

Troubleshooting Amazon EMR

While Amazon EMR is designed for scalability and reliability, issues can still arise during cluster operation and job execution.

Common challenges include performance bottlenecks, job failures, and resource constraints. Understanding how to diagnose and resolve these problems can help you maintain an efficient workflow.

Common issues with EMR clusters

Users often encounter problems such as slow job execution, insufficient memory allocation, instance failures, and inefficient data shuffling.

Performance issues may stem from incorrect instance types, under-provisioned clusters, or excessive disk I/O. To address these problems:

- Optimize job parameters: Adjust settings like executor memory, parallelism, and shuffle partitions to balance resource usage.

- Scale instances appropriately: Utilize auto-scaling policies to dynamically adjust cluster size based on workload demands.

- Monitor and review logs: Use Amazon CloudWatch, the EMR console, or direct log analysis in Amazon S3 to identify bottlenecks and failure points.
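When reviewing logs in S3, it helps to know where a given step's files are likely to be. The sketch below assembles the expected locations of a step's log files, assuming the usual layout of the cluster's log URI followed by the cluster ID, a `steps` folder, and the step ID. The bucket, cluster ID, and step ID are placeholders, and the exact layout should be confirmed against your own log bucket.

```python
def step_log_uris(log_uri: str, cluster_id: str, step_id: str) -> dict:
    """Return the expected S3 locations of one step's log files."""
    base = f"{log_uri.rstrip('/')}/{cluster_id}/steps/{step_id}"
    # Each log file is assumed to be stored gzip-compressed
    return {
        name: f"{base}/{name}.gz"
        for name in ("controller", "stderr", "stdout", "syslog")
    }


if __name__ == "__main__":
    uris = step_log_uris("s3://your-bucket-name/logs/", "j-EXAMPLE", "s-EXAMPLE")
    for name, uri in uris.items():
        print(f"{name}: {uri}")
    # A file could then be streamed to the terminal with, for example:
    #   aws s3 cp <uri> - | gunzip
```

For failed steps, stderr is usually the first file worth opening.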

Diagnosing Spark and Hadoop jobs

When Spark or Hadoop jobs fail, understanding the root cause is crucial for remediation.

Logs stored in Amazon S3 or accessible through the EMR console provide valuable insights into execution failures, memory issues, and task slowdowns.

- Use the Spark history server: This tool helps analyze job execution timelines, shuffle operations, and task distribution to identify performance bottlenecks.

- Leverage the YARN ResourceManager and MapReduce JobHistory Server: On YARN-based Hadoop versions, these replace the older JobTracker. For MapReduce workloads, they provide detailed execution statistics, helping pinpoint issues such as long-running tasks or data skew.

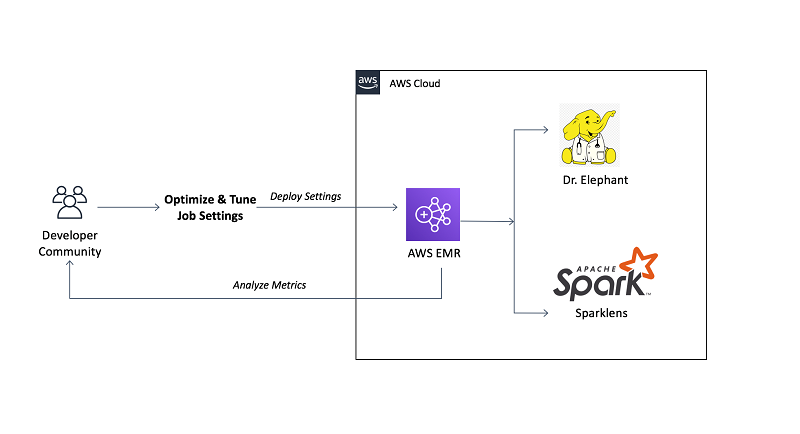

- Tune performance with Dr. Elephant and Sparklens: These tools provide recommendations for optimizing Spark and Hadoop workloads by analyzing past execution metrics.

The image below shows how Dr. Elephant and Sparklens can be used to tune performance in Hadoop and Spark on Amazon EMR.

Dr. Elephant and Sparklens interface displaying performance tuning insights for Hadoop and Spark jobs on Amazon EMR. Source: AWS Blogs

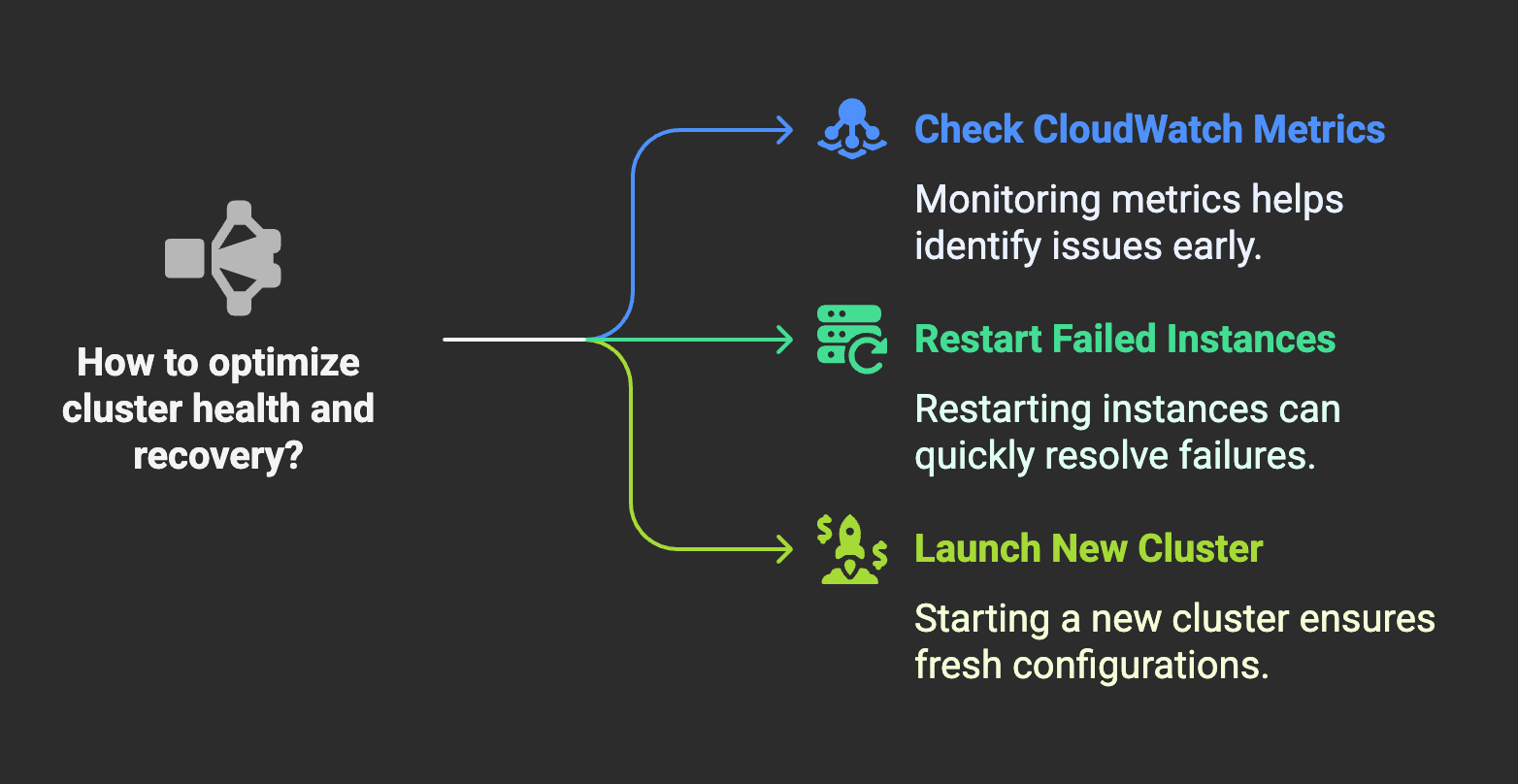

Cluster health and recovery

If a cluster experiences failures, diagnosing the problem quickly can minimize downtime and prevent data loss.

Amazon CloudWatch and AWS CloudTrail offer monitoring and alerting capabilities that can help identify underlying issues.

- Check CloudWatch metrics: Look at CPU utilization, memory consumption, and disk I/O to determine if resources are underutilized or overutilized.

- Restart failed instances: If an instance fails due to hardware or software issues, restarting it or replacing it with a new instance can restore cluster stability.

- Launch a new cluster from saved configurations: To recover from critical failures, you can use Amazon EMR’s cloning feature to launch a new cluster with the same settings and bootstrap actions.

Diagram illustrating ways to optimize cluster health and recovery in Amazon EMR. Image created using Napkin AI


Cost Management with Amazon EMR

Effectively managing costs is crucial when running workloads on Amazon EMR. Pricing depends on factors such as instance types, storage, and data transfer, but there are strategies to optimize spending.

Understanding pricing

Amazon EMR pricing is primarily based on several key factors: compute instances, storage, and data transfer.

- On-demand instances allow you to scale clusters as needed, which provides flexibility without long-term commitments. However, this model can be expensive for continuous workloads.

- Spot Instances offer a cost-effective alternative by letting you use spare EC2 capacity at significantly lower rates. Bidding is no longer required, although you can optionally set a maximum price. While Spot Instances can provide substantial savings, they are subject to availability and potential interruptions.

To estimate costs accurately, you can use the AWS Pricing Calculator, which takes into account cluster configurations, instance types, and workload demands.

Understanding these pricing structures enables better forecasting and budgeting, and helps you allocate resources efficiently.

In addition, leveraging AWS Savings Plans or Reserved Instances for predictable workloads can lock in lower rates and reduce overall costs.
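A quick back-of-the-envelope calculation can make these trade-offs easier to compare. The sketch below estimates compute cost as the number of instances times hours times the sum of the EC2 hourly rate and the EMR hourly charge, with an optional Spot discount applied to the EC2 portion only. All prices in the example are made-up placeholders, not real AWS rates, and storage and data transfer are ignored.

```python
def estimate_cluster_cost(instance_count: int, hours: float, ec2_hourly: float,
                          emr_hourly: float, spot_discount: float = 0.0) -> float:
    """Estimate compute cost; the Spot discount applies to the EC2 rate only."""
    ec2_rate = ec2_hourly * (1 - spot_discount)
    return round(instance_count * hours * (ec2_rate + emr_hourly), 2)


if __name__ == "__main__":
    # Hypothetical rates: $0.20/hour for EC2 and $0.05/hour for EMR per instance
    on_demand = estimate_cluster_cost(5, 10, ec2_hourly=0.20, emr_hourly=0.05)
    with_spot = estimate_cluster_cost(5, 10, ec2_hourly=0.20, emr_hourly=0.05,
                                      spot_discount=0.5)
    print(f"On-Demand: ${on_demand:.2f}, with 50% Spot discount: ${with_spot:.2f}")
```

For real estimates, use current rates from the AWS Pricing Calculator mentioned above.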

Cost optimization strategies

To optimize costs, you should implement several best practices when running Amazon EMR workloads:

- Auto-scaling: Dynamically adjusting cluster size based on workload demand ensures that you are only using the necessary resources at any given time. This prevents over-provisioning and reduces wasteful spending.

- Right-sizing instances: Choosing the most appropriate instance types for your workloads can enhance performance while minimizing costs. For example, compute-optimized instances work well for processing-heavy tasks, whereas memory-optimized instances are better for in-memory analytics.

- Spot instances for cost savings: Using Spot Instances where possible can significantly lower compute costs. To mitigate interruptions, run fault-tolerant workloads that can handle instance terminations, and apply rebalancing strategies to redistribute work when capacity changes.

- Cluster optimization: Configuring clusters efficiently by selecting the correct number of nodes and adjusting Hadoop or Spark settings can improve performance without excessive spending.

- Terminate idle clusters: Monitoring cluster activity and shutting down inactive clusters prevents unnecessary costs from accruing.

- Utilizing managed services: Offloading certain ETL tasks to AWS Glue can be a cost-effective alternative to running the same processes on Amazon EMR. AWS Glue is a serverless service that can automatically scale based on workload requirements, eliminating the need to manage and pay for idle compute resources.

Implementing these cost optimization strategies will help you balance performance and cost efficiency.

For a broader understanding of AWS cloud cost management, check out AWS Cloud Technology and Services.

Conclusion

After working with Amazon EMR, I have come to appreciate how it simplifies big data processing by automating cluster management, scaling, and AWS service integration. It is essential for anyone dealing with large-scale data workloads, regardless of whether you are just starting out or optimizing an existing pipeline.

If you are exploring cloud-based big data solutions, EMR is a powerful, scalable option worth considering!