
Recently, OpenAI introduced the GPT-4.1 family, which includes three variants: GPT-4.1, GPT-4.1 Mini, and GPT-4.1 Nano. These models are designed to excel in coding and instruction-following tasks, offering significant advancements over their predecessors.

One of their standout features is an expanded context window of up to 1 million tokens, roughly eight times the 128K-token window of GPT-4o. This allows the models to handle extensive inputs, such as entire code repositories or multi-document workflows.
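To get a feel for what fits in that window, the sketch below roughly estimates the token count of a local repository. It assumes the common rule of thumb of about four characters per token, which is only an approximation; a real tokenizer would give exact counts. The helper names and the 1,000,000-token budget are illustrative assumptions, not part of any OpenAI library.

```python
import os

# Rough heuristic (assumption): about 4 characters per token on average.
CHARS_PER_TOKEN = 4
# Approximate GPT-4.1 context window ("up to 1 million tokens").
CONTEXT_WINDOW = 1_000_000


def estimate_repo_tokens(directory: str, extensions: tuple = (".py", ".md")) -> int:
    """Hypothetical helper: roughly estimate the tokens used by matching files."""
    total_chars = 0
    for root, _, files in os.walk(directory):
        for name in files:
            if name.endswith(extensions):
                path = os.path.join(root, name)
                with open(path, "r", encoding="utf-8", errors="ignore") as f:
                    total_chars += len(f.read())
    # Round up so a non-empty repository never reports zero tokens.
    return -(-total_chars // CHARS_PER_TOKEN)


def fits_in_context(directory: str, extensions: tuple = (".py", ".md")) -> bool:
    """Return True if the rough estimate stays within the context window."""
    return estimate_repo_tokens(directory, extensions) <= CONTEXT_WINDOW
```

For example, `estimate_repo_tokens("path/to/repo", (".py", ".js"))` gives a quick order-of-magnitude check before deciding whether to send a whole repository or only selected snippets.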

In this tutorial, we will explore the capabilities of GPT-4.1 by building a project that enables users to search an entire code repository using keywords from their prompts. The relevant content from the repository will then be provided to the GPT-4.1 model, along with the user’s prompt.

As a result, we will create an interactive application that allows users to chat with their code repository and use GPT-4.1 to analyze and improve their code.


Getting Started with GPT-4.1 Models

Before diving into building the project, it is worth testing the different GPT-4.1 models and exploring the new Responses API. This API enables stateful interactions by using previous outputs as inputs, supports multimodal inputs such as text and images, and extends the model's capabilities with built-in tools for file search and web search, plus integration with external systems through function calling.

To begin, make sure you have the latest version of the OpenAI Python client installed by running the following command in your terminal:

```bash
$ pip install -U openai -q
```

1. Using the GPT-4.1 Nano model for text generation

In this example, we will use the GPT-4.1 Nano model, which is optimized for speed and cost-efficiency, to generate text.

First, we will initialize the OpenAI client and use it to call the Responses API to generate a response.

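```python
from openai import OpenAI
from IPython.display import Markdown, display

# The client reads your API key from the OPENAI_API_KEY environment variable.
client = OpenAI()

response = client.responses.create(
    model="gpt-4.1-nano",
    input="Write a proper blog on getting rich.",
)

# Render the Markdown-formatted answer in a Jupyter notebook.
display(Markdown(response.output_text))
```

The GPT-4.1 Nano model provided a well-formatted and concise response.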
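Because the Responses API is stateful, a follow-up request can build on an earlier one by passing its ID as previous_response_id, so the earlier text does not need to be resent. A minimal sketch, assuming the client created above; the ask helper is hypothetical and not part of the OpenAI library:

```python
def ask(client, prompt: str, previous_response_id=None, model: str = "gpt-4.1-nano"):
    """Hypothetical helper: send a prompt, optionally continuing an earlier response."""
    kwargs = {"model": model, "input": prompt}
    # Only pass previous_response_id when continuing a conversation.
    if previous_response_id is not None:
        kwargs["previous_response_id"] = previous_response_id
    return client.responses.create(**kwargs)


# Example usage (requires OPENAI_API_KEY and network access):
# first = ask(client, "Write a proper blog on getting rich.")
# follow_up = ask(client, "Summarize that blog in three bullet points.",
#                 previous_response_id=first.id)
# print(follow_up.output_text)
```

Building the keyword arguments conditionally keeps the first request identical to the plain call shown above.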

2. Using the GPT-4.1 Mini model for image understanding

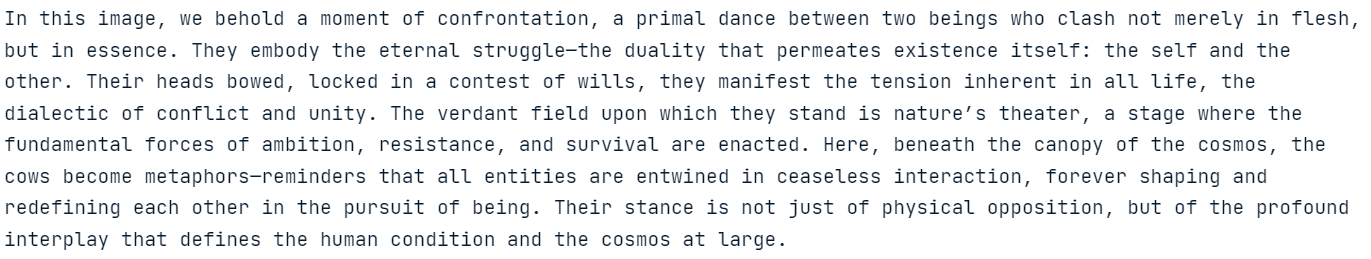

Next, we will test the GPT-4.1 Mini model by providing it with an image URL and asking it to describe the image in a philosophical style.

```python
response = client.responses.create(
    model="gpt-4.1-mini",
    input=[
        {
            "role": "user",
            "content": [
                {"type": "input_text", "text": "Please describe the image as a philosopher would."},
                {
                    "type": "input_image",
                    "image_url": "https://thumbs.dreamstime.com/b/lucha-de-dos-vacas-56529466.jpg",
                },
            ],
        }
    ],
)

print(response.output_text)
```

The GPT-4.1 Mini model provided a detailed and creative description of the image, showcasing its ability to interpret visual content.

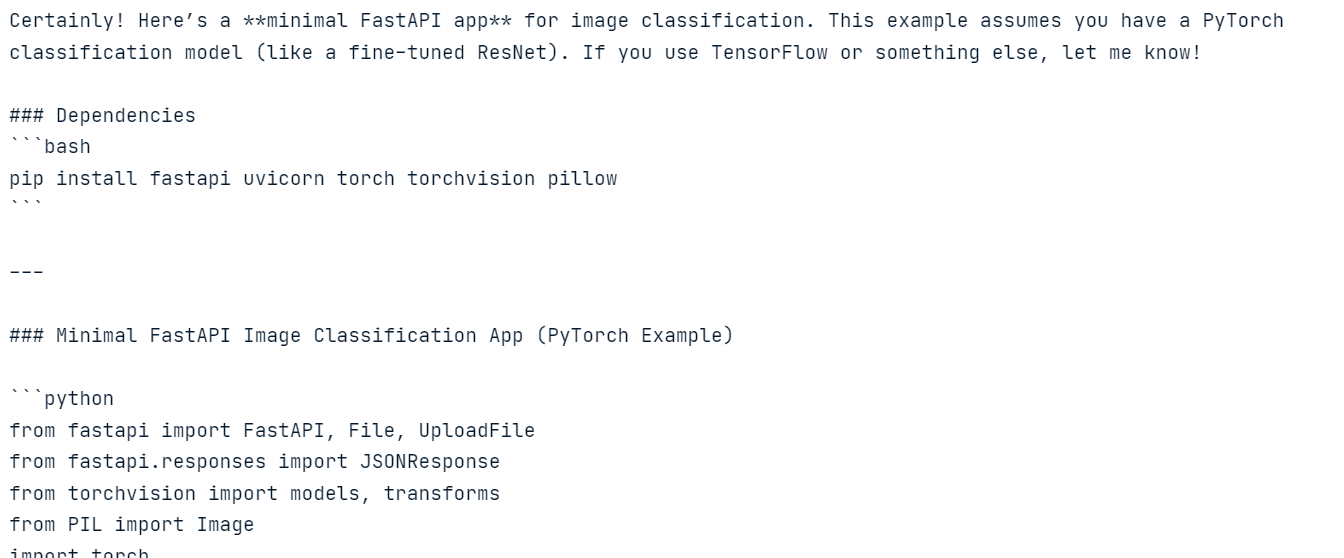
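If the image is on your machine rather than at a public URL, it can be sent as a Base64 data URL in the same image_url field. A minimal sketch; the image_message helper and the cows.jpg file name are hypothetical, and the MIME type is guessed from the file extension:

```python
import base64
import mimetypes


def image_message(path: str, prompt: str) -> list:
    """Hypothetical helper: build an `input` list with a prompt and a local image."""
    mime_type, _ = mimetypes.guess_type(path)
    if mime_type is None or not mime_type.startswith("image/"):
        raise ValueError(f"Not a recognized image file: {path}")
    with open(path, "rb") as f:
        encoded = base64.b64encode(f.read()).decode("utf-8")
    return [
        {
            "role": "user",
            "content": [
                {"type": "input_text", "text": prompt},
                # Embed the image bytes directly as a data URL.
                {"type": "input_image", "image_url": f"data:{mime_type};base64,{encoded}"},
            ],
        }
    ]
```

Usage would look like `client.responses.create(model="gpt-4.1-mini", input=image_message("cows.jpg", "Please describe the image as a philosopher would."))`.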

3. Using the GPT-4.1 (Full) model for code generation

Now, we will use the “full” GPT-4.1 model to generate code.

In this example, we will pass system instructions through the instructions parameter, provide a user prompt, and enable streaming.

```python
import sys

# Request a streamed response from the model.
stream = client.responses.create(
    model="gpt-4.1",
    instructions="You are a machine learning engineer who is an expert in building model inference services.",
    input="Create a FastAPI app for image classification",
    stream=True,
)

# Iterate over stream events and print text as soon as it is received.
for event in stream:
    # Only text-delta events carry new pieces of the generated answer.
    if event.type == "response.output_text.delta":
        sys.stdout.write(event.delta)
        sys.stdout.flush()
```

We write each text delta directly to standard output (sys.stdout) and flush it immediately, so the response appears in real time as it is generated.

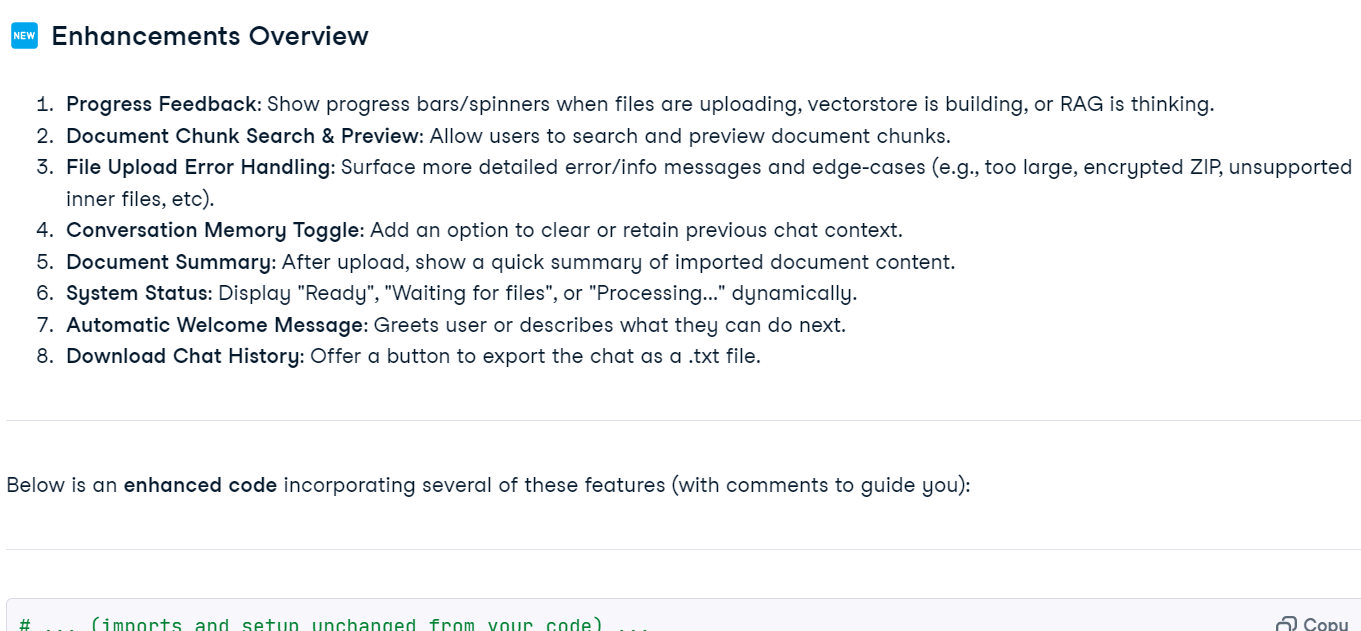
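If you also need the complete text after streaming (for example, to save the generated FastAPI app to a file), you can collect the deltas while printing them. A sketch, assuming text arrives in events whose type is response.output_text.delta, as in the loop above; stream_to_text is a hypothetical helper:

```python
import sys


def stream_to_text(stream, out=sys.stdout) -> str:
    """Hypothetical helper: echo text deltas from a stream and return the full text."""
    parts = []
    for event in stream:
        # Only output-text delta events carry generated text; others are skipped.
        if getattr(event, "type", None) == "response.output_text.delta":
            out.write(event.delta)
            out.flush()
            parts.append(event.delta)
    return "".join(parts)
```

For instance, `code = stream_to_text(stream)` prints the answer live and leaves the full text in code for later use.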

4. Using the GPT-4.1 model for code generation with file inputs

Finally, we will test the GPT-4.1 model's large context window by providing it with a Python file as input. The file is encoded in Base64 format and sent to the model along with user instructions.

```python
import base64

from IPython.display import Markdown, display
from openai import OpenAI

client = OpenAI()

# Read the Python file and encode it as a Base64 string.
with open("main.py", "rb") as f:
    data = f.read()

base64_string = base64.b64encode(data).decode("utf-8")

response = client.responses.create(
    model="gpt-4.1",
    input=[
        {
            "role": "user",
            "content": [
                {
                    # Attach the file as a Base64 data URL.
                    "type": "input_file",
                    "filename": "main.py",
                    "file_data": f"data:text/x-python;base64,{base64_string}",
                },
                {
                    "type": "input_text",
                    "text": "Enhance the code by incorporating additional features to improve the user experience.",
                },
            ],
        },
    ],
)

display(Markdown(response.output_text))
```

The GPT-4.1 model provided context-aware suggestions for improving the code, demonstrating its ability to reason over file inputs.

If you encounter any issues running the above code, refer to the DataLab workbook Playing with GPT-4.1 API.
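To make more use of the large context window, you can send several source files in one request. One simple alternative to Base64 file inputs is to inline each file as plain text, prefixed with its path. A minimal sketch; files_to_input is a hypothetical helper, and utils.py in the usage line is a placeholder file name:

```python
from pathlib import Path


def files_to_input(paths: list, instruction: str) -> list:
    """Hypothetical helper: inline several text files plus an instruction as one user message."""
    content = []
    for path in paths:
        text = Path(path).read_text(encoding="utf-8", errors="ignore")
        # Label each file so the model can refer to it by name.
        content.append({"type": "input_text", "text": f"File: {path}\n{text}"})
    content.append({"type": "input_text", "text": instruction})
    return [{"role": "user", "content": content}]
```

A request would then look like `client.responses.create(model="gpt-4.1", input=files_to_input(["main.py", "utils.py"], "Enhance the code..."))`.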

Building the Keyword Code Search Application

The Keyword Code Search Application is designed to help developers efficiently search through a codebase for relevant snippets based on specific keywords and then use GPT-4.1 to provide explanations or insights about the code.

The application consists of three major parts:

1. Search codebase

The first step in the application is to search the codebase for relevant snippets that match the developer's query. This is achieved using the search_codebase function, which scans files in a given directory for lines containing the specified keywords.

searcher.py:

```python
import os


def search_codebase(directory: str, keywords: list, allowed_extensions: list) -> list:
    """
    Search for code snippets in the codebase that match any of the given keywords.
    Returns a list of dictionaries with the file path, the line number where a match was found,
    and a code snippet that includes 2 lines of context before and after the matched line.
    """
    # Normalize keywords so the comparison below is case-insensitive.
    keywords = [keyword.lower() for keyword in keywords]
    matches = []
    for root, _, files in os.walk(directory):
        for file in files:
            if file.endswith(tuple(allowed_extensions)):
                file_path = os.path.join(root, file)
                try:
                    with open(file_path, "r", encoding="utf-8", errors="ignore") as f:
                        lines = f.readlines()
                    for i, line in enumerate(lines):
                        # Check if any keyword appears in the current line (case-insensitive)
                        if any(keyword in line.lower() for keyword in keywords):
                            snippet = {
                                "file": file_path,
                                "line_number": i + 1,
                                "code": "".join(lines[max(0, i - 2) : i + 3]),
                            }
                            matches.append(snippet)
                except Exception as e:
                    print(f"Skipping file {file_path}: {e}")
    return matches
```

2. GPT-4.1 query

Once relevant code snippets are identified, the next step is to query GPT-4.1 to generate explanations or insights about the code. The query_gpt function takes the developer's question and the matched code snippets as input and returns a streamed response from the model, or an error message string if something goes wrong.

gpt_explainer.py:

```python
import os

from openai import OpenAI


def query_gpt(question: str, matches: list):
    """
    Queries GPT-4.1 with the developer's question and the relevant code snippets.
    Returns a stream of response events, or an error message string on failure.
    """
    if not matches:
        return "No code snippets found to generate an explanation."

    # Combine all matches into a formatted string
    code_context = "\n\n".join(
        [
            f"File: {match['file']} (Line {match['line_number']}):\n{match['code']}"
            for match in matches
        ]
    )

    # Create the GPT-4.1 prompt
    system_message = "You are a helpful coding assistant that explains code based on provided snippets."
    user_message = f"""A developer asked the following question:
"{question}"

Here are the relevant code snippets:
{code_context}

Based on the code snippets, answer the question clearly and concisely.
"""

    # Load API key from environment variable
    api_key = os.environ.get("OPENAI_API_KEY")
    if not api_key:
        return "Error: OPENAI_API_KEY environment variable is not set."

    # Create an OpenAI client using the API key
    client = OpenAI(api_key=api_key)

    try:
        # Return the stream object for the caller to process
        return client.responses.create(
            model="gpt-4.1",
            input=[
                {"role": "system", "content": system_message},
                {"role": "user", "content": user_message},
            ],
            stream=True,
        )
    except Exception as e:
        return f"Error generating explanation: {e}"
```

3. Keyword code search chat application

The final part of the application is the interactive chatbot, which combines everything. It allows developers to ask questions about their codebase, extract keywords from the query, search the codebase for relevant snippets, and send everything along with the user's question to GPT-4.1 for response generation.

main.py:

```python
import argparse
import string

from gpt_explainer import query_gpt
from searcher import search_codebase

# Common words that carry no search value
STOP_WORDS = {
    "a", "an", "the", "and", "or", "but", "is", "are", "was", "were",
    "in", "on", "at", "to", "for", "with", "by", "about", "like",
    "from", "of", "as", "how", "what", "when", "where", "why", "who",
}


def print_streaming_response(stream):
    """Print the streaming response from GPT-4.1"""
    print("\nGPT-4.1 Response:")
    # query_gpt returns a plain string (not a stream) when something goes wrong.
    if isinstance(stream, str):
        print(f"{stream}\n")
        return
    for event in stream:
        # Only text-delta events carry new pieces of the answer.
        if event.type == "response.output_text.delta":
            print(event.delta, end="", flush=True)
    print("\n")


def main():
    parser = argparse.ArgumentParser(
        description="Keyword-Based Code Search with GPT-4.1"
    )
    parser.add_argument("--path", required=True, help="Path to the codebase")
    args = parser.parse_args()

    allowed_extensions = [".py", ".js", ".txt", ".md", ".html", ".css", ".sh"]

    print("Welcome to the Code Search Chatbot!")
    print("Ask questions about your codebase or type 'quit' to exit.")

    while True:
        question = input("\nEnter your question about the codebase: ").strip()
        if question.lower() == "quit":
            print("Exiting chatbot. Goodbye!")
            break

        # Extract up to 5 keywords: strip punctuation and drop stop words
        words = (word.strip(string.punctuation) for word in question.lower().split())
        keywords = [word for word in words if word and word not in STOP_WORDS][:5]

        print("Searching codebase...")
        matches = search_codebase(args.path, keywords, allowed_extensions)
        if not matches:
            print("No relevant code snippets found.")
            continue

        print("Querying GPT-4.1...")
        stream = query_gpt(question, matches)
        print_streaming_response(stream)


if __name__ == "__main__":
    main()
```

This chatbot gives developers a simple way to interact with their codebase and receive AI-powered insights.
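Because search_codebase adds one snippet per matching line, matches on neighboring lines produce overlapping snippets, so the same code can be sent to GPT-4.1 several times. One possible refinement, not part of the original project, is to merge overlapping context windows per file before building the prompt. A minimal sketch; merge_windows and merged_snippets are hypothetical helpers that use the same 2-line context as search_codebase:

```python
from typing import List, Optional, Tuple


def merge_windows(
    line_numbers: List[int], context: int = 2, total_lines: Optional[int] = None
) -> List[Tuple[int, int]]:
    """Hypothetical helper: merge overlapping or touching windows around 1-based line numbers.

    Each match covers [line - context, line + context]; the result is a sorted list
    of non-overlapping (start, end) ranges, inclusive and 1-based.
    """
    merged: List[Tuple[int, int]] = []
    for line in sorted(set(line_numbers)):
        start = max(1, line - context)
        end = line + context if total_lines is None else min(total_lines, line + context)
        if merged and start <= merged[-1][1] + 1:
            # Overlaps or touches the previous window: extend it instead of adding a new one.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def merged_snippets(lines: List[str], line_numbers: List[int], context: int = 2) -> List[str]:
    """Hypothetical helper: return one code snippet per merged window of a file."""
    return [
        "".join(lines[start - 1 : end])
        for start, end in merge_windows(line_numbers, context, total_lines=len(lines))
    ]
```

To use it, you would group the matches by file, then call merged_snippets with that file's lines and its matched line numbers.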

The full source code and usage instructions are available in the project's GitHub repository, kingabzpro/Keyword-Code-Search.
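A broad question can match hundreds of lines. To keep the prompt bounded and costs predictable, you could cap the size of the combined context before calling GPT-4.1. The sketch below mirrors the formatting used in query_gpt and stops adding snippets once a budget is reached; build_code_context and its max_chars parameter are hypothetical, and the 400,000-character default (roughly 100K tokens at about four characters per token) is an arbitrary assumption:

```python
from typing import Dict, List


def build_code_context(matches: List[Dict], max_chars: int = 400_000) -> str:
    """Hypothetical helper: join snippets like query_gpt, without exceeding max_chars."""
    parts: List[str] = []
    used = 0
    for match in matches:
        block = f"File: {match['file']} (Line {match['line_number']}):\n{match['code']}"
        # Account for the "\n\n" separator placed between blocks.
        extra = len(block) + (2 if parts else 0)
        if used + extra > max_chars:
            break
        parts.append(block)
        used += extra
    return "\n\n".join(parts)
```

Since search_codebase returns matches in file-walk order, snippets beyond the budget are simply dropped; ranking matches by relevance first would be a further refinement.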

Testing the Keyword Code Search Application

In this section, we will test the Keyword Code Search application by running it in the terminal and exploring its features.

To run the application, you need to provide the path to the code repository you want to analyze. For this example, we will use the “Redis ML project.”

```bash
$ python main.py --path C:\Repository\GitHub\Redis-ml-project
```

You will see a welcome message and instructions. Type a question about your code, or ask the chatbot to improve a specific part of the codebase.

```text
Welcome to the Code Search Chatbot!
Ask questions about your codebase or type 'quit' to exit.

Enter your question about the codebase: help me improve the training script
```

The application searches the codebase for relevant snippets and queries GPT-4.1 to generate a response.

```text
Searching codebase...
Querying GPT-4.1...

GPT-4.1 Response:
Certainly! Based on the provided snippets, here's how you can **improve the training script (`train.py`)** for your phishing email classification proje...
```

You can keep asking questions, for example to get an overview of the whole project. Note that each question is handled independently: the chatbot does not keep a conversation history between questions.

```text
Enter your question about the codebase: help me understand the project
Searching codebase...
Querying GPT-4.1...

GPT-4.1 Response:
Certainly! Here's a concise overview of the project based on your code snippets:

## Project Purpose

**Phishing Email Classification App with Redis**

This project classifies emails as "Phishing" or "Safe" using machine learning and serves predictions via a web API. It also uses Redis for caching predictions to optimize performance...
```

This detailed explanation helps developers understand the purpose, functionality, and structure of the project.

To exit the application, simply type 'quit':

```text
Enter your question about the codebase: quit
Exiting chatbot. Goodbye!
```

The Keyword Code Search Application is a small but powerful tool that allows developers to chat with their code repositories. It combines efficient keyword-based code searching with GPT-4.1's ability to generate context-aware responses. Whether you need to improve a specific part of your codebase or understand the overall project, this application provides a seamless and intuitive experience.

Final Thoughts

The AI landscape is moving away from hype and superficial benchmarks and toward delivering real value. The GPT-4.1 models improve the developer experience by generating accurate code that often runs right out of the box. Their context windows of up to 1 million tokens are also large enough that, for many projects, the entire code repository fits in a single request.

If you want to get hands-on with the OpenAI API, check out our course, Working with the OpenAI API to start developing AI-powered applications. You can also check out our tutorial on the Responses API and learn more about the new o4-mini reasoning model.

As a certified data scientist, I am passionate about leveraging cutting-edge technology to create innovative machine learning applications. With a strong background in speech recognition, data analysis and reporting, MLOps, conversational AI, and NLP, I have honed my skills in developing intelligent systems that can make a real impact. In addition to my technical expertise, I am also a skilled communicator with a talent for distilling complex concepts into clear and concise language. As a result, I have become a sought-after blogger on data science, sharing my insights and experiences with a growing community of fellow data professionals. Currently, I am focusing on content creation and editing, working with large language models to develop powerful and engaging content that can help businesses and individuals alike make the most of their data.