
CSV files are a staple of data processing and analytics. Almost every data professional, from intermediate data engineers and data scientists to developers leveraging PySpark, deals with CSV files sooner or later. However, as datasets grow from megabytes to gigabytes, or even to terabytes and beyond, single-machine tools like Pandas or standard Python libraries struggle to handle the load. This is where Apache Spark and PySpark become important for managing large-scale CSV files in distributed computing environments.

This article will teach you everything you need to know when reading large CSV files with PySpark.

Ensure that you have PySpark installed and that you’re familiar with the basics by following our Getting Started with PySpark tutorial.

What is Reading CSV in PySpark?

PySpark allows users to read CSV files into distributed DataFrames. DataFrames in PySpark closely resemble Pandas DataFrames, providing a familiar interface. Beneath the surface, however, PySpark DataFrames distribute computation and storage across multiple nodes, which lets them handle datasets far larger than a single machine could.

PySpark offers compelling advantages for large CSV files, such as:

- Distributed data loading,

- Robust handling of null values,

- Flexibility in defining schema, and

- Straightforward ways to manage multiple or compressed CSV files.

Nevertheless, common challenges arise, including efficiently handling headers, accurately inferring or specifying schemas, and managing malformed or inconsistent records. Keep reading to discover how to deal with all these challenges.

Reading CSV files in PySpark is one of the topics you must understand to ace a PySpark interview. Our Top 36 PySpark Interview Questions and Answers for 2025 provides a comprehensive guide to PySpark interview questions and answers, covering topics from foundational concepts to advanced techniques and optimization strategies.

Fundamentals of Reading CSV Files in PySpark

Reading CSV data is often one of the initial and most critical steps in PySpark workflows, forming the foundation for subsequent transformations, exploratory analysis, and machine learning tasks. Getting this step right ensures cleaner data processing and improved downstream performance.

Conceptual framework

PySpark reads CSV files within Spark's distributed model. Instead of loading the whole file into memory on a single machine, Spark splits the input into partitions and processes them in parallel across the cluster's nodes. Spark's Catalyst optimizer plans the read together with the operations that follow it, for example so that only the columns a query actually needs are parsed.

Core read syntax

The simplest way to read CSV files is through Spark's built-in reader:

spark.read.csv("file_path", header=True, inferSchema=True)

Or, equivalently, with the generic format/option/load form:

spark.read.format("csv").option("header", "true").option("inferSchema", "true").load("file_path")

Key parameters include:

- file_path: the location of the CSV file(s).

- header: if True, Spark uses the first line as column names.

- inferSchema: if True, Spark infers column data types from the data.

- sep: the character separating columns; the default is a comma. When you use .option(), the same setting is also accepted under the name delimiter.

Our Learn PySpark From Scratch in 2025 tutorial goes into more detail about the fundamentals of PySpark and how to learn it.

Reading CSV Files: Options and Configurations

PySpark provides extensive options that give granular control over the CSV-reading process.

Header and schema inference

Setting header=True instructs Spark to use the first CSV line as column names.

inferSchema=True lets Spark automatically guess column types by scanning your data:

spark.read.csv("customers.csv", header=True, inferSchema=True)

Schema inference is convenient, but it makes Spark read through the data an extra time before the actual load just to determine the column types, which becomes expensive on large datasets.

Custom schema specification

Explicitly defining your schema significantly enhances performance by eliminating the extra pass Spark would otherwise make over the data to infer types. A defined schema communicates column names and types up front.

Here is how to define a custom schema in PySpark:

from pyspark.sql.types import StructType, StructField, StringType, IntegerType, DoubleType

schema = StructType([

StructField("user_id", IntegerType(), True),

StructField("name", StringType(), True),

StructField("score", DoubleType(), True),

])

df = spark.read.csv("customers.csv", schema=schema, header=True)

Handling delimiters and special characters

Many CSV files use delimiters other than commas, such as pipes or tabs. In spark.read.csv(), the delimiter is set with the sep parameter (the same setting is available as the delimiter option when you use .option()):

spark.read.csv("customers.csv", header=True, sep="|")

Quote and escape characters can also be configured to handle special characters:

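spark.read.csv("data.csv", header=True, escape='"', quote='"')

Here quote='"' (the default) marks quoted fields, and setting escape to the same character lets Spark read a doubled quote ("") inside a quoted value as a literal quote character. By default, the escape character is a backslash.

Managing null and missing values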

Real-world CSV data frequently contains inconsistencies or incomplete records. PySpark makes handling null values simple by translating custom placeholders to null values:

spark.read.csv("customers.csv", header=True, schema=schema, nullValue="NA")

Every field that exactly matches "NA", in any column, now becomes a proper null, which cuts down on manual data cleaning later.

Let’s explore other strategies for dealing with null values.

You can decide to filter out the null values:

# Filter rows where Age is not null

df_filtered = df.filter(df["Age"].isNotNull())

df_filtered.show()

This filters the DataFrame to include only rows where the Age column is not null. The output should look like:

+---+-----+---+------+

| ID| Name|Age|Salary|

+---+-----+---+------+

| 1| John| 25| 50000|

| 3| Bob| 30| NULL|

| 4|Carol| 28| 55000|

+---+-----+---+------+

The other strategy is to fill the null values:

# Replace null values in Age and Salary with default values

df_filled = df.na.fill({"Age": 0, "Salary": 0})

df_filled.show()

The output will look like this:

+---+-----+---+------+

| ID| Name|Age|Salary|

+---+-----+---+------+

| 1| John| 25| 50000|

| 2|Alice| 0| 60000|

| 3| Bob| 30| 0|

| 4|Carol| 28| 55000|

| 5|David| 0| 48000|

+---+-----+---+------+

Reading multiple files and directories

PySpark excels at managing large, multi-file datasets. Rather than manually loading and merging files from a directory sequentially, PySpark supports wildcard patterns for fast, efficient bulk loading:

spark.read.csv("/data/sales/*.csv", header=True, schema=schema)

This loads every matching file into a single DataFrame in one operation, provided the files share the same column layout.

Once the data is loaded in PySpark, the next steps include wrangling, feature engineering, and building machine learning models. Our Feature Engineering with PySpark course covers these concepts in depth.

Optimization Techniques for Efficient CSV Reading

When handling large-scale CSV data, leveraging PySpark's optimization strategies is crucial.

Partitioning strategies

Partitioning heavily influences performance because it determines how evenly work is spread across cluster nodes. Spark sizes the initial read partitions from the input files (governed by settings such as spark.sql.files.maxPartitionBytes), and you can change the partition count right after ingestion to help speed up subsequent operations:

df = spark.read.csv("data.csv", header=True, schema=schema).repartition(20)

.repartition(20) splits the DataFrame into 20 partitions across your Spark cluster. Since Spark processes data in chunks, having more partitions can:

- Improve parallelism

- Balance workload across the cluster

- Speed up transformations and writes

If you’re running on a cluster with many cores, this helps make full use of them. But overdoing it slows things down (say, 1,000 partitions on a small dataset), and repartition() always triggers a full shuffle of the data.

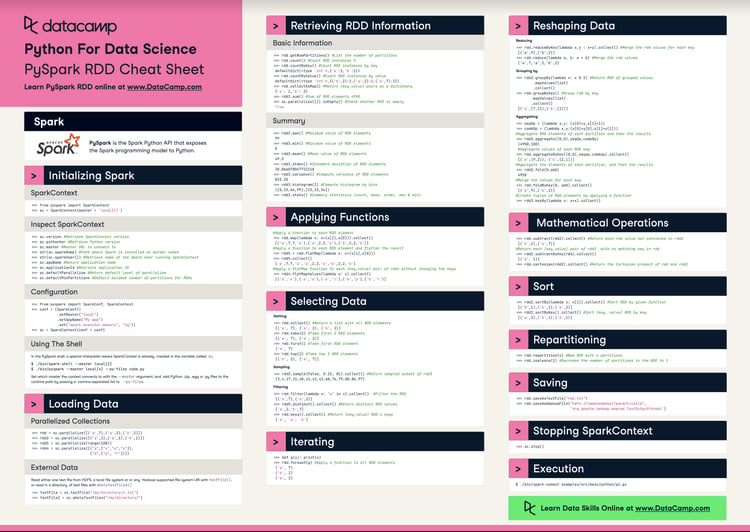

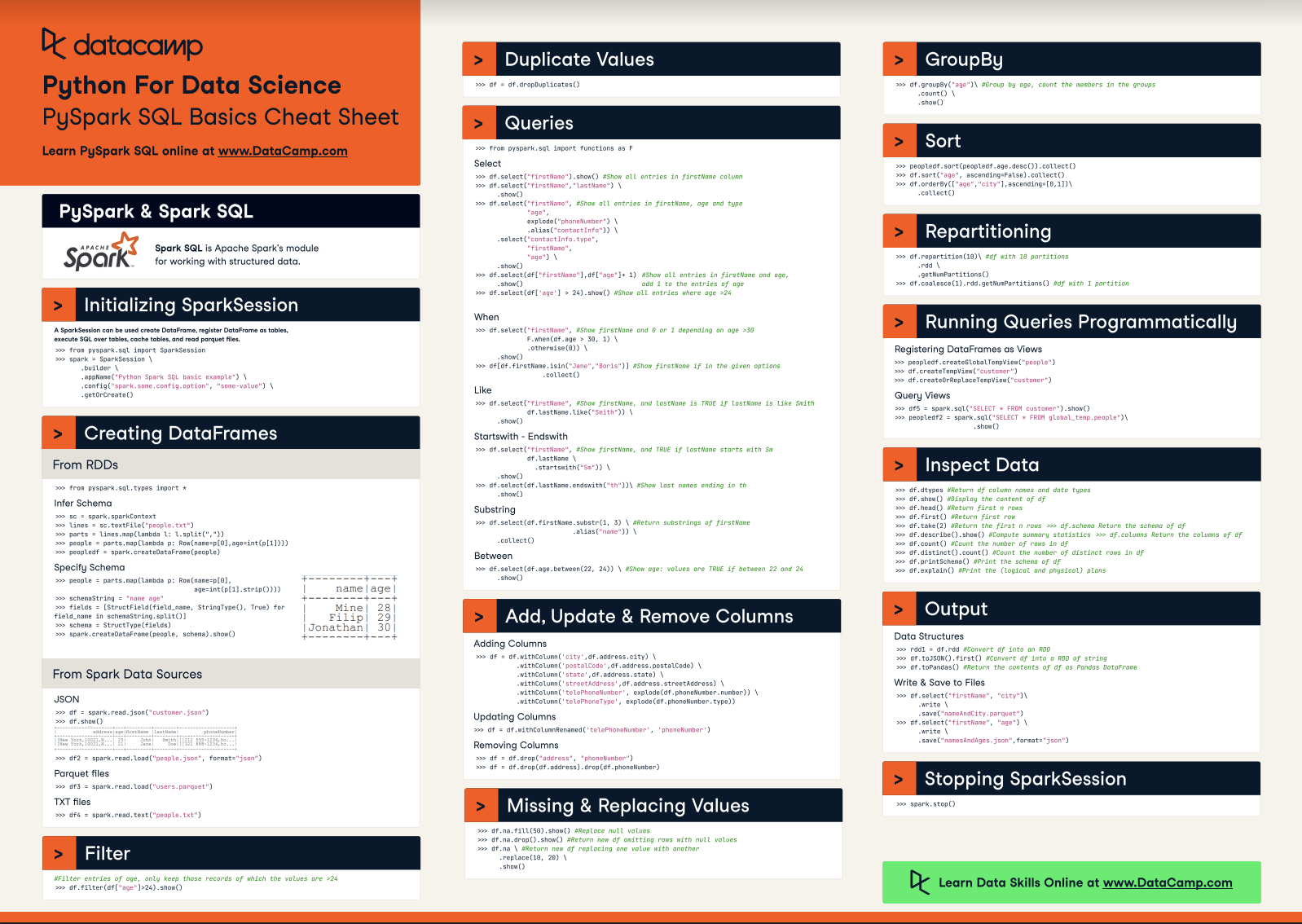

Discover more Spark functions like repartition with our PySpark Cheat Sheet: Spark in Python. It goes into detail about initializing Spark in Python, loading data, sorting, and repartitioning.

Caching and persistence

If your workflow involves repeatedly accessing the same dataset after ingestion, caching your DataFrame in memory or on disk can boost performance significantly:

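df.cache()

cache() is itself lazy: the data is stored the first time an action such as count() runs on the DataFrame. Caching also requires sufficient memory or disk on the executors, so always balance resource usage against performance gains.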

Lazy evaluation and triggering actions

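PySpark relies on a lazy evaluation model: DataFrame operations build up a query plan instead of executing immediately. One exception is inferSchema=True, which makes Spark scan the data up front to determine column types. With an explicit schema, as below, the file is read only when an action such as show(), count(), or collect() needs the data: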

# no reading yet

df = spark.read.csv("data.csv", header=True, schema=schema)

# actual read triggered here

df.show(5)

Advanced Use Cases and Considerations

Let’s explore more complex scenarios you may encounter when reading CSV files:

Reading compressed CSV files

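Spark reads compressed CSV files such as .gz or .bz2 transparently, choosing the codec from the file extension, so no extra configuration is needed:

spark.read.csv("logs.csv.gz", header=True, schema=schema)

Keep in mind that gzip files cannot be split, so each .gz file is read by a single task. A single very large gzip file therefore limits parallelism, while bzip2 files can be split.

Handling malformed records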

CSV datasets can contain malformed lines. PySpark gives multiple options to help you manage errors or malformed records gracefully:

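- mode="PERMISSIVE" (default): keeps malformed rows and sets the fields that cannot be parsed to null. If the schema includes a string column named by columnNameOfCorruptRecord (by default _corrupt_record), the raw malformed line is stored there.

- mode="DROPMALFORMED": silently skips malformed records. This mode works with spark.read.csv(), but it is not supported by the CSV built-in SQL functions such as from_csv.

- mode="FAILFAST": throws an exception on encountering any malformed record.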

spark.read.csv("data.csv", header=True, schema=schema, mode="FAILFAST")

Locale and encoding settings

Sometimes, CSV data uses non-standard encodings. PySpark easily handles varying encodings via the encoding parameter:

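spark.read.csv("data_utf8.csv", header=True, encoding="UTF-8")

UTF-8 is already the default, so in practice you set encoding when files use something else, such as ISO-8859-1. Supported encodings include US-ASCII, ISO-8859-1, UTF-8, UTF-16BE, UTF-16LE, and UTF-16.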

Best practices and common pitfalls

Here are some best practices to keep in mind when reading CSV files with Spark:

- Explicitly specify the schema wherever the dataset structure is known.

- Control partitioning to efficiently distribute the workload.

- Cache strategically for frequently accessed DataFrames.

- Trigger reading actions intentionally, mindful of lazy evaluation.

Be sure to avoid:

- Setting header=False for CSVs that have header rows.

- Relying solely on inferSchema for large or repeatedly accessed datasets.

- Ignoring critical delimiter or encoding settings.

Conclusion

Properly understanding and leveraging PySpark’s powerful CSV ingestion capabilities is essential to effective big data processing. By specifying schemas explicitly, handling custom null formats, partitioning efficiently, and managing compressed or multi-file inputs, you can keep your workflow streamlined and performant.

Remember that while PySpark provides enormous advantages for large-scale data tasks, simpler tools like Pandas may still suffice for small datasets. Utilize PySpark when working with data that surpasses single-machine capabilities, and always consider its distributed computing advantages.


PySpark Read CSV FAQs

What is the best way to read large CSV files in PySpark?

The best practice is to specify a custom schema using StructType instead of relying on inferSchema. This improves performance by avoiding the extra pass Spark makes over the data to infer column types.

Can PySpark handle CSV files with different delimiters?

Yes. Pass the sep parameter to spark.read.csv(), or set .option("delimiter", ...) on the reader. For example, use sep="|" for pipe-separated values.

How do I read multiple CSV files at once in a directory?

You can use a wildcard path, such as spark.read.csv("/data/*.csv", ...), to load multiple files at once into a single DataFrame.

What does `mode="DROPMALFORMED"` do when reading CSVs?

It tells Spark to skip and ignore malformed records in the CSV file instead of including them or causing failures.

Can PySpark read compressed CSV files like `.gz` or `.bz2`?

Yes. PySpark automatically decompresses and reads compressed CSV files without extra configuration, detecting the codec from the file extension (such as .gz or .bz2).