The financial services sector sits on a treasure trove of data, so it’s no wonder that the industry is rife with promising artificial intelligence and machine learning use cases. If rolled out successfully, AI could generate up to $2 trillion in value in the banking industry, according to a McKinsey estimate. In a recent webinar, Shameek Kundu, former Group CDO of Standard Chartered and current Chief Strategy Officer at TruEra, outlined how to accelerate the value of AI in financial services.

Shameek explained that the value of data science and AI in financial services can be grouped into three major buckets:

- Higher revenues through better customer experience and better decision making

- Lower costs through more effective risk management and improved operational efficiency

- New business models and previously unrealized potential

AI application in financial services is broad but shallow

Today, many AI projects in financial services remain in their infancy and never reach full deployment. The reasons range from technical issues, such as limited data availability and quality and technological bottlenecks in deploying AI systems, to non-technical obstacles, such as a shortage of data talent and limited trust in AI systems.

Addressing the lack of trust in AI systems

While complex machine learning models, such as deep learning models, can solve previously intractable problems like protein folding, they are poor at explaining their predictions.

These black boxes pose societal risks if their results are applied blindly to high-stakes decisions like anti-money laundering and credit scoring. Without knowing how black-box models arrive at their predictions, end users find it hard to trust their outputs, especially given high-profile incidents of biased AI systems.

According to Shameek, three key actions can foster trust in AI.

Demystify Machine Learning through Education

General education for all: Education is the long-term strategy for addressing the fear of AI. As internal stakeholders, regulators, and customers gain a general understanding of how AI affects them, they become more likely to accept it.

Specialized education to address the skills gap: The lack of technical talent prevents companies from implementing AI systems at scale. Companies aiming to extract value from their AI systems must therefore be willing to hire data talent or provide specialized training to upskill existing employees.

Set Up Internal Guardrails for AI Systems

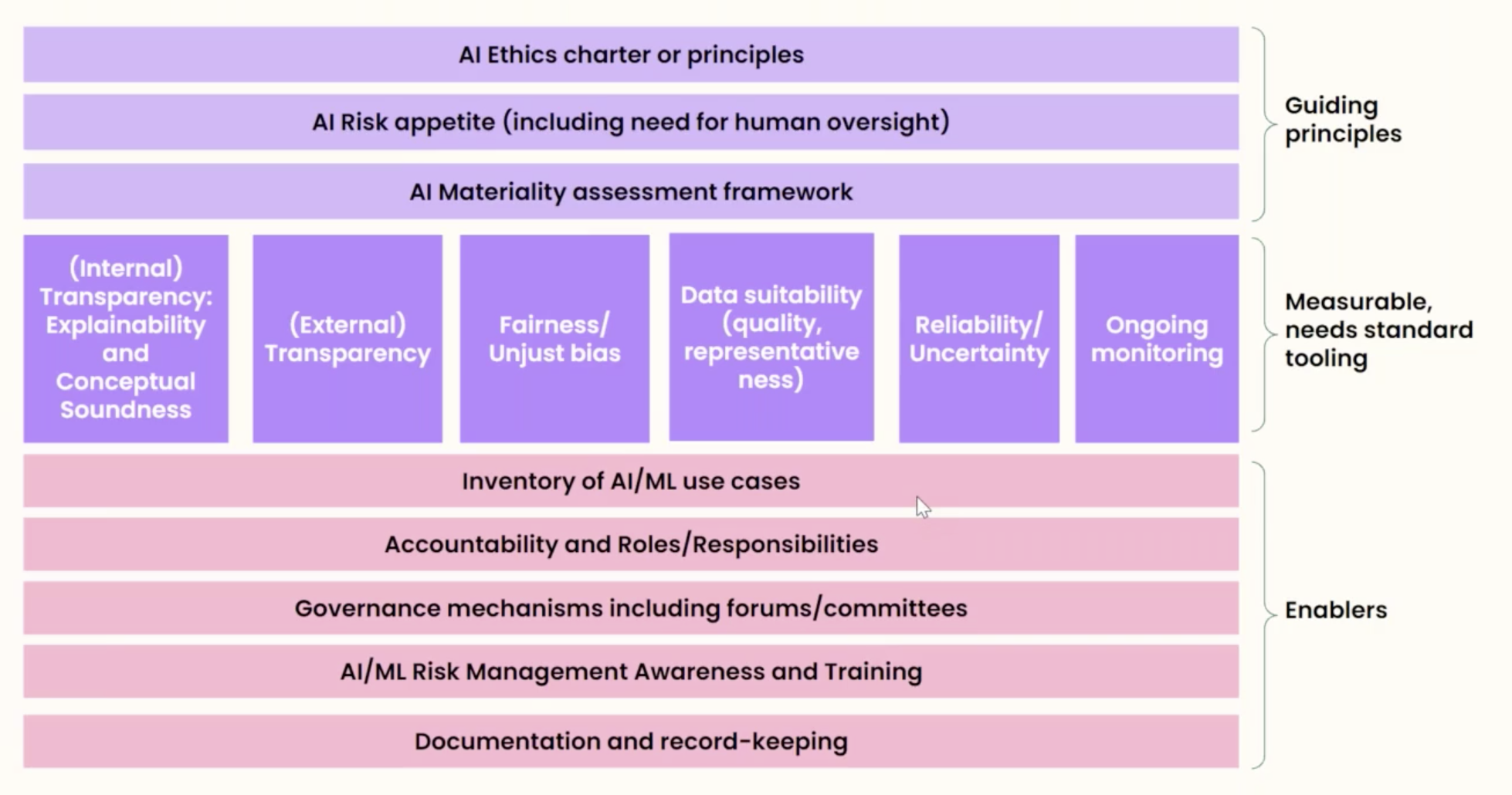

Internal guardrails serve as the guidelines for implementing, evaluating, and monitoring AI systems.

These guardrails have three major components.

- A set of guiding principles defines the boundaries of what AI can do within the company.

- Enablers for internal stakeholders facilitate the safe implementation of AI.

- Standard tools and technologies evaluate the AI systems for fairness.

Use tools and technologies to improve AI quality

Gaps in the AI lifecycle cause quality issues to creep into AI systems. Fortunately, there are tools to mitigate them.

Explaining black-box models: Explainable AI remains an active field of research. It promises to open up black boxes, demystifying the prediction-generation process and building trust in it.

Addressing low data and label quality: Just as a student might fail a test on an unfamiliar topic, a machine learning model can underperform if trained on the wrong data. AI systems can fail if the data used to train them differs drastically from the data they see in deployment. Microsoft’s Python-based Responsible AI Widget can help identify and solve such problems.
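
To make the idea of training data drifting away from deployment data concrete, here is a minimal sketch of one possible check: the population stability index (PSI), a drift measure often used in credit risk. The webinar does not name this metric, and the function name, bin count, and sample data below are illustrative assumptions rather than part of any tool mentioned above.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    n_bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare a training-time sample (expected) with a deployment-time sample (actual)."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("expected sample needs more than one distinct value")
    # Equal-width bins are defined on the training sample only.
    width = (hi - lo) / n_bins

    def bin_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * n_bins
        for v in values:
            # Values outside the training range are clamped into the edge bins.
            idx = max(0, min(int((v - lo) / width), n_bins - 1))
            counts[idx] += 1
        # eps keeps empty bins from causing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e_shares = bin_shares(expected)
    a_shares = bin_shares(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(e_shares, a_shares))


# Hypothetical feature values: one sample at training time, one in production.
train_scores = [i / 100 for i in range(100)]
live_scores = [s + 0.5 for s in train_scores]
print(population_stability_index(train_scores, live_scores))
```

A PSI of zero means the two samples fall into the bins in identical proportions, and larger values mean a larger shift. Practitioners often treat values above roughly 0.25 as a sign of significant drift, but that threshold is a rule of thumb, not a standard.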

Testing model transparency and fairness: Models can be unfairly biased against a population, such as a minority race or a particular gender, because of hidden biases in the data used to train them. Open-source tools like Fairlearn and IBM’s AI Fairness 360 can help to address these gaps.
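
As a rough illustration of what such a fairness test measures, the sketch below computes a demographic parity difference by hand: the gap between the highest and lowest approval rates across groups. Fairness libraries offer metrics in this spirit, but this is not their implementation. The function names, the loan-approval data, and the group labels are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def selection_rates(
    y_pred: Sequence[int], groups: Sequence[Hashable]
) -> Dict[Hashable, float]:
    """Share of positive predictions (e.g. approvals) within each group."""
    if len(y_pred) != len(groups):
        raise ValueError("y_pred and groups must have the same length")
    totals: Dict[Hashable, int] = defaultdict(int)
    positives: Dict[Hashable, int] = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(
    y_pred: Sequence[int], groups: Sequence[Hashable]
) -> float:
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan decisions: 1 = approved, 0 = declined.
approvals = [1, 1, 1, 0, 1, 0, 0, 0]
applicant_group = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(selection_rates(approvals, applicant_group))
print(demographic_parity_difference(approvals, applicant_group))
```

A large gap does not prove that a model is unfair, since the groups may differ in legitimate ways, but it flags a disparity that deserves investigation before deployment.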

Conclusion

Building trust in AI within a company takes collaboration and time. Organizations need to upskill AI talent and set up safeguards for AI safety, while AI practitioners need to fully utilize tools available to them to make AI fair for all. Only when AI successfully wins the trust of its users can AI systems see widespread adoption.

If you are interested in the application of AI in financial services, make sure to check out Shameek’s on-demand webinar on “Scaling AI Adoption in Financial Services”. It offers insights into the applications of AI and practical advice for accelerating its adoption.