Course

Introduction

Clustering is an unsupervised machine learning technique with a lot of applications in the areas of pattern recognition, image analysis, customer analytics, market segmentation, social network analysis, and more. A broad range of industries use clustering, from airlines to healthcare and beyond.

It is a type of unsupervised learning, meaning that we do not need labeled data for clustering algorithms; this is one of the biggest advantages of clustering over other supervised learning like Classification. In this clustering tutorial, you will learn:

- What is clustering?

- Business applications/use-cases of clustering

- 5 essential clustering algorithms

- K-Means

- MeanShift

- DBSCAN

- Hierarchical clustering

- BIRCH

- Common FAQs on clustering

What is Clustering?

Clustering is the process of arranging a group of objects in such a manner that the objects in the same group (which is referred to as a cluster) are more similar to each other than to the objects in any other group. Data professionals often use clustering in the Exploratory Data Analysis phase to discover new information and patterns in the data. As clustering is unsupervised machine learning, it doesn’t require a labeled dataset.

Clustering itself is not one specific algorithm but the general task to be solved. You can achieve this goal using various algorithms that differ significantly in their understanding of what constitutes a cluster and how to find them efficiently.

Later in this tutorial, we will compare output from different clustering algorithms, followed by a detailed discussion of 5 essential and popular clustering algorithms used in industry today. Although algorithms are essentially math, this clustering tutorial aims to build an intuitive understanding of algorithms rather than mathematical formulation.

Key Success Criteria for Clustering Analysis

Clustering, unlike supervised learning use-cases such as classification or regression, cannot be completely automated end-to-end. Instead, it is an iterative process of information discovery that requires domain expertise and human judgment used frequently to make adjustments to the data and the model parameters to achieve the desired result.

Most importantly, because clustering is unsupervised learning and doesn’t use labeled data, we cannot calculate performance metrics like accuracy, AUC, RMSE, etc., to compare different algorithms or data preprocessing techniques. As a result, this makes it really challenging and subjective to assess the performance of clustering models.

The key success criteria in clustering models revolve around:

- Is it interpretable?

- Is the output of clustering useful for business?

- Have you learned new information or discovered new patterns in the data that you weren’t aware of before clustering?

Building Intuition behind Clustering

Before diving into algorithmic details, let’s just build an intuition behind clustering using a toy example of fruit datasets. Let’s say we have a huge collection of image dataset containing three fruits (i) strawberries, (ii) pears, and (iii) apples.

In the dataset all the images are mixed up and your use-case is to group similar fruits together i.e. create three groups with each one of them containing one type of fruit. This is exactly what a clustering algorithm will do.

Image Source: https://static.javatpoint.com/tutorial/machine-learning/images/clustering-in-machine-learning.png

Business Applications of Clustering

Clustering is a very powerful technique and has broad applications in various industries ranging from media to healthcare, manufacturing to service industries, and anywhere you have large amounts of data. Let’s take a look at some practical use-cases:

Customer Segmentation

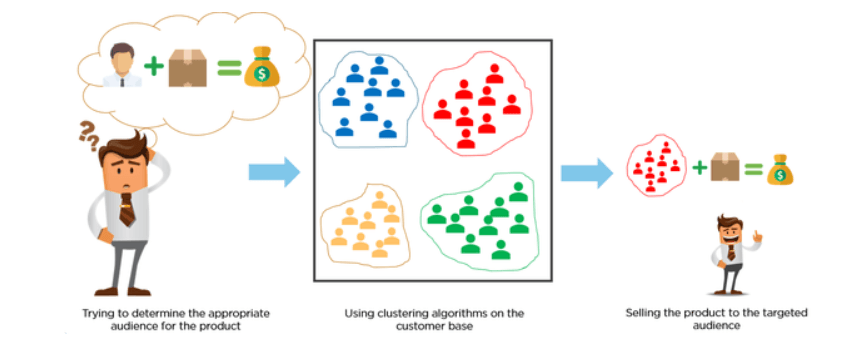

Customers are categorized by using clustering algorithms according to their purchasing behavior or interests to develop focused marketing campaigns.

Imagine you have 10M customers, and you want to develop customized or focused marketing campaigns. It is unlikely that you will develop 10M marketing campaigns, so what do we do? We could use clustering to group 10M customers into 25 clusters and then design 25 marketing campaigns instead of 10M.

Image Source: https://miro.medium.com/max/845/1*rFATWK6tWBrDJ1o1rzEZ8w.png

Retail Clustering

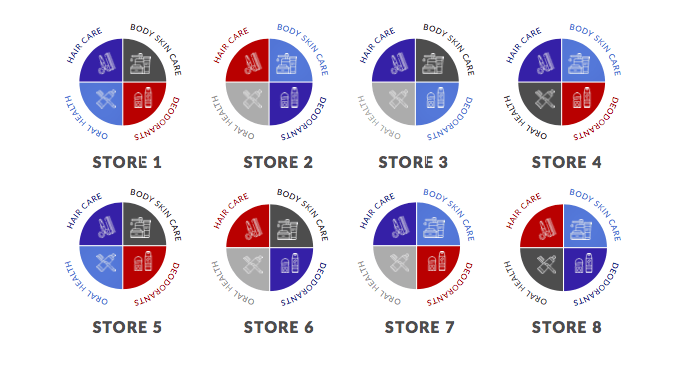

There are many opportunities for clustering in retail businesses. For example, you can gather data on each store and cluster at store level to generate insights that may tell you which locations are similar to each other based on attributes like foot traffic, average store sales, number of SKUs, etc.

Another example could be clustering at a category level. In the diagram below, we have eight stores. Different colors represent different clusters. There are four clusters in this example.

Notice that the deodorants category in Store 1 is represented by the red cluster, whereas the deodorants category in Store 2 is represented by the blue cluster. This depicts that Store 1 and Store 2 have completely different target markets for the deodorants category.

Clustering in Clinical Care / Disease Management

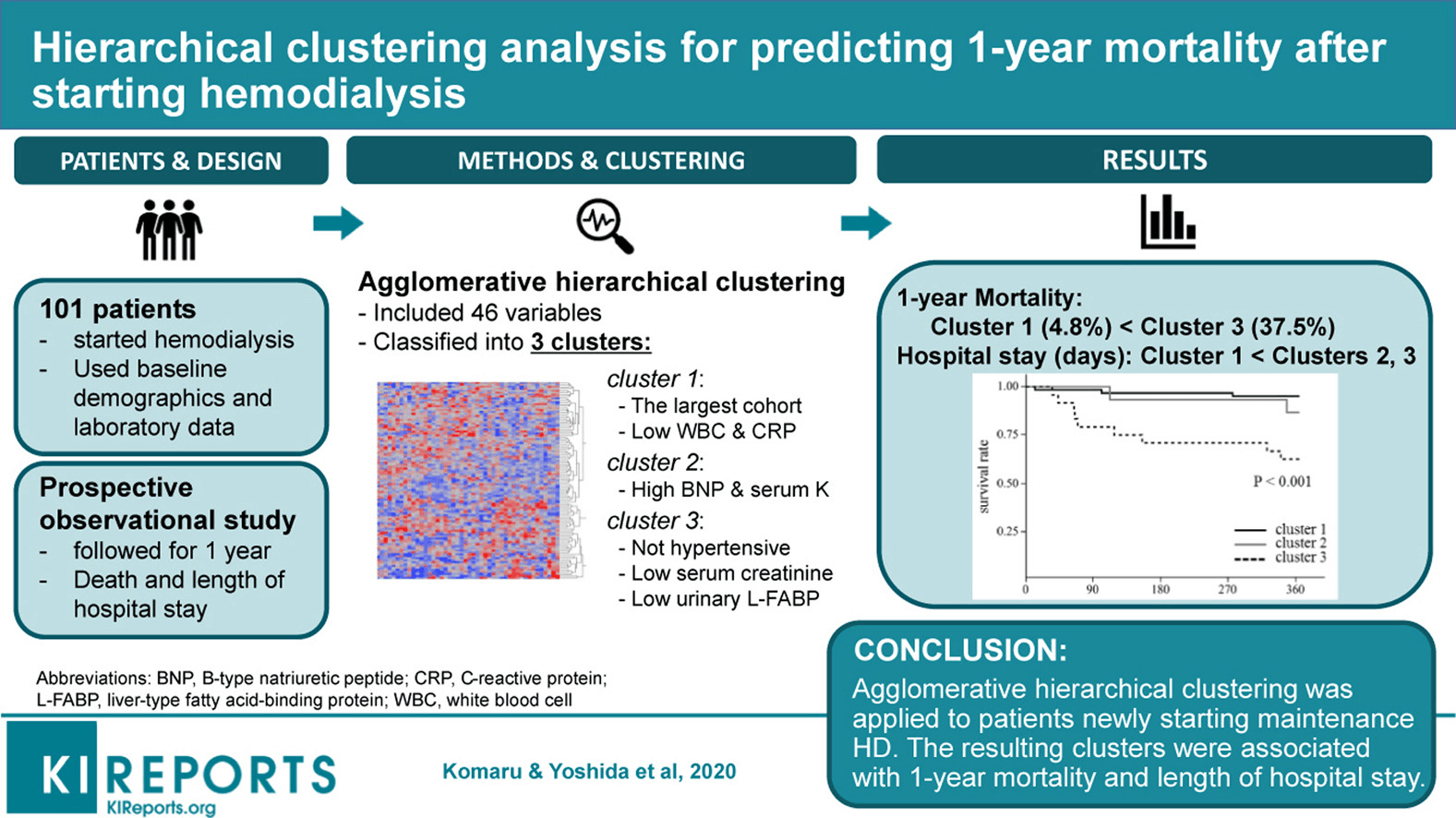

Healthcare and Clinical Science is again one of those areas that are full of opportunities for clustering that are indeed very impactful in the field. One such example is research published by Komaru & Yoshida et al. 2020, where they collected demographics and laboratory data for 101 patients and then segmented them into 3 clusters.

Each cluster was represented by different conditions. For example, cluster 1 has patients with Low WBC & CRP. Cluster 2 has patients with High BMP & Serum, and Cluster 3 has patients with Low Serum. Each cluster represents a different survival trajectory given the 1-year mortality after hemodialysis.

Image source: https://els-jbs-prod-cdn.jbs.elsevierhealth.com/cms/attachment/da4cb0c9-0a86-4702-8a78-80ffffcf1f9c/fx1_lrg.jpg

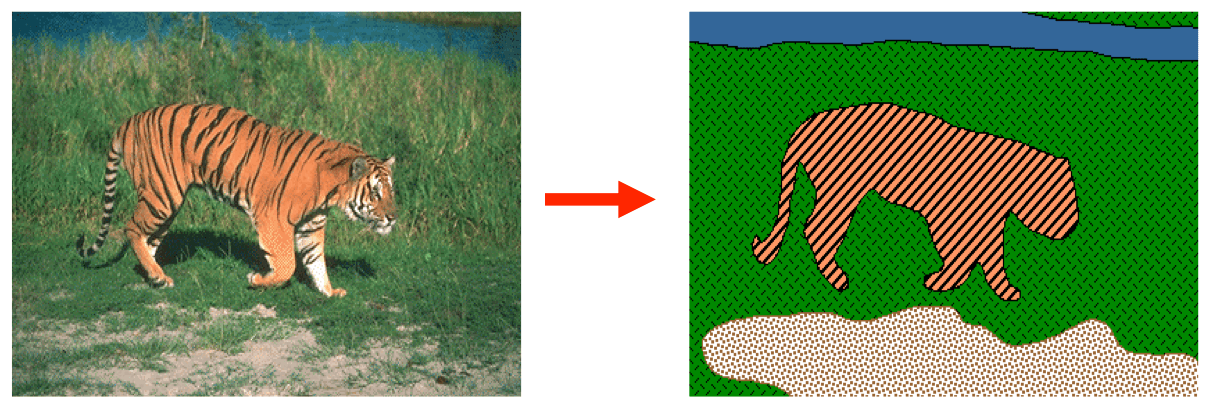

Image Segmentation

Image segmentation is the classification of an image into different groups. Much research has been done in the area of image segmentation using clustering. This type of clustering is useful if you want to isolate objects in an image to analyze each object individually to check what it is.

In the example below, the left-hand side represents the original image, and the right-hand side is the result of the clustering algorithm. You can clearly see there are 4 clusters which are 4 different objects in the image determined based on the pixels (tiger, grass, water, and sand).

|

Want to jump-start your career Master the essential skills to land a job as a machine learning scientist? Check out these amazing courses by DataCamp Machine Learning Scientist with Python and Machine Learning Scientist with R. |

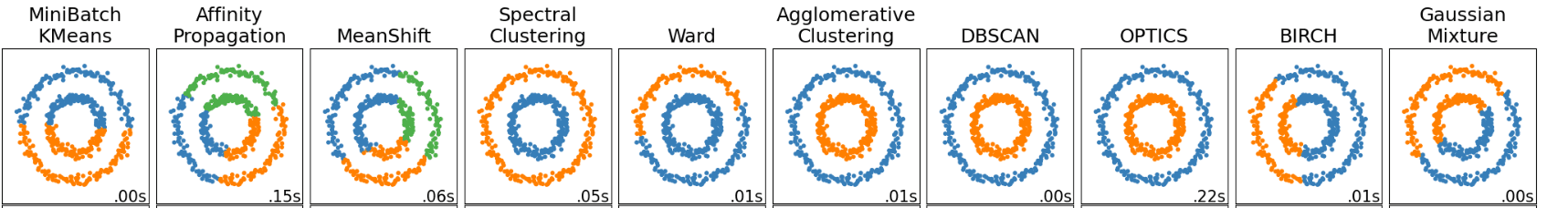

Comparison of Different Clustering Algorithms

There are 10 unsupervised clustering algorithms implemented in scikit-learn - a popular machine learning library in Python. There are fundamental underlying differences in how each algorithm determines and assigns clusters in the dataset.

The underlying differences in the mathematical modality of these algorithms boil down to four aspects on which we can compare and contrast these algorithms:

- Parameters required for the model

- Scalability

- Use-cases,

- Geometry, i.e., metric used for calculation of distances.

Let’s focus on the output of these algorithms. In the diagram below, each column represents an output from a different clustering algorithm such as KMeans, Affinity Propagation, MeanShift, etc. There are a total of 10 algorithms that are trained on the same dataset.

Some algorithms have yielded the same output. Notice Agglomerative Clustering, DBSCAN, OPTICS, and Spectral Clustering have resulted in the same clusters.

However, if you notice and compare the output of KMeans with the output of MeanShift algorithm, you will notice both the algorithms yielded different results. In the case of KMeans, there are only two groups (clusters: blue and orange), whereas, in the case of MeanShift, there are three i.e., blue, green, and orange.

Image Source: https://scikit-learn.org/stable/_images/sphx_glr_plot_cluster_comparison_001.png

Unfortunately (or fortunately), there is no right or wrong answer in Clustering. It would have been so simple to determine and make a statement like “X Algorithm is performing best here.”

This is not possible and it is because of this reason clustering is a very challenging task.

Ultimately, which algorithm works better doesn’t depend on any metric that is easily measurable but rather on the interpretation and how useful the output is for the use-case in hand.

5 Essential Clustering Algorithms

K-Means

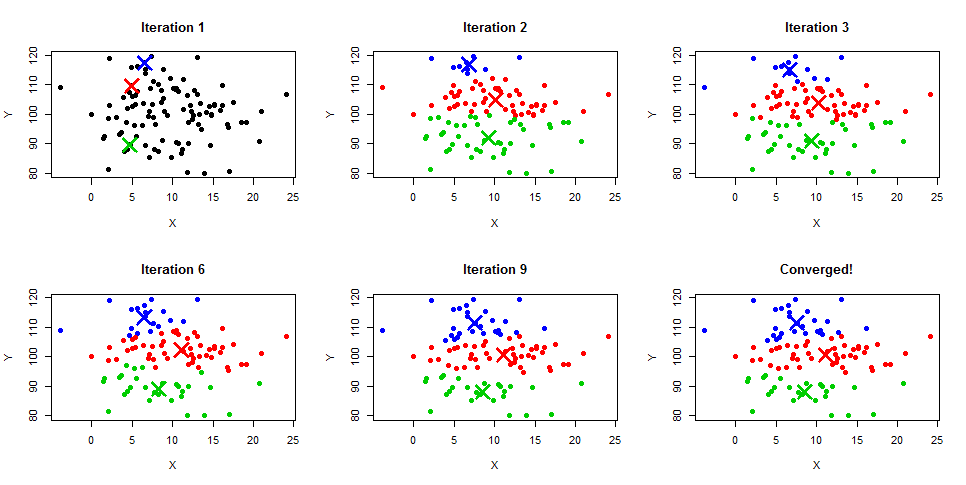

K-Means clustering algorithm is easily the most popular and widely used algorithm for clustering tasks. It is primarily because of the intuition and the ease of implementation. It is a centroid-based algorithm where the user must define the required number of clusters it wants to create.

This normally comes from business use-case or by trying different values for the number of clusters and then evaluating the output.

K-Means clustering is an iterative algorithm that creates non-overlapping clusters meaning each instance in your dataset can only belong to one cluster exclusively. The easiest way to get the intuition of the K-Means algorithm is to understand the steps along with the example diagram below. You can also get a detailed description of the process in our K-Means Clustering in Python and K-Means Clustering in R tutorials.

- User specifies the number of clusters.

- Initialize centroids randomly based on the number of clusters. In the diagram below in Iteration 1, notice three centroids are initialized randomly in blue, red, and green colors.

- Calculate the distance between data points and each centroid and assign each data point to the nearest centroids.

- Recalculate the mean of the centroid based on all the assigned data points, and this will change the position of the centroid, as you can see in Iteration 2 - 9, until it finally converges.

- Iteration keeps on going until there is no change to the centroid's mean or a parameter max_iter is reached, which is the maximum number of the iterations as defined by the user during training. In scikit-learn, max_iter by default is set to 300.

Image Source: https://www.learnbymarketing.com/wp-content/uploads/2015/01/method-k-means-steps-example.png

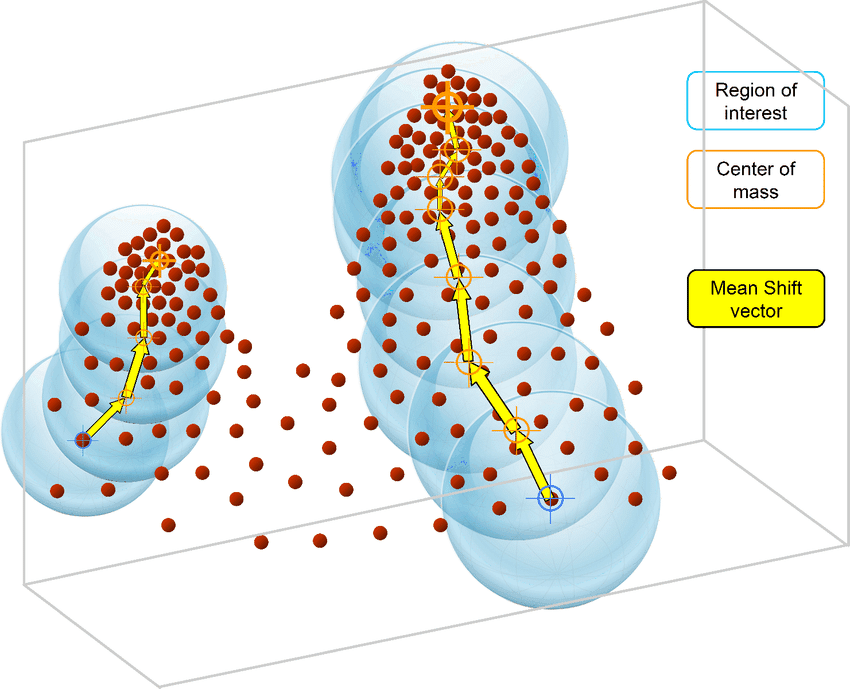

MeanShift

Unlike the K-Means algorithm, MeanShift algorithm does not require specifying the number of clusters. The algorithm itself automatically determines the number of clusters, which is a pretty big advantage over K-Means if you are not sure about patterns in data.

MeanShift is also based on centroids and iteratively assigns each data point to clusters. The most common use case for MeanShift clustering is image segmentation tasks.

The MeanShift algorithm is based on kernel density estimation. Similar to the K-Means algorithm, MeanShift algorithms iteratively assigns each data point towards the closest cluster centroid which are initialized randomly and each point are iteratively moved in the space based on where the most points are i.e. the Mode (mode is the highest density of data points in the region, in the context of the MeanShift).

This is why the MeanShift algorithm is also known as the Mode-seeking algorithm. The steps of the MeanShift algorithm are as follows:

- Pick any random point, and create a window around that random point.

- Calculate the mean of all the points inside this window.

- Shift the window by following the direction of mode.

- Repeat the steps till convergence.

DBSCAN

DBSCAN or Density-Based Spatial Clustering of Applications with Noise is an unsupervised clustering algorithm that works on the premise that clusters are dense spaces in the region separated by lower-density regions.

The biggest advantage of this algorithm over K-Means and MeanShift is that it is robust to outliers meaning it will not include outliers data points in any cluster.

DBSCAN algorithms require only two parameters from the user:

- The radius of the circle to be created around each data point, also known as `epsilon`

- minPoints which defines the minimum number of data points required inside that circle for that data point to be classified as a Core point.

Every data point is surrounded by a circle with a radius of epsilon, and DBSCAN identifies them as being either a Core point, Border point, or Noise point. A data point is considered to be a Core point if the circle that surrounds it has a minimum number of points specified by minPoints parameter.

It is considered a Border Point if the number of points is lower than the minimum required, and it is considered Noise if there are no additional data points located within an epsilon radius of any data point. Noise data points are not categorized in any cluster (basically, they are outliers).

Some of the common use-cases for DBSCAN clustering algorithm are:

- It performs great at separating clusters of high density versus low density;

- It works great on non-linear datasets; and

- It can be used for anomaly detection as it separates out the noise points and do not assign them to any cluster.

Comparing DBSCAN with K-Means algorithms, the most common differences are:

- K-Means algorithm cluster all the instances in the datasets whereas DBSCAN doesn’t assign noise points (outliers) to a valid cluster

- K-Means has difficulty with non-global clusters whereas DBSCAN can handle that smoothly

- K-Means algorithm makes assumptions that all data points in the dataset come from a gaussian distribution whereas DBSCAN makes no assumption about the data.

You can learn more about DBSCAN in Python in our tutorial.

Image Souce: https://miro.medium.com/proxy/1*tc8UF-h0nQqUfLC8-0uInQ.gif

Hierarchical Clustering

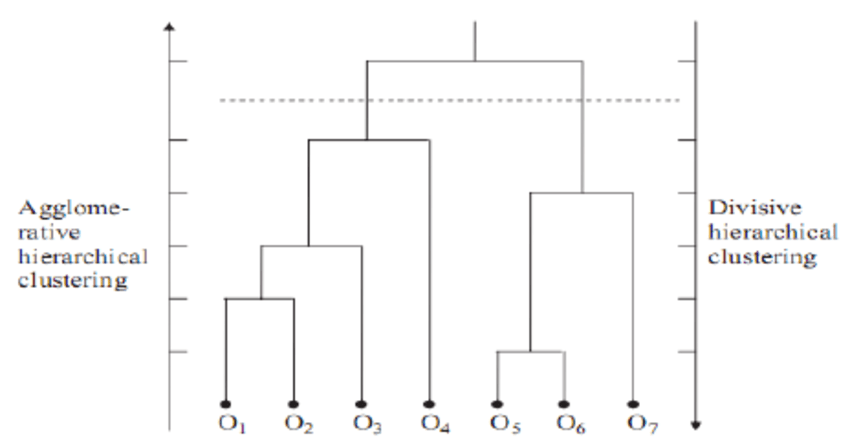

Hierarchical clustering is a method of clustering that builds a hierarchy of clusters. There are two types of this method.

- Agglomerative: This is a bottom-up approach where each observation is treated as its own cluster in the beginning and as we move from bottom to top, each observation is merged into pairs, and pairs are merged into clusters.

- Divisive: This is a "top-down" approach: all observations start in one cluster, and splits are performed recursively as we move from top to bottom.

When it comes to analyzing data from social networks, hierarchical clustering is by far the most common and popular method of clustering. The nodes (branches) in the graph are compared to each other depending on the degree of similarity that exists between them. By linking together smaller groups of nodes that are related to one another, larger groupings may be created.

The biggest advantage of hierarchical clustering is that it is easy to understand and implement. Usually, the output of this clustering method is analyzed in an image such as below. It is called a Dendrogram.

You can learn more about hierarchical clustering and K-Means clustering in our hierarchical clustering in Python tutorial.

BIRCH

BIRCH stands for Balanced Iterative Hierarchical Based Clustering. It is used on very large datasets where K-Means cannot practically scale. BIRCH algorithm divides large data into small clusters and tries to retain the maximum amount of information possible. Smaller groups are then clustered for a final output instead of clustering the large datasets directly.

BIRCH is often used to supplement other clustering algorithms by generating a summary of the information that the other clustering algorithms can utilize. Users have to define the number of clusters for training the BIRCH algorithm, similar to how we define it in K-Means.

One of the benefits of using BIRCH is that it can progressively and dynamically cluster multi-dimensional data points. This is done to create the highest quality clusters under a given memory and time constraints. In most cases, BIRCH just needs to do one search across the database, which makes BIRCH scalable.

The most common use-case of BIRCH clustering algorithm is that it is a memory-efficient alternative to KMeans that can be used to cluster large datasets that cannot be handled through KMeans due to memory or compute limitations.

Conclusion

Clustering is a very useful machine learning technique, but it is not as straightforward as some of the supervised learning use-cases like classification and regression. It is mostly because the performance evaluation and assessing the quality of the model is hard as well as there are some critical parameters such as the number of clusters that the user must define correctly to get meaningful results.

However, there are tons of use-cases of clustering in a wide range of industries, and it is an important skill even for data scientists, machine learning engineers, and data analysts.

If you would like to learn more about Clustering and unsupervised machine learning and learn the implementation using Python and R language, the courses below can help you make progress:

Frequently Asked Questions (FAQs)

Is Clustering unsupervised or supervised machine learning?

Clustering is an unsupervised machine learning technique. It does not require labeled data for training.

Do we need labeled data for Clustering?

No, we do not need labeled data for clustering algorithms. If you have labeled data, you need a supervised classification algorithm.

Can I do clustering on categorical data?

Yes, just like supervised machine learning, if you have categorical features in your data, you have to encode them with techniques like one-hot-encoding. Some algorithms, like K-Modes are designed to accept categorical data directly without any encoding.

Is clustering machine learning?

Yes, clustering is machine learning. Specifically, unsupervised machine learning.

Is clustering descriptive analytics or predictive?

Clustering can be used for both descriptive and predictive analytics. It is more commonly used around Exploratory Data Analysis which is descriptive analytics.

Can we measure the performance of clustering algorithms?

There isn’t a sure way of measuring the performance of clustering algorithms like you have in supervised machine learning (AUC, Accuracy, R2, etc.). The quality of the model depends on the interpretation of output and the use case. However, there are some workaround metrics such as Homogeneity Score, Silhouette Score, etc.

Can we use clustering for feature engineering in supervised machine learning?

Yes, clustering algorithms assign labels in terms of groups in your dataset. At the end of the day, it is a new categorical column in your dataset. So, clustering is often used for feature engineering in supervised learning tasks.