Good project management can increase efficiency and productivity and reduce the risk of failure. Unfortunately, data science projects can be especially tricky to manage, since they involve stakeholders across many teams and have to juggle both business and technical goals.

Project management frameworks can provide guidance to help you plan, allocate resources, and execute tasks to achieve your goals on time and within budget.

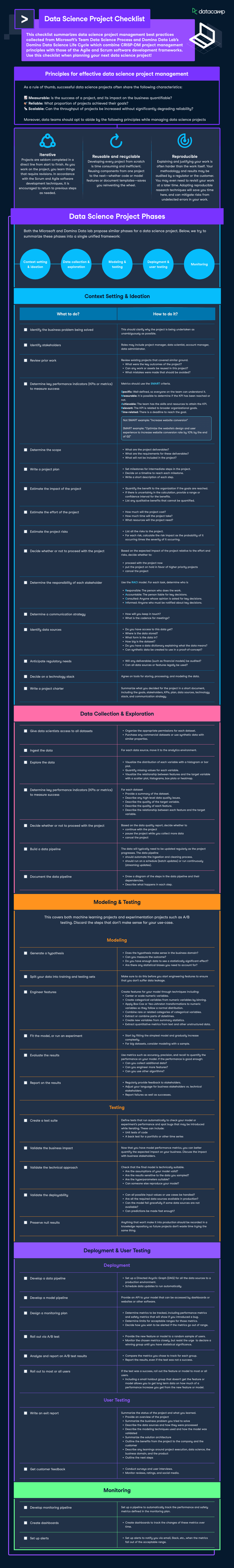

This infographic synthesizes the key points from two leading project management frameworks - Microsoft’s Team Data Science Process and Domino Data Lab's Domino Data Science Life Cycle - in a handy checklist that you can use for your own projects.

How to use this cheat sheet

This cheat sheet summarizes data science project management best practices collected from Microsoft’s Team Data Science Process and Domino Data Lab's Domino Data Science Life Cycle, combining CRISP-DM principles with those of the Agile and Scrum software development frameworks. Use this checklist when planning your next data science project!

Principles for effective data science project management

As a rule of thumb, successful data science projects often share the following characteristics:

- Measurable: Are the success of a project and its impact on the business quantifiable?

- Reliable: What proportion of projects achieved their goals?

- Scalable: Can the throughput of projects be increased without significantly degrading reliability?

Moreover, data teams should follow these principles while managing data science projects:

- Iterate: Projects are seldom completed in a direct line from start to finish. As you work on the project, you learn things that require revisions. In keeping with Agile and Scrum practice, return to previous steps as needed.

- Reuse and recycle: Developing every project from scratch is time-consuming and inefficient. Reusing components from one project to the next (code, model features, or document templates) saves you from reinventing the wheel.

- Ensure reproducibility: Explaining and justifying your work is often harder than the work itself. Your methodology and results may be audited by a regulator or the customer. You may even need to revisit your work at a later time. Adopting reproducible research techniques will save you time here and can mitigate risks from undetected errors in your work.
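One lightweight way to support reproducibility is to store, next to each result, the facts needed to regenerate it. The sketch below is only an illustration and is not prescribed by either framework: `build_run_manifest`, `run_analysis`, and the manifest fields are hypothetical names, and it assumes that a fixed random seed plus a hash of the input data are enough to identify a run.

```python
import hashlib
import json
import platform
import random


def build_run_manifest(data_bytes: bytes, seed: int) -> dict:
    """Collect the facts needed to rerun an analysis (hypothetical helper)."""
    return {
        "seed": seed,
        "python_version": platform.python_version(),
        # Hash of the raw input so a later audit can confirm the same data was used.
        "data_sha256": hashlib.sha256(data_bytes).hexdigest(),
    }


def run_analysis(data_bytes: bytes, seed: int) -> dict:
    """Toy analysis: draw a seeded sample of byte positions from the input."""
    rng = random.Random(seed)  # local generator, so global random state is untouched
    sample = rng.sample(range(len(data_bytes)), k=3)
    return {"manifest": build_run_manifest(data_bytes, seed), "sample": sample}


# Made-up input data for illustration.
data = b"customer_id,churned\n1,0\n2,1\n3,0\n"
first = run_analysis(data, seed=42)
second = run_analysis(data, seed=42)

print(json.dumps(first["manifest"], indent=2))
print("Same result on rerun:", first["sample"] == second["sample"])
```

Saving the manifest with the project's outputs gives an auditor, or your future self, a starting point for rerunning the work with the same seed and the same data.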

Data Science Project Checklist

Both Microsoft and Domino Data Lab propose similar phases for a data science project. Below, we summarize these phases in a single unified framework:

Context Setting & Ideation

| What to do? | How to do it? |
| --- | --- |
| Identify the business problem being solved | This should clarify why the project is being undertaken as unambiguously as possible. |
| Identify stakeholders | Roles may include project manager, data scientist, account manager, data administrator. |
| Review prior work | Review existing projects that covered similar ground. |
| Determine key performance indicators (KPIs or metrics) to measure success | Metrics should use the SMART criteria. Not SMART example: "Increase website conversion". SMART example: "Optimize the website's design and user experience to increase website conversion rate by 10% by the end of Q2". |
| Determine the scope | |
| Write a project plan | |
| Estimate the impact of the project | |
| Estimate the effort of the project | |
| Estimate the project risks | |
| Decide whether or not to proceed with the project | Based on the expected impact of the project relative to the effort and risks, decide whether to |
| Determine the responsibility of each stakeholder | Use the RACI model. For each task, determine who is Responsible, Accountable, Consulted, and Informed. |
| Determine a communication strategy | |
| Identify data sources | |
| Anticipate regulatory needs | |
| Decide on a technology stack | |
| Write a project charter | |
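A RACI assignment can be kept as plain data and checked automatically. The following is a minimal sketch, not part of either framework: the tasks, the stakeholder assignments, and the `raci_problems` helper are hypothetical, and the rule that each task has exactly one Accountable person and at least one Responsible person is a common convention that is assumed here.

```python
from collections import Counter

# Hypothetical RACI matrix: task -> {stakeholder: role letter}.
# R = Responsible, A = Accountable, C = Consulted, I = Informed.
raci = {
    "Define KPIs": {"project manager": "A", "data scientist": "R", "account manager": "C"},
    "Ingest data": {"data administrator": "R", "data scientist": "C"},
}


def raci_problems(matrix: dict) -> list:
    """Return a list of human-readable problems found in the matrix."""
    problems = []
    for task, assignments in matrix.items():
        counts = Counter(assignments.values())
        unknown = set(counts) - set("RACI")
        if unknown:
            problems.append(f"{task}: unknown role(s) {sorted(unknown)}")
        # Assumed convention: exactly one Accountable person per task.
        if counts["A"] != 1:
            problems.append(f"{task}: expected exactly one Accountable, found {counts['A']}")
        # Assumed convention: at least one Responsible person per task.
        if counts["R"] < 1:
            problems.append(f"{task}: no one is Responsible")
    return problems


for problem in raci_problems(raci):
    print(problem)
```

Here the second task is flagged because nobody is marked Accountable for it, which is the kind of gap that is easier to catch before the project charter is signed off.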

Data Collection & Exploration

| What to do? | How to do it? |
| --- | --- |
| Give data scientists access to all datasets | |
| Ingest the data | |
| Explore the data | |
| Write a data quality report | For each dataset |
| Decide whether or not to proceed with the project | Based on the data quality report, decide whether to |
| Build a data pipeline | The data will typically need to be updated regularly as the project progresses. The data pipeline |
| Document the data pipeline | |
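As a rough illustration of what a data quality report might contain, the sketch below counts missing values, distinct values, and duplicate rows for a small in-memory dataset. The specific checks, the `data_quality_report` function, and the sample rows are assumptions made for this example; the checklist itself does not say which checks to run.

```python
def data_quality_report(rows: list, columns: list) -> dict:
    """Summarize missing and distinct values per column, plus duplicate rows."""
    report = {"row_count": len(rows), "columns": {}}
    for col in columns:
        values = [row.get(col) for row in rows]
        # Treat None and the empty string as missing (an assumption for this sketch).
        missing = sum(1 for v in values if v is None or v == "")
        report["columns"][col] = {
            "missing": missing,
            "missing_pct": round(100 * missing / len(rows), 1) if rows else 0.0,
            "distinct": len({v for v in values if v is not None and v != ""}),
        }
    # Count rows that exactly repeat an earlier row.
    seen = set()
    duplicates = 0
    for row in rows:
        key = tuple(row.get(col) for col in columns)
        if key in seen:
            duplicates += 1
        seen.add(key)
    report["duplicate_rows"] = duplicates
    return report


# Made-up rows for illustration.
rows = [
    {"customer_id": 1, "plan": "basic", "monthly_spend": 20.0},
    {"customer_id": 2, "plan": "", "monthly_spend": 35.5},
    {"customer_id": 3, "plan": "pro", "monthly_spend": None},
    {"customer_id": 1, "plan": "basic", "monthly_spend": 20.0},
]
report = data_quality_report(rows, ["customer_id", "plan", "monthly_spend"])
for column, stats in report["columns"].items():
    print(column, stats)
print("duplicate rows:", report["duplicate_rows"])
```

A summary like this gives the team concrete numbers to discuss when deciding whether the data is good enough to continue.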

Modeling & Testing

This covers both machine learning-based projects and experimentation projects such as A/B testing. Discard the steps that don't make sense for your use case.

Modeling

| What to do? | How to do it? |
| --- | --- |
| Generate a hypothesis | |
| Split your data into training and testing sets | Make sure to do this before you start engineering features to ensure that you don't suffer data leakage. |
| Engineer features | Create features for your model through techniques including: |
| Fit the model, or run an experiment | |
| Evaluate the results | Use metrics such as accuracy, precision, and recall to quantify the performance of your model. If the performance is good enough: |
| Report on the results | |
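The advice to split before engineering features, and the accuracy, precision, and recall metrics, can be sketched in plain Python. This is an illustrative example under stated assumptions: the data is made up, the "model" is a stand-in threshold rule rather than a fitted model, and the helper names (`train_test_split`, `fit_scaler`, `scale`, `classification_metrics`) are defined here and are not taken from any library.

```python
import random
import statistics


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and split them before any feature engineering."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def fit_scaler(values):
    """Learn standardization parameters from the training values only."""
    return statistics.mean(values), statistics.pstdev(values) or 1.0


def scale(value, mean, std):
    """Apply previously learned standardization parameters to one value."""
    return (value - mean) / std


def classification_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Toy data: (monthly_spend, churned). Values are made up for illustration.
rows = [(10, 0), (12, 0), (15, 0), (18, 0), (40, 1), (45, 1), (50, 1), (55, 1)]
train, test = train_test_split(rows, test_fraction=0.25, seed=0)

# Scaling parameters come from the training set alone, then get applied to the test set.
mean, std = fit_scaler([x for x, _ in train])

# Stand-in "model": predict churn when the scaled spend is above zero.
y_true = [label for _, label in test]
y_pred = [1 if scale(x, mean, std) > 0 else 0 for x, _ in test]
print(classification_metrics(y_true, y_pred))
```

Because the mean and standard deviation are computed from `train` only, no information about the test rows reaches the feature engineering step, which is the leakage the checklist warns about.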

Testing

| What to do? | How to do it? |
| --- | --- |
| Create a test suite | Define tests that run automatically to check your model or experiment's performance and spot bugs that may be introduced while iterating. These can include: |
| Validate the business impact | Now that you have model performance metrics, you can better quantify the expected impact on your business. Discuss the impact with business stakeholders. |
| Validate the technical approach | Check that the final model is technically suitable. |
| Validate the deployability | |
| Preserve null results | Anything that won't make it into production should be recorded in a knowledge repository so future projects don't waste time trying the same thing. |
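An automated test suite for a model can be small. The sketch below uses Python's built-in `unittest` module. The prediction function, the holdout examples, the 0.75 accuracy threshold, and the three checks themselves are hypothetical choices made for illustration, since the checklist does not specify which tests to write.

```python
import unittest


def predict_churn_probability(monthly_spend: float) -> float:
    """Hypothetical stand-in for a trained model's prediction function."""
    return min(1.0, max(0.0, monthly_spend / 100))


class ModelChecks(unittest.TestCase):
    def test_output_is_a_probability(self):
        # Predictions should stay in [0, 1], even for unusual inputs.
        for spend in (-5.0, 0.0, 42.0, 1_000.0):
            self.assertTrue(0.0 <= predict_churn_probability(spend) <= 1.0)

    def test_prediction_is_deterministic(self):
        # The same input should always produce the same output.
        self.assertEqual(predict_churn_probability(42.0), predict_churn_probability(42.0))

    def test_accuracy_meets_agreed_threshold(self):
        # Hypothetical holdout examples and a hypothetical 0.75 threshold.
        holdout = [(10.0, 0), (20.0, 0), (80.0, 1), (90.0, 1)]
        hits = sum((predict_churn_probability(x) >= 0.5) == bool(y) for x, y in holdout)
        self.assertGreaterEqual(hits / len(holdout), 0.75)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(ModelChecks)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print("All checks passed:", result.wasSuccessful())
```

Running a suite like this on every change makes it harder for a regression to slip in unnoticed while you iterate on features or model settings.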

Deployment & User Testing

Deployment

| What to do? | How to do it? |
| --- | --- |
| Develop a data pipeline | |
| Develop a model pipeline | |
| Operationalize the model | Provide an API to your model that can be accessed by dashboards or websites or other software. |
| Design a monitoring plan | |
| Roll out via A/B test | |
| Analyze and report on A/B test results | |
| Roll out to most or all users | If the test was a success, roll the feature or model out to most or all users. |
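One common way to analyze an A/B test on conversion rates is a two-proportion z-test. The sketch below is one possible approach, not the method either framework mandates. It assumes large, independent samples, the counts are made up, and `two_proportion_z_test` is a name defined here for the example.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates between groups A and B."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return {"lift": p_b - p_a, "z": z, "p_value": p_value}


# Made-up counts: control (A) versus the new model (B).
result = two_proportion_z_test(conv_a=200, n_a=2000, conv_b=260, n_b=2000)
print(f"lift={result['lift']:.3f}  z={result['z']:.2f}  p={result['p_value']:.4f}")
```

Whether a given p-value or lift counts as a success should be agreed with stakeholders before the test starts, alongside the KPIs defined during ideation.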

User Testing

| What to do? | How to do it? |
| --- | --- |
| Write an exit report | Summarize the status of the project and what you learned. |
| Get customer feedback | |

Monitoring

| What to do? | How to do it? |
| --- | --- |
| Develop monitoring pipeline | Set up a pipeline to automatically track the performance and safety metrics defined in the monitoring plan. |
| Create dashboards | Create dashboards to track the changes in these metrics over time. |
| Set up alerts | Set up alerts to notify you via email, Slack, etc., when the metrics fall out of the acceptable range. |
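The alerting step comes down to comparing the latest metrics with the acceptable ranges from the monitoring plan. The following is a minimal sketch with hypothetical metric names, ranges, and values. The `notify` function is a placeholder that only prints, and a real setup would replace it with an email or chat integration.

```python
# Hypothetical acceptable ranges, as they might appear in a monitoring plan.
ACCEPTABLE_RANGES = {
    "precision": (0.80, 1.00),
    "recall": (0.70, 1.00),
    "latency_ms": (0.0, 250.0),
}


def find_alerts(metrics: dict, ranges: dict) -> list:
    """Return one message per metric that is missing or outside its range."""
    alerts = []
    for name, (low, high) in ranges.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{name}: no value reported")
        elif not low <= value <= high:
            alerts.append(f"{name}: {value} outside [{low}, {high}]")
    return alerts


def notify(message: str) -> None:
    """Placeholder: swap in an email or chat integration here."""
    print(f"ALERT {message}")


# Made-up latest metric values for illustration.
latest = {"precision": 0.84, "recall": 0.61, "latency_ms": 120.0}
for alert in find_alerts(latest, ACCEPTABLE_RANGES):
    notify(alert)
```

Treating a missing metric as an alert is a deliberate choice in this sketch, since a silent monitoring pipeline can hide a problem as effectively as a bad value.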