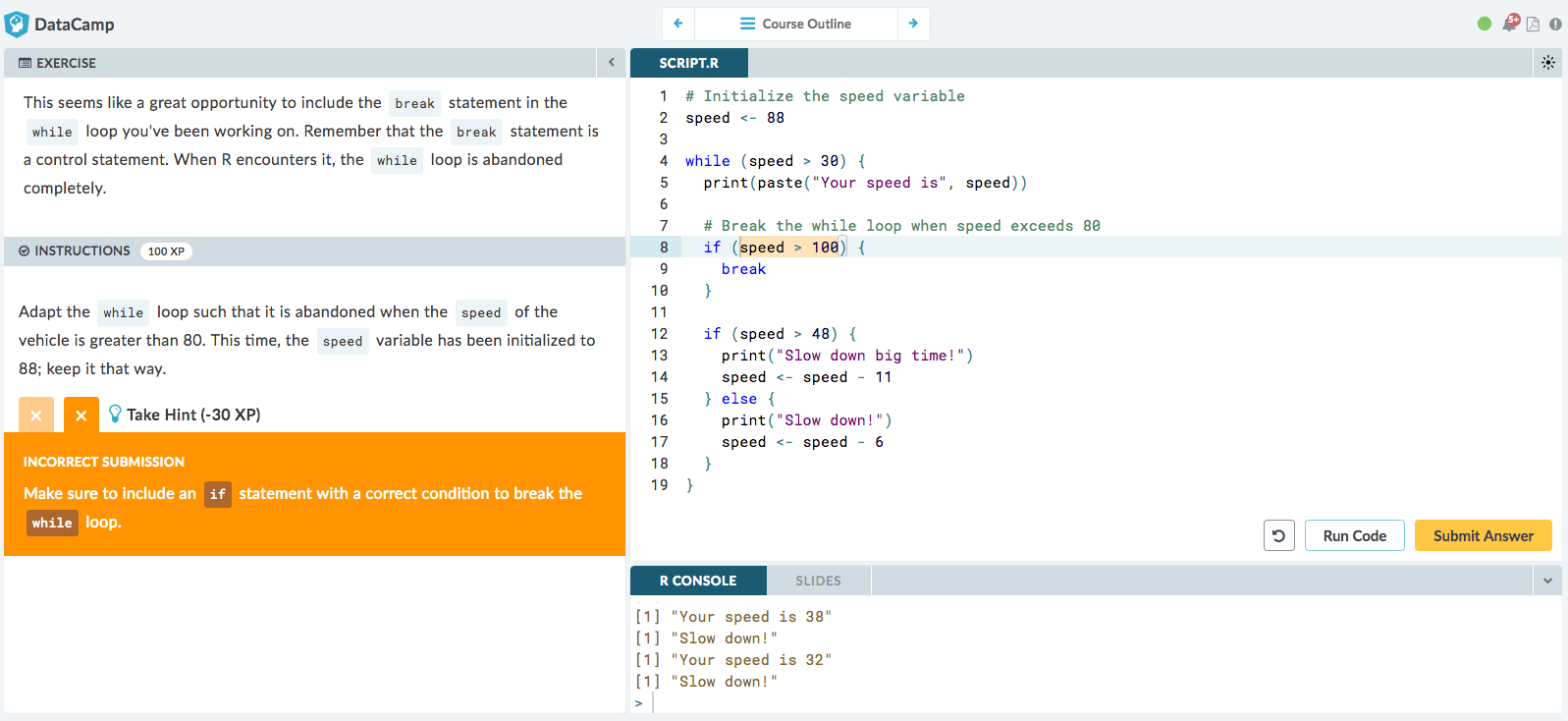

Immediate and personalized feedback has been central to the learning experience on DataCamp since we launched the first courses. If students submit code that contains a mistake, they are told where they made the mistake and how they can fix it. You can play around with it in our free Introduction to R course. The screenshot below is from our Intermediate R course.

To check submissions and generate feedback, every exercise on DataCamp features a so-called Submission Correctness Test, or SCT.

The SCT is a script of custom tests that assesses the code students submitted and the output and variables they created with their code. For every language that we teach on DataCamp, we have built a corresponding open-source package to easily verify all these elements of a student submission. For R exercises, this package is called testwhat. Over the years, we've added a lot of functions to:

- check variable assignment

- check function calls and results of function calls

- check if statements, for loops, and while loops

- check function definition

- check whether the proper packages are loaded

- check the output the student generated

- check ggplot and ggvis plotting calls

- ...

When these checking functions spot a mistake, they will automatically generate a meaningful feedback message that points students to their mistakes. You can also specify custom feedback messages that override these automatically generated messages.

Historically, testwhat was closely linked with our proprietary backend that executes R code on DataCamp's servers. Even though testwhat has always been open source, it didn't work well without this custom backend, so it could only be used in the context of DataCamp. Today, however, testwhat can be used independently and supports other use cases as well. You can leverage everything testwhat has to offer to test student submissions, even when your format of teaching is very different from DataCamp's. You can install the package from GitHub:

library(remotes) # devtools works fine too

install_github('datacamp/testwhat')

As a quick demo, assume that you ask students to create a variable x equal to 5. A string version of the ideal solution would be something like this:

solution_code <- 'x <- 5'

Now suppose that the student submitted a script where x is incorrectly set to be a vector of two values, which can be coded up as follows:

student_code <- 'x <- c(4, 5)'

testwhat features a function called setup_state() that executes a student submission and a solution script in separate environments and captures the output and errors the student code generated. All of this information is stored in the so-called exercise state that you can access with ex():

library(testwhat)

setup_state(stu_code = student_code,

sol_code = solution_code)

ex()

## <RootState>
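To make the idea of separate environments more concrete, here is a small base-R sketch that evaluates a student string and a solution string in two fresh environments and captures what the student code prints. It only illustrates the concept and is not how testwhat implements setup_state(); the helper name run_in_env() is hypothetical.

```r
# Hypothetical helper (not part of testwhat): run a string of R code in its
# own environment and keep both the environment and the printed output.
run_in_env <- function(code) {
  env <- new.env(parent = globalenv())
  output <- capture.output(
    eval(parse(text = code), envir = env)
  )
  list(env = env, output = output)
}

student  <- run_in_env('x <- c(4, 5)\nprint(x)')
solution <- run_in_env('x <- 5')

# Each script has its own x; neither overwrites the other.
get('x', envir = student$env)   # the student's value
get('x', envir = solution$env)  # the solution's value
student$output                  # what the student code explicitly printed
```

Keeping the two environments apart is what makes it possible to compare a student's x with the solution's x without one clobbering the other.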

This exercise state can be passed to the wide range of checking functions that testwhat features with the piping syntax from magrittr. To check whether the student defined a variable x, you can use check_object():

ex() %>% check_object('x')

## <ObjectState>

This code runs fine, because the student actually defined a variable x. To continue and check whether the student defined the variable x correctly, you can use check_equal():

ex() %>% check_object('x') %>% check_equal()

## Error in check_that(is_equal(student_obj, solution_obj, eq_condition), : The contents of the variable `x` aren't correct. It has length 2, while it should have length 1.

This errors out. check_equal() detects that the value of x in the student environment (a vector) does not match the value of x in the solution environment (a single number). Notice how the error message contains a human-readable message that describes this mistake.

The above example was interactive: you set up a state and then typed chains of check functions. On a higher level, you can use run_until_fail() to wrap the checking code. This will run your battery of tests until one of them fails:

library(testwhat)

setup_state(stu_code = 'x <- 4',

sol_code = 'x <- 5')

res <- run_until_fail({

ex() %>% check_object('x') %>% check_equal()

})

res$correct

## [1] FALSE

res$message

## [1] "The contents of the variable `x` aren't correct."
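The automatically generated message can also be replaced with your own wording. The sketch below assumes the undefined_msg argument of check_object() and the incorrect_msg argument of check_equal(); check the function reference for your installed version of testwhat. The message texts are just examples, and the exact string in res$message may include additional automatically generated context.

```r
library(testwhat)

setup_state(stu_code = 'x <- 4',
            sol_code = 'x <- 5')

res <- run_until_fail({
  ex() %>%
    # Custom message if x does not exist in the student environment
    check_object('x', undefined_msg = 'Did you define a variable `x`?') %>%
    # Custom message if x exists but differs from the solution
    check_equal(incorrect_msg = 'Have another look at the value you assigned to `x`.')
})

res$correct
res$message
```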

This basic approach of building an exercise state from a student submission and a solution, and then using run_until_fail() to execute a battery of tests, can easily be embedded in an autograding workflow that fits your needs. Suppose your students have submitted their assignments as R scripts that you have downloaded onto your computer into the folder submissions (for this demo, we have prepopulated the folder with some random data). Each R script contains one student submission:

dir('submissions')

## [1] "student_a.R" "student_b.R" "student_c.R" "student_d.R" "student_e.R"

## [6] "student_f.R" "student_g.R" "student_h.R" "student_i.R" "student_j.R"

readLines('submissions/student_a.R')

## [1] "x <- 4"

readLines('submissions/student_b.R')

## [1] "x <- 5"

We can now cycle through all of these submissions, and generate a data.frame that specifies whether or not the submission was correct per student:

library(testwhat)

folder_name <- 'submissions'

all_files <- file.path(folder_name, dir(folder_name))

student_results <- sapply(all_files, function(file) {

student_code <- paste(readLines(file), collapse = '\n') # one string, also for multi-line scripts

setup_state(sol_code = 'x <- 5',

stu_code = student_code)

res <- run_until_fail({

ex() %>% check_object('x') %>% check_equal()

})

res$correct

})

results_df <- data.frame(name = all_files, correct = student_results,

row.names = NULL, stringsAsFactors = FALSE)

results_df

## name correct

## 1 submissions/student_a.R FALSE

## 2 submissions/student_b.R TRUE

## 3 submissions/student_c.R TRUE

## 4 submissions/student_d.R TRUE

## 5 submissions/student_e.R FALSE

## 6 submissions/student_f.R TRUE

## 7 submissions/student_g.R FALSE

## 8 submissions/student_h.R FALSE

## 9 submissions/student_i.R TRUE

## 10 submissions/student_j.R TRUE
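Once you have such a data.frame, plain base R is enough to summarize it. The sketch below rebuilds the results_df printed above as a stand-in, so it runs without the submissions folder; the output file name is a hypothetical choice.

```r
# Stand-in for the results_df built above, using the values printed in the post.
results_df <- data.frame(
  name = file.path('submissions', paste0('student_', letters[1:10], '.R')),
  correct = c(FALSE, TRUE, TRUE, TRUE, FALSE, TRUE, FALSE, FALSE, TRUE, TRUE),
  stringsAsFactors = FALSE
)

# Share of correct submissions
mean(results_df$correct)

# Submissions that need a second look
needs_review <- results_df$name[!results_df$correct]
needs_review

# Save the overview to a CSV file (written to a temporary directory here)
out_file <- file.path(tempdir(), 'results.csv')
write.csv(results_df, out_file, row.names = FALSE)
```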

This small demo is still a bit rough, but I hope it gets the point across: with some R fiddling to build the appropriate inputs for setup_state(), you can put all of testwhat's checking functions to work. The tests you specify in run_until_fail() will both verify the students' work and generate meaningful feedback to point them to the mistakes they are making.

To learn more about testwhat, you can visit the GitHub repo or the package documentation, generated with pkgdown. It features both vignettes that outline how to use certain checking functions and a function reference that describes all the arguments you can specify. Don't hesitate to raise issues on GitHub or reach out through content-engineering@datacamp.com. We love feedback! Happy teaching!

This blog was generated with RMarkdown. You can find the source here.