Neural Networks (NN) are computational models inspired by the human brain's interconnected neuron structure. They are fundamental to many machine learning algorithms today, allowing computers to recognize patterns and make decisions based on data.

Neural Networks Explained

A neural network is a model made of many simple, interconnected units that learns to recognize patterns and relationships in data. Its design is loosely inspired by the way neurons in the human brain connect and pass signals to one another. Let's break this down:

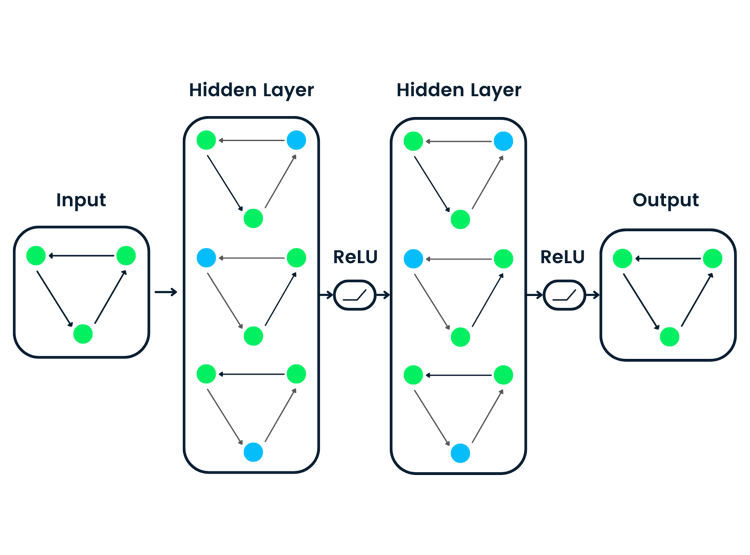

At its core, a neural network consists of neurons, the fundamental units loosely analogous to brain cells. Each neuron receives inputs, processes them, and produces an output. Neurons are organized into distinct layers: an Input Layer that receives the data, one or more Hidden Layers that transform it, and an Output Layer that provides the final decision or prediction.
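
To make the layer structure concrete, here is a minimal sketch in plain Python of one input passing forward through a tiny network. The 2-3-1 layer sizes, the weight values, and the use of a sigmoid activation function are assumptions chosen for illustration; frameworks such as Keras perform the same arithmetic with optimized matrix operations.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of all inputs,
    adds its bias, and passes the result through an activation function."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(z))
    return outputs

def forward(inputs, layers):
    """Pass data from the input layer through each layer in turn to the output layer."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
# Each layer is (weights, biases); the values are arbitrary, for illustration only.
hidden = ([[0.5, -0.2], [0.1, 0.8], [-0.7, 0.3]], [0.0, 0.1, -0.1])
output = ([[1.0, -1.5, 0.6]], [0.2])

prediction = forward([1.0, 2.0], [hidden, output])
print(prediction)  # a list holding one value between 0 and 1
```

In a trained network the weights would come from the learning process described next rather than being written by hand.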

The adjustable parameters of a network are its weights and biases. Each weight scales the strength of one input signal, and each bias shifts a neuron's total input up or down. As the network learns, these values are adjusted, so together they act as the network's evolving knowledge base.

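Before training starts, you choose certain settings known as hyperparameters. Unlike weights and biases, they are not learned from the data; they control factors such as the learning rate (how large each update step is), the number of training epochs, and the size of the network. Choosing them is like configuring a machine before running it.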

During the training phase, the network is presented with data, makes a prediction based on its current knowledge (its weights and biases), and then evaluates how accurate that prediction was. This evaluation uses a loss function, which acts as the network's scorekeeper: it measures how far the prediction is from the actual result. The primary goal of training is to minimize this loss, or error.
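
As a rough illustration of how a loss function keeps score, here is a sketch of two common choices: mean squared error (typical for predicting numbers) and binary cross-entropy (typical for yes/no classification such as cat vs dog). The targets and predictions are made-up values; the point is that better predictions produce a lower loss.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Loss for 0/1 targets; confident wrong probabilities are penalized heavily."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

targets = [1, 0, 1]
good = [0.9, 0.1, 0.8]   # predictions close to the targets
bad = [0.2, 0.7, 0.3]    # predictions far from the targets

print(mean_squared_error(targets, good))  # about 0.02
print(binary_cross_entropy(targets, good) < binary_cross_entropy(targets, bad))  # True
```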

Backpropagation plays a pivotal role in this learning process. Once the loss is computed, backpropagation works backward from the output layer, using the chain rule of calculus to calculate how much each weight and bias contributed to the error (its gradient). These gradients indicate the direction in which each parameter should be adjusted to reduce the error.
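
The chain-rule idea behind backpropagation can be seen on the smallest possible case. This sketch assumes a single sigmoid neuron, one training example, and a squared-error loss; real networks repeat the same calculation layer by layer, and frameworks compute it automatically. The finite-difference check at the end is a common way to confirm that hand-derived gradients are correct.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    """Squared error of a single sigmoid neuron on one example."""
    return (sigmoid(w * x + b) - y) ** 2

def backprop(w, b, x, y):
    """Gradients of the loss with respect to w and b via the chain rule:
    dL/dw = dL/da * da/dz * dz/dw, where z = w*x + b and a = sigmoid(z)."""
    z = w * x + b
    a = sigmoid(z)
    dL_da = 2 * (a - y)       # derivative of (a - y)^2 with respect to a
    da_dz = a * (1 - a)       # derivative of the sigmoid
    dL_dz = dL_da * da_dz
    return dL_dz * x, dL_dz * 1.0   # dz/dw = x, dz/db = 1

w, b, x, y = 0.5, -0.1, 2.0, 1.0
grad_w, grad_b = backprop(w, b, x, y)

# Sanity check: compare with a numerical (central finite-difference) estimate.
h = 1e-6
numeric_w = (loss(w + h, b, x, y) - loss(w - h, b, x, y)) / (2 * h)
print(abs(grad_w - numeric_w) < 1e-6)  # True
```

Both gradients come out negative here because the prediction is below the target, which tells the optimizer to increase `w` and `b`.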

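An optimization algorithm such as gradient descent then uses the gradients computed by backpropagation to update the weights and biases. Imagine navigating hilly terrain while looking for the lowest point: at each step, you move a little in the direction that slopes most steeply downhill. In gradient descent, the terrain is the loss, the downhill direction is the negative gradient, and the size of each step is set by the learning rate.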
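
Here is a minimal gradient descent sketch that learns a weight and a bias for a single linear neuron. The toy data (generated from y = 2x + 1), the mean squared error loss, and the learning rate and epoch values are assumptions for illustration; `learning_rate` and `epochs` are exactly the kind of hyperparameters that are set before training.

```python
def gradient_descent(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w*x + b by repeatedly stepping against the gradient of the MSE loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step downhill: move against the gradient, scaled by the learning rate.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]   # toy data generated from y = 2x + 1
w, b = gradient_descent(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

If the learning rate is too large (above roughly 0.15 for this particular data), the updates overshoot and the loss diverges instead of shrinking, which is why this hyperparameter needs care.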

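Lastly, an essential component of neural networks is the activation function. It takes the weighted sum of a neuron's inputs plus its bias and transforms it into the neuron's output, which determines whether, or how strongly, the neuron "fires". Because common activation functions such as ReLU and sigmoid are nonlinear, they let the network model relationships that a purely linear model could not.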
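
A short sketch of three activation functions applied to the same neuron. The inputs, weights, and bias are arbitrary example values; the step function reflects the classic on/off idea of "activation", while sigmoid and ReLU are the smooth or piecewise-linear functions more commonly used in modern networks.

```python
import math

def step(z):
    """Classic perceptron activation: fire (1) or don't (0)."""
    return 1.0 if z >= 0 else 0.0

def sigmoid(z):
    """Smooth output between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Passes positive values through unchanged and sets negative values to zero."""
    return max(0.0, z)

# A neuron's pre-activation value: weighted sum of inputs plus bias.
inputs, weights, bias = [0.5, -1.0], [0.8, 0.4], 0.1
z = sum(w * x for w, x in zip(weights, inputs)) + bias   # 0.4 - 0.4 + 0.1 = 0.1

for fn in (step, sigmoid, relu):
    print(fn.__name__, fn(z))
```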

To visualize the entire process, think of a neural network trained to recognize handwritten digits. The input layer receives the pixel values of a digit image, the hidden layers transform them, and the output layer predicts which digit from 0 to 9 it shows. Over many training examples, the network compares its predictions with the correct labels and adjusts its weights and biases until it can identify digits confidently.

What are Neural Networks Used for?

Neural networks have a broad spectrum of applications, such as:

- Image recognition. Platforms like Facebook have used neural networks for tasks such as photo tagging. Trained on millions of images, these networks can identify and tag individuals in photos with remarkable accuracy.

- Speech recognition. Virtual assistants like Siri and Alexa use neural networks to understand and process voice commands. By training on vast datasets of human speech across many languages, accents, and dialects, they can understand and respond to user requests in real time.

- Medical diagnosis. In healthcare, neural networks are increasingly used for diagnostics. By analyzing medical images, they can detect anomalies such as tumors and other signs of disease, in some tasks matching or exceeding the accuracy of human experts. This is especially valuable for early disease detection, which can save lives.

- Financial forecasting. Neural networks analyze vast amounts of financial data, from stock prices to global economic indicators, to forecast market movements and help investors make informed decisions.

While neural networks are powerful, they are not a one-size-fits-all solution. Their strength lies in complex tasks that involve large datasets and require pattern recognition or prediction. For simpler tasks, or problems where data is limited, traditional algorithms may be more suitable. For instance, if you're sorting a small list of numbers or searching for a specific item in a short list, a basic algorithm is simpler, faster, and exact, with no training required.

Types of Neural Networks

There are several types of neural networks, each suited to particular tasks and applications, such as:

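- Feedforward Neural Networks. The most straightforward type, in which information moves in only one direction, from the input layer through the hidden layers to the output layer.

- Recurrent Neural Networks (RNN). They contain loops that feed a neuron's output back in as input at the next step, letting information persist over time. This makes them suited to sequential data such as text, speech, or time series.

- Convolutional Neural Networks (CNN). They slide small learned filters across an input to detect local features such as edges and textures, and are primarily used for image recognition tasks.

- Radial Basis Function Neural Networks. They use radial basis functions (for example, Gaussians centered on reference points) as the activation functions of their hidden layer and are commonly used for function approximation problems.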
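
The difference between the first two types is easiest to see side by side. This sketch assumes a single scalar neuron with a tanh activation and arbitrary weights; real RNN cells work on vectors with learned weight matrices, but the feedback loop is the same idea.

```python
import math

def feedforward(sequence, w_input=0.5, bias=0.0):
    """No loop: each output depends only on the input at that step."""
    return [math.tanh(w_input * x + bias) for x in sequence]

def rnn(sequence, w_input=0.5, w_hidden=0.9, bias=0.0):
    """Minimal recurrent neuron: the hidden state h is fed back in at every step,
    so each output depends on the current input and on everything seen before."""
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_input * x + w_hidden * h + bias)
        states.append(h)
    return states

seq = [1.0, 0.0, 0.0]
print(feedforward(seq))  # [0.46..., 0.0, 0.0]: the first input is forgotten immediately
print(rnn(seq))          # later states stay nonzero: information persists
```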

What are the Benefits of Neural Networks?

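- Adaptability. They learn from data and can adapt to new examples without being explicitly programmed with rules.

- Parallel processing. Their computations, largely matrix operations, can run in parallel, so hardware such as GPUs can process many inputs simultaneously.

- Fault tolerance. Because what a network has learned is spread across many weights, losing a few neurons or connections often degrades performance only gradually rather than causing total failure.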

What are the Limitations of Neural Networks?

- Data dependency. They typically require large amounts of (often labeled) data to perform well.

- Opaque nature. They are often called "black boxes" because it is difficult to understand how they arrive at specific decisions.

- Overfitting. They can memorize the training data, including its noise, instead of learning patterns that generalize, which leads to poor performance on new data.

Neural Networks vs Deep Learning

While all deep learning models are neural networks, not all neural networks are deep learning models. Deep learning refers to neural networks with multiple layers, typically several hidden layers between the input and output layers (a common rule of thumb is three or more layers). Stacking layers lets these networks learn increasingly abstract representations from large amounts of data. While a neural network with a single hidden layer can make approximate predictions, additional hidden layers can help refine accuracy. Check out our guide on deep learning vs machine learning in a separate article.

A Beginner's Guide to Building a Neural Network Project

Years ago, I took a Deep Learning course and had my first experience with neural networks. I learned how to build my own image classifier using just a few lines of code and was amazed to see these algorithms accurately classify images.

Nowadays, things have changed, and it's become much easier for beginners to build state-of-the-art deep neural network models using deep learning frameworks like TensorFlow and PyTorch. You no longer need a Ph.D. to build powerful AI.

Here are the steps to build a simple convolutional neural network for classifying cat and dog photos:

- Get a labeled cat and dog image dataset from Kaggle.

- Use Keras as the deep learning framework. I believe it's easier for beginners to understand than PyTorch.

- Import Keras, scikit-learn, and data visualization libraries like Matplotlib.

- Load and preprocess the images using Keras utilities.

- Visualize the data: sample images, labels, and class distributions.

- Augment the data through resizing, rotating, flipping, etc.

- Build a convolutional neural network (CNN) architecture in Keras. Start simple.

- Compile the model by setting the loss function, optimizer, and metrics to monitor.

- Train the model for several epochs (full passes over the training data) to fit it to the data.

- Evaluate the model's accuracy on a held-out test set.

- If needed, improve accuracy by fine-tuning a pre-trained model like ResNet (transfer learning) or by adding layers.

- Save and export the trained Keras model.
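
The augmentation step is worth demystifying. The sketch below assumes a tiny hypothetical grayscale "image" stored as a nested list and uses plain Python, not Keras; in a real Keras project you would typically use built-in preprocessing layers such as `RandomFlip` and `RandomRotation` on image tensors instead. It shows the core idea: each transformed copy is a new training example that keeps the original label.

```python
def flip_horizontal(image):
    """Mirror each row left to right."""
    return [list(reversed(row)) for row in image]

def rotate_90(image):
    """Rotate 90 degrees clockwise: reverse the rows, then read off the columns."""
    return [list(row) for row in zip(*image[::-1])]

def augment(image):
    """Return the original plus simple transformed copies (all share the same label)."""
    return [image, flip_horizontal(image), rotate_90(image)]

# Hypothetical 2x3 grayscale image with pixel intensities from 0 to 255.
img = [[0, 128, 255],
       [64, 32, 16]]

for variant in augment(img):
    print(variant)
```

A cat photo that is mirrored is still a cat, so these copies help the model learn features that do not depend on orientation, which also reduces overfitting on small datasets.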

High-level frameworks like TorchVision, Transformers, and TensorFlow have made building image classifiers easy even for beginners. With just a small labeled dataset and Google Colab, you can start building AI computer vision applications.


FAQs

How do neural networks "learn"?

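Backpropagation calculates how much each weight and bias contributed to the prediction error, and an iterative optimization technique such as gradient descent uses those gradients to adjust the parameters and reduce the error.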

Are neural networks the future of AI?

They are a significant part of AI's future, but not the only component. Other techniques and algorithms are also crucial.

Why are neural networks compared to the human brain?

Because they are inspired by the structure and function of the human brain, especially the way neurons are interconnected.

Do neural networks make decisions on their own?

Not independently. Their outputs are determined by the data they were trained on and the patterns they learned from it.

As a certified data scientist, I am passionate about leveraging cutting-edge technology to create innovative machine learning applications. With a strong background in speech recognition, data analysis and reporting, MLOps, conversational AI, and NLP, I have honed my skills in developing intelligent systems that can make a real impact. In addition to my technical expertise, I am also a skilled communicator with a talent for distilling complex concepts into clear and concise language. As a result, I have become a sought-after blogger on data science, sharing my insights and experiences with a growing community of fellow data professionals. Currently, I am focusing on content creation and editing, working with large language models to develop powerful and engaging content that can help businesses and individuals alike make the most of their data.