
Deep neural networks have changed the landscape of artificial intelligence in the modern era. In recent times, there have been several research advancements in both deep learning and neural networks, which dramatically increase the quality of projects related to artificial intelligence.

These deep neural networks help developers to achieve more sustainable and high-quality results. Hence, they are even replacing several conventional machine learning techniques.

But what exactly are deep neural networks, and why are they a strong choice for a wide array of tasks? And which libraries and tools can you use to get started with deep neural networks?

This article will explain deep neural networks, their library requirements, and how to construct a basic deep neural network architecture from scratch.

What are Deep Neural Networks?

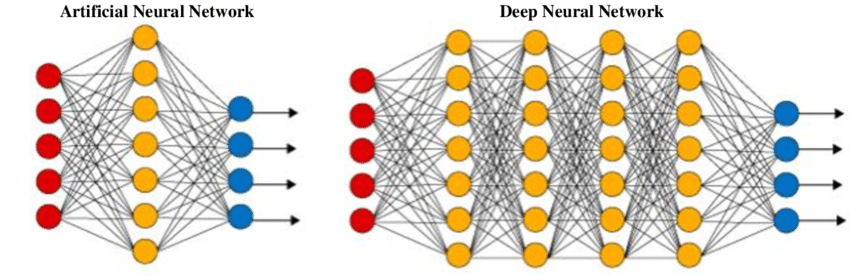

An artificial neural network (ANN), or simple traditional neural network, aims to solve simple tasks with a straightforward network outline. An artificial neural network is loosely inspired by biological neural networks. It is a collection of layers that together perform a specific task, and each layer consists of a collection of nodes that operate together.

These networks usually consist of an input layer, one to two hidden layers, and an output layer. While they can solve easy mathematical problems and basic computing problems, such as logic gates with their respective truth tables, they struggle with complicated image processing, computer vision, and natural language processing tasks.
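To make the "basic gate structures" remark concrete, here is a minimal sketch of a network with one input layer, one hidden layer, and one output layer that reproduces the XOR truth table. The weights and biases are hand-picked for illustration (an assumption of this example, not values learned by training), and the helper names are hypothetical.

```python
def step(value):
    """Threshold activation: output 1 when the weighted sum is positive."""
    return 1 if value > 0 else 0


def neuron(inputs, weights, bias):
    """One node: weighted sum of the inputs plus a bias, then the activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return step(total)


def xor_network(a, b):
    """Input layer (a, b) -> hidden layer (OR, NAND) -> output layer (AND)."""
    hidden_or = neuron([a, b], [1, 1], -0.5)      # hand-picked weights
    hidden_nand = neuron([a, b], [-1, -1], 1.5)   # hand-picked weights
    return neuron([hidden_or, hidden_nand], [1, 1], -1.5)


# Evaluate the network on every row of the truth table.
truth_table = {(a, b): xor_network(a, b) for a in (0, 1) for b in (0, 1)}
print(truth_table)
```

A single neuron can represent AND, OR, or NAND on its own, but XOR needs the hidden layer, which is a small illustration of why extra layers add expressive power.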

For these problems, we utilize deep neural networks, which often have a complex hidden layer structure with a wide variety of different layers, such as convolutional layers, max-pooling layers, dense layers, and other specialized layers. These additional layers help the model to understand problems better and provide good solutions to complex projects. A deep neural network has more layers (more depth) than a simple ANN, and each layer adds complexity to the model while enabling it to process the inputs more effectively and produce a better solution.

Deep neural networks have gained a great deal of traction because of their effectiveness across a wide variety of deep learning projects. Explore the differences between machine learning vs deep learning in a separate article.

Why use a DNN?

Once trained, a well-built deep neural network can achieve the desired results with high accuracy. These networks are popular across deep learning, including computer vision, natural language processing, and transfer learning.

Prominent examples of deep neural networks include object detection models such as YOLO (You Only Look Once), language understanding models such as BERT (Bidirectional Encoder Representations from Transformers), and transfer learning models such as VGG-19, ResNet-50, EfficientNet, and other similar networks for image processing projects.

To understand these deep learning concepts of artificial intelligence more intuitively, I recommend checking out DataCamp’s Deep Learning in Python course.

Deep Learning Tools and Libraries

Constructing neural networks from scratch helps programmers to understand concepts and solve trivial tasks by manipulating these networks. However, building these networks from scratch is time-consuming and requires enormous effort. To make deep learning simpler, we have several tools and libraries at our disposal to yield an effective deep neural network model capable of solving complex problems with a few lines of code.

The most popular deep learning libraries and tools utilized for constructing deep neural networks are TensorFlow, Keras, and PyTorch. The Keras and TensorFlow libraries have been tightly integrated since the release of TensorFlow 2.0. This integration allows users to develop complex neural networks with high-level code structures using Keras within the TensorFlow framework.

PyTorch

The PyTorch library is another extremely popular machine learning framework that enables users to develop high-end research projects.

While it has fewer built-in visualization tools, PyTorch compensates with compact code, fast performance, and a relatively quick and simple GPU setup for constructing deep neural network models.

DataCamp’s Introduction to PyTorch in Python course is the best starting point for learning more about PyTorch.

TensorFlow and Keras

The TensorFlow framework gives developers a wide range of visualization tools for deep learning tasks. The TensorBoard dashboard is an outstanding choice for visualizing, analyzing, and interpreting the data and results of a project.

The Keras library integration allows faster construction of projects with simple code structures, making it a popular choice for long-term development projects. The Introduction to TensorFlow in Python course is a great place for beginners to get started with TensorFlow.

How to Build a Basic Deep Neural Network

In this section, we will understand some fundamental concepts of deep neural networks and how to construct such a network from scratch.

Importing the required libraries

The first step is to choose your preferred library for the required application. We will use the TensorFlow and Keras deep learning frameworks to construct the deep neural network.

# Importing the necessary functionality

import tensorflow as tf

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Input, Dense, Conv2D

from tensorflow.keras.layers import Flatten, MaxPooling2D

Creating a sequential-type model

Once we finish importing the desired libraries for this task, we will use the Sequential model type to build the deep learning model. A Sequential model is a plain stack of layers in which each layer has exactly one input tensor and one output tensor. The other available options are the functional API or a custom subclassed model. However, the Sequential class provides a straightforward approach to constructing the neural network architecture.

# Creating the model

DNN_Model = Sequential()

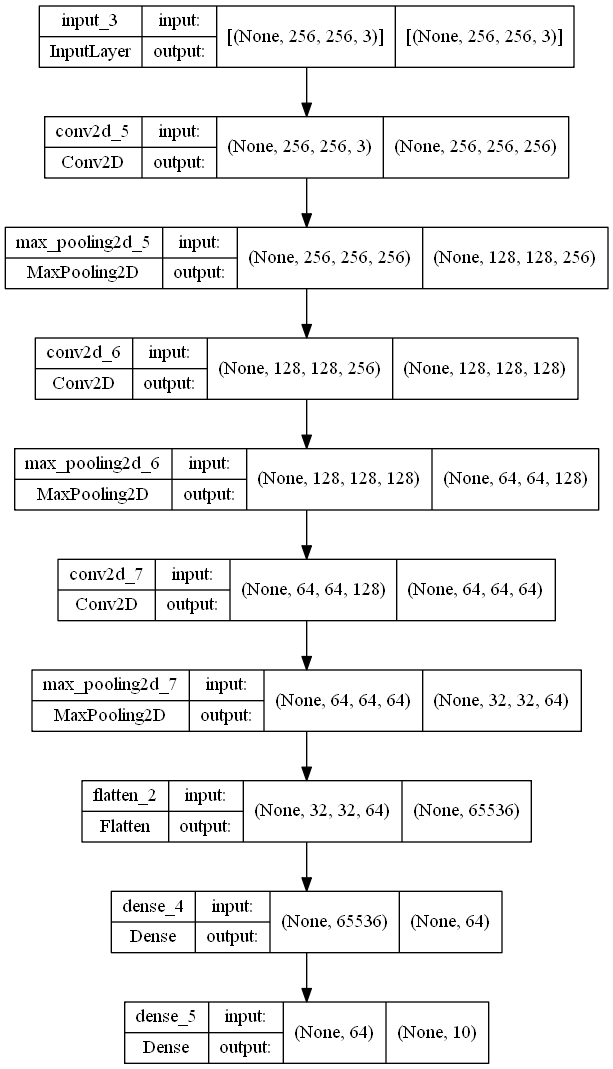

Constructing the Deep Neural Network architecture

We first add an input shape, which usually matches the dimensions of the images used in the project: the height, the width, and the number of color channels. In the example code below, the height and width of the image are 256 with an RGB color scheme, represented by 3 channels (1 is used for grayscale images). We will then construct the hidden layers from convolution and max-pooling layers, with the number of filters decreasing from 256 to 128 to 64. Finally, we will use a Flatten layer to flatten the outputs and a dense layer as the final output layer.

The hidden layers add to the complexity of the neural network. A convolutional layer performs a convolution operation on the input image to filter the information. Each filter in a convolutional layer helps to extract a specific feature from the input. A max-pooling layer downsamples the feature maps by keeping only the maximum value in each small window of the extracted features.

A Flatten layer collapses the spatial dimensions into a single dimension so that the result can be passed to dense layers. A dense (fully connected) layer is the simplest layer: it receives the output of the previous layer and is typically used as the output layer. The 2D convolution slides a small kernel over a 2D input, multiplying the overlapping values elementwise and summing them. The ReLU (rectified linear unit) activation function adds non-linearity to the model, which lets it learn more complex patterns. We will use "same" padding so that the output of each convolutional layer keeps the spatial dimensions of its input.
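The layer operations described above can be sketched in plain Python on a tiny single-channel image. This is only an illustration of the arithmetic, not how Keras implements these layers; the function names, the 4x4 image, and the vertical-edge kernel are assumptions made for this example.

```python
def conv2d_same(image, kernel):
    """Slide a square kernel over a 2D image using zero ("same") padding."""
    height, width = len(image), len(image[0])
    size = len(kernel)
    pad = size // 2
    output = [[0.0] * width for _ in range(height)]
    for row in range(height):
        for col in range(width):
            total = 0.0
            for i in range(size):
                for j in range(size):
                    r, c = row + i - pad, col + j - pad
                    # Positions outside the image count as zeros (the padding).
                    if 0 <= r < height and 0 <= c < width:
                        total += image[r][c] * kernel[i][j]
            output[row][col] = total
    return output


def relu(feature_map):
    """Replace negative values with zero."""
    return [[max(0.0, value) for value in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """Keep the maximum of each non-overlapping 2x2 window."""
    return [
        [max(feature_map[r][c], feature_map[r][c + 1],
             feature_map[r + 1][c], feature_map[r + 1][c + 1])
         for c in range(0, len(feature_map[0]) - 1, 2)]
        for r in range(0, len(feature_map) - 1, 2)
    ]


def flatten(feature_map):
    """Collapse a 2D feature map into a flat list."""
    return [value for row in feature_map for value in row]


# A 4x4 image with a dark left half and a bright right half.
image = [[0, 0, 1, 1] for _ in range(4)]
# A 3x3 kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 0, 1] for _ in range(3)]

features = relu(conv2d_same(image, kernel))  # still 4x4 thanks to "same" padding
pooled = max_pool_2x2(features)              # downsampled to 2x2
flat = flatten(pooled)                       # 4 values, ready for a dense layer
print(features, pooled, flat, sep="\n")
```

The convolution output has the same 4x4 size as the input, pooling halves each spatial dimension, and flattening leaves a one-dimensional vector, mirroring the shape changes in the Keras model.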

# Inputting the shape to the model

DNN_Model.add(Input(shape = (256, 256, 3)))

# Creating the deep neural network

DNN_Model.add(Conv2D(256, (3, 3), activation='relu', padding = "same"))

DNN_Model.add(MaxPooling2D(2, 2))

DNN_Model.add(Conv2D(128, (3, 3), activation='relu', padding = "same"))

DNN_Model.add(MaxPooling2D(2, 2))

DNN_Model.add(Conv2D(64, (3, 3), activation='relu', padding = "same"))

DNN_Model.add(MaxPooling2D(2, 2))

# Creating the output layers

DNN_Model.add(Flatten())

DNN_Model.add(Dense(64, activation='relu'))

DNN_Model.add(Dense(10))

Model architecture

The model structure and the plot diagram of the constructed deep neural network are provided below.

Model: "sequential_2"
_________________________________________________________________
 Layer (type)                     Output Shape             Param #
=================================================================
 conv2d_5 (Conv2D)                (None, 256, 256, 256)    7168
 max_pooling2d_5 (MaxPooling2D)   (None, 128, 128, 256)    0
 conv2d_6 (Conv2D)                (None, 128, 128, 128)    295040
 max_pooling2d_6 (MaxPooling2D)   (None, 64, 64, 128)      0
 conv2d_7 (Conv2D)                (None, 64, 64, 64)       73792
 max_pooling2d_7 (MaxPooling2D)   (None, 32, 32, 64)       0
 flatten_2 (Flatten)              (None, 65536)            0
 dense_4 (Dense)                  (None, 64)               4194368
 dense_5 (Dense)                  (None, 10)               650
=================================================================
Total params: 4,571,018
Trainable params: 4,571,018
Non-trainable params: 0
_________________________________________________________________

The plot is as follows:

tf.keras.utils.plot_model(DNN_Model, to_file='model_big.png', show_shapes=True)
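The output shapes and parameter counts reported in the model summary can be reproduced with a few lines of arithmetic. The sketch below assumes the standard counting rules (each convolution filter has one weight per kernel position and input channel plus one bias, and each dense unit has one weight per input plus one bias); the helper names are hypothetical.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Weights (kernel_h * kernel_w * in_channels per filter) plus one bias per filter."""
    return kernel_h * kernel_w * in_channels * filters + filters


def dense_params(in_features, units):
    """One weight per input per unit, plus one bias per unit."""
    return in_features * units + units


side, channels = 256, 3          # input image: 256 x 256 x 3
layer_params = []

for filters in (256, 128, 64):
    # "same" padding keeps the spatial size; the conv changes only the channel count.
    layer_params.append(conv2d_params(3, 3, channels, filters))
    channels = filters
    # Each 2x2 max-pooling layer halves the height and width and has no parameters.
    side //= 2

flattened = side * side * channels            # 32 * 32 * 64
layer_params.append(dense_params(flattened, 64))
layer_params.append(dense_params(64, 10))

total_params = sum(layer_params)
print(layer_params, flattened, total_params)
```

Most of the parameters sit in the first dense layer, because it connects every one of the 65,536 flattened values to each of its 64 units.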

Additional information

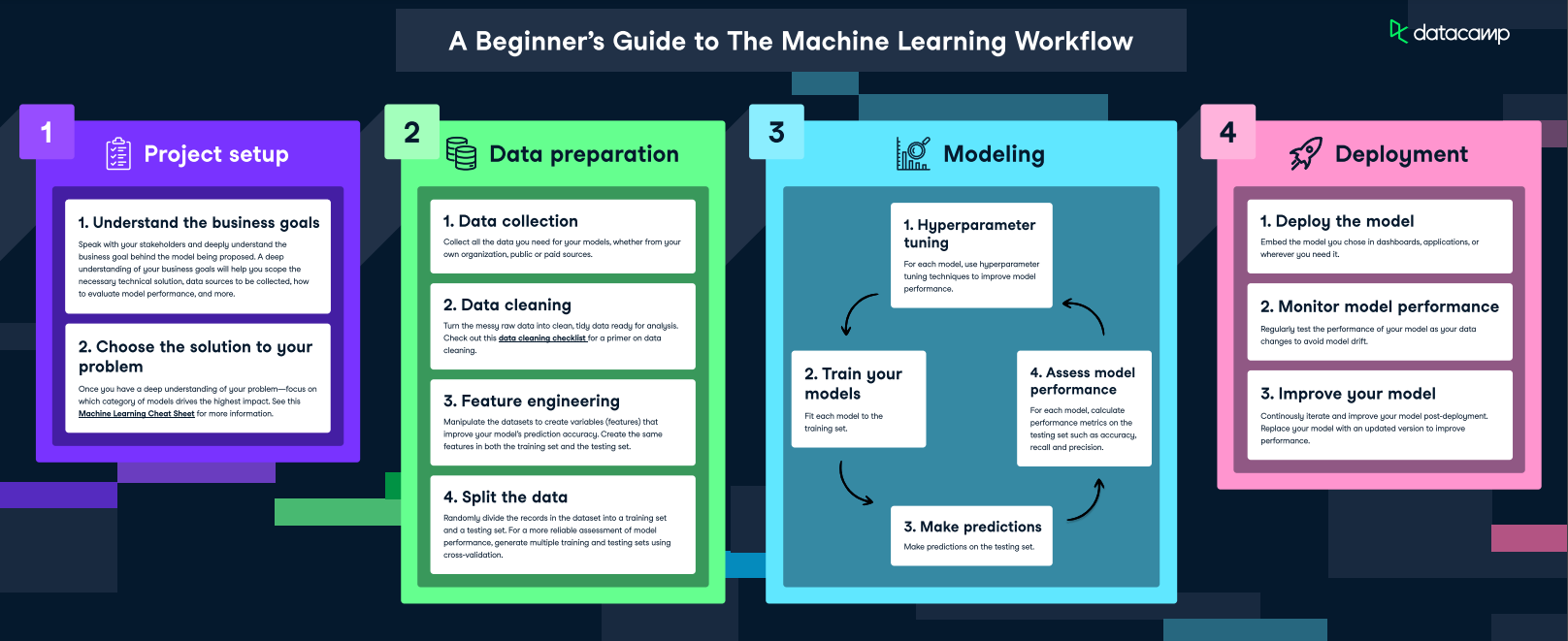

Machine Learning Workflow

Once the model is built, it needs to be compiled, which configures it for training by specifying the optimizer, the loss function, and the metrics to track. The two significant operations that then take place during training are forward propagation and backpropagation. In forward propagation, the input is passed through the nodes of each layer in turn until it reaches the output layer. At the output layer, for classification tasks, the predicted values are compared with the true values using the loss function.

In the training or fitting stage, the process of backpropagation occurs. The error from the output layer is propagated backward through the network, and the weights in each layer are adjusted step by step until the predicted values closely match the true values. For an in-depth explanation of this topic, I recommend checking the following backpropagation guide from an Introduction to Deep Learning in Python course.
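As a hedged illustration of the compile and fit steps, the sketch below builds a much smaller model than the one in this article so that it runs quickly. The Adam optimizer, the random stand-in data, and the 32x32 input size are assumptions made for this example. The loss uses from_logits=True because, as in the article's model, the final Dense(10) layer has no activation and therefore outputs raw scores.

```python
import tensorflow as tf
from tensorflow.keras import layers, models

# A deliberately small stand-in model (assumption: 32x32 RGB inputs, 10 classes).
model = models.Sequential([
    layers.Input(shape=(32, 32, 3)),
    layers.Conv2D(8, (3, 3), activation="relu", padding="same"),
    layers.MaxPooling2D((2, 2)),
    layers.Flatten(),
    layers.Dense(10),  # raw scores (logits), one per class
])

# Compiling configures training: optimizer, loss function, and metrics.
model.compile(
    optimizer="adam",
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    metrics=["accuracy"],
)

# Random data standing in for a real labeled image dataset.
images = tf.random.uniform((16, 32, 32, 3))
labels = tf.random.uniform((16,), maxval=10, dtype=tf.int32)

# Fitting runs forward propagation and backpropagation on each batch.
history = model.fit(images, labels, epochs=1, batch_size=8, verbose=0)
print(history.history)
```

With real data, the same two calls apply to DNN_Model; only the dataset, the number of epochs, and possibly the loss would change.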
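The forward pass and weight update loop can be sketched with a single weight and no framework at all. This is a simplified illustration, assuming a one-parameter model, a mean squared error loss, and made-up data that follows y = 2x. Real networks apply the same idea to millions of weights using the chain rule.

```python
# Toy data (assumption): the target is always twice the input.
inputs = [1.0, 2.0, 3.0, 4.0]
targets = [2.0, 4.0, 6.0, 8.0]

weight = 0.0           # initial guess
learning_rate = 0.05
losses = []

for epoch in range(50):
    # Forward propagation: compute predictions from the current weight.
    predictions = [weight * x for x in inputs]

    # Loss: mean squared error between predictions and true values.
    errors = [p - t for p, t in zip(predictions, targets)]
    loss = sum(e * e for e in errors) / len(errors)
    losses.append(loss)

    # Backward step: gradient of the loss with respect to the weight.
    gradient = sum(2 * e * x for e, x in zip(errors, inputs)) / len(inputs)

    # Update: move the weight against the gradient.
    weight -= learning_rate * gradient

print(weight, losses[0], losses[-1])
```

The loss shrinks on every pass and the weight settles at 2, the value that makes the predictions match the targets.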

There are a lot of intricacies to exploring deep learning. I highly recommend checking out the Deep Learning with Keras course to further understand how to construct deep neural networks.

Understanding Deep Learning Models

Each machine learning task calls for a deep neural network architecture suited to it. Two widely used deep learning models are convolutional neural networks (CNNs) and recurrent neural networks (RNNs). Convolutional neural networks find enormous utility in image processing and computer vision projects.

In these deep neural networks, instead of performing a typical full matrix multiplication in the hidden layers, we perform a convolution operation, in which a small set of weights is shared across the whole input. This makes the network more scalable and yields more efficient and accurate results. In image classification and object detection tasks, the model must process a large number of images, each with many pixels, and convolutional neural networks handle this load successfully.

For natural language processing and semantic projects, recurrent neural networks are often used to optimize the results. A popular variant of these RNNs, the long short-term memory (LSTM) network, is typically used for machine translation, text classification, speech recognition, and other similar tasks.

These networks carry the essential information from each of the previous cells and transmit it to the next, storing the crucial information for better model performance. The Convolutional Neural Networks for Image Processing course is a fantastic guide for exploring CNNs further, and Deep Learning in Python offers a thorough grounding in deep learning.
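A quick parameter count shows why convolution scales better than a dense layer on images. The comparison below is a sketch under stated assumptions: a 256x256 RGB input, a dense layer with 64 units applied to the flattened image, and a convolutional layer with 64 filters of size 3x3.

```python
height, width, channels = 256, 256, 3

# Dense layer: every input value connects to every one of the 64 units.
dense_units = 64
dense_params = height * width * channels * dense_units + dense_units

# Convolutional layer: 64 small filters, each reused at every image position.
filters, kernel = 64, 3
conv_params = kernel * kernel * channels * filters + filters

print(dense_params, conv_params, dense_params // conv_params)
```

The convolutional layer needs roughly seven thousand times fewer parameters here, because its weights do not depend on the image size.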
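The idea of carrying information from one step to the next can be sketched with a scalar simple RNN. Note that this is a plain recurrent cell, not an LSTM (which adds gates that control what is stored and forgotten), and the weights are arbitrary values chosen for illustration.

```python
import math


def rnn_step(x, hidden, w_input, w_hidden, bias):
    """New hidden state from the current input and the previous hidden state."""
    return math.tanh(w_input * x + w_hidden * hidden + bias)


def run_rnn(sequence, w_input=0.5, w_hidden=0.8, bias=0.0):
    """Process a sequence one element at a time, passing the hidden state along."""
    hidden = 0.0
    states = []
    for x in sequence:
        hidden = rnn_step(x, hidden, w_input, w_hidden, bias)
        states.append(hidden)
    return states


# Only the first element is non-zero, yet later states still remember it.
states = run_rnn([1.0, 0.0, 0.0])
print(states)
```

The hidden state stays positive after the input drops to zero, which shows earlier inputs influencing later steps, although the signal fades with each step. LSTMs were designed to reduce that fading.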

Challenges and Considerations in Deep Neural Networks

We have a brief understanding of deep neural networks and their construction with the TensorFlow deep learning framework. However, there are certain challenges that every developer must consider before developing a neural network for a particular project. Let us look at a few of these challenges.

Data requirements

One of the primary requirements for deep learning is data. Data is the most critical component in constructing a highly accurate model. Deep neural networks typically require large amounts of data in order to prevent overfitting and perform well. Object detection tasks, for example, might require even more data for a model to detect different objects with high accuracy.

While data augmentation techniques are useful as a quick fix to some of these issues, data requirements are a must to consider for every deep learning project.
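As a minimal sketch of data augmentation, the example below doubles a tiny dataset by adding a horizontally flipped copy of each image. Images are represented as nested lists, and the function names and sample data are assumptions for illustration. In practice, libraries such as Keras provide ready-made augmentation layers.

```python
def horizontal_flip(image):
    """Mirror an image left to right by reversing each row."""
    return [row[::-1] for row in image]


def augment(dataset):
    """Return the original samples plus one flipped copy of each, labels unchanged."""
    augmented = []
    for image, label in dataset:
        augmented.append((image, label))
        augmented.append((horizontal_flip(image), label))
    return augmented


# A toy dataset (assumption): two 2x3 grayscale "images" with class labels.
dataset = [
    ([[1, 2, 3], [4, 5, 6]], "cat"),
    ([[0, 0, 9], [0, 9, 0]], "dog"),
]

augmented = augment(dataset)
print(len(dataset), len(augmented))
print(augmented[1])
```

Flipping is only safe when it does not change the label. A mirrored cat is still a cat, but a mirrored digit or letter may not be the same character.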

Computational resources

Apart from a large amount of data, one must also consider the high computational cost of training and running a deep neural network. Models like the Generative Pre-trained Transformer 3 (GPT-3) have 175 billion parameters, for example.

Training models for complex tasks requires a capable GPU. Models can often be trained more efficiently on GPUs or TPUs than on CPUs. For extremely complex tasks, the system requirements rise further.
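A rough estimate of the memory needed just to hold a model's weights gives a feel for these costs. The sketch below assumes each parameter is stored as a 32-bit or 16-bit float and counts weights only. Training needs considerably more memory for gradients, optimizer state, and activations, which are not estimated here.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory for the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 10**9


gpt3_params = 175_000_000_000       # 175 billion parameters
article_model_params = 4_571_018    # the model built earlier in this article

gpt3_fp32 = weight_memory_gb(gpt3_params, 4)   # 32-bit floats
gpt3_fp16 = weight_memory_gb(gpt3_params, 2)   # 16-bit floats
small_fp32 = weight_memory_gb(article_model_params, 4)

print(gpt3_fp32, gpt3_fp16, small_fp32)
```

Under these assumptions, the weights of a 175-billion-parameter model take hundreds of gigabytes, while the small model from this article fits in under 20 megabytes.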

Training issues

During training, the model might also encounter issues such as underfitting or overfitting. Underfitting usually occurs when the model is too simple or has not been trained enough to capture the patterns in the data, so it performs poorly on both the training and validation data. Overfitting is a more prominent issue, in which the model keeps improving on the training data while its performance on unseen validation or test data stalls or degrades. Hence, the training accuracy is high, but the validation accuracy is low, leading to a model that generalizes poorly and does not yield the best results.
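One common way to spot and limit overfitting is to watch the validation loss and stop once it no longer improves, often called early stopping. The sketch below shows the idea in plain Python. The loss values are hypothetical numbers made up for this example, and the function name and patience value are assumptions.

```python
def best_epoch_with_patience(val_losses, patience=2):
    """Return the epoch with the lowest validation loss, stopping the scan
    once the loss has failed to improve for `patience` epochs in a row."""
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch


# Hypothetical training curves: training loss keeps falling,
# while validation loss turns upward after epoch 2.
train_losses = [0.90, 0.60, 0.40, 0.25, 0.15, 0.08]
val_losses = [0.95, 0.70, 0.55, 0.58, 0.63, 0.70]

stop_epoch = best_epoch_with_patience(val_losses)
final_gap = val_losses[-1] - train_losses[-1]
print(stop_epoch, round(final_gap, 2))
```

A widening gap between training and validation loss is the signature of overfitting. Keras offers a built-in EarlyStopping callback that applies the same rule during fitting.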

Final Thoughts

In this article, we explored deep neural networks and their core concepts. We covered the difference between these neural networks and a traditional network and looked at the different deep learning frameworks for building deep learning projects. We then used the TensorFlow and Keras libraries to demonstrate a deep neural network build. Finally, we considered some of the critical challenges of deep learning and a few methods to overcome them.

Deep neural networks are a fantastic resource for accomplishing most of the common artificial intelligence applications and projects. They enable us to solve image processing and natural language processing tasks with high accuracy.

It is important for all skilled developers to stay updated with emerging trends, as a model that is popular today may not be as popular or the best choice in the future.

Hence, it is essential to keep learning and gaining knowledge, as the world of artificial intelligence is an adventure filled with excitement and new technological developments. One of the best ways to stay updated is by checking out DataCamp’s Deep Learning in Python Skill track to cover topics including TensorFlow and Keras and Deep Learning in PyTorch to learn more about PyTorch. You can also check out the AI Fundamentals courses for a gentler introduction. The deep learning tracks help to unleash the enormous potential of deep learning projects, while the AI Fundamentals courses help to solidify the foundations.

Love to explore and learn new concepts. I am extremely interested in artificial intelligence, deep learning, robots, and the universe. I also write articles on Python, Machine Learning, Deep Learning, NLP, and AI.