
Azure OpenAI allows access to OpenAI’s models, like GPT-4, using the Azure cloud services platform.

In this tutorial, I introduce Azure OpenAI and show how to get started with it. We will cover how to set up an account, deploy an AI model, and perform various simple tasks using the API.

What is Azure OpenAI?

Azure offers a full range of web services, from SQL databases to data warehousing and analytics solutions like Azure Synapse. Many users of these services need to integrate OpenAI’s models with their workflows.

The traditional method of accessing OpenAI’s models is via the OpenAI API. Azure OpenAI is Microsoft’s offering of OpenAI’s models via the Azure platform. It allows Azure users to integrate OpenAI’s services (natural language processing, code generation, image generation, and many more) into their Azure-based infrastructure.

Thus, developers do not need to learn a new web services platform and integrate it into their workflows. As it is based on the Azure platform, it is easy to scale and handle heavy workloads. Developers and organizations who use the Azure platform can use the same familiar interface to provision, manage, monitor, and budget for AI-based services. It also makes it easier to manage compliance and payment workflows.


Setting Up Azure OpenAI

In this section, I show how to set up Azure OpenAI. I assume you have some experience with using Azure.

Note that user interfaces change frequently. So, the layout and text of various sections and titles in the instructions and screenshots can change over time or from region to region.

Step 1: Create an Azure account

Go to the Azure OpenAI homepage and select Sign in at the top right. Log in with your Microsoft account. If you don’t have a Microsoft account, select the option to create a new account on the Sign-in page and create a Microsoft account.

Then, create an Azure account following the steps below:

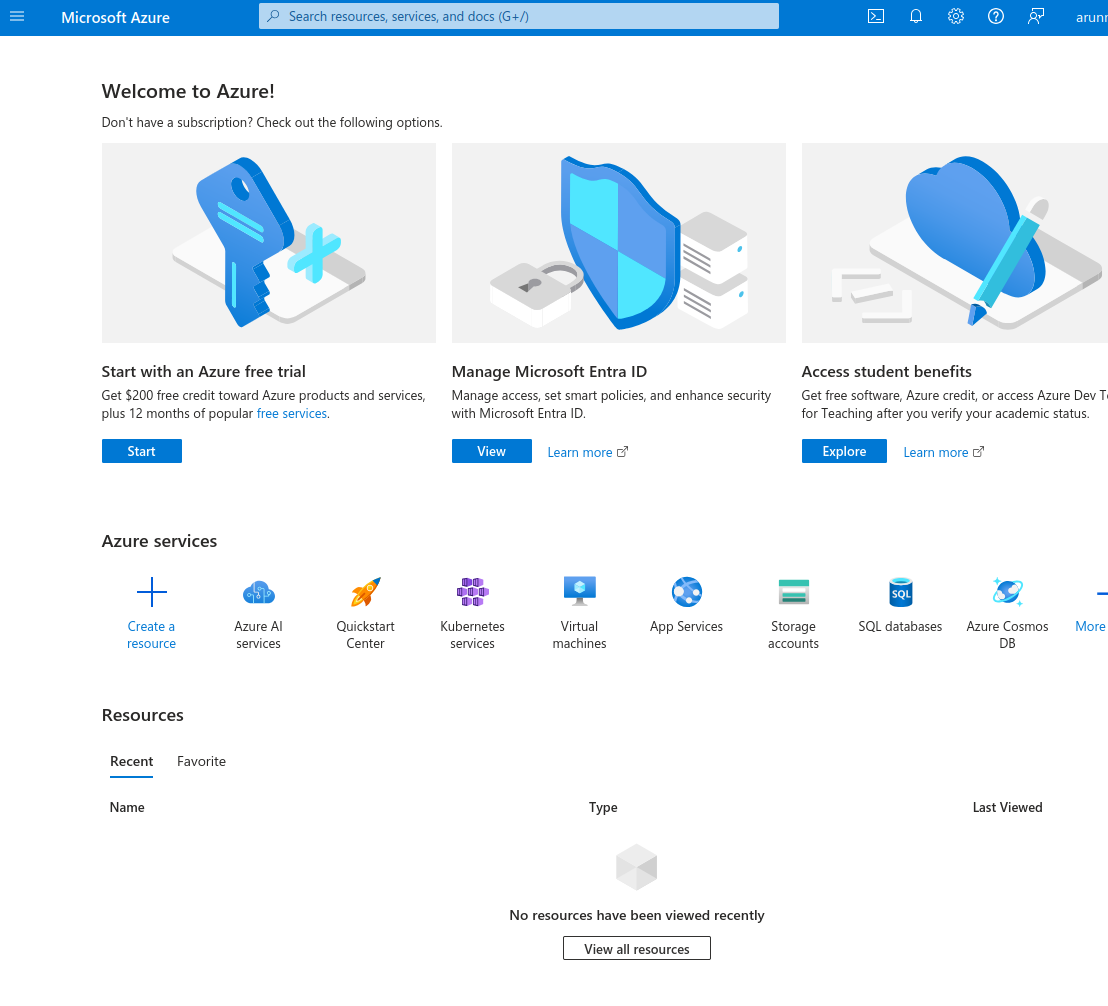

- Sign in to the Azure Portal with your Microsoft account, then select Start with an Azure free trial.

The Microsoft Azure Portal.

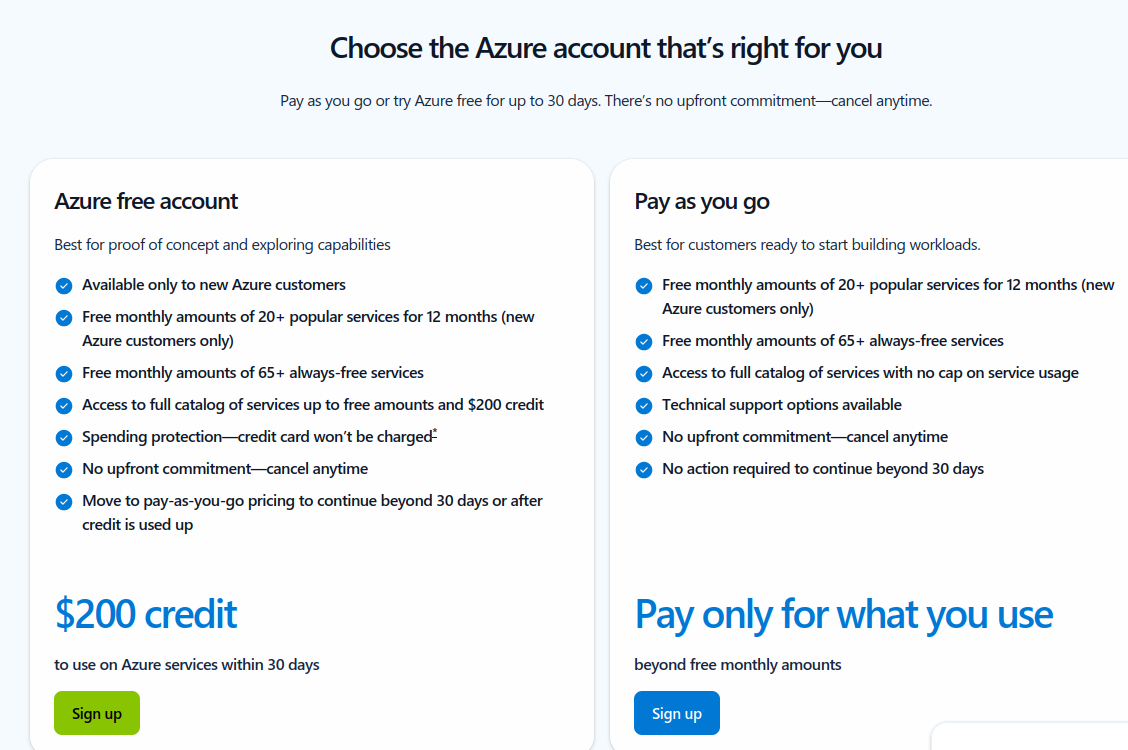

- On the next page, select the option to create an Azure free account with $200 free credits.

Choose an Azure account type.

- Fill out your personal information in the form.

Create a free Azure account.

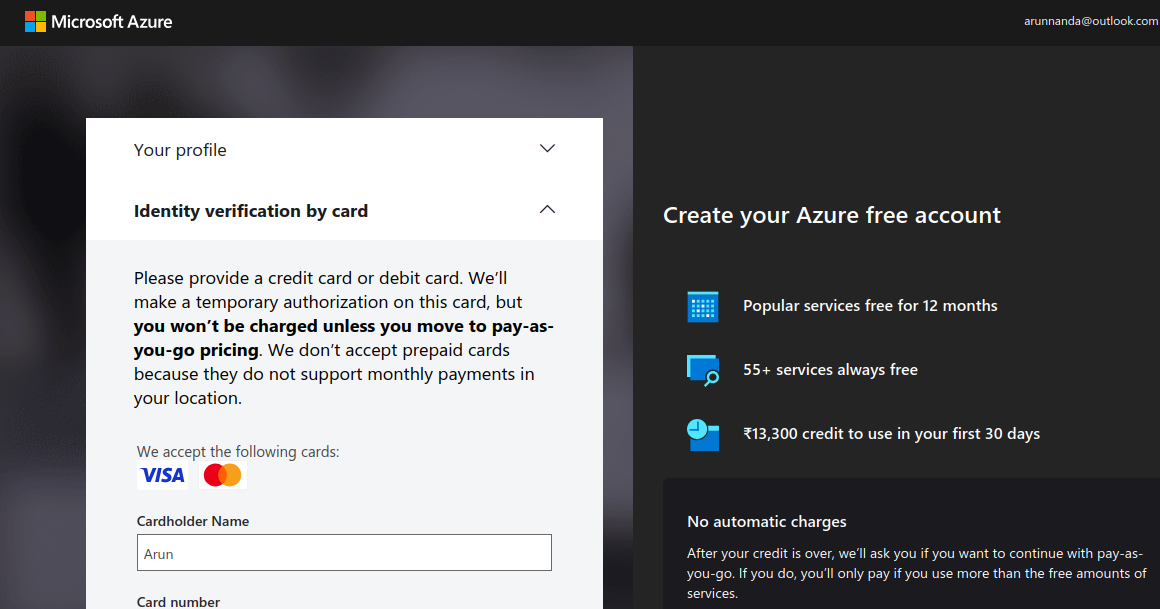

- Verify your identity using a credit or debit card. Note that there can be a temporary holding charge (usually a few cents or equivalent) debited from your account.

Verify your Azure account (screenshot by author)

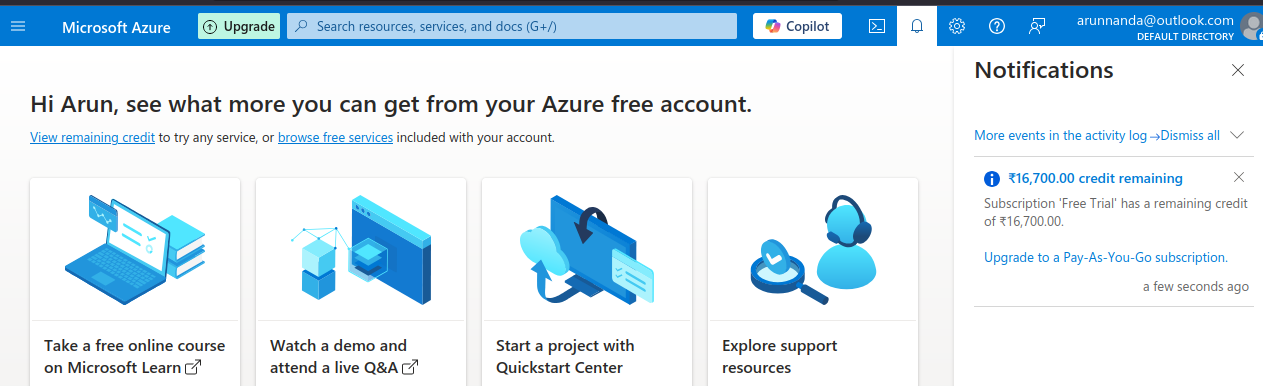

- After verifying your payment information, select Go to Azure Portal. It redirects you to the Quickstart Center.

- Note that the free $200 credits cannot be used for Azure OpenAI services. Azure OpenAI models can only be accessed with a paid account. To upgrade your account to a pay-as-you-go subscription, go back to the Azure portal and select Upgrade in the top bar.

Upgrade your Azure account.

- Fill out the details on the upgrade page and select Upgrade to pay as you go.

After upgrading to a paid account, you can access OpenAI models.

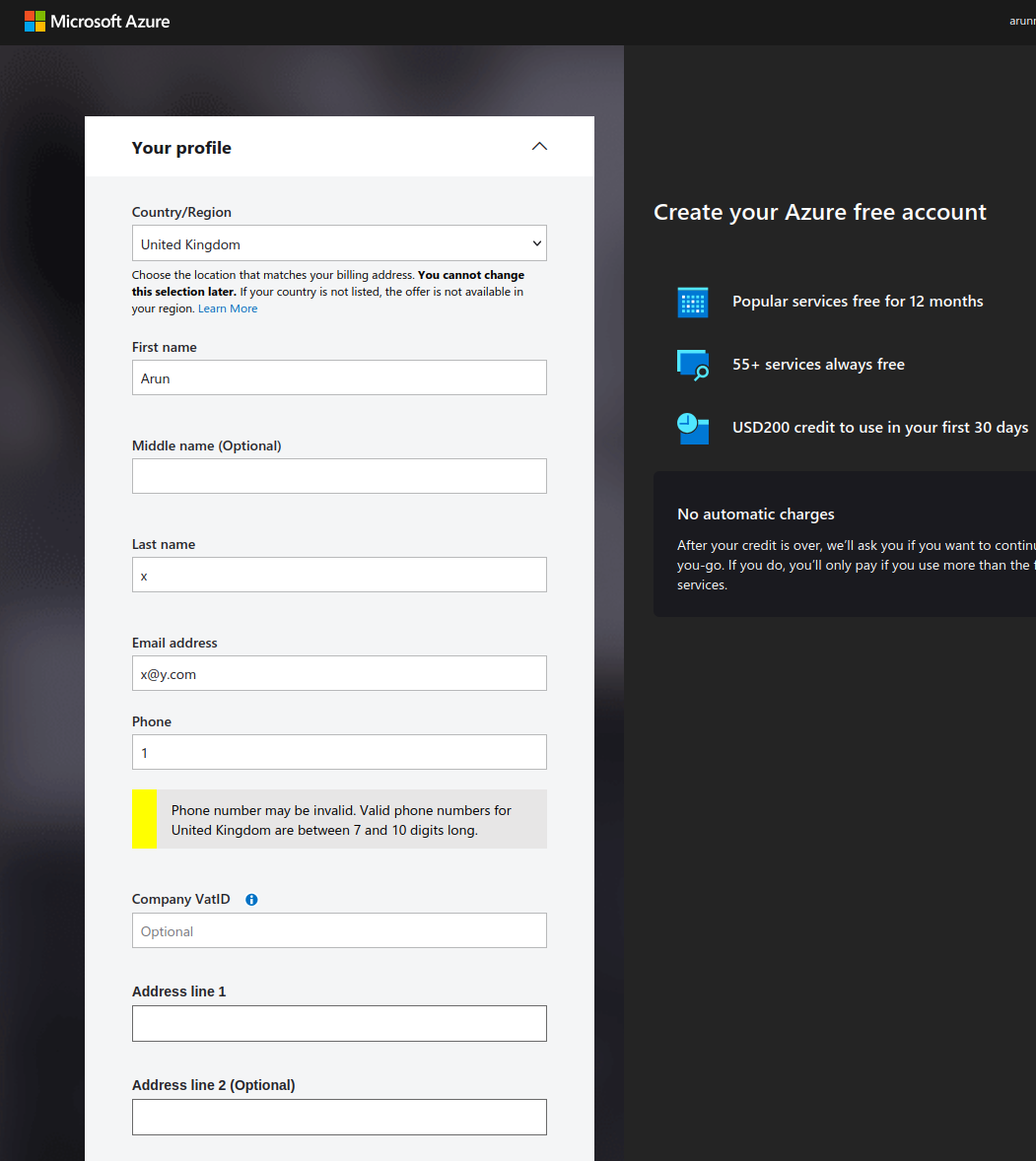

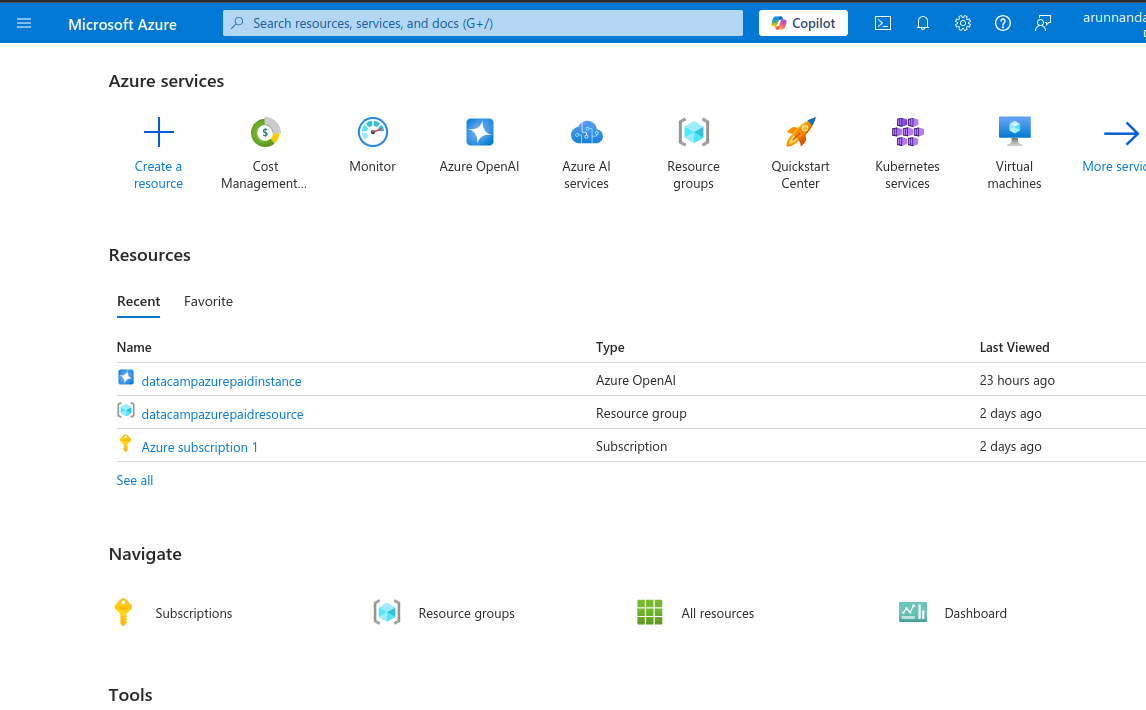

Step 2: Access Azure OpenAI in the Azure portal

- Go back to the Azure portal, which shows a variety of services, such as AI services, virtual machines, storage accounts, and more.

Azure portal showing available services.

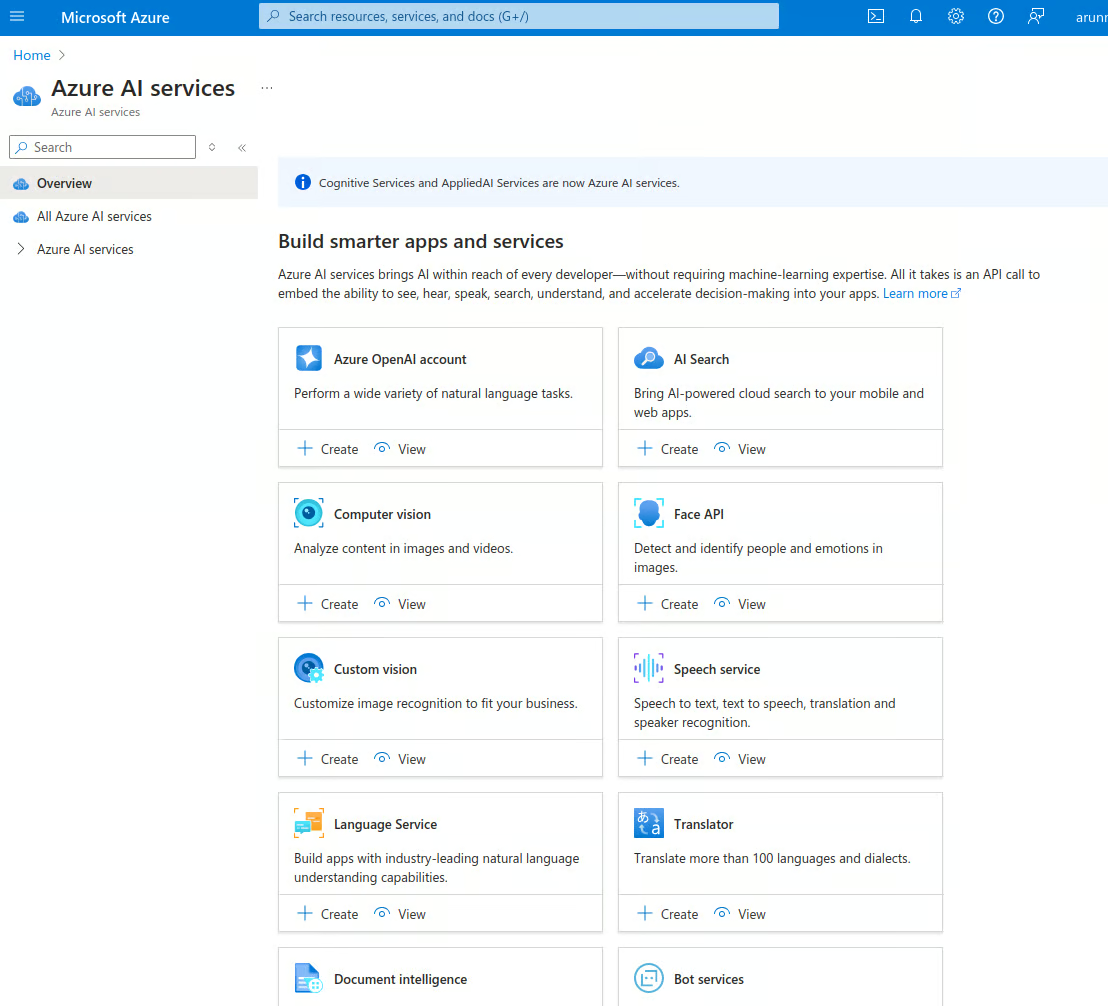

- Select Azure AI services and go to the home page for AI services. Azure offers various AI-based services, such as face recognition, anomaly detection, speech, computer vision, OpenAI, and more.

Azure AI services.

- In the Azure OpenAI account tab, select the View option.

- This leads to the Azure OpenAI page, which shows your account's list of OpenAI instances. Select Create Azure OpenAI to create a new instance. You can also select Create on the previous page.

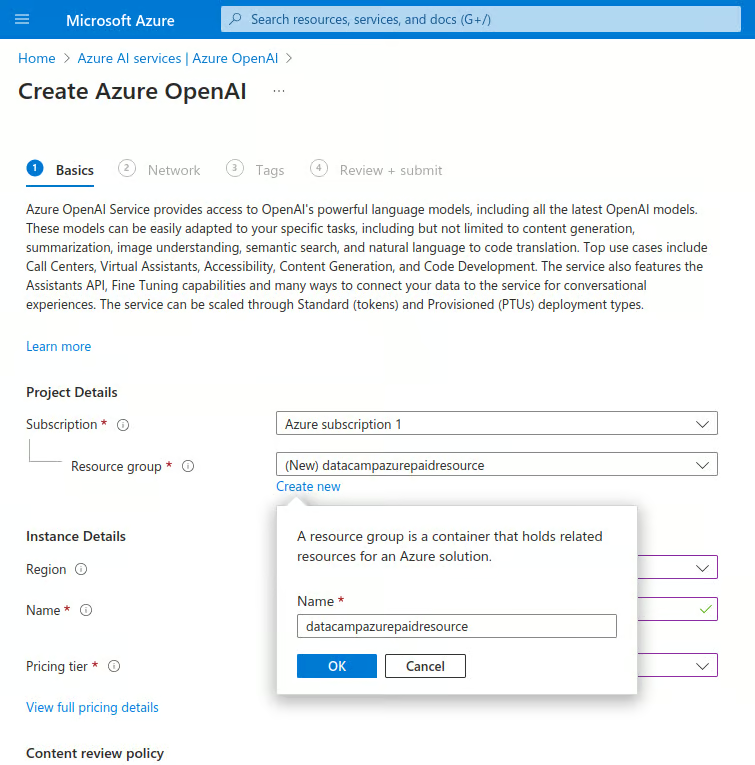

- Enter the details below:

- Under Subscription, select the name of your paid subscription.

- Under Resource group, select Create new:

- Enter a name for the new resource group.

Create a new Azure OpenAI instance.


- Under Region, select the nearest region.

- Under Name, enter a unique name.

- Under Pricing tier, choose Standard S0.

- Select Next to proceed.


- In the Network section, select "All networks, including the Internet, can access this resource." Then, proceed to the next step.

- In the Tags section, enter name-value pairs to categorize this instance. This helps in consolidated billing. This step is optional.

- In the next section, select Review + submit, double-check the values, and select Create. This deploys a new OpenAI instance.

- After a short while, it shows the message "Your deployment is complete." On this page, select Go to resource.

- On the resource page, select the name of the resource under the Resources tab.

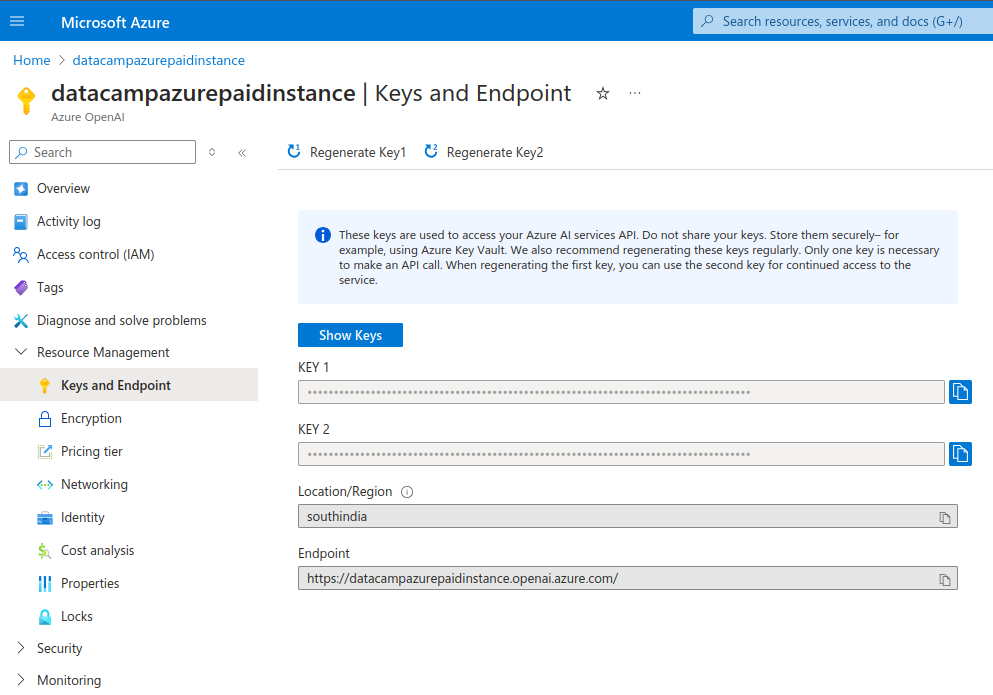

Step 3: Set up the API access

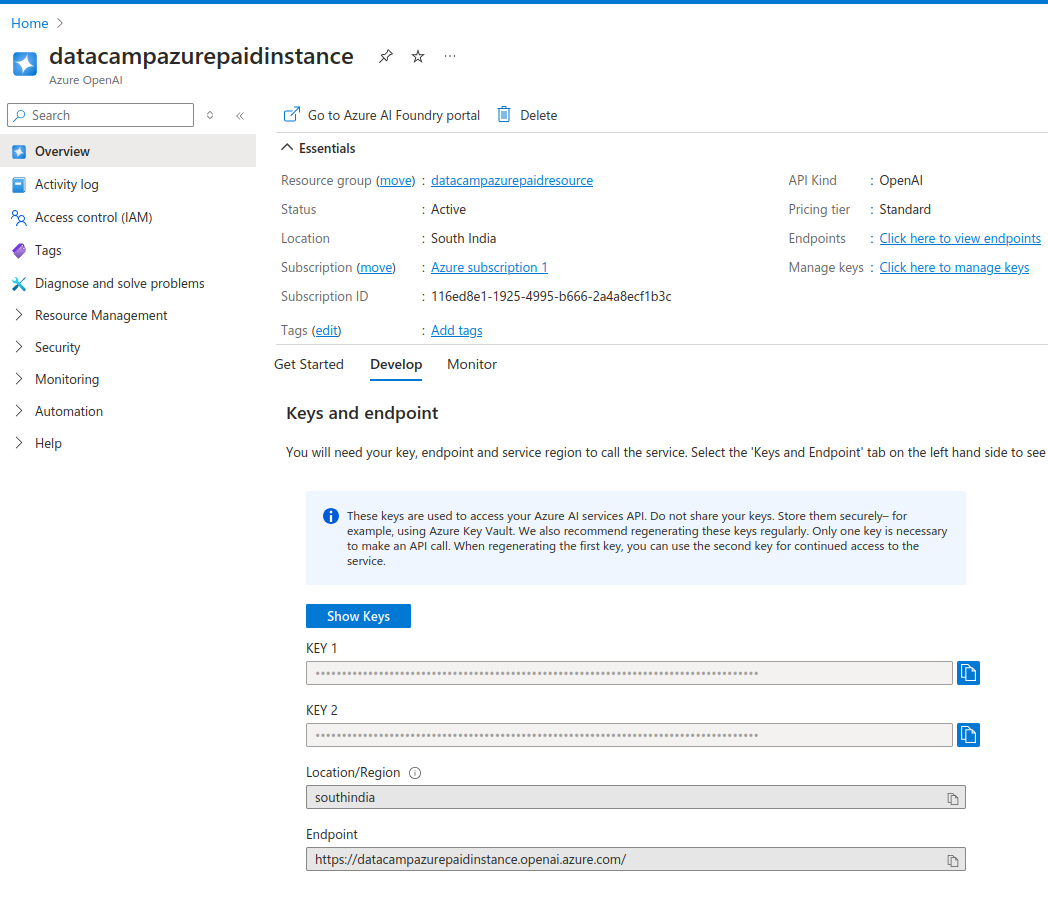

The resource page shows the details of the instance.

The Develop tab shows the API keys and API endpoint URLs for this instance. The keys have been automatically generated for you.

- Copy the keys and the endpoint into a text file, and store it securely.

You get two API keys. This allows you to use the second key in case the first is compromised. You can also use the second key while regenerating the first. This allows you to rotate keys periodically without suffering any downtime.

Azure OpenAI instance API keys

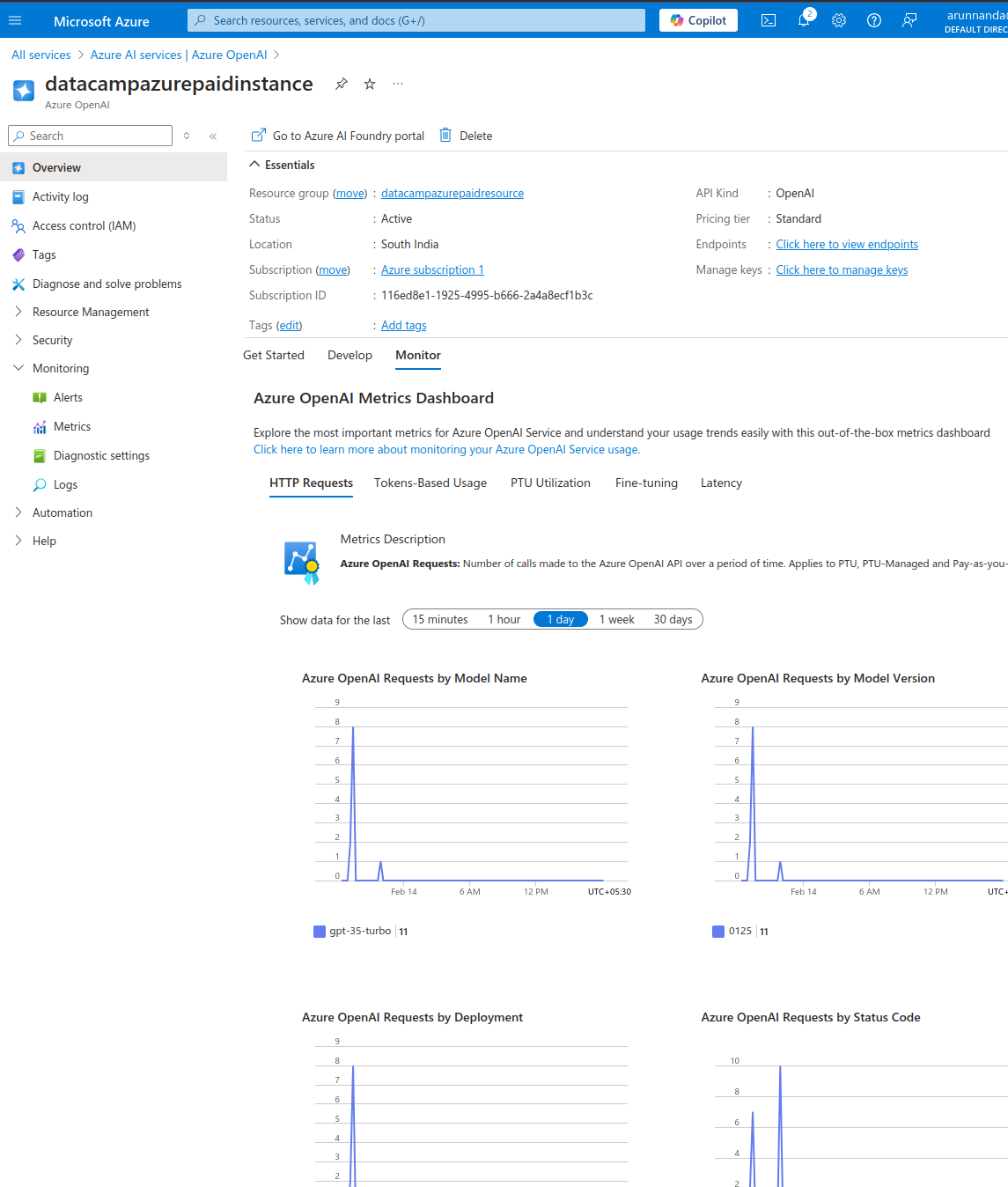

- In the Monitor tab, check the usage metrics of this instance.

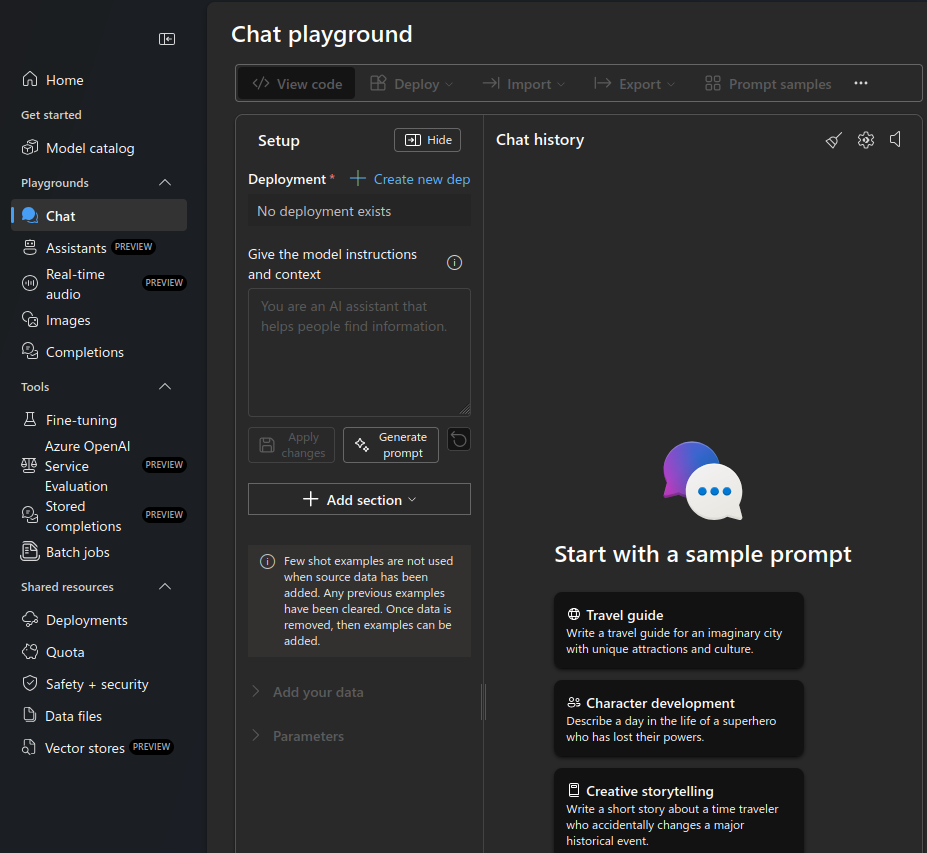

- In the Get Started tab, select Explore Azure AI Foundry portal to open the AI playground on a new page.

Azure OpenAI chat playground.

- Select the Chat playground from the left menu.

- Under Deployment, select Create new deployment.

- Select From base models in the new deployment pop-up.

- This shows a popup that allows you to select the model.

- In the search bar, type “gpt-3”.

- Select gpt-35-turbo from the list. This is an economical model that is optimized for chat completions. After getting a working configuration, switch to a more advanced (and more expensive) model if needed.

- Select Confirm.

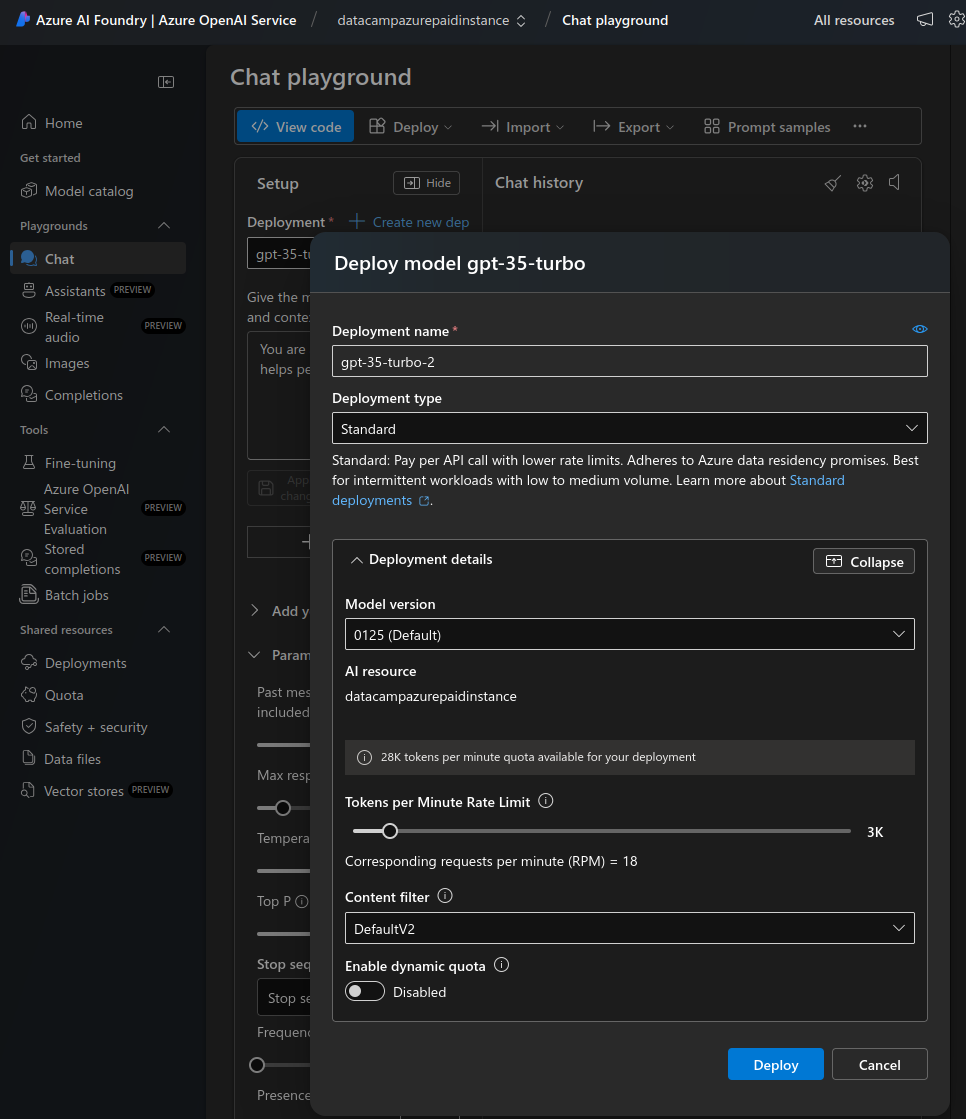

- In the Deploy model popup, select Customize.

- Reduce the Tokens per Minute Rate Limit to a low value for manual testing (for example, 2K tokens per minute, which corresponds to about 12 requests per minute). Increase this later as you put the model into production.

- Disable dynamic quota. You don’t need it until the deployment receives heavy traffic.

- Select Deploy.

Deploy a new model in the chat playground.

That’s it! In the next section, we will see how to access this deployment of the OpenAI gpt-35-turbo model.

Regenerating API keys

It is advisable to regenerate the API access keys periodically. This limits the damage if old keys are inadvertently leaked, because a regenerated key replaces the old one, which stops working. The steps below describe how to regenerate keys:

- Under Resource Management, from the left menu, choose Keys and Endpoint.

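- Regenerate each key individually: while your applications use one key, regenerate the other, then switch over. This way, a valid key is always available and you avoid downtime.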

Azure OpenAI instance API keys.

Using Azure OpenAI in Applications

Now that Azure OpenAI is set up and a model is deployed, let's see how to access it programmatically.

Step 1: Setting up your development environment

I will show how to use Python to connect to Azure OpenAI. Install the required library:

```bash
$ pip install openai
```

If you're using a notebook, execute the following command instead. Note: I am using a DataLab notebook.

```
!pip install openai
```

Import the necessary packages within the Python shell or in the notebook:

```python
import os

from openai import AzureOpenAI
```

The `AzureOpenAI` class gives access to OpenAI models deployed within Azure. It differs from the `OpenAI` class, which is used to access the standalone OpenAI API.

Before using Azure OpenAI, set the API key and endpoint as environment variables. The previous section showed how to find these values. In principle, you can hardcode them in your program, but I strongly recommend against it: never expose API keys in source code.
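With the variables in place (the next two subsections show how to set them), a small helper can read both values and fail early with a readable message if either is missing, instead of silently passing `None` to the client. This is a minimal sketch; `load_azure_settings` is a hypothetical name, not part of the `openai` library.

```python
import os
from typing import Mapping, Tuple


def load_azure_settings(environ: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Return (api_key, endpoint) from environment variables, failing early if either is missing.

    load_azure_settings is a hypothetical helper, not part of the openai library.
    """
    required = ("AZURE_OPENAI_API_KEY", "AZURE_OPENAI_ENDPOINT")
    missing = [name for name in required if not environ.get(name)]
    if missing:
        raise RuntimeError("Missing environment variable(s): " + ", ".join(missing))
    return environ["AZURE_OPENAI_API_KEY"], environ["AZURE_OPENAI_ENDPOINT"]


# Usage, once the variables are set as described below:
# azure_openai_api_key, azure_openai_endpoint = load_azure_settings()
```

Passing a plain dictionary as `environ` makes the helper easy to test without touching the real environment.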

Set API keys using the terminal

To run Python locally, set the environment variables in the shell configuration file. I will show you how to set them up for the Bash shell.

- Edit the `~/.bashrc` file in a text editor.

- At the end of the file, add these two lines, replacing the placeholders with your own key and endpoint:

```bash
export AZURE_OPENAI_API_KEY='MY_API_KEY'
export AZURE_OPENAI_ENDPOINT='MY_ENDPOINT'
```

- Save and close the file.

- Reload the changes in the terminal using the command `source ~/.bashrc`.

- For other shells, like `sh` or `zsh`, the process can be slightly different (for example, zsh reads `~/.zshrc`).

- Open (or reopen) the Python shell in the same terminal session.

Import the API key and endpoint values in the Python shell:

```python
azure_openai_api_key = os.getenv("AZURE_OPENAI_API_KEY")
azure_openai_endpoint = os.getenv("AZURE_OPENAI_ENDPOINT")
```

Set API keys using online notebooks (DataLab)

DataLab allows you to directly set environment variables for a notebook.

- From the top menubar, select Environment and select Environment variables from the dropdown.

- Select the option to add environment variables.

- Add two variables, `AZURE_OPENAI_API_KEY` and `AZURE_OPENAI_ENDPOINT`, with their respective values.

- Give a name to this set of environment variables and save the changes.

In the notebook, extract these values as shown below:

```python
azure_openai_api_key = os.environ["AZURE_OPENAI_API_KEY"]
azure_openai_endpoint = os.environ["AZURE_OPENAI_ENDPOINT"]
```

Step 2: Make your first API call to Azure OpenAI

You can now use the `AzureOpenAI()` constructor to create a client object that sends requests. It takes the following parameters:

`api_version`: the version of the Azure OpenAI API. Check the latest version in the Azure documentation.

`api_key`: the Azure OpenAI API key. Read it from the environment variables you set earlier; do not hardcode it here.

`azure_endpoint`: the URL of the Azure OpenAI API endpoint (also read from the environment).

```python
client = AzureOpenAI(
    api_version="2024-06-01",
    api_key=azure_openai_api_key,
    azure_endpoint=azure_openai_endpoint,
)
```

Use this client object for tasks like chat completion with the `client.chat.completions.create()` method. This method accepts the following parameters:

`model`: the name of the deployment to call. In older releases of the OpenAI Python library, this used to be the `engine` parameter. On Azure, `model` must match the deployment name you chose on the Azure AI Foundry portal (by default, the deployment name is the same as the model name, such as `gpt-35-turbo`). If you pass a name that does not match any deployment, the API returns an error like this:

```
NotFoundError: Error code: 404 - {'error': {'code': 'DeploymentNotFound', 'message': 'The API deployment for this resource does not exist. If you created the deployment within the last 5 minutes, please wait a moment and try again.'}}
```

`messages`: the conversation between the user and the model, given as a list of message objects. Each message object is a dictionary with two keys: `role` (either `system`, `user`, or `assistant`) and `content` (the text of the message). These message objects provide context for the interaction and guide the model's responses.

Below, I give examples of key-value (`role`, `content`) pairs for different values of `role`:

`user`: the prompt entered by the end user.

Example: `{"role": "user", "content": "explain the difference between rational and irrational numbers"}`

`system`: a description of the role the model is expected to play in the interaction.

Example: `{"role": "system", "content": "you are a helpful teacher to guide elementary mathematics students"}`

`assistant`: a response from the model.

Example: `{"role": "assistant", "content": "the difference between rational and irrational numbers is … an example explanation …"}`

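The `assistant` role is mainly needed in three situations: to pass the model's earlier replies back in a multi-turn conversation (the API does not remember previous requests), to supply example answers in the prompt (few-shot learning), and in training data for fine-tuning. For single-turn tasks like the chat completions in the examples below, the `user` and `system` roles are sufficient. For many simple tasks, the `user` role alone is enough.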
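Because each `chat.completions.create()` call is independent, a multi-turn conversation means resending the earlier turns, including the model's own replies under the `assistant` role. The sketch below keeps that history as a plain list of dictionaries. The helper names are hypothetical, and the assistant text is a placeholder standing in for a real model reply.

```python
from typing import Dict, List

Message = Dict[str, str]
ROLES = ("system", "user", "assistant")  # the three roles discussed in this tutorial


def start_conversation(system_prompt: str) -> List[Message]:
    """Begin a message list with a system instruction."""
    return [{"role": "system", "content": system_prompt}]


def append_message(messages: List[Message], role: str, content: str) -> List[Message]:
    """Return a new list with one more message appended (the input list is left unchanged)."""
    if role not in ROLES:
        raise ValueError(f"Unsupported role: {role!r}")
    return messages + [{"role": role, "content": content}]


# Turn 1: the system instruction and the user's first question.
messages = start_conversation("You are a helpful teacher to guide elementary mathematics students")
messages = append_message(messages, "user", "Explain the difference between rational and irrational numbers")

# After the first API call, store the model's reply so the next request has context.
# (Placeholder text standing in for the real reply.)
messages = append_message(
    messages,
    "assistant",
    "Rational numbers can be written as a fraction of two integers; irrational numbers cannot.",
)

# Turn 2: a follow-up that only makes sense given the earlier exchange.
messages = append_message(messages, "user", "Which kind is the square root of 2?")
print([m["role"] for m in messages])  # ['system', 'user', 'assistant', 'user']
```

Each request would then pass the whole list, for example `client.chat.completions.create(model="gpt-35-turbo", messages=messages)`, and append the new reply before the next turn.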

`max_tokens`: the maximum number of tokens the model may generate in its response. Prompt tokens do not count toward this limit, but you are charged for both the input and the output tokens of each call. If you omit it, the reply length is bounded only by the model's own limits, so the simple examples below leave it out; set it when you want to cap response length and cost.

```python
simple_completion = client.chat.completions.create(
    model="gpt-35-turbo",
    messages=[
        {
            "role": "user",
            "content": "Explain the difference between OpenAI and Azure OpenAI in 20 words",
        },
    ],
)
```

Print the output of the chat completion task:

```python
print(simple_completion.to_json())
```

The output is a JSON object with various key-value pairs. It includes the model's output, as shown in the example below. Note that the model's reply carries the `assistant` role.

```
"message": {
    "content": "OpenAI is a research organization focused on advancing AI, while Azure OpenAI is a collaboration between Microsoft and OpenAI.",
    "role": "assistant"
},
```

Step 3: Using OpenAI models for different tasks

To access any model with Azure OpenAI, you must first deploy the model. In the previous section, you deployed the gpt-35-turbo model. To use a different model:

- Go back to the Azure AI Foundry portal and deploy a different model, as explained in the previous section. Choose from various models like GPT-3.5, GPT-4o, o1 and o1-mini, o3 and o3-mini, and more.

- In the `chat.completions.create()` calls, set the `model` parameter to the name of the new deployment.

In older releases of OpenAI, using different models for different tasks was common. There were dedicated models for code generation, NLP, and more. Modern models like GPT-3.5 and GPT-4 can handle a wide range of text-based generative tasks. Thus, many of the older task-specific models (like the davinci models for code generation) have been phased out.

We now examine how to use the API for simple tasks like question answering and text summarization. In the example tasks below, we use the same model, GPT-3.5. You can also choose one of the GPT-4 models.

Since both are text-based tasks, let's first declare a block of text to work on. I have arbitrarily copied a review of a pair of boots from an e-commerce website. Assign the text of this review to a variable `text`. Because the string is enclosed in single quotes, escape any single quotes inside it (as in `doesn\'t`), or use a triple-quoted string instead.

```python
text = 'Comfort and Quality wise the boots are absolutely excellent. A 10 miles hike along the city Streets and the alps nearby felt like a smooth sail. The most plus it was quite well ventilated cause of the heavy duty Gore-tex material. After 12 hours when I removed it, there was just very little bit of perspiration (My foot sweats a lot) and absolutely no foul smell. Speaking of the Gore-Tex I would say, I sported this on a heavy rainy day after buying it in India, I was fearing the boot, though not very much like normal boots, would still be drenching with water, instead it was very little soaked. And some 5-6 hours later it was completely dry (Kept inside a closed room in the night). The only disadvantage I think is, that it fits my foot perfectly according to the size I chose, and there is no slight pain from 7 AM till 8 PM due to the shoe weight (of course its 1.2 KG, even though it doesn\'t feel like)'
```

Text summarization

To summarize the above text:

- Use the `client` object created earlier.

- Pass the appropriate roles within the `messages` parameter as shown in the example below:

```python
summary_completion = client.chat.completions.create(
    model="gpt-35-turbo",
    messages=[
        {
            "role": "system",
            "content": "You are a helpful assistant for summarizing text",
        },
        {
            "role": "user",
            "content": f"Summarize this text in 10 words: {text}",
        },
    ],
)
```

Notice how the snippet above inserts the review text into the prompt using an f-string.

```python
print(summary_completion.to_json())
```

The output contains the assistant's summarization of the long text, as shown in this excerpt:

```
"message": {
    "content": "Excellent comfort and quality, well-ventilated, quick-drying, lightweight boots, minimal perspiration, no foul smell.",
    "role": "assistant"
},
```

Question answering

To answer questions (based on the input text above), we modify the prompt to pass the question as a string variable. As before, the prompt also includes the text as a variable. The model answers the question based on the given text.

```python
question = 'Are these shoes heavy?'

qna_completion = client.chat.completions.create(
    model="gpt-35-turbo",
    messages=[
        {
            "role": "system",
            "content": "You are a helpful assistant for answering questions",
        },
        {
            "role": "user",
            "content": f"Answer this question: {question} in 10 words based on this text: {text}",
        },
    ],
)
```

Convert the output into JSON and print it:

```python
print(qna_completion.to_json())
```

The output includes the assistant's answer to the question:

```
"message": {
    "content": "Lightweight. Not heavy. Comfortable for long hikes. Well-ventilated Gore-tex material.",
    "role": "assistant"
},
```
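In each example above, the useful text sits in the first choice's `message.content`. With the response objects themselves, you can read it directly as `qna_completion.choices[0].message.content`, and the `finish_reason` field tells you whether the reply ended naturally (`"stop"`) or was cut off by `max_tokens` (`"length"`). If you are working with the JSON printed by `to_json()` instead, a minimal sketch of the same extraction looks like this; `extract_reply` is a hypothetical helper, and the sample response is trimmed to the relevant fields.

```python
import json
from typing import Tuple


def extract_reply(response_json: str) -> Tuple[str, bool]:
    """Return (reply text, truncated?) for the first choice in a chat completion JSON string."""
    choice = json.loads(response_json)["choices"][0]
    # "length" means the model stopped because it hit the max_tokens limit.
    truncated = choice.get("finish_reason") == "length"
    return choice["message"]["content"], truncated


# A trimmed sample shaped like the printed output above (most fields omitted).
sample_response = json.dumps({
    "choices": [
        {
            "finish_reason": "stop",
            "index": 0,
            "message": {
                "content": "Lightweight. Not heavy. Comfortable for long hikes. Well-ventilated Gore-tex material.",
                "role": "assistant",
            },
        }
    ]
})

reply_text, was_truncated = extract_reply(sample_response)
print(reply_text)
print(was_truncated)  # False
```

Checking for truncation is worth doing whenever you set `max_tokens`, because a cut-off answer can otherwise look complete.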

Use Cases for Azure OpenAI

Now, let’s look at the different use cases for the Azure OpenAI service.

Natural language processing (NLP)

As language models at their core, OpenAI's general-purpose models like GPT-3 and GPT-4 excel at NLP tasks, such as:

- Text summarization: Provide the API with a large piece of text as input and ask it to summarize it according to your needs. In the earlier example, we summarized the long customer review into a 10-word summary.

- Text classification: Given a document (or a set of documents) and a set of predefined labels, OpenAI can classify the document(s) into the most appropriate label.

- Sentiment analysis: The API can analyze a piece of input text to gauge its sentiment. For example, it can classify a customer review as positive, negative, or neutral.
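Classification prompts work best when the allowed labels are spelled out in the system message. Because the model answers in free text (for example, "This review can be classified as very positive."), you usually also need to map its reply back onto that fixed set. A minimal sketch; `classification_instruction` and `match_label` are hypothetical helpers, and plain substring matching will not handle negations such as "not positive".

```python
from typing import Optional, Sequence

# The label set used in the sentiment example in this section.
LABELS = ("very positive", "positive", "neutral", "negative", "very negative")


def classification_instruction(labels: Sequence[str]) -> str:
    """Build a system message that spells out the allowed labels."""
    return (
        "You are a helpful assistant for classifying customer reviews into one of "
        "the following categories: " + ", ".join(labels)
    )


def match_label(reply: str, labels: Sequence[str] = LABELS) -> Optional[str]:
    """Map a free-text model reply to one allowed label, or None if no label appears.

    Longer labels are checked first so that "very positive" is not reported as "positive".
    """
    lowered = reply.lower()
    for label in sorted(labels, key=len, reverse=True):
        if label in lowered:
            return label
    return None


print(match_label("This review can be classified as very positive."))  # very positive
```

Returning `None` for an unrecognized reply lets the caller decide whether to retry or flag the review for manual checking.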

In the following example, we perform sentiment analysis to classify the customer review text (defined earlier).

```python
sentiment_classification = client.chat.completions.create(
    model="gpt-35-turbo",
    messages=[
        {
            "role": "system",
            "content": "You are a helpful assistant for classifying customer reviews into one of the following categories: very positive, positive, neutral, negative, very negative",
        },
        {
            "role": "user",
            "content": f"Classify this review: {text}",
        },
    ],
    # max_tokens=16,  # optional: uncomment to cap the length of the reply
)

print(sentiment_classification.to_json())
```

The output contains a snippet resembling the excerpt below, with the assistant's classification of the review:

```
"message": {
    "content": "This review can be classified as very positive.",
    "role": "assistant"
},
```

Code generation

OpenAI models can generate code in various programming languages. Previous releases of OpenAI had dedicated code-specific models, like Codex and code-davinci, which were fine-tuned for code generation.

However, newer models, such as GPT-3.5 and GPT-4, generate code without a separate code-specific model, because they are pre-trained on datasets that include code. Thus, Codex, code-davinci, and many other legacy models have been deprecated and are no longer available for new deployments.

OpenAI models can help write code in various common programming languages. Interact with the model in natural language to give instructions for programming tasks like:

- Generate code: Given a text prompt or a comment, the model can generate a code snippet. The programmer can combine various such snippets into a proper working program. This helps automate repetitive tasks like writing boilerplate code.

- Debug: The model can analyze errors and warning messages to explain the problem in natural language and make suggestions on how to fix it.

- Optimize: OpenAI models can help refactor code into cleaner structures and use more efficient algorithms for common tasks like sorting.

- Explain: OpenAI models can also explain the code snippets they generate. These explanations help customize the generated code to suit the developer’s needs. They can also help new programmers understand programming concepts.
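The model typically returns code wrapped in Markdown code fences inside its text reply, as the sample output later in this section shows. Before saving or running that code, you need to pull it out of the surrounding explanation. A minimal sketch using a regular expression; `extract_code_blocks` is a hypothetical helper, and the sample reply is made up to resemble that kind of output.

```python
import re
from typing import List

# Three backticks, built this way so the snippet can sit inside a Markdown code fence.
FENCE = "`" * 3

# A fence, an optional language tag (e.g. "python"), a newline, then the body (non-greedy).
CODE_BLOCK = re.compile(re.escape(FENCE) + r"[\w+-]*\n(.*?)" + re.escape(FENCE), re.DOTALL)


def extract_code_blocks(reply: str) -> List[str]:
    """Return the body of every fenced code block in a model reply, in order."""
    return [body.rstrip("\n") for body in CODE_BLOCK.findall(reply)]


reply = (
    "Below is a Python function:\n\n"
    + FENCE + "python\n"
    + "def read_file(file_path):\n"
    + "    with open(file_path, 'r') as file:\n"
    + "        return file.read()\n"
    + FENCE + "\n\nThe with statement closes the file automatically."
)
for block in extract_code_blocks(reply):
    print(block)
```

The pattern assumes well-formed fences; a reply with an unclosed fence yields no blocks, which is a reasonable signal to inspect the reply by hand.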

Consider a simple example of writing a Python function to open and read a file. It is a common enough task to be easily automated. We ask OpenAI to generate and explain the code, using the client object created earlier.

```python
code_completion = client.chat.completions.create(
    model="gpt-35-turbo",
    messages=[
        {
            "role": "system",
            "content": "You are a helpful assistant for generating programming code",
        },
        {
            "role": "user",
            "content": "Write and explain a Python function to open and read a file",
        },
    ],
)
```

Print the output:

```python
print(code_completion.to_json())
```

Notice that the output JSON object contains the assistant's output, resembling the sample excerpt below:

```
"content": "Below is a Python function that opens a file, reads its contents, and returns the content as a string:\n\n```python\ndef read_file(file_path):\n try:\n with open(file_path, 'r') as file:\n …
```

Image generation with DALL·E

The previous examples were all text-based. They used OpenAI's large language models (LLMs), which are typically built on the transformer architecture. In addition to LLMs, OpenAI offers diffusion-based models, like DALL·E, which generate images from an input prompt.

To use DALL·E, provide a text-based prompt with a detailed description of the image you want to see. Based on this description, DALL·E generates a high-quality image. This is useful for tasks like creating illustrations, designing product concepts, sketching for marketing campaigns, and more.

DALL·E is not currently available in all geographical regions. If it is available in your region, create a new deployment (as explained earlier in this tutorial), selecting the `dall-e-3` model instead of `gpt-35-turbo`. Access the DALL·E deployment using the same `client` object described previously, and call the `client.images.generate()` method to generate an image:

- Set the `model` parameter to the name of your DALL·E deployment (here, `dalle3`).

- Describe the desired image with the `prompt` parameter.

- Optionally, specify `n`, the number of images to generate. DALL·E 3 generates only one image per request, so keep `n=1`.
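Instead of downloading the image from a URL, as the steps below do, you can ask for the image bytes directly by passing `response_format="b64_json"` to `client.images.generate()`; the image then arrives base64-encoded in `result.data[0].b64_json`. Check that your deployment supports this option. The sketch below decodes and saves such data, creating the output directory if needed. `save_b64_image` is a hypothetical helper, and the API call is left commented out because it needs a live deployment.

```python
import base64
import os


def save_b64_image(b64_data: str, image_dir: str, filename: str = "generated_image.png") -> str:
    """Decode base64 image data, write it to image_dir/filename, and return the path."""
    os.makedirs(image_dir, exist_ok=True)  # create the directory if it is missing
    image_path = os.path.join(image_dir, filename)
    with open(image_path, "wb") as image_file:
        image_file.write(base64.b64decode(b64_data))
    return image_path


# Usage against a DALL·E deployment (requires the client created earlier):
# result = client.images.generate(
#     model="dalle3",
#     prompt="An owl wearing a Christmas hat",
#     n=1,
#     response_format="b64_json",
# )
# save_b64_image(result.data[0].b64_json, os.path.join(os.curdir, "images"))
```

This variant avoids a second HTTP request and the separate `requests` dependency.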

```python
result = client.images.generate(
    model="dalle3",
    prompt="An owl wearing a Christmas hat",
    n=1,
)
```

Extract the JSON response object from the model's response:

```python
import json

json_response = json.loads(result.model_dump_json())
```

Declare the image directory under the current working directory (from which you started the Python shell), and create it if it does not exist yet:

```python
image_dir = os.path.join(os.curdir, 'images')
os.makedirs(image_dir, exist_ok=True)
```

Specify the image path under this directory:

```python
image_path = os.path.join(image_dir, 'generated_image.png')
```

Extract the URL of the generated image from the JSON response object, download the image, and write it to the image file. This step uses the `requests` library, which you may need to install with `pip install requests`:

```python
import requests

image_url = json_response["data"][0]["url"]
generated_image = requests.get(image_url).content

with open(image_path, "wb") as image_file:
    image_file.write(generated_image)
```

Display the image using Pillow (install it with `pip install pillow` if needed):

```python
from PIL import Image

image = Image.open(image_path)
image.show()
```

Best Practices for Using Azure OpenAI

Using the Azure OpenAI service is straightforward, but as with every technology, there are some best practices to follow to get the most out of it. Let’s review them in this section.

Monitoring and managing the API usage

Azure OpenAI is one of Azure’s more expensive services. Even the free credits available to new accounts are not usable towards the OpenAI API. Thus, it is essential to track usage, even for new users. Unchecked API usage can lead to unexpectedly large bills.

OpenAI charges are based on token consumption. Tokens are the units of text the model processes, for both input and output. A long input document consumes many input tokens, just as a long response consumes many output tokens, and both are billed. The more tokens you use, the more you pay. Thus, be economical with the information you give the model or ask it to generate.
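Because input and output tokens are billed separately, typically at different rates, it helps to turn the token counts that every chat completion reports (in `response.usage.prompt_tokens` and `response.usage.completion_tokens`) into a cost estimate. A minimal sketch; the prices below are hypothetical placeholders, so take the real per-million-token rates for your model and region from the Azure OpenAI pricing page.

```python
def estimate_cost(
    prompt_tokens: int,
    completion_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Estimate the charge for one request from its token counts and per-million-token prices."""
    input_cost = prompt_tokens * input_price_per_million / 1_000_000
    output_cost = completion_tokens * output_price_per_million / 1_000_000
    return input_cost + output_cost


# Hypothetical prices, for illustration only.
cost = estimate_cost(
    prompt_tokens=1200,
    completion_tokens=300,
    input_price_per_million=0.50,
    output_price_per_million=1.50,
)
print(f"${cost:.5f}")  # $0.00105
```

Logging this estimate per request makes it easier to spot which prompts dominate your bill.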

Use the `max_tokens` parameter to cap the number of tokens the model can generate in a request. For production deployments, experiment to determine how many tokens your requests or users typically need, and limit usage accordingly. Increase the limit on a case-by-case basis.
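One way to limit usage per user is to track how many tokens each user has consumed and check the budget before each call. A minimal in-memory sketch; `TokenBudget` is a hypothetical class, and a production system would persist the counts (for example, in a database) rather than keep them in a dictionary.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class TokenBudget:
    """Per-user token limit kept in memory (hypothetical helper, not part of the openai library)."""

    limit_per_user: int
    used: Dict[str, int] = field(default_factory=dict)

    def allow(self, user_id: str, expected_tokens: int) -> bool:
        """Return True if a request expected to use expected_tokens still fits the user's budget."""
        return self.used.get(user_id, 0) + expected_tokens <= self.limit_per_user

    def record(self, user_id: str, total_tokens: int) -> None:
        """Add the tokens a finished request actually used, e.g. response.usage.total_tokens."""
        self.used[user_id] = self.used.get(user_id, 0) + total_tokens


budget = TokenBudget(limit_per_user=1000)
if budget.allow("alice", expected_tokens=600):
    # Call the API here with max_tokens set, then record the real usage.
    budget.record("alice", 600)
print(budget.allow("alice", expected_tokens=500))  # False: 600 + 500 exceeds 1000
```

A conservative `expected_tokens` is the prompt length plus `max_tokens`, since the reply cannot exceed that cap.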

To monitor the basic metrics:

- From the Azure AI Services | Azure OpenAI homepage, select the resource to monitor.

- On the resource page, choose the Monitor tab to view various metrics, such as the number of HTTP requests and the number of processed tokens per model and per deployment.

Azure OpenAI metrics dashboard.

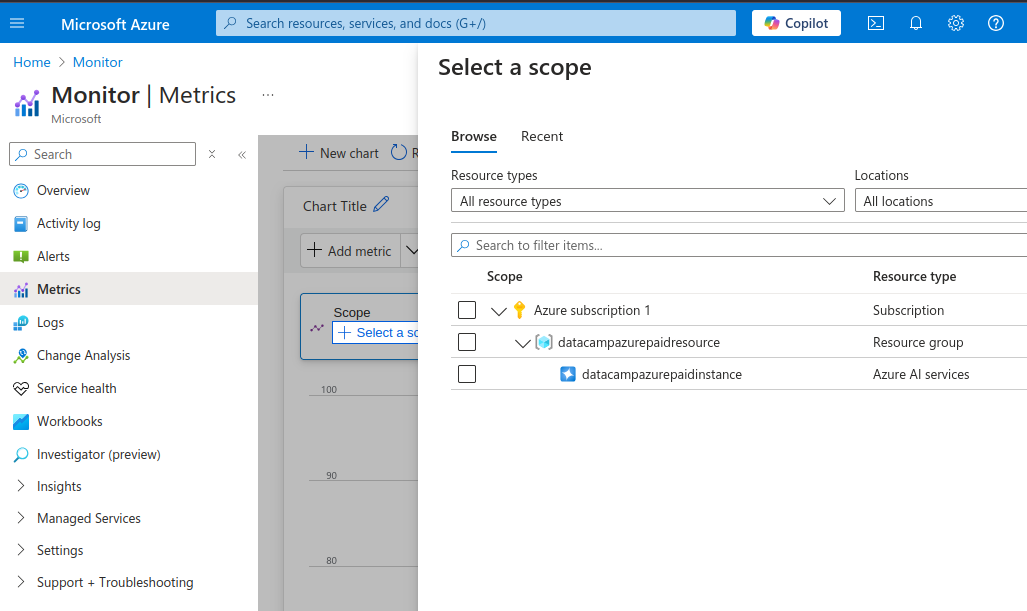

You can also view more detailed metrics:

- From the Monitoring option in the left menu, select Metrics.

Azure OpenAI detailed metrics.

- In the Select a scope pop-up, select the OpenAI instance (mark the checkbox).

- Select Apply.

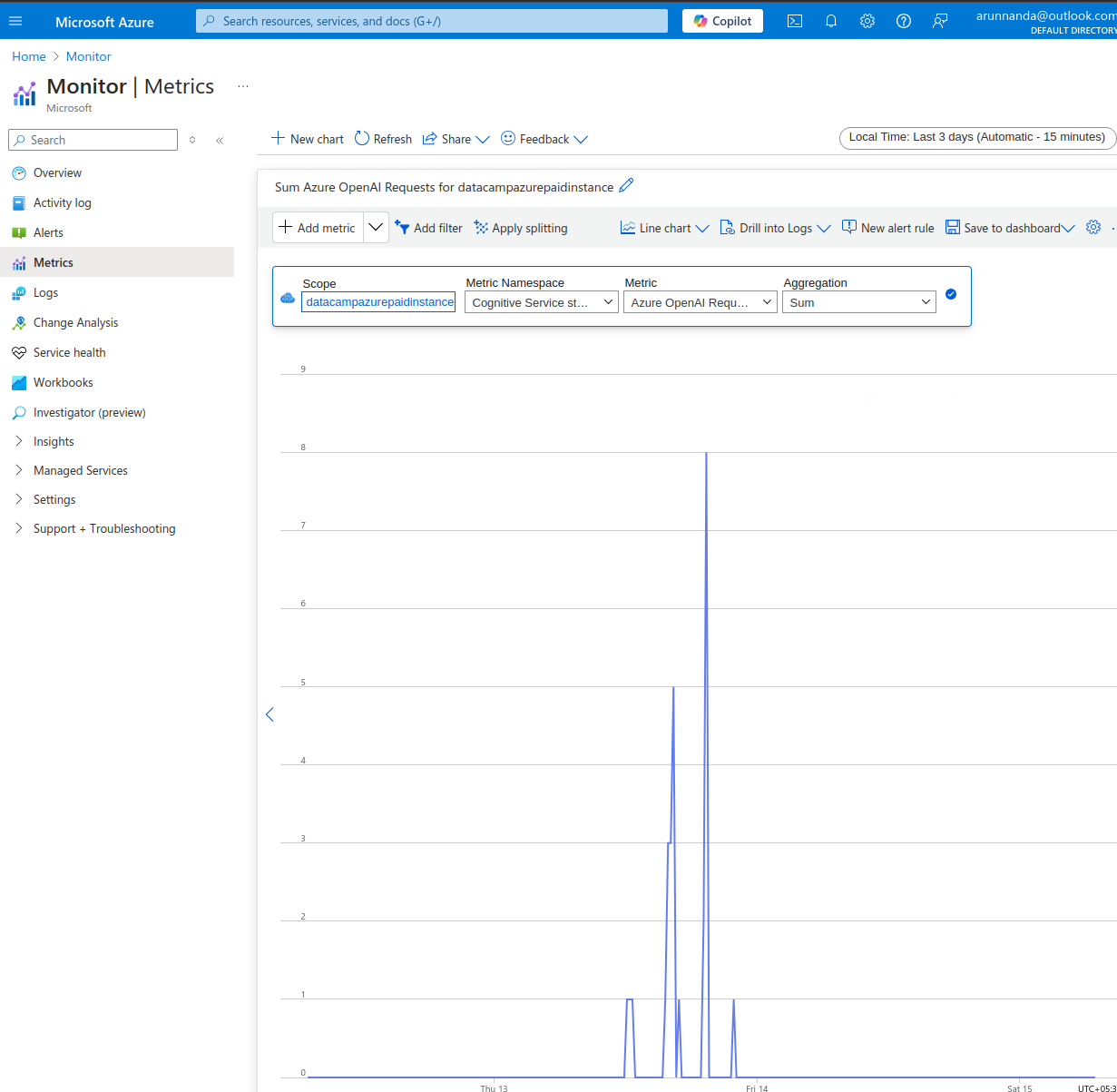

On the Metrics page:

- Select the time window in the top right.

- From the menubar at the top of the (initially empty) chart, select the appropriate metric from the Metric dropdown. Choose from various metrics such as:

- Azure OpenAI requests

- Statistics about token usage (active tokens, generated tokens, etc.)

- Performance of API calls (time to first byte, etc.), and more.

Azure OpenAI monitoring example.

Lastly, always set up budget limits on API accounts and configure the settings to receive email alerts when you cross a budget threshold.

Fine-tuning models

OpenAI's base models are trained on huge datasets covering many different domains. Broadly, there are three ways of getting the desired output from LLMs:

- Prompt engineering: For most use cases, adding specific instructions to the prompt is enough. This is called prompt engineering.

- Few-shot learning: In cases where prompt engineering is insufficient, add a few examples to the prompt to help the model understand the user's desired responses. This is called few-shot learning, in which the model learns based on a small number of examples.

- Fine-tuning: Sometimes, you want to chat with the model about a very niche domain, for example, based on a manual for repairing specialized equipment. Generating text on this topic involves using domain-specific jargon and language patterns, which are unlikely to have been part of public datasets (on which the base models were trained).

You can fine-tune a base model if few-shot learning and prompt engineering don’t help get the right responses.

Fine-tuning refers to further training a pre-trained base model on a specialized dataset. In practice, typically only a small part of the model's parameters (not the entire model) is updated on the new data. The Azure fine-tuning tutorial explains the steps for fine-tuning a popular OpenAI model.

Remember that to successfully fine-tune a base model, you need large volumes of relevant, high-quality data with thousands of training examples. Furthermore, fine-tuning involves upfront training costs, and once deployed, a fine-tuned model incurs hosting charges on top of token usage.
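For chat models, Azure OpenAI fine-tuning takes training data in JSON Lines format: each line is one example conversation stored under a `messages` key, using the same roles as the chat API. A minimal sketch that writes question-answer pairs in that shape; `write_chat_jsonl` is a hypothetical helper, and the Azure fine-tuning documentation lists the exact requirements (such as the minimum number of examples) for each model.

```python
import json
from typing import Iterable, Tuple


def write_chat_jsonl(examples: Iterable[Tuple[str, str]], system_prompt: str, path: str) -> int:
    """Write (question, answer) pairs as chat-format JSONL and return the number of lines written."""
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for question, answer in examples:
            record = {
                "messages": [
                    {"role": "system", "content": system_prompt},
                    {"role": "user", "content": question},
                    {"role": "assistant", "content": answer},
                ]
            }
            # One JSON object per line, as the JSON Lines format requires.
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
            count += 1
    return count
```

For example, with hypothetical repair-manual data, `write_chat_jsonl([("How do I reset the pump controller?", "Hold the reset button for five seconds.")], "You answer questions about a pump repair manual.", "training.jsonl")` writes a one-line training file.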

Securing your API keys

Publicly exposed API keys can be abused to access sensitive information, perform unsafe and/or illegal tasks, or make unauthorized requests, resulting in a large bill for the account owner. Thus, it is crucial to securely manage API keys instead of hardcoding them in the source code. Various approaches can be taken to do this:

- For cloud services, use key management tools like AWS Secrets Manager and Azure Key Vault.

- Use `.env` files to store keys in key-value format. Add this file to the `.gitignore` list to ensure it doesn't get committed to the code repository.

- For Jupyter notebook-based services, use the option to store keys as part of the environment. This method was described earlier in this tutorial.
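The python-dotenv package's `load_dotenv()` is the usual way to read a `.env` file into the environment. To show what it does, here is a minimal dependency-free sketch; `load_env_file` is a hypothetical helper that handles only simple `KEY=VALUE` lines (no `export` prefixes, escape sequences, or multi-line values).

```python
import os
from typing import Dict


def load_env_file(path: str = ".env", override: bool = False) -> Dict[str, str]:
    """Read simple KEY=VALUE lines from a .env file into os.environ and return what was read.

    Blank lines and lines starting with '#' are skipped, surrounding quotes are
    stripped from values, and existing variables win unless override=True.
    """
    loaded: Dict[str, str] = {}
    with open(path, encoding="utf-8") as f:
        for raw in f:
            line = raw.strip()
            if not line or line.startswith("#") or "=" not in line:
                continue
            key, value = line.split("=", 1)
            key, value = key.strip(), value.strip().strip("'\"")
            if override or key not in os.environ:
                os.environ[key] = value
            loaded[key] = value
    return loaded


# Usage, with a .env file next to the script containing lines such as
# AZURE_OPENAI_API_KEY='MY_API_KEY'
# load_env_file(".env")
```

Keeping existing variables by default mirrors the common convention that the real environment takes precedence over the file.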

I advise limiting the permissions granted to each key wherever the platform allows it. Rotating or regenerating keys periodically also ensures that old keys cannot be misused if they are leaked.

Conclusion

In this tutorial, we covered creating an Azure account, setting up Azure OpenAI, creating a new Azure OpenAI instance, and deploying a model. We then saw examples of using OpenAI models for practical tasks. We also covered how to monitor the usage and performance of Azure OpenAI resources.

For a deeper understanding of Microsoft Azure, follow the DataCamp Azure Fundamentals track. To learn more about the core concepts of OpenAI, follow the DataCamp OpenAI Fundamentals track.


FAQs

How is Azure OpenAI different from OpenAI?

Azure OpenAI allows Azure users to access OpenAI models from within the Azure platform. Thus, Azure users can avoid having to configure and manage yet another software platform. It also makes it easier to integrate with other Azure-based services.

Does Azure OpenAI allow you to fine-tune models?

Yes, you can supply training and validation datasets tailored to your specific use case and use them to fine-tune a base model.

However, bear in mind that the performance of the fine-tuned model is highly dependent on the quality of the training data. Poor-quality or irrelevant data can lead to degraded performance compared to the base model.

What methods can I use to access Azure OpenAI?

You can access Azure OpenAI services in two ways: through the web interface (the Azure AI Foundry portal) or through the API. The web interface works like the ChatGPT web interface, while the API lets you interact with the models programmatically and integrate AI tools with other applications.

What languages can I use with the Azure OpenAI API?

Azure OpenAI supports various languages like C#, Go, Java, JavaScript, Python, and REST (HTTP requests). Python is one of the most commonly used languages due to its popularity in the data science and AI/ML domain. More importantly, the Python ecosystem includes many tools to work with large datasets.

To integrate with server-side applications, use the same language as the rest of your application.

What models can I access using Azure OpenAI?

Azure OpenAI gives access to models like GPT-4, GPT-4o, GPT-3.5, DALL·E (text to image), Whisper (speech to text), and many more. A list of the currently available models can be found in the Azure documentation.

Arun is a former startup founder who enjoys building new things. He is currently exploring the technical and mathematical foundations of Artificial Intelligence. He loves sharing what he has learned, so he writes about it.

In addition to DataCamp, you can read his publications on Medium, Airbyte, and Vultr.