Course

LangChain’s 90k GitHub stars are all the credibility it needs—right now, it is the hottest framework to build LLM-based applications. Its comprehensive set of tools and components allows you to build end-to-end AI solutions using almost any LLM.

Perhaps at the heart of LangChain’s capabilities are LangChain agents. They are autonomous or semi-autonomous tools that can perform tasks, make decisions, and interact with other tools and APIs. They represent a significant leap forward in automating complex workflows with LLMs.

In this article, you will learn how to build your own LangChain agents that can perform tasks not strictly possible with today's chat applications like ChatGPT.

Multi-Agent Systems with LangGraph

Setup

Before we get into anything, let’s set up our environment for the tutorial.

First, creating a new Conda environment:

$ conda create -n langchain python=3.9 -y

$ conda activate langchainInstalling LangChain’s packages and a few other necessary libraries:

$ pip install langchain langchain_openai langchain_community langgraph ipykernel python-dotenvAdding the newly created Conda environment to Jupyter as a kernel:

$ ipython kernel install --user --name=langchainCreating a .env file to store secrets such as API keys:

$ touch .env

$ vim .env # Paste your OPENAI keyOPENAI_API_KEY='YOUR_KEY_HERE'Retrieving your OpenAI API key from the .env file:

import os

from dotenv import load_dotenv

load_dotenv()

api_key = os.getenv('OPENAI_API_KEY')Testing that everything is working correctly by querying GPT-3.5 (the default language model) of OpenAI:

from langchain_openai import OpenAI

llm = OpenAI(openai_api_key=api_key)

question = "Is Messi the best footballer of all time?"

output = llm.invoke(question)

print(output[:75])There is no definitive answer to this question, as it is subjective and deNow, we are ready to get started.

What are LangChain Agents?

Let’s spend some time thinking about the agent framework. Specifically, we will consider how it differs from the traditional chain paradigm and what the components of an agent are. Understanding why we need to choose a new way of building applications will prepare us for writing the code.

Chains vs. Agents

The defining trait of agents is their ability to choose the best order of actions to solve a problem given a set of tools.

For example, let’s say we have the following:

- A weather API

- ML model for clothing recommendations

- Strava API for biking routes

- User preferences database

- Image recognition model

- Language model (text generation)

Traditional problem-solving would involve using a chain of select tools from the list:

Chain 1: Weather-based clothing recommender

- Call the weather API

- Input weather data into the ML clothing model

- Generate clothing recommendations

- Present results to the user

Chain 2: Weather-based biking route suggester

- Call the weather API

- Call the Strava API for popular routes

- Filter routes based on weather conditions

- Present suitable routes to the user

Chain 3: Outfit Photo Analyzer

- Receive the user's outfit photo

- Use an image recognition model to identify clothing items

- Compare with the user preference database

- Generate feedback using the text generation model

- Present the analysis to the user

Each chain solves a specific problem using a predetermined sequence of steps and a subset of the available tools. They cannot adapt beyond their defined scope. They also require three separate branches of development, which is inefficient in terms of time and resources.

Now, imagine an agentic system with access to all these tools. It would be able to:

- Understand the user's query or problem (through natural language with a language model)

- Assess which tools are relevant to the problem (reasoning)

- Dynamically create a workflow using the most appropriate tools

- Execute the workflow, making real-time adjustments if needed (acting)

- Evaluate the outcome and learn from past interactions

For example, if a user asks, “What should I wear for my bike ride today?” the agent might check the weather API, analyze suitable biking routes through Strava, recommend appropriate clothing, considering the user's past preferences, and generate a personalized response.

The agent can:

- Handle a wide variety of problems using the same set of tools

- Create custom workflows for each unique situation

- Adapt its approach based on the specific context and user needs

- Learn from interactions to improve future performance

LangChain’s capacity to transform language models — which, by themselves, only produce text — into reasoning engines that can use the resources at their disposal to take appropriate action is one of its main applications. In short, LangChain enables the development of strong autonomous agents that interact with the outside world.

Key components

A LangChain agent is made up of several components, such as chat models, prompt templates, external tools, and other related constructs. To build successful agents, we need to review each component and understand their use.

Language and chat models

There are a lot of moving parts involved in creating a LangChain agent. The first and most obvious is a language model.

from langchain_openai import OpenAI

llm = OpenAI(api_key=api_key, model="gpt-3.5-turbo-instruct")

question = "What is special about the number 73?"

output = llm.invoke(question)

print(output[:100])1. Prime Number: 73 is a prime number, which means it is only divisible by 1 and itself. This makeLanguage models, like OpenAI’s GPT-3.5 Turbo, take and generate strings. They are typically older and work best to answer individual user queries.

Newer and more powerful models are usually chat models, which can take a sequence of messages as inputs and return chat messages as outputs (as opposed to using plain text):

from langchain_openai import ChatOpenAI

from langchain_core.messages import HumanMessage, AIMessageChunk, SystemMessage

# Initialize the model

chat_model = ChatOpenAI(api_key=api_key, model='gpt-4o-mini')

# Write the messages

messages = [SystemMessage(content='You are a grumpy pirate.'),

HumanMessage(content="What's up?")]

output = chat_model.invoke(messages)Put differently, chat models allow us to have conversations in natural language. In the example above, we are initializing GPT-4o-mini with a system message followed by a user query. Note the use of SystemMessage and HumanMessage classes.

The output is a message object, which is the expected behavior of chat models:

type(output)langchain_core.messages.ai.AIMessageprint(output.content)Arrr, not much but the sound of waves and the creakin' of me ship. What do ye want? Make it quick, I've got treasure to hunt and rum to drink!Besides, they return other useful metadata accessible with dot-notation:

output.dict(){'content': "Arrr, not much but the sound of waves and the creakin' of me ship. What do ye want? Make it quick, I've got treasure to hunt and rum to drink!",

'additional_kwargs': {},

'response_metadata': {'token_usage': {'completion_tokens': 38,

'prompt_tokens': 21,

'total_tokens': 59},

'model_name': 'gpt-4o-mini-2024-07-18',

'system_fingerprint': 'fp_48196bc67a',

'finish_reason': 'stop',

'logprobs': None},

'type': 'ai',

'name': None,

'id': 'run-fde829bf-8f5f-4926-a1ed-ab53609ce03a-0',

'example': False,

'tool_calls': [],

'invalid_tool_calls': [],

'usage_metadata': {'input_tokens': 21,

'output_tokens': 38,

'total_tokens': 59}}Most agents use chat models because of their updated knowledge base and conversational capabilities. However, for simple agents with no memory requirements, language models like GPT-3.5 will be enough.

Prompt templates

The most efficient way to query language or chat models is by using prompt templates. They allow you to structure your queries consistently and dynamically insert variables, making your interactions with the model more flexible and reusable.

In LangChain, there are many types of prompt templates, with the most basic one being PromptTemplate class. It can be used with language (plain text) models:

from langchain_core.prompts import PromptTemplate

query_template = "Tell me about {book_name} by {author}."

prompt = PromptTemplate(input_variables=["book_name", "author"], template=query_template)

prompt.invoke({"book_name": "Song of Ice and Fire", "author": "GRRM"})StringPromptValue(text='Tell me about Song of Ice and Fire by GRRM.')The class requires you to create a string with placeholders for variables you want to replace using the bracket notation. Then, you need to pass this template string to the PromptTemplate class along with the names of the variables, thus constructing your prompt.

Calling .invoke() with values for variables will show how your prompt will be passed to a model.

Passing this prompt template to a language model requires us to chain it using the pipe operator:

from langchain_openai import OpenAI

llm = OpenAI(api_key=api_key)

# Create a chain

chain = prompt | llm

# Invoke the chain

output = chain.invoke({"book_name": "Deathly Hallows", "author": "J.K. Rowling"})

print(output[:100])Deathly Hallows is the seventh and final book in the popular Harry Potter series, written by J.K. RThe pipe operator (|) is part of LangChain Expression Language (LCEL), designed to chain multiple LangChain components and tools.

type(chain)langchain_core.runnables.base.RunnableSequenceWhen you use the pipe operator on LangChain objects, you create an instance of RunnableSequence class. A runnable sequence represents a chain of objects that support the .invoke() method, like prompt templates and language/chat models.

Now, let’s look at another prompt template class for chat models:

from langchain_core.prompts import ChatPromptTemplateWe mentioned that chat models require a sequence of messages as inputs. The initial input is usually a system prompt telling the chat model how to behave. So, using the ChatPromptTemplate class, we can easily create chat models with different personalities:

chat_model = ChatOpenAI(api_key=api_key, model="gpt-4o-mini")

template = ChatPromptTemplate([

('system', 'You are a helpful AI bot. Your specialty is {specialty}.'),

('human', 'Explain the concept of {concept} based on your expertise.')

])The class requires a list of role-based messages as input. Each member of the list must be a (role, message) tuple with the variable placeholders defined where needed.

After we have it ready, we can use the same pipe operator to create chat models with different behaviors:

specialties = ["psychology", "economics", "politics"]

concept = "time"

# Call the model with different personalities

for s in specialties:

chain = template | chat_model

output = chain.invoke({"specialty": s, "concept": concept})

print(output.content[:100], end="\n" + "-" * 25 + '\n')Time is a complex and multifaceted concept that has been explored from various perspectives, includi

-------------------------

In economics, the concept of time is multifaceted and plays a crucial role in various theories and m

-------------------------

While my primary focus is on politics, I can provide a brief overview of the concept of time as it m

-------------------------Tools

In a previous section, we mentioned that agents can choose a combination of tools at their disposal to solve a particular problem, with LLMs as reasoning engines under the hood.

LangChain offers integrations with dozens of popular APIs and services to let agents interact with the rest of the world. Most of them are available under the langchain_community package, while some are inside langchain_core.

For example, here is how you can use the ArXiv tool to retrieve paper summaries on various topics:

!pip install -Uq arxiv # Install arXiv Python SDKfrom langchain_community.tools import ArxivQueryRun

tool = ArxivQueryRun()

print(tool.invoke('Photosynthesis')[:250])Published: 2019-08-28

Title: Photosynthesis on Exoplanets and Exomoons from Reflected Light

Authors: Manasvi Lingam, Abraham Loeb

Summary: Photosynthesis offers a convenient means of sustaining biospheres. We

quantify the constraints for photosynthesThere is an alternative way to load tools rather than import them by their class name:

from langchain_community.agent_toolkits.load_tools import load_tools

tools = load_tools(["arxiv", "dalle-image-generator"])Above, we are loading both the arXiv and Dall-E image generator tools at the same time using the load_tools() function. Tools loaded with this function have the same usage syntax:

# Call arXiv

print(tools[0].invoke("Kaggle")[:150])Published: 2020-06-06

Title: StackOverflow vs Kaggle: A Study of Developer Discussions About Data Science

Authors: David Hin

Summary: Software develop# Generate an image with DallE

output_image_url = tools[1].invoke("A magnificent chain in a cartoon.")

output_image_url'https://oaidalleapiprodscus.blob.core.windows.net/...'

The load_tools function requires you to know the string names of tool classes, like the example of ArxivQueryRun versus 'arxiv'. You can quickly check the string name of any tool by running the get_all_tool_names function:

from langchain_community.agent_toolkits.load_tools import get_all_tool_names

get_all_tool_names()[:10]['sleep',

'wolfram-alpha',

'google-search',

'google-search-results-json',

'searx-search-results-json',

'bing-search',

'metaphor-search',

'ddg-search',

'google-lens',

'google-serper']Note that load_tools() is only a shorthand function. When building agents, it is recommended to load tools using their class constructor, which allows you to configure them based on their specific behavior.

Step-by-Step Workflow of How to Build LangChain Agents

Finally, in this section, we will see how to create LangChain agents step-by-step using the knowledge we have gained in the previous sections.

In the coming examples, we will build an agent capable of explaining any topic via three mediums: text, image, or video. More specifically, based on the question asked, the agent will decide whether to explain the topic in what format.

Let’s start. Remember to check out how to set up the environment, which is covered at the beginning of the article.

1. Defining tools

The first step after configuring our environment is defining the tools we will give to our agent. Let’s import them:

from langchain_community.tools import WikipediaQueryRun # pip install wikipedia

from langchain_community.utilities import WikipediaAPIWrapper

from langchain_community.tools import YouTubeSearchTool # pip install youtube_search

from langchain_community.tools.openai_dalle_image_generation import (

OpenAIDALLEImageGenerationTool

)

from langchain_community.utilities.dalle_image_generator import DallEAPIWrapperWe are importing five classes:

WikipediaAPIWrapper: to configure how to access the Wikipedia APIWikipediaQueryRun: to generate Wikipedia page summariesYouTubeSearchTool: to search YouTube videos on topicsDallEAPIWrapper: to configure how to access OpenAI's DallE endpointOpenAIDALLEImageGenerationTool: to generate images using prompts

When a user queries our agent, it will decide whether to explain the topic using a Wikipedia article in text format, or by creating an image using Dall-E for visual understanding, or by suggesting YouTube videos for deeper comprehension.

Let’s initialize them, starting with the Wikipedia tool:

wiki_api_wrapper = WikipediaAPIWrapper(top_k_results=1, doc_content_chars_max=250)

wikipedia = WikipediaQueryRun(description="A tool to explain things in text format. Use this tool if you think the user’s asked concept is best explained through text.", api_wrapper=wiki_api_wrapper)

print(wikipedia.invoke("Mobius strip"))Page: Möbius strip

Summary: In mathematics, a Möbius strip, Möbius band, or Möbius loop is a surface that can be formed by attaching the ends of a strip of paper together with a half-twist. As a mathematical object, it was discovered by Johann BenediDallE image generator:

dalle_api_wrapper = DallEAPIWrapper(model="dall-e-3", size="1792x1024")

dalle = OpenAIDALLEImageGenerationTool(

api_wrapper=dalle_api_wrapper, description="A tool to generate images. Use this tool if you think the user’s asked concept is best explained through an image."

)

output = dalle.invoke("A mountain bike illustration.")

print(output)

YouTube search tool:

youtube = YouTubeSearchTool(

description="A tool to search YouTube videos. Use this tool if you think the user’s asked concept can be best explained by watching a video."

)

youtube.run("Oiling a bike's chain")"['https://www.youtube.com/watch?v=X1Vze17bhgk&pp=ygUVT2lsaW5nIGEgYmlrZSdzIGNoYWlu', 'https://www.youtube.com/watch?v=T6xyn1FGHiU&pp=ygUVT2lsaW5nIGEgYmlrZSdzIGNoYWlu']"Take special care of the tool descriptions. The agent will decide which one tool to use based on the description you provide.

Now, we will put the tools into a list:

tools = [wikipedia, dalle, youtube]We can already bind this set of tools to a chat model without creating an agent:

chat_model = ChatOpenAI(api_key=api_key)

model_with_tools = chat_model.bind_tools(tools)Let’s try calling the model with a simple message:

response = model_with_tools.invoke([HumanMessage("What's up?!")])

print(f"Text response: {response.content}")

print(f"Tools used in the response: {response.tool_calls}")Text response: Hello! How can I assist you today?

Tools used in the response: []The output shows that none of the bound tools were used when generating an answer. Now, let’s ask a specific question that would force the model to look beyond its training data:

response = model_with_tools.invoke([

HumanMessage("Can you generate an image of a mountain bike?")

])

print(f"Text response: {response.content}")

print(f"Tools used in the response: {response.tool_calls}")Text response:

Tools used in the response: [{'name': 'openai_dalle', 'args': {'query': 'mountain bike'}, 'id': 'call_92GBfmsYtPi9TpGuIOFB1pG8', 'type': 'tool_call'}]We can see there is no text output, but OpenAI’s DallE is mentioned. The tool isn’t called yet; the model is simply suggesting we use it. To actually call it — to take action, we need to create an agent.

2. Creating a simple agent

After defining the model and the tools, we create the agent. LangChain offers a high-level create_react_agent() function interface from its langgraph package to quickly create ReAct (reason and act) agents:

from langgraph.prebuilt import create_react_agent

system_prompt = SystemMessage("You are a helpful bot named Chandler.")

agent = create_react_agent(chat_model, tools, state_modifier=system_prompt)While initializing the agent with a chat model and a list of tools, we are passing a system prompt to tell the model how to behave in general. It is now ready to accept queries:

from pprint import pprint

response = agent.invoke({"messages": HumanMessage("What's up?")})

pprint(response["messages"])[HumanMessage(content="What's up?", id='133b9380-cfe1-495a-98f7-b835c874bd57'),

AIMessage(content='Hello! How can I assist you today?', response_metadata={'token_usage': {'completion_tokens': 10, 'prompt_tokens': 112, 'total_tokens': 122}, 'model_name': 'gpt-3.5-turbo-0125', 'system_fingerprint': None, 'finish_reason': 'stop', 'logprobs': None}, id='run-0a920c15-39b4-4857-ab51-6ea905575dba-0', usage_metadata={'input_tokens': 112, 'output_tokens': 10, 'total_tokens': 122})]We have received a likely response, which is a simple text answer without tool calls. Now, let’s ask something more to the point:

response = agent.invoke({"messages": [

HumanMessage('Explain how photosynthesis works.')

]})

print(len(response['messages']))4This time, there are four messages. Let’s see the message class names and their content:

for message in response['messages']:

print(

f"{message.__class__.__name__}: {message.content}"

) # Print message class name and its content

print("-" * 20, end="\n")HumanMessage: Explain how photosynthesis works.

--------------------

AIMessage:

--------------------

ToolMessage: Page: Photosynthesis

Summary: Photosynthesis ( FOH-tə-SINTH-ə-sis) is a system of biological processes by which photosynthetic organisms, such as most plants, algae, and cyanobacteria, convert light energy, typically from sunlight, into the chemical

--------------------

AIMessage: Photosynthesis is a biological process where photosynthetic organisms like plants, algae, and cyanobacteria convert light energy, usually from sunlight, into chemical energy. This process involves capturing light energy through pigments like chlorophyll in plant cells and using it to convert carbon dioxide and water into glucose and oxygen. Glucose serves as a source of energy for the plant, while oxygen is released into the atmosphere as a byproduct. Photosynthesis is crucial for the survival of plants and the balance of oxygen and carbon dioxide in the atmosphere.

--------------------Here we go! The third message is from a tool call, which is a summary of a Wikipedia page on photosynthesis. The last message is from the chat model, which is using the tool call’s contents when constructing its answer.

Let’s quickly create a function to modularize the last steps we took:

def execute(agent, query):

response = agent.invoke({'messages': [HumanMessage(query)]})

for message in response['messages']:

print(

f"{message.__class__.__name__}: {message.content}"

) # Print message class name and its content

print("-" * 20, end="\n")

return response3. Refining the system prompt

Now, let’s update our system prompt with detailed instructions on how the agent should behave:

system_prompt = SystemMessage(

"""

You are a helpful bot named Chandler. Your task is to explain topics

asked by the user via three mediums: text, image or video.

If the asked topic is best explained in text format, use the Wikipedia tool.

If the topic is best explained by showing a picture of it, generate an image

of the topic using Dall-E image generator and print the image URL.

Finally, if video is the best medium to explain the topic, conduct a YouTube search on it

and return found video links.

"""

)Let’s recreate our agent with the new system prompt:

agent = create_react_agent(chat_model, tools, state_modifier=system_prompt)

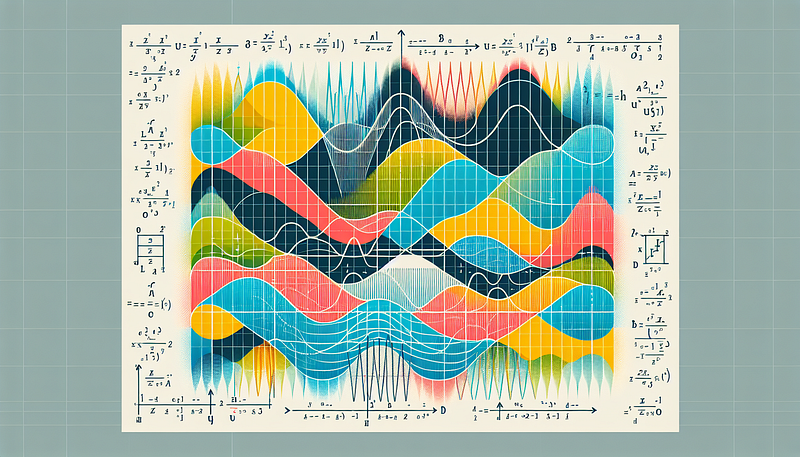

response = execute(agent, query='Explain the Fourier Series visually.')HumanMessage: Explain the Fourier Series visually.

--------------------

AIMessage:

--------------------

ToolMessage: https://oaidalleapiprodscus.blob.core.windows.net/private/org-qRwX4bsgcnaYHHwbxFBdZxUy/user-LOXQPflMtXxamV72hac9oS2O/img-iY3gXXBzWapRWKdmkd9cXEIN.png?st=2024-08-09T18%3A36%3A46Z&se=2024-08-09T20%3A36%3A46Z&sp=r&sv=2024-08-04&sr=b&rscd=inline&rsct=image/png&skoid=d505667d-d6c1-4a0a-bac7-5c84a87759f8&sktid=a48cca56-e6da-484e-a814-9c849652bcb3&skt=2024-08-09T17%3A56%3A46Z&ske=2024-08-10T17%3A56%3A46Z&sks=b&skv=2024-08-04&sig=Ly8F4jvakFeEtpZ/6jMliLq%2BG3Xs%2Bz1AmX1sL06%2BQ3U%3D

--------------------

AIMessage: Here is a visual representation of the Fourier Series:

The Fourier Series is a way to represent a function as the sum of simple sine waves. It is used in many areas of mathematics and physics to analyze and understand periodic phenomena.

--------------------Awesome, based on our message (which was very instructive :), the agent chose the correct tool for the job. Here is the generated image:

4. Adding memory to agents

Right now, our agent is stateless, which means it doesn’t remember previous interactions:

response = execute(agent, query="What did I ask you in the previous query?")HumanMessage: What did I ask you in the previous query?

--------------------

AIMessage: I'm sorry, I cannot remember the previous query as I don't have access to the conversation history. How can I assist you today?

--------------------The easiest way to add chat message history to agents is by using langgraph's SqliteSaver class:

from langgraph.checkpoint.sqlite import SqliteSaver

memory = SqliteSaver.from_conn_string(':agent_history:')

agent = create_react_agent(chat_model, tools, checkpointer=memory, state_modifier=system_prompt)We initialize the memory using the .from_conn_string() method of SqliteSaver class, which creates a database file. Then, we pass the memory to the checkpointer parameter of create_react_agent() function.

Now, we need to create a configuration dictionary:

config = {'configurable': {'thread_id': 'a1b2c3'}}The dictionary defines a thread ID to distinguish one conversation from another and it is passed to the .invoke() method of our agent. So, let's update our execute() function to include this behavior:

def execute(agent, query, thread_id="a1b2c3"):

config = {"configurable": {"thread_id": thread_id}}

response = agent.invoke({'messages': [HumanMessage(query)]}, config=config)

for message in response["messages"]:

print(

f"{message.__class__.__name__}: {message.content}"

) # Print message class name and its content

print("-" * 20, end="\n")

return response

Let’s test it again:

response = execute(

agent, query="Explain how to oil a bike's chain using a YouTube video", thread_id="123"

)HumanMessage: Explain how to oil a bike's chain using a YouTube video

--------------------

AIMessage:

--------------------

ToolMessage: ['https://www.youtube.com/watch?v=X1Vze17bhgk&pp=ygUXaG93IHRvIG9pbCBhIGJpa2UgY2hhaW4%3D', 'https://www.youtube.com/watch?v=ubKCHtZ20-0&pp=ygUXaG93IHRvIG9pbCBhIGJpa2UgY2hhaW4%3D']

--------------------

AIMessage: I found a couple of YouTube videos that explain how to oil a bike chain:

1. [Video 1: How to oil a bike chain](https://www.youtube.com/watch?v=X1Vze17bhgk&pp=ygUXaG93IHRvIG9pbCBhIGJpa2UgY2hhaW4%3D)

2. [Video 2: Step-by-step guide on oiling a bike chain](https://www.youtube.com/watch?v=ubKCHtZ20-0&pp=ygUXaG93IHRvIG9pbCBhIGJpa2UgY2hhaW4%3D)

You can watch these videos to learn how to oil your bike's chain effectively.

--------------------Now, let’s ask the agent about previous queries:

response = execute(agent, query='What have I asked you so far?', thread_id='123')

print(response){'messages': [HumanMessage(content="Explain how to oil a bike's chain using a YouTube video", id='8254142b-fb77-4958-8ad9-0a0283c6611a'), ...

]As expected, the agent is returning the previous messages! Now, we only need a chat UI like that of ChatGPT and we have got ourselves a custom chatbot.

Future Trends And Developments

Throughout the article, we have caught a glimpse of where LangChain is going in terms of agents. Up until very recently, LangChain had mainly used the AgentExecutor class but it is slowly being replaced by langgraph agents.

Pure LangChain agents are fine to get started, but they require more lines of code to build the same agent than in LangGraph. Also, after a certain point, the AgentExecutor framework won't provide the flexibility LangGraph has for building complex multi-tool agents.

That’s why now is a great time to ride the wave and start with LangGraph directly.

We highly recommend to start using LangSmith as well, which has become a core part of the LangChain ecosystem for building production-grade LLM applications. Here are some of its key benefits:

- Debugging: LangSmith provides detailed traces of your agent’s execution, making it easier to identify and fix issues.

- Performance Optimization: With LangSmith, you can analyze token usage, latency, and other performance metrics to optimize your agent’s efficiency.

- Testing and Evaluation: LangSmith facilitates the creation and management of test datasets, enabling you to rigorously evaluate your agent’s performance across a range of scenarios.

- Monitoring: In production environments, LangSmith offers real-time monitoring capabilities, allowing you to track your agent’s performance and detect anomalies quickly.

Here is how you can get started with LangSmith:

- Sign up for a free account here.

- Set environment variables.

export LANGCHAIN_TRACING_V2="true"

export LANGCHAIN_API_KEY="..."

and you are good to go! As you start querying language/chat models, LangSmith starts logging various metrics about each run:

Conclusion

In this article, we explored what makes LangChain agents distinct from chains and the important building blocks used in constructing them. We first introduced what agents are and how they differ from the more traditional chain constructs regarding flexibility and capability in making decisions.

Then we looked at the key components you need to know about in order to build an agent: chat models, tools, and prompt templates. Finally, we ran through two examples demonstrating how to build simple and advanced agents. Natural language processing is developing continually, and LangChain agents are at the forefront of this progression, paving the way for an even more intelligent and versatile family of AI.

Here are some related resources to increase your LangChain:

- Developing LLM Applications with LangChain Course

- An Introduction to Prompt Engineering with LangChain

- How to Build LLM Applications with LangChain Tutorial

- Building a GPT Model with Browsing Capabilities Using LangChain Tools

- LangChain vs LlamaIndex: A Detailed Comparison

Thank you for reading!

I am a data science content creator with over 2 years of experience and one of the largest followings on Medium. I like to write detailed articles on AI and ML with a bit of a sarcastıc style because you've got to do something to make them a bit less dull. I have produced over 130 articles and a DataCamp course to boot, with another one in the makıng. My content has been seen by over 5 million pairs of eyes, 20k of whom became followers on both Medium and LinkedIn.