
For us programmers, coding is perhaps one of the most important applications of LLMs. The ability to generate accurate code, debug it, and properly “understand” it is what makes an LLM a valuable assistant for many programmers, in addition to its many enterprise benefits.

Mistral has recently released their latest model, Codestral 25.01, also known as Codestral V2—an upgrade to their first Codestral model released in 2024. In this article, I’ll review Codestral 25.01, evaluate its performance in benchmarks, guide you through its setup, and demonstrate it in action.

What Is Mistral’s Codestral 25.01?

Quoting from Mistral’s announcement, Codestral 25.01 is “lightweight, fast, and proficient in over 80 programming languages (...) optimized for low-latency, high-frequency use cases and supports tasks such as fill-in-the-middle (FIM), code correction and test generation.”
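To make fill-in-the-middle more concrete: the editor sends the code before the cursor as a prompt and the code after it as a suffix, and the model generates what belongs in between. The sketch below only builds such a request body and does not call any API. The field names (`prompt`, `suffix`, `max_tokens`, `temperature`) and the `codestral-latest` model alias reflect my understanding of Mistral's FIM API and should be treated as assumptions; `split_at_cursor` and `build_fim_payload` are hypothetical helpers written for this illustration.

```python
import json


def split_at_cursor(source: str, cursor: int) -> tuple[str, str]:
    """Split a buffer into the text before and after the cursor offset."""
    if not 0 <= cursor <= len(source):
        raise ValueError("cursor is outside the buffer")
    return source[:cursor], source[cursor:]


def build_fim_payload(source: str, cursor: int, max_tokens: int = 64) -> dict:
    """Build a FIM request body (field names are assumptions, not verified)."""
    prefix, suffix = split_at_cursor(source, cursor)
    return {
        "model": "codestral-latest",  # assumed model alias
        "prompt": prefix,             # code before the cursor
        "suffix": suffix,             # code after the cursor
        "max_tokens": max_tokens,
        "temperature": 0,
    }


# A function with an empty body; the cursor sits on the indented blank line.
buffer = "def add(a, b):\n    \n\nprint(add(1, 2))\n"
cursor = buffer.index("    \n") + 4
payload = build_fim_payload(buffer, cursor)
print(json.dumps(payload, indent=2))
```

The model's job is then to produce the missing body (for example a `return` statement) so that prefix, completion, and suffix together form valid code.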

Many developers have used the previous model, Codestral, for code-related tasks. Codestral 25.01 is an upgraded version, with an improved tokenizer and a more efficient architecture that allows it to generate code twice as fast.

Mistral has not released the model weights or provided any details about its architecture or exact size. However, we do know that it has fewer than 100 billion parameters and supports a 256K context length.

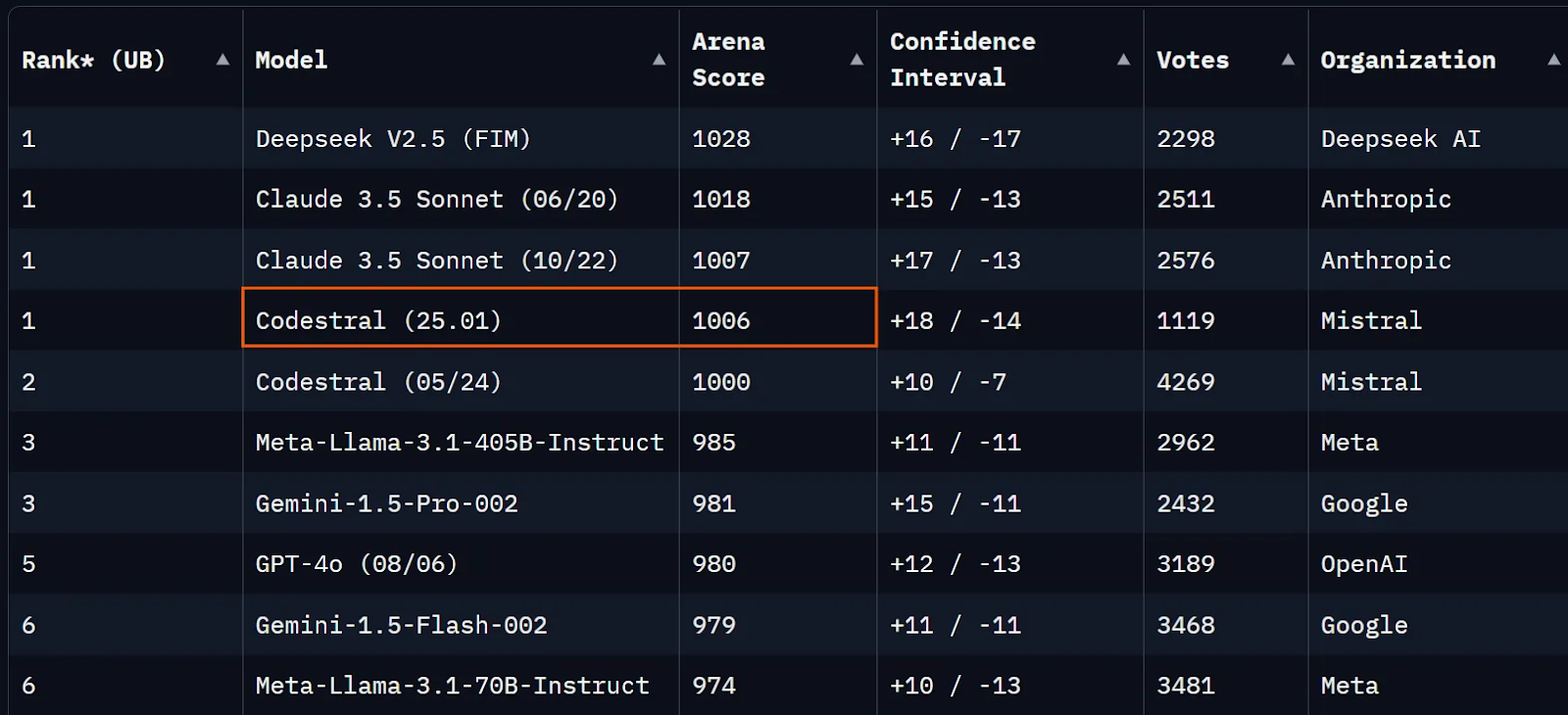

At the time of writing this article, Codestral 25.01 is only a few points below the Claude 3.5 Sonnet series in the Chatbot Arena (formerly LMSYS) and only slightly above Codestral V1.

Source: Chatbot Arena

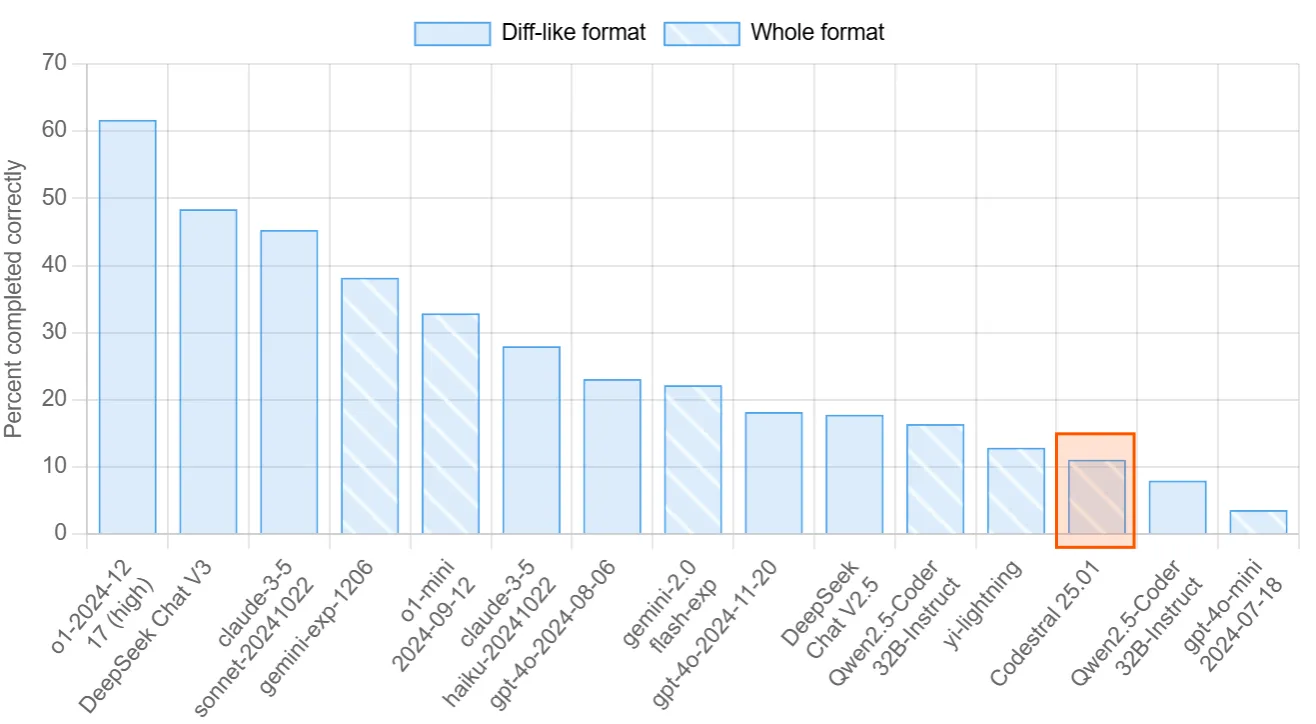

Another leaderboard focused on evaluating the coding abilities of LLMs is Aider’s Polyglot. In this benchmark, models are prompted to complete 225 coding exercises in popular programming languages. Codestral 25.01 scored 11%, while Qwen2.5-Coder-32B-Instruct achieved 16%.

Source: Aider leaderboard
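As a rough sanity check on what those Polyglot percentages mean in absolute terms, the snippet below converts them into approximate exercise counts. These are estimates only, because the percentages quoted above are rounded.

```python
TOTAL_EXERCISES = 225  # size of the Polyglot benchmark, as stated above

# Pass rates quoted in the text, as fractions.
scores = {
    "Codestral 25.01": 0.11,
    "Qwen2.5-Coder-32B-Instruct": 0.16,
}

# Rounded pass rates only allow an approximate count of solved exercises.
approx_solved = {name: round(rate * TOTAL_EXERCISES) for name, rate in scores.items()}

for name, solved in approx_solved.items():
    print(f"{name}: roughly {solved} of {TOTAL_EXERCISES} exercises")
```

In other words, the five-point gap corresponds to roughly a dozen exercises.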

Finally, let’s look at the official benchmarks published by Mistral. The released benchmark scores demonstrate a clear step up compared to Codestral V1, Codellama 70B, or DeepSeek Coder 33B. It can be more informative to compare Codestral 25.01’s scores with the more recent Qwen2.5-Coder-32B-Instruct (you can read Qwen’s scores here).

Overview for Python:

| Model | Context length | HumanEval | MBPP | CruxEval | LiveCodeBench | RepoBench |
| --- | --- | --- | --- | --- | --- | --- |
| Codestral-2501 | 256k | 86.6% | 80.2% | 55.5% | 37.9% | 38.0% |
| Codestral-2405 22B | 32k | 81.1% | 78.2% | 51.3% | 31.5% | 34.0% |
| Codellama 70B instruct | 4k | 67.1% | 70.8% | 47.3% | 20.0% | 11.4% |
| DeepSeek Coder 33B instruct | 16k | 77.4% | 80.2% | 49.5% | 27.0% | 28.4% |
| DeepSeek Coder V2 lite | 128k | 83.5% | 83.2% | 49.7% | 28.1% | 20.0% |
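To see where the upgrade helps most on Python, the table can be reduced to point differences between the two Codestral versions. The numbers below are copied from the table above; the dictionary layout and variable names are my own.

```python
# Scores (in %) copied from the Python benchmark table above.
codestral_2501 = {"HumanEval": 86.6, "MBPP": 80.2, "CruxEval": 55.5,
                  "LiveCodeBench": 37.9, "RepoBench": 38.0}
codestral_2405 = {"HumanEval": 81.1, "MBPP": 78.2, "CruxEval": 51.3,
                  "LiveCodeBench": 31.5, "RepoBench": 34.0}

# Percentage-point gain of the new model on each benchmark.
gains = {bench: round(codestral_2501[bench] - codestral_2405[bench], 1)
         for bench in codestral_2501}

# Print benchmarks from largest to smallest gain.
for bench, gain in sorted(gains.items(), key=lambda item: item[1], reverse=True):
    print(f"{bench:<14} +{gain:.1f} points")
```

The largest gain is on LiveCodeBench (6.4 points) and the smallest on MBPP (2.0 points).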

Benchmarks for multiple languages:

| Model | HumanEval Python | HumanEval C++ | HumanEval Java | HumanEval JavaScript | HumanEval Bash | HumanEval TypeScript | HumanEval C# | HumanEval (average) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Codestral-2501 | 86.6% | 78.9% | 72.8% | 82.6% | 43.0% | 82.4% | 53.2% | 71.4% |
| Codestral-2405 22B | 81.1% | 68.9% | 78.5% | 71.4% | 40.5% | 74.8% | 43.7% | 65.6% |
| Codellama 70B instruct | 67.1% | 56.5% | 60.8% | 62.7% | 32.3% | 61.0% | 46.8% | 55.3% |
| DeepSeek Coder 33B instruct | 77.4% | 65.8% | 73.4% | 73.3% | 39.2% | 77.4% | 49.4% | 65.1% |
| DeepSeek Coder V2 lite | 83.5% | 68.3% | 65.2% | 80.8% | 34.2% | 82.4% | 46.8% | 65.9% |
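The last column appears to be the unweighted mean of the seven per-language scores. That is my assumption rather than something stated in the source, but it is easy to check against the numbers in the table:

```python
from statistics import mean

# Per-language HumanEval scores (in %), in table order:
# Python, C++, Java, JavaScript, Bash, TypeScript, C#
per_language = {
    "Codestral-2501": [86.6, 78.9, 72.8, 82.6, 43.0, 82.4, 53.2],
    "Codestral-2405 22B": [81.1, 68.9, 78.5, 71.4, 40.5, 74.8, 43.7],
    "Codellama 70B instruct": [67.1, 56.5, 60.8, 62.7, 32.3, 61.0, 46.8],
    "DeepSeek Coder 33B instruct": [77.4, 65.8, 73.4, 73.3, 39.2, 77.4, 49.4],
    "DeepSeek Coder V2 lite": [83.5, 68.3, 65.2, 80.8, 34.2, 82.4, 46.8],
}

# "HumanEval (average)" column as reported in the table.
reported_average = {
    "Codestral-2501": 71.4,
    "Codestral-2405 22B": 65.6,
    "Codellama 70B instruct": 55.3,
    "DeepSeek Coder 33B instruct": 65.1,
    "DeepSeek Coder V2 lite": 65.9,
}

# Recompute each average and print it next to the reported value.
computed_average = {model: round(mean(scores), 1) for model, scores in per_language.items()}

for model, computed in computed_average.items():
    print(f"{model:<28} computed {computed:.1f}  reported {reported_average[model]:.1f}")
```

The recomputed means match the reported column for all five models. The per-language rows also show that the improvement is not uniform: on Java, Codestral-2501 (72.8%) scores below Codestral-2405 (78.5%).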

How to Set Up Codestral 25.01 on VS Code

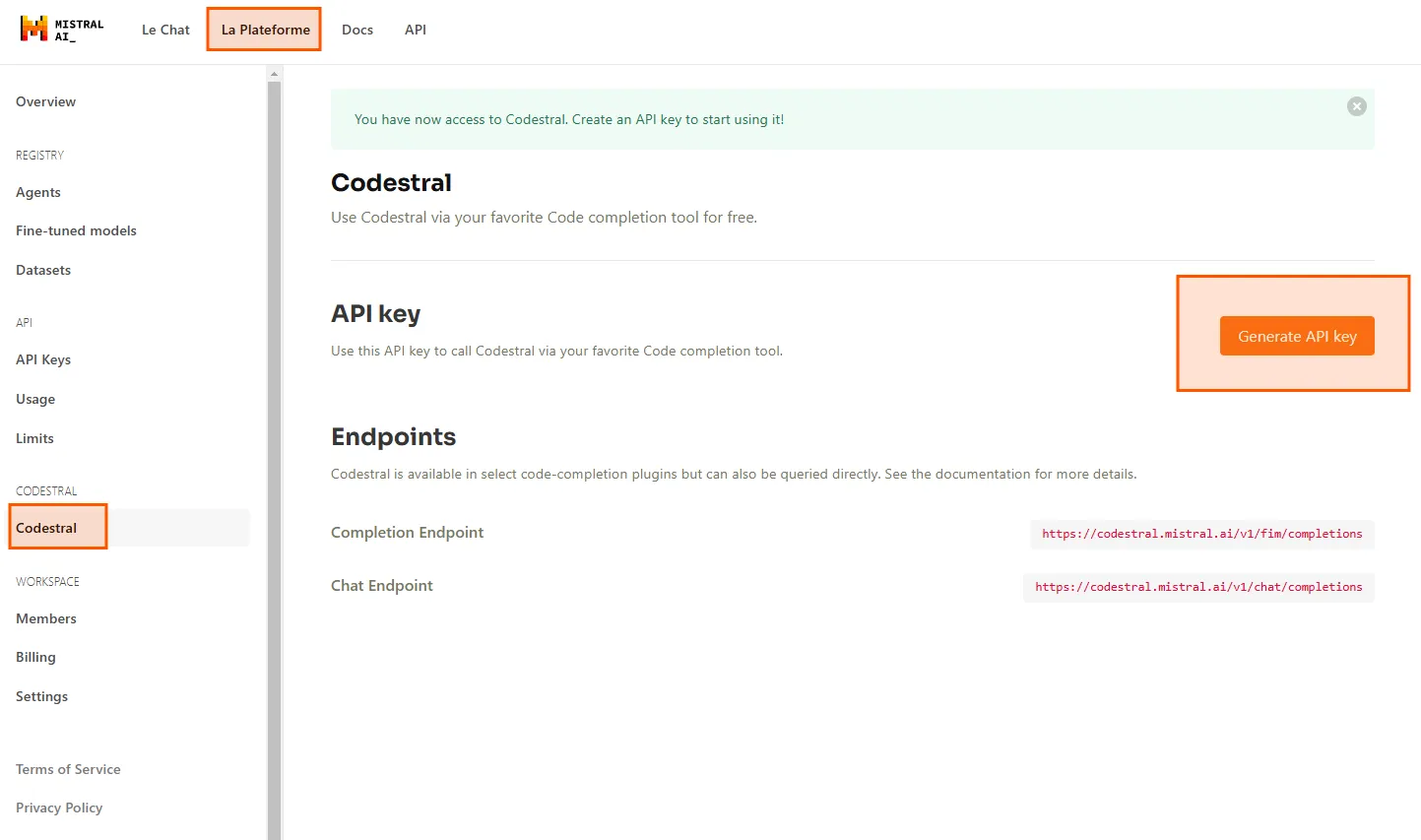

Unfortunately, the model isn’t open-weight, and you cannot run it locally. The good news is that you can access the model via the API or use it for free as a code assistant in VS Code and PyCharm.

First, we need an API key. Head over to La Plateforme, and navigate to Codestral. Here, you can generate your API key to use Codestral 25.01 in your IDE.

I will be using Codestral in VS Code through the Continue extension. You can search for it in the extension marketplace and install it for free.

After you install the extension, click the Continue button at the bottom right of VS Code, select Configure autocomplete options, and paste your API key under Codestral. Now, you can use Codestral as your autocomplete model.

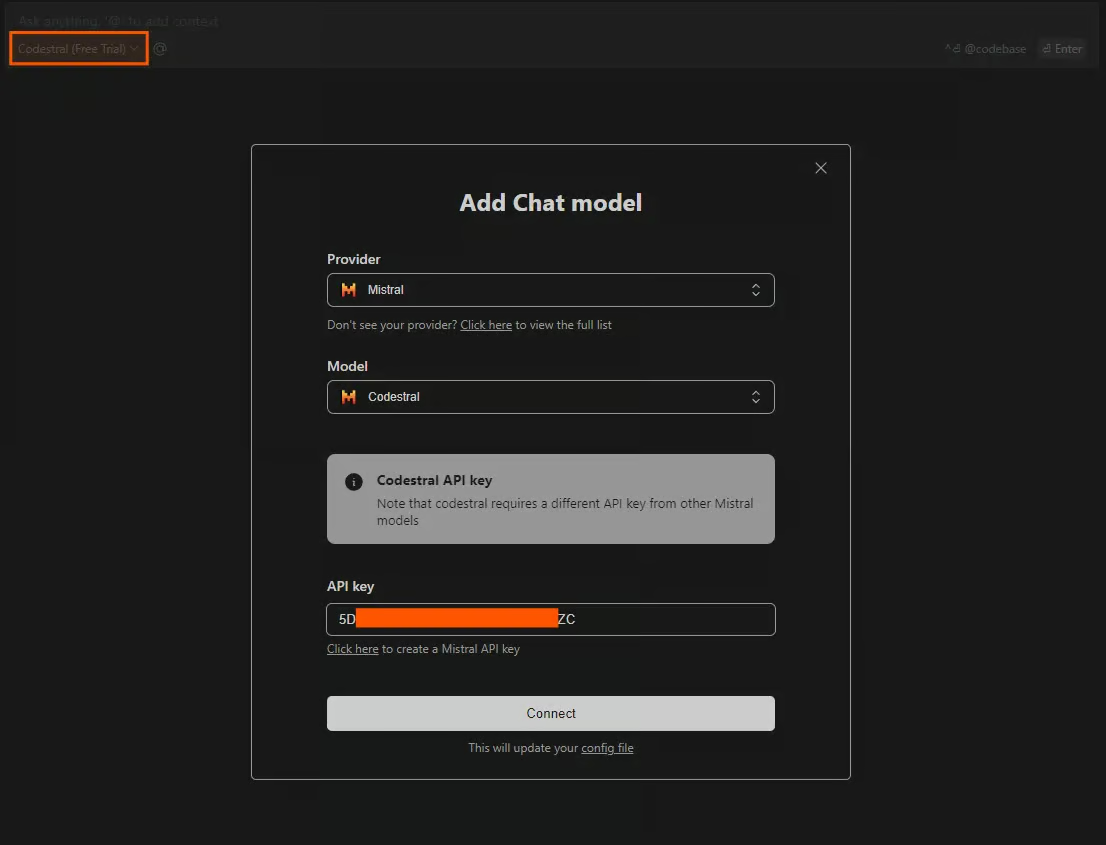

Another way to use Codestral in your IDE is to chat with the model. To access the chat window, press CTRL+L. You can select a variety of models to use as chat models, but they are limited unless you provide an API key. To have free access to Codestral, click on the model in the chat section and select Add Chat model. Select Mistral as the provider, Codestral as the model, and paste your API key.
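For readers who prefer editing a configuration file over clicking through the UI, the same autocomplete and chat setup can be expressed as config entries. The sketch below generates them in Python. The key names (`models`, `tabAutocompleteModel`, `provider`, `apiKey`) reflect my understanding of Continue's JSON config format and may differ in your version of the extension; the `codestral-latest` alias and the `CODESTRAL_API_KEY` environment variable are likewise assumptions made for illustration.

```python
import json
import os

# Hypothetical environment variable; avoid hard-coding a real key.
api_key = os.environ.get("CODESTRAL_API_KEY", "<YOUR_API_KEY>")

# One model entry reused for both chat and autocomplete.
codestral = {
    "title": "Codestral",
    "provider": "mistral",
    "model": "codestral-latest",  # assumed model alias
    "apiKey": api_key,
}

continue_config = {
    "models": [codestral],              # chat model (CTRL+L window)
    "tabAutocompleteModel": codestral,  # inline autocomplete model
}

# Print the JSON you would merge into the extension's config file.
print(json.dumps(continue_config, indent=2))
```

Reusing one entry for both roles keeps the key in a single place; you could just as well point the chat role at a different model.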

Testing Codestral 25.01

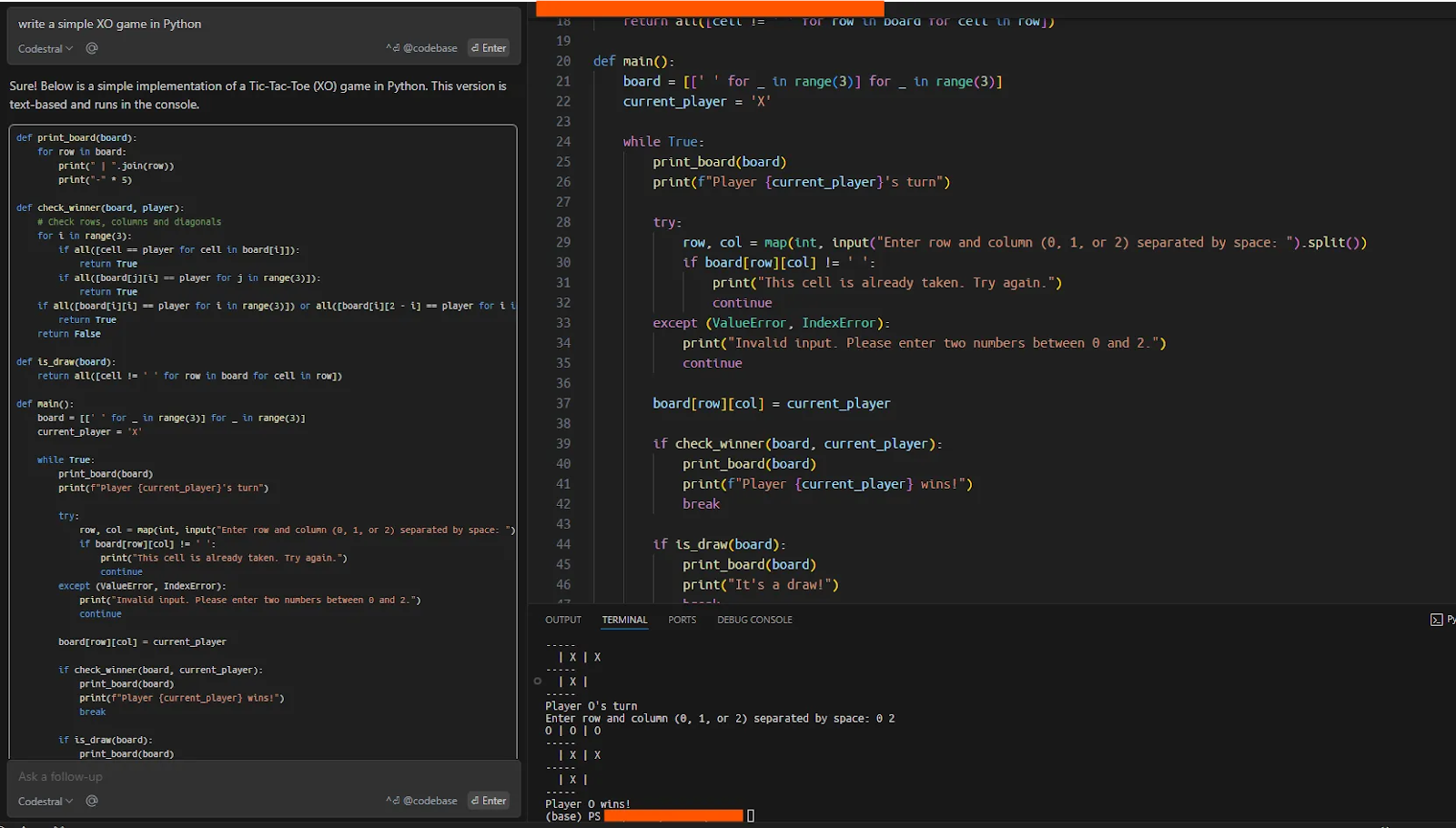

Now, to test that everything is running smoothly, I asked Codestral to write a simple XO (tic-tac-toe) game in Python, which the model got right in one shot.

Using Codestral in the chat window

One of the most common and useful applications of a code LLM is working on existing codebases. Such codebases are often large and complex, which makes them a realistic test for these models. Here, I will test Codestral’s ability to work with smolagents, Hugging Face’s framework for building agents (learn more in this tutorial on Hugging Face’s Smolagents).

I will clone the repository, give the folder as context to the model, and ask Codestral to help me with writing a simple demo with smolagents.

After cloning the repository, I gave the complete codebase to Codestral. You can choose which data, code, or documents to give to the model as context, using “@” in the chat. To give the entire codebase as the context, the prompt would be: “@Codebase what is this codebase about?”.

Initially, when I prompted Codestral to write a simple demo of smolagents (which is clearly explained in the repository's README with four lines of code), the model was unable to give a coherent response.

I then asked the model to explain the repository and what it is about, and it delivered a good summary.

My next prompt was: “how can you run a simple agent with smolagents?” The model gave me a good answer based on the README file.

In the third step, I asked, “build me an agent,” and the model responded by building a simple agent just as instructed by the documentation. It was surprising that the model was unable to build a demo when I first asked it, but it seems that asking the model about the repository first gives it more context to work with.
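For reference, the README-style demo mentioned above looks roughly like the following. This is a sketch based on my recollection of the smolagents README around the time of writing, not Codestral's actual output. The class names (`CodeAgent`, `DuckDuckGoSearchTool`, `HfApiModel`, and the newer `InferenceClientModel`) are assumptions about the library and may differ in your installed version. Running the agent requires `pip install smolagents` and access to the Hugging Face Inference API.

```python
def build_demo_agent():
    """Create a minimal search-enabled agent (class names are assumptions)."""
    # Imported lazily so this file loads even without smolagents installed.
    from smolagents import CodeAgent, DuckDuckGoSearchTool

    try:
        # Name I believe newer releases use for the hosted-inference model.
        from smolagents import InferenceClientModel as Model
    except ImportError:
        # Name I recall from the README in early 2025.
        from smolagents import HfApiModel as Model

    return CodeAgent(tools=[DuckDuckGoSearchTool()], model=Model())


# Usage (needs network access and the smolagents package):
#   agent = build_demo_agent()
#   agent.run("How many seconds would it take for a leopard at full speed "
#             "to run through Pont des Arts?")
```

The point is the size of the task: one agent, one tool, one model, and a single `run` call.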

Working on an existing codebase with Codestral

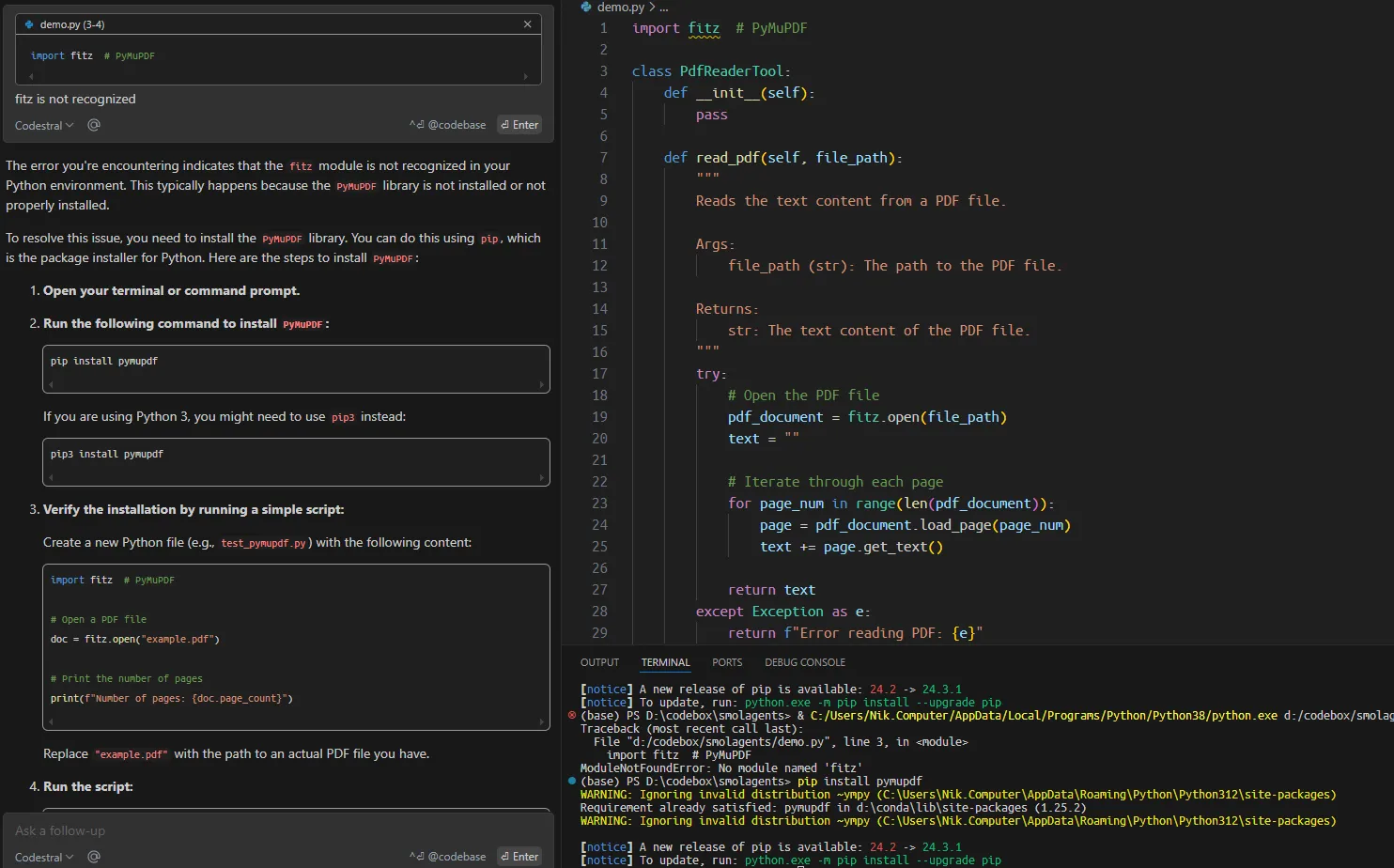

I then prompted the model to build a custom agentic tool for reading PDF files based on the repository’s documentation. Surprisingly, the suggested code contained multiple mistakes that could have been easily avoided.

While the response speed is noticeable and the model offers productive autocompletions, for more serious work, such as building an application or working on an existing codebase, you must break the task down into much smaller pieces and provide specific context for it to deliver.

Conclusion

We started this article by introducing Codestral 25.01 and reviewing its performance in benchmarks, which demonstrate that it is a strong code LLM. We then showed how to set up Codestral 25.01 in VS Code and how to use it in action.

While the new version of Codestral might not be the best coding LLM, it’s very convenient that we can use it for free as a code assistant in IDEs. The overall experience resembles that of Cursor AI, but for those reluctant to install Cursor or buy API credits for other models, the free experience of Codestral can be a good alternative.
