
First, there were data warehouses. They stored data in rows and columns because the computers and networks of the time could handle little more than simple, structured text. Much later came data lakes, which could store nearly any type of data you could collect. They were great for the social media and YouTube age.

But they both had disadvantages — data warehouses were expensive and unsuitable for modern data science, while data lakes were messy and often turned into data swamps. So, companies started having two separate tech stacks — warehouses for BI and analytics and lakes for machine learning.

However, managing two different data architectures was such a pain that companies often had poor results. This issue gave rise to the lakehouse architecture, which is precisely what Databricks is famous for.

Databricks is a cloud-based platform that allows users to derive value from both warehouses and lakes in a unified environment. This article will give an overview of the platform, showing its most important features and how to use them.

What We’ll Cover in this Databricks Tutorial

Databricks is such a massive platform that its documentation itself could be turned into a book. So, the article’s goal is to provide you with a concepts hierarchy — linearly ordered explanations of Databricks features that will take you from a beginner to a decent Databricks practitioner. If you’re a total newcomer, you may also want to check out our Introduction to Databricks course.

Let’s get started!

1. What is Databricks?

When you read the word Databricks, you should immediately think of it as a platform, not as some framework or Python library. Typically, platforms offer a wide range of features, and Databricks is no exception. It is one of the very few platforms that can be used by any data professional, from data engineers to the modern machine learning engineers (or what the press calls AI programmers today).

Databricks has the following core components:

- Workspace: Databricks provides a centralized environment where teams can collaborate without any hassles. The environment is accessible through a user-friendly web interface.

- Notebooks: Databricks has its own Jupyter-style notebooks, specifically designed for collaboration and flexibility.

- Apache Spark: Databricks loves Apache Spark. It is the engine that powers all parallel processing of humongous datasets, making it suitable for big data analytics.

- Delta Lake: An enhancement to data lakes that adds ACID transactions. Delta Lake ensures data reliability and consistency, addressing traditional challenges associated with data lakes.

- Scalability: The platform scales horizontally rather than vertically, which is ideally suited for organizations dealing with ever-increasing data demands.

Databricks benefits

These components, in combination, unlock a wide range of benefits:

- Cross-team collaboration: engineers, analysts, scientists and ML engineers can work seamlessly in the same platform.

- Consistency: with notebooks, users can transition between tasks and programming languages without the need for context-switching.

- Efficient workflows: users can perform tasks such as data cleaning, transformation, and machine learning in a cohesive manner.

- Integrated data management: users can ingest data into the platform from multiple sources, create tables, and run SQL.

- Real-time collaboration: shared notebooks and collaborative editing features enable real-time collaboration. Multiple team members can work on the same notebook simultaneously.

If I’ve got you convinced of Databricks’ importance in the data world, let’s get you up and running with the platform.

2. Account Setup

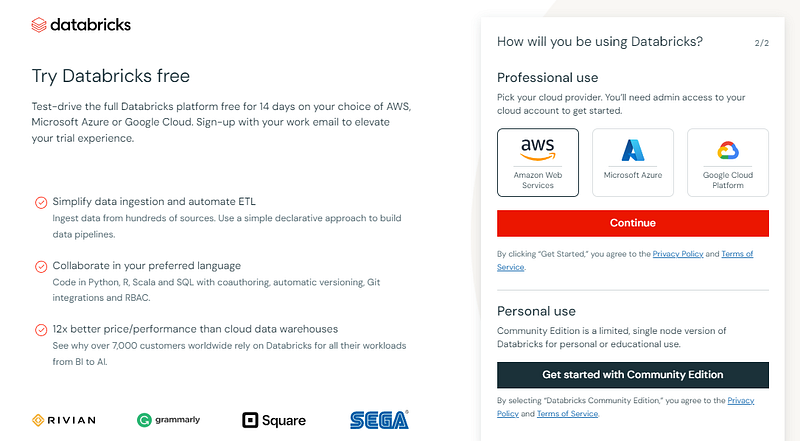

To set up your account, go to https://www.databricks.com/try-databricks and sign up for the Community Edition.

Community Edition has fewer features than the Enterprise version, but it doesn’t require setting up a cloud provider, which is great for small use cases like tutorials.

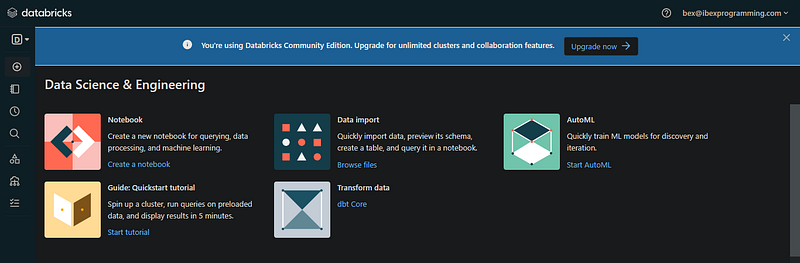

If you see this page after email verification, you are good to go:

3. Databricks Workspace

The interface you landed on is the workspace tied to your email address. In practice, an account admin at your company usually creates a single Databricks account and manages access to the workspace.

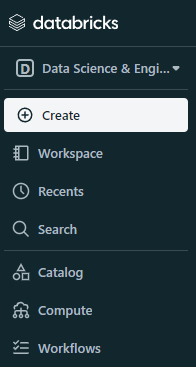

Now, let’s understand the UI of the platform. On the left panel, we have the menu for the different components Databricks offers. The enterprise version will have even more buttons:

The first option in the menu is the type of workspace, which is set to data science and engineering by default. If you change it to machine learning, a new Experiments option pops up:

On the surface, it may look like it doesn’t do much, but once you upgrade your account and start tinkering, you will notice some great features of the platform:

- Central hub for resources: notebooks, clusters, tables, libraries and dashboards

- Notebooks in multiple languages

- Cluster management: managing computational resources for the workspace to execute code

- Table management

- Dashboard creation: Databricks users can collect visuals into dashboards right in the workspace

- Collaborative real-time editing of notebooks

- Version control for notebooks

- Job scheduling (a powerful feature): users can execute notebooks and scripts at specified intervals

and so on.
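To make the job scheduling idea more concrete, here is a minimal sketch of how a scheduled notebook job could be described as a payload for the Databricks Jobs REST API. The job name, notebook path, and cluster ID are hypothetical placeholders, and Jobs are generally not part of Community Edition, so treat this as an illustration rather than something to run as-is. Schedules use Quartz cron syntax, which starts with a seconds field.

```python
import json

# Hypothetical job definition: the name, notebook path, and cluster ID are placeholders.
job_spec = {
    "name": "nightly-diamonds-report",
    "schedule": {
        # Quartz cron fields: second minute hour day-of-month month day-of-week
        "quartz_cron_expression": "0 0 2 * * ?",  # every day at 02:00
        "timezone_id": "UTC",
    },
    "tasks": [
        {
            "task_key": "run_report_notebook",
            "notebook_task": {"notebook_path": "/Users/you@example.com/diamonds-report"},
            "existing_cluster_id": "<your-cluster-id>",
        }
    ],
}

# Serialize to the JSON body you would send when creating the job.
job_payload = json.dumps(job_spec, indent=2)
print(job_payload)
```

In the UI, the same schedule is set from the job's scheduling options, so you rarely need to write the cron expression by hand.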

Now, let’s look at some of these components more closely.

4. Databricks Clusters

Clusters in Databricks refer to the computational resources used to execute data processing tasks. Usually, clusters run on the cloud provider you chose during account setup.

The community edition clusters are limited in RAM and CPU power, and GPUs aren’t included. However, premium users can often do the following tasks with clusters in a straightforward way:

- Data processing: Clusters process and transform large volumes of data using Spark’s parallel processing.

- Machine learning: You can use Python (or another supported language, such as R or Scala) and its libraries for model training and inference.

- ETL workflows: Clusters also support Extract, Transform, Load workflows by efficiently processing and transforming data from source to destination.

To create a cluster, you can use the “Create” button or the “Compute” options from the menu:

When creating the cluster, choose an appropriate Databricks Runtime version (which determines the Spark version) for your environment and wait a few minutes for it to become operational.
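On paid workspaces, the same choices you make in the cluster creation form can also be written down as a cluster specification for the Clusters REST API. The sketch below only builds and prints such a specification; the cluster name, runtime key, and node type are example values (assumptions), and the valid options depend on your cloud provider and workspace.

```python
import json

# Example cluster specification; all values are illustrative assumptions.
cluster_spec = {
    "cluster_name": "tutorial-cluster",
    "spark_version": "13.3.x-scala2.12",  # a Databricks Runtime key, which pins the Spark version
    "node_type_id": "i3.xlarge",          # an AWS instance type; other clouds use different names
    "num_workers": 2,                     # worker nodes in addition to the driver
    "autotermination_minutes": 30,        # shut down after 30 idle minutes to save cost
}

# Serialize to the JSON body a cluster-creation request would carry.
cluster_payload = json.dumps(cluster_spec, indent=2)
print(cluster_payload)
```

Community Edition does not expose this API, so there the “Compute” page remains the way to create a cluster.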

5. Databricks Notebooks

Once you have a running cluster, you are ready to create notebooks. If you’ve worked with Jupyter, Colab, or DataCamp Workspaces, this will be familiar:

But in a world where real Jupyter exists, why would you go for something “similar to Jupyter”? Well, Databricks notebooks have the following advantages over Jupyter notebooks:

- Collaboration: Built-in collaborative features allow multiple users to work on the same notebook at the same time. Changes are tracked in real-time.

- Execution environment: Most Jupyter environment providers or local instances rely on single machines with predefined hardware. Users must install external libraries and dependencies on their own. In contrast, Databricks notebooks are powered by clusters, which automatically handle resources and scale with the workload. They also come with pre-populated environments.

- Integration with Big Data tech: Jupyter can work with Apache Spark, but users need to manage Spark sessions and dependencies manually. Since Databricks was founded by Spark creators, it supports the framework natively. Spark sessions and clusters are automatically managed by the Databricks platform.

There are many other advantages of Databricks notebooks over Jupyter, so here is a table summarizing the differences:

| Feature | Jupyter Notebooks | Databricks Notebooks |
| --- | --- | --- |
| Platform | Open-source, runs locally or on cloud platforms | Exclusive to the Databricks platform |
| Collaboration and Sharing | Limited collaboration features, manual sharing | Built-in collaboration, real-time concurrent editing |
| Execution | Relies on local or external servers | Execution on Databricks clusters |
| Integration with Big Data | Can be integrated with Spark, requires additional configurations | Native integration with Apache Spark, optimized for big data |
| Built-in Features | External tools/extensions for version control, collaboration, and visualization | Integrated with Databricks-specific features like Delta Lake, built-in support for collaboration and analytics tools |
| Cost and Scaling | Local installations are often free, cloud-based solutions may have costs | Paid service, costs depend on usage, scales seamlessly with Databricks clusters |
| Ease of Use | Familiar and widely used in the data science community | Tailored for big data analytics, may have a steeper learning curve for Databricks-specific features |
| Data Visualization | Limited built-in support for data visualization | Built-in support for data visualization within the notebook environment |
| Cluster Management | Users need to manage Spark sessions and dependencies manually | Databricks platform handles cluster management and scaling automatically |
| Use Cases | Versatile for various data science tasks | Specialized for collaborative big data analytics within the Databricks platform |

Ultimately, the above advantages of Databricks notebooks come into effect in specific use cases. If you want to play around with a CSV dataset with Pandas on your laptop, Jupyter is much better.

But, for enterprise-level applications, Databricks as a platform may be a better option.

6. Data Ingestion into Databricks

Data ingestion refers to the process of importing data from various sources. Databricks supports ingestion from a variety of sources including:

- AWS S3

- Azure Blob Storage

- Google Cloud Storage

- Relational databases (MySQL, PostgreSQL, etc.)

- Data lake table and file formats (Delta Lake, Parquet, Avro, etc.)

- Streaming platforms (Apache Kafka)

- Google BigQuery

- That local CSV file you have

and so on.

Now, let’s actually see how you can load certain types of data into Databricks. We will start with local files:

Once you follow the steps in the GIF, you will have a file stored in the workspace. Here is how you can load it with Spark:

# Importing necessary libraries

from pyspark.sql import SparkSession

# Creating a Spark session (in a Databricks notebook, this returns the existing session)

spark = SparkSession.builder.appName("DBFSImportExample").getOrCreate()

# Defining the DBFS path to the uploaded CSV file

path = "dbfs:/FileStore/tables/diamonds.csv"

# Reading the CSV file from DBFS into a DataFrame

diamonds_df = spark.read.csv(path, header=True, inferSchema=True)

# Displaying the imported data

diamonds_df.show()

Pay attention to the dbfs: prefix. Paths to files uploaded this way should include it so that Spark can locate and load them correctly. DBFS stands for Databricks File System.
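One detail that often trips people up: Spark APIs expect the `dbfs:/...` form, while local file APIs (such as Python's `open` or Pandas readers) typically see the same files under a `/dbfs/...` mount. The two helpers below are hypothetical conveniences, not part of any Databricks library, that convert between the two forms. The `/dbfs` mount may not be available on every cluster type, so check your environment before relying on it.

```python
def to_spark_path(path: str) -> str:
    """Return the dbfs:/ form of a DBFS path, as used by Spark APIs."""
    if path.startswith("dbfs:/"):
        return path
    if path.startswith("/dbfs/"):
        return "dbfs:/" + path[len("/dbfs/"):]
    raise ValueError(f"Not a DBFS path: {path!r}")


def to_local_path(path: str) -> str:
    """Return the /dbfs/ form of a DBFS path, as used by local file APIs."""
    if path.startswith("/dbfs/"):
        return path
    if path.startswith("dbfs:/"):
        return "/dbfs/" + path[len("dbfs:/"):].lstrip("/")
    raise ValueError(f"Not a DBFS path: {path!r}")


print(to_local_path("dbfs:/FileStore/tables/diamonds.csv"))  # /dbfs/FileStore/tables/diamonds.csv
print(to_spark_path("/dbfs/FileStore/tables/diamonds.csv"))  # dbfs:/FileStore/tables/diamonds.csv
```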

Importing data from an S3 bucket is similar (for enterprise accounts):

# Importing necessary libraries

from pyspark.sql import SparkSession

# Creating a Spark session

spark = SparkSession.builder.appName("S3ImportExample").getOrCreate()

# Defining the S3 path to the data

s3_path = "s3://your-bucket/your-data.csv"

# Reading data from S3 into a DataFrame

data_from_s3 = spark.read.csv(s3_path, header=True, inferSchema=True)

# Displaying the imported data

data_from_s3.show()
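Both snippets above use `inferSchema=True`, which is convenient but makes Spark take an extra pass over the file to guess column types. For larger files, a common alternative is to pass the schema yourself. The sketch below assumes a hypothetical set of columns for `your-data.csv` and takes the `spark` session as an argument, so the function itself can be defined anywhere. `read_csv_with_schema` and `EXAMPLE_SCHEMA` are illustrative names, not part of the original tutorial.

```python
# Hypothetical column layout for your-data.csv, written as a Spark DDL string.
EXAMPLE_SCHEMA = "order_id INT, customer STRING, amount DOUBLE"


def read_csv_with_schema(spark, path, ddl_schema):
    """Read a CSV file with a fixed schema instead of inferring column types."""
    return spark.read.csv(path, header=True, schema=ddl_schema)


# Usage inside a Databricks notebook, where `spark` is already defined:
# orders_df = read_csv_with_schema(spark, "s3://your-bucket/your-data.csv", EXAMPLE_SCHEMA)
# orders_df.printSchema()
```

A fixed schema also keeps column types from silently changing when the data does, which matters once the read is part of a scheduled pipeline.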

For other types of data, you can check the Data engineering and Connect to data sources sections of the Databricks documentation.

7. Running SQL in Databricks

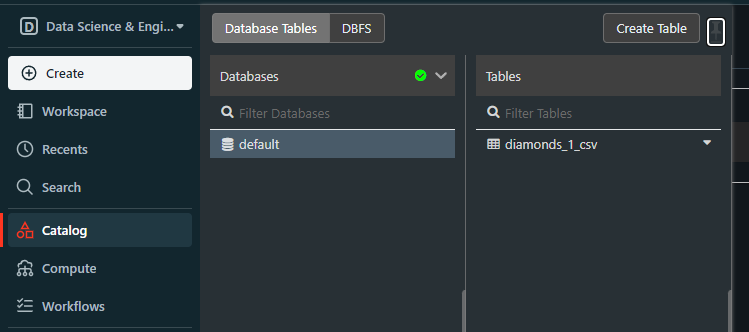

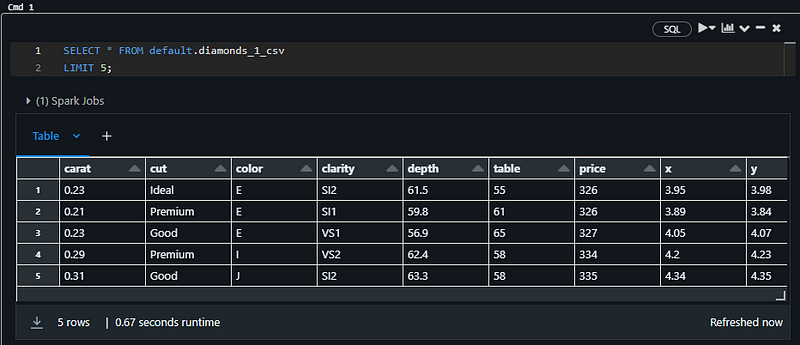

When we uploaded the diamonds.csv file, it became a Databricks table in a database called default:

This default database is created whenever we try to load structured files without creating the database first.

If we’ve got a database, that means we can query it with SQL, not just with Spark. To do so, create a new notebook or change the language of the current notebook to SQL. Then, try the following code snippet:

SELECT * FROM default.diamonds_1_csv

LIMIT 5;

It should return the top five rows of the diamonds table:

Note: I am using a SQL notebook for the above snippet.

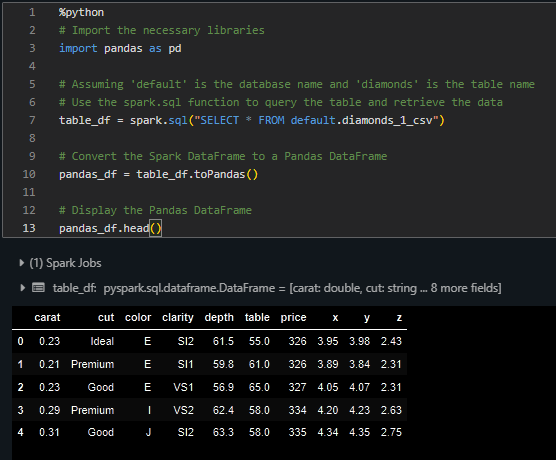

You can load this table in Pandas as well. Within the same notebook, paste this snippet:

%python

# Import the necessary libraries

import pandas as pd

# 'default' is the database name and 'diamonds_1_csv' is the table name

# Use the spark.sql function to query the table and retrieve the data

table_df = spark.sql("SELECT * FROM default.diamonds_1_csv")

# Convert the Spark DataFrame to a Pandas DataFrame

pandas_df = table_df.toPandas()

# Display the Pandas DataFrame

pandas_df.head()

It should print the head of the table:

Now, you can do any typical data analysis task on the table with both SQL and Pandas.
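As a small illustration, here is the same question ("what is the average price per cut?") answered in Pandas, with the equivalent SQL next to it. To keep the sketch runnable outside Databricks, it uses a tiny made-up stand-in for `pandas_df`. The `cut` and `price` column names are assumed to match your uploaded diamonds file.

```python
import pandas as pd

# Tiny stand-in for `pandas_df`; these rows are made up for illustration.
pandas_df = pd.DataFrame(
    {
        "cut": ["Ideal", "Premium", "Ideal", "Good"],
        "price": [300, 450, 500, 350],
    }
)

# Pandas: average price per cut, highest first
avg_price_by_cut = (
    pandas_df.groupby("cut")["price"].mean().sort_values(ascending=False)
)
print(avg_price_by_cut)

# The same question in SQL, for a SQL cell or spark.sql(avg_price_sql)
avg_price_sql = """
SELECT cut, AVG(price) AS avg_price
FROM default.diamonds_1_csv
GROUP BY cut
ORDER BY avg_price DESC
"""
```

On the full table, the SQL version runs on the cluster, while `toPandas()` pulls every row onto the driver, so the Pandas route is best kept for data that fits comfortably in memory.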

Conclusion and Further Steps

We’ve managed to learn and do a lot using our bare-bones Databricks community edition account. To continue learning about the platform, the first step is to use the two-week free trial Databricks offers for premium accounts.

Then, you can fully enjoy the lessons of the Introduction to Databricks course offered by DataCamp. Apart from account setup, you will learn and practice using the following core features of Databricks:

- Administering a Databricks workspace

- Reading and writing to external databases

- Data transformations

- Data orchestration, aka scheduling jobs

- Comprehensive overview of Databricks SQL

- Using Lakehouse AI for large-scale machine learning.

I am a data science content creator with over 2 years of experience and one of the largest followings on Medium. I like to write detailed articles on AI and ML with a bit of a sarcastic style because you've got to do something to make them a bit less dull. I have produced over 130 articles and a DataCamp course to boot, with another one in the making. My content has been seen by over 5 million pairs of eyes, 20k of whom became followers on both Medium and LinkedIn.