
Microsoft recently introduced Phi-4, the latest addition to the Phi family of small language models. Because it excels in mathematics, I decided to use Phi-4 to build a homework checker integrated with Gradio.

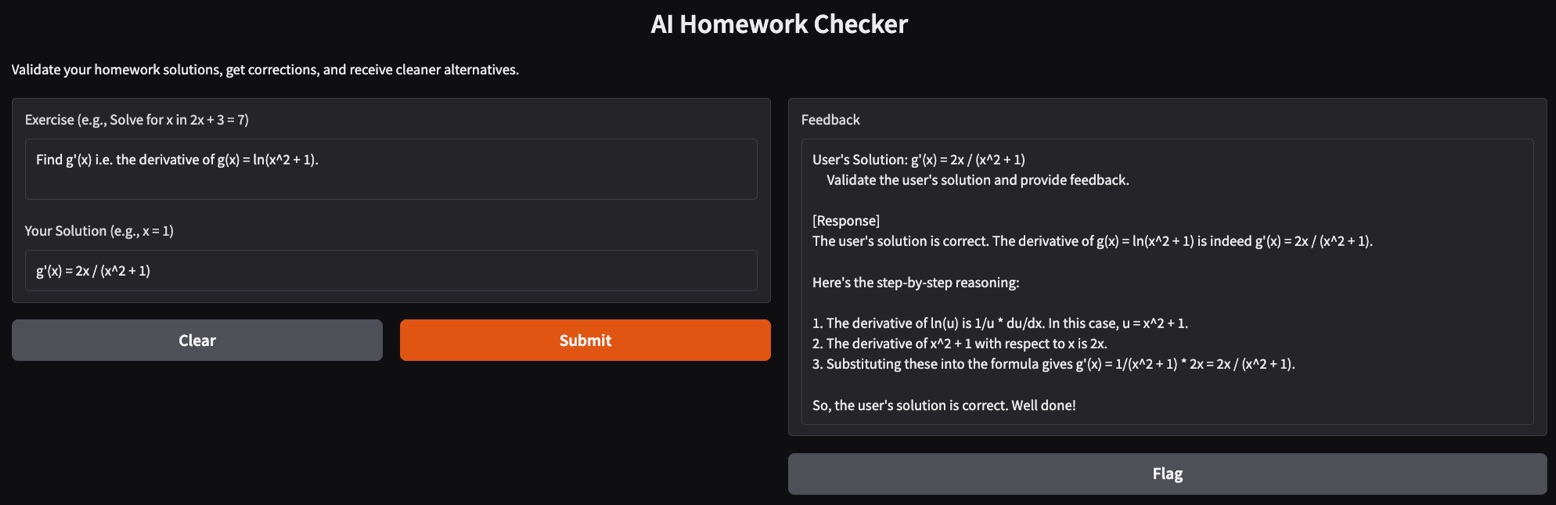

In this tutorial, I’ll guide you step by step through building a functional web app capable of validating solutions, correcting errors, and providing alternative approaches—just like a virtual teaching assistant!

What Is Microsoft’s Phi-4 Model?

Phi-4 excels in complex reasoning tasks, particularly in mathematics, while maintaining proficiency in conventional language processing. Its key features are:

- Advanced reasoning capabilities: Phi-4 is trained on high-quality synthetic datasets and uses innovative post-training techniques to outperform larger models in math-related reasoning tasks.

- Efficiency and accessibility: With 14 billion parameters, Phi-4 offers high-quality results without extensive computational resources, making it accessible for a wider range of applications.

- Availability: Phi-4 is currently accessible through Azure AI Foundry under a Microsoft Research License Agreement (MSRLA) and is also available on Hugging Face.

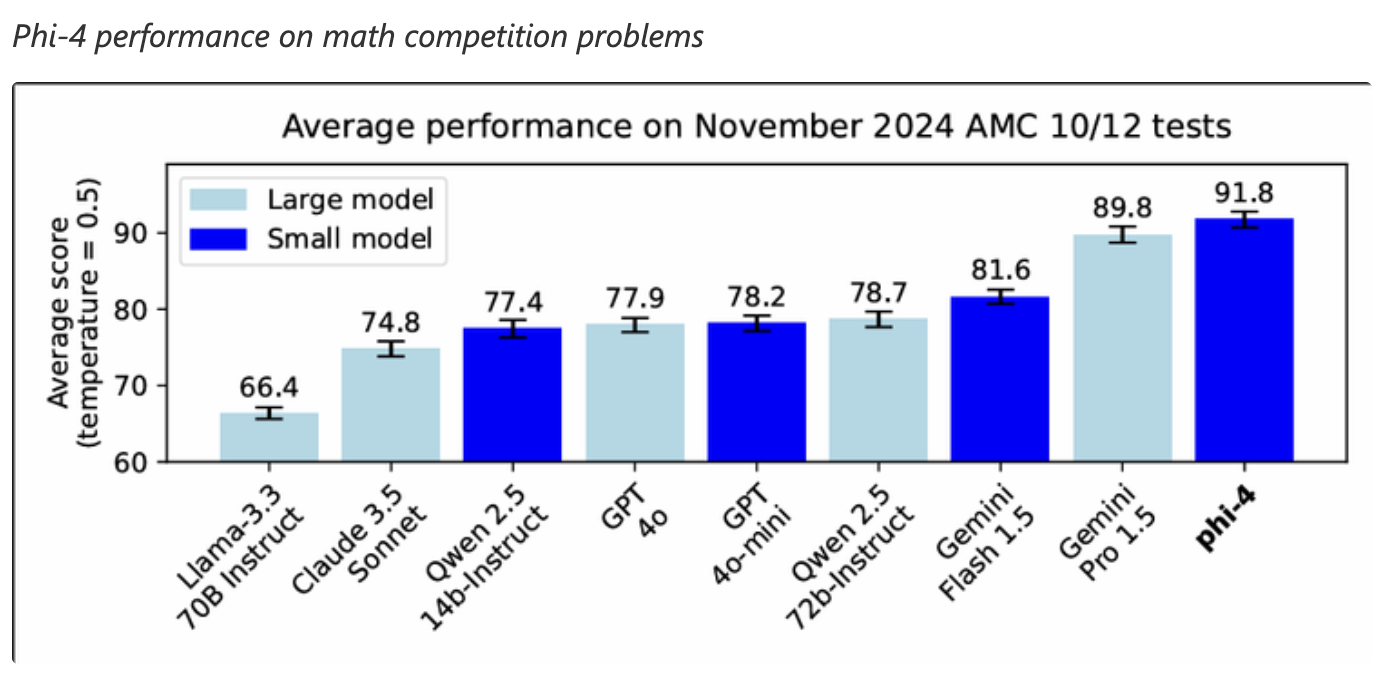

Phi-4 has demonstrated great performance on mathematical reasoning tasks, even surpassing larger models like Gemini Pro 1.5 in math competition problems. This makes it a good choice for applications that require advanced mathematical problem-solving capabilities.

Source: Microsoft

Phi-4 Homework Checker: Implementation Overview

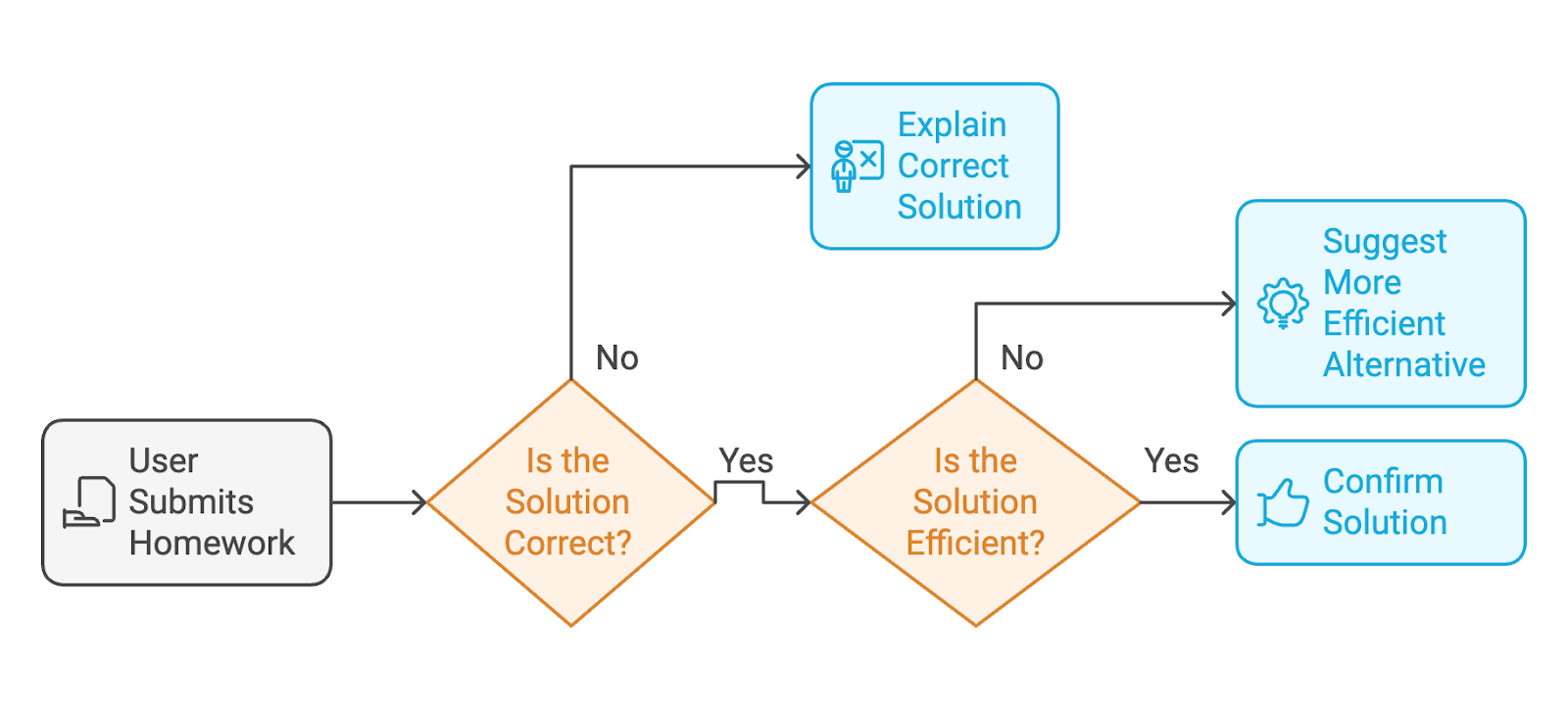

The app that we’re going to build with Phi-4 is an AI-powered homework checker. Here’s the workflow that the user will go through:

- The user submits their finished homework (both the exercise instructions and the user’s solution).

- If the solution is incorrect, the model will explain the correct solution with detailed steps, like a teacher.

- If the solution is correct, the model will confirm the solution or suggest a cleaner, more efficient alternative if the answer is messy.

To provide a web interface where users can interact with the homework checker, we’ll use Gradio.

Step 1: Prerequisites

Before we begin, ensure you have the following installed:

- Python 3.8+

- PyTorch: For running deep learning models.

- Hugging Face Transformers library: For loading the Phi-4 model from Hugging Face.

- Gradio: To create a user-friendly web interface.

Install these dependencies by running:

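!pip install torch transformers accelerate gradio -q

The leading ! is notebook syntax; drop it when running the command in a regular terminal. The accelerate package is included because the device_map="auto" option used in the next step depends on it.

Now we have all the dependencies installed. Next, we set up the Phi-4 model.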
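Before loading a model of this size, it can help to confirm that the packages are actually importable in the current environment. The snippet below is a minimal sketch of such a check; the helper name missing_packages is hypothetical, and accelerate is listed on the assumption that device_map="auto" is used later.

```python
import importlib.util

# Packages the tutorial relies on. "accelerate" is an assumption here:
# it is needed when loading a model with device_map="auto".
REQUIRED = ["torch", "transformers", "accelerate", "gradio"]


def missing_packages(names):
    """Return the names that cannot be found on the current import path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages(REQUIRED)
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All dependencies are importable.")
```

This only checks that each package can be located; it does not import them, so it runs quickly even when torch is installed.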

Step 2: Setting Up the Model

We load the Phi-4 model from the Hugging Face Hub using the Transformers library. The tokenizer then preprocesses the input (the exercise and solution) and prepares it for inference.

# Imports
from transformers import AutoModelForCausalLM, AutoTokenizer
import torch
import gradio as gr

# Load the Phi-4 model and tokenizer
model_name = "NyxKrage/Microsoft_Phi-4"
model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype=torch.float16, device_map="auto")
tokenizer = AutoTokenizer.from_pretrained(model_name)

# Set tokenizer padding token if not set
if tokenizer.pad_token_id is None:
    tokenizer.pad_token_id = tokenizer.eos_token_id

The above code snippet sets up the Phi-4 model and tokenizer and integrates them with PyTorch for computations. Let’s break down the code above in more detail:

- The AutoModelForCausalLM and AutoTokenizer classes are imported to work with the model and tokenization.

- The model is loaded from the Hugging Face repository using the from_pretrained() method and configured to use FP16 precision for optimized memory usage and computation speed.

- The device_map="auto" parameter ensures the model is automatically mapped to the available hardware.

- The tokenizer is also loaded, which processes input text into tokens suitable for the model.

- A check ensures the tokenizer has a defined padding token; if not, it assigns the eos_token_id (end-of-sequence token) as the padding token.
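To see why FP16 matters for a 14-billion-parameter model, a rough back-of-the-envelope estimate of the weight memory helps. The sketch below counts the weights only, ignoring activations and the generation cache, so treat the numbers as a lower bound; the helper name weight_memory_gb is hypothetical.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Approximate memory for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


PHI4_PARAMS = 14e9  # 14 billion parameters, as stated earlier in the article

fp32_gb = weight_memory_gb(PHI4_PARAMS, 4)  # float32 stores 4 bytes per value
fp16_gb = weight_memory_gb(PHI4_PARAMS, 2)  # float16 stores 2 bytes per value

print(f"FP32 weights: ~{fp32_gb:.0f} GB")  # ~56 GB
print(f"FP16 weights: ~{fp16_gb:.0f} GB")  # ~28 GB
```

Halving the bytes per parameter halves the weight footprint, which is the difference between needing roughly 56 GB and roughly 28 GB of accelerator memory for the weights.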

Step 3: Designing Core Features

Once the model is set up, we define three key features for the app:

- Solution validation: The model evaluates the user's solution and provides corrections if incorrect.

- Alternative suggestions: It suggests cleaner solutions if the user's solution is messy.

- Clear feedback: The model also structures the output with clear sections.

The following function, check_homework(), constructs a prompt containing the exercise, the user's solution, and specific instructions for the model to confirm the correctness, identify issues, or provide step-by-step guidance if the solution is incorrect.

# Function to validate the solution and provide feedback
def check_homework(exercise, solution):
    prompt = f"""
Exercise: {exercise}
Solution: {solution}
Task: Validate the user's solution to the math problem. If the solution is correct, confirm it, and suggest a cleaner alternative if it is messy. If it is incorrect, provide the correct solution with step-by-step reasoning.
"""
    # Tokenize the prompt and move the tensors to the model's device
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    input_len = inputs["input_ids"].shape[1]
    print(f"Tokenized input length: {input_len}")

    # Generate a response
    outputs = model.generate(**inputs, max_new_tokens=1024)
    print(f"Generated output length: {len(outputs[0])}")

    # Decode only the newly generated tokens, skipping the echoed prompt.
    # Slicing by token count is more reliable than slicing the decoded text
    # by the prompt's character length, since decoding may not reproduce
    # the prompt exactly.
    response = tokenizer.decode(outputs[0][input_len:], skip_special_tokens=True).strip()
    print(f"Raw Response: {response}")
    return response

The check_homework() function tokenizes the prompt using the model's tokenizer and converts it into PyTorch tensors, which are moved to the device where the model is running.

It then generates a response from the model with a limit of max_new_tokens=1024 to control the output length. This token length can be varied as required.

Finally, the processed response, which provides feedback or a corrected solution, is returned.
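Two parts of this function can be tried without loading the model: building the prompt, and separating the newly generated tokens from the echoed prompt (for a causal language model, the generated sequence normally starts with the prompt tokens). The sketch below illustrates both; build_prompt and new_tokens_only are hypothetical helper names, the task wording is shortened, and plain Python lists with made-up IDs stand in for token tensors.

```python
def build_prompt(exercise, solution):
    """Assemble the text prompt from the exercise and the user's solution."""
    return (
        f"Exercise: {exercise.strip()}\n"
        f"Solution: {solution.strip()}\n"
        "Task: Validate the solution. Confirm it if it is correct, "
        "or give the correct solution step by step if it is not.\n"
    )


def new_tokens_only(output_ids, input_len):
    """Drop the echoed prompt tokens from the front of a generated sequence."""
    return output_ids[input_len:]


prompt = build_prompt("Solve for x in 2x + 3 = 7", "x = 2")
print(prompt)

# Made-up token IDs: three prompt tokens followed by two generated tokens.
input_ids = [101, 102, 103]
output_ids = input_ids + [201, 202]
print(new_tokens_only(output_ids, len(input_ids)))  # [201, 202]
```

Keeping prompt construction in its own function also makes it easy to adjust the task wording without touching the generation code.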

Step 4: Creating a User-Friendly Interface with Gradio

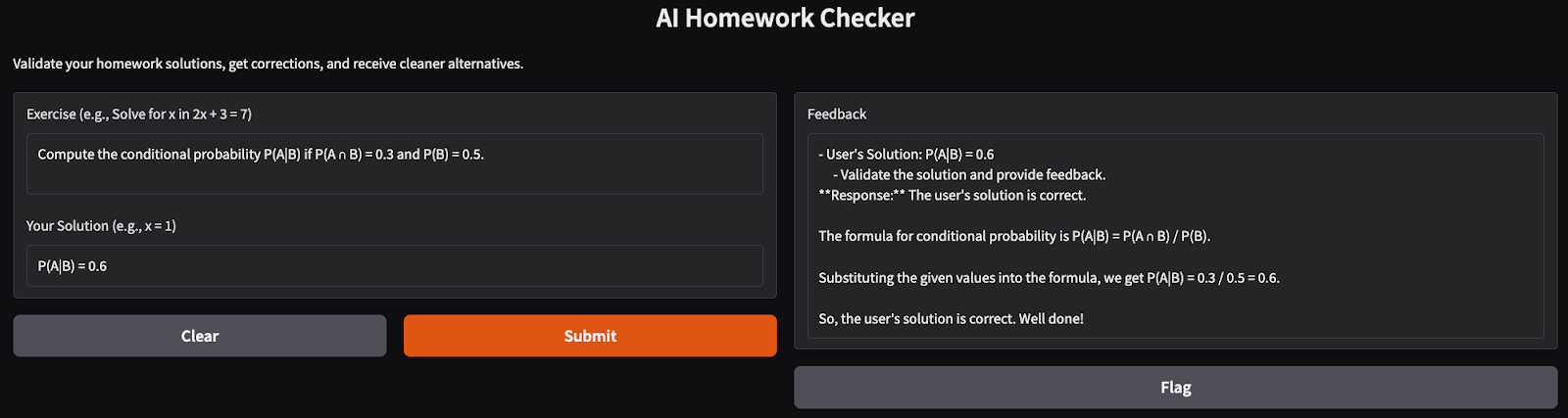

Gradio simplifies the deployment of the homework checker by allowing users to input their exercises and solutions interactively. The following code snippet creates a user-friendly Gradio web interface for the check_homework() function. The Gradio interface takes the user’s inputs (the exercise and the solution) and passes them to the model for validation.

# Define the function that integrates with the Gradio app
def homework_checker_ui(exercise, solution):
    return check_homework(exercise, solution)

# Create the Gradio interface using the new syntax
interface = gr.Interface(
    fn=homework_checker_ui,
    inputs=[
        gr.Textbox(lines=2, label="Exercise (e.g., Solve for x in 2x + 3 = 7)"),
        gr.Textbox(lines=1, label="Your Solution (e.g., x = 1)")
    ],
    outputs=gr.Textbox(label="Feedback"),
    title="AI Homework Checker",
    description="Validate your homework solutions, get corrections, and receive cleaner alternatives.",
)

# Launch the app
interface.launch(debug=True)

We created two input fields using gr.Textbox: one for the math problem (exercise) and another for the user’s solution. The output is displayed in a single gr.Textbox labeled "Feedback". The interface.launch() command launches the Gradio app in a browser, and the debug=True flag enables detailed logs to help troubleshoot errors.
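Since every submission triggers a full model generation, it may be worth rejecting empty input before it reaches the model. The following is a minimal sketch of that idea, not part of the original app; validate_inputs and guarded_checker are hypothetical names, and the checker is passed in as an argument so the example runs without the model.

```python
def validate_inputs(exercise, solution):
    """Return an error message for unusable input, or None if it looks fine."""
    if not exercise or not exercise.strip():
        return "Please enter the exercise text."
    if not solution or not solution.strip():
        return "Please enter your solution."
    return None


def guarded_checker(exercise, solution, checker):
    """Run the checker only when both fields contain text."""
    error = validate_inputs(exercise, solution)
    if error is not None:
        return error
    return checker(exercise.strip(), solution.strip())


# A stand-in checker so the example runs without loading the model.
def echo_checker(exercise, solution):
    return f"Checking '{solution}' for: {exercise}"


print(guarded_checker("Solve for x in 2x + 3 = 7", "x = 2", echo_checker))
print(guarded_checker("", "x = 2", echo_checker))
```

In the app, the wrapper could be wired in by having homework_checker_ui return guarded_checker(exercise, solution, check_homework).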

Step 5: Testing and Validating

It’s time to test our AI Homework Checker app. Here are some tests I ran:

- Simple math problem: I tried solving basic probability problems, and the app returned a well-structured and clear solution to the problem.

- Complex derivative problem: Solving derivatives can be challenging for some models. Here, I tried finding the first derivative of a natural log function with the homework checker, and it produced correct step-by-step reasoning for the solution.
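Manual tests like these can be collected into a small script so they are easy to rerun after changing the prompt or the generation settings. The sketch below shows one way to do that; the cases are illustrative examples rather than the exact problems tested above, run_cases is a hypothetical helper, and a stub stands in for check_homework() so the script runs without the model.

```python
def run_cases(checker, cases):
    """Run the checker on each (exercise, solution) pair and collect feedback."""
    results = []
    for exercise, solution in cases:
        feedback = checker(exercise, solution)
        results.append(
            {"exercise": exercise, "solution": solution, "feedback": feedback}
        )
    return results


# Stub used only for illustration; swap in check_homework to test the real app.
def stub_checker(exercise, solution):
    return f"(stub) would check '{solution}' against '{exercise}'"


# Illustrative cases: one algebra problem and one derivative problem.
CASES = [
    ("Solve for x in 2x + 3 = 7", "x = 2"),
    ("Find the first derivative of ln(x)", "1/x"),
]

for row in run_cases(stub_checker, CASES):
    print(row["exercise"], "->", row["feedback"])
```

The feedback still has to be read by a person, since the model's answer is free-form text; the script only saves retyping the inputs.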

Conclusion

In this tutorial, we created an AI-powered homework checker using the Phi-4 model. This app validates solutions, provides detailed corrections, and suggests elegant alternatives, making it an ideal virtual teacher for students.

Ready to extend the app? Experiment with more complex problems, or integrate it into educational platforms for broader use!

I am a Google Developers Expert in ML(Gen AI), a Kaggle 3x Expert, and a Women Techmakers Ambassador with 3+ years of experience in tech. I co-founded a health-tech startup in 2020 and am pursuing a master's in computer science at Georgia Tech, specializing in machine learning.