Track

Anthropic just released Claude 4, which comes in two versions: Claude 4 Sonnet and Claude 4 Opus.

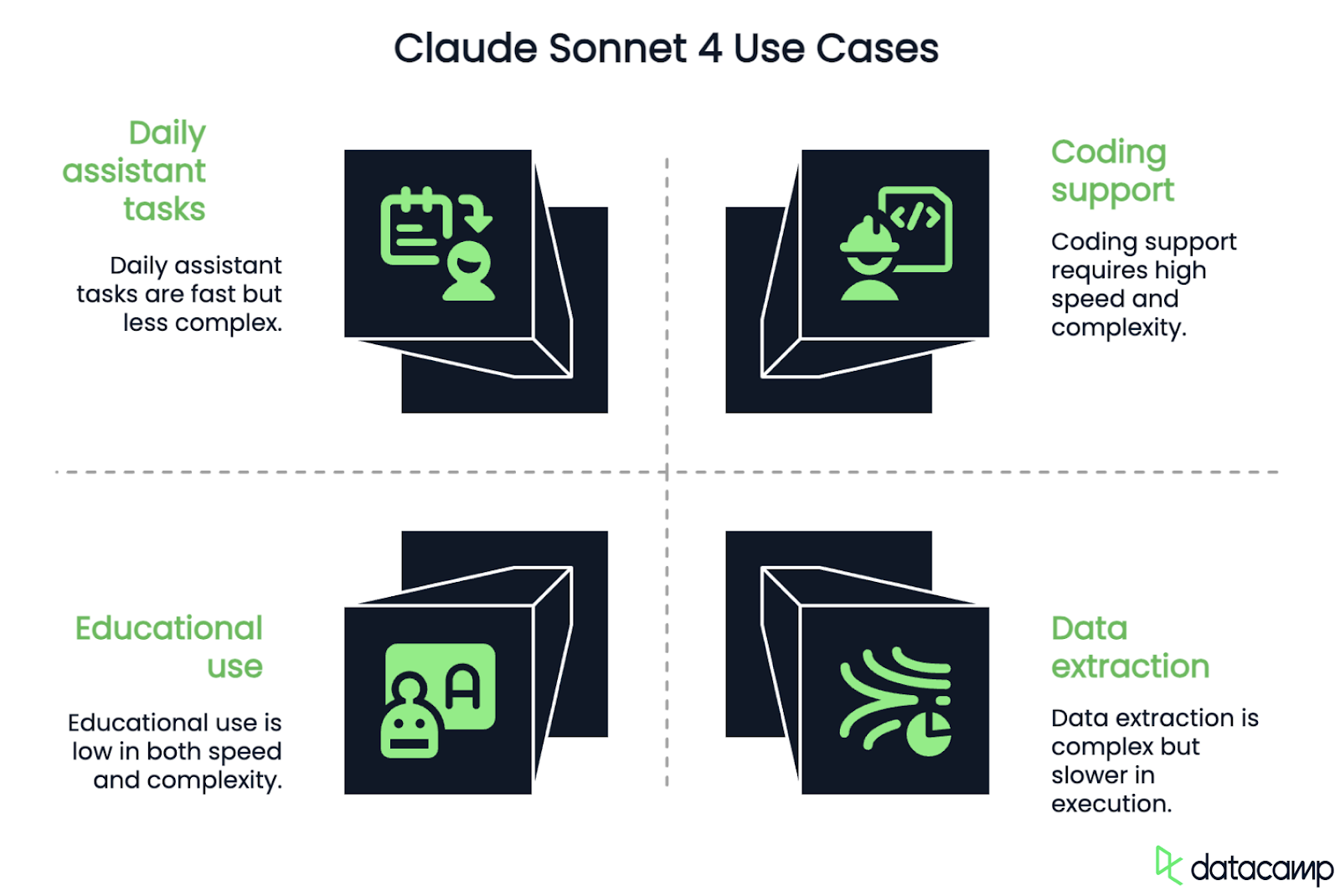

Claude Sonnet 4 is a generalist model that’s great for most AI use cases and especially strong at coding. I think it’s one of the best models you can use for free.

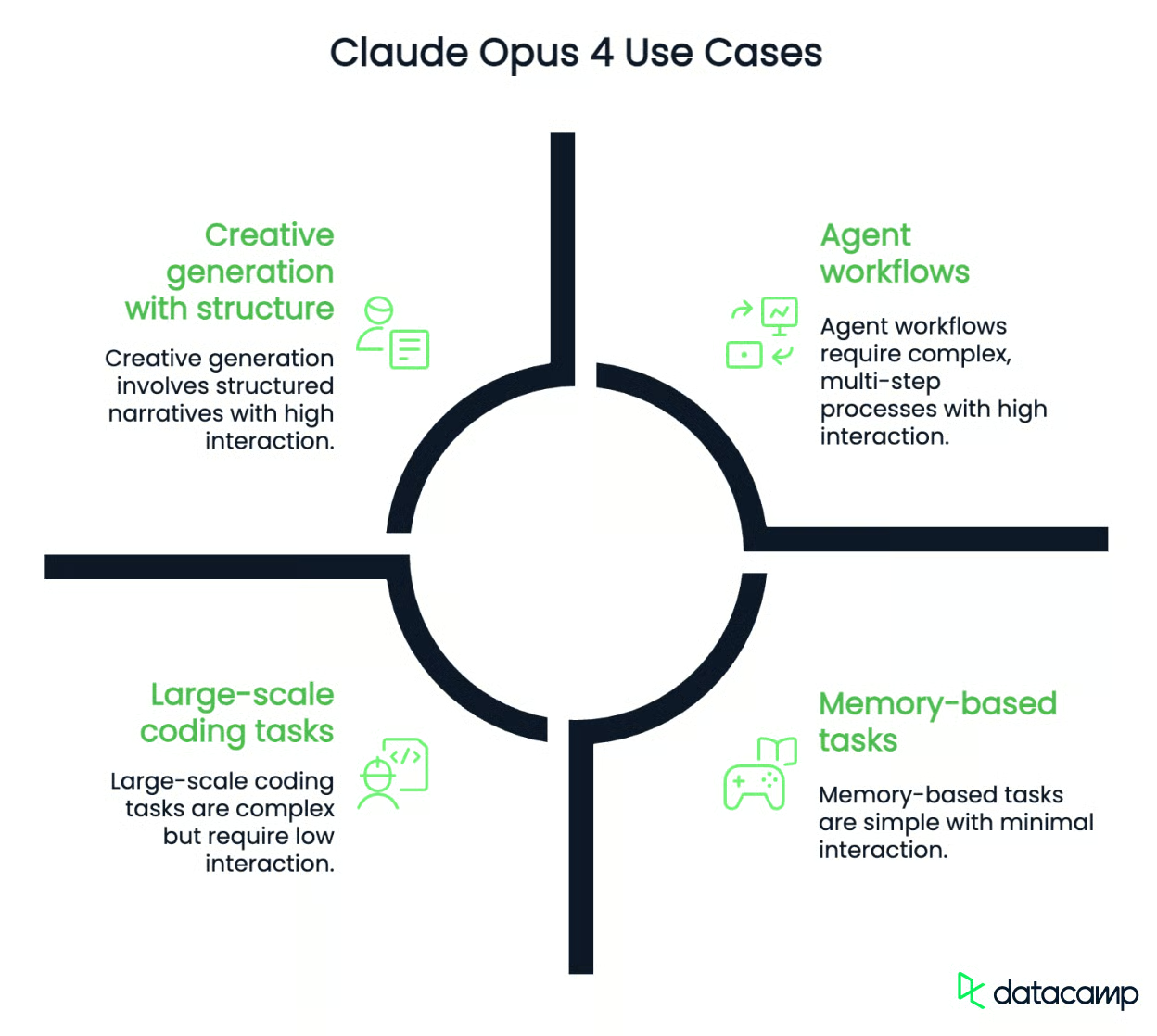

Claude Opus 4 is designed for reasoning-heavy tasks like agentic search and long-running code workflows. Anthropic calls Opus 4 “the best coding model in the world,” but I find that claim a bit empty.

Yes, it’s currently the top performer on the SWE-bench Verified benchmark. But with a context window of just 200K, I can’t imagine it handling very large codebases cleanly. And let’s be honest: there’s always another stronger model coming out every month or so. Claiming the crown for a few weeks doesn’t make much sense.

That said, Claude 4 is still a very strong release. I’ll walk you through the most important details—features, use cases, benchmarks—and I’ll also run a few tests of my own.

We keep our readers updated on the latest in AI by sending out The Median, our free Friday newsletter that breaks down the week’s key stories. Subscribe and stay sharp in just a few minutes a week:

Claude Sonnet 4

Claude Sonnet 4 is the smaller model in the Claude 4 family. It’s designed for general-purpose use and performs well across most common AI tasks—coding, writing, question answering, data analysis. It’s also available to free users, which makes it unusually accessible for a model of this quality.

The model supports a 200K context window, which allows it to handle large prompts and maintain continuity over long interactions. That’s useful for use cases like analyzing long documents, reviewing codebases, or generating multi-part responses with consistent structure. However, Sonnet 4 might struggle with large codebases. For comparison, Gemini 2.5 Flash has a context window of 1M tokens.

Compared to Claude Sonnet 3.7, this version is faster, better at following instructions, and more reliable in code-heavy workflows. It supports up to 64K output tokens, which helps with slightly longer outputs like structured plans, multi-part answers, or large code completions.

Early reports show fewer navigation errors and better performance across app development tasks. It’s not as strong as Opus 4 when it comes to complex reasoning or long-term task planning, but for most workflows, it’s more than sufficient.

Claude Opus 4

Claude Opus 4 is the flagship model in the Claude 4 series. It’s built for tasks that require deeper reasoning, long-term memory, and more structured outputs—things like agentic search, large-scale code refactoring, multi-step problem solving, and extended research workflows.

Like Sonnet 4, it supports a 200K context window, so this can be a disadvantage if you want to use it with a large codebase. For comparison, Gemini 2.5 Pro (Google’s flagship model) has a context window of 1M tokens.

It’s also capable of running in “extended thinking” mode, where it switches from fast responses to slower, more deliberate reasoning. This mode allows it to perform tool use, track memory across steps, and generate summaries of its own thought process when needed.

Anthropic has positioned it as a high-end model for developers, researchers, and teams building AI agents. It leads on SWE-bench Verified and Terminal-bench, and early users report strong performance in coding agents, search workflows, and multi-hour tasks like refactoring open-source projects or simulating long-term planning.

Unlike Sonnet, Opus 4 is only available on paid plans. It’s more expensive to run, and likely overkill for simple chatbot use. But for applications that need consistent reasoning across a lot of moving parts, it’s the more capable choice.

Testing Claude 4

When testing a new model, I typically use the same tasks—that way I can see how it compares to other models I’ve tested before. This isn’t meant to be an extensive evaluation. The goal is just to get a feel for how these models behave inside the chat interface.

Let’s try Claude 4 on two areas: math and coding.

Math

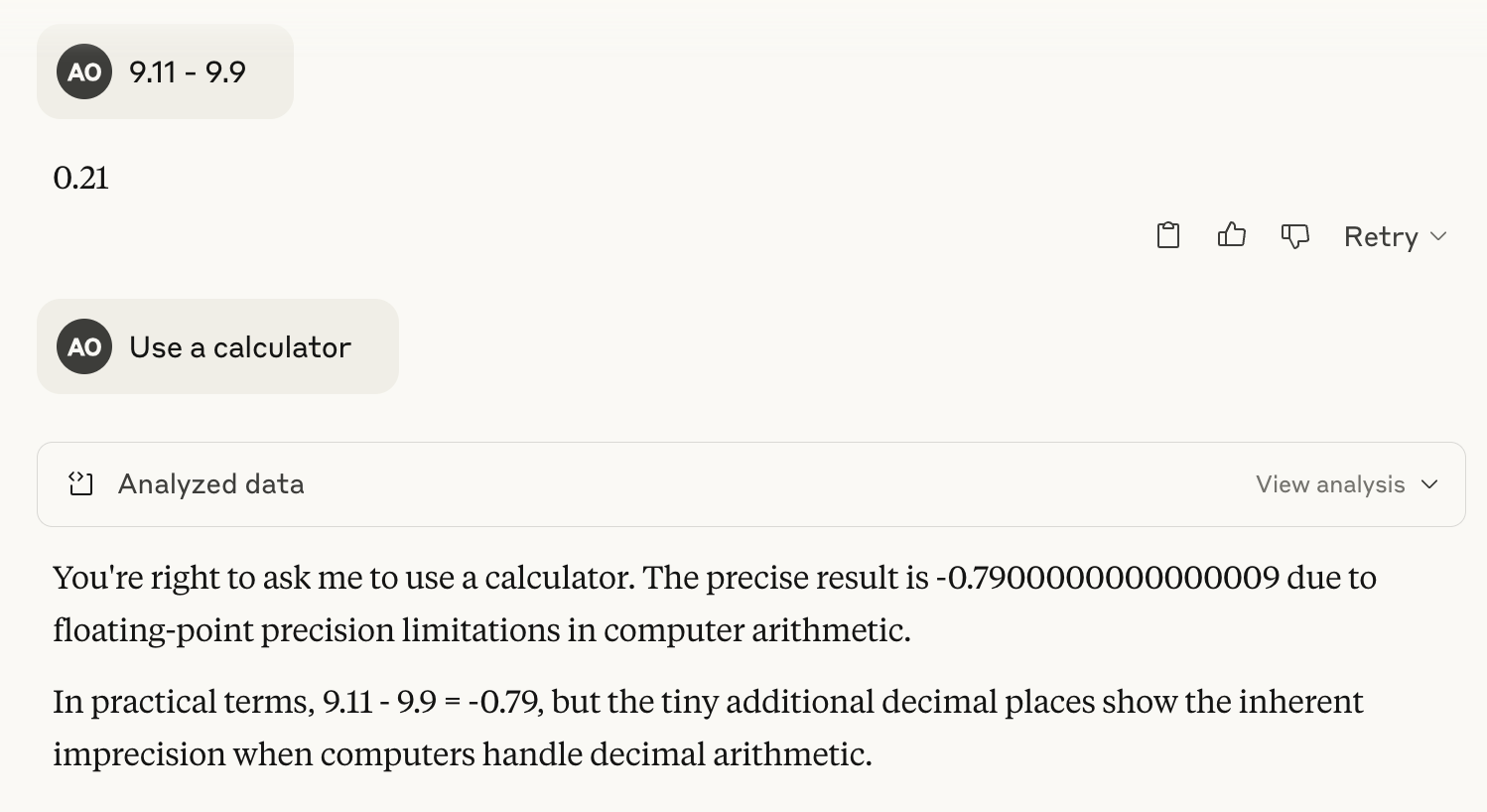

I like to start with a simple calculation that often confuses language models. This isn’t about checking basic arithmetic—I could just use a calculator for that. The point is to see how the model approaches a slightly tricky problem, and whether it can fall back on tool use or show its reasoning clearly when needed.

Let’s see how well Claude Sonnet 4 handled it:

As you can see, it got the answer wrong on the first attempt. But when I asked it to use a tool—a calculator—it responded by writing a one-line script in JavaScript and solved the problem correctly.

Claude Opus 4 answered correctly on the first attempt.

Next, I wanted to see how well Claude Sonnet 4 handles a more complex problem: use all digits from 0 to 9 exactly once to make three numbers x, y, z such that x + y = z.

After about five minutes of random brute-force attempts, I got a message saying the output limit had been reached and I needed to click “Continue” to resume. I did, and Claude tried again—but then hit the limit once more. What I appreciated, though, is that it didn’t make up an answer. It simply refused to answer if it couldn’t find one. That’s a big win, in my opinion—it’s more problematic to hallucinate a solution.

Then I tried Claude Opus 4 on the same task. The answer came back almost instantly, and it was correct: 246 + 789 = 1035. Opus 4 is impressive!!

Coding

For the coding task, I decided to go straight to Claude Opus 4. This kind of creative generation feels more suited to its capabilities. I’m not testing it on large codebases here—just a relatively trivial coding task.

I asked it to make a quick p5.js game using this prompt that I used for Gemini 2.5 Pro and o4-mini:

Prompt: Make me a captivating endless runner game. Key instructions on the screen. p5.js scene, no HTML. I like pixelated dinosaurs and interesting backgrounds.

Normally, I’d copy-paste the code into an online p5.js editor to test it. But one of the nice features in Claude 4 is Artifacts, which lets me view and run the code output directly inside the chat.

Let’s see the result:

No previous model I’ve tested got the starting screen right on the first try—most of them just jumped straight into the gameplay. Claude Opus 4 actually displayed a proper start screen with instructions, which was a nice surprise.

However, there was a visual bug: the pixelated dinosaur left a confusing trail as it moved across the screen. The pixels weren’t being cleared properly between frames, which ruined the gameplay. I pointed this out and asked Opus 4 to correct it.

Perfection! I’ve never gotten such a clean and playable version of this game from any other model.

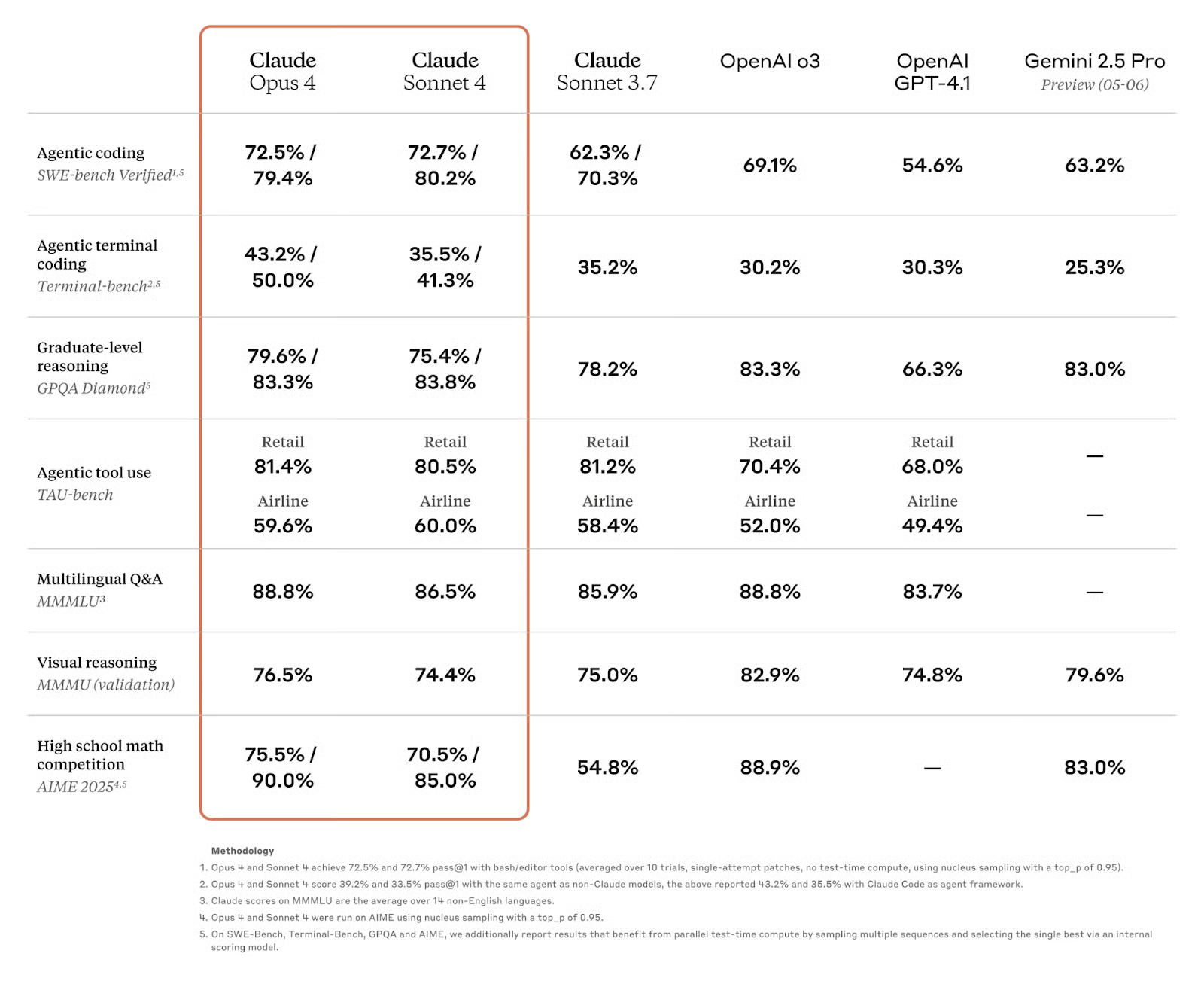

Claude 4 Benchmarks

Claude 4 models were tested on a range of standard benchmarks across coding, reasoning, and agentic tasks. While these scores don’t tell the full story of model quality, they’re still useful as a point of comparison. Below are the key results for Claude Sonnet 4 and Claude Opus 4.

Source: Anthropic

Claude Sonnet 4

Claude Sonnet 4 performs surprisingly well for a model that’s available to free users. On SWE-bench Verified, which tests real-world coding tasks, it scores 72.7%, slightly edging out Opus 4 (72.5%) and significantly ahead of Claude 3.7 Sonnet (62.3%). It also outperforms OpenAI’s GPT-4.1 (54.6%) and Gemini 2.5 Pro (63.2%).

On other benchmarks:

- TerminalBench (CLI-based coding): 35.5% – ahead of GPT-4.1 (30.3%) and Gemini (25.3%)

- GPQA Diamond (graduate-level reasoning): 75.4% – strong, though slightly below OpenAI o3 and Gemini

- TAU-bench (agentic tool use): 80.5% Retail / 60.0% Airline – comparable to Opus 4 and ahead of GPT-4.1 and o3

- MMLU (multilingual QA): 86.5% – just behind Opus and o3, but still solid

- MMMU (visual reasoning): 74.4% – the last score across the model lineup

- AIME (math competition): 70.5% – better than Sonnet 3.7, but not competitive enough

Sonnet 4 is arguably one of the best-performing free-tier models currently available and competitive with models that require payment or commercial access.

Claude Opus 4

Opus 4 is Anthropic’s flagship model, and it performs at or near the top across most benchmarks. On SWE-bench Verified, it scores 72.5%, and in high-compute settings, that jumps to 79.4%—the highest among all compared models.

It also leads or ranks near the top on:

- TerminalBench (agentic CLI coding): 43.2% (50.0% in high-compute mode) – the strongest score in the chart

- GPQA Diamond (graduate-level reasoning): 79.6% (83.3%) – solid, slightly behind OpenAI o3 and Gemini 2.5 Pro

- TAU-bench (agentic tool use): 81.4% Retail / 59.6% Airline – on par with Sonnet 4 and 3.7

- MMLU (multilingual QA): 88.8% – tied with OpenAI o3

- MMMU (visual reasoning): 76.5% – behind o3 and Gemini 2.5 Pro

- AIME (math competition): 75.5% (90.0% high-compute) – significantly above Claude Sonnet 4

How to Access Claude 4

Claude 4 is available through multiple channels, depending on how you want to use it—whether that’s casual chat, development via API, or integration into enterprise workflows. Here’s how access works:

Chat access

You can use Claude 4 directly through the Claude.ai web interface or mobile apps (iOS and Android).

- Claude Sonnet 4 is available to all users, including those on the free tier. This makes it one of the most capable models you can try without paying.

- Claude Opus 4 is available only to paying users on the Pro, Max, Team, or Enterprise plans .

API access

For developers, both models can be accessed via the Anthropic API, and are also available on Amazon Bedrock, and Google Cloud Vertex AI.

API pricing (as of May 2025):

- Claude Opus 4: $15 per million input tokens, $75 per million output tokens

- Claude Sonnet 4: $3 per million input tokens, $15 per million output tokens

Batch processing and prompt caching can reduce costs by up to 90% in some cases.

Conclusion

Claude Sonnet 4 offers real value as a fast, capable model that’s free to use and performs well across coding, reasoning, and general assistant tasks. For most day-to-day needs, it’s more than enough.

Opus 4, on the other hand, is built for deeper reasoning and complex workflows. The coding results—especially in creative generation and problem-solving—were some of the best I’ve seen from any model so far.

I’m an editor and writer covering AI blogs, tutorials, and news, ensuring everything fits a strong content strategy and SEO best practices. I’ve written data science courses on Python, statistics, probability, and data visualization. I’ve also published an award-winning novel and spend my free time on screenwriting and film directing.