
Containerization has become the go-to approach for efficiently building, deploying, and scaling modern applications. Two major names in this space are Kubernetes and Docker. They are often mentioned together, but they do distinct jobs, and both are important.

In this guide, I will explain what sets Kubernetes and Docker apart, walk through their key features, and show when to use each one.

What is Containerization?

Before we get into Docker and Kubernetes, let’s first look at the concept they are both built around: containerization.

Containerization is a lightweight form of virtualization that packages an application and its dependencies into a single unit called a container.

Unlike traditional virtual machines, containers share the host operating system's kernel while keeping applications isolated from one another. This makes them more efficient, lightweight, and faster to start up.

Containerization helps developers create consistent, portable, and easy-to-manage environments, regardless of where they’re running—whether on a developer's laptop, in a data center, or in the cloud.

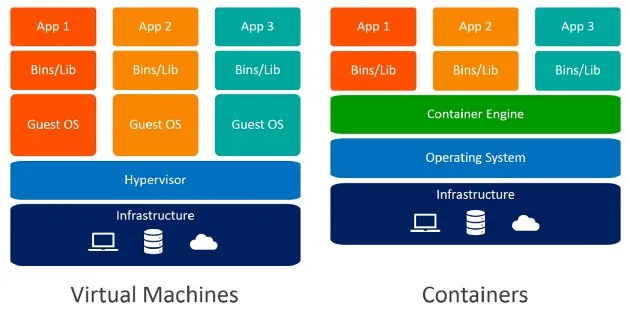

Containerization vs virtualization

It is useful to compare containerization to traditional virtualization to better understand it. Virtual machines (VMs) virtualize entire hardware systems, which means each VM includes a full operating system along with the necessary binaries and libraries. This approach offers isolation but comes with a significant resource overhead—each VM requires its own OS, making it resource-intensive and slower to start up.

Containers, on the other hand, share the host operating system's kernel, which makes them much lighter and quicker to spin up. Instead of virtualizing hardware, containers virtualize the operating system. This allows containers to run isolated processes without the overhead of a full OS for each instance, leading to better resource utilization and efficiency.

While VMs are great for full isolation and running multiple different operating systems on the same hardware, containers are better suited for efficient, scalable, and consistent application deployment.

Virtual Machines vs. Containers. Image source: contentstack.io

If you want to learn more about the essentials of VMs, containers, Docker, and Kubernetes, check out the free course on Containerization and Virtualization Concepts on DataCamp.

Now, let’s get to the details of Docker and Kubernetes!


What is Docker?

Docker is an open-source platform that provides a lightweight, portable way to create, deploy, and manage containers. A Docker container packages everything an application needs, including the application code, runtime, system tools, and libraries, so the application runs consistently across different environments.

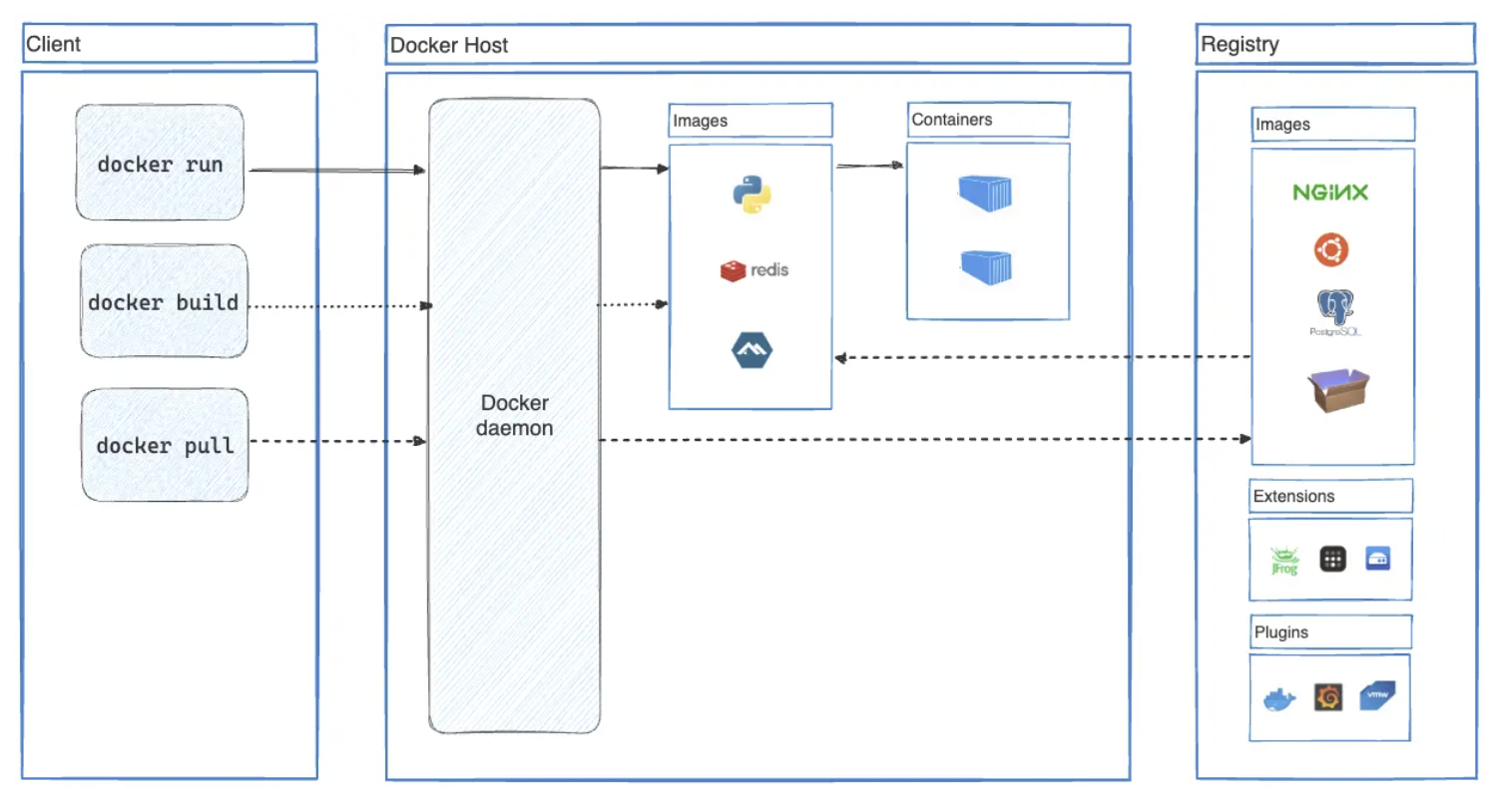

How Docker works

Docker works by creating containers, which, as we have seen before, are lightweight packages that encapsulate all the necessary components to run an application.

Containers are built from Docker images, which act as blueprints defining what goes inside each container. A Docker image can include the user-space files of a base operating system (but not a kernel), application binaries, and configuration files, making it easy to replicate environments.

Once an image is created, developers can use Docker to run containers based on that image. One of Docker's biggest strengths is its simplicity and consistency: no matter where a container runs, whether on a developer's local machine, in an on-premises data center, or in the cloud, it behaves the same way.

Overview of Docker architecture. Image source: Docker documentation

The following example shows how a Docker image is defined. Take a look at the Dockerfile below:

```dockerfile
# Use the official Python base image with version 3.9
FROM python:3.9

# Set the working directory within the container
WORKDIR /app

# Copy the requirements file to the container
COPY requirements.txt .

# Install the dependencies
RUN pip install -r requirements.txt

# Copy the application code to the container
COPY . .

# Set the command to run the application
CMD ["python", "app.py"]
```

A Dockerfile is a script that contains a series of instructions for Docker to build an image, which can then be used to create a container.
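The Dockerfile ends by running `python app.py`, but the article doesn't show that file. Here is a minimal, hypothetical `app.py` so the example is complete. It is only an illustration: it uses nothing outside the standard library (so `requirements.txt` can be empty) and simply reports a few details about the environment it runs in.

```python
# app.py: a hypothetical entry point for the Dockerfile above.
# It uses only the standard library, so requirements.txt can be left empty.
import platform
import socket


def describe_runtime() -> str:
    """Summarize the environment this process is running in."""
    # Inside a Docker container, the hostname defaults to the short container ID.
    host = socket.gethostname()
    # Containers share the host's kernel, so on a Linux host this matches `uname -r` there.
    kernel = platform.release()
    return f"Hello from {host}: Python {platform.python_version()}, kernel {kernel}"


if __name__ == "__main__":
    print(describe_runtime())
```

Once the image is built and run, this prints a line containing the container ID, a Python 3.9.x version, and the kernel release. On a Linux host, that kernel release is the host's own, which is a quick way to see that containers share the host kernel rather than booting their own. On Docker Desktop for macOS or Windows, containers run inside a lightweight Linux VM, so you will see that VM's kernel instead.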

After you create a Dockerfile in your project, the next step is to build the Docker image. This is done using the docker build command, which reads the instructions in the Dockerfile to assemble the image.

For example, running `docker build -t my-app .` in the terminal tells Docker to build an image tagged my-app, using the current directory (denoted by `.`) as the build context.

During the build process, Docker executes each step in the Dockerfile, such as pulling the base image, installing dependencies, and copying the application code into the image. Once the image is built, it serves as a template that can be reused to create multiple containers.

After the image is successfully built, you can create and run containers from it using the docker run command. For instance, docker run my-app starts a new container based on the my-app image, launching your application within the isolated environment provided by Docker.
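These two commands are often scripted, for example in a build script or CI job. The sketch below is one hedged way to do that, not an official tool: the helper names and the default container port 8000 are hypothetical, while `docker build -t`, `docker run`, `--rm` (remove the container when it exits), and `-p HOST:CONTAINER` (publish a port) are standard Docker CLI options. By default it only prints the commands instead of executing them.

```python
import shlex
import subprocess
from typing import List, Optional


def docker_build_cmd(tag: str, context: str = ".") -> List[str]:
    """argv for `docker build -t TAG CONTEXT`."""
    return ["docker", "build", "-t", tag, context]


def docker_run_cmd(tag: str, host_port: Optional[int] = None,
                   container_port: int = 8000) -> List[str]:
    """argv for `docker run`; --rm deletes the container once it exits."""
    cmd = ["docker", "run", "--rm"]
    if host_port is not None:
        # -p HOST:CONTAINER publishes a container port on the host machine.
        # Only useful if the application actually listens on container_port.
        cmd += ["-p", f"{host_port}:{container_port}"]
    cmd.append(tag)
    return cmd


def run(cmd: List[str], dry_run: bool = True) -> str:
    """Print the shell-quoted command; execute it only when dry_run is False."""
    line = shlex.join(cmd)
    print("$", line)
    if not dry_run:
        subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return line


if __name__ == "__main__":
    run(docker_build_cmd("my-app"))  # $ docker build -t my-app .
    run(docker_run_cmd("my-app"))    # $ docker run --rm my-app
```

Building argv lists (instead of one shell string) avoids quoting problems, and the `dry_run` switch lets you check exactly what would be executed before touching the Docker daemon.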

If you want to learn more about common Docker commands and industry-wide best practices, check out the blog Docker for Data Science: An Introduction.

Features of Docker

- Portability: Docker containers can run consistently on different systems, providing a seamless experience across development, testing, and production environments.

- Ease of use: Docker's command-line interface and comprehensive set of tools make it accessible for developers, even those new to containerization.

- Lightweight: Docker containers share the host's OS kernel, reducing the resource overhead compared to full virtual machines.

- Fast startup times: Docker containers can be started in seconds, making them highly effective for applications that need rapid spin-up and teardown.

Check out DataCamp’s Docker cheat sheet, which provides an overview of all the available Docker commands.

What is Kubernetes?

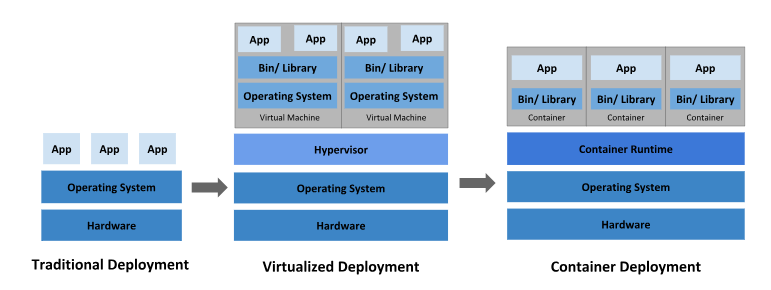

Kubernetes is a powerful open-source container orchestration platform designed to manage containerized applications across clusters of machines.

Initially developed by Google, Kubernetes—commonly known as K8s—handles deployment, scaling, and operations of application containers, making it an essential tool for managing containers at scale.

Evolution of deployment strategies over time. Image source: Kubernetes.io

How Kubernetes works

Kubernetes is built on the concept of clusters, nodes, and pods, forming a layered architecture that provides flexibility and scalability. A cluster represents the entire infrastructure, consisting of multiple nodes (virtual or physical machines).

These nodes work together to host and manage containerized applications. Nodes either belong to the control plane (historically called master nodes), which controls and manages the cluster, or are worker nodes, which run the application workloads. The control plane is responsible for managing the cluster’s state, making scheduling decisions, and monitoring the cluster's health.

Each worker node runs one or more pods, which are the smallest deployable units in Kubernetes and consist of one or more containers.

Pods act as logical hosts for their containers: the containers in a pod share the same network namespace (one IP address and port space) and can mount the same storage volumes, so they can easily communicate with each other, for example over localhost. Pods are ephemeral by nature, meaning they can be created, destroyed, or replicated dynamically based on the application's needs.
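To make pods more concrete, here is a sketch that builds a Pod manifest in Python and prints it as JSON, which kubectl accepts just like YAML. The specifics are assumptions for illustration only: `my-app:1.0` is a hypothetical image serving HTTP on port 8000 that the cluster can pull, and the busybox sidecar and `/data` path are made up. What matters is the structure: two containers in one pod that talk over localhost and share an `emptyDir` volume.

```python
import json


def two_container_pod(name: str = "my-app-pod") -> dict:
    """Build a Pod manifest whose two containers share a network and a volume."""
    shared_mount = {"name": "shared-data", "mountPath": "/data"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": "my-app"}},
        "spec": {
            "containers": [
                {
                    "name": "app",
                    # Hypothetical image; the cluster must be able to pull it.
                    "image": "my-app:1.0",
                    "ports": [{"containerPort": 8000}],
                    "volumeMounts": [shared_mount],
                },
                {
                    "name": "sidecar",
                    "image": "busybox:1.36",
                    # Reaches the app via localhost because both containers share
                    # the Pod's network namespace, then appends to the shared volume.
                    "command": ["sh", "-c",
                                "while true; do wget -qO- http://localhost:8000 "
                                ">> /data/log.txt; sleep 10; done"],
                    "volumeMounts": [shared_mount],
                },
            ],
            # An emptyDir volume is created with the Pod and deleted when the Pod is removed.
            "volumes": [{"name": "shared-data", "emptyDir": {}}],
        },
    }


if __name__ == "__main__":
    # Save the output as pod.json and apply it with: kubectl apply -f pod.json
    print(json.dumps(two_container_pod(), indent=2))
```

In everyday use you would write this directly as a YAML file; generating it from Python just makes the nesting easy to see.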

Overview of Kubernetes architecture. Image source: Kubernetes.io

Kubernetes abstracts away the complexity of managing infrastructure by providing a powerful API and a suite of tools to manage containerized applications. It keeps applications running smoothly by distributing workloads, scaling resources based on demand, and restarting containers if they fail.

Kubernetes also manages the desired state of your applications: it continuously compares the actual number of pods and their configuration with what you have specified and automatically corrects any drift or disruption. This automation reduces the manual effort needed for infrastructure management and enhances the reliability and resilience of your applications.
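The desired-state idea can be illustrated with a toy reconciliation loop. This is a simplified model assumed for explanation, not how the Kubernetes controllers are actually implemented: a hypothetical `ToyReplicaController` compares the pods it observes with the desired replica count, replaces failed pods, and creates or deletes pods to close the gap.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ToyReplicaController:
    """Toy model of a desired-state loop; not Kubernetes' real controller code."""
    desired_replicas: int
    pods: Dict[str, str] = field(default_factory=dict)  # pod name -> phase
    next_id: int = 0

    def reconcile(self) -> List[str]:
        """Run one pass that moves the observed state toward the desired state."""
        actions: List[str] = []
        # Self-healing: remove pods that have failed so they can be replaced.
        for name, phase in list(self.pods.items()):
            if phase == "Failed":
                del self.pods[name]
                actions.append(f"delete {name}")
        # Scale down: delete surplus pods (real Kubernetes ranks pods more carefully).
        surplus = len(self.pods) - self.desired_replicas
        for name in sorted(self.pods)[:max(surplus, 0)]:
            del self.pods[name]
            actions.append(f"delete {name}")
        # Scale up: create pods until the desired count is reached.
        while len(self.pods) < self.desired_replicas:
            name = f"pod-{self.next_id}"
            self.next_id += 1
            self.pods[name] = "Running"
            actions.append(f"create {name}")
        return actions


if __name__ == "__main__":
    ctrl = ToyReplicaController(desired_replicas=3)
    print(ctrl.reconcile())  # ['create pod-0', 'create pod-1', 'create pod-2']
    ctrl.pods["pod-1"] = "Failed"
    print(ctrl.reconcile())  # ['delete pod-1', 'create pod-3']
    ctrl.desired_replicas = 1
    print(ctrl.reconcile())  # ['delete pod-0', 'delete pod-2']
```

The key design point is that you never tell the loop *what to do*; you only change the desired state (here, `desired_replicas`), and each reconcile pass works out the actions needed to get there.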

Features of Kubernetes

- Automated scaling: Kubernetes can scale applications automatically based on resource demands, optimizing usage and keeping performance consistent.

- Load balancing: Kubernetes Services distribute incoming network traffic across the pods behind them, improving availability and resilience.

- Service discovery: Kubernetes Services and the cluster's DNS let workloads find each other by a stable name, eliminating the need to track individual container endpoints manually.

- Rolling updates: Kubernetes allows updating applications with minimal downtime, ensuring stability and reliability during upgrades.
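Several of these features come together in two common objects: a Deployment (replica count and rolling update strategy) and a Service (load balancing and a stable DNS name). The sketch below generates both as JSON from Python. It is a hedged illustration: the name `my-app`, the image `my-app:1.0`, the labels, and the port numbers are hypothetical, and automated scaling would need a separate HorizontalPodAutoscaler object that isn't shown here.

```python
import json
from typing import List


def deployment_and_service(name: str = "my-app", image: str = "my-app:1.0",
                           replicas: int = 3, port: int = 8000) -> List[dict]:
    """Build a Deployment and a Service that selects the Deployment's pods."""
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            # Rolling update: add at most 1 extra pod, never drop below `replicas`.
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }]},
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # Traffic sent to the Service is spread across all pods with these labels.
            "selector": labels,
            "ports": [{"port": 80, "targetPort": port}],
        },
    }
    return [deployment, service]


if __name__ == "__main__":
    # kubectl accepts a v1 "List" wrapper, so both objects can be applied at once.
    print(json.dumps({"apiVersion": "v1", "kind": "List",
                      "items": deployment_and_service()}, indent=2))
```

After applying this, other pods in the same namespace could reach the app at `http://my-app` (port 80) through cluster DNS, and re-applying it with a new `image` tag would trigger a rolling update in which Kubernetes starts one new pod before terminating an old one.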

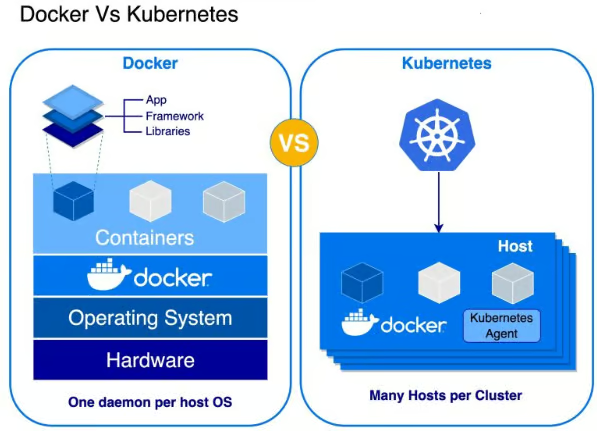

Kubernetes vs Docker: Core Differences

We now have a better understanding of Docker and Kubernetes, so it’s time to highlight their main differences:

1. Purpose and function

Docker and Kubernetes solve different problems in the containerization process. Docker is used for building, shipping, and running containers—it provides the means to create isolated environments for applications.

On the other hand, Kubernetes focuses on orchestrating containers, meaning it helps manage, scale, and ensure the smooth operation of large collections of containers.

2. Container management

Docker manages individual containers, whereas Kubernetes manages multiple containers across clusters.

Docker provides basic orchestration capabilities through Docker Compose and Docker Swarm, but Kubernetes takes orchestration to the next level, handling complex scenarios involving thousands of containers.

3. Application orchestration

Regarding advanced orchestration, Kubernetes provides features such as self-healing, load balancing, automated rollouts, and scaling.

Docker Swarm is Docker's own orchestration tool, but Kubernetes has emerged as the preferred solution for orchestrating large-scale, complex environments due to its advanced capabilities and broader ecosystem support.

Docker vs Kubernetes. Image source: Alex Xu / ByteByteGo

Use Cases for Docker

With the previous information about Docker in mind, here are some of the most common use cases for it:

1. Local development and testing

Docker is invaluable for local development. Developers can create containerized environments that mimic production settings, ensuring consistent behavior across the software development lifecycle.

2. Lightweight applications

Docker is an excellent choice for simpler use cases that don't require orchestration. Its simplicity shines in scenarios such as running small-scale applications or deploying standalone services.

3. CI/CD pipelines

Docker is widely used in Continuous Integration and Continuous Deployment (CI/CD) pipelines. It ensures that every step, from building the code to testing it, runs in a consistent, reproducible environment, leading to fewer surprises in production.

Use Cases for Kubernetes

Kubernetes is most commonly used in the following scenarios:

1. Managing large-scale containerized applications

Kubernetes shines in large-scale environments. It can manage thousands of containers across many nodes in a distributed cluster. Organizations like Spotify and Airbnb use Kubernetes to keep their complex microservices-based applications running smoothly.

2. Automated scaling and resilience

Kubernetes can automatically scale the number of pod replicas based on observed metrics such as CPU utilization, responding dynamically to fluctuating demand. Additionally, Kubernetes has built-in self-healing mechanisms: it restarts failed containers and reschedules pods from unresponsive nodes onto healthy ones to maintain application uptime.
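Kubernetes' Horizontal Pod Autoscaler (HPA) is built around a simple documented rule: desired replicas = ceil(current replicas × current metric ÷ target metric), skipped when the ratio is within a tolerance of 1.0 (0.1 by default). The function below is a minimal sketch of just that rule, with a hypothetical name and default bounds; it deliberately ignores the real controller's stabilization windows, pod readiness handling, and missing-metric logic.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, min_replicas: int = 1,
                         max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Core HPA scaling rule: ceil(current * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    # Skip scaling when the ratio is already close enough to 1.0.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (minReplicas / maxReplicas on the HPA object).
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(hpa_desired_replicas(4, current_metric=90, target_metric=60))  # 6
    print(hpa_desired_replicas(3, current_metric=20, target_metric=60))  # 1
    print(hpa_desired_replicas(5, current_metric=63, target_metric=60))  # 5
```

For example, 4 pods averaging 90% CPU against a 60% target gives a ratio of 1.5, so the HPA would aim for 6 pods; at 63% the ratio of 1.05 falls inside the tolerance and nothing changes.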

3. Microservices architecture

Kubernetes is ideal for managing microservices in production environments. Its ability to manage numerous services and their dependencies while facilitating communication between them makes it perfect for complex, distributed applications.

Can Kubernetes and Docker Work Together?

By now, it’s easy to see that Docker and Kubernetes are meant to work together.

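Kubernetes relies on a container runtime on each node to run individual containers. Docker Engine was historically used for this through a component called dockershim, which was removed in Kubernetes v1.24; clusters now typically use CRI-compatible runtimes such as containerd or CRI-O. Because Docker produces standard OCI images, images built with Docker still run on these runtimes unchanged. So while Kubernetes and Docker have distinct roles, they work really well together: Docker builds and runs containers, while Kubernetes orchestrates those containers across clusters.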

Docker Swarm vs Kubernetes

Docker Swarm is Docker's native orchestration tool, suitable for simpler, less demanding environments.

Kubernetes, however, has become the industry standard for container orchestration due to its richer feature set, scalability, and strong community support. While Docker Swarm is easier to set up, Kubernetes offers more sophisticated orchestration features and flexibility.

Choosing Between Kubernetes and Docker

In summary, when should one choose Docker, Kubernetes, or both? Here are some general guidelines to help you decide.

When to use Kubernetes

Kubernetes is ideal for managing complex, large-scale environments. If you're building a microservices architecture or need to scale applications dynamically with minimal downtime, it's the preferred choice. Its ability to orchestrate distributed systems makes it an industry standard for larger, more complex deployments.

When to use Docker

Docker is well-suited for developing small, standalone applications or environments where orchestration is unnecessary. When working on a personal project, local development, or managing lightweight apps without needing to scale across multiple nodes, Docker provides everything you need.

When to use Kubernetes and Docker together

As mentioned, Kubernetes and Docker can (and should) also be used together in certain situations.

For example, developers often use Docker to containerize applications during development and then deploy and orchestrate those containers with Kubernetes in production. This workflow allows teams to leverage Docker's ease of use for development and Kubernetes' advanced features for orchestration.

Want to show the world your Docker skills? If you are ready for certification, check out this Free Complete Docker Certification (DCA) Guide for 2024.

Conclusion

Kubernetes and Docker are both essential tools for containerization, but they serve different purposes.

Docker makes it easy to create and run containers, making it perfect for local development and lightweight applications. On the other hand, Kubernetes is a robust platform for orchestrating those containers at scale, making it indispensable for managing complex, distributed environments.

Ultimately, the right choice depends on your project's needs: small-scale development environments benefit from Docker alone, while large-scale production systems usually need an orchestrator such as Kubernetes. In many cases the two tools complement each other, providing a comprehensive approach to building and deploying modern applications.

If you're ready to advance your skills, check out Introduction to Kubernetes and Intermediate Docker on DataCamp to deepen your understanding and practical expertise.


FAQs

What is the role of Docker Compose, and how does it differ from Kubernetes?

Docker Compose is a tool for defining and running multi-container Docker applications on a single host. It’s ideal for local development and simple deployments but lacks the scaling, self-healing, and orchestration capabilities that Kubernetes provides. Kubernetes, on the other hand, is designed to handle multi-container applications at scale across clusters of machines.
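For a feel of what Compose describes, here is a small, hypothetical two-service setup expressed as a Python dict: the app built from the local Dockerfile plus a Redis container. The service names, the `redis:7` image, and the port mapping are assumptions for illustration. Compose files are normally written in YAML; since JSON is, for practical purposes, valid YAML, the printed output can be saved as `compose.yaml` and started on a single host with `docker compose up`.

```python
import json


def compose_config() -> dict:
    """A two-service Compose setup: the app built from ./Dockerfile plus Redis."""
    return {
        "services": {
            "web": {
                "build": ".",              # build the image from the local Dockerfile
                "ports": ["8000:8000"],    # HOST:CONTAINER, as with docker run -p
                "depends_on": ["redis"],   # start redis before web (not a readiness check)
            },
            "redis": {"image": "redis:7"},  # hypothetical second service
        }
    }


if __name__ == "__main__":
    print(json.dumps(compose_config(), indent=2))
```

Note what is missing compared to the Kubernetes objects: there is no replica controller, rolling update strategy, or multi-node scheduling, which is exactly the gap this FAQ answer describes.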

Can Docker Swarm be used as an alternative to Kubernetes for orchestration?

Yes, Docker Swarm can orchestrate containers and provides native clustering capabilities for Docker containers. However, it’s simpler and lacks the advanced features, scalability, and ecosystem that Kubernetes offers. Kubernetes is generally preferred for production-level deployments, while Docker Swarm may be sufficient for smaller, simpler projects.

How does the learning curve of Kubernetes compare to that of Docker?

Docker has a gentler learning curve, as it focuses on containerization basics and is relatively easy to set up and manage on a single system. Kubernetes, however, has a steeper learning curve due to its complex features like cluster management, scaling, and networking. It’s recommended to start with Docker fundamentals before diving into Kubernetes.

Are there any performance differences between using Docker and Kubernetes?

Docker containers are lightweight and run efficiently on a single host, making them suitable for applications that require minimal resource overhead. Kubernetes introduces additional resource consumption for managing the cluster, which can be heavier on system resources compared to standalone Docker. However, in large-scale applications where reliability and scalability are priorities, the benefits of Kubernetes’ orchestration often outweigh this overhead.