
Containerization has become a cornerstone of application deployment. It is a technology that enables the packaging of an application and its dependencies into a single, lightweight, and portable unit called a container. Containerization is often used to break applications into smaller, manageable services (microservices).

A container is a running instance of an image. An image is an executable package that includes everything needed to run a piece of software, such as the application code, runtime, libraries, environment variables, and dependencies.

Containers allow us to build applications once and run them anywhere, ensuring consistency across development, testing, and production environments. But how do we manage, scale, and deploy containerized applications easily? That is the job of a container orchestration tool.

Two well-known AWS container orchestration tools are the Elastic Container Service (ECS) and Elastic Kubernetes Service (EKS). In this article, we will explore Amazon ECS and EKS to compare their ease of use, scalability, flexibility, and cost to help you decide which would better fit your use case.

What is Container Orchestration?

Container orchestration is the automated management of containers throughout their lifecycle. It allocates containers to run on available resources (e.g., a server), restarts them if they crash, and more. Container orchestrators can also be configured to load balance applications across multiple servers, ensuring high availability and scaling them horizontally depending on traffic.
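To make the placement part of this concrete, here is a minimal sketch of first-fit scheduling in Python: each pending container goes to the first server with enough free CPU and memory. The Server and Container classes and the first-fit rule are illustrative assumptions; real orchestrators, such as the Kubernetes scheduler or ECS task placement strategies, apply much richer filtering and scoring.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    """A host with free CPU (CPU units) and free memory (MiB)."""
    name: str
    free_cpu: int
    free_mem: int
    containers: list = field(default_factory=list)


@dataclass
class Container:
    """A container's resource request."""
    name: str
    cpu: int
    mem: int


def place(pending, servers):
    """First-fit placement: assign each container to the first server with room.

    Reserves the container's CPU and memory on the chosen server and
    returns the names of containers that did not fit anywhere.
    """
    unplaced = []
    for c in pending:
        for s in servers:
            if s.free_cpu >= c.cpu and s.free_mem >= c.mem:
                s.free_cpu -= c.cpu
                s.free_mem -= c.mem
                s.containers.append(c.name)
                break
        else:  # no server had enough free capacity
            unplaced.append(c.name)
    return unplaced


servers = [Server("server-a", 1024, 2048), Server("server-b", 1024, 2048)]
pending = [Container(f"web-{i}", 512, 1024) for i in range(1, 4)]
print(place(pending, servers))           # []
print([s.containers for s in servers])   # [['web-1', 'web-2'], ['web-3']]
```

First-fit packs server-a before touching server-b; a "spread" policy would instead favor the least-loaded server, trading density for resilience.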

Orchestration helps containers and microservices work cohesively in distributed environments—whether across multiple servers, on-premises, or in the cloud. Tools like Kubernetes, Amazon ECS, and Docker Swarm simplify the management of complex modern applications, enhancing their reliability and operational efficiency.

AWS Cloud Practitioner

What is ECS?

ECS is Amazon’s proprietary managed container orchestration service. It provides a simple and secure way to run containerized applications. Designed for simplicity, ECS reduces the number of decisions customers must make about compute, networking, and security configuration without sacrificing scale or features.

There are a few terms and components you need to be familiar with in ECS:

- Cluster

- Capacity provider

- Task definition

- Task

- Service

Cluster

An ECS cluster is a logical grouping of services, tasks, and capacity providers. Its control plane is managed by AWS and is not visible to us.

Capacity provider

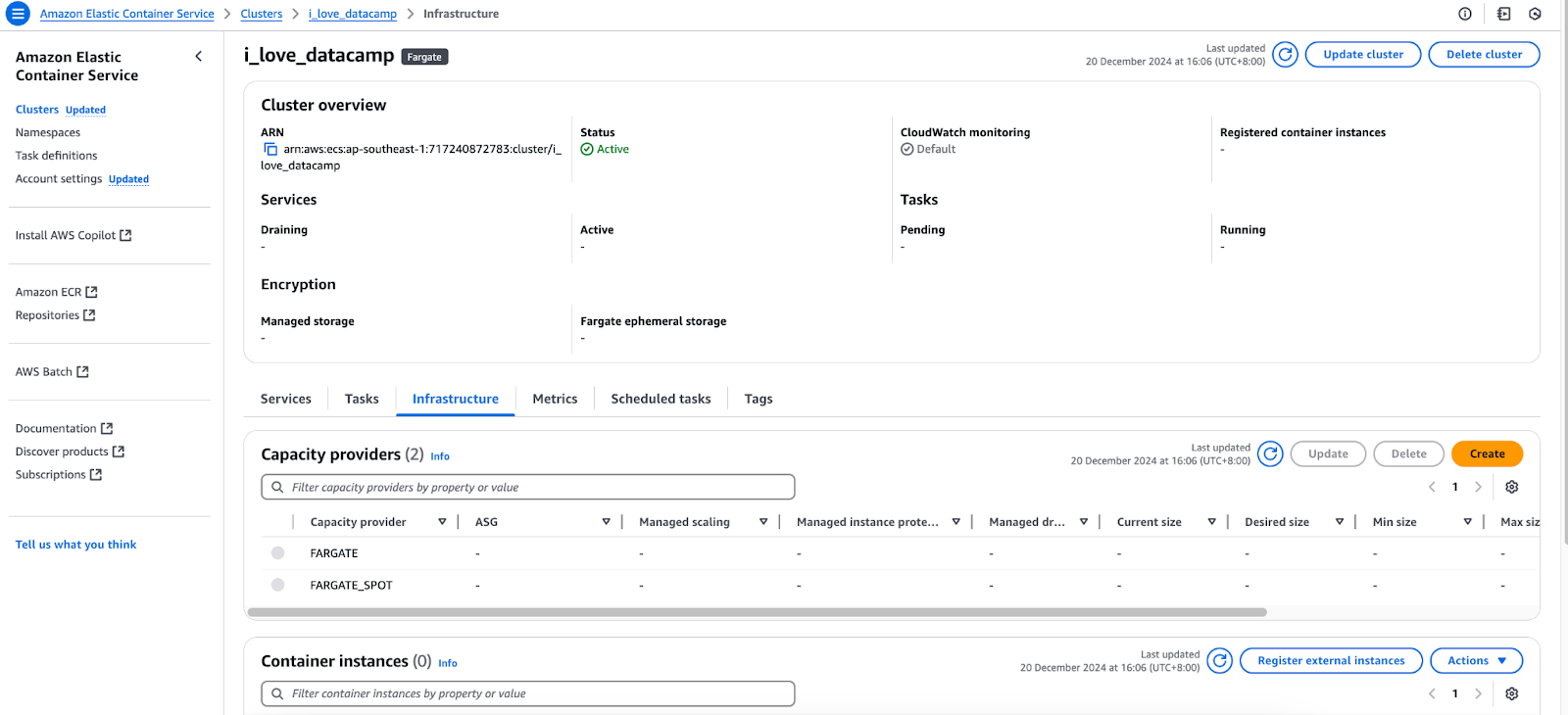

Containers need a server to run on, whether a virtual machine in the cloud or your local computer. In ECS, a capacity provider (or, more simply, the “Launch Type” setting) determines whether your containers run on Amazon Elastic Compute Cloud (EC2) instances or on AWS Fargate.

Infrastructure tab of AWS ECS console.

Task

A task is a running set of one or more containers configured according to a task definition. It is an instance of a task definition, much as a container is an instance of an image.

Task definition

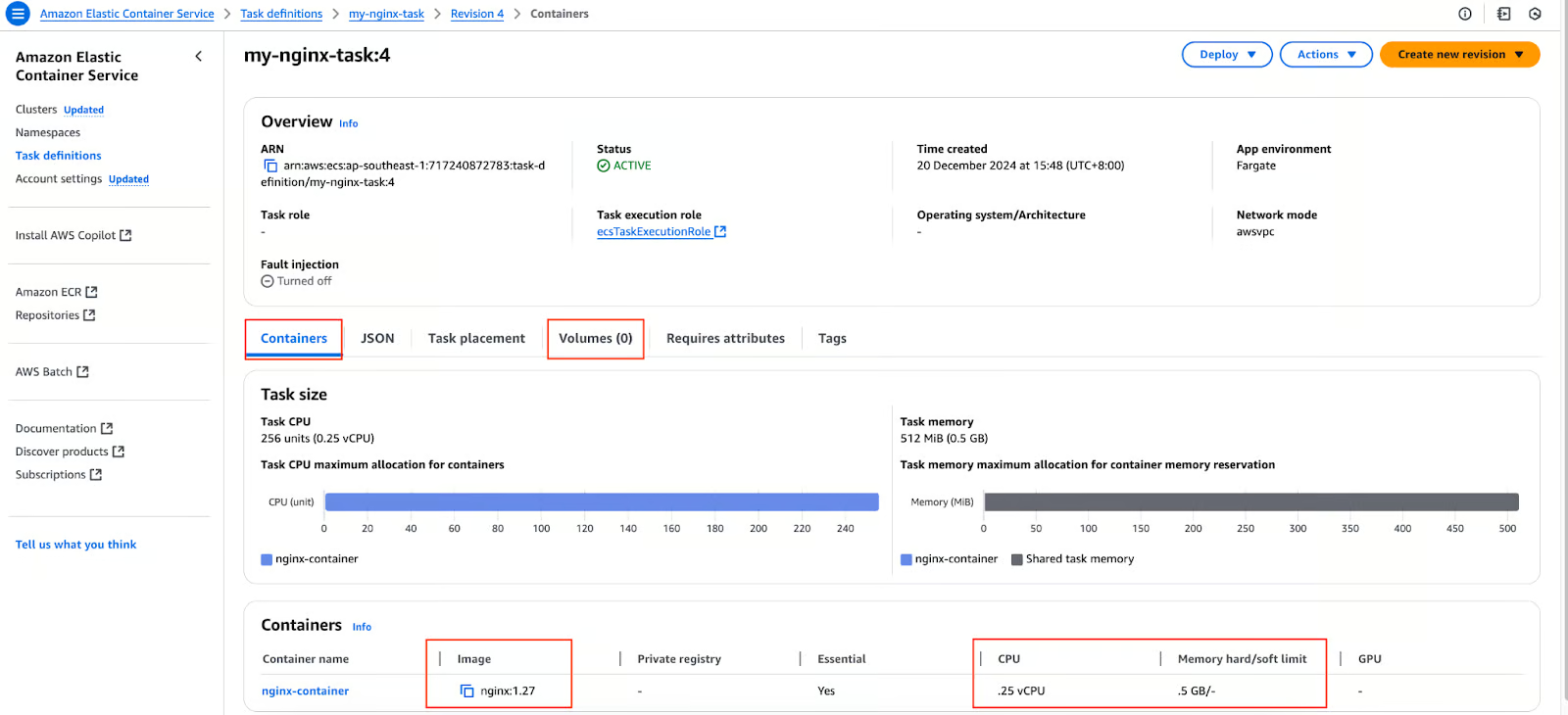

Suppose we have written a Dockerfile for our application, built an image from it, and pushed the image to a container registry such as Docker Hub or Amazon ECR. Now what? Next, we create a task definition: a configuration, or blueprint, for our containers (to be deployed on an ECS cluster) that includes the following information:

- Image(s). A task definition can describe more than one container, each with its own image.

- The port number that will be used to communicate with our application.

- Volumes.

- Resources (CPU and memory) to reserve for our applications.

Task definition in AWS ECS console.
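The same information can be expressed as the JSON accepted by ECS's RegisterTaskDefinition API. The Python sketch below builds a minimal Fargate task definition for the Nginx image used later in this article. The family name nginx-demo and the container name nginx are hypothetical, and the CPU/memory table covers only the three smallest Fargate task sizes.

```python
import json

# Fargate task-level CPU (CPU units) -> allowed memory (MiB).
# Only the three smallest CPU sizes are listed; larger sizes exist.
FARGATE_MEMORY_OPTIONS = {
    256: {512, 1024, 2048},
    512: {1024, 2048, 3072, 4096},
    1024: set(range(2048, 8192 + 1, 1024)),
}


def nginx_task_definition(family="nginx-demo", cpu=256, memory=512):
    """Build a minimal Fargate task definition for a single Nginx container."""
    if memory not in FARGATE_MEMORY_OPTIONS.get(cpu, set()):
        raise ValueError(f"unsupported Fargate CPU/memory pair: {cpu}/{memory}")
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # Fargate tasks use awsvpc networking
        "cpu": str(cpu),          # task-level sizes are given as strings
        "memory": str(memory),
        "containerDefinitions": [
            {
                "name": "nginx",
                "image": "nginx:1.27",
                "essential": True,
                "portMappings": [{"containerPort": 80, "protocol": "tcp"}],
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(nginx_task_definition(), indent=2))
```

Saving the printed JSON to a file such as taskdef.json would let you register it with aws ecs register-task-definition --cli-input-json file://taskdef.json.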

Service

What happens when we run a stand-alone container and it crashes? Nothing: it simply stays stopped. The same applies to a stand-alone task on ECS. To keep our applications up, we use an ECS service, which ensures that a desired number of tasks is running at all times.

If a task crashes or the underlying server hosting it (and therefore the container) goes down, the service will create a new task in an available capacity provider.
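Conceptually, a service runs a control loop that compares the desired task count with what is actually running. The sketch below shows one pass of such a loop under simplifying assumptions (tasks are just IDs with a RUNNING or STOPPED status, and replacement IDs are random); it is not how the ECS service scheduler is implemented, which also handles placement, health checks, and deployments.

```python
import uuid


def reconcile(desired_count, tasks):
    """One pass of a desired-count control loop, in the spirit of an ECS service.

    `tasks` maps a task ID to its status ("RUNNING" or "STOPPED").
    Stopped tasks are dropped, surplus running tasks are stopped, and
    replacement tasks are started until `desired_count` are running.
    Returns the new mapping of task ID -> status.
    """
    running = [tid for tid, status in tasks.items() if status == "RUNNING"]
    # Keep at most desired_count of the existing running tasks.
    new_state = {tid: "RUNNING" for tid in running[:desired_count]}
    # Launch replacements for crashed or missing tasks (hypothetical IDs).
    while len(new_state) < desired_count:
        new_state[f"task-{uuid.uuid4().hex[:8]}"] = "RUNNING"
    return new_state


# task-b crashed, so the loop keeps task-a and task-c and starts one replacement.
state = reconcile(3, {"task-a": "RUNNING", "task-b": "STOPPED", "task-c": "RUNNING"})
print(len(state), "task-a" in state, "task-b" in state)  # 3 True False
```

A real orchestrator runs this comparison continuously, which is why a crashed task is replaced without anyone intervening.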

Implementation of ECS

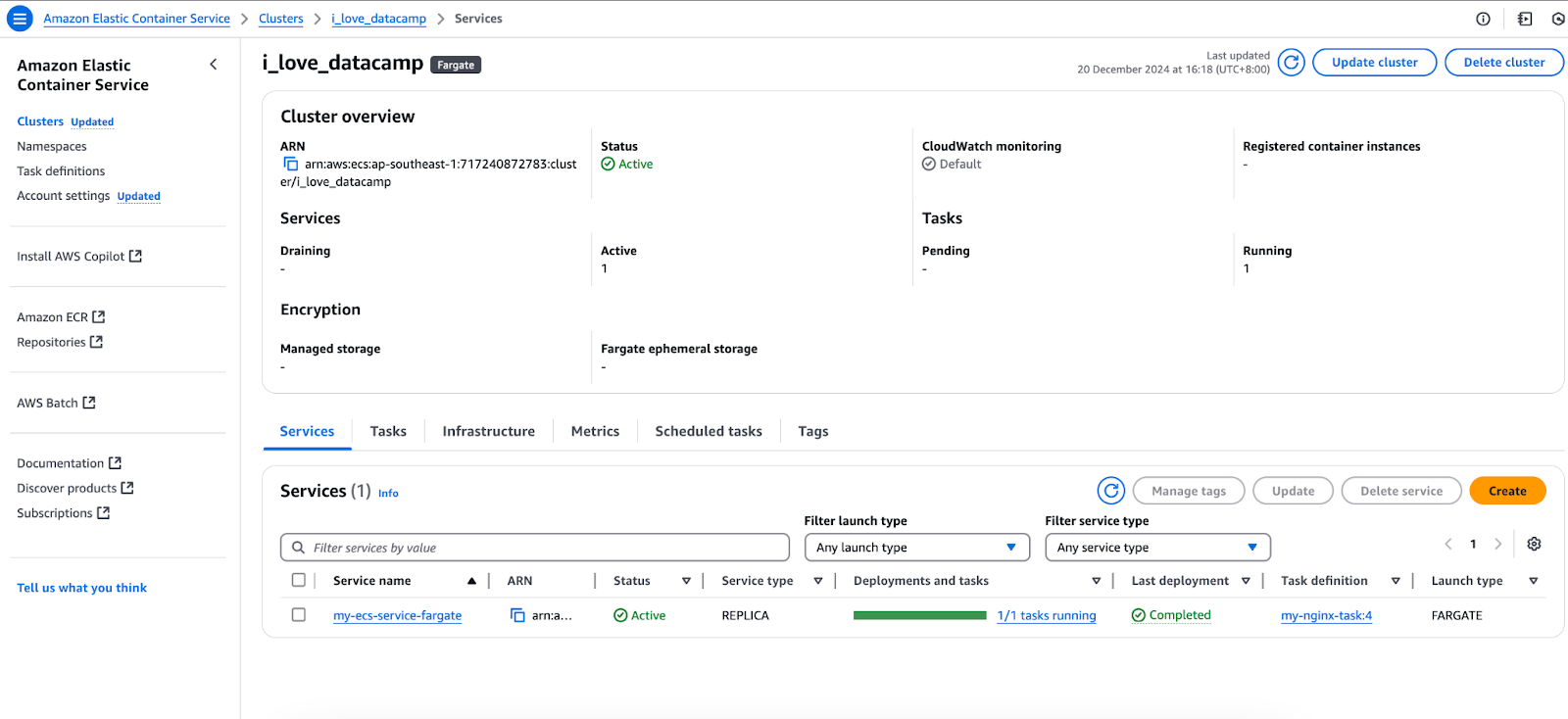

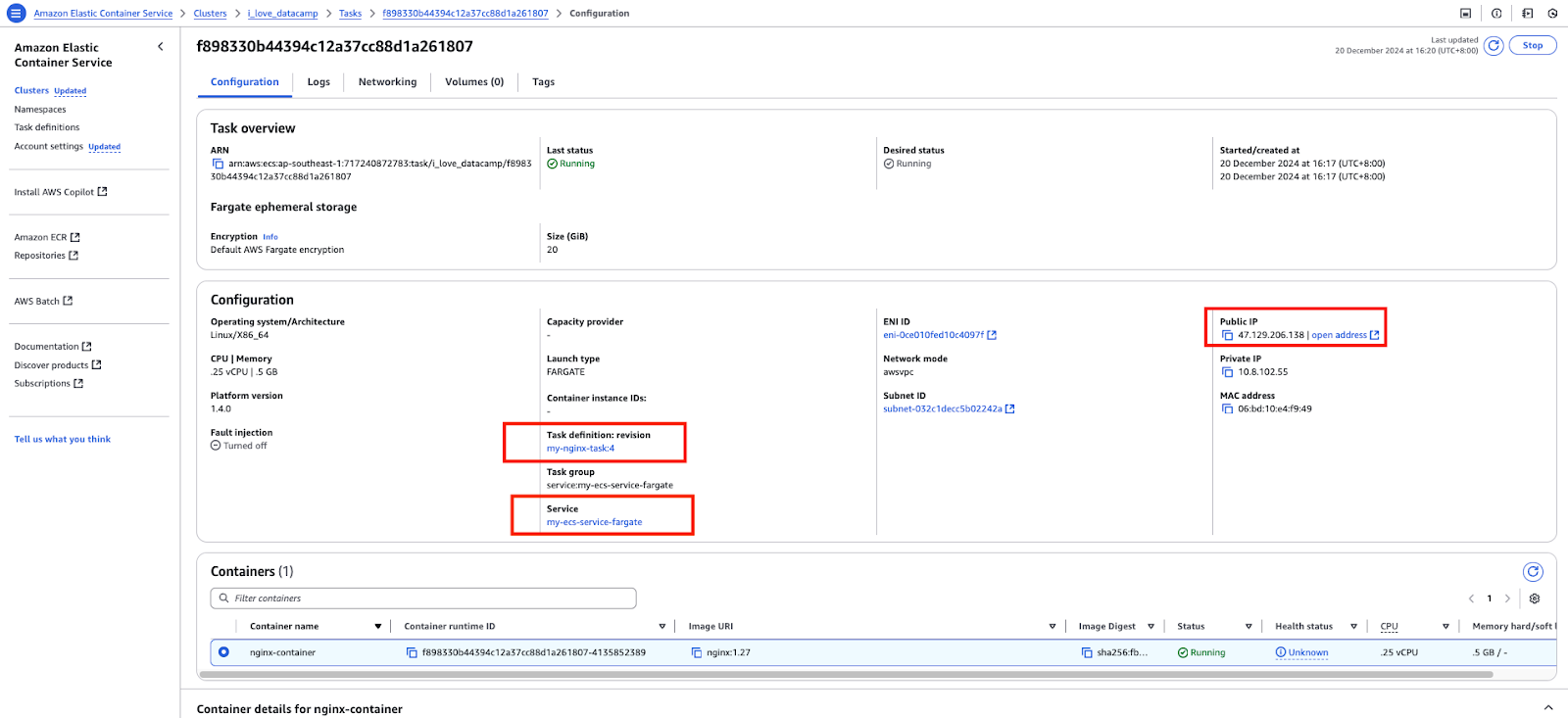

Now that we have covered the basics of ECS, let's run a simple Nginx image and access it. Using the ECS cluster and task definition above, I will create a service that references the task definition:

Services in AWS ECS console.

This service will, in turn, create a task.

A task in AWS ECS console.

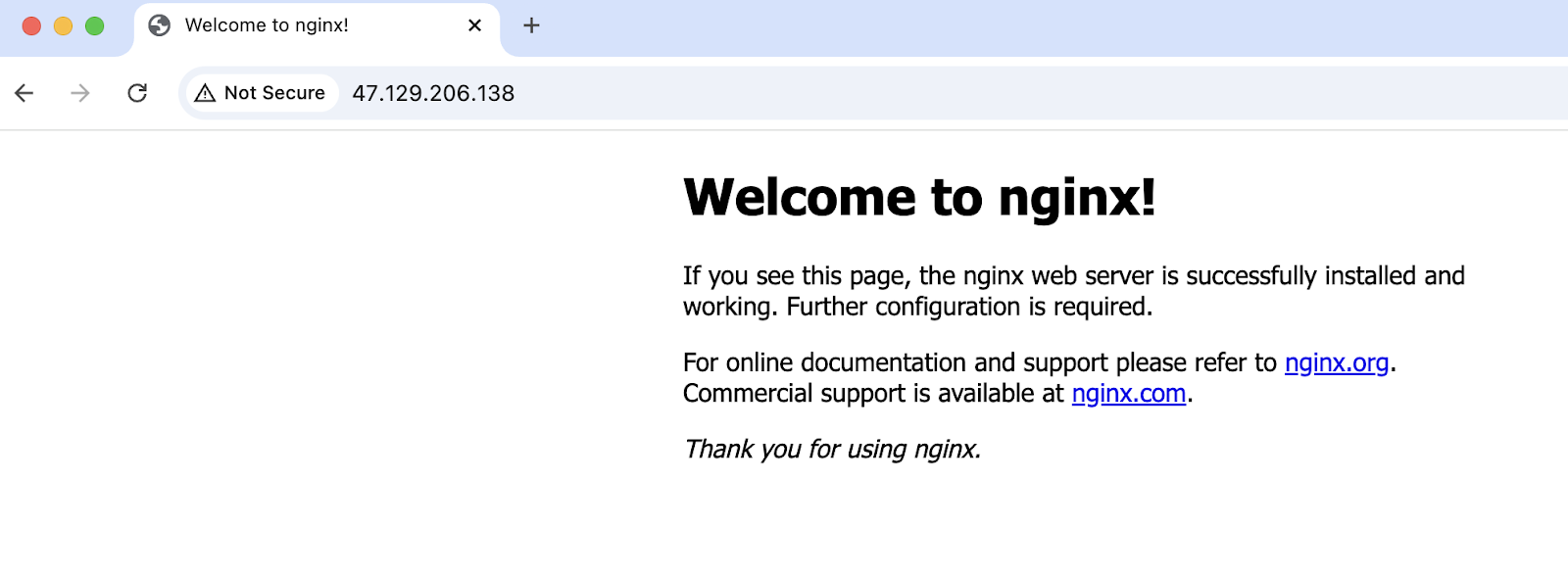

Accessing the public IP address of the task:

Successfully accessed application running on ECS.
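Instead of using the console, the task's public IP can also be looked up programmatically: a task using the awsvpc network mode gets an elastic network interface (ENI), and any public IP is associated with that ENI. The sketch below assumes boto3-style ECS and EC2 clients (describe_tasks and describe_network_interfaces) and the response shapes as we understand them; the cluster name and task ARN in the commented example are placeholders.

```python
def eni_id_from_task(describe_tasks_response):
    """Return the ENI ID of the first task in an ECS DescribeTasks response."""
    task = describe_tasks_response["tasks"][0]
    for attachment in task.get("attachments", []):
        for detail in attachment.get("details", []):
            if detail.get("name") == "networkInterfaceId":
                return detail["value"]
    raise LookupError("no ENI attachment found (is the task using awsvpc networking?)")


def task_public_ip(ecs_client, ec2_client, cluster, task_arn):
    """Look up a task's public IPv4 address using boto3-style ECS and EC2 clients."""
    response = ecs_client.describe_tasks(cluster=cluster, tasks=[task_arn])
    eni_id = eni_id_from_task(response)
    interfaces = ec2_client.describe_network_interfaces(NetworkInterfaceIds=[eni_id])
    # "Association" only exists when a public IP was assigned to the ENI.
    association = interfaces["NetworkInterfaces"][0].get("Association")
    if association is None:
        raise LookupError(f"{eni_id} has no public IP (was assignPublicIp enabled?)")
    return association["PublicIp"]


# Example (needs boto3 and AWS credentials; "<cluster>" and "<task-arn>" are placeholders):
#   import boto3
#   print(task_public_ip(boto3.client("ecs"), boto3.client("ec2"), "<cluster>", "<task-arn>"))
```

Passing the clients in as parameters keeps the lookup logic testable without AWS credentials.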

What is EKS?

Before diving into Elastic Kubernetes Service (EKS), it’s essential to understand what Kubernetes is. Kubernetes is an open-source container orchestration engine that automates the deployment, scaling, and management of containerized applications.

To learn more, I recommend checking out the Introduction to Kubernetes course.

However, bootstrapping and managing a Kubernetes cluster from scratch can be complex and time-consuming. Many components and configurations required to set up Kubernetes are similar across organizations and teams. To abstract this undifferentiated heavy lifting and simplify Kubernetes adoption, AWS developed EKS.

EKS is a managed Kubernetes service provided by AWS that handles much of the operational overhead. It simplifies Kubernetes deployment, scaling, and management by handling the control plane on behalf of users. With these components abstracted away, users can focus on running their containerized workloads, either on worker nodes they manage or on AWS Fargate for serverless compute.

This section briefly discusses EKS. For more, see the article Fundamentals of Container Orchestration With AWS Elastic Kubernetes Service (EKS).

There are a few terms and components you need to be familiar with in EKS:

- Cluster (master and worker nodes)

- Manifest files

- Pods

- ReplicaSet

- kubectl command line utility

Cluster

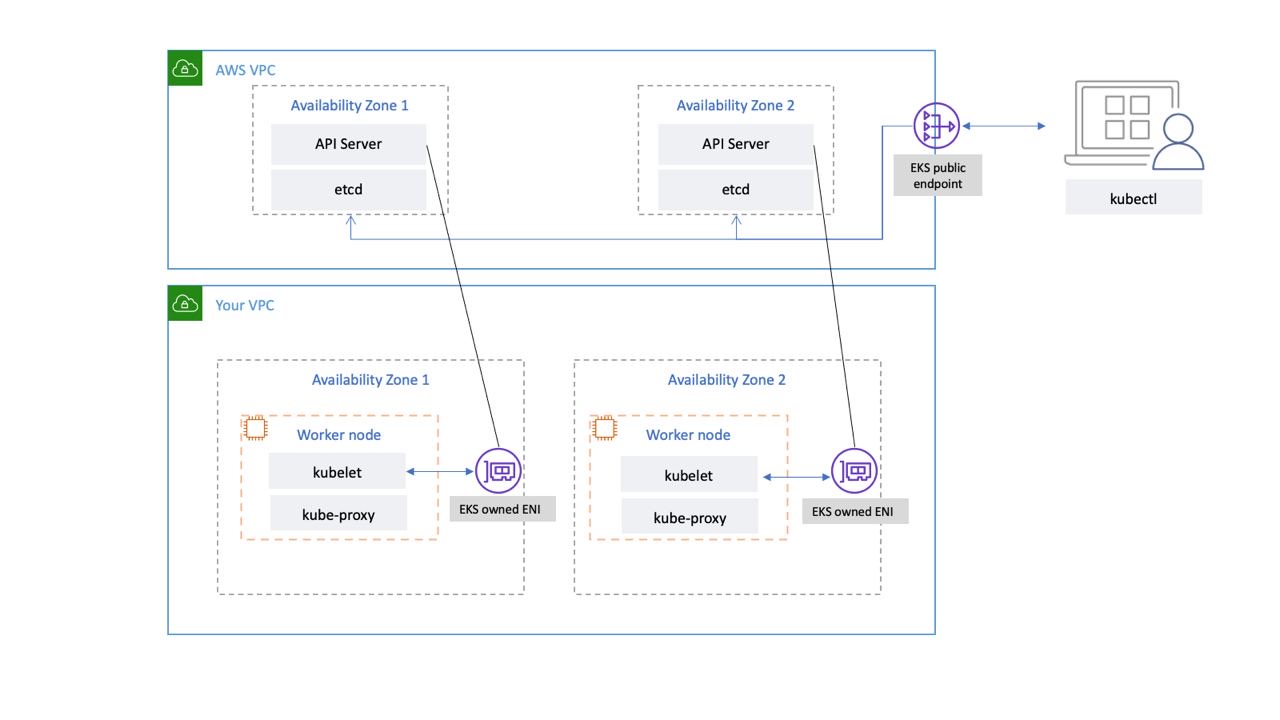

A Kubernetes cluster is a group of servers or virtual machines on which the Kubernetes workloads run. It consists of the master node (commonly referred to as the control plane) and worker nodes.

In EKS, the master node (control plane) is managed by AWS in its own VPC and is abstracted from us. For worker nodes, we can either manage EC2 instances ourselves or go serverless with Fargate.

EKS control plane network connectivity. Image source: AWS

Pods

A pod is a Kubernetes API resource and the smallest deployable unit in Kubernetes. It groups one or more containers and specifies the image to use, ports, commands, and so on.

Resources tab in the AWS EKS console.

ReplicaSet

Like a pod, a ReplicaSet is a Kubernetes API resource. It ensures that a desired number of pods, identified by their labels, is running at all times.
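ReplicaSets are typically declared in a manifest file rather than created imperatively. The Python sketch below builds such a manifest as a dictionary (kubectl also accepts JSON manifests) and shows how a label selector decides which pods count toward the ReplicaSet's replicas. The name nginx-rs and both helper functions are hypothetical, and the matching ignores details such as owner references.

```python
import json


def replicaset_manifest(name, image, replicas, labels):
    """Build an apps/v1 ReplicaSet manifest as a dict.

    The selector's matchLabels must match the pod template's labels;
    the API server rejects a ReplicaSet whose selector does not.
    """
    return {
        "apiVersion": "apps/v1",
        "kind": "ReplicaSet",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


def selector_matches(match_labels, pod_labels):
    """True if every selector label appears on the pod with the same value."""
    return all(pod_labels.get(k) == v for k, v in match_labels.items())


rs = replicaset_manifest("nginx-rs", "nginx:1.27", 2, {"app": "nginx"})
print(json.dumps(rs, indent=2))  # could be saved as replicaset.json for kubectl apply -f

# Only pods carrying app=nginx count toward this ReplicaSet's 2 replicas.
pods = [{"app": "nginx"}, {"app": "nginx", "tier": "web"}, {"app": "redis"}]
print(sum(selector_matches({"app": "nginx"}, p) for p in pods))  # 2
```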

Manifest files

Kubernetes manifest files are configuration files used to define the desired state of Kubernetes resources in a declarative manner. These files describe what resources should exist in a Kubernetes cluster, their configurations, and how they interact with one another.

Manifest files are typically written in YAML:

```yaml
# pod.yml - Pod manifest
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx
spec:
  containers:
    - name: nginx-container
      image: nginx:1.27
      ports:
        - containerPort: 80
```

```yaml
# service.yml - Kubernetes Service manifest
apiVersion: v1
kind: Service
metadata:
  name: nginx-node-port-service
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
    - protocol: TCP
      port: 8080
      targetPort: 80
      nodePort: 31000
```

kubectl

kubectl is the command-line utility for communicating with a Kubernetes cluster. We can use it to create, manage, and delete Kubernetes resources imperatively, or together with manifest files declaratively.

```shell
# Create a pod that runs a container with the nginx:1.27 image
kubectl run nginx-pod --image=nginx:1.27

# Create a Deployment whose ReplicaSet keeps 2 pods running
# (kubectl has no "create replicaset" subcommand)
kubectl create deployment nginx-deployment --image=nginx:1.27 --replicas=2
```

Implementation of EKS

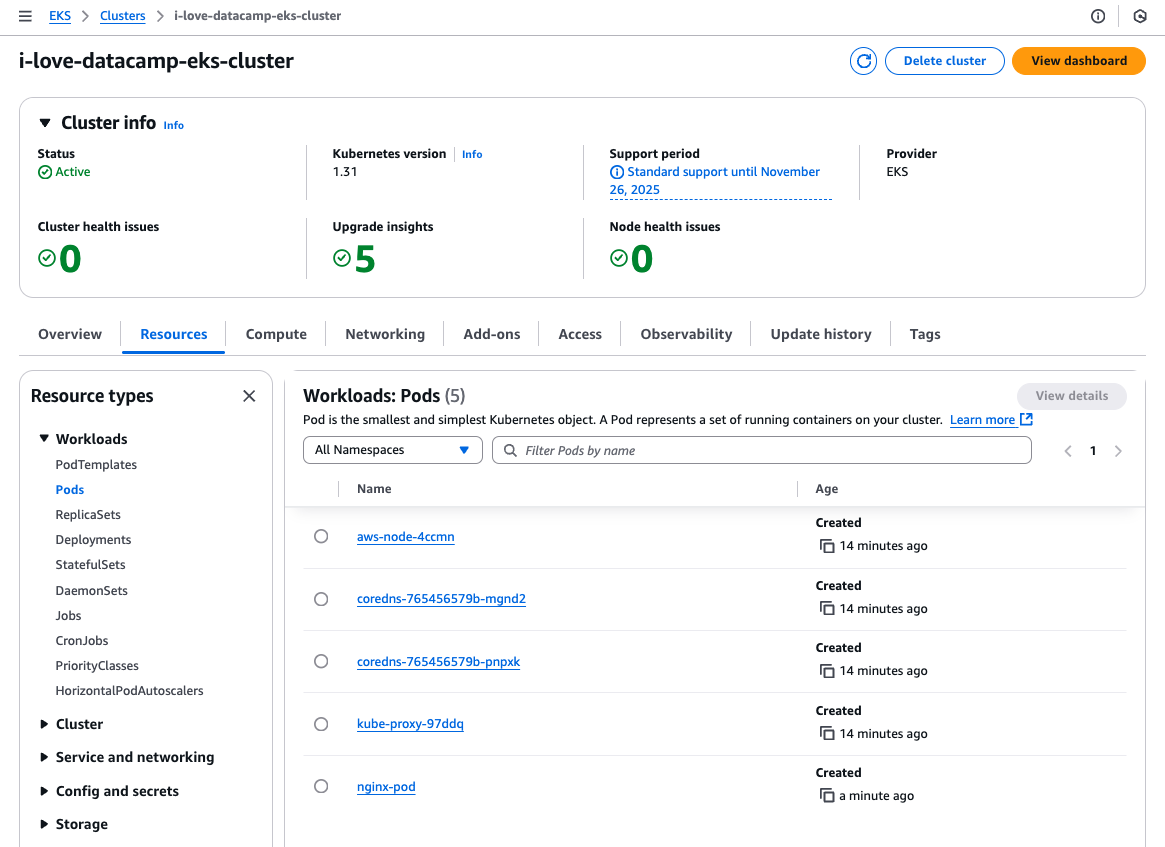

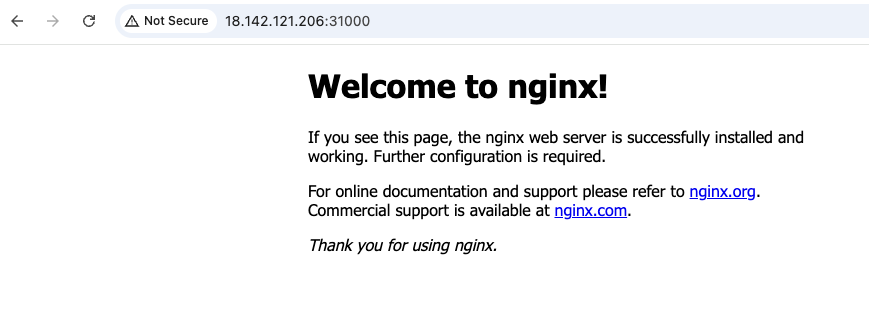

Let’s now demonstrate how EKS works by running and accessing the same Nginx image.

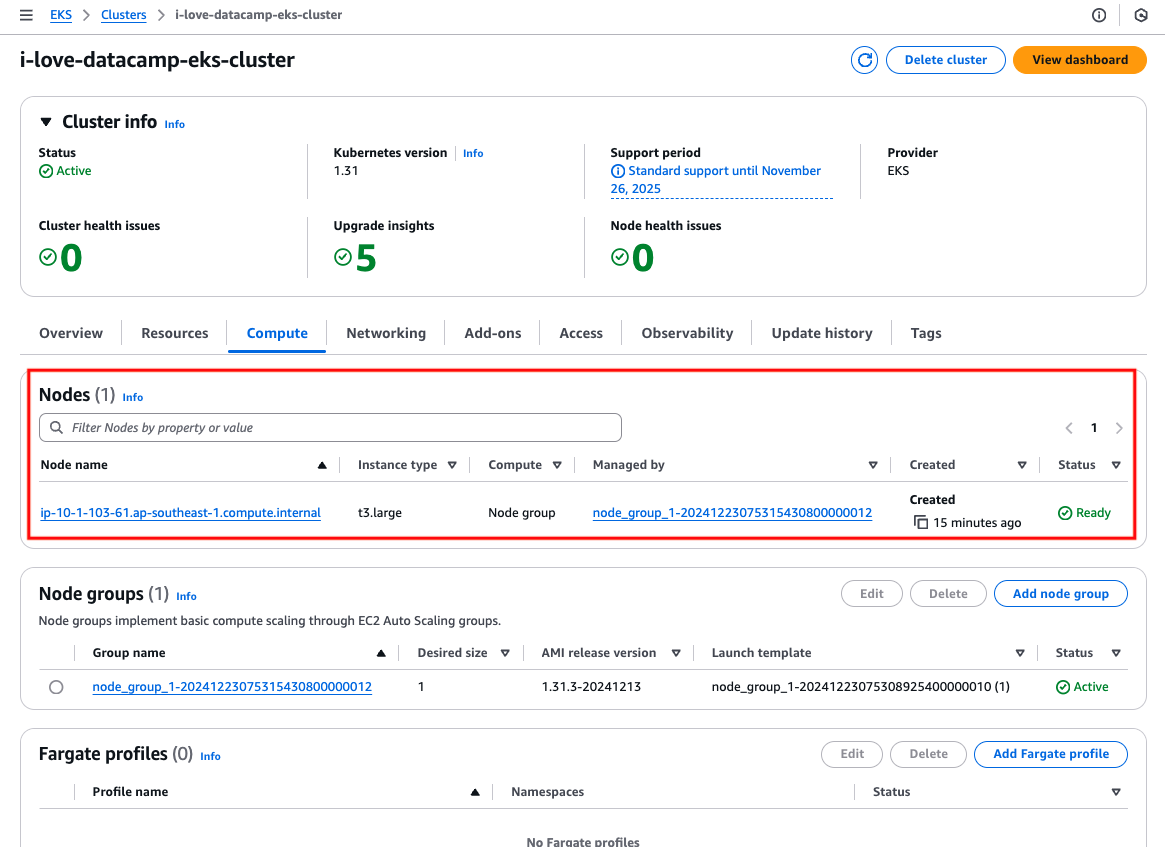

To keep it simple, I will create an EC2 instance/worker node in a public subnet, deploy the application there, expose it via a Kubernetes service resource of type NodePort, and access it via the instance's public IP.

I will use the pod and Service manifests above with kubectl to create the pod and the Service:

```shell
# Update the local .kube/config file
aws eks update-kubeconfig --region ap-southeast-1 --name i-love-datacamp-eks-cluster

# Create the pod
kubectl create -f pod.yml

# Create the service
kubectl create -f service.yml
```

The EKS cluster has one EC2 instance:

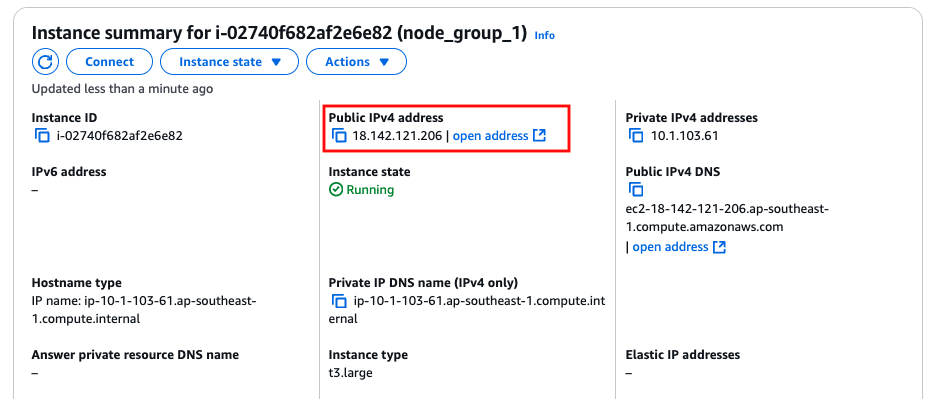

EC2 instance/worker node.

Locate the public IPv4 address of the instance.

The public IP address of EC2 instance/ worker node.

Accessing the public IP address of the instance on port 31000:

Successfully accessed application running on EKS.
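The service.yml manifest wires three ports together: traffic reaching a worker node on nodePort 31000 (or the Service's cluster IP on port 8080) is forwarded to port 80 on the pod. The sketch below spells out that mapping and checks the nodePort against the default NodePort range of 30000-32767 (configurable on the API server via --service-node-port-range). The function name is hypothetical. Note also that, for the browser test above to work, the worker node's security group must allow inbound TCP traffic on port 31000.

```python
# Default NodePort range of the Kubernetes API server.
NODE_PORT_RANGE = range(30000, 32768)


def nodeport_paths(service_ports):
    """Describe where traffic enters and where it lands for each NodePort Service port."""
    paths = []
    for p in service_ports:
        if p["nodePort"] not in NODE_PORT_RANGE:
            raise ValueError(f"nodePort {p['nodePort']} is outside 30000-32767")
        paths.append(
            f"<node-ip>:{p['nodePort']} and <cluster-ip>:{p['port']} -> pod:{p['targetPort']}"
        )
    return paths


# The ports section of service.yml
ports = [{"protocol": "TCP", "port": 8080, "targetPort": 80, "nodePort": 31000}]
print(nodeport_paths(ports))
# ['<node-ip>:31000 and <cluster-ip>:8080 -> pod:80']
```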

ECS vs. EKS: Similarities

Now that we have covered the basics of ECS and EKS, we can see some similarities between them. This is unsurprising, as they are both container orchestration tools.

Compute capacity

Both services allow applications to run on EC2, where users are responsible for maintaining, patching, and upgrading the EC2 instances, or on AWS Fargate, the serverless compute option where AWS manages the underlying servers, removing the need for infrastructure management.

Workload management model

Both services have similar structures and approaches to managing and maintaining application resources.

In AWS ECS, the configuration that defines how containers should run is called a task definition, while in AWS EKS, this is achieved using a pod manifest file. Once deployed, the containerized application in ECS runs within a task, whereas in EKS, it runs inside a pod. To ensure a specified number of tasks or pods is always running, ECS uses a service, while EKS relies on a ReplicaSet.

Deep integration with other AWS services

As container services on AWS, ECS and EKS are designed to integrate well with other AWS services.

For networking, Amazon VPC lets containers run in isolated environments with full control over networking configurations, security groups, and subnets, while load balancers route traffic to containers.

Storage services such as Amazon EBS, Amazon EFS, and Amazon S3 handle persistent storage requirements for containerized applications.

Both services use AWS IAM to control access and permissions granularly. This dictates which AWS resources the containerized application can access, such as S3, DynamoDB, or RDS.

Summary of key similarities

| | ECS | EKS |
|---|---|---|
| Compute capacity | EC2 or Fargate | EC2 or Fargate |
| Workload management model | Uses task definitions to define container configuration; tasks run within a service to maintain the desired count. | Uses pod manifest files to define container configuration; pods run within a ReplicaSet to maintain the desired count. |
| Deep integration with AWS | Integrates with VPC, load balancers, EBS, EFS, S3, and IAM for networking, storage, and access control. | Integrates with VPC, load balancers, EBS, EFS, S3, and IAM for networking, storage, and access control. |

ECS vs. EKS: Differences

Understanding the differences between Amazon ECS and Amazon EKS is important when deciding between them.

In this section, we will break down their core differences in ease of use, scalability, customization, and cost, helping you determine which service best aligns with your technical requirements and business goals.

Ease of use and learning curve

EKS is built on Kubernetes, so familiarity with Kubernetes concepts and tools is required, which makes its learning curve steeper than that of ECS. This includes understanding how the kubectl command works and other essential Kubernetes API resources, such as Services (not to be confused with the term “services” used in the context of ECS), ConfigMaps, and Ingress. The section “What is EKS” above barely scratches the surface of what EKS offers.

Both ECS and EKS require knowledge of AWS services such as CloudWatch, IAM, and Auto Scaling groups (ASGs). For ECS, that AWS knowledge is largely all you need; EKS also requires Kubernetes expertise. Thus, ECS wins on ease of use.

Scalability

Both services scale well when configured properly, but they scale differently. ECS supports autoscaling via ECS Service Auto Scaling for tasks and ECS Cluster Auto Scaling for instances, relying solely on AWS-native services such as CloudWatch metrics and ASG scaling policies.

EKS, by contrast, uses Kubernetes-native scaling features, such as the Horizontal Pod Autoscaler (HPA) for pods and the Cluster Autoscaler for instances. The Cluster Autoscaler is not installed on EKS clusters by default, so users must install it themselves. This adds complexity: because the Cluster Autoscaler calls AWS APIs to scale nodes in and out, it needs correct configuration on both AWS resources (e.g., IAM roles) and Kubernetes API resources (e.g., service accounts).

EKS Auto Mode, a recently launched feature, can address this added complexity. It offloads the management and scaling of EKS worker nodes to AWS, but at an additional cost.
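To illustrate the Kubernetes-native approach, the HPA's core scaling rule is documented as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default). The Python sketch below implements that rule plus min/max clamping; the min/max defaults are assumptions, and the real controller adds behaviors such as stabilization windows. ECS Service Auto Scaling's target tracking works through Application Auto Scaling and CloudWatch alarms and is not modeled here.

```python
import math


def hpa_desired_replicas(current_replicas, current_metric, target_metric,
                         min_replicas=1, max_replicas=10, tolerance=0.1):
    """Core Horizontal Pod Autoscaler rule.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped
    to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: no change
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 3 pods averaging 90% CPU against a 60% target -> scale out to 5.
print(hpa_desired_replicas(3, 90, 60))  # 5
```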

Flexibility and customization

As mentioned, ECS is Amazon’s proprietary managed container orchestration service designed for simplicity. AWS handles most cluster management tasks, offering predefined configurations that suit common container workloads but may not cater to niche requirements. While it reduces complexity, it limits customization options compared to Kubernetes.

EKS provides the full power of Kubernetes, enabling users to customize deployments, pod scheduling, storage classes, and networking policies to suit their needs. Advanced orchestration features, such as custom resource definitions (CRDs) and operators, allow unparalleled flexibility in application design.

EKS can also be integrated with various open-source tools and plugins from the Kubernetes ecosystem, such as Prometheus, Grafana, and Istio, enabling developers to build highly tailored solutions. Thus, EKS wins on flexibility and customization.

Cost

Both ECS and EKS incur the standard costs for networking resources (e.g., public IPv4 addresses, NAT gateways) and for compute and storage resources (e.g., EC2 instances, Fargate, and EBS volumes). Beyond these, ECS costs less and has a much more straightforward cost structure.

There are no charges for the ECS control plane. In other words, if you created an ECS cluster without any running tasks, you would not incur any cost. With EKS, by contrast, you pay for the AWS-managed control plane whether or not any applications are running in the Kubernetes cluster.

The cost of the EKS control plane varies depending on the Kubernetes version used. A Kubernetes version under standard support costs $0.10 per cluster per hour, while one under extended support costs $0.60 per cluster per hour.
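The arithmetic behind these rates is easy to check. The sketch below computes the EKS control-plane charge from the two hourly rates quoted above, using Python's Decimal type to avoid floating-point rounding. The 730-hours-per-month figure is a common approximation rather than an AWS billing rule, and compute, storage, and networking costs (which apply to both ECS and EKS) are excluded.

```python
from decimal import Decimal

HOURS_PER_DAY = 24
HOURS_PER_MONTH = 730  # common approximation (8,760 hours / 12 months)

# EKS control-plane rates quoted in this article (USD per cluster per hour).
RATES = {"standard": Decimal("0.10"), "extended": Decimal("0.60")}


def control_plane_cost(support="standard", clusters=1, hours=HOURS_PER_MONTH):
    """EKS control-plane cost in USD; ECS has no control-plane charge."""
    return RATES[support] * clusters * hours


print(control_plane_cost(hours=HOURS_PER_DAY))  # 2.40
print(control_plane_cost())                     # 73.00
print(control_plane_cost("extended"))           # 438.00
```

A single cluster on a Kubernetes version in standard support therefore adds roughly $73 per month before any workloads run; the same cluster on extended support adds roughly $438.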

Summary of key differences

| Feature | ECS | EKS |
|---|---|---|
| Orchestration platform | AWS-native | Kubernetes-based |
| Complexity | Simple | More complex |
| Portability | AWS only | Multi-cloud |
| Scaling | ECS Service Auto Scaling | Kubernetes autoscalers |
| Cost | No control-plane charges | At least $0.10/hour for the control plane |

When to Choose ECS?

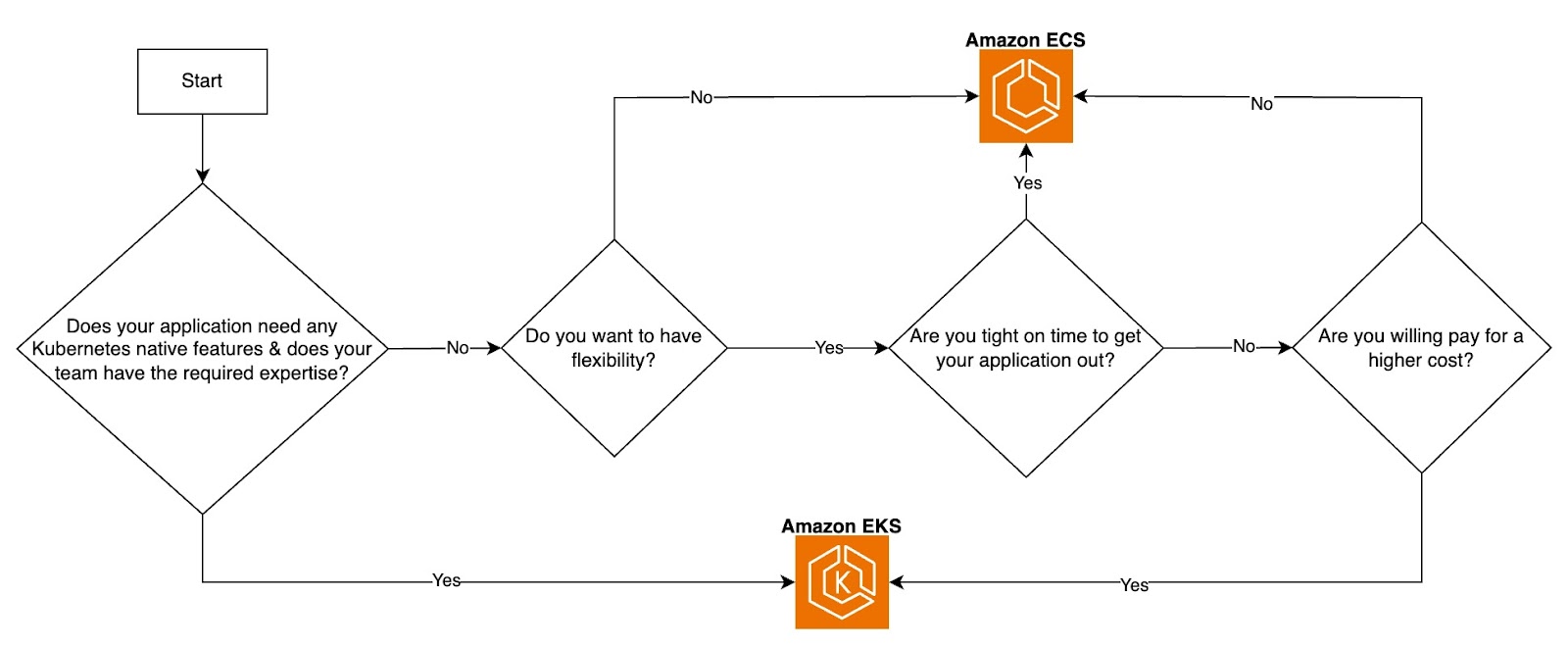

The choice depends on your application's requirements, business goals, team expertise, operational budget, and overall priorities.

ECS, being simpler and more cost-effective, is ideal for teams with tighter budgets, shorter time-to-market needs, and a preference for streamlined operations. It is particularly well suited to applications that are designed to run exclusively on AWS and do not need portability across multi-cloud or hybrid environments. As an AWS-specific service, ECS aligns seamlessly with workloads confined to the AWS ecosystem.

While both ECS and EKS support Fargate for serverless container deployments, ECS offers a more straightforward integration. With ECS, you can simply choose to run tasks on Fargate without additional configuration. In contrast, running EKS pods on Fargate requires a Fargate profile whose selectors (a namespace and, optionally, pod labels) determine which workloads run on Fargate, adding a layer of complexity. This makes ECS an attractive choice for teams seeking a simpler serverless experience.
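The Fargate profile selection described above can be sketched as follows. Based on our understanding of EKS Fargate profiles, each selector names a namespace and, optionally, labels, and a pod runs on Fargate if it matches any selector. The function name and the compute=fargate label are hypothetical, and this sketch uses exact matching only.

```python
def fargate_profile_matches(selectors, pod_namespace, pod_labels):
    """True if a pod matches any selector of an EKS Fargate profile.

    Each selector has a required "namespace" and optional "labels";
    a pod matches when the namespace is equal and every selector label
    is present on the pod with the same value.
    """
    for selector in selectors:
        if selector["namespace"] != pod_namespace:
            continue
        wanted = selector.get("labels", {})
        if all(pod_labels.get(k) == v for k, v in wanted.items()):
            return True
    return False


profile_selectors = [{"namespace": "default", "labels": {"compute": "fargate"}}]
print(fargate_profile_matches(profile_selectors, "default", {"app": "nginx", "compute": "fargate"}))  # True
print(fargate_profile_matches(profile_selectors, "default", {"app": "nginx"}))  # False
```

Forgetting the label on a pod is a common reason it lands on EC2 nodes (or stays pending) instead of Fargate, which is the extra configuration step ECS does not require.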

When to Choose EKS?

Kubernetes, the underlying platform for EKS, is cloud-agnostic, enabling workloads to run consistently across AWS, other cloud providers, and on-premises environments. This portability is particularly beneficial for organizations operating in multi-cloud setups or hybrid on-premises infrastructure. Azure and Google Cloud also offer managed Kubernetes services—Azure Kubernetes Service (AKS) and Google Kubernetes Engine (GKE), respectively—highlighting Kubernetes' ubiquity in modern cloud environments.

EKS is ideal if your application or use case requires advanced customization and extensibility. For instance, Kubernetes supports the creation of Custom Resource Definitions (CRDs), which allow you to define custom resources to manage specific application configurations or integrate with external systems. This flexibility is a key differentiator for EKS.

While EKS abstracts the control plane and relies on AWS to manage it, operating effectively in an EKS environment still demands familiarity with Kubernetes API resources and concepts. Therefore, the team's Kubernetes expertise is crucial to effectively leverage the platform.

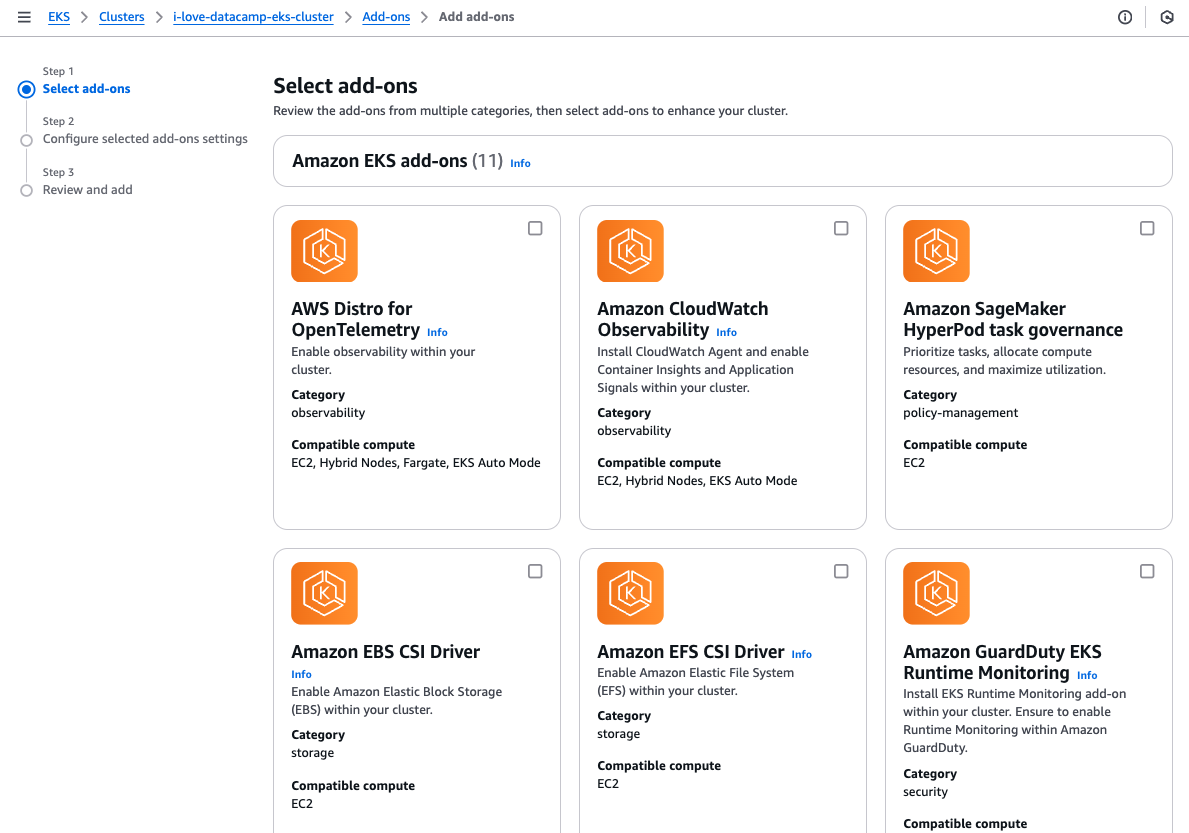

EKS also offers a broad range of customizable add-ons that can be installed to extend cluster functionality. These add-ons make provisioning a cluster with the necessary operational tools easier, simplifying setup and enhancing productivity.

EKS add-ons selection.

In summary, EKS is well-suited for microservices architectures or applications with numerous interconnected components requiring high control and granularity. It’s particularly advantageous for teams that need advanced customization options or operate in environments where workload portability is essential.

ECS vs. EKS decision flowchart. Image by Author.

Tools and Integrations

Whether it is a containerized application or an application running directly on a VM, monitoring and observability are paramount. Being in the AWS ecosystem, both ECS and EKS can tap into AWS tools and third-party services to enhance the entire Software Development Lifecycle (SDLC) for containerized applications.

Monitoring and logging

Monitoring and logging are essential for visibility into your applications and infrastructure. AWS provides native tools and supports third-party integrations for both ECS and EKS, such as Amazon CloudWatch for metrics, logs, and alarms.

AWS X-Ray for tracing requests across microservices makes it easy to analyze and debug performance issues in ECS tasks or EKS pods. Third-party tools like Datadog, Prometheus, Grafana, and New Relic provide enhanced visualization and alerting capabilities tailored to containers.

CI/CD pipelines

CI/CD pipelines help automate tests, scans, and deployments, ensuring faster iterations and reduced manual effort. Popular CI/CD tools, such as GitLab pipelines and GitHub Actions, integrate with ECS and EKS to enable container builds, tests, and deployments. These tools work with Docker containers for ECS or Helm charts for EKS.

Infrastructure as Code (IaC)

IaC is crucial for creating consistent and repeatable infrastructure, minimizing provisioning time, and reducing the risk of human error associated with manual configurations via the AWS Management Console.

Popular IaC tools include Terraform and AWS CloudFormation. While both are widely used, Terraform is often preferred for its cloud-agnostic capabilities, enabling teams to manage infrastructure across multiple cloud providers using a single tool.

Networking and security

AWS provides robust networking and security features for both ECS and EKS to ensure secure communication and access control, such as:

- Amazon VPC networking, to isolate resources within private subnets.

- AWS WAF and AWS Shield, to protect applications hosted on ECS and EKS from common web vulnerabilities and DDoS attacks.

- AWS KMS, to encrypt EBS volumes and Kubernetes secrets stored in etcd.

Conclusion

ECS and EKS are both robust container orchestration services provided by AWS, each tailored to meet different application needs and team priorities.

ECS is easier to start with, simpler to provision, deploy, and maintain, and has lower operational costs. In contrast, EKS has a steeper learning curve and higher initial complexity but offers unmatched flexibility and advanced customization options, making it ideal for teams with specialized or complex requirements.

The choice between ECS and EKS depends on your application's needs, the team's expertise, and the desired balance between simplicity and control.

If you’re looking to strengthen your AWS knowledge, the AWS Concepts course is a great place to start. To dive deeper into AWS tools and technologies, check out the AWS Cloud Technology and Services course. Additionally, for those interested in mastering containerization, the Containerization and Virtualization Track offers valuable insights to help you excel in managing and deploying containers.


FAQs

Is there a command line utility for ECS that is similar to kubectl for EKS?

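Yes, there is the AWS Copilot command line interface. The AWS CLI's ecs commands (for example, aws ecs list-tasks) can also manage ECS resources directly.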

How much do I need to know about Kubernetes before I can use EKS?

It depends on your application's requirements and complexity. Nonetheless, Kubernetes API resources such as pods, ReplicaSets, Deployments, and Services are a must-know, however simple your requirements or application.

Can ECS and EKS integrate with third-party tools and services?

Yes, although the extent and ease of integration differ between the two. ECS integrates with Datadog, New Relic, and Prometheus via exporters to collect container metrics and logs.

ECS can be used with AWS App Mesh, which offers features similar to those of third-party service meshes like Istio and Linkerd.

EKS provides extensive monitoring options, with native support for Prometheus and Grafana and integrations with tools like Datadog, New Relic, and Splunk. It is also fully compatible with Istio, Linkerd, and AWS App Mesh for implementing advanced service-to-service communication and observability.

What is the difference in cost between ECS and EKS?

Assuming both ECS and EKS use the same compute resources (EC2 instance types, Spot pricing, or Fargate usage), EKS costs at least $2.40 more per day for the control plane ($0.10 per hour × 24 hours), which adds up over time. Other cost differences, such as networking overhead, operational complexity, and add-on resource usage, vary between ECS and EKS.

A seasoned Cloud Infrastructure and DevOps Engineer with expertise in Terraform, GitLab CI/CD pipelines, and a wide range of AWS services, Kenny is skilled in designing scalable, secure, and cost-optimized cloud solutions. He excels in building reusable infrastructure, automating workflows with Python and Bash, and embedding security best practices into CI/CD pipelines. With extensive hands-on experience in Kubernetes and various observability tools, Kenny is adept at managing and orchestrating microservices while ensuring robust observability and performance monitoring. Recognized for his leadership, mentoring, and commitment to innovation, Kenny consistently delivers reliable and scalable solutions for modern cloud-native applications. He remains dedicated to staying at the forefront of industry trends and emerging technologies, continuously expanding and deepening his skill set.