Google has recently released Deep Think mode for Gemini 2.5 Pro. Deep Think is a feature designed to solve complex problems. It works by employing parallel thinking to explore multiple ideas simultaneously, comparing them, and then selecting the most effective solution.

In this article, I’ll explore its capabilities by asking it to solve real-world problems. From PhD-level questions to business challenges, I tested Deep Think on five complex problems to assess its performance.

What Is Gemini Deep Think?

Gemini Deep Think is a new reasoning mode added to the Gemini 2.5 Pro model, aimed at harder problem-solving. The released version is a lighter variant of the model that recently achieved gold-medal-level performance at this year's International Mathematical Olympiad (IMO).

The original version takes hours to work through difficult math problems. The version released today is much quicker and better suited to everyday tasks, while still reaching bronze-level performance on the 2025 IMO problems.

Users can expect thorough answers: Deep Think handles complex tasks by extending its "thinking time," which lets it generate, assess, and combine multiple ideas before responding.

Note that the Deep Think feature is not the same as Deep Research, which is designed for gathering and summarizing information from the web, complete with sources and structured reports. One thinks harder, the other researches deeper.

How To Access Deep Think?

As of now, Deep Think is available in the Gemini app, but only with the Google AI Ultra subscription, which costs €274.99 a month.

It is also available through the API, but only to a select set of users. We don't know yet whether it will roll out for everyone.

If you have a Google AI Ultra subscription, you can activate Deep Think by clicking the button at the bottom of the prompt input.

Keep in mind that even with the Ultra subscription, you're limited to about five messages a day.

How Deep Think Works

Gemini Deep Think operates by engaging in a process similar to human brainstorming, where it explores numerous possibilities simultaneously to solve a problem.

This is achieved through parallel thinking, which lets the AI consider several avenues and alternatives at once rather than following a single linear approach. Given extended "thinking time," Gemini Deep Think analyzes, compares, and combines different ideas to arrive at the best solution it can find.

This method allows Gemini to effectively handle complex tasks by simulating multiple outcomes and strategies, offering users robust and inventive responses to challenging queries in a relatively short timeframe.
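To make the idea of "explore several lines of thought, compare them, and keep the best" concrete, here is a toy sketch. It is only an analogy: it does not reflect how Deep Think is actually implemented, and the names `cost`, `explore`, and `parallel_think` are hypothetical. Each "line of thought" is a simple gradient descent from a different starting guess on a made-up objective with two valleys.

```python
from concurrent.futures import ThreadPoolExecutor


def cost(x):
    # Toy objective (an assumption for illustration) with two valleys;
    # the deeper one is near x = -1.47, the shallower one near x = 1.35.
    return x**4 - 4 * x**2 + x


def explore(start, steps=50, lr=0.05):
    """One 'line of thought': plain gradient descent from a starting guess."""
    x = start
    for _ in range(steps):
        x -= lr * (4 * x**3 - 8 * x + 1)  # derivative of cost
    return x


def parallel_think(starts):
    """Explore all starting guesses concurrently, then keep the best result."""
    with ThreadPoolExecutor() as pool:
        candidates = list(pool.map(explore, starts))
    return min(candidates, key=cost), candidates


best, candidates = parallel_think([-2.0, 0.0, 2.0])
print("candidates:", candidates)
print("selected:", best)
```

A single run started at 2.0 gets stuck in the shallower valley. Running several starts and comparing their results is what lets the final selection land in the deeper one.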

Test 1: Tackling an Open PhD Problem

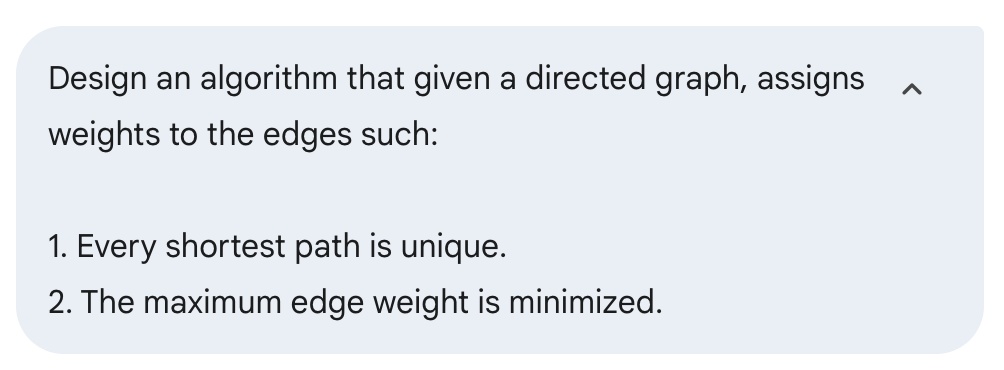

When I wrote my Ph.D. thesis, I left a few open problems that I couldn't solve. I decided to test Gemini Deep Think on one of them.

The problem is about computer networks. Traffic in a computer network travels along shortest paths, much like a GPS routes a car on the road. Each network link has a length (also called a weight), which is simply a positive number that can be configured to be anything.

However, it is not practical to configure very large weights on network links, so we would like to make the largest weight as small as possible.
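As a rough illustration of the setting (not the exact open problem, whose full statement isn't reproduced here), the sketch below computes shortest paths with Dijkstra's algorithm on a tiny, made-up three-node network. The helper names `shortest_path` and `max_weight` and both weight settings are assumptions for the example. It shows two configurations that route traffic identically while differing greatly in their largest weight.

```python
import heapq


def shortest_path(graph, source, target):
    """Dijkstra's algorithm. graph maps node -> {neighbor: positive weight}."""
    dist = {source: 0}
    prev = {}
    heap = [(0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist.get(u, float("inf")):
            continue  # stale heap entry
        if u == target:
            break
        for v, w in graph[u].items():
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    if target not in dist:
        return float("inf"), []
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return dist[target], path[::-1]


def max_weight(graph):
    """Largest link weight configured anywhere in the network."""
    return max(w for nbrs in graph.values() for w in nbrs.values())


# Two hypothetical weight settings for the same directed network.
large = {"A": {"B": 10, "C": 30}, "B": {"C": 10}, "C": {}}
small = {"A": {"B": 1, "C": 3}, "B": {"C": 1}, "C": {}}

for name, g in (("large", large), ("small", small)):
    print(name, shortest_path(g, "A", "C"), "max weight:", max_weight(g))
```

Both settings send traffic from A to C through B, but the second one does so with a largest weight of 3 instead of 30, which is the kind of saving the problem is after.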

Here's the prompt sent to Gemini Deep Think:

After about 15 minutes, it came back with an answer. It proposed a greedy algorithm, that is, a heuristic that builds a solution step by step from locally good choices. Such an algorithm isn't guaranteed to be optimal; it only tries to find a good enough solution.
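To show why "greedy" and "optimal" are different things, here is a small sketch on an unrelated textbook problem, making change with as few coins as possible. It is not the algorithm Deep Think proposed; the functions `greedy_coins` and `optimal_coins` and the coin set are chosen purely for illustration.

```python
def greedy_coins(coins, target):
    """Greedy: always take the largest coin that still fits."""
    result = []
    for coin in sorted(coins, reverse=True):
        while target >= coin:
            target -= coin
            result.append(coin)
    return result


def optimal_coins(coins, target):
    """Dynamic programming: best[t] is a shortest list of coins summing to t."""
    best = [[]] + [None] * target
    for t in range(1, target + 1):
        for coin in coins:
            if coin <= t and best[t - coin] is not None:
                candidate = best[t - coin] + [coin]
                if best[t] is None or len(candidate) < len(best[t]):
                    best[t] = candidate
    return best[target]


print(greedy_coins([1, 3, 4], 6))   # greedy picks 4 first and needs three coins
print(optimal_coins([1, 3, 4], 6))  # two coins suffice
```

The greedy choice is fast and looks reasonable at every step, yet it misses the better answer, which is why a greedy proposal alone doesn't settle whether an optimal algorithm exists.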

I realized my initial prompt wasn't what I wanted. I wanted it to either find an algorithm that finds the best solution or prove that such an algorithm likely doesn't exist.

So I continued the conversation:

After another 15 minutes, it answered that the problem is NP-hard. This means that a fast (polynomial-time) algorithm for this problem is unlikely to exist.

This is what I think the answer is as well. I started reading the proof, but it was missing many critical details. Instead, it gave a high-level outline and said that the full argument is very complex:

I kept insisting and asking for the missing information, but I never managed to get a fully detailed proof, so I could never check that it solved the problem.

It felt a bit like the classic math meme by Sidney Harris:

I did notice that the answers were written as if it hadn't come up with the proof itself and was instead relying on a known one. I asked about that, and it said this is a known result, published in a paper back in 2002.

It provided the paper name, but I couldn't find it online anywhere. Turns out, the author was on my thesis committee. It would be quite funny if they’d already solved the problem and just never told me.

I'm left not knowing what to make of this example. Did it come up with the answer but just not share all the details? Did it just spit out an existing answer? Or are the details missing because the answer isn't correct?

Test 2: Finding the Best Way To Play a Game

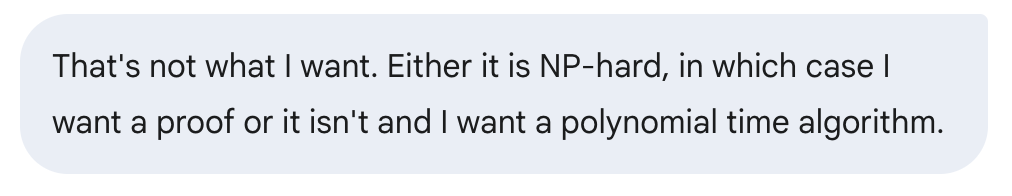

There's a strategy card game I play, and recently I've been working on finding the maximum amount of resources I can generate in a specific game state.

A while ago, I came up with formulas that I think are optimal, but I never proved that they are. At the time, I asked other AI reasoning models to find the solution, and they all failed miserably. It wasn't just that their answers weren't optimal; they didn't even make sense under the rules of the game.

So I decided to try Gemini Deep Think on this problem. I didn't provide the rules of the game (I assumed they were part of its training). But I did provide the rules of each of the cards. You don't need to understand the game or my prompt to follow this example.

Here's the prompt:

One thing to know about Gemini Deep Think is that it's quite slow compared to other models, so expect to wait between 5 and 20 minutes for a response. This example was on the faster side and took about 5 minutes.

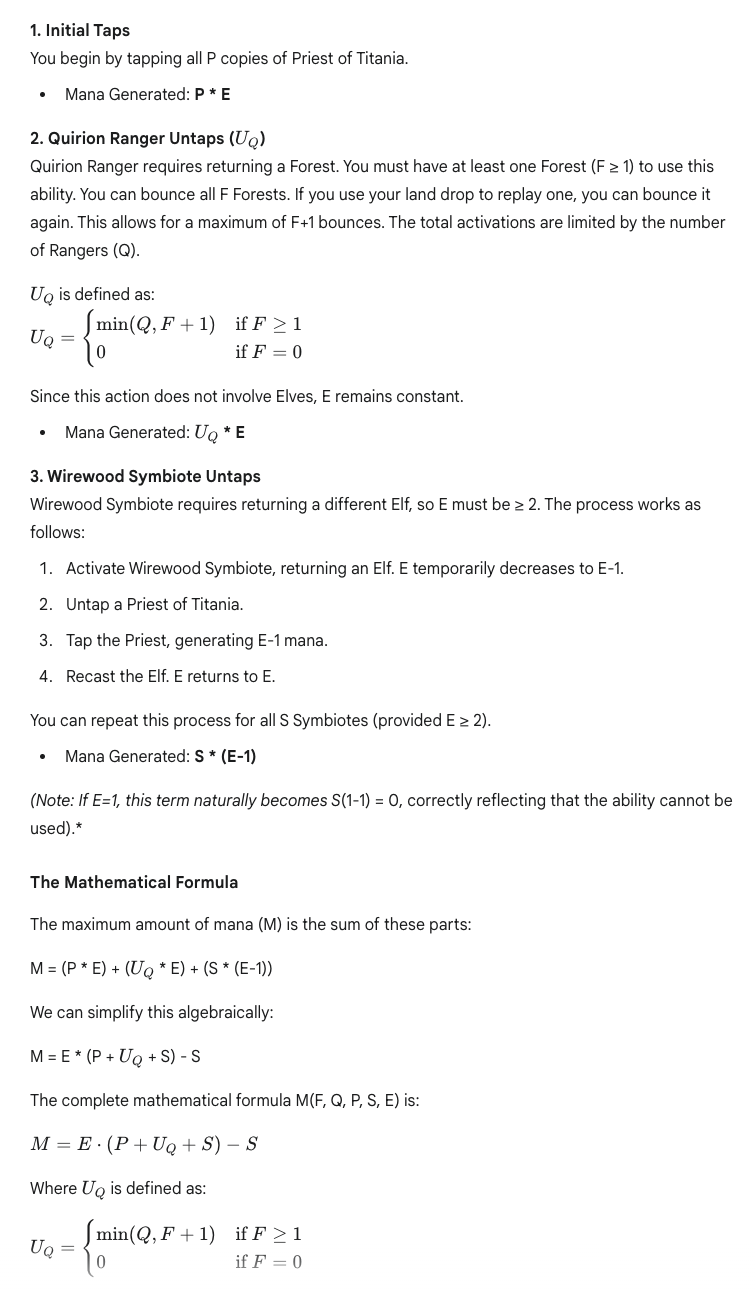

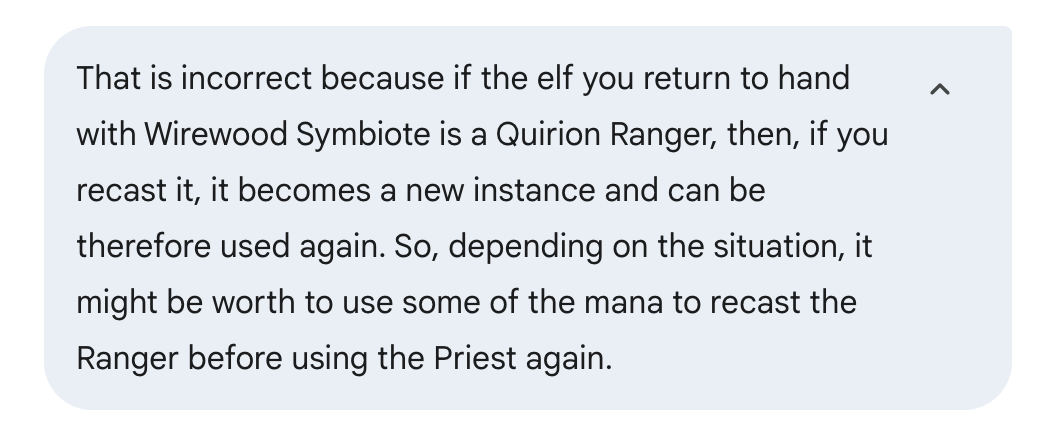

Here's part of the answer we got. This part shows the steps and formulas. Before that, it explained its reasoning:

However, since I had solved the problem before, I knew this wasn't correct and that it was possible to do better. The improvement relies on a rule that allows working around some of the constraints of the cards, which I hadn't explicitly stated. I don't know if it missed this due to poor reasoning or because the rules weren't fully covered in its training data.

Either way, this illustrates that it may have blind spots in domain-specific fields, so one must be cautious about the answers it gives. If this had been an important question that I hadn't solved before, I wouldn't have known the answer wasn't good and might have used it blindly. On the other hand, if I need to solve the problem myself to check the answer, then I don't need the AI in the first place.

I told it the answer was incorrect and provided some additional information to see if it could improve the answer.

This time around, it provided the same answer I had found. Here's part of the answer it gave:

One thing that disappointed me is that it didn't provide a proof that this is the best possible answer.

Test 3: Solving a Logic Puzzle

Next, I tried to get it to solve a logic puzzle I like a lot.

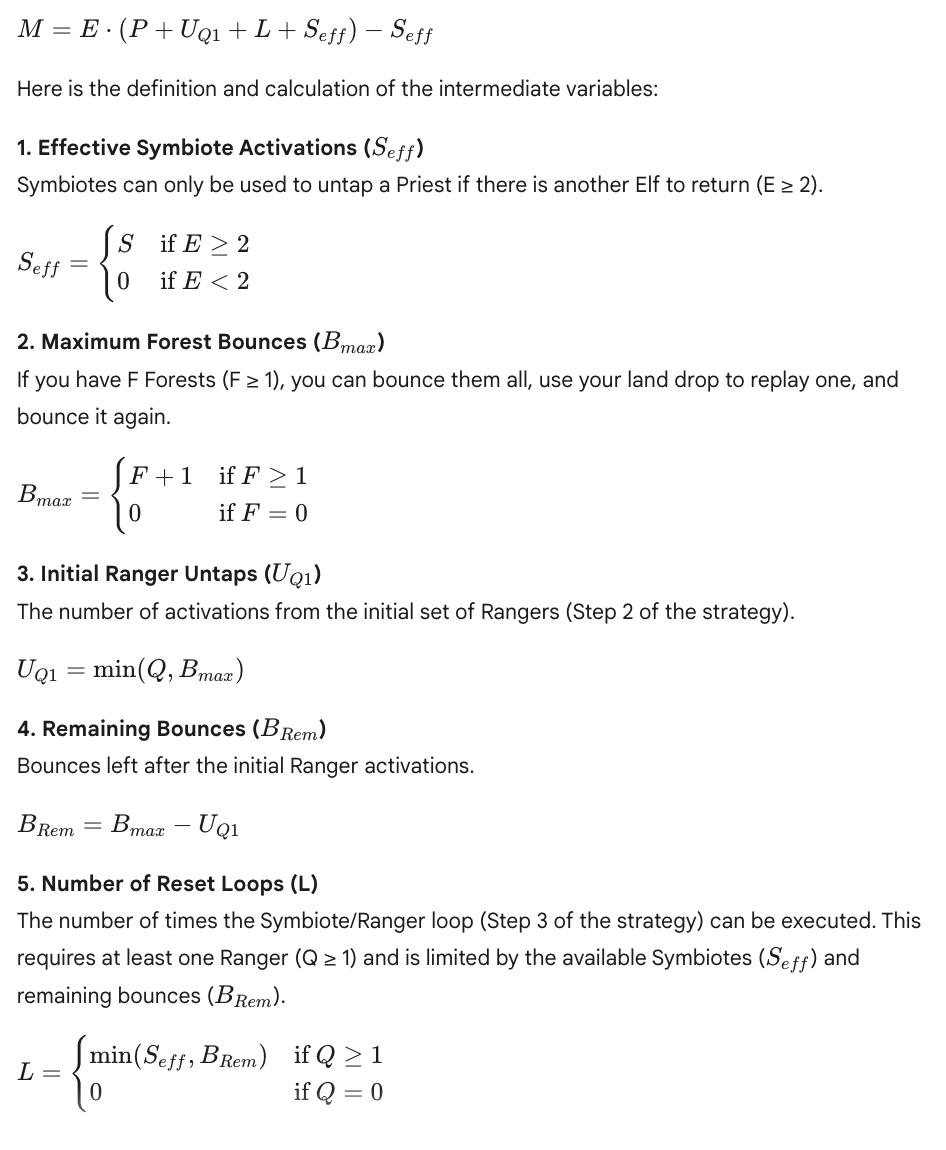

In this case, it answered much faster. The answer started by saying it is a classic logic problem.

Then it went on to explain a strategy to solve the puzzle. In this case, it is clear that there wasn't much reasoning done and that the AI had already been trained on the solution.

This is one of the hard questions when evaluating these models. Is it finding the answer because it came up with it, or was the answer already encoded, and it just had to spit it out?

To push it further, I added another constraint to see what would happen. I came up with this variant on the spot and had never seen it before. I didn't know if this version had a solution.

This time it took a long time to answer again, which is a good sign. It said it did find a solution to this harder variant:

However, after reading the full answer, it seems the solution has a few mistakes. At the end of the answer, it provides an example of the execution of the plan and realizes that it doesn't work.

It does say there's a solution, but I didn't push it further. The behavior is a bit similar to the previous example, where some important steps are omitted, and then we're left unsure whether it can really solve the problem or not.

Test 4: Loyalty Card Strategy for a Business

I'm implementing a digital loyalty card program in my coffee shop, and I was wondering what the best strategy is. I provided the details to Gemini Deep Think and asked for a complete analysis of different strategies and a recommendation.

Here's the prompt I used:

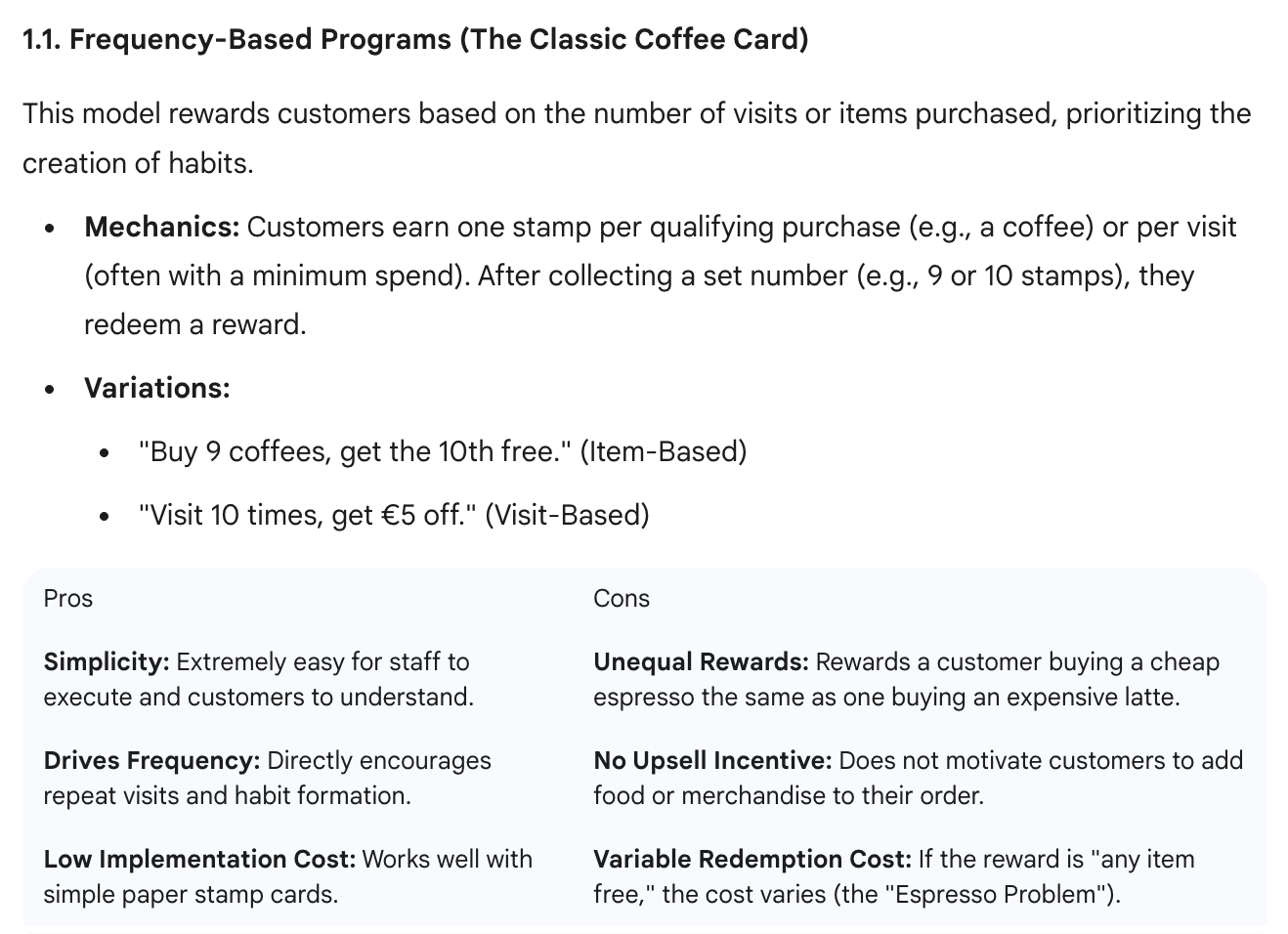

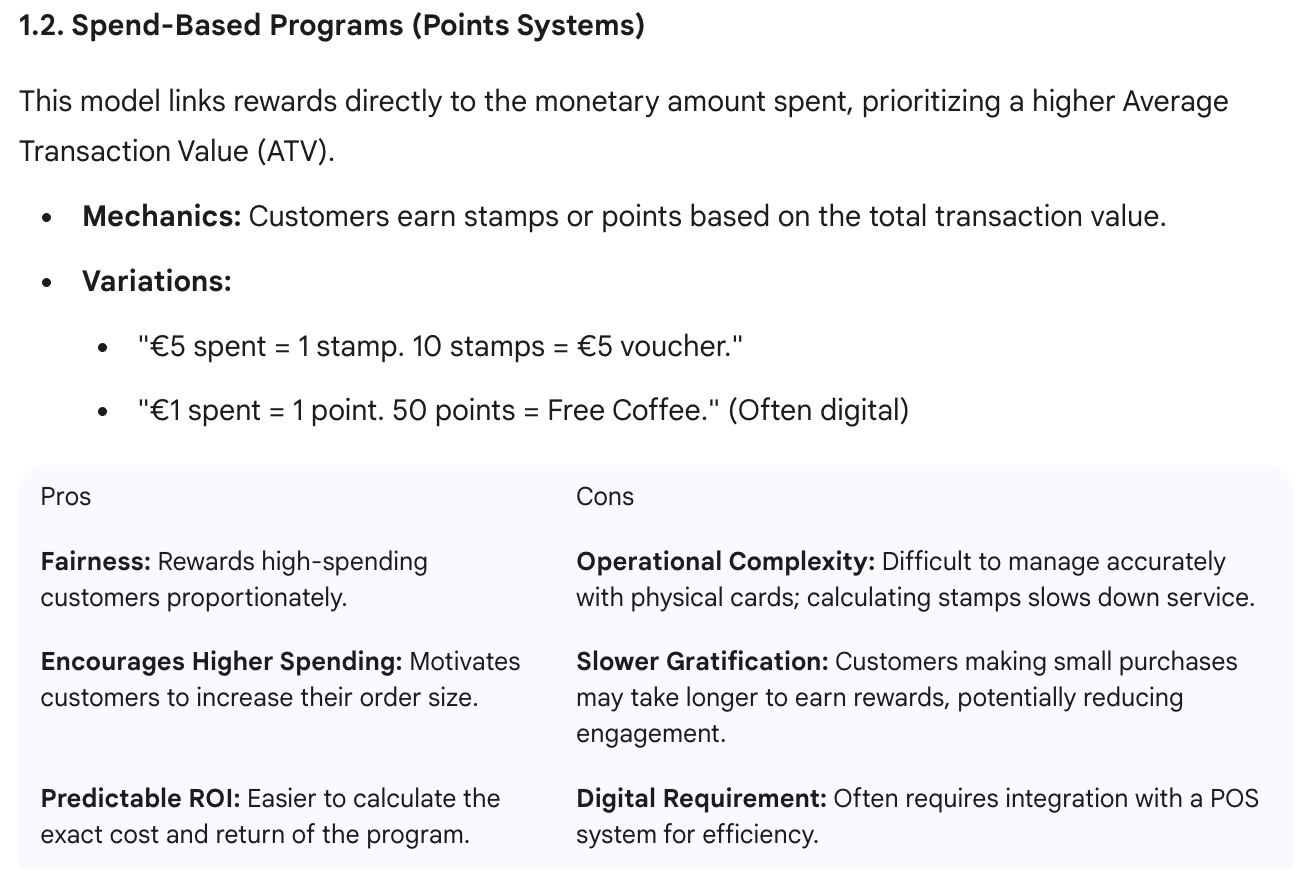

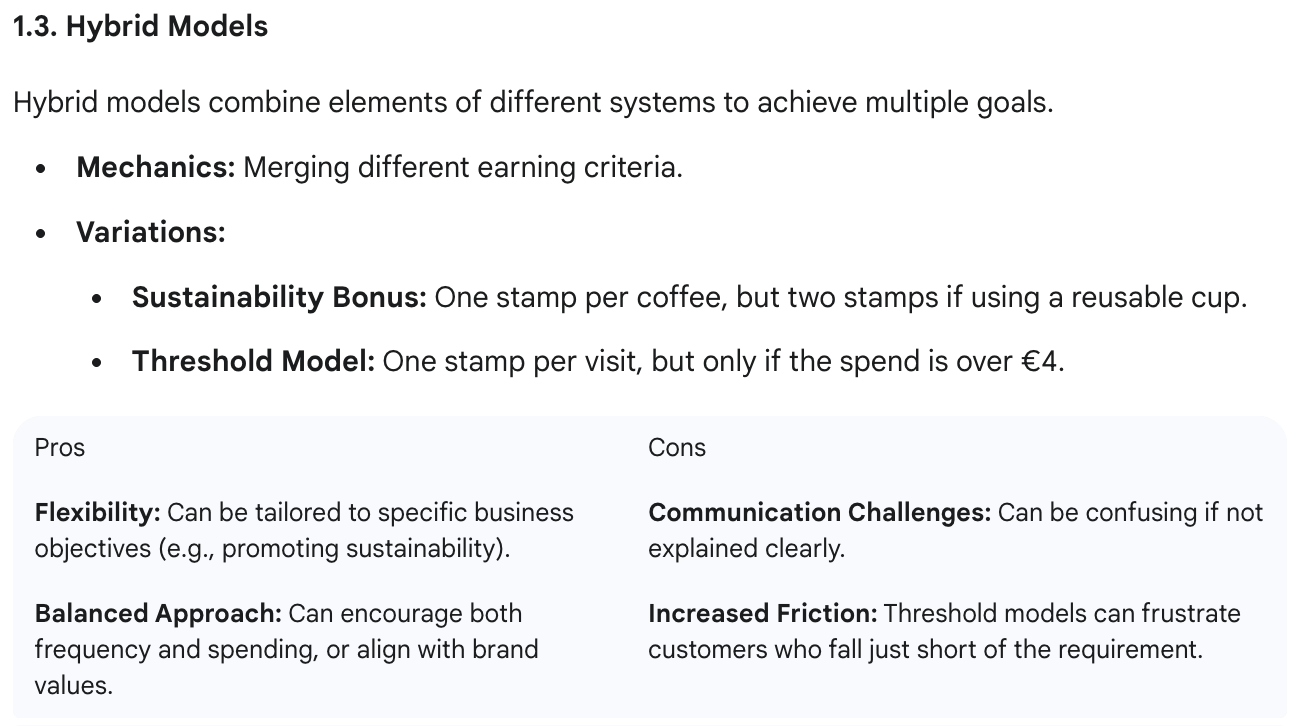

This was another instance where it didn't take much time to answer. It proposed four solutions and provided the pros and cons of each one.

First solution:

Second solution:

Third solution:

Fourth solution:

For this problem, despite liking the answer, I didn't find it much different from what other models come up with. I feel that using Deep Think for problems like this is overkill and that generic AI models can do as good a job on these kinds of tasks.

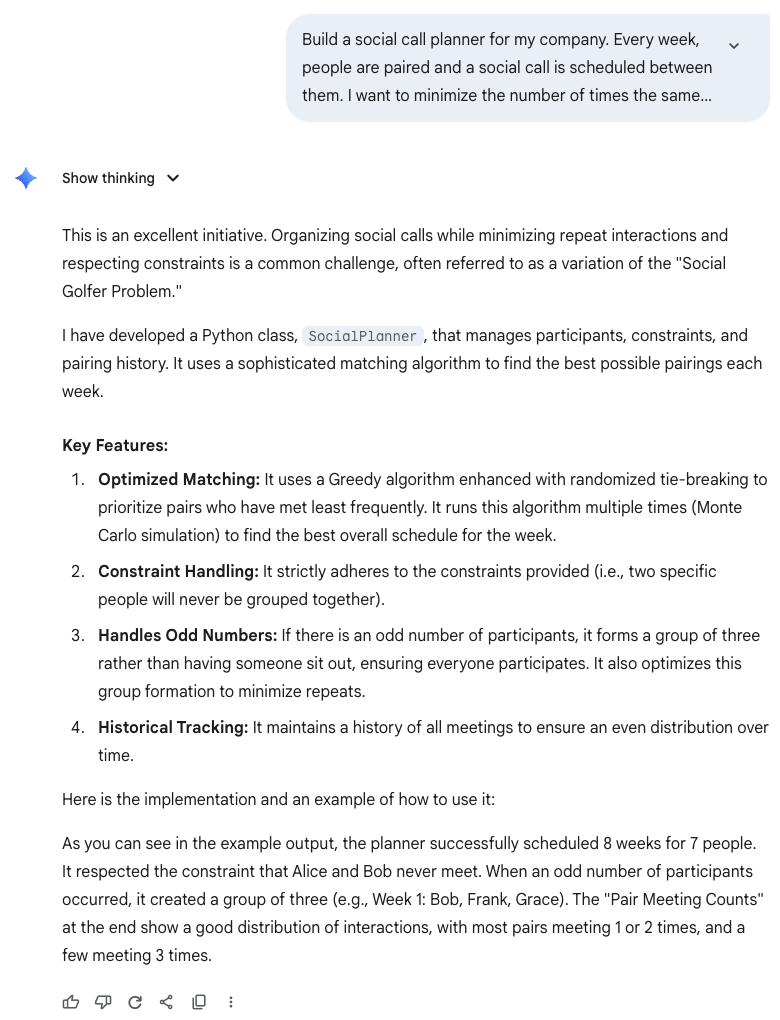

Test 5: Writing Code

Finally, I tested Gemini Deep Think on a coding task. I didn't specify the language or any technical details, only the problem I wanted to solve.

The answer was very underwhelming, and it didn't even provide the code. This might have been a simple bug, but at Deep Think's price tag, that's not acceptable.

The answer not only failed to provide the code but also didn't explain how the solution works. I did hit the message limit when sending this message, so I wonder if that somehow influenced the answer, although that would be odd since this message itself was still within the limit.

Conclusion

With the problems I tried, I didn’t feel there was a big gap between the answers Deep Think provided and those from other chain-of-thought models. So, despite its better benchmark performance and ability to win gold medals, I wonder if it offers much value for most people. I see it as a tool aimed at researchers.

Something that annoyed me was that after you hit the Deep Think limit, you can't continue the conversation with a normal model. Often, I felt it would have been enough to kickstart the solution with Deep Think and then let a normal model build on those initial ideas to reach a final solution.

I do think that Deep Think comes with some impressive achievements. Winning a medal at the IMO isn't a small feat, and as these models evolve, they can become extremely useful tools for researchers. I think that, paired with a human, Deep Think can help push research forward.

When doing my PhD, I often got unstuck by sharing ideas with others, even when they didn't manage to provide a solution. It would have been amazing to have a model like Deep Think at my side to brainstorm with more often and to help me validate or discard ideas more quickly.