
In 1965, Gordon Moore made a prediction about the pace of technological progress that proved to be quite prescient. He noticed that the number of transistors in integrated circuits was doubling at a steady pace, a rate he later settled at roughly every two years. Because the number of transistors in a circuit is closely tied to the computing power of that circuit, Moore’s observation implied an exponential increase in computing power.

This idea became known as Moore’s law. While it was initially a prediction, it turned into an aspiration for the tech industry. It has fueled a constant push for innovation in transistor technology. This idea has become so ingrained in our culture that it has even influenced our media. Movies like The Matrix and Her exploit this idea of exponential technological innovation and imagine a future if we maintain this trajectory. But, unfortunately, it may be nearing its physical limits. I mean, how much smaller can transistors really get? So, is Moore’s law still relevant today? What happens when it reaches its endpoint?

What Is Moore’s Law?

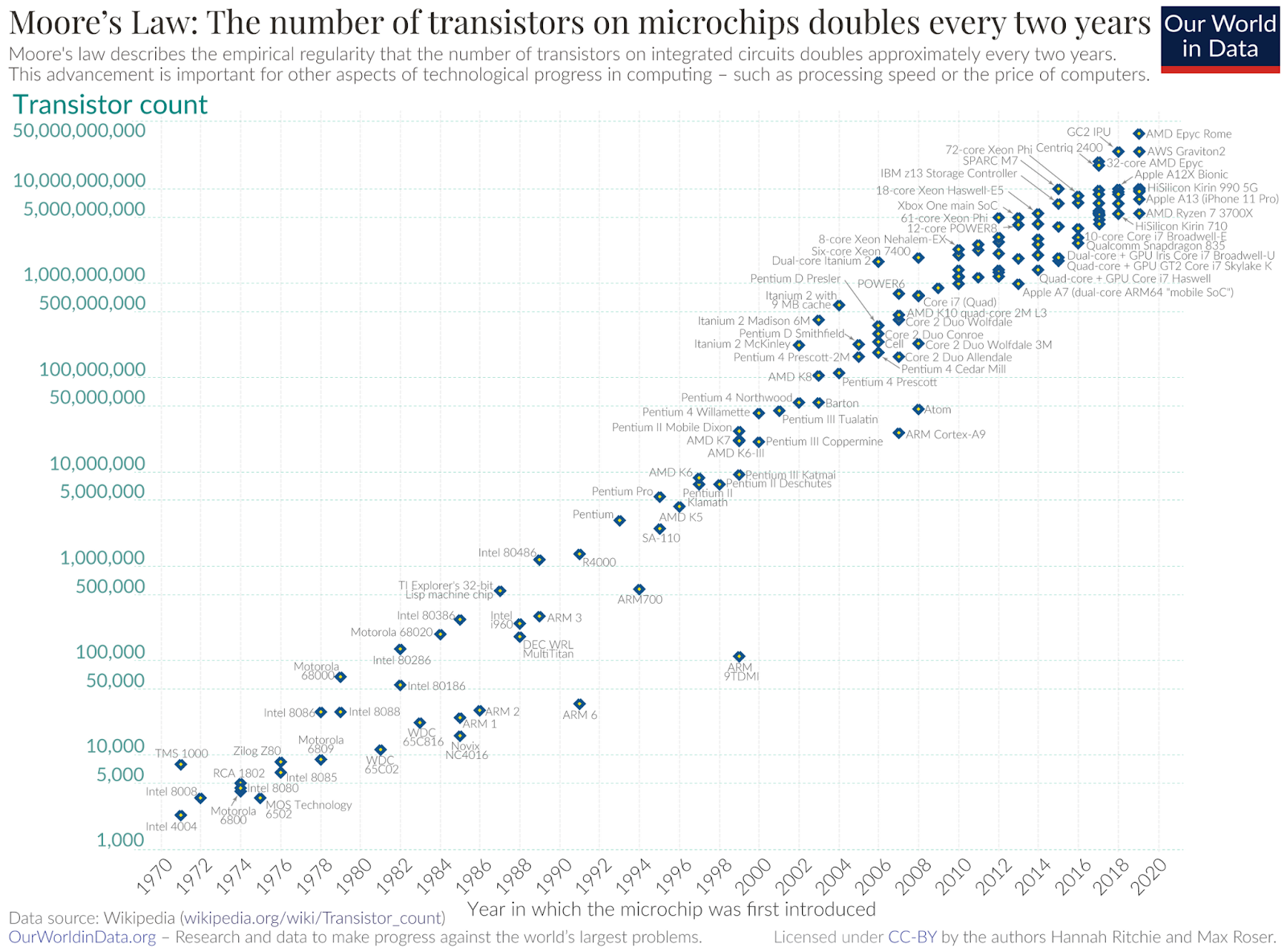

As I mentioned in the intro, Moore’s law is based on the observation that the number of transistors on a microchip doubles about every two years. The way I most often hear this phrased is, “computing power doubles every two years.”

Transistors are the tiny switches that control electrical signals, and more transistors generally mean more processing power. For this reason, Moore’s law predicts that processing power will see an indefinite exponential increase.
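To make the doubling concrete, here is a minimal Python sketch of the arithmetic. The function name `projected_transistors` and the baseline (roughly 2,300 transistors on the Intel 4004 in 1971) are my own illustrative choices, and the output is an idealized projection rather than real chip data.

```python
def projected_transistors(year, base_year=1971, base_count=2300, doubling_years=2.0):
    """Idealized transistor count if counts double every `doubling_years` years.

    The baseline values are assumptions chosen for illustration only.
    """
    elapsed = year - base_year
    return base_count * 2 ** (elapsed / doubling_years)


# Print the idealized projection once per decade.
for year in (1971, 1981, 1991, 2001, 2011, 2021):
    print(f"{year}: ~{projected_transistors(year):,.0f} transistors")
```

Fifty years at this pace is 25 doublings, or a factor of roughly 33.5 million, which is why even a small change in the doubling period makes a huge difference over time.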

I highly recommend watching this short YouTube video from Real Engineering about transistors. He explains the history and science of both vacuum tubes and transistors intuitively.

The Origins of Moore’s Law

Gordon Moore was a co-founder of Intel. He first made his observation in 1965, based on trends he saw in semiconductor manufacturing at the time. These were the early days of the semiconductor industry, when computers were massive and expensive, and transistor technology was just starting to replace vacuum tubes.

His observation wasn’t based on any fundamental law of physics, but rather on the rapid pace of innovation he had witnessed. Initially, he predicted that transistor counts would double every year, but he later adjusted this to every two years. And this timeline has held remarkably true for decades.

The Science Behind Moore’s Law

The key to Moore’s law’s implementation is miniaturization. Engineers keep finding ways to make transistors smaller, allowing more of them to fit on a single chip. This not only increases processing power but also reduces costs and energy consumption. For over 50 years, this shrinking process has fueled technological progress.

This graph from Wikipedia shows the rough doubling of the number of transistors per chip over the past 50 years. Note that the y-axis is a logarithmic scale.
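If the logarithmic axis seems abstract, this short Python sketch shows why it is useful. It uses an idealized series (a hypothetical starting count of 2,300 that doubles every two years, not the Wikipedia data), and the name `idealized_counts` is made up for illustration.

```python
import math


def idealized_counts(start_count=2300, doubling_years=2, step_years=10, steps=5):
    """Hypothetical transistor counts sampled every `step_years` years."""
    return [
        start_count * 2 ** (i * step_years // doubling_years)
        for i in range(steps + 1)
    ]


counts = idealized_counts()
log_counts = [math.log10(c) for c in counts]

# Distance between successive points as they would appear on a log10 axis.
log_steps = [round(b - a, 3) for a, b in zip(log_counts, log_counts[1:])]

print(counts)     # raw values grow explosively
print(log_steps)  # identical steps, i.e. a straight line on a log axis
```

On a linear axis, the early decades would be squashed flat against the bottom of the chart. On a log axis, steady doubling shows up as a straight line, so any slowdown is easy to spot as a bend.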

Impact of Moore’s law on computing

Although Moore's law was a prediction, it’s become almost a self-fulfilling prophecy as engineers and executives hold it as the goal. This drive has had significant effects on the tech industry and on society as a whole.

Technological advancements

Thanks to Moore's law, the push for faster, smaller technology is constantly accelerating.

For example, the earliest computers took up an entire room! Later versions could easily fit on a large desk, and now we have tiny laptops, tablets, and cell phones. Just in the relatively short time between my childhood and now, my family went from one bulky, shared desktop to every individual having their own laptop, tablet, and phone! And there are a number of tasks I can accomplish on my phone now that I couldn’t do on my desktop then.

Cloud computing has been another significant advancement. The rapid increase in processing power has enabled the creation of the internet and the development of modern cloud services that offer shared computing power and storage. These services are now so extensive that they can meet the needs of entire businesses and analyze mind-bogglingly large datasets.

AI and machine learning have also flourished due to advancements in hardware. GPUs and TPUs provide the necessary computational power to train complex models. This has led to breakthroughs in various applications, from natural language processing to image recognition. The push for AI to get more efficient is also driven by environmental concerns. You can read more about these ideas in our blog post, Sustainable AI: How Can AI Reduce its Environmental Footprint?

Industry Applications

You may not realize how many things around you have integrated chips. Everything from your dishwasher to your car contains microchips and has benefitted from Moore’s law.

In healthcare, advancements in semiconductor technology have enabled AI-powered diagnostic tools that analyze medical imaging and wearable health trackers that monitor vital signs in real time. These powerful chips allow for much closer monitoring of chronic illness, fertility tracking, and the earlier detection of disease.

In the automotive sector, the rise of autonomous vehicles relies heavily on the real-time processing of massive amounts of sensor data, including radar, LiDAR, and cameras. Thanks to faster and smaller transistors, modern vehicles are equipped to make instantaneous decisions, thus improving safety.

The finance industry has also benefited immensely from the capabilities provided by smaller transistors. Lightning-fast computations enable trading algorithms to capitalize on microsecond-level market changes.

Unsurprisingly, telecommunications has also been transformed, for example by the rollout of 5G networks and the expansion of Internet of Things devices.

What Is the Limit of Moore’s Law?

For decades, Moore's law has been a reliable predictor of progress. But can it keep up this pace forever? Is Moore's law reaching its limit?

Physical barriers

Currently, we are implementing Moore's law by continually shrinking transistor size. But there are several physical barriers to overcome if we continue down that path.

The first challenge is heat. Packing smaller transistors more densely generates more heat per unit area, making cooling a big concern. Smaller transistors tend to have less insulation, and because more of them can be packed onto one chip, more current flows through a smaller area.

Another problem you encounter at extremely small scales is electron “leak.” This is a phenomenon where electrons “leak” out of their track on the circuit and move to another part of the chip. The close quarters and limited insulation of these small transistors exacerbate this issue. This can lead to power loss and weird behavior.

Illustration of electron leak. Image by Author using Copilot.

The above graphic I put together is a very simplified explanation of one problem with shrinking transistors and other components: electron leak.

Most semiconductors used in transistors are currently made from silicon (which is where we get the moniker, Silicon Valley). Silicon’s properties, such as electron mobility and heat tolerance, become less effective as transistors shrink to very small sizes. This makes it increasingly difficult to maintain efficiency.

Researchers are looking into using different materials at these small sizes, such as silicon-germanium, gallium arsenide, or even cutting-edge options like graphene and carbon nanotubes. These might allow us to temporarily circumvent some of these physical barriers, and continue our miniaturization process.

Economic and manufacturing challenges

Ironically, another significant challenge for Moore's law arises from Moore’s second law, also referred to as Rock’s law. This principle states that the cost of building semiconductor factories doubles approximately every four years. While processing power continues to increase, the expenses associated with creating that power are also on the rise. McKinsey has suggested that the cost of the processing power needed to drive our AI revolution could reach $7 trillion by 2030! Only time will tell if companies can find a way to keep this as a viable trajectory.
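To see how the two laws pull against each other, here is a rough Python sketch that compares both doubling rates over the same period. The helper name `growth_factor` and the 20-year window are assumptions for illustration, and both rates are treated as perfectly steady, which real markets are not.

```python
def growth_factor(years, doubling_years):
    """Multiplicative growth after `years` if a quantity doubles every `doubling_years`."""
    return 2 ** (years / doubling_years)


years = 20  # illustrative window, not taken from any source
transistor_growth = growth_factor(years, doubling_years=2)  # Moore's law
fab_cost_growth = growth_factor(years, doubling_years=4)    # Rock's law

print(f"Transistors per chip: x{transistor_growth:,.0f}")
print(f"Cost of a new fab:    x{fab_cost_growth:,.0f}")
```

Under these idealized assumptions, two decades bring about 1,024 times more transistors per chip, but also a factory that costs about 32 times more to build. The trend stays affordable only as long as demand grows enough to absorb that bill.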

Is Moore’s Law Still Valid?

The truth is, no one knows if Moore's law will continue to represent reality. Transistor miniaturization seems to be hitting physical and economic limits, and industry leaders are divided on whether it can continue for much longer. Some, like Nvidia CEO Jensen Huang, believe the physical and economic barriers to transistor miniaturization have been reached, and that Moore's law is dead. Others, like former Intel CEO Pat Gelsinger, argue that miniaturization is not the only way, and point to new technologies to pull us out of this Moore's law funk.

Previous challenges to Moore's law

Believe it or not, we’ve had this discussion before. There have been several challenges along our miniaturization journey that have caused us to question Moore's law.

In the 1990s and early 2000s, as transistors shrank below 100nm, engineers faced significant heat dissipation challenges, which they initially thought would limit further miniaturization. However, advancements in transistor design, along with improvements in materials and chip-level thermal management, allowed continued progress despite these obstacles.

Then again in 2016, Intel struggled with the transition between 14nm and 10nm transistors. Manufacturing complexities and power leakage issues slowed development significantly. However, Intel and other semiconductor companies overcame these obstacles by refining extreme ultraviolet (EUV) lithography, optimizing materials, and improving transistor designs, allowing the industry to push forward once again. This transition, however, took closer to 5 years, leading researchers to question whether Moore’s law was still applicable.

Future innovations

There’s reason to believe the challenges we currently face can be overcome as well. Recent innovations are shifting away from the traditional approach of transistor miniaturization, instead exploring alternative architectures to enhance performance.

One promising advancement is 3D chip stacking, where engineers increase transistor density by building vertically rather than continuing to shrink them horizontally. This is an architectural change to avoid relying on miniaturization.

Quantum computing is an entirely new computational paradigm that makes use of qubits, which can exist in a superposition of states (not just 1 or 0), to solve problems that challenge classical computers. You can think of this as giving each “transistor” more abilities. This would increase processing power, without necessarily increasing the number of “transistors”, but rather changing what the “transistors” can do.

Some researchers are even investigating optical computing, which uses light-based processing to transmit data faster than conventional electronic systems. Photons move much faster than electrons, allowing information to travel through the system more quickly. So here, again, we wouldn’t be increasing the number of transistors. But the processing power would presumably be increased by increasing the speed of movement through them.

What Is the Future of Computing?

Even if Moore's law slows or breaks down altogether, the future of computing remains incredibly promising.

One exciting development is the introduction of new materials, such as graphene and indium gallium arsenide. These materials offer superior electrical properties and could eventually replace silicon as the foundation of next-generation chips. They can help address some of the fundamental limitations of conventional semiconductor technology.

There’s also a brand-new type of computing emerging called neuromorphic computing. Inspired by the structure and function of the human brain, neuromorphic chips use artificial neurons and synapses to operate in a way that mimics biological brains.

Artificial intelligence is also driving a transformation in computing. Advancements in AI and machine learning will likely depend not just on hardware improvements but also on software efficiency. As AI models grow increasingly sophisticated, so will the need for specialized processors designed to handle AI workloads.

Conclusion

Moore's law has been a reliable goal for technological progress for over 50 years, arguably shaping whole industries. Although we may be nearing capacity for the number of transistors we can pack into a space, innovations like 3D chip stacking, quantum computing, and neuromorphic architectures may continue to increase our processing power.

What do you think? Is Moore’s law dead? Do we need to rethink our expectations for processing power progress? Or will humanity once again find an innovation to continue the trend?

Beyond Moore's law, what other steadfast tech concepts might be falling by the wayside? Check out the DataFramed discussion, Is Big Data Dead? MotherDuck and the Small Data Manifesto with Ryan Boyd, Co-Founder at MotherDuck.

And if this type of thinking fascinates you, you may be interested in a job in AI. Check out these 7 Artificial Intelligence (AI) Jobs You Can Pursue in 2025.

I am a PhD with 13 years of experience working with data in a biological research environment. I create software in several programming languages including Python, MATLAB, and R. I am passionate about sharing my love of learning with the world.

FAQs

What is Moore's law?

Moore’s law is a prediction that the number of transistors on a computer chip doubles about every two years, leading to an exponential increase in processing power.

Who created Moore's law?

Moore's law was first stated by Gordon Moore, a co-founder of Intel.

How has Moore's law been achieved over the years?

The primary way that Moore's law has been achieved is through shrinking transistor technology.

What are some challenges to shrinking transistors?

As transistors continue to shrink, they face issues with heat dissipation and electron leak, among others.

Is Moore's law dead?

Industry leaders are divided about whether or not Moore's law is reaching its physical limit.