
Innovation is the quest for new scientific ways to solve or improve the limitations of state-of-the-art technologies. If we look at the current state of AI, it is extremely powerful and capable of doing things that just a few years ago seemed impossible. However, many of these systems are inefficient and resource-intensive.

As we explained in a separate article on Sustainable AI, training and running modern AI systems requires enormous amounts of electricity, water, and other resources, resulting in a rapidly increasing environmental footprint. Against this backdrop, it’s not surprising that Sam Altman, CEO of OpenAI, the company behind ChatGPT and other successful generative AI models, stated that the future of AI depends on energy breakthroughs.

However, while we wait for the long-awaited advent of new sources of (clean) energy, researchers are looking for new ways to reduce the energy bill of current AI technologies through novel, more efficient computing approaches.

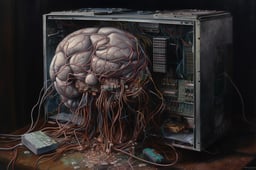

Here is where neuromorphic computing comes in. Neuromorphic computing is an approach to computing that is inspired by the structure and function of the human brain. It entails a paradigm shift that simulates the neural and synaptic structures and functions of the brain to process information. The goal is to create novel AI systems that go beyond today’s deep learning models in terms of capabilities and efficiency.

This article will tell you everything you need to know to get started in the exciting and promising field of neuromorphic computing. We will cover the basics of this computing paradigm, its main applications, as well as its advantages and challenges.

What Is Neuromorphic Computing?

Neuromorphic computing is a revolutionary approach to designing hardware and software that mimics the human brain, offering a more efficient and sustainable alternative to traditional computing for powering advanced AI systems.

The last decades have witnessed a continuous improvement in computer capabilities. From simple calculators to complex systems that leverage the power of AI and machine learning to predict all kinds of events with unprecedented accuracy and generate content (e.g., ChatGPT), the evolution of computers tells us a lot about modern society and how we got here.

However, modern computers are reaching their limits. With the rise of AI, current computing approaches have proven inefficient, slow, and energy-intensive. The amount of resources required to make AI systems ubiquitous is arguably excessive and difficult to afford from an environmental perspective.

Neuromorphic computing is a novel approach that tries to address the limitations of current machines. Despite the term not being new (it dates back to the 1980s when researchers Misha Mahowald and Carver Mead developed the first silicon retina and cochlea and the first silicon neurons and synapses that pioneered the neuromorphic computing paradigm), it is rapidly gaining momentum.

It proposes a complete revision of how hardware and software are conceived and developed. The goal is to design computer components that emulate the human brain and nervous system.

How Does Neuromorphic Computing Work?

To understand how neuromorphic computing works, we first need to understand how the human brain works.

In simple terms, processing and memory functions are performed by neurons and synapses. These neurons and synapses carry information to and from the brain at near-instantaneous speed and with minimal energy consumption. Such functions explain, for example, why we can rapidly distinguish a cat from a dog or stop our car when we see a pedestrian in the middle of the road.

The key to our outstanding capacity to navigate the complexities of reality is that processing and memory functions take place in the same location. This is fundamentally different from traditional computers, which have separate memory and processing units.

While this design proves extremely effective in complex operations, such as predicting whether it will rain tomorrow, it’s far from ideal in particular tasks that humans find easy, such as image recognition, reasoning, or walking.

The revolution in neuromorphic computing promises that these tasks will be performed on one chip, specifically designed to run so-called spiking neural networks (SNNs), which are a type of artificial neural network composed of spiking neurons and synapses. Check out our dedicated article to discover what neural networks are in more detail.

When run on neuromorphic chips, SNNs depart from conventional neural networks because they remove the need to transfer data between memory (RAM) and processing units (CPU or GPU). They are also event-driven, meaning that they only process information when relevant events occur (based on a spiking mechanism that relies on thresholds to activate neurons).
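To make the threshold-based spiking mechanism more concrete, here is a minimal sketch of a single spiking neuron in plain Python. It assumes a leaky integrate-and-fire model, a common simplification that is used here purely for illustration. The function name `simulate_lif` and the `threshold`, `leak`, and `reset` values are hypothetical choices, not parameters of any particular neuromorphic chip.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Returns a list of 0/1 values, one per input: 1 means the neuron spiked.
    All parameter values are illustrative assumptions.
    """
    potential = reset
    spikes = []
    for current in input_current:
        # Leak part of the stored potential, then integrate the new input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)   # threshold crossed: emit a spike
            potential = reset  # and reset the membrane potential
        else:
            spikes.append(0)   # below threshold: the neuron stays silent
    return spikes


inputs = [0.0, 0.6, 0.6, 0.0, 0.0, 0.3, 0.9, 0.0]
spikes = simulate_lif(inputs)
print(spikes)  # [0, 0, 1, 0, 0, 0, 1, 0]
```

The neuron only fires when enough input has accumulated to cross the threshold. The rest of the time it is silent, which is what allows downstream neurons to skip work.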

As a result, SNNs can speed up processing time and reduce the energy use involved. Such gains in latency can make a big difference, particularly in tasks where computers rely on real-time sensor data, such as the cameras in self-driving cars.
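One rough way to see where these savings could come from is to count the operations each approach performs. The sketch below is a toy comparison, not a benchmark. The helper names `dense_update` and `event_driven_update` and the weight values are made up for illustration, and real neuromorphic hardware is far more involved.

```python
def dense_update(weights, activations):
    """Conventional layer: every input is processed, even when it is zero."""
    operations = 0
    outputs = [0.0] * len(weights[0])
    for i, value in enumerate(activations):
        for j, weight in enumerate(weights[i]):
            outputs[j] += value * weight
            operations += 1
    return outputs, operations


def event_driven_update(weights, spikes):
    """Event-driven layer: only inputs that spiked trigger any work."""
    operations = 0
    outputs = [0.0] * len(weights[0])
    for i, spiked in enumerate(spikes):
        if not spiked:
            continue  # no event, no computation
        for j, weight in enumerate(weights[i]):
            outputs[j] += weight  # a spike is a unit event, so just add the weight
            operations += 1
    return outputs, operations


# Hypothetical layer with 4 inputs and 2 outputs; only one input spikes.
weights = [[0.5, 0.25], [0.5, 0.75], [1.0, 0.5], [0.25, 0.25]]
spikes = [0, 1, 0, 0]

dense_out, dense_ops = dense_update(weights, spikes)
event_out, event_ops = event_driven_update(weights, spikes)
print(dense_ops, event_ops)    # 8 2
print(dense_out == event_out)  # True
```

Both versions produce the same output, but the event-driven one touches only the weights attached to the input that spiked. The sparser the activity, the larger the gap.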

Applications of Neuromorphic Computing

Neuromorphic computing can advance a revolution with deep implications for the industry. If the promises of unprecedented efficiency gains come true and become mainstream, neuromorphic computing can be a game-changer for many applications, speeding up their development and implementation.

Below, you can find a list of the most compelling applications that can benefit from neuromorphic computing.

Artificial intelligence and machine learning

AI is one of the most disruptive and transformative technologies we’ve developed. The uses of AI are endless. However, as we have already mentioned, current computers are not well-placed to exploit the full potential of AI.

Fortunately, neuromorphic computing provides an innovative computing approach that has already proved particularly well suited for tasks and problems that require energy efficiency, parallel processing and adaptability, such as pattern recognition and sensory processing. For example, neuromorphic machine learning has been used for recognizing patterns in natural language or for facial recognition purposes.

Autonomous vehicles

A great example of how neuromorphic computing can be a game-changer in applications that require sensory processing is autonomous cars. Manufacturers of self-driving cars use multiple cameras and intelligent sensors to acquire images from the environment so that their self-driving cars can detect objects, lane markings, and traffic signs to drive safely.

Thanks to their high performance and low latency, neuromorphic computers can help improve autonomous vehicles' navigational capabilities, enabling quicker and more accurate decision-making, which is essential to minimize potential accidents, while allowing a significant reduction in energy use. This would ultimately result in extended battery life for cars.

If you are interested in the technicalities of autonomous vehicles, here is a DataCamp project to detect traffic signs with deep learning that may help you get started in the field.

Robotics

In the same vein as autonomous vehicles, neuromorphic computing is regarded as a key technology that will take robotics to a new stage of development. New robots powered with neuromorphic sensors may be better suited to address classical challenges in the field, such as how to enable real-time learning and decision-making in real-life scenarios, taking into account battery-related constraints.

Interested in how machines interact with their surroundings? Read this article to learn more about machine perception and smart sensors.

Edge computing

Most advanced AI systems rely on remote cloud servers for their heavy lifting. This approach can be problematic for applications that need quick responses, such as self-driving cars, or that run on devices with limited resources. Edge computing tries to solve this by bringing the AI capabilities directly to the device itself.

Advancements in neuromorphic computing are likely to revolutionize edge computing. Thanks to the low power consumption of neuromorphic chips, smartphones and all kinds of smart wearables, which normally have short battery life, will be able to perform many new tasks that previously required a lot of energy.

Advantages of Neuromorphic Computing

Here, we cover some of the most notable reasons why PwC recently called neuromorphic computing one of the eight essential emerging technologies right now.

Energy efficiency

Neuromorphic computing could be the foundation for a new phase in smart computing. Novel hardware and software components will translate into efficiencies in both data processing and power consumption. Such improvements will help companies drive operational costs down, develop faster and more accurate AI systems, and, hopefully, reduce their carbon footprint.

Parallel processing

Parallel processing refers to taking a computing task and breaking it up into smaller tasks that are spread across multiple processors or servers, which then complete them simultaneously and therefore faster. The characteristics of SNNs make them particularly well-suited for tasks that require parallel processing, such as pattern recognition.
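The general idea of splitting a task and combining the partial results can be sketched with Python's standard library. This is a conventional software illustration, not neuromorphic code. Threads on a single machine stand in for separate workers, and the helper names `count_matches` and `parallel_count` are hypothetical. Note that in standard CPython, threads show the structure of the approach rather than a real speed-up for CPU-bound work.

```python
from concurrent.futures import ThreadPoolExecutor


def count_matches(chunk, target):
    """Small task: count how often `target` appears in one chunk."""
    return sum(1 for item in chunk if item == target)


def parallel_count(data, target, workers=4):
    """Split `data` into chunks, count in each chunk concurrently, then combine."""
    chunk_size = max(1, -(-len(data) // workers))  # ceiling division
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_counts = pool.map(count_matches, chunks, [target] * len(chunks))
        return sum(partial_counts)


data = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
print(parallel_count(data, target=1))  # 6
```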

Real-time learning and adaptability

Neuromorphic computers based on SNNs are great at real-time learning and adaptability. This flexibility and versatility can be vital in AI applications that require continuous learning and quick decision-making, such as robots or autonomous vehicles.

Challenges and Limitations

While the benefits of neuromorphic computing are clear, the technology is relatively nascent and not ready to become mainstream. Here are some of the challenges ahead.

Lack of standards

While there is a growing number of neuromorphic projects out there, the majority of them reside in well-funded research labs and universities. That suggests that the technology is not yet ready to reach the market. There is still a lack of hardware and software standards, without which scalability is hardly possible.

Limited accessibility

A lack of standards basically means we still lack the words to name and describe the components and properties of neuromorphic computers. That explains why only a small number of experts worldwide are familiar with neuromorphic computing. As Andreea Danielescu, an associate director at Accenture Lab, says:

Even for people with extensive AI and machine learning backgrounds. It requires extensive knowledge in various domains, including neuroscience, computer science, and physics

Andreea Danielescu, Associate Director at Accenture Lab

Integration with existing systems

Finally, even if the promises of the technology prove true, making its way through the market and the technology ecosystem will take time and resources. Neuromorphic computing proposes a 180-degree revision in computing design. However, pretty much all deep learning applications out there are based on traditional neural networks, which are ultimately based on traditional hardware. This situation will create important difficulties in incorporating neuromorphic systems into current computing infrastructures.

The Future of Neuromorphic Computing

Without a paradigm shift, we won’t have enough resources to sustain the AI revolution. Daniel Bron, a tech consultant, points in this direction, suggesting the potential role of neuromorphic computing:

AI is not going to progress to the point it needs to with the current computers we have. Neuromorphic computing is way more efficient at running AI. Is it necessary? I can’t say that it’s necessary yet. But it’s definitely a lot more efficient.

Daniel Bron, Tech Consultant

Everyone in the AI industry is aware of the limitations of current computers and is looking for innovative technologies, including neuromorphic computing and quantum computing, to drive costs down and increase profits. That’s why the global neuromorphic computing market size is expected to grow by 20% in the next five years.

In the medium term, we can expect the development of hybrid conventional computers with novel neuromorphic chips that will improve the performance of current AI applications. In the long term, the combination of neuromorphic computing and quantum computing may take us to a completely new era of computing.

Conclusion

Neuromorphic computing is one of the top emerging technologies in 2025, and it’s worth keeping track of the latest developments in the industry. In the meantime, DataCamp will keep working to offer the best and most insightful courses and material.

Neuromorphic Computing FAQs

What is a spiking neural network?

A spiking neural network (SNN) is a type of artificial neural network that mimics the structure and function of neurons in the human brain.

What are the main limitations of current neural networks?

The latest developments in AI have shown the limits of modern computers, which are inefficient, slow, and energy-intensive when performing complex AI tasks.

What are the main applications of neuromorphic computing?

Some of the most promising applications of neuromorphic computing are AI, autonomous vehicles, robotics, and edge computing.

What are the challenges of neuromorphic computing?

Some of the most pressing challenges of neuromorphic computing are the lack of standards, limited accessibility, and integration problems with existing technologies.

I am a freelance data analyst, collaborating with companies and organisations worldwide in data science projects. I am also a data science instructor with 2+ years of experience. I regularly write data-science-related articles in English and Spanish, some of which have been published on established websites such as DataCamp, Towards Data Science and Analytics Vidhya. As a data scientist with a background in political science and law, my goal is to work at the interplay of public policy, law and technology, leveraging the power of ideas to advance innovative solutions and narratives that can help us address urgent challenges, namely the climate crisis. I consider myself a self-taught person, a constant learner, and a firm supporter of multidisciplinarity. It is never too late to learn new things.