Course

One of the main challenges in building an effective regression model is what we refer to as multicollinearity. Multicollinearity arises when two or more independent variables in a model are highly correlated, leading to unreliable statistical inferences. This can be a big problem if you need to accurately to intepret your regression coefficients or if you need to test your confidence in them.

Here, I will guide you through the key concepts of multicollinearity, how to detect it, and also how to address it. If you are new to linear regression, read our tutorial, Simple Linear Regression: Everything You Need to Know as a starting point, but be sure to follow up with Multiple Linear Regression in R: Tutorial With Examples, which teaches about regression with more than one independent variable, which is the place where multicollinearity can show up.

What is Multicollinearity?

Building accurate machine learning models is a challenging task because there are so many factors at play. The data should be of decent quality, volume, etc. Then you need to build the right type of machine learning algorithms. However, in between the two there is a stage of data preparation, which isn’t as glamorous as building predictive models, but for sure this stage is often the make or break deciding factor in the machine learning lifecycle process.

During data preparation, we watch out for multicollinearity, which occurs when independent variables in a regression model are correlated, meaning they are not independent of each other. This is not a good sign for the model, as multicollinearity often leads to distorting the estimation of regression coefficients, inflating standard errors, and thereby, reducing the statistical power of the model. This also makes it difficult to determine the importance of individual variables in the model.

Types of Multicollinearity

Multicollinearity can take two main forms, each affecting how the independent variables in a regression model interact and how reliable the resulting estimates are.

Perfect multicollinearity

Perfect multicollinearity happens when one of the independent variables in a regression model can be exactly predicted using one or more of the other independent variables. This means that there is a perfect relationship between them.

Imagine you have two variables, X1 and X2, and they are related with the equation: X1 = 2*X2 + 3, this basically means that the regression model won't be able to separate the effects of X1 and X2 because they are essentially telling the same story. In other words, one variable is a perfect linear function of the other.

Imperfect multicollinearity

In imperfect multicollinearity, variables are highly correlated, but not in a perfect, one-to-one manner like in case of perfect multicollinearity. The variables may share a high correlation, meaning when one variable changes, the other tends to change as well, but it's not an exact prediction.

In this situation, it’s not that the model will not give you the results. The only problem is that these results might be unstable, which means that even small changes in the data can lead to large changes in the estimated coefficients. This makes it harder to interpret the importance of each variable reliably.

Structural multicollinearity

Structural multicollinearity arises from the way the model has been built, not as much from their natural relationships. This often occurs when you include interaction terms or polynomial terms in your model.

For example, if you think that the effect size of one variable increases as another variable increases, you might consider adding an interaction term. However, the problem is that if these variables are already somewhat correlated, adding the interaction term could really overdo it and lead to multicollinearity issues.

Multicollinearity in Regression

Multicollinearity affects regression analysis by creating problems when you're trying to estimate the relationship between the independent variables (the predictors) and the dependent variable (the outcome). Specifically, multicollinearity increases the variance of the coefficient estimates, making them sensitive to minor changes in the model or the data.

When the coefficients become unstable, the standard errors become larger, which, in turn, can result in insignificant p-values even when the variables are truly important. It's important to know that multicollinearity doesn't affect the overall predictive power of the model. It does, however, affect the interpretation of the model because our model will have inflated errors and unstable estimates.

How to Detect Multicollinearity

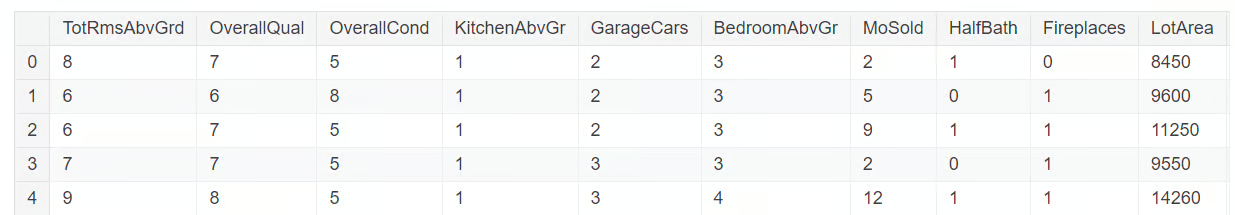

Detecting multicollinearity requires several diagnostic tools. We’ll explore these using a subset of the dataset taken from the Housing Prices Competition on Kaggle. The subset of the data that we will be using you can find at this GitHub repository. Let’s load and explore the dataset with the code below:

import pandas as pd

import numpy as np

import seaborn as sns

from statsmodels.stats.outliers_influence import variance_inflation_factor

df = pd.read_csv('mc_df.csv')

df.head()

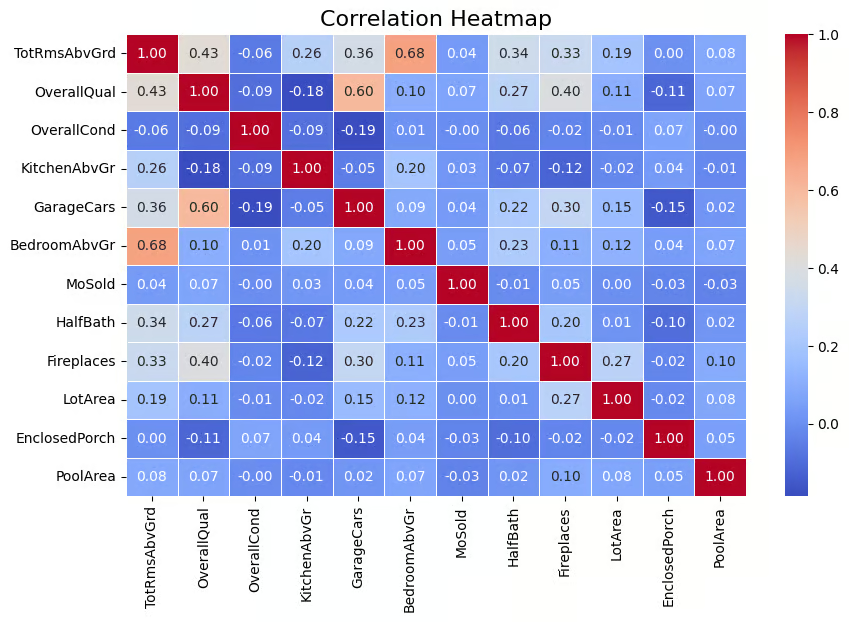

Correlation matrix

One widely used technique to detect multicollinearity is through a correlation matrix that helps visualize the strength of relationships between variables. The matrix shows the pairwise correlation coefficients between the variables, which indicates how strongly they are linearly related (values range from -1 to 1). A rule of thumb I use is that the absolute correlation values above 0.6 indicates strong multicollinearity.

Since multicollinearity is detected for independent variables, we need to remove the target variable, SalePrice, from our dataset. This is done with the code below.

multi_c_df = multi_c_df.drop('SalePrice', axis=1)Now we are all set for the correlation analysis. The code below calculates the correlation matrix for the DataFrame multi_c_df. After calculating the correlations, the code uses Seaborn's heatmap() function to visually represent the correlation matrix as a heatmap. The annot=True argument adds the numerical correlation values directly onto the heatmap.

# Correlation matrix

correlation_matrix = multi_c_df.corr()

# Set up the matplotlib figure

plt.figure(figsize=(10, 6))

# Create a heatmap for the correlation matrix

sns.heatmap(correlation_matrix, annot=True, cmap="coolwarm", fmt=".2f", linewidths=0.5)

# Title for the heatmap

plt.title("Correlation Heatmap", fontsize=16)

# Show the heatmap

plt.show()

Correlation matrix represented by heatmap. Image by Author

The output above shows that there is correlation among some of the independent variables. For example, BedroomAbvGr and TotRmsAbvGrd have a relatively high correlation (0.68). Also, GarageCars and OverallQual have a correlation of 0.60, indicating that they are also related. So there are independent variables that have decent correlation suggesting presence of multicollinearity for some, if not all, of these variables.

At this juncture, it’s very important to note that multicollinearity can arise even when there is no obvious pairwise correlation between variables, because one variable could be correlated with a linear combination of more than one other variable. This is why it’s important to also consider the variance inflation factor, which we will cover next.

Variance inflation factor (VIF)

Variance inflation factor (VIF) is one of the most common techniques for detecting multicollinearity. In simple terms, it gives a numerical value that indicates how much the variance of a regression coefficient is inflated due to multicollinearity. A VIF value greater than 5 indicates moderate multicollinearity, while values above 10 suggest severe multicollinearity.

Let’s explore this in our dataset. We’ll now compute the VIF value for each of these independent variables. This task is performed in the code below with the variance_inflation_factor() function.

# Calculate VIF for each numerical feature

vif_data = pd.DataFrame()

vif_data["feature"] = multi_c_df.columns

# Calculate VIF and round to 4 decimal places

vif_data["VIF"] = [round(variance_inflation_factor(multi_c_df.values, i), 4) for i in range(df.shape[1])]

# Sort VIF values in descending order

vif_data = vif_data.sort_values(by="VIF", ascending=False)

# Display the VIF DataFrame

print(vif_data)

VIF value for the numerical variables. Image by Author

You can see that there are several variables that have a VIF value greater than 10, indicating presence of multicollinearity.

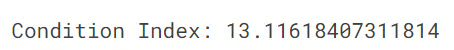

Condition index

Condition index is another diagnostic tool for detecting multicollinearity, with values above 10 indicating moderate multicollinearity and values above 30 indicating severe multicollinearity. The condition index works by checking how much the independent variables are related to each other by examining the relationships between their eigenvalues.

The code below calculates the condition index to check for multicollinearity in a regression model. It first computes the eigenvalues of the correlation matrix (which shows the relationships between variables). The condition index is then calculated by dividing the largest eigenvalue by the smallest one.

from numpy.linalg import eigvals

# Calculate the condition index

eigenvalues = eigvals(correlation_matrix)

condition_index = max(eigenvalues) / min(eigenvalues)

print(f'Condition Index: {condition_index}')

In our case, the condition index value is 13, which indicates moderate multicollinearity in the model. While this level of multicollinearity, as per the condition index, is not severe, it may still affect the precision of the regression coefficient estimates and make it more difficult to distinguish the individual contributions of the correlated variables. If you are interested in knowing more about eigenvalues, read our article, Eigenvectors and Eigenvalues: Key Insights for Data Science.

How to Address Multicollinearity

To effectively manage multicollinearity in the regression models, there are several techniques one can apply. These methods help ensure that the model remains accurate and interpretable, even when independent variables are closely related.

Removing redundant predictors

One of the simplest ways to deal with multicollinearity is to simply remove one of the highly correlated variables, often the one with the highest VIF value. This is effective, but the drawback is that it can result in the loss of useful information if not done carefully.

Combining variables

The other technique is that when two or more variables are highly correlated, we can combine them into a single predictor using techniques like Principal component analysis (PCA). This reduces the dimensionality of the model while retaining the most critical information. The major drawback is the loss of interpretability as it is difficult to explain the science and math behind PCA to a non-technical audience.

Ridge and lasso regression

For data scientists, applying regularization techniques with ridge and lasso regression is another popular technique to deal with the multicollinearity problem. These regularization techniques apply penalties to the regression model, shrinking the coefficients of correlated variables and therefore, mitigating the effects of multicollinearity.

Common Errors and Best Practices

When dealing with multicollinearity, there are few common mistakes that can lead to poor model performance. It’s important to be cognizant of these errors and follow best practices to create more reliable regression models.

Misinterpreting high VIF values

When you see a high variance inflation factor (VIF) for a variable, it’s tempting to immediately remove that variable from your model, assuming it's causing multicollinearity. However, this can be a mistake because even if a variable has a high VIF, it could still be very important for predicting the outcome. So if you remove it without checking its importance score, your model might perform worse. The key is to carefully evaluate whether the variable is essential before deciding to remove it.

Over-reliance on correlation matrices

A correlation matrix is a useful technique to identify the magnitude and direction of relationship between variables, but the problem is that it only shows linear relationships. What about the complex, non-linear relationships? Unfortunately, that won’t be captured in the matrix. So being dependent only on a correlation matrix is not going to give you a complete picture. That’s why it’s important to use other metrics such as VIF and condition index to get a more complete picture.

Alternatives to Address Multicollinearity

In addition to traditional approaches, there are several advanced methods also available to handle the multicollinearity issue. Some of these techniques are discussed below.

Feature selection methods

Automated feature selection methods like Recursive Feature Elimination (RFE) can be a good alternative. These methods analyze the importance of each predictor and automatically remove those that don’t add much value. This streamlines the process, making it easier to reduce multicollinearity without having to manually decide which variables to remove.

Collecting more data

Increasing the sample size can reduce multicollinearity by adding more variation to the dataset, making it easier to distinguish between the contributions of different predictors. So another solution is to simply collect more data. When the dataset size increases, it adds more variation to the variables, making it easier to distinguish between the effects of different predictors. This in turn helps in reducing the impact of multicollinearity.

Conclusion

Understanding and addressing multicollinearity is vital for building robust and interpretable regression models. By detecting multicollinearity using techniques like VIF, correlation matrices, and condition index, and resolving it with methods like lasso and ridge regression or removing redundant predictors, you can ensure reliable and meaningful model results. Always check for multicollinearity in your regression models and apply appropriate solutions to maintain the integrity of your analyses.

For further learning and a refresher on how to do linear regression in your favorite workspace, consider exploring the following sources:

- Essentials of Linear Regression in Python: Learn what formulates a regression problem and how a linear regression algorithm works in Python.

- Linear Regression in Excel: A Comprehensive Guide For Beginners: A step-by-step guide on performing linear regression in Excel, interpreting results, and visualizing data for actionable insights.

- How to Do Linear Regression in R: Learn linear regression, a statistical model that analyzes the relationship between variables using R.

Become an ML Scientist

Seasoned professional in data science, artificial intelligence, analytics, and data strategy.

Questions about Multicollinearity

What is multicollinearity?

Multicollinearity occurs when two or more independent variables in a regression model are highly correlated.

What is the variance inflation factor (VIF)?

VIF measures how much the variance of a regression coefficient is increased due to multicollinearity. A high VIF means a variable is highly related to others, which might make the model unstable and less reliable.

How does multicollinearity affect my regression model?

Multicollinearity inflates the standard errors of the regression coefficients, making it harder to identify which variables are significant.

What’s the difference between perfect and imperfect multicollinearity?

Perfect multicollinearity occurs when one variable is an exact linear combination of another, making it impossible to compute the model's coefficients. In contrast, imperfect multicollinearity happens when variables are highly correlated but not exactly. However, it can also still lead to unstable model estimates.

How does the condition index help with detecting multicollinearity?

If the condition index is above 10, that indicates moderate multicollinearity, but if it is above 30, it indicates serious multicollinearity.