
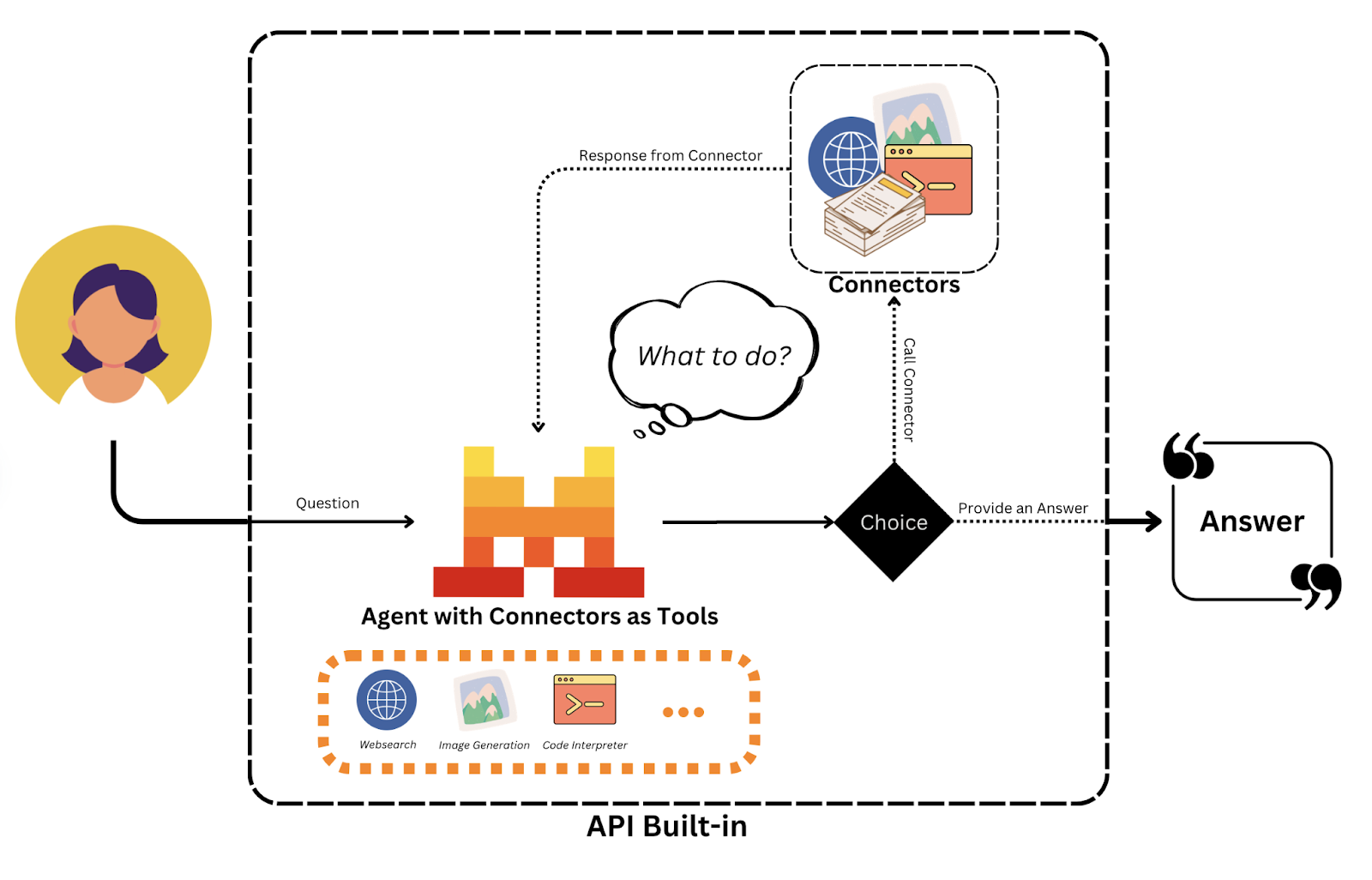

Mistral has released the Agents API, a framework that facilitates the development of intelligent, autonomous AI agents capable of performing complex, multi-step tasks. It extends beyond traditional language models by integrating tool usage, persistent memory, and orchestration capabilities.

In this tutorial, I’ll walk you through how to use the Mistral Agents API to build intelligent assistants capable of:

- Using tools like web search, code execution, and image generation

- Retaining memory across long conversations to preserve context

- Delegating tasks across multiple agents

You’ll learn the core concepts behind Mistral’s agentic framework, explore the built-in connectors and orchestration features, and build a working multi-agent assistant.


What Is Mistral’s Agents API?

The Mistral Agents API is a framework that enables large language models to perform multi-step actions, use external tools, and maintain memory across interactions. Unlike simple chat APIs, it empowers AI agents to:

- Access the web, execute code, generate images, or retrieve documents

- Coordinate with other agents via orchestration

- Maintain persistent conversations with long-term memory

Currently, only mistral-medium-latest (also known as mistral-medium-2505) and mistral-large-latest are supported, though support for more models may follow.

Core Concepts Explained

Before we get into the hands-on part, I think it’s useful to clarify the core concepts of the Agents API.

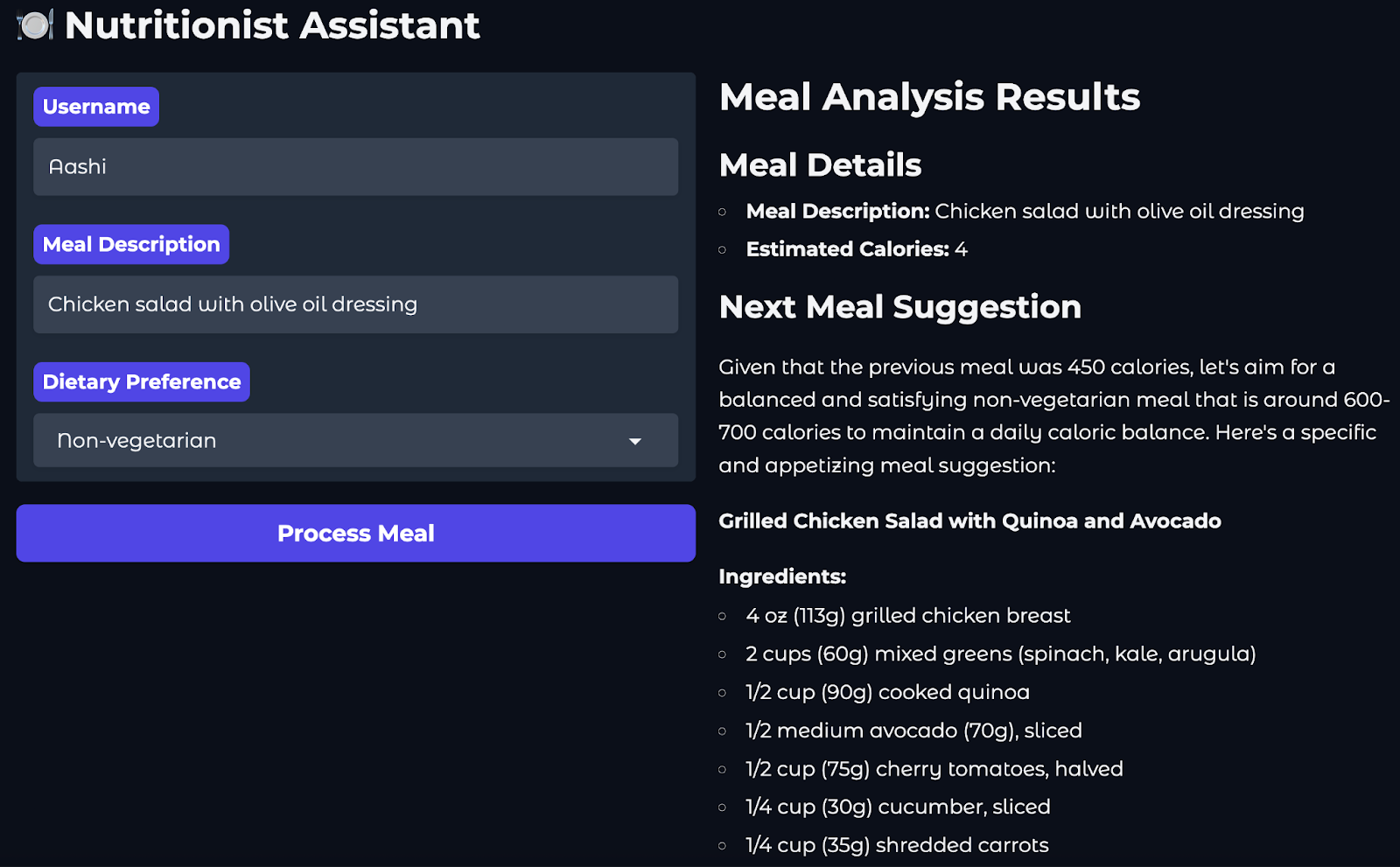

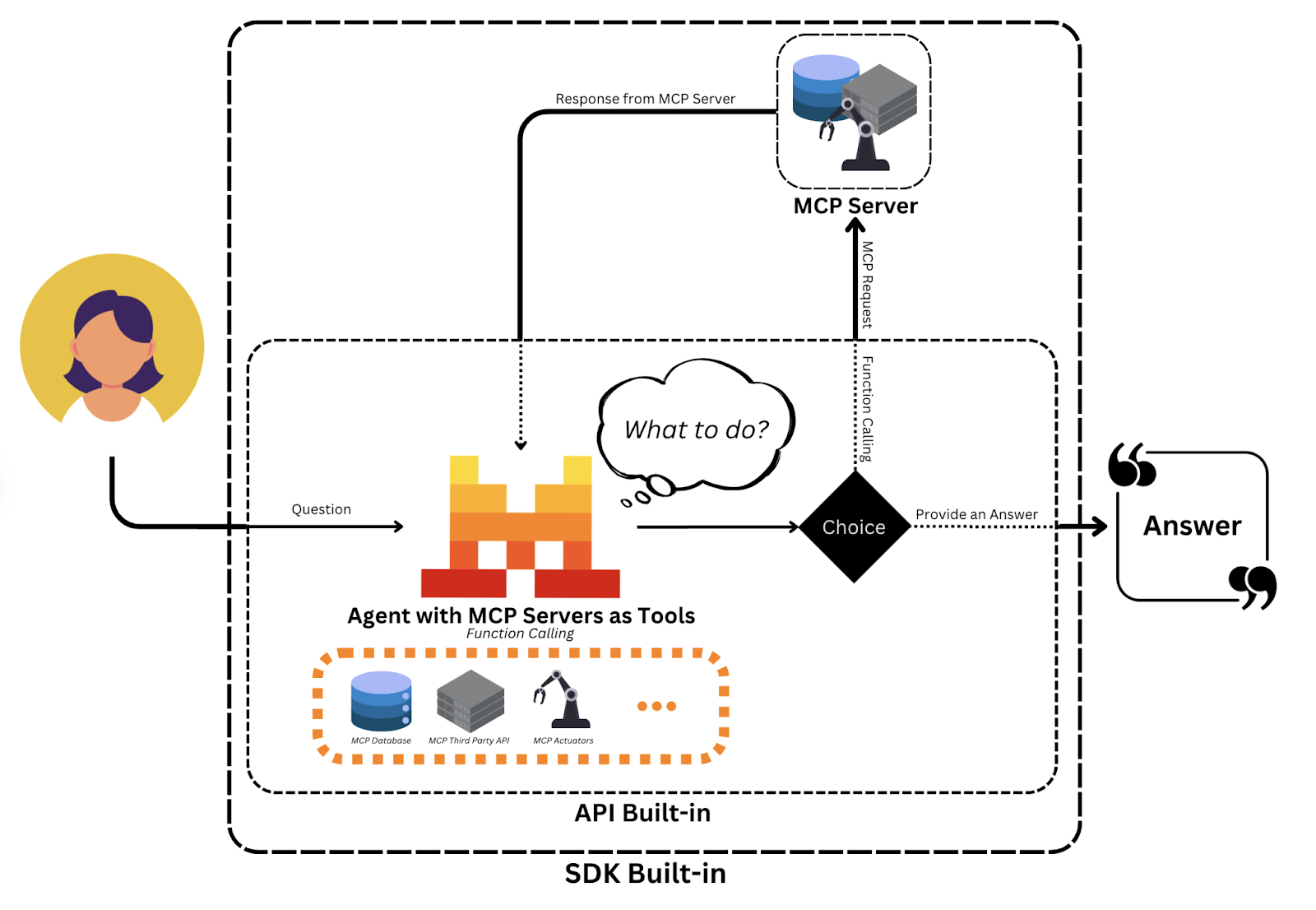

Source: Mistral AI

Agents

Agents in Mistral are model-powered personas enhanced with predefined instructions, tool access, and conversation state:

- Instructions guide the agent’s behavior (e.g., “You are a meal-logging nutrition coach”).

- Tools like web_search or image_generation let agents take real-world actions.

- Memory enables agents to track prior messages and tool outputs across turns, supporting contextual reasoning.

This abstraction turns a basic chat model into a multi-functional assistant that can engage in complex workflows.
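As a rough sketch of how instructions and tools come together, the helper below bundles them into the keyword arguments that client.beta.agents.create() receives later in this tutorial. The build_agent_spec name and its defaults are illustrative assumptions, not part of the SDK.

```python
def build_agent_spec(name, instructions, tools,
                     model="mistral-medium-latest", description=""):
    """Return keyword arguments for client.beta.agents.create() (hypothetical helper)."""
    if not instructions.strip():
        raise ValueError("An agent needs non-empty instructions")
    return {
        "model": model,
        "name": name,
        "description": description,
        "instructions": instructions,
        # Connectors are referenced by type, as in the tutorial's later code.
        "tools": [{"type": tool_type} for tool_type in tools],
    }


spec = build_agent_spec(
    name="Nutrition Coach",
    instructions="You are a meal-logging nutrition coach.",
    tools=["web_search", "image_generation"],
)
# With a configured client: agent = client.beta.agents.create(**spec)
```

Keeping the spec as a plain dictionary makes it easy to inspect or log before any API call is made.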

Conversations

Each interaction between a user and an agent is part of a conversation, which stores:

- Past messages and assistant responses

- Tool calls and results

- Streaming metadata for token-level visibility (optional)

These persistent conversations allow agents to carry out multi-turn tasks like planning, revising, or learning from earlier inputs.
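To make the multi-turn idea concrete, here is a minimal sketch of starting a conversation and then continuing it. The start() call matches the one used later in this tutorial; the append() call and the conversation_id attribute reflect my understanding of the SDK and should be treated as assumptions to verify against your installed version. A small stand-in client is included so the flow can run offline.

```python
from types import SimpleNamespace


def ask_with_follow_up(client, agent_id, first_question, follow_up):
    """Start a conversation, then continue it so earlier context is kept."""
    first = client.beta.conversations.start(agent_id=agent_id, inputs=first_question)
    # Assumption: the response exposes conversation_id and the SDK provides
    # conversations.append(); check both against your installed version.
    second = client.beta.conversations.append(
        conversation_id=first.conversation_id,
        inputs=follow_up,
    )
    return first, second


class FakeConversations:
    """Offline stand-in for the real client; it only records inputs."""

    def __init__(self):
        self.history = []

    def start(self, agent_id, inputs):
        self.history.append(inputs)
        return SimpleNamespace(conversation_id="conv_1", seen=list(self.history))

    def append(self, conversation_id, inputs):
        self.history.append(inputs)
        return SimpleNamespace(conversation_id=conversation_id, seen=list(self.history))


fake_client = SimpleNamespace(beta=SimpleNamespace(conversations=FakeConversations()))
first, second = ask_with_follow_up(
    fake_client, "agent_1", "I had a chicken salad.", "Suggest a lighter dinner."
)
```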

Connectors and custom tool support

Mistral enables easy tool integration through:

- Connectors: These are pre-integrated tools like web_search, code_execution, image_generation, and document_library that work out of the box.

- MCP tools: These let agents invoke custom, developer-hosted APIs as tools inside the agent workflow.

Think of connectors as built-in apps, and MCP Tools as plugins you write. Together, these allow agents to access both built-in capabilities and developer-defined endpoints for personalized logic.

Source: Mistral AI

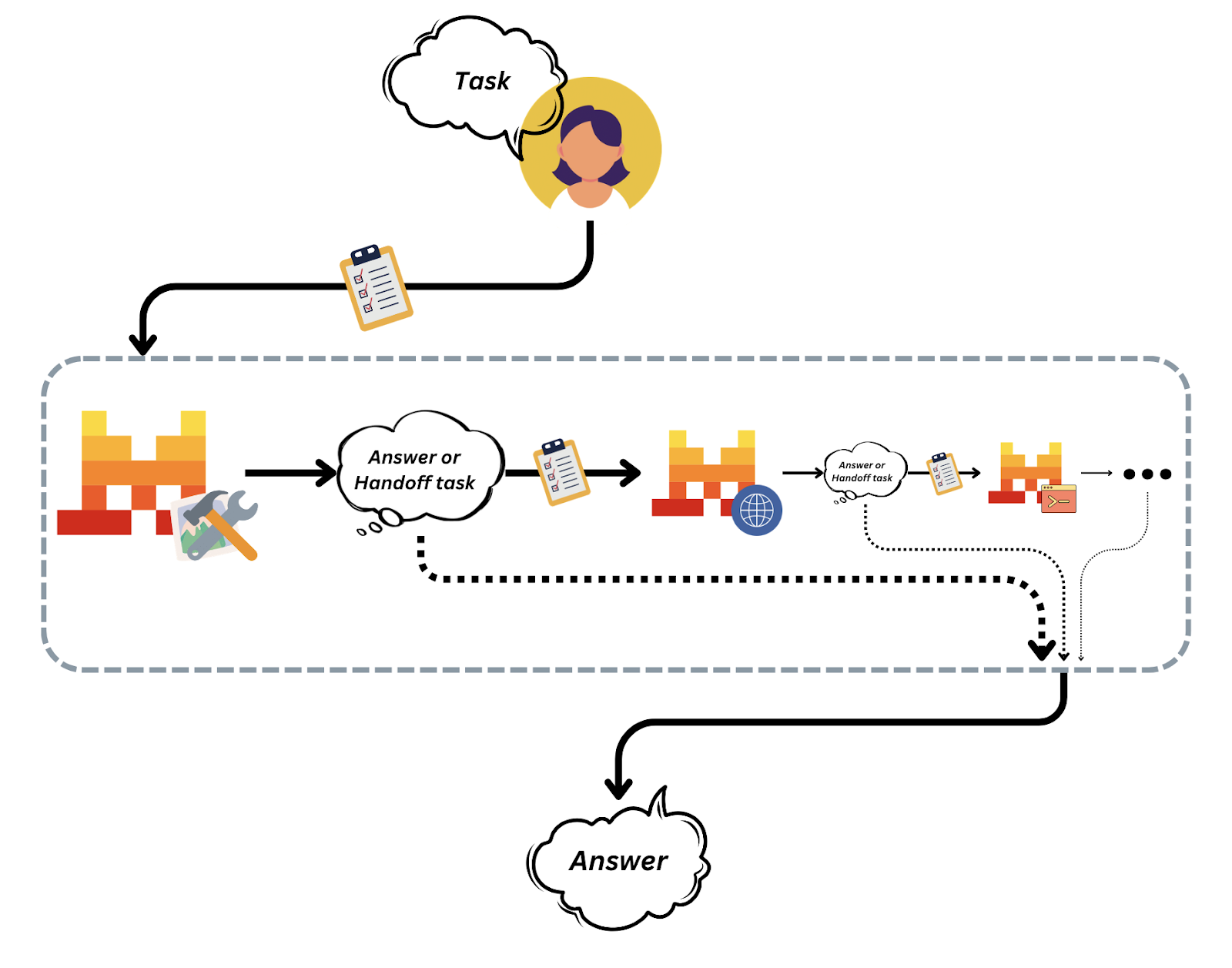

Entries and handoffs

Mistral tracks all actions in a conversation as structured “Entries,” enabling traceability and control over message exchanges and tool executions.

In an agent handoff, one agent delegates control to another, which lets you split a workflow into modular steps.

Source: Mistral AI

This orchestration architecture makes it easy to compose and manage complex multi-agent systems without losing transparency or control.
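The toy model below illustrates the idea of entries and handoffs in plain Python. It is not the SDK's data model: the Entry and ConversationLog classes and the entry labels are made up for illustration, and only the agent names are borrowed from this tutorial.

```python
from dataclasses import dataclass, field


@dataclass
class Entry:
    kind: str      # illustrative labels such as "message.input" or "agent.handoff"
    agent: str
    content: str


@dataclass
class ConversationLog:
    entries: list = field(default_factory=list)

    def record(self, kind, agent, content):
        self.entries.append(Entry(kind, agent, content))

    def handoff(self, from_agent, to_agent):
        # A handoff is just another entry, so the delegation stays traceable.
        self.record("agent.handoff", from_agent, f"delegated to {to_agent}")
        return to_agent


log = ConversationLog()
log.record("message.input", "user", "chicken salad with olive oil dressing")
active = log.handoff("web_search_agent", "food_logger_agent")
log.record("message.output", active, "Logged the meal")
```

Because every step, including the delegation itself, lands in one ordered list, you can replay or audit what each agent did.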

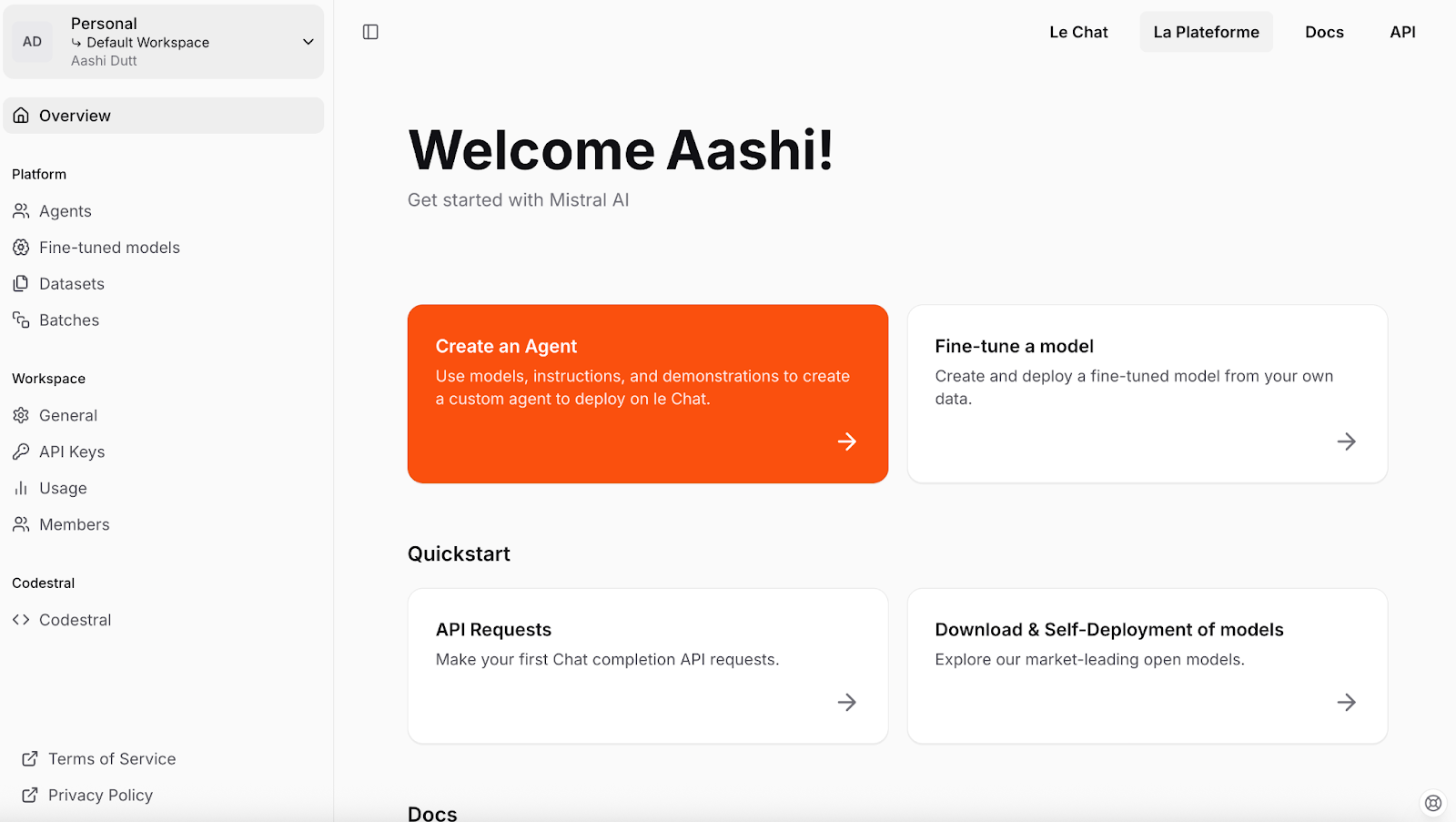

Setting Up Mistral API

Let’s get started with setting up Mistral. We’ll start by creating an API key for the project. Here are the steps you should follow:

- To get started, create a Mistral account or sign in at console.mistral.ai.

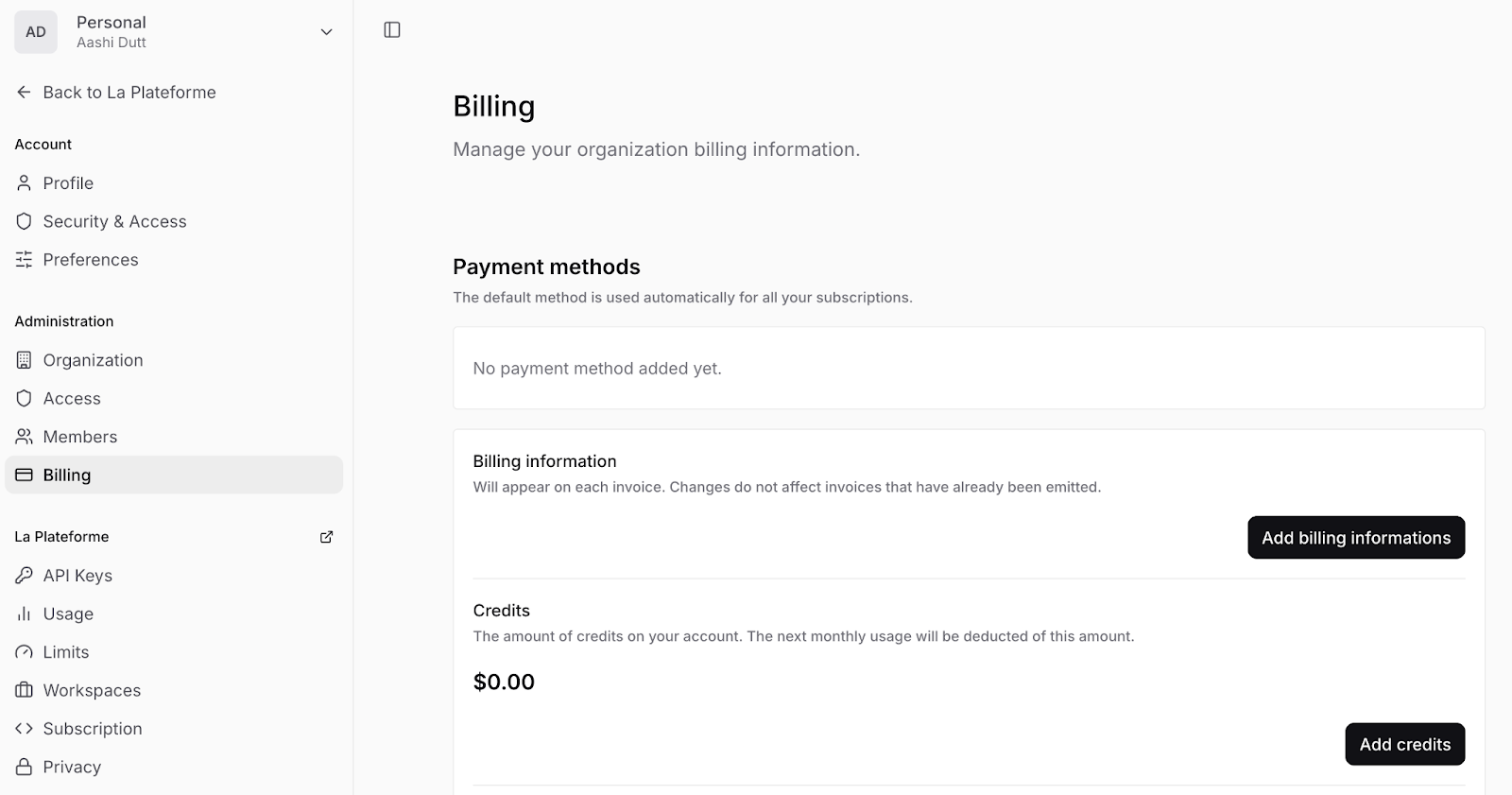

- Then, navigate to Workspace Settings (dropdown arrow near your name—top left corner) and click on Billing to add your payment information and activate payments on your account.

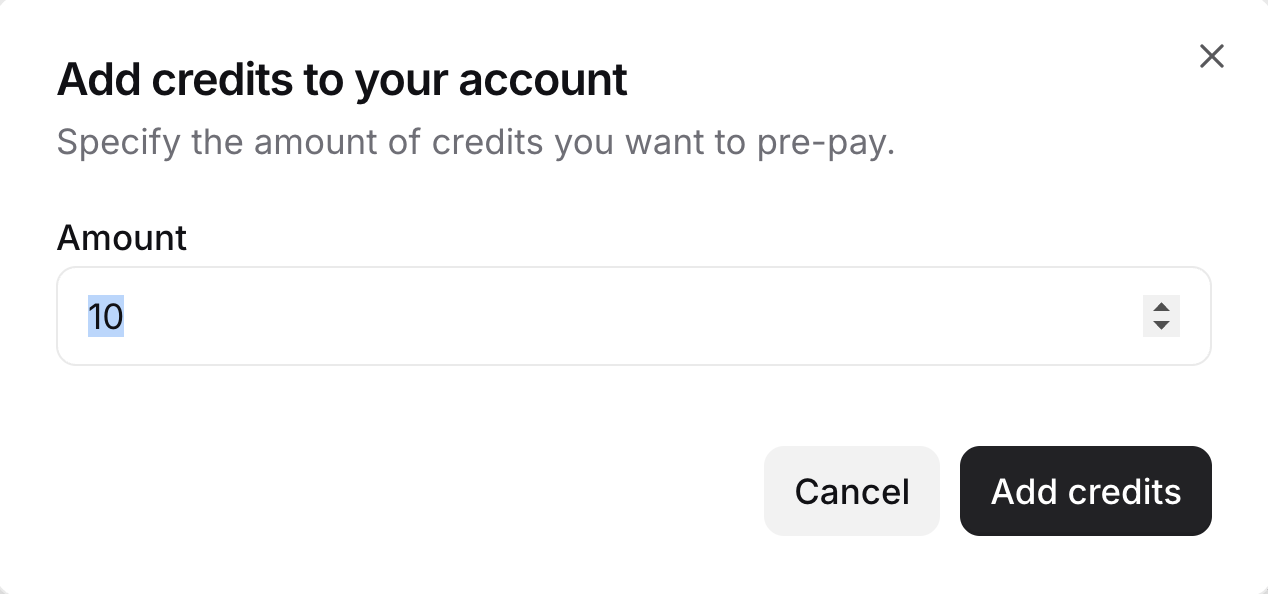

- Click on Add credits and fill in your billing information in the form. Then, fill in the number of credits you want. Note: You need to add at least 10 credits.

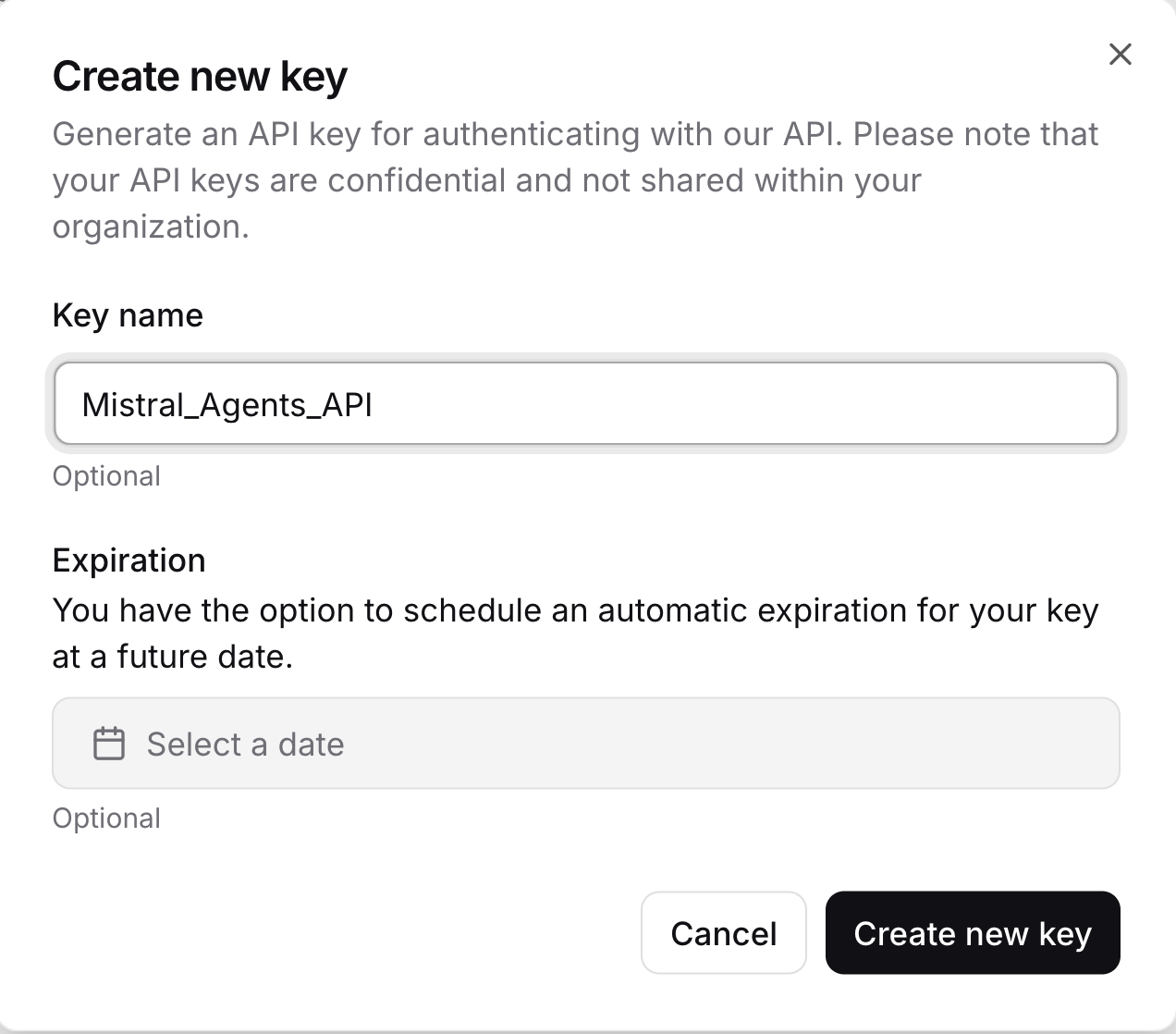

- Then, on the left side tab, click on API keys and make a new API key by clicking Create new key. Make sure to copy the API key, save it safely, and do not share it with anyone.

You now have a valid API key to interact with Mistral models and build intelligent agents.

Project Overview: Nutrition Coach with Mistral Agents

In this project, we’ll build an AI-powered Nutrition Coach using Mistral's Agents API. Here’s the user flow:

- The user submits a meal they just ate (e.g., "chicken salad with olive oil dressing").

- A web search agent uses the built-in connector to estimate calories for the meal.

- A logger agent records this entry under the user's name and timestamp.

- Finally, an image generation agent suggests a healthy follow-up meal, returning an image along with a description and recipe.

To provide a web interface where users can interact with the nutritionist, we’ll use Gradio.

Step 1: Prerequisites

Before we begin, ensure you have the following installed:

- Python 3.8+

- All required packages listed in requirements.txt:

python-dotenv==1.1.0
mistralai==0.0.7
typing-extensions==4.12.2
requests==2.31.0
gradio==4.34.0

Also, set up a .env file that contains your Mistral API key. This approach keeps your API keys secure and allows different team members or deployment environments to use different credentials without modifying the source code.

MISTRAL_API_KEY=YOUR_API_KEY

Note: It’s recommended to install these dependencies inside an isolated environment like conda, venv, or uv to avoid version conflicts with other Python projects.

Here are the commands to set up different environments:

Using venv

python -m venv mistral_env
source mistral_env/bin/activate # On Windows: mistral_env\Scripts\activate

Using Conda

conda create -n mistral_env python=3.10
conda activate mistral_env

Using uv

If you don’t have uv yet:

pip install uv

Then:

uv venv mistral_env
source mistral_env/bin/activate

To install all the above-mentioned dependencies, run the following command in your terminal:

pip install -r requirements.txt

Note: The code in this tutorial imports the Mistral client class and uses the client.beta namespace, both of which need a recent release of the SDK. If you encounter any errors related to mistralai while using any agent or connector, run the following command:

pip install --upgrade mistralai

This upgrades the mistralai library to its latest version.
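Before going further, it can help to fail fast when the key is missing rather than discover it on the first API call. The sketch below is one way to do that; require_api_key is a hypothetical helper, and it treats the YOUR_API_KEY placeholder from the .env example as "not set".

```python
import os


def require_api_key(env=None, name="MISTRAL_API_KEY"):
    """Return the API key or raise a clear error if it is missing (hypothetical helper)."""
    env = os.environ if env is None else env
    key = (env.get(name) or "").strip()
    # Treat the placeholder from the .env example as an unset key.
    if not key or key == "YOUR_API_KEY":
        raise RuntimeError(f"{name} is missing; add it to your .env file")
    return key
```

In the project you would call require_api_key() right after load_dotenv(), so a missing key stops the app with a readable message.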

Step 2: Setting Up Mistral Tools

In this section, we will prepare our tools and client setup for interaction with the Mistral Agents API. We’ll define tool schemas, initialize the client, and verify authentication. These tools will later be referenced when setting up specific agents like calorie estimators or image generators.

Step 2.1: Tool registry and initialization

First, we define our tool schemas, model selection, and client instantiation. Then, we build helper methods like create_chat_completion() and check_client(). The following code goes into the configs.py file inside the tools folder:

# Configuration file
from mistralai import Mistral, UserMessage, SystemMessage
from dotenv import load_dotenv
import os

load_dotenv()

# Mistral model
mistral_model = "mistral-large-latest"
client = Mistral(api_key=os.environ.get("MISTRAL_API_KEY"))

# Agent IDs
web_search_id = "web_search_agent"
food_logger_id = "food_logger_agent"
user_assistant_id = "user_assistant_agent"
image_generator_id = "image_generator_agent"

# Tool definitions
tools = {
    "log_meal": {
        "name": "log_meal",
        "description": "Log a user's meal with calorie count",
        "input_schema": {
            "type": "object",
            "properties": {
                "username": {"type": "string"},
                "meal": {"type": "string"},
                "calories": {"type": "number"},
                "timestamp": {"type": "string", "format": "date-time"}
            },
            "required": ["username", "meal", "calories", "timestamp"]
        }
    },
    "web_search": {
        "name": "web_search",
        "description": "Search the web for information about a meal",
        "input_schema": {
            "type": "object",
            "properties": {
                "query": {"type": "string"}
            },
            "required": ["query"]
        }
    }
}

We start by importing dotenv to load sensitive variables like the Mistral API key from a local .env file into Python’s environment. This keeps credentials secure and out of the source code. Then, we select the default model mistral-large-latest and instantiate a Mistral client using the API key.

Next, we define internal tool specifications (schemas) using JSON schema format. They help with the following:

- Simulate MCP tool behavior (though they are not registered via McpTool library)

- Describe expected inputs like "username", "meal", and "calories"

- Help ensure consistent input formatting across modules
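Since these schemas are plain dictionaries, nothing enforces them automatically. As a minimal sketch, the hand-rolled check below validates a payload against the log_meal schema. It covers only required fields and the two primitive types used here, and validate_payload is an illustrative name; a full JSON Schema validator would do more.

```python
LOG_MEAL_SCHEMA = {
    "type": "object",
    "properties": {
        "username": {"type": "string"},
        "meal": {"type": "string"},
        "calories": {"type": "number"},
        "timestamp": {"type": "string", "format": "date-time"},
    },
    "required": ["username", "meal", "calories", "timestamp"],
}

# Only the primitive types that appear in the schema above.
TYPE_MAP = {"string": str, "number": (int, float)}


def validate_payload(payload, schema):
    """Return a list of problems; an empty list means the payload looks fine."""
    errors = [f"missing: {key}" for key in schema["required"] if key not in payload]
    for key, value in payload.items():
        spec = schema["properties"].get(key)
        if spec is None:
            errors.append(f"unexpected field: {key}")
        # bool is a subclass of int in Python, so reject it explicitly.
        elif isinstance(value, bool) or not isinstance(value, TYPE_MAP[spec["type"]]):
            errors.append(f"wrong type for {key}: expected {spec['type']}")
    return errors
```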

Now, let’s add the helper functions that create chat completions, register tools and agents, and initialize the client.

def create_chat_completion(messages, model=mistral_model):
    # Convert dict-style messages to SDK message objects
    formatted_messages = []
    for msg in messages:
        if msg["role"] == "system":
            formatted_messages.append(SystemMessage(content=msg["content"]))
        else:
            # Any role other than "system" is sent as a user message
            formatted_messages.append(UserMessage(content=msg["content"]))
    return client.chat.complete(
        model=model,
        messages=formatted_messages
    )

# Register tools
def register_tools():
    for tool_name, tool in tools.items():
        print(f"Registered tool: {tool_name}")

# Register agents
def register_agents():
    print("Registered User Assistant Agent")
    print("Registered Web Search Agent")
    print("Registered Food Logger Agent")

# Initialize everything
def initialize():
    register_tools()
    register_agents()
    check_client()

# Check if the client is initialized
def is_client_initialized():
    return client is not None and os.environ.get("MISTRAL_API_KEY") is not None

def check_client():
    if not is_client_initialized():
        print("Mistral client is not initialized. Please check your API key.")
        return False
    print("Mistral client is initialized.")
    return True

initialize()

The create_chat_completion() function wraps a call to the client.chat.complete() method, which is part of the Mistral client SDK, to send messages to the model.

- It reformats raw dict-style messages into the UserMessage and SystemMessage objects required by the Mistral SDK.

- It also gives the app a single, standardized way to request completions.

The register_tools() and register_agents() functions help during local development by logging which tools and agents are active in the system. Meanwhile, the check_client() and is_client_initialized() functions ensure that the Mistral client has been properly instantiated and that the API key is available, helping avoid runtime errors during agent execution.

Step 2.2: Estimating calories with the web search agent

Now that we’ve initialized the base tools and client, let’s build a dedicated Nutrition Web Search Agent. This agent uses Mistral’s web_search connector to look up calorie estimates for meals in real-time. This code sits in the web_search.py file inside the tools folder.

# Web search tool
import re
from mistralai import Mistral, UserMessage, SystemMessage
from tools.configs import client

def search_calories(meal_desc):
    try:
        # Web search agent
        websearch_agent = client.beta.agents.create(
            model="mistral-medium-latest",
            description="Agent able to search for nutritional information and calorie content of meals",
            name="Nutrition Search Agent",
            instructions="You have the ability to perform web searches with web_search to find accurate calorie information. Return ONLY a single number representing total calories.",
            tools=[{"type": "web_search"}],
            completion_args={
                "temperature": 0.3,
                "top_p": 0.95,
            }
        )
        response = client.beta.conversations.start(
            agent_id=websearch_agent.id,
            inputs=f"What are the total calories in {meal_desc}?"
        )
        print("Raw response:", response)
        # Extract the number from the response
        if hasattr(response, 'outputs'):
            for output in response.outputs:
                if hasattr(output, 'content'):
                    if isinstance(output.content, str):
                        numbers = re.findall(r'\d+', output.content)
                        if numbers:
                            return int(numbers[0])
                    elif isinstance(output.content, list):
                        for chunk in output.content:
                            if hasattr(chunk, 'text'):
                                numbers = re.findall(r'\d+', chunk.text)
                                if numbers:
                                    return int(numbers[0])
        print("No calorie information found in web search response")
        return 0
    except Exception as e:
        print(f"Error during web search: {str(e)}")
        return 0

In the above code, the search_calories() function uses the built-in web search connector from the Mistral Agents API, which enables agents to perform live web queries such as calorie lookups, overcoming the limitations of static model training. Here is how it works:

- Agent creation: It first creates a “Nutrition Search Agent” using the mistral-medium-latest model, which is equipped with the web_search tool and is instructed to return only calories.

- Query execution: The agent is then used to start a conversation where it is asked about the total calories in the provided meal description.

- Response parsing: The response from the agent is parsed using regular expressions to extract the first numeric value (which should be the calorie count).

- Fallbacks: If no valid number is found or an error occurs, the function logs the issue and returns zero as a fallback.
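Taking the first run of digits works when the agent follows instructions, but it can misfire on replies like "A 2-cup serving has about 1,250 calories". As a hedged alternative, the sketch below prefers a number followed by a calorie unit and falls back to the first number otherwise. The extract_calories name and the patterns are my own; note that it returns None rather than 0 when nothing is found.

```python
import re

# A number (optionally with thousands separators) followed by a calorie unit.
_WITH_UNIT = re.compile(
    r"(\d{1,3}(?:,\d{3})+|\d+)(?:\.\d+)?\s*(?:kcal|calories|calorie|cal)\b",
    re.IGNORECASE,
)
# Fallback: the first number of any kind.
_ANY_NUMBER = re.compile(r"\d{1,3}(?:,\d{3})+|\d+")


def extract_calories(text):
    """Return the calorie count found in text as an int, or None if there is none."""
    match = _WITH_UNIT.search(text)
    number = match.group(1) if match else None
    if number is None:
        fallback = _ANY_NUMBER.search(text)
        number = fallback.group(0) if fallback else None
    return int(number.replace(",", "")) if number else None
```

Returning None keeps "no answer" distinct from a genuine zero, at the cost of one extra check in the caller.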

Without web_search, most models can't answer questions about recent events or domain-specific meals not seen in their training data. With it, we can query nutritional information, restaurant menus, or news articles in real time.

There are two variants of web search:

- web_search: A lightweight, general-purpose search engine connector (used in our project).

- web_search_premium: An enhanced version that includes news provider verification and broader context gathering.

Next, we’ll explore fallback calorie estimation using prompt-only logic.

Step 2.3: Estimation, logging, and meal suggestion

In this section, we implement three essential functions that act as fallbacks or enhancements. The following code sits inside the next.py file in the tools folder.

# Meal suggestions
from datetime import datetime
from tools.configs import client, create_chat_completion
from mistralai import SystemMessage, UserMessage
import re

def estimate_calories(meal_desc):
    try:
        # Messages for calorie estimation
        messages = [
            SystemMessage(content="You are a nutrition expert. Estimate calories in meals. Return ONLY a single number representing total calories."),
            UserMessage(content=f"Estimate calories in: {meal_desc}")
        ]
        response = client.chat.complete(
            model="mistral-small-latest", # Using smaller model to avoid rate limits
            messages=messages,
            temperature=0.1,
            max_tokens=50
        )
        content = response.choices[0].message.content
        numbers = re.findall(r'\d+', content)
        if numbers:
            return int(numbers[0])
        return 0
    except Exception as e:
        print(f"Error during calorie estimation: {str(e)}")
        return 0

def log_meal(username, meal, calories):
    try:
        # Messages for meal logging
        messages = [
            SystemMessage(content="You are a meal logging assistant. Log the user's meal with calories."),
            UserMessage(content=f"Log this meal: {meal} with {calories} calories for user {username} at {datetime.utcnow().isoformat()}")
        ]
        response = client.chat.complete(
            model="mistral-small-latest", # Using smaller model to avoid rate limits
            messages=messages,
            temperature=0.1,
            max_tokens=100
        )
        return response.choices[0].message.content.strip()
    except Exception as e:
        print(f"Error during meal logging: {str(e)}")
        return f"Logged {meal} ({calories} calories) for {username}"

def suggest_next_meal(calories, dietary_preference):
    try:
        # Messages for meal suggestion
        messages = [
            SystemMessage(content="You are a nutrition expert. Suggest healthy meals based on calorie intake and dietary preferences."),
            UserMessage(content=f"Suggest a {dietary_preference} meal that would be a good next meal after consuming {calories} calories. Make it specific and appetizing.")
        ]
        response = client.chat.complete(
            model="mistral-small-latest",
            messages=messages,
            temperature=0.7
        )
        return response.choices[0].message.content.strip()
    except Exception as e:
        print(f"Error during meal suggestion: {str(e)}")
        return "Unable to suggest next meal at this time."

This script defines the core backend functions that power our nutrition workflow, from calorie estimation to follow-up meal planning. These functions use lightweight Mistral models and standard chat completion, with no agent creation or connectors, so they still work when web search is unavailable and are less likely to hit rate limits. Here is what each function does:

- estimate_calories(): This function is used as a fallback if the web_search connector fails or is unavailable.

  - It sends a system message that sets the assistant's role as a nutrition expert, along with a user prompt containing the meal the user wants to log.

  - The model is used with a low temperature=0.1 for near-deterministic output. This makes responses more consistent and repeatable for the same input, which suits tasks like calorie estimation and logging, where stable and factual outputs are preferred over creative variation.

  - It parses out the first number (using regex) from the model’s response.

- log_meal(): This function logs a user’s meal, including a timestamp.

  - It uses a chat completion with the instruction and returns the assistant’s formatted confirmation message.

  - This gives a lightweight confirmation without requiring a dedicated backend database. Note that nothing is persisted beyond the returned message.

- suggest_next_meal(): This function recommends the user’s next meal based on prior calorie intake and their dietary profile.

  - The model is invoked with temperature=0.7 to encourage more creative suggestions.

  - The response is then stripped and returned as the next meal idea.

Together, these functions allow calorie estimation, log confirmation, and healthy recommendations without needing external APIs. In the next step, we’ll visualize food suggestions with Mistral’s image generation connector.
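If you want meal entries to survive a restart, one simple option is to append them to a JSON Lines file alongside the model's confirmation. The sketch below is an assumption-laden illustration, not part of the original project: append_meal_log, read_meal_log, and the file layout are all hypothetical, and it uses a timezone-aware UTC timestamp.

```python
import json
from datetime import datetime, timezone
from pathlib import Path


def append_meal_log(path, username, meal, calories):
    """Append one meal entry as a JSON line and return the stored entry."""
    entry = {
        "username": username,
        "meal": meal,
        "calories": calories,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    path = Path(path)
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("a", encoding="utf-8") as handle:
        handle.write(json.dumps(entry) + "\n")
    return entry


def read_meal_log(path):
    """Return all stored entries, or an empty list if the file does not exist."""
    path = Path(path)
    if not path.exists():
        return []
    with path.open(encoding="utf-8") as handle:
        return [json.loads(line) for line in handle if line.strip()]
```

One entry per line keeps appends cheap and lets you read the history back without parsing a growing JSON array.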

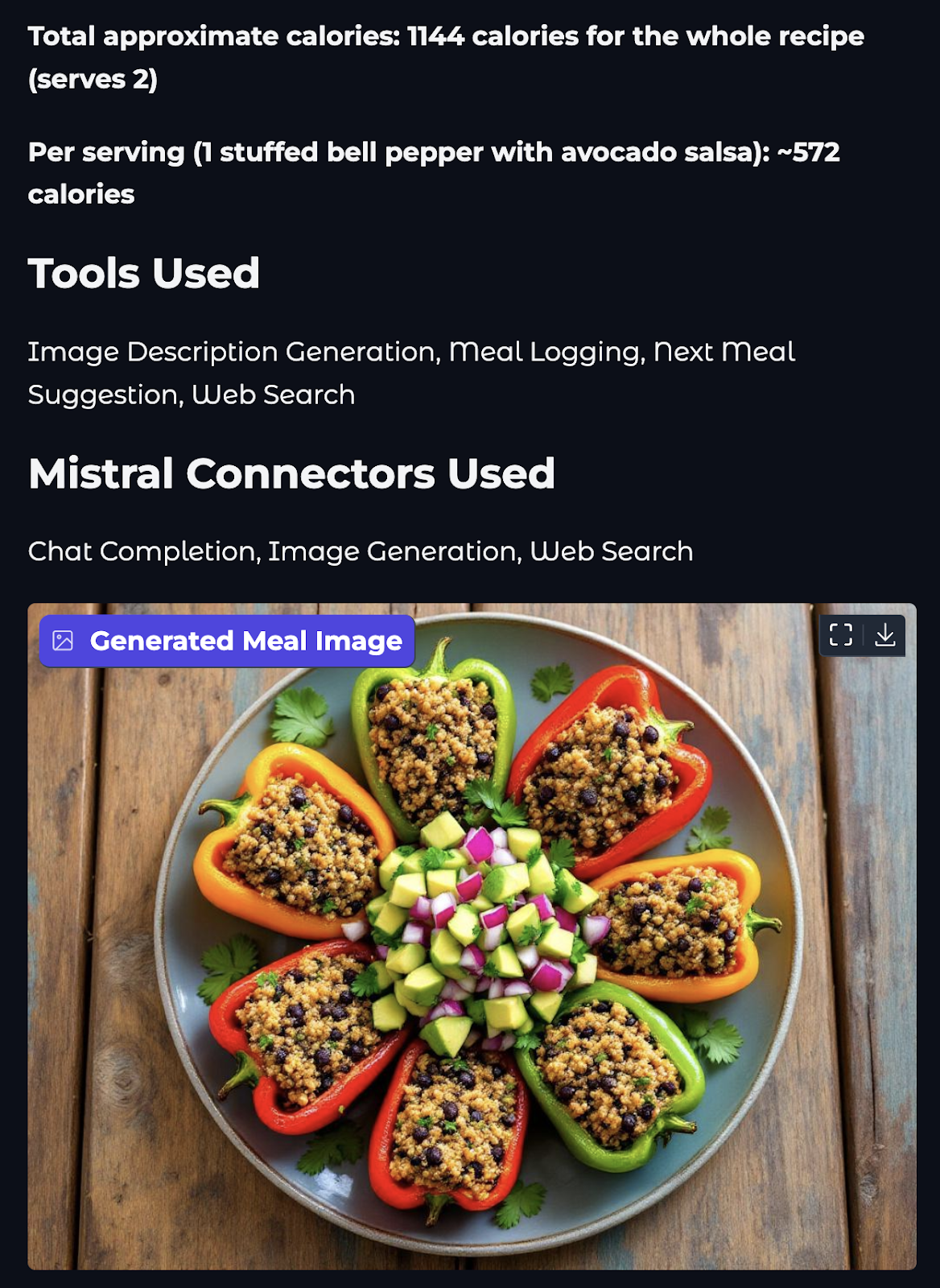

Step 2.4: Generating visuals with the image generation connector

Let’s now add a visual layer to our assistant. In this step, we’ll use Mistral’s built-in image_generation connector to generate an appetizing image of the next suggested meal.

# Image generation tool
import os
import re
from mistralai import Mistral, UserMessage, SystemMessage
from mistralai.models import ToolFileChunk
from tools.configs import client
from datetime import datetime

def generate_food_image(meal_description):
    try:
        print(f"Starting image generation for: {meal_description}")
        # Image generation agent
        image_agent = client.beta.agents.create(
            model="mistral-medium-latest",
            name="Food Image Generation Agent",
            description="Agent used to generate food images.",
            instructions="Use the image generation tool to create appetizing food images. Generate realistic and appetizing images of meals.",
            tools=[{"type": "image_generation"}],
            completion_args={
                "temperature": 0.3,
                "top_p": 0.95,
            }
        )
        print("Created image generation agent")
        response = client.beta.conversations.start(
            agent_id=image_agent.id,
            inputs=f"Generate an appetizing image of: {meal_description}",
            stream=False
        )
        print("Got response from image generation")
        os.makedirs("generated_images", exist_ok=True)
        # Process the response and save images
        timestamp = datetime.now().strftime("%Y%m%d_%H%M%S")
        image_paths = []
        if hasattr(response, 'outputs'):
            print(f"Processing {len(response.outputs)} outputs")
            for output in response.outputs:
                if hasattr(output, 'content'):
                    print(f"Processing content of type: {type(output.content)}")
                    if isinstance(output.content, list):
                        for i, chunk in enumerate(output.content):
                            print(f"Processing chunk {i} of type: {type(chunk)}")
                            if isinstance(chunk, ToolFileChunk):
                                print(f"Found ToolFileChunk with file_id: {chunk.file_id}")
                                try:
                                    # Download the image
                                    file_bytes = client.files.download(file_id=chunk.file_id).read()
                                    # Save the image
                                    image_path = f"generated_images/meal_{timestamp}_{i}.png"
                                    with open(image_path, "wb") as file:
                                        file.write(file_bytes)
                                    image_paths.append(image_path)
                                    print(f"Successfully saved image to: {image_path}")
                                except Exception as e:
                                    print(f"Error processing image chunk: {str(e)}")
                    else:
                        print(f"Content is not a list: {output.content}")
        if image_paths:
            print(f"Successfully generated {len(image_paths)} images")
            return image_paths[0] # Return the first image path
        else:
            print("No images were generated")
            return "No image was generated"
    except Exception as e:
        print(f"Error during image generation: {str(e)}")
        if hasattr(e, '__dict__'):
            print(f"Error details: {e.__dict__}")
        return f"Error generating image: {str(e)}"

The generate_food_image() function generates a visual representation of a meal using Mistral’s built-in image_generation tool. Here's what each part does:

- Agent creation: A new agent is instructed to create realistic and appetizing food visuals.

- Conversation start: A prompt is sent asking the agent to generate an image of the given meal_description that is generated by the next meal agent.

- Response handling: The function checks if the response contains image chunks. If yes, it downloads each image via the Mistral client’s files.download() method. The images are saved locally in a generated_images/ folder with timestamped filenames.

- Output: It returns the path to the first successfully saved image, or a fallback error message if generation fails.

This tool adds a visual layer to our nutrition coach, making meal suggestions more engaging and realistic.
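The saving step can be pulled out into a small helper, which makes it testable without calling the API. The sketch below assumes you already have the image bytes in hand. save_image_bytes is a hypothetical name, and the filename pattern mirrors the one used in generate_food_image().

```python
from datetime import datetime
from pathlib import Path


def save_image_bytes(file_bytes, index, out_dir="generated_images", now=None):
    """Write image bytes to a timestamped .png file and return its path."""
    now = now or datetime.now()
    folder = Path(out_dir)
    folder.mkdir(parents=True, exist_ok=True)
    target = folder / f"meal_{now.strftime('%Y%m%d_%H%M%S')}_{index}.png"
    target.write_bytes(file_bytes)
    return str(target)
```

Passing now explicitly is optional; it mainly makes filenames predictable when testing.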

Step 3: Full Nutritionist Pipeline

Now that we’ve built the individual tools and agent capabilities, it’s time to put them into a single pipeline. This step glues everything together into a working assistant that handles:

- Calorie lookup (via web or estimation)

- Logging (user, meal, calories, timestamp)

- Suggesting a follow-up meal

- Visualizing the meal with an image

All of these steps are handled by calling each function from our previous files in the right order. The code for this pipeline sits outside the tools folder in the agent.py file.

# Pipeline logic file
# Import from the modules directly so no tools/__init__.py re-exports are needed
from tools.next import estimate_calories, log_meal, suggest_next_meal
from tools.web_search import search_calories
from tools.image_gen import generate_food_image
from datetime import datetime
import time

def run_nutritionist_pipeline(username, meal_desc, dietary_preference):
    tools_used = set()
    print(f"Searching for calories for: {meal_desc}")
    calories_text = search_calories(meal_desc)
    tools_used.add("Web Search")
    # If web search fails, fall back to estimation
    if calories_text == 0:
        print("Web search failed, falling back to estimation...")
        time.sleep(1) # Add delay to avoid rate limits
        calories_text = estimate_calories(meal_desc)
        tools_used.add("Calorie Estimation")
    estimated_calories = calories_text
    print(f"Estimated calories: {estimated_calories}")
    print(f"Logging meal for {username}...")
    time.sleep(1) # Add delay to avoid rate limits
    log_response = log_meal(username, meal_desc, estimated_calories)
    tools_used.add("Meal Logging")
    print("Generating next meal suggestion...")
    time.sleep(1)
    suggestion = suggest_next_meal(estimated_calories, dietary_preference)
    tools_used.add("Next Meal Suggestion")
    print("Generating image description for suggested meal...")
    time.sleep(1)
    meal_image = generate_food_image(suggestion)
    tools_used.add("Image Description Generation")
    return {
        "logged": log_response,
        "next_meal_suggestion": suggestion,
        "meal_image": meal_image,
        "tools_used": sorted(tools_used)
    }

The above code coordinates the full nutrition assistant workflow by chaining multiple agent tools into a single pipeline. Let’s break down the code above in more detail:

- The function run_nutritionist_pipeline() accepts the username, meal_desc, and dietary_preference as inputs to drive the recommendation process.

- First, it attempts to determine the meal’s calorie content using the search_calories() function powered by Mistral's web search connector. If this fails, it falls back to an estimation using a lightweight model via estimate_calories().

- The obtained calorie value is then logged using the log_meal() function, which tags the entry with a timestamp and associates it with the user.

- Based on the consumed calories and dietary preference, the suggest_next_meal() function suggests a follow-up meal using prompt-based reasoning.

- Finally, the generate_food_image() function uses Mistral’s image generation connector to produce a visual representation of the recommended dish.

- Throughout the process, time.sleep(1) is used to introduce brief pauses between requests to avoid triggering rate limits during API calls.

- The function returns a dictionary containing the meal logging confirmation, next meal suggestion text, generated image path, and a list of tools that were used in this interaction.

This pipeline combines multiple capabilities such as search, estimation, logging, suggestion, and visualization into an AI-powered nutrition experience.
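The "try web search, then fall back to estimation" step generalizes to an ordered list of estimators. The sketch below shows that pattern with stand-in functions. first_positive is a hypothetical helper, and the sleep function is injectable so the pause can be skipped in tests.

```python
import time


def first_positive(meal_desc, estimators, pause_seconds=1.0, sleep=time.sleep):
    """Try each (label, function) pair in order; return (calories, label) for the first hit."""
    for position, (label, estimate) in enumerate(estimators):
        if position > 0:
            sleep(pause_seconds)  # brief pause between API-backed attempts
        try:
            calories = estimate(meal_desc)
        except Exception as exc:
            print(f"{label} failed: {exc}")
            continue
        if calories and calories > 0:
            return calories, label
    return 0, None
```

In the project, the list would be [("Web Search", search_calories), ("Calorie Estimation", estimate_calories)], and the returned label tells you which tool actually produced the number.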

Step 4: Creating a User-Friendly Interface with Gradio

Now that the backend logic is complete, let’s wrap it up into a clean, user-friendly frontend using Gradio.

# Main files

import gradio as gr

from agent import run_nutritionist_pipeline

import os

import re

def process_meal(username, meal_desc, dietary_preference):

# Run the pipeline

result = run_nutritionist_pipeline(username, meal_desc, dietary_preference)

# Extract calories from the logged meal response

calories = "Not available"

if result['logged']:

calorie_match = re.search(r'(\d+)\s*calories', result['logged'], re.IGNORECASE)

if calorie_match:

calories = calorie_match.group(1)

else:

paren_match = re.search(r'\((\d+)\)', result['logged'])

if paren_match:

calories = paren_match.group(1)

else:

number_match = re.search(r'\b(\d+)\b', result['logged'])

if number_match:

calories = number_match.group(1)

# Map tool names to Mistral connectors

connector_map = {

"Web Search": "Web Search",

"Image Description Generation": "Image Generation",

"Meal Logging": "Chat Completion",

"Next Meal Suggestion": "Chat Completion"

}

connectors_used = set()

for tool in result['tools_used']:

if tool in connector_map:

connectors_used.add(connector_map[tool])

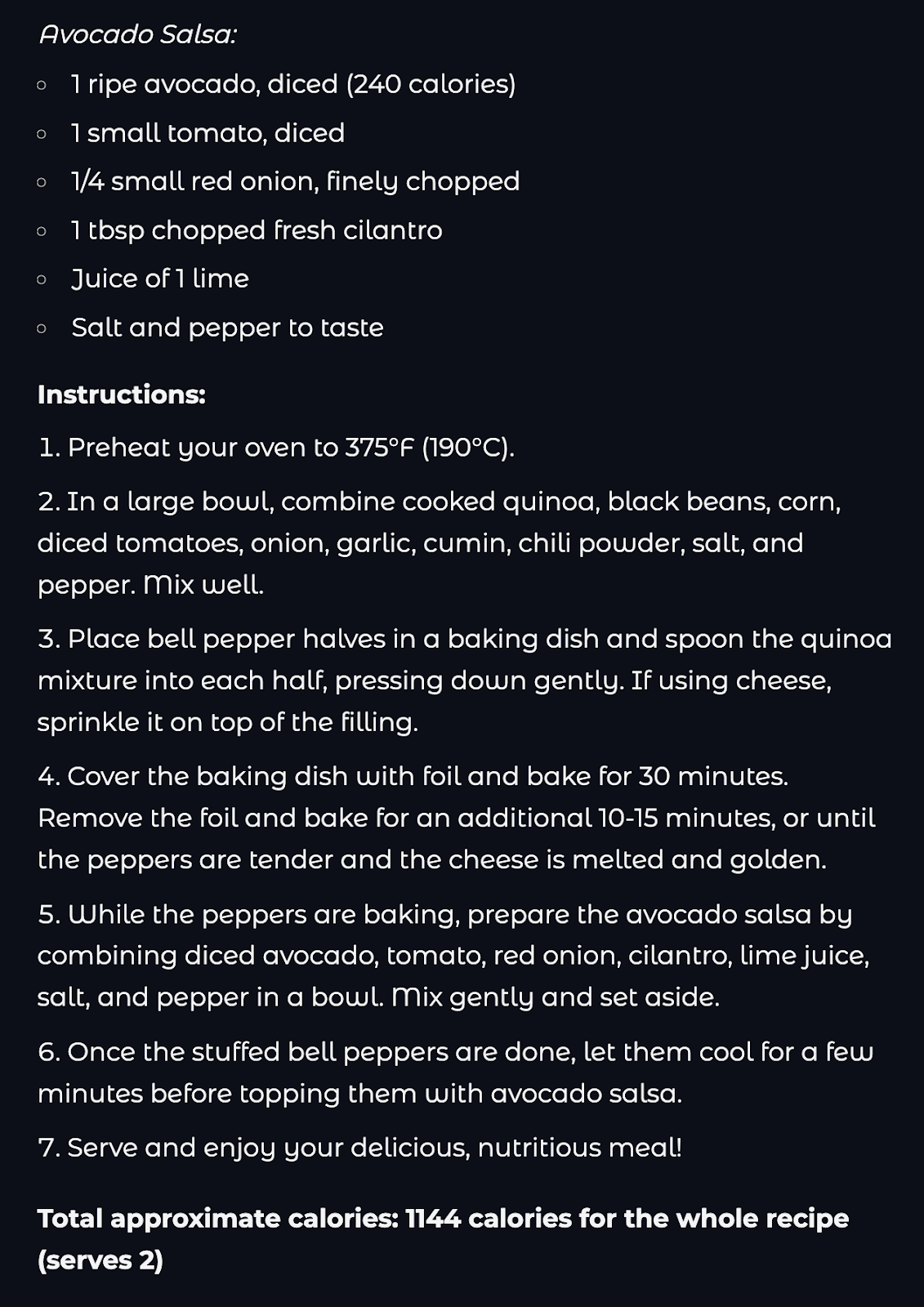

output = f"""

# Meal Analysis Results

## Meal Details

- **Meal Description:** {meal_desc}

- **Estimated Calories:** {calories}

## Next Meal Suggestion

{result['next_meal_suggestion']}

## Tools Used

{', '.join(result['tools_used'])}

## Mistral Connectors Used

{', '.join(sorted(connectors_used))}

""

# Get the image path and check if it is valid

image_path = result['meal_image']

if os.path.exists(image_path) and image_path.endswith('.png'):

return output, image_path

else:

return output, None

# Create the Gradio interface

with gr.Blocks(theme=gr.themes.Soft()) as demo:

gr.Markdown("# 🍽️ Nutritionist Assistant")

with gr.Row():

with gr.Column():

username = gr.Textbox(label="Username", placeholder="Enter your name")

meal_desc = gr.Textbox(label="Meal Description", placeholder="Describe your meal (e.g., 'Chicken salad with olive oil dressing')")

dietary_preference = gr.Dropdown(

choices=["vegetarian", "vegan", "Non-vegetarian", "gluten-free"],

label="Dietary Preference",

value="omnivore"

)

submit_btn = gr.Button("Process Meal", variant="primary")

with gr.Column():

output = gr.Markdown(label="Results")

image_output = gr.Image(label="Generated Meal Image", type="filepath")

submit_btn.click(

fn=process_meal,

inputs=[username, meal_desc, dietary_preference],

outputs=[output, image_output]

)

# Launch the app

if __name__ == "__main__":

demo.launch() In the above code snippet, we set up a complete Gradio UI interface for interacting with the Nutritionist Agent pipeline. Here is how it works:

- We start by importing the run_nutritionist_pipeline() function from agent.py, which drives the core agent logic.

- Then, the process_meal() function acts as a wrapper around the agent pipeline: it accepts username, meal_desc, and dietary_preference as user inputs and triggers the multi-agent logic defined earlier.

- Inside the process_meal() function, the result dictionary is parsed to extract the meal summary, calories, suggested next meal, and connectors used.

- The function also checks if the generated image path is valid before returning it for display. If the image is not found, then only the textual result is returned.

- The Gradio app is constructed using gr.Blocks(), and the layout has two columns: one with the input fields and a Process Meal button, and the other with an output area for the meal analysis results and an image preview of the next meal.

- The submit_btn.click() callback wires the frontend button to trigger the process_meal() function, passing the inputs and receiving formatted markdown and image as outputs.

- Finally, the app is launched via demo.launch() if the script is run as the main module. You can pass debug=True to enable debugging mode.
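Two details of process_meal() are easy to miss. Tool labels without a connector mapping are silently skipped, and generate_food_image() returns an error message string on failure, so the path has to be checked before it is handed to the image component. The sketch below isolates both checks. The function names are illustrative, and the mapping is copied from the code above.

```python
import os

# Mirrors the mapping in process_meal(); labels without an entry are skipped.
CONNECTOR_MAP = {
    "Web Search": "Web Search",
    "Image Description Generation": "Image Generation",
    "Meal Logging": "Chat Completion",
    "Next Meal Suggestion": "Chat Completion",
}


def connectors_for(tools_used):
    """Return the sorted, de-duplicated connector names for the given tool labels."""
    return sorted({CONNECTOR_MAP[tool] for tool in tools_used if tool in CONNECTOR_MAP})


def image_or_none(candidate):
    """Return the path only if it points at an existing .png file."""
    if isinstance(candidate, str) and candidate.endswith(".png") and os.path.exists(candidate):
        return candidate
    return None
```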

To run this demo, open a terminal in your root directory and type in the following command:

python app.py

The overall structure of this project would look like this:

Mistral_Agent_API/
├── generated_images/     # Stores generated meal images
├── tools/
│   ├── configs.py        # API setup, tool schema, environment config
│   ├── image_gen.py      # Generates meal image
│   ├── next.py           # Calorie estimator, logger, and next meal
│   └── web_search.py     # Mistral web_search connector to find calories
├── agent.py              # Main pipeline connecting all tools
├── app.py                # Gradio UI
├── requirements.txt      # Project dependencies
└── .env                  # Environment variables

You can find the complete code for this project on this GitHub repo.

Conclusion

In this tutorial, we built a fully functional AI Nutrition Coach using the Mistral Agents API. Along the way, we explored:

- How Mistral agents differ from raw LLM models

- The role of connectors like web_search and image_generation

- Orchestration across multiple agents in a single pipeline

- Building a user-friendly frontend with Gradio

This project shows the power of agentic workflows where LLMs are not just passive responders, but interactive entities that reason, take action, and coordinate tasks. From logging meals to suggesting healthy alternatives and even generating images, we showcased how to combine simple tools into powerful AI experiences.

To learn more about using AI agents, I recommend these hands-on tutorials:

Multi-Agent Systems with LangGraph

I am a Google Developers Expert in ML(Gen AI), a Kaggle 3x Expert, and a Women Techmakers Ambassador with 3+ years of experience in tech. I co-founded a health-tech startup in 2020 and am pursuing a master's in computer science at Georgia Tech, specializing in machine learning.