Amazon EC2 (Elastic Compute Cloud) is a key building block of AWS. It allows us to spin up virtual servers in the cloud that can scale to meet our needs. However, not all EC2 instances are the same, and choosing the right type can make a big difference to our performance and costs.

In this guide, I’ll explain the different EC2 instance types, their uses, and how to choose the one that works best for you.

What Are EC2 Instance Types?

When you launch an instance in AWS, you’ll need to choose an instance type. Each type is a different package of computing power, which includes CPU, memory, storage, and network capacity. These types help match your application’s needs to the right amount of resources, so you don’t pay for more than you use.

That’s why choosing the right instance type is the foundation of building efficient, cost-effective infrastructure. It’s one of the best ways to make sure your cloud setup works smoothly and scales with your workload.

The role of instance families

AWS sorts its instance types into families. Each family groups instance types with similar performance profiles, so it’s easier to find the right one for your job.

For example:

- Compute-optimized instances are great for batch processing and high-performance computing.

- Memory-optimized instances are better suited for jobs that require a substantial amount of RAM, such as in-memory databases or real-time analytics.

- General-purpose instances are perfect for situations where a balance of compute, memory, and network resources is needed.

- Storage-optimized instances are optimized for low-latency input/output (I/O) operations.

This structure helps us quickly zero in on the options that fit our specific use case.

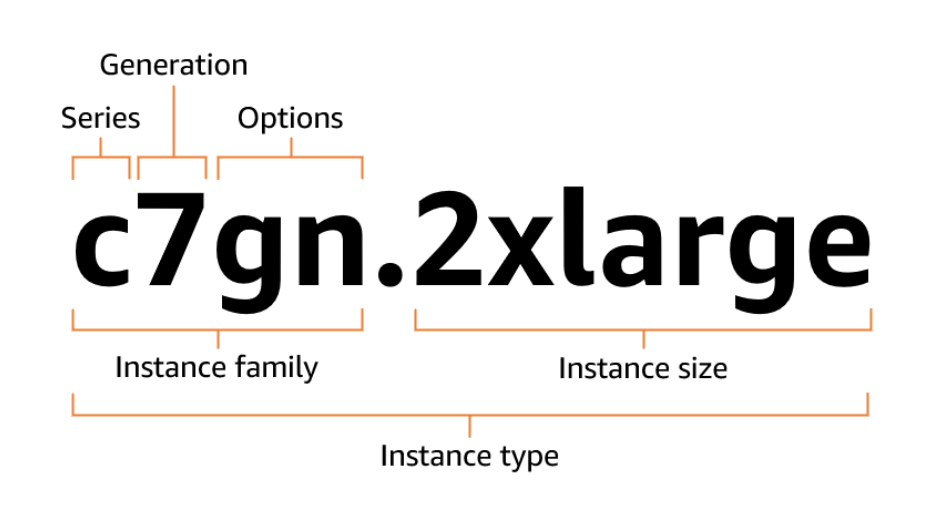

EC2 Instance Naming Convention

AWS EC2 instance types follow naming conventions that tell you what each instance can do or is optimized for. Let’s see how you can decode those names to choose the right one more confidently.

Format breakdown

Instance types are named based on their instance family and instance size. The first part of the instance name describes the instance family, and the second part tells the instance size. The two parts are separated by a period (.).

Take an instance name like m5.large. It’s made up of three key parts:

- Series (m) tells you the general purpose of the instance. In this case, m stands for general-purpose.

- Generation (5) indicates the version. Higher numbers usually mean newer hardware and better performance.

- Size (large) refers to the scale of resources like CPU and memory.

m5.large means you're getting a general-purpose instance, from the fifth generation, with a moderate amount of resources.

The image below visually explains the format:

EC2 instance type naming convention. Source: AWS docs

Examples and decoding

Let’s look at a few more examples and decode them:

- t4g.micro is a burstable instance using Graviton (ARM-based) processors, suited for lightweight, occasional workloads.

- r6g.xlarge is a memory-optimized instance from the 6th generation, also Graviton-based, ideal for memory-intensive applications, such as in-memory databases.

- c7gn.2xlarge is a compute-optimized instance, 7th generation, with enhanced networking and EBS support, powered by Graviton processors.
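The decoding steps above can be sketched in a few lines of Python. This is a minimal sketch, not an official parser: the function names, the regular expression, and the `OPTION_MEANINGS` table are assumptions made for illustration, and the table covers only a handful of option letters. Unusual names that don't follow the series-generation-options.size shape are rejected.

```python
import re
from typing import NamedTuple

# Partial, illustrative mapping of option letters (an assumption of this sketch).
# The AWS documentation has the complete list.
OPTION_MEANINGS = {
    "a": "AMD processors",
    "g": "AWS Graviton processors",
    "i": "Intel processors",
    "d": "local instance store volumes",
    "n": "network and EBS optimized",
}


class InstanceName(NamedTuple):
    series: str
    generation: int
    options: str
    size: str


# series letters, generation digits, optional option characters, a period, then the size
_PATTERN = re.compile(r"^([a-z]+)(\d+)([a-z0-9-]*)\.([a-z0-9]+)$")


def parse_instance_type(name: str) -> InstanceName:
    """Split a name such as 'c7gn.2xlarge' into its parts."""
    match = _PATTERN.match(name.strip().lower())
    if match is None:
        raise ValueError(f"unrecognized instance type name: {name!r}")
    series, generation, options, size = match.groups()
    return InstanceName(series, int(generation), options, size)


def describe_options(options: str) -> list:
    """Translate the option letters this sketch knows about; unknown ones are skipped."""
    return [OPTION_MEANINGS[ch] for ch in options if ch in OPTION_MEANINGS]


for example in ("m5.large", "t4g.micro", "r6g.xlarge", "c7gn.2xlarge"):
    parsed = parse_instance_type(example)
    print(example, "->", parsed, describe_options(parsed.options))
```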

Here are some popular series and their corresponding options, along with notations.

| Series | Options |
| --- | --- |

For the complete list of series and options, check out the AWS documentation.

Now, let’s check different instance types in more detail.

General Purpose Instances

General-purpose instances provide a balanced mix of compute, memory, and networking power. They're a great choice when your workload doesn’t lean heavily in one direction, which is ideal for web servers, dev environments, and backend systems.

AWS offers two main series in this category: the T-series and the M-series. Let’s take a closer look at these:

T-series

T-series instances are built for burstable performance. They provide a baseline level of CPU power, but can ramp up temporarily when needed. This burst capability runs on a credit system, which means that when your instance uses less than its baseline CPU, it earns credits. When demand spikes, it spends those credits to boost performance.

If you enable “unlimited” mode, your instance can continue to burst beyond its credit balance, but you’ll be charged for the extra usage.

These instances are ideal for variable or low-to-moderate CPU workloads, like:

- Low-traffic web apps

- Development and test environments

- Small databases

- CI/CD pipelines

Suppose you run a small web app. Most of the time, it runs smoothly with minimal CPU usage. When you launch a campaign and traffic spikes, it uses saved credits to manage the extra load. After the rush, it returns to its baseline and starts earning credits again.
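To make the credit mechanics concrete, here is a rough simulation of that scenario. It relies on the AWS definition that one CPU credit equals one vCPU running at 100% for one minute. Everything else is an assumption for illustration: the function name, the instance numbers (2 vCPUs, 12 credits earned per hour, a 288-credit cap), and the traffic pattern. The sketch only tracks the balance in standard mode; it does not model the throttling that happens once the balance reaches zero, or the extra charges in unlimited mode.

```python
def simulate_credit_balance(hourly_utilization, vcpus, credits_per_hour,
                            max_balance, start_balance=0.0):
    """Return the CPU credit balance at the end of each hour.

    hourly_utilization holds the average CPU utilization per hour as a
    fraction (0.05 means 5%) across all vCPUs.
    """
    balance = start_balance
    history = []
    for utilization in hourly_utilization:
        # One CPU credit = one vCPU at 100% for one minute.
        spent = utilization * vcpus * 60
        # The balance can neither go below zero nor exceed the cap.
        balance = min(max_balance, max(0.0, balance + credits_per_hour - spent))
        history.append(round(balance, 2))
    return history


# Hypothetical traffic: four quiet hours, a two-hour campaign spike, two quiet hours.
quiet_hours = [0.05] * 4
campaign_hours = [0.50] * 2
pattern = quiet_hours + campaign_hours + quiet_hours[:2]

print(simulate_credit_balance(pattern, vcpus=2, credits_per_hour=12, max_balance=288))
```

With these numbers the baseline works out to 10% per vCPU (12 credits per hour spread over 2 vCPUs and 60 minutes), so the quiet hours build up credits and the spike drains them.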

Popular T-series types include t4g, t3, and t2, each available in several sizes, such as t4g.nano.

M-series

M-series instances offer a balanced CPU-to-memory ratio, with approximately 4GB of RAM per vCPU. That makes them versatile for general-purpose workloads that don’t need specialized compute or memory configurations.

We can use them for:

- Small-to-medium databases (e.g., MySQL, PostgreSQL)

- Application servers

- Backend services for enterprise apps

- Caching layers

- Game servers

Examples include m6i, m8g, and mac, each available in multiple sizes, such as m8g.medium.

Compute Optimized Instances

When your workloads are heavy on processing but light on memory needs, compute-optimized instances are a great fit. They deliver high-speed performance for CPU-intensive tasks, ideal for analytics, media encoding, or gaming infrastructure.

AWS offers this power through the C-series. Let’s explore them in detail:

C-series

C-series instances are designed for high compute performance with less memory overhead. That means you get more CPU power per gigabyte of RAM, perfect for applications where speed matters more than storage or memory.

For maximum throughput and efficiency, these instances use the latest processors, including Intel, AMD, and AWS Graviton chips.

We can use them for:

- High-performance web servers

- Video encoding and media transcoding

- Scientific simulations and modeling

- Batch processing and data crunching

- Game servers that need low latency and fast tick rates

Some examples you might come across are c6g, c7i, c5n, and c4. Each comes in a range of sizes, so you can scale up or down depending on your needs.

Here’s what sets compute-optimized instances apart:

- Low latency: They respond quickly, which makes them great for real-time applications like streaming and gaming.

- Fast processing speeds: With high clock speeds and optimized CPU cores, they can handle millions of requests per second.

- Cost-effective scaling: You can boost your compute power without overpaying for memory you don’t need.

- Sustainability: Many C-series instances use AWS Graviton processors, which are more energy-efficient and help reduce your carbon footprint.

Memory Optimized Instances

Memory-optimized instances are best for workloads that need more memory than CPU power or storage. These instances handle high-performance databases and in-memory applications that process large datasets entirely in memory for ultra-fast response times.

R-series

R-series instances are built for memory-heavy workloads. They offer a higher memory-to-vCPU ratio, which makes them a great fit when your app requires a lot of RAM but not necessarily a large amount of compute power.

We can use them for:

- In-memory caching: Ideal for tools like Redis or Memcached that rely on lightning-fast access to data.

- Real-time big data analytics: Perfect for processing and analyzing data in-memory, with low latency.

- Enterprise apps: Great for large applications that need solid memory allocation to run reliably.

Some of its popular options include r5, r6a, r8g, and r4. Each one comes in different sizes depending on your needs.
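The memory-to-vCPU ratio is easy to compare across families. The short sketch below uses the published specs of three same-size instance types (c5.large, m5.large, and r5.large); the helper name `memory_per_vcpu` is just an illustrative choice.

```python
# (vCPUs, memory in GiB) for three instance types of the same size.
SPECS = {
    "c5.large": (2, 4),   # compute optimized
    "m5.large": (2, 8),   # general purpose
    "r5.large": (2, 16),  # memory optimized
}


def memory_per_vcpu(vcpus: int, memory_gib: float) -> float:
    """GiB of RAM available per vCPU."""
    return memory_gib / vcpus


for name, (vcpus, memory_gib) in SPECS.items():
    print(f"{name}: {memory_per_vcpu(vcpus, memory_gib):.0f} GiB per vCPU")
```

At the same size, the memory-optimized type offers twice the RAM per vCPU of the general-purpose type and four times that of the compute-optimized one.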

X-series and Z-series

X-series instances provide extreme memory capacity and are specifically designed for memory-intensive enterprise workloads. These are the go-to for:

- SAP HANA

- In-memory databases

- Real-time, large-scale analytics

Some of their standard models are x1e, x2gd, and x8g.

Z-series instances are a bit different. They combine high memory and high CPU frequency, which makes them ideal for:

- Electronic design automation (EDA)

- Financial simulations

- Large relational databases

- Computational lithography

If your workload requires strong single-threaded performance and a substantial amount of RAM, the Z-series (such as z1d) is a good fit.

Storage Optimized Instances

Storage-optimized instances use locally attached storage, in most cases high-speed NVMe SSDs, which is ideal for workloads that need extremely fast access to large datasets. That’s why we can use them for NoSQL databases, data lakes, or large-scale data processing that requires high IOPS (Input/Output Operations Per Second).

Let’s look at some common storage-optimized instances available:

I-series, D-series, H-series

The following table shows details of each instance series:

| | I-series | D-series | H-series |
| --- | --- | --- | --- |
| Primary use | High IOPS transactional workloads and low-latency data access. | Data-heavy applications, big data processing, and workloads needing large storage capacity. | High-performance computing (HPC) applications with storage-bound requirements. |
| Ideal for | NoSQL databases (like Cassandra, MongoDB), transactional databases, real-time analytics, and distributed file systems. | Data lakes, Hadoop distributed computing, log processing, and large-scale data analysis. | HPC simulations, scientific computing, seismic analysis, and machine learning with large local datasets. |
| Storage | High-speed NVMe SSD local storage (ephemeral). | High-capacity local storage, typically HDD or SSD (depending on instance type). | High-throughput HDD local storage (ephemeral). |
| Performance | Very high IOPS and low latency. | High sequential read/write throughput and less random IOPS than I-series, but excellent for streaming large files. | Delivers excellent throughput, tailored for data-intensive simulations and HPC workloads. |
| Examples | I8g, I7ie, I3en | D3, D3en | H1 |

For the complete list of I-series, H-series, and D-series instances, check out the documentation.

Local storage performance

Local storage uses NVMe SSDs, providing blazing-fast performance with very high IOPS (Input/Output Operations Per Second), sub-millisecond latency, and high throughput for your applications. However, it’s ephemeral, which means the data is lost when the instance is stopped or terminated.

That’s why ephemeral storage is used mainly for:

- Temporary scratch space

- Buffer/cache storage

- High-speed temporary databases (like Redis, Cassandra with replicas)

In contrast, EBS-backed volumes (Elastic Block Store) are persistent storage that remains available even if the instance is stopped. EBS is network-attached and is highly durable, offering features like snapshots for easy backup and recovery. This makes it ideal for workloads where data persistence is important, such as databases and critical files.

While ephemeral storage provides incredible performance, it doesn’t provide data durability. On the other hand, EBS-backed storage offers excellent durability and resilience, ideal for workloads that need both performance and data protection.

Accelerated Computing Instances

Accelerated computing instances, also called hardware-accelerated instances, are used when we need specialized hardware like GPUs (Graphics Processing Units), FPGAs (Field Programmable Gate Arrays), or AWS custom chips to offload compute-intensive tasks that would be too slow or inefficient on CPUs alone.

That’s why they provide improved performance and are suitable for workloads that require heavy parallel processing or complex calculations.

GPU-based instances (P and G families)

GPU-based instances are perfect for tasks that demand powerful parallel computing. With NVIDIA GPUs at their core, these instances are optimized for heavy-duty workloads like ML model training, 3D rendering, high-end gaming, and other graphics-intensive tasks.

Two key families in the GPU-based instance category are:

- P family (e.g., P4, P5): These are equipped with NVIDIA GPUs and are tailored for machine learning (ML) and high-performance computing (HPC) applications.

- G family (e.g., G4, G5): Also powered by NVIDIA GPUs, these are ideal for graphics workloads, ML inference, and game streaming.

Specialized AI/ML instances (Inf and Trn families)

For AI and machine learning workloads, the Inferentia-optimized instance (Inf) and Trainium-optimized instance (Trn) families are built with custom AWS silicon like AWS Inferentia and AWS Trainium chips. They can train and infer deep learning models at scale to deliver top-tier price-performance.

- Inf family (e.g., Inf1, Inf2): These instances are perfect for low-latency, high-throughput inference tasks, backed by AWS Inferentia chips.

- Trn family (e.g., Trn1, Trn2): Optimized for distributed deep learning model training, they provide high-speed interconnects and substantial compute capacity, driven by AWS Trainium chips.

These instances are scalable and support TensorFlow, PyTorch, and Apache MXNet frameworks to increase efficiency and provide seamless performance.

We can use them for:

- Deep learning training at scale: Use Trn1 for cost-effective, high-performance training of large models.

- AI inference at low cost: Use Inf1 for fast, low-cost model inference.

- Large-scale AI deployments: Support environments with millions of inferences per second, such as recommendation engines, speech recognition, and autonomous vehicles.

How To Choose the Right EC2 Instance?

With so many instance types out there, it’s quite challenging to know what to look for so you can make the most of what AWS offers. So, let’s see how you and your team can make confident choices that balance performance, cost, and scalability.

Key decision factors

Start by considering what your workload really needs. Identify the CPU power, memory, storage, and networking requirements that you need, as different workloads require different instance types.

You’ll also want to factor in how your traffic behaves. Is it steady and predictable, or does it spike at certain times? That can help us decide whether to go for something that can scale on demand.

Finally, most of us work within cost constraints, so we need to weigh performance against what we’re willing to spend. If we don’t need persistent storage, using ephemeral storage could save us a fair bit compared to EBS volumes.
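These decision factors can be turned into a rough first-pass filter. The sketch below is only a rule of thumb: the function name, its parameters, and the thresholds (2 and 8 GiB per vCPU) are assumptions chosen to match the family descriptions in this guide, not an AWS recommendation.

```python
def suggest_family(memory_gib_per_vcpu, *, bursty_low_cpu=False,
                   needs_accelerator=False, needs_fast_local_storage=False):
    """Suggest a starting instance family from coarse workload traits."""
    if needs_accelerator:
        return "accelerated computing (P, G, Inf, Trn)"
    if needs_fast_local_storage:
        return "storage optimized (I, D, H)"
    if bursty_low_cpu:
        return "general purpose, burstable (T)"
    if memory_gib_per_vcpu <= 2:
        return "compute optimized (C)"
    if memory_gib_per_vcpu >= 8:
        return "memory optimized (R, X, Z)"
    return "general purpose (M)"


# Hypothetical workloads
print(suggest_family(4))                        # balanced application server
print(suggest_family(16))                       # in-memory cache
print(suggest_family(1, bursty_low_cpu=True))   # low-traffic web app
```

A suggestion like this is only a starting point; the shortlist still needs to be checked against real measurements.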

AWS tools for selection

Thankfully, AWS gives us several tools to narrow things down. Here are some of these:

- Instance Explorer is a quick way to filter instance types based on the specs you’re after.

- AWS Compute Optimizer looks at how you’re currently using instances and suggests better options if they exist.

- AWS Pricing Calculator estimates costs upfront, which is great if we want to compare options before deployment.
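The same kind of spec-based filtering can be scripted. The sketch below filters records shaped like the items returned by the EC2 `describe_instance_types` API (the `InstanceType`, `VCpuInfo.DefaultVCpus`, and `MemoryInfo.SizeInMiB` fields). The function names and the sample data are illustrative assumptions; `fetch_instance_types` shows one way to pull real data with Boto3, which requires the boto3 package and AWS credentials and is not called here.

```python
def filter_instance_types(instance_types, min_vcpus, min_memory_gib):
    """Return the names of instance types that meet both minimums, sorted."""
    matches = []
    for item in instance_types:
        vcpus = item["VCpuInfo"]["DefaultVCpus"]
        memory_gib = item["MemoryInfo"]["SizeInMiB"] / 1024
        if vcpus >= min_vcpus and memory_gib >= min_memory_gib:
            matches.append(item["InstanceType"])
    return sorted(matches)


def fetch_instance_types(region_name):
    """Fetch all instance type records for a Region using Boto3."""
    import boto3  # imported lazily so the filter above works without it

    ec2 = boto3.client("ec2", region_name=region_name)
    paginator = ec2.get_paginator("describe_instance_types")
    return [item for page in paginator.paginate() for item in page["InstanceTypes"]]


# Small hand-written sample in the same shape as the API response.
SAMPLE = [
    {"InstanceType": "t3.micro", "VCpuInfo": {"DefaultVCpus": 2}, "MemoryInfo": {"SizeInMiB": 1024}},
    {"InstanceType": "m5.large", "VCpuInfo": {"DefaultVCpus": 2}, "MemoryInfo": {"SizeInMiB": 8192}},
    {"InstanceType": "r5.large", "VCpuInfo": {"DefaultVCpus": 2}, "MemoryInfo": {"SizeInMiB": 16384}},
    {"InstanceType": "c5.xlarge", "VCpuInfo": {"DefaultVCpus": 4}, "MemoryInfo": {"SizeInMiB": 8192}},
]

print(filter_instance_types(SAMPLE, min_vcpus=2, min_memory_gib=8))
```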

Benchmarking and testing

Even with all these tools, it’s still worthwhile to run some tests yourself. Benchmarking different instances in our environment shows us how they hold up under real workloads. This gives us a better sense of performance, latency, and cost, all based on what actually matters to us.
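Once we have benchmark numbers, one simple way to compare candidates is cost per unit of work. The sketch below computes the cost per million requests from an hourly price and a measured throughput. The candidate names, prices, and throughput figures are made up for illustration; real values would come from our own tests and the current AWS price list.

```python
def cost_per_million_requests(hourly_price_usd, requests_per_second):
    """Cost in USD to serve one million requests at a sustained throughput."""
    if requests_per_second <= 0:
        raise ValueError("requests_per_second must be positive")
    requests_per_hour = requests_per_second * 3600
    return hourly_price_usd / requests_per_hour * 1_000_000


# Hypothetical benchmark results: (hourly price in USD, sustained requests per second)
benchmarks = {
    "candidate-a": (0.10, 500),
    "candidate-b": (0.17, 1000),
}

costs = {
    name: cost_per_million_requests(price, rps)
    for name, (price, rps) in benchmarks.items()
}
best = min(costs, key=costs.get)

for name, cost in costs.items():
    print(f"{name}: ${cost:.4f} per million requests")
print("best price-performance:", best)
```

In this made-up case, the more expensive instance wins because its throughput more than makes up for the higher hourly price.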

Cost and Purchasing Options

When it comes to EC2, the way we purchase instances can impact our overall costs. Since AWS offers several purchasing options, each tailored to different workloads, let’s see how to choose the right one for our current project and how to manage our cloud bills effectively.

On-demand instances

On-demand instances give us the most flexibility. We can launch them whenever we need, pay by the second, and shut them down just as easily, no commitments required. They’re perfect for:

- Short-term projects

- Spiky or unpredictable workloads

- Getting started quickly without upfront planning

But that flexibility comes at a higher price. For long-running or predictable workloads, other options can save us a lot more over time.

Reserved and Spot instances

Reserved instances are ideal when we know a workload will run consistently for an extended period. By committing to a one- or three-year term, we can save up to 72% compared to on-demand pricing. It’s a smart move for running stable, always-on apps.

Spot instances, on the other hand, are the cheapest option when a workload can tolerate interruptions. AWS offers unused capacity at massive discounts, up to 90% off, but it can reclaim that capacity with only a two-minute warning. That means they’re best for jobs that can handle interruptions, like:

- Batch processing

- Data analysis

- CI/CD workloads

- Stateless services

Quick comparison: On-demand vs. reserved vs. spot instances

Here’s a quick side-by-side table to help you decide:

| Option | Best for | Cost | Flexibility | Good to know |
| --- | --- | --- | --- | --- |
| On-demand | Short-term or unpredictable work | Highest | Very flexible | Great for testing or unpredictable loads |
| Reserved | Long-term, steady workloads | Lower (with commitment) | Less flexible | 1 or 3-year term saves up to 72% |
| Spot | Interruptible, fault-tolerant tasks | Lowest | Limited (can be interrupted at any time) | Up to 90% savings, but no guarantees |

For a more detailed comparison, check out the AWS official website.
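To see how the purchasing options play out for an always-on instance, here is a small cost sketch. The on-demand rate and both discount percentages are hypothetical values picked for illustration; actual discounts vary by instance type, Region, term, and (for Spot) current capacity, and the "up to" figures are ceilings rather than typical values.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours per year / 12


def monthly_cost(on_demand_hourly_usd, discount=0.0, hours=HOURS_PER_MONTH):
    """Monthly cost in USD after applying a fractional discount (0.40 means 40% off)."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in the range [0, 1)")
    return round(on_demand_hourly_usd * (1 - discount) * hours, 2)


hourly_rate = 0.10  # hypothetical on-demand price in USD

print("on-demand:", monthly_cost(hourly_rate))
print("reserved :", monthly_cost(hourly_rate, discount=0.40))  # assumed discount
print("spot     :", monthly_cost(hourly_rate, discount=0.70))  # assumed discount
```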

Cost optimization tips

To stretch our budget even further, here are a few tools and strategies we can lean on:

- Savings plans: These offer flexible, commitment-based pricing across EC2 and other services. We can save up to 72% by committing to consistent usage.

- Auto scaling: Automatically adjusts our capacity to match demand, so we’re never over-provisioning.

- Right-sizing: Regularly review instance types and sizes to make sure they still fit. Small tweaks can mean big savings over time.
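A right-sizing review can start from something as simple as CPU utilization samples. The sketch below looks at the 95th percentile of a series of utilization percentages and returns a hint. The function name and the 20% and 80% thresholds are assumptions for illustration, and a real review would also consider memory, network, and disk metrics.

```python
import math


def rightsizing_hint(cpu_samples, low=20.0, high=80.0):
    """Return a sizing hint based on the 95th percentile of CPU utilization (%)."""
    if not cpu_samples:
        raise ValueError("at least one sample is required")
    ordered = sorted(cpu_samples)
    # Nearest-rank 95th percentile.
    rank = max(1, math.ceil(len(ordered) * 95 / 100))
    p95 = ordered[rank - 1]
    if p95 < low:
        return "consider a smaller size"
    if p95 > high:
        return "consider a larger size"
    return "size looks reasonable"


# Hypothetical utilization samples, in percent
print(rightsizing_hint([5, 8, 10, 12, 15] * 4))
print(rightsizing_hint([30, 40, 50]))
print(rightsizing_hint([85, 90, 95]))
```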

Conclusion

There’s no one-size-fits-all when it comes to EC2 instances, and that’s a good thing. Whether we’re setting up a quick dev environment or scaling a complex machine learning pipeline, AWS gives us the flexibility to choose exactly what we need.

The key is understanding our workload, knowing our performance goals, and staying mindful of cost. The more intentional we are with our choices, backed by tools, testing, and a bit of trial and error, the more we’ll get out of our infrastructure.

If you’re keen to keep building your AWS skills or explore more of what EC2 can do, here are a few resources to check out:

- Introduction to AWS – A great starting point to understand the core AWS concepts and services.

- AWS Cloud Technology and Services – Explore AWS services and how they fit together in the cloud ecosystem.

- AWS Security and Cost Management – Learn about the best practices for securing your AWS environment and managing costs effectively.

- Introduction to AWS Boto in Python – A hands-on course that teaches you how to use Boto3 (AWS SDK for Python) to automate tasks and manage AWS resources.

These resources will help you take your AWS skills to the next level, with practical examples and real-world applications.

FAQs

What is the difference between ECS and EC2?

EC2 is a virtual machine service where you manage the entire virtual machine, including the operating system and applications. ECS, on the other hand, is a managed container orchestration service that focuses on running and scaling containerized applications.

Is AWS EC2 serverless?

No. With EC2, you provision and manage the environment yourself, including the instances and their operating systems.

What is Elastic IP in AWS?

In AWS, an Elastic IP address is a static, public IPv4 address that you can associate with an instance or network interface, so the public IP stays the same even if the instance is stopped and restarted. Elastic IP addresses are allocated per Region and can’t be moved to another Region.

How do I delete an EC2 instance?

Log in to the AWS Management Console, go to the EC2 dashboard, and select the instance you want to delete. Then, choose "Terminate" from the Instance State dropdown menu. Confirm the termination when prompted.
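The same step can be scripted with Boto3. This is a minimal sketch: `terminate_instance` is an illustrative helper name, and it expects an EC2 client to be passed in. The underlying `terminate_instances` call is a real Boto3 EC2 client method, and termination cannot be undone, so double-check the instance ID first.

```python
def terminate_instance(ec2_client, instance_id):
    """Terminate one instance and return its new state name (e.g. 'shutting-down')."""
    response = ec2_client.terminate_instances(InstanceIds=[instance_id])
    return response["TerminatingInstances"][0]["CurrentState"]["Name"]


# Usage with Boto3 (requires the boto3 package and AWS credentials):
#
#   import boto3
#   ec2 = boto3.client("ec2", region_name="us-east-1")
#   print(terminate_instance(ec2, "i-0123456789abcdef0"))  # hypothetical instance ID
```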

What is AMI in AWS?

Amazon Machine Image (AMI) is a template containing the information needed to launch an Amazon Elastic Compute Cloud (EC2) instance.

How do Graviton-based instances compare to Intel or AMD-based ones?

Graviton instances use ARM architecture, offering better price-performance and energy efficiency for many workloads. However, compatibility with x86 apps should be verified.

I'm a content strategist who loves simplifying complex topics. I’ve helped companies like Splunk, Hackernoon, and Tiiny Host create engaging and informative content for their audiences.